Id | PostTypeId | AcceptedAnswerId | ParentId | Score | ViewCount | Body | Title | ContentLicense | FavoriteCount | CreationDate | LastActivityDate | LastEditDate | LastEditorUserId | OwnerUserId | Tags |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

433 | 2 | null | 423 | 73 | null | Here's [another one from Dilbert](http://dilbert.com/strips/comic/2010-07-02/):

| null | CC BY-SA 3.0 | null | 2010-07-21T15:43:44.457 | 2015-02-24T20:50:13.917 | 2015-02-24T20:50:13.917 | 7290 | 5 | null |

434 | 2 | null | 22 | 85 | null | Very crudely I would say that:

Frequentist: Sampling is infinite and decision rules can be sharp. Data are a repeatable random sample - there is a frequency. Underlying parameters are fixed i.e. they remain constant during this repeatable sampling process.

Bayesian: Unknown quantities are treated probabilistically and the state of the world can always be updated. Data are observed from the realised sample. Parameters are unknown and described probabilistically. It is the data which are fixed.

There is a brilliant [blog post](http://www.behind-the-enemy-lines.com/2008/01/are-you-bayesian-or-frequentist-or.html) which gives an in-depth example of how a Bayesian and a frequentist would tackle the same problem. Why not answer the problem for yourself and then check?

The problem (taken from Panos Ipeirotis' blog):

>

You have a coin that when flipped ends up head with probability $p$ and ends up tail with probability $1-p$. (The value of $p$ is unknown.)

Trying to estimate $p$, you flip the coin 100 times. It ends up head 71 times.

Then you have to decide on the following event: "In the next two tosses we will get two heads in a row."

Would you bet that the event will happen or that it will not happen?
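For readers who want to check their own answer, here is a minimal sketch of how the two camps might compute the probability of two heads. The uniform Beta(1, 1) prior is an assumption made for illustration and is not necessarily the one used in the blog post.

```python
from fractions import Fraction

heads, tosses = 71, 100

# Frequentist plug-in: estimate p by the sample proportion, then square it.
p_hat = Fraction(heads, tosses)
freq_two_heads = p_hat ** 2

# Bayesian, with an ASSUMED uniform Beta(1, 1) prior: the posterior is Beta(a, b).
a = 1 + heads
b = 1 + (tosses - heads)
# Posterior predictive probability of two heads is E[p^2] under Beta(a, b).
bayes_two_heads = Fraction(a * (a + 1), (a + b) * (a + b + 1))

print(f"frequentist: {float(freq_two_heads):.4f}")
print(f"bayesian:    {float(bayes_two_heads):.4f}")
```

Under these assumptions both numbers sit just above one half, with the Bayesian figure closer to 0.5 because it averages over the remaining uncertainty in $p$.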

| null | CC BY-SA 4.0 | null | 2010-07-21T15:50:43.377 | 2020-04-03T14:53:28.790 | 2020-06-11T14:32:37.003 | -1 | 215 | null |

435 | 2 | null | 423 | 197 | null | One more [Dilbert cartoon](http://dilbert.com/fast/2008-05-08/):

...

| null | CC BY-SA 3.0 | null | 2010-07-21T15:53:59.553 | 2015-02-05T11:28:02.847 | 2017-03-09T17:30:36.203 | -1 | null | null |

436 | 2 | null | 421 | 24 | null | The answer would most definitely depend on their discipline, the methods/techniques that they would like to learn and their existing mathematical/statistical abilities.

For example, economists/social scientists who want to learn about cutting edge empirical econometrics could read Angrist and Pischke's [Mostly Harmless Econometrics](http://rads.stackoverflow.com/amzn/click/0691120358). This is a non-technical book covering the "natural experimental revolution" in economics. The book only presupposes that they know what regression is.

But I think the best book on applied regression is Gelman and Hill's [Data Analysis Using Regression and Multilevel/Hierarchical Models](http://rads.stackoverflow.com/amzn/click/052168689X). This covers basic regression, multilevel regression, and Bayesian methods in a clear and intuitive way. It would be good for any scientist with a basic background in statistics.

| null | CC BY-SA 2.5 | null | 2010-07-21T16:04:18.627 | 2010-08-11T08:46:15.107 | 2010-08-11T08:46:15.107 | 509 | 215 | null |

437 | 2 | null | 414 | 5 | null | For you I would suggest:

[Introduction to the Mathematical and Statistical Foundations of Econometrics](https://www.cambridge.org/core/books/introduction-to-the-mathematical-and-statistical-foundations-of-econometrics/0AB780F0657A93807188838B1E7C529A) by Herman J. Bierens, CUP. The word "Introduction" in the title is a sick joke for most PhD econometrics students.

[Markov Chain Monte Carlo](https://www.routledge.com/Markov-Chain-Monte-Carlo-Stochastic-Simulation-for-Bayesian-Inference/Gamerman-Lopes/p/book/9781584885870) by Dani Gamerman, Chapman & Hall is also concise.

| null | CC BY-SA 4.0 | null | 2010-07-21T16:07:20.810 | 2023-02-11T10:07:35.567 | 2023-02-11T10:07:35.567 | 362671 | 215 | null |

438 | 2 | null | 165 | 33 | null | OK here's my best attempt at an informal and crude explanation.

A Markov Chain is a random process that has the property that the future depends only on the current state of the process and not the past i.e. it is memoryless. An example of a random process could be the stock exchange. An example of a Markov Chain would be a board game like Monopoly or Snakes and Ladders where your future position (after rolling the die) would depend only on where you started from before the roll, not any of your previous positions. A textbook example of a Markov Chain is the "drunkard's walk". Imagine somebody who is drunk and can move only left or right by one pace. The drunk moves left or right with equal probability. This is a Markov Chain where the drunk's future/next position depends only upon where he is at present.

Monte Carlo methods are computational algorithms (simply sets of instructions) which randomly sample from some process under study. They are a way of estimating something which is too difficult or time-consuming to find deterministically. They're basically a form of computer simulation of some mathematical or physical process. The Monte Carlo moniker comes from the analogy between a casino and random number generation. Returning to our board game example earlier, perhaps we want to know if some properties on the Monopoly board are visited more often than others. A Monte Carlo experiment would involve rolling the dice repeatedly and counting the number of times you land on each property. It can also be used for calculating numerical integrals. (Very informally, we can think of an integral as the area under the graph of some function.) Monte Carlo integration works well on high-dimensional functions by taking a random sample of points of the function and calculating some type of average at these various points. By increasing the sample size, the law of large numbers tells us we can increase the accuracy of our approximation by covering more and more of the function.

These two concepts can be put together to solve some difficult problems in areas such as Bayesian inference, computational biology, etc., where multi-dimensional integrals need to be calculated to solve common problems. The idea is to construct a Markov Chain which converges to the desired probability distribution after a number of steps. The state of the chain after a large number of steps is then used as a sample from the desired distribution and the process is repeated. There are many different MCMC algorithms which use different techniques for generating the Markov Chain. Common ones include the Metropolis-Hastings algorithm and the Gibbs sampler.
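To make the idea concrete, here is a rough sketch of a random-walk Metropolis sampler, one simple member of the Metropolis-Hastings family. The target (a standard normal), the step size, and the number of discarded early steps are all illustrative assumptions.

```python
import math
import random


def metropolis(log_target, start, n_steps, step_size, rng):
    """Random-walk Metropolis: a Markov chain whose long-run distribution is the target."""
    x = start
    samples = []
    for _ in range(n_steps):
        # The proposal depends only on the current state: the Markov property.
        proposal = x + rng.gauss(0.0, step_size)
        # Accept with probability min(1, target(proposal) / target(x)).
        log_ratio = log_target(proposal) - log_target(x)
        if rng.random() < math.exp(min(0.0, log_ratio)):
            x = proposal
        samples.append(x)
    return samples


def log_std_normal(x):
    """Log density of a standard normal, up to an additive constant."""
    return -0.5 * x * x


rng = random.Random(0)
draws = metropolis(log_std_normal, start=5.0, n_steps=50_000, step_size=1.0, rng=rng)
kept = draws[5_000:]  # discard early steps taken before the chain settles
mean = sum(kept) / len(kept)
second_moment = sum(x * x for x in kept) / len(kept)  # Monte Carlo estimate of E[x^2]
print(f"mean ~ {mean:.3f}, E[x^2] ~ {second_moment:.3f}")
```

The averages over `kept` are the Monte Carlo part: they approximate integrals (here the mean and second moment of the target) using states visited by the Markov chain.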

| null | CC BY-SA 2.5 | null | 2010-07-21T16:42:43.820 | 2010-07-21T16:42:43.820 | null | null | 215 | null |

439 | 2 | null | 414 | 28 | null | As you said, it's not necessarily the case that a mathematician may want a rigorous book. Maybe the goal is to get some intuition of the concepts quickly, and then fill in the details. I recommend two books from CMU professors, both published by Springer: "[All of Statistics](https://link.springer.com/book/10.1007/978-0-387-21736-9)" by Larry Wasserman is quick and informal. "[Theory of Statistics](https://link.springer.com/book/10.1007/978-1-4612-4250-5)" by Mark Schervish is rigorous and relatively complete. It has decision theory, finite sample, some asymptotics and sequential analysis.

Added 7/28/10: There is one additional reference that is orthogonal to the other two: very rigorous, focused on learning theory, and short. It's by Smale (Steven Smale!) and Cucker, "[On the Mathematical Foundations of Learning](http://www.ams.org/journals/bull/2002-39-01/S0273-0979-01-00923-5/home.html)". Not an easy read, but the best crash course on the theory.

| null | CC BY-SA 4.0 | null | 2010-07-21T16:50:35.697 | 2023-02-11T10:15:04.673 | 2023-02-11T10:15:04.673 | 362671 | 30 | null |

440 | 2 | null | 421 | 21 | null | [Peter Dalgaard's Introductory Statistics with R](http://rads.stackoverflow.com/amzn/click/0387790535) is a great book for some introductory statistics with a focus on the R software for data analysis.

| null | CC BY-SA 2.5 | null | 2010-07-21T16:53:35.677 | 2010-07-21T16:53:35.677 | null | null | 36 | null |

441 | 2 | null | 290 | 5 | null | UCLA has the best free resources you'll find anywhere.

[https://stats.idre.ucla.edu/stata/](https://stats.idre.ucla.edu/stata/)

| null | CC BY-SA 3.0 | null | 2010-07-21T16:54:38.483 | 2017-10-12T15:20:16.267 | 2017-10-12T15:20:16.267 | 27422 | 36 | null |

442 | 2 | null | 363 | 8 | null | I say [The Visual Display of Quantitative Information](http://rads.stackoverflow.com/amzn/click/0961392142) by Tufte, and [Freakonomics](http://rads.stackoverflow.com/amzn/click/0060731338) for something fun.

| null | CC BY-SA 2.5 | null | 2010-07-21T16:56:45.453 | 2010-07-21T16:56:45.453 | null | null | 36 | null |

443 | 2 | null | 36 | 11 | null | There are two aspects to this post hoc ergo propter hoc problem that I like to cover: (i) reverse causality and (ii) endogeneity.

An example of "possible" reverse causality:

Social drinking and earnings - drinkers earn more money according to Bethany L. Peters & Edward Stringham (2006. "No Booze? You May Lose: Why Drinkers Earn More Money Than Nondrinkers," Journal of Labor Research, Transaction Publishers, vol. 27(3), pages 411-421, June). Or do people who earn more money drink more either because they have a greater disposable income or due to stress? This is a great paper to discuss for all sorts of reasons including measurement error, response bias, causality, etc.

An example of "possible" endogeneity:

The Mincer Equation explains log earnings by education, experience and experience squared. There is a long literature on this topic. Labour economists want to estimate the causal relationship of education on earnings but perhaps education is endogenous because "ability" could increase the amount of education an individual has (by lowering the cost of obtaining it) and could lead to an increase in earnings, irrespective of the level of education. A potential solution to this could be an instrumental variable. Angrist and Pischke's book, Mostly Harmless Econometrics, covers this and related topics in great detail and clarity.

Other silly examples that I have no support for include:

- Number of televisions per capita and the mortality rate. So let's send TVs to developing countries. Obviously both are endogenous to something like GDP.

- Number of shark attacks and ice cream sales. Both are endogenous to the temperature perhaps?

I also like to tell the terrible joke about the lunatic and the spider. A lunatic is wandering the corridors of an asylum with a spider he's carrying in the palm of his hand. He sees the doctor and says, "Look Doc, I can talk to spiders. Watch this. Spider, go left!" The spider duly moves to the left. He continues, "Spider, go right." The spider shuffles to the right of his palm. The doctor replies, "Interesting, maybe we should talk about this in the next group session." The lunatic retorts, "That's nothing Doc. Watch this." He pulls off each of the spider's legs one by one and then shouts, "Spider, go left!" The spider lies motionless on his palm and the lunatic turns to the doctor and concludes, "If you pull off a spider's legs he'll go deaf."

| null | CC BY-SA 3.0 | null | 2010-07-21T17:06:32.303 | 2012-03-31T10:45:48.593 | 2012-03-31T10:45:48.593 | 9007 | 215 | null |

445 | 2 | null | 290 | 5 | null | The UCLA resource listed by Stephen Turner (below) is excellent if you just want to apply methods you're already familiar with using Stata.

If you're looking for textbooks which teach you statistics/econometrics while using Stata then these are solid recommendations (but it depends on what level you're looking at):

Introductory Methods

An Introduction to Modern Econometrics Using Stata by Chris Baum

Introduction to Econometrics by Chris Dougherty

Advanced/Specialised Methods

Multilevel and Longitudinal Modeling Using Stata by Rabe-Hesketh and Skrondal

Regression Models for Categorical Dependent Variables Using Stata by Long and Freese

| null | CC BY-SA 3.0 | null | 2010-07-21T17:13:33.117 | 2017-10-12T11:29:26.230 | 2017-10-12T11:29:26.230 | 27422 | 215 | null |

446 | 2 | null | 125 | 18 | null | The Gelman books are all excellent but not necessarily introductory in that they assume that you know some statistics already. Therefore they are an introduction to the Bayesian way of doing statistics rather than to statistics in general. I would still give them the thumbs up, however.

As an introductory statistics/econometrics book which takes a Bayesian perspective, I would recommend Gary Koop's [Bayesian Econometrics](http://en.wikipedia.org/wiki/Special%3aBookSources/0521855713).

| null | CC BY-SA 3.0 | null | 2010-07-21T17:17:45.780 | 2012-07-24T05:47:17.923 | 2012-07-24T05:47:17.923 | 9007 | 215 | null |

447 | 2 | null | 170 | 11 | null | I really like these two books by Daniel McFadden of Berkeley:

- Lecture Notes: Econometric Tools

http://elsa.berkeley.edu/users/mcfadden/e240a_sp98/e240a.html

- Lecture Notes: Econometrics/Statistics http://elsa.berkeley.edu/users/mcfadden/e240b_f01/e240b.html

| null | CC BY-SA 4.0 | null | 2010-07-21T17:26:14.377 | 2020-09-23T05:50:43.943 | 2020-09-23T05:50:43.943 | 17760 | 215 | null |

448 | 2 | null | 396 | 6 | null | Here are my guidelines, based on the most common errors I see (in addition to all the other good points mentioned):

- Use scatter graphs, not line plots, if element order is not relevant.

- When preparing plots that are meant to be compared, use the same scale factor for all of them.

- Even better - find a way to combine the data in a single graph (e.g. boxplots are better than several histograms for comparing a large number of distributions).

- Do not forget to specify units

- Use a legend only if you must - it's generally clearer to label curves directly.

- If you must use a legend, move it inside the plot, in a blank area.

- For line graphs, aim for an aspect ratio which yields lines that are roughly at 45° with the page.

| null | CC BY-SA 4.0 | null | 2010-07-21T17:26:15.483 | 2022-11-23T09:56:13.210 | 2022-11-23T09:56:13.210 | 362671 | 77 | null |

449 | 2 | null | 328 | 1 | null | From more of an economics perspective, I think these two sets of lecture notes are very good:

[http://home.datacomm.ch/paulsoderlind/Courses/OldCourses/FinEcmtAll.pdf](http://home.datacomm.ch/paulsoderlind/Courses/OldCourses/FinEcmtAll.pdf)

[http://personal.lse.ac.uk/mele/files/fin_eco.pdf](http://personal.lse.ac.uk/mele/files/fin_eco.pdf)

The first provides econometric methods for analysing financial data whereas the second provides the financial economics theory behind the models being applied. They're both MSc level texts.

| null | CC BY-SA 2.5 | null | 2010-07-21T17:28:57.803 | 2010-07-21T17:28:57.803 | null | null | 215 | null |

450 | 2 | null | 418 | 8 | null | For us, it is just one example of a robust regression -- I believe it is used by statisticians also, but maybe not so widely because it has some better-known alternatives.

| null | CC BY-SA 2.5 | null | 2010-07-21T17:30:02.077 | 2010-07-21T17:30:02.077 | null | null | null | null |

451 | 2 | null | 26 | 6 | null | When describing a variable we typically summarise it using two measures: a measure of centre and a measure of spread. Common measures of centre include the mean, median and mode. Common measures of spread include the variance and interquartile range.

The variance (represented by the Greek lowercase sigma raised to the power two) is commonly used when the mean is reported. The variance is the average squared deviation of a variable from its mean. The deviation is calculated by subtracting the mean from each observation. The deviations are squared because their sum would otherwise be zero; squaring removes this problem while preserving the relative size of the deviations. The problem with using the variance as a measure of spread is that it is in squared units. For example, if our variable of interest was height measured in inches then the variance would be reported in squared inches, which makes little sense. The standard deviation (represented by the Greek lowercase sigma) is the square root of the variance and returns the measure of spread to the original units. This is much more intuitive and is therefore more popular than the variance.

When using the standard deviation, one has to be careful of outliers: neither the standard deviation nor the mean is a resistant measure, so a single extreme value can distort both. A simple example will illustrate this property. The mean of my terrible cricket batting scores of 13, 14, 16, 23, 26, 28, 33, 39, and 61 is 28.11. If we consider 61 to be an outlier and delete it, the mean would be 24.
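The batting-score example can be checked with a short sketch like the one below. It uses the population formulas (`pvariance`, `pstdev`) to match the "average squared deviation" description; a sample variance would divide by n - 1 instead. The median is printed only as a contrast, since it barely moves.

```python
import statistics

scores = [13, 14, 16, 23, 26, 28, 33, 39, 61]
without_outlier = [s for s in scores if s != 61]

for label, data in (("all scores", scores), ("without 61", without_outlier)):
    mean = statistics.mean(data)
    variance = statistics.pvariance(data)  # average squared deviation from the mean
    sd = statistics.pstdev(data)           # square root of the variance, in runs
    median = statistics.median(data)       # a resistant measure of centre
    print(f"{label}: mean={mean:.2f} variance={variance:.2f} sd={sd:.2f} median={median}")
```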

| null | CC BY-SA 3.0 | null | 2010-07-21T17:38:08.583 | 2013-04-20T01:19:50.947 | 2013-04-20T01:19:50.947 | 24560 | 215 | null |

452 | 1 | 711 | null | 25 | 67691 | It has been suggested by Angrist and Pischke that robust (i.e. robust to heteroskedasticity or unequal variances) standard errors be reported as a matter of course, rather than testing for heteroskedasticity first. Two questions:

- What is the impact on the standard errors of doing so when there is homoskedasticity?

- Does anybody actually do this in their work?

| Always Report Robust (White) Standard Errors? | CC BY-SA 2.5 | null | 2010-07-21T17:45:01.570 | 2019-12-27T02:55:23.200 | 2017-02-13T10:08:10.600 | 28666 | 215 | [

"regression",

"standard-error",

"heteroscedasticity",

"robust-standard-error"

]

|

453 | 2 | null | 421 | 9 | null | [Statistics in Plain English](http://rads.stackoverflow.com/amzn/click/041587291X) is pretty good.

4.5 on Amazon, 11 reviews.

Explains ANOVA pretty well too.

| null | CC BY-SA 2.5 | null | 2010-07-21T17:54:05.170 | 2010-07-21T17:54:05.170 | null | null | 74 | null |

454 | 2 | null | 396 | 1 | null | It depends on the way in which the plots will be discussed.

For instance, if I'm sending out plots for a group meeting that will be done with callers from different locations, I prefer putting them together in Powerpoint as opposed to Excel, so it's easier to flip around.

For one-on-one technical calls, I'll put something in Excel so that the client is able to move a plot aside and view the raw data. Or I can enter p-values into cells alongside regression coefficients, for example.

Keep in mind: plots are cheap, especially for a slide show, or for emailing to a group. I'd rather make 10 clear plots that we can flip through than 5 plots where I try to put distinct cohorts (e.g. "males and females") on the same graph.

| null | CC BY-SA 2.5 | null | 2010-07-21T18:11:59.113 | 2010-07-21T18:11:59.113 | null | null | 62 | null |

455 | 2 | null | 423 | 47 | null | All right, I think this one is hilarious, but let's see if it passes the Statistical Analysis Miller test.

## Fermirotica

[xkcd: Fermirotica](http://xkcd.com/563/)

>

I love how Google handles dimensional analysis. Stats are ballpark and vary wildly by time of day and whether your mom is in town.

| null | CC BY-SA 2.5 | null | 2010-07-21T18:18:13.170 | 2010-07-23T15:27:06.463 | 2017-03-09T17:30:36.220 | -1 | 13 | null |

456 | 2 | null | 277 | 19 | null | As the [Encyclopedia of GIS](http://books.google.com/books?id=6q2lOfLnwkAC&dq=Encyclopedia+of+GIS) states, the conditional autoregressive model (CAR) is appropriate for situations with first-order dependency or relatively local spatial autocorrelation, and the simultaneous autoregressive model (SAR) is more suitable where there is second-order dependency or a more global spatial autocorrelation.

This is made clear by the fact that CAR obeys the spatial version of the [Markov property](http://en.wikipedia.org/wiki/Markov_property), namely it assumes that the state of a particular area is influenced by its neighbors and not by neighbors of neighbors, etc. (i.e. it is spatially “memoryless”, instead of temporally), whereas SAR does not assume this. This is due to the different ways in which they specify their variance-covariance matrices. So, when the spatial Markov property holds, CAR provides a simpler way to model autocorrelated geo-referenced areal data.

See [GIS and Spatial Data Analysis: Converging Perspectives](http://www.geog.ucsb.edu/~good/papers/387.pdf) for more details.

| null | CC BY-SA 2.5 | null | 2010-07-21T18:26:02.873 | 2010-07-27T19:11:57.330 | 2010-07-27T19:11:57.330 | 39 | 39 | null |

457 | 2 | null | 359 | 5 | null | I do not know the literature in the area well enough to offer a direct response. However, it seems to me that if the three tests differ then that is an indication that you need further research/data collection in order to definitively answer your question.

You may also want to look at [this](http://scholar.google.com/scholar?q=small%20sample%20properties%20of%20wald%20likelihood%20ratio&um=1&ie=UTF-8&sa=N&hl=en&tab=ws) Google Scholar search

Update in response to your comment:

If collecting additional data is not possible then there is one workaround. Do a simulation which mirrors your data structure, sample size and your proposed model. You can set the parameters to some pre-specified values. Estimate the model using the data generated and then check which one of the three tests points you to the right model. Such a simulation would offer some guidance as to which test to use for your real data. Does that make sense?

| null | CC BY-SA 2.5 | null | 2010-07-21T18:28:27.423 | 2010-07-22T01:21:24.837 | 2010-07-22T01:21:24.837 | null | null | null |

458 | 2 | null | 194 | 3 | null | One way to test patterns in stock market data is discussed [here](http://www.evidencebasedta.com/). A similar approach would be to randomise the stock market data and identify your patterns of interest, which would obviously be devoid of any meaning due to the deliberate randomising process. These randomly generated patterns and their returns would form your null hypothesis. By statistically comparing the pattern returns in the actual data with the returns from the null hypothesis randomised data patterns you may be able to distinguish patterns which actually have some meaning or predictive value.
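A rough sketch of that randomisation idea follows. The pattern ("two up days in a row"), the simulated returns, and the function names are all hypothetical choices made for illustration; they are not taken from the linked site.

```python
import random


def mean_return_after_pattern(returns):
    """Mean next-day return following the (hypothetical) pattern 'two up days in a row'."""
    following = [
        returns[i + 2]
        for i in range(len(returns) - 2)
        if returns[i] > 0 and returns[i + 1] > 0
    ]
    return sum(following) / len(following) if following else 0.0


def randomisation_p_value(returns, n_shuffles, rng):
    """Share of shuffled series whose pattern return is at least the observed one."""
    observed = mean_return_after_pattern(returns)
    shuffled = list(returns)
    at_least_as_large = 0
    for _ in range(n_shuffles):
        rng.shuffle(shuffled)  # destroys any real ordering in the series
        if mean_return_after_pattern(shuffled) >= observed:
            at_least_as_large += 1
    # The +1 terms count the observed series itself, so the p-value is never zero.
    return (at_least_as_large + 1) / (n_shuffles + 1)


rng = random.Random(0)
simulated_returns = [rng.gauss(0.0, 0.01) for _ in range(500)]  # not real market data
p_value = randomisation_p_value(simulated_returns, 1000, rng)
print(f"randomisation p-value: {p_value:.3f}")
```

A small p-value would suggest the pattern's return in the actual ordering is unusual relative to the shuffled (null) series; with many candidate patterns, some correction for multiple testing would also be needed.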

| null | CC BY-SA 2.5 | null | 2010-07-21T18:41:12.033 | 2010-07-21T18:41:12.033 | null | null | 226 | null |

459 | 1 | 479 | null | 9 | 1075 | We're plotting time-series metrics in the context of network/server operations. The data has a 5-minute sample rate, and consists of things like CPU utilization, error rate, etc.

We're adding a horizontal "threshold" line to the graphs, to visually indicate a value threshold above which people should worry/take notice. For example, in the CPU utilization example, perhaps the "worry" threshold is 75%.

My team has some internal debate over what color this line should be:

- Something like a bright red that clearly stands out from the background grid and data lines, and indicates this is a warning condition

- Something more subtle and definitely NOT red, since the "ink" for the line doesn't represent any actual data, and thus attention shouldn't be drawn to it unnecessarily.

Would appreciate guidance / best practices...

| Good line color for "threshold" line in a time-series graph? | CC BY-SA 3.0 | null | 2010-07-21T19:59:48.020 | 2013-05-15T04:14:18.790 | 2013-05-15T04:14:18.790 | 805 | 259 | [

"time-series",

"data-visualization"

]

|

460 | 2 | null | 213 | 7 | null | For moderate dimensions, like 3, some sort of kernel cross-validation technique as suggested elsewhere seems reasonable and is the best I can come up with.

For higher dimensions, I'm not sure that the problem is solvable; it lands pretty squarely into 'curse-of-dimensionality' territory. The issue is that distance functions tend to converge to very large values very quickly as you increase dimensionality, including distances derived from distributions. If you're defining an outlier as "a point with a comparatively large distance function relative to the others", and all your distance functions are beginning to converge because you're in a high-dimensional space, well, you're in trouble.

Without some sort of distributional assumption that will let you turn it into a probabilistic classification problem, or at least some rotation that lets you separate your space into "noise dimensions" and "informative dimensions", I think that the geometry of high-dimensional spaces is going to prohibit any easy -- or at least robust -- identification of outliers.
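The distance-concentration effect described above can be seen in a small simulation. This is only a sketch: the uniform data on the unit cube, the sample size, and the "relative contrast" summary are illustrative assumptions.

```python
import math
import random


def relative_contrast(dim, n_points, rng):
    """(max - min) / min of Euclidean distances from one query point to the others."""
    query = [rng.random() for _ in range(dim)]
    dists = []
    for _ in range(n_points):
        point = [rng.random() for _ in range(dim)]
        dists.append(math.dist(query, point))
    return (max(dists) - min(dists)) / min(dists)


rng = random.Random(0)
contrasts = {dim: relative_contrast(dim, 500, rng) for dim in (2, 10, 100, 1000)}
for dim, contrast in contrasts.items():
    print(f"dim={dim:5d}  relative contrast={contrast:.3f}")
```

As the dimension grows, the farthest point is barely farther away than the nearest one, which is why "a comparatively large distance" stops being a useful definition of an outlier.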

| null | CC BY-SA 2.5 | null | 2010-07-21T20:03:40.367 | 2010-07-21T20:03:40.367 | null | null | 61 | null |

461 | 2 | null | 459 | 4 | null | To me, whether or not the line represents actual data seems irrelevant. What's the point of the plot? If it's so that somebody will do something when utilization crosses a threshold, the line marking the threshold had better be very visible. If the point of the plot is to give an overview of utilization over time, then why include the line at all? Just put the major gridlines of your plot at intervals that will coincide with your threshold (25% in your example), and let the reader figure it out.

... y'all been reading too much Tufte.

| null | CC BY-SA 2.5 | null | 2010-07-21T20:07:32.057 | 2010-07-21T20:07:32.057 | null | null | 71 | null |

462 | 2 | null | 396 | 9 | null | In the physics field there is a rule that the whole paper/report should be understandable from just a quick look at the plots. So I would mainly advise that they should be self-explanatory.

This also implies that you must always check whether your audience is familiar with a given kind of plot -- I once made the big mistake of assuming that every scientist knows what boxplots are, and then wasted an hour explaining them.

| null | CC BY-SA 3.0 | null | 2010-07-21T20:11:02.580 | 2016-09-17T13:45:15.090 | 2016-09-17T13:45:15.090 | 22047 | null | null |

463 | 2 | null | 363 | 7 | null | In addition to "The History of Statistics" suggested by Graham, another Stigler book worth reading is

[Statistics on the Table: The History of Statistical Concepts and Methods](http://rads.stackoverflow.com/amzn/click/0674009797)

| null | CC BY-SA 2.5 | null | 2010-07-21T20:29:12.783 | 2010-07-21T20:29:12.783 | null | null | 90 | null |

464 | 2 | null | 459 | 3 | null | If this is about your "Qnotifier" I think that you should plot the threshold line in some darker gray so it is distinguishable but not disturbing. Then I would color the part of the plot that reaches over the threshold in some alarming hue, like red.

| null | CC BY-SA 2.5 | null | 2010-07-21T20:34:43.147 | 2010-07-21T20:34:43.147 | null | null | null | null |

465 | 2 | null | 452 | 3 | null | I thought that the White Standard Error and the Standard Error computed in the "normal" way (eg, Hessian and/or OPG in the case of maximum likelihood) were asymptotically equivalent in the case of homoskedasticity?

Only if there is heteroskedasticity will the "normal" standard error be inappropriate, which means that the White Standard Error is appropriate with or without heteroskedasticity, that is, even when your model is homoskedastic.

I can't really talk about 2, but I don't see why one wouldn't want to calculate the White SE and include it in the results.
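As a sanity check of the first point, here is a minimal simulation sketch for a simple regression with one regressor. It uses the basic HC0 form of the White estimator; the data-generating process and sample size are arbitrary assumptions.

```python
import math
import random


def slope_standard_errors(x, y):
    """Return (classical SE, White HC0 SE) for the slope of a simple OLS regression."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    resid = [yi - intercept - slope * xi for xi, yi in zip(x, y)]
    # Classical: assumes one common error variance for every observation.
    classical = math.sqrt(sum(e * e for e in resid) / (n - 2) / sxx)
    # White (HC0): each squared residual is weighted by (x_i - x_bar)^2.
    white = math.sqrt(sum((xi - x_bar) ** 2 * e * e for xi, e in zip(x, resid))) / sxx
    return classical, white


rng = random.Random(0)
x = [rng.uniform(0, 10) for _ in range(20_000)]
y_homo = [1 + 2 * xi + rng.gauss(0, 3) for xi in x]            # constant error variance
y_hetero = [1 + 2 * xi + rng.gauss(0, 0.6 * xi) for xi in x]   # error sd grows with x

homo_classical, homo_white = slope_standard_errors(x, y_homo)
hetero_classical, hetero_white = slope_standard_errors(x, y_hetero)
print(f"homoskedastic:   classical={homo_classical:.4f}  white={homo_white:.4f}")
print(f"heteroskedastic: classical={hetero_classical:.4f}  white={hetero_white:.4f}")
```

In a large sample like this the two standard errors should nearly coincide under homoskedasticity and diverge under heteroskedasticity; in small samples the robust version can be noticeably noisier, which is the usual argument against using it blindly.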

| null | CC BY-SA 2.5 | null | 2010-07-21T20:45:08.177 | 2010-07-21T20:45:08.177 | null | null | 90 | null |

466 | 2 | null | 170 | 12 | null | Not Statistics specific, but a good resource is: [http://www.reddit.com/r/mathbooks](http://www.reddit.com/r/mathbooks)

Also, George Cain at Georgia Tech maintains a list of freely available maths texts that includes some statistical texts. [http://people.math.gatech.edu/~cain/textbooks/onlinebooks.html](http://people.math.gatech.edu/~cain/textbooks/onlinebooks.html)

| null | CC BY-SA 2.5 | null | 2010-07-21T20:53:54.800 | 2010-07-21T20:53:54.800 | null | null | 115 | null |

467 | 2 | null | 7 | 7 | null | [http://www.reddit.com/r/datasets](http://www.reddit.com/r/datasets) and also, [http://www.reddit.com/r/opendata](http://www.reddit.com/r/opendata) both contain a constantly growing list of pointers to various datasets.

| null | CC BY-SA 2.5 | null | 2010-07-21T21:04:03.950 | 2010-07-21T21:04:03.950 | null | null | 115 | null |

468 | 2 | null | 124 | 4 | null | If you're coming from the programming side, one option is to use the [Natural Language Toolkit](http://www.nltk.org) (NLTK) for Python. There's an O'Reilly book, [available freely](http://www.nltk.org/book), which might be a less dense and more practical introduction to building classifiers for documents among other things.

If you're interested in beefing up on the statistical side, Roger Levy's book in progress, [Probabilistic Models in the Study of Language](http://idiom.ucsd.edu/~rlevy/textbook/text.html), might not be bad to peruse. It's written for cogsci/compsci grad students starting out with statistical NLP techniques.

| null | CC BY-SA 2.5 | null | 2010-07-21T22:05:04.773 | 2010-07-21T22:05:04.773 | null | null | 251 | null |

470 | 2 | null | 170 | 63 | null | The Elements of Statistical Learning by Hastie, Tibshirani, and Friedman is a standard text for statistics and data mining, and is now free:

[https://web.stanford.edu/~hastie/ElemStatLearn/](https://web.stanford.edu/~hastie/ElemStatLearn/)

Also available [here](https://rads.stackoverflow.com/amzn/click/0387848576).

| null | CC BY-SA 3.0 | null | 2010-07-21T22:35:38.850 | 2017-10-15T12:25:14.017 | 2017-10-15T12:25:14.017 | 35740 | 36 | null |

471 | 2 | null | 363 | 22 | null | [Darrell Huff -- How to Lie with Statistics](http://rads.stackoverflow.com/amzn/click/0393310728)

| null | CC BY-SA 2.5 | null | 2010-07-21T22:57:58.260 | 2011-02-20T02:34:52.907 | 2011-02-20T02:34:52.907 | 159 | 168 | null |

472 | 2 | null | 170 | 11 | null | For getting into stochastic processes and SDEs, Tom Kurtz's [lecture notes](http://www.math.wisc.edu/~kurtz/m735.htm) are hard to beat. It starts with a decent review of probability and some convergence results, and then dives right into continuous time stochastic processes in fairly clear, comprehensible language. In general it's one of the best books on the topic -- free or otherwise -- I've found.

| null | CC BY-SA 2.5 | null | 2010-07-21T23:00:34.700 | 2010-07-21T23:00:34.700 | null | null | 61 | null |

473 | 2 | null | 369 | 3 | null | The traditional solution to this problem is to use the [vector representation](http://dx.doi.org/10.1137/S0036144598347035) for the news stories and then cluster the vectors. The vectors are arrays where each entry represents a word or word class. The value associated with each word will be the [tf-idf](http://en.wikipedia.org/wiki/TF_IDF) weight. This value goes up the more frequent the word is in the document and down the more frequent the word is in the whole collection of documents.

You may think of the titles as the documents, but sticking to just the title for news stories may be a bit risky for clustering similar stories. The problem is that by using word counts you are discarding all information on the order of the words. Longer texts compensate for that loss of information by distinguishing documents by the vocabulary used (articles mentioning finance, money, ... are closer to each other than those mentioning ergodic, Poincare).

If you want to stick to titles, one idea is to think of word pairs as the words you use in the vector representation. So for the title "The eagle has landed", you would think of "the eagle", "eagle has" and "has landed" as the “words.”

To discover when a cluster has become much bigger or different from the others you will need to develop a decision procedure.
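The word-pair representation and tf-idf weighting described above can be sketched in a few lines. The titles are made up, and this uses one common tf-idf variant (term frequency times log inverse document frequency); other weightings exist.

```python
import math
from collections import Counter


def bigrams(title):
    """Split a title into lowercase word pairs, e.g. 'the eagle', 'eagle has'."""
    words = title.lower().split()
    return [" ".join(pair) for pair in zip(words, words[1:])]


def tfidf_vectors(docs):
    """Map each tokenized document to a {term: tf-idf weight} dictionary."""
    n_docs = len(docs)
    doc_freq = Counter(term for doc in docs for term in set(doc))
    vectors = []
    for doc in docs:
        counts = Counter(doc)
        vectors.append({
            term: (count / len(doc)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse vectors stored as dictionaries."""
    dot = sum(weight * v.get(term, 0.0) for term, weight in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


titles = [  # made-up titles, for illustration only
    "The eagle has landed",
    "The eagle has landed on the moon",
    "Markets fall as bank shares slide",
]
vectors = tfidf_vectors([bigrams(t) for t in titles])
print(cosine(vectors[0], vectors[1]), cosine(vectors[0], vectors[2]))
```

Any standard clustering method could then be run on these vectors, using cosine similarity (or one minus it) as the measure of closeness.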

| null | CC BY-SA 2.5 | null | 2010-07-22T02:37:18.820 | 2010-07-22T02:37:18.820 | null | null | 260 | null |

474 | 2 | null | 30 | 8 | null | For testing the numbers produced by random number generators, the [Diehard tests](http://en.wikipedia.org/wiki/Diehard_tests) are a practical approach. But those tests seem somewhat arbitrary, and one may be left wondering whether more should be included or whether there is any way to really check the randomness.

The best candidate for a definition of a random sequence seems to be [Martin-Löf randomness](http://en.wikipedia.org/wiki/Algorithmically_random_sequence). The main idea of this kind of randomness, beautifully developed in [Knuth, section 3.5](http://en.wikipedia.org/wiki/The_Art_of_Computer_Programming), is to test for uniformity across all types of subsequences of the sequence of random numbers. Getting the definition of "all types of subsequences" right turned out to be really hard, even when one uses notions of computability.

The Diehard tests cover just some of the possible subsequences one may consider; failing them would rule out Martin-Löf randomness.

| null | CC BY-SA 2.5 | null | 2010-07-22T03:21:18.913 | 2010-07-22T03:21:18.913 | null | null | 260 | null |

475 | 2 | null | 224 | 4 | null | There is also [Gephi](http://gephi.org/) for plotting social networks.

(p.s: Here is how to [connect it with R](http://www.r-bloggers.com/data-preparation-for-social-network-analysis-using-r-and-gephi/))

| null | CC BY-SA 2.5 | null | 2010-07-22T03:55:48.147 | 2010-07-22T03:55:48.147 | null | null | 253 | null |

476 | 2 | null | 359 | 8 | null | I won't give a definitive answer in terms of ranking the three. Build 95% CIs around your parameters based on each, and if they're radically different, then your first step should be to dig deeper. Transform your data (though the LR will be invariant), regularize your likelihood, etc. In a pinch though, I would probably opt for the LR test and associated CI. A rough argument follows.

The LR is invariant under the choice of parametrization (e.g. T versus logit(T)). The Wald statistic assumes normality of (T - T0)/SE(T). If this fails, your CI is bad. The nice thing about the LR is that you don't need to find a transform f(T) to satisfy normality. The 95% CI based on T will be the same. Also, if your likelihood isn't quadratic, the Wald 95% CI, which is symmetric, can be kooky since it may prefer values with lower likelihood to those with higher likelihood.
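
To make the contrast concrete, here is a small sketch for a binomial proportion, which is an example I am choosing for illustration (it is not from the question). The function names and the data (2 successes in 20 trials) are hypothetical; the constants 1.959964 and 3.841459 are the usual 95% normal and chi-square(1) critical values.

```python
import math

def loglik(p, x, n):
    """Binomial log-likelihood (up to a constant) for x successes in n trials."""
    return x * math.log(p) + (n - x) * math.log(1 - p)

def wald_ci(x, n, z=1.959964):
    """Symmetric Wald interval: estimate +/- z * standard error."""
    p_hat = x / n
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / n)
    return p_hat - half_width, p_hat + half_width

def lr_ci(x, n, crit=3.841459):
    """Likelihood-ratio interval: all p with 2 * (l(p_hat) - l(p)) <= crit.

    Assumes 0 < x < n so that the MLE lies strictly inside (0, 1).
    """
    p_hat = x / n

    def excess(p):
        return 2 * (loglik(p_hat, x, n) - loglik(p, x, n)) - crit

    def bisect(inside, outside):
        # `inside` has excess < 0 and `outside` has excess > 0.
        for _ in range(200):
            mid = (inside + outside) / 2
            if excess(mid) < 0:
                inside = mid
            else:
                outside = mid
        return (inside + outside) / 2

    return bisect(p_hat, 1e-12), bisect(p_hat, 1 - 1e-12)

wald_low, wald_high = wald_ci(2, 20)
lr_low, lr_high = lr_ci(2, 20)
```

With these numbers the Wald interval dips below zero, while the LR interval stays inside (0, 1) and is visibly asymmetric around the estimate. Because the LR interval is defined through likelihood values only, mapping its endpoints through logit gives the LR interval on the logit scale.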

Another way to think about the LR is that it's using more information, loosely speaking, from the likelihood function. The Wald is based on the MLE and the curvature of the likelihood at the MLE. The Score is based on the slope at null and curvature at null. The LR evaluates the likelihood under the null, and the likelihood under the union of the null and alternative, and combines the two. If you're forced to pick one, this may be intuitively satisfying for picking the LR.

Keep in mind that there are other reasons, such as convenience or computational, to opt for the Wald or Score. The Wald is the simplest and, given a multivariate parameter, if you're testing for setting many individual ones to 0, there are convenient ways to approximate the likelihood. Or if you want to add a variable at a time from some set, you may not want to maximize the likelihood for each new model, and the implementation of Score tests offers some convenience here. The Wald and Score become attractive as your models and likelihood become unattractive. (But I don't think this is what you were questioning, since you have all three available ...)

| null | CC BY-SA 2.5 | null | 2010-07-22T04:34:32.110 | 2010-07-22T04:34:32.110 | null | null | 251 | null |

477 | 2 | null | 223 | 1 | null | I assume your friend prefers something that's biostatistics oriented. Glantz's [Primer of Biostatistics](http://www.powells.com/biblio/62-9780071435093-1) is a small book, an easy and quick read, and tends to get rave reviews from a similar audience. If an online reference works, I like Gerard Dallal's [Handbook of Statistical Practice](https://web.archive.org/web/20100212183607/http://www.tufts.edu/%7Egdallal/LHSP.HTM), which may do the trick if he's just refreshing previous knowledge.

| null | CC BY-SA 4.0 | null | 2010-07-22T04:58:50.427 | 2022-11-23T12:47:35.583 | 2022-11-23T12:47:35.583 | 362671 | 251 | null |

478 | 2 | null | 421 | 17 | null | I'm going to assume some basic statistics knowledge and recommend:

- The Statistical Sleuth (Ramsey, Schafer), which contains a good number of mini case studies as it covers the basic statistical tools for data analysis.

- A First Course in Multivariate Statistics (Flury) which covers the essential statistics required for data mining and the like.

| null | CC BY-SA 3.0 | null | 2010-07-22T05:13:17.627 | 2012-08-16T01:32:09.280 | 2012-08-16T01:32:09.280 | 13280 | 251 | null |

479 | 2 | null | 459 | 10 | null | If it does not break your style guide, I would rather color the background of the plots red/(yellow/)green than just plot a line. In my imagination this should make it pretty clear to a user that values are fine on green and to be checked on red. Just my 5¢.

| null | CC BY-SA 2.5 | null | 2010-07-22T07:28:42.960 | 2010-07-22T07:28:42.960 | null | null | 128 | null |

480 | 1 | 635 | null | 20 | 4413 | I am not an expert on random forests, but I understand that the key issue with random forests is the (random) tree generation. Can you explain to me how the trees are generated? (i.e., what distribution is used for tree generation?)

Thanks in advance !

| How does random forest generate the random forest | CC BY-SA 2.5 | null | 2010-07-22T08:58:36.800 | 2022-06-18T13:42:20.083 | 2010-07-26T16:57:59.560 | 217 | 223 | [

"machine-learning",

"r",

"algorithms",

"cart",

"random-forest"

]

|

481 | 1 | 482 | null | 11 | 5413 | Another question about time series from me.

I have a dataset which gives daily records of violent incidents in a psychiatric hospital over three years. With the help from my previous question I have been fiddling with it and am a bit happier about it now.

The thing I have now is that the daily series is very noisy. It fluctuates wildly, up and down, from 0 at times up to 20. Using loess plots and the forecast package (which I can highly recommend for novices like me) I just get a totally flat line, with massive confidence intervals from the forecast.

However, when aggregated weekly or monthly, the data make a lot more sense. They sweep down from the start of the series, and then increase again in the middle. Loess plotting and the forecast package both produce something that looks a lot more meaningful.

It does feel a bit like cheating though. Am I just preferring the aggregated versions because they look nice with no real validity to it?

Or would it be better to compute a moving average and use that as the basis? I'm afraid I don't understand the theory behind all this well enough to be confident about what is acceptable.

| Is it valid to aggregate a time series to make it look more meaningful? | CC BY-SA 2.5 | null | 2010-07-22T09:17:27.490 | 2010-07-23T08:15:23.430 | null | null | 199 | [

"time-series",

"forecasting"

]

|

482 | 2 | null | 481 | 8 | null | This totally depends on your time series and what effect you want to discover, prove, etc.

An important question here is what kind of periods you have in your data. Compute a spectrum of your data and see which frequencies are common in it.

Anyway, you are not lying when you decide to display aggregated values. When you are looking for effects that occur over weeks (like more violence in summer when the weather is hot), it is the right thing to do.
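
For what it is worth, the aggregation itself is a one-liner. The sketch below sums consecutive blocks of daily counts; the function name `aggregate` and the choice to drop an incomplete final block are my own assumptions, not something prescribed by the question.

```python
def aggregate(daily, period=7):
    """Sum consecutive blocks of `period` daily counts.

    An incomplete block at the end of the series is dropped so that
    every aggregated value covers the same number of days.
    """
    n_full = len(daily) // period
    return [sum(daily[i * period:(i + 1) * period]) for i in range(n_full)]

# Hypothetical daily incident counts, 15 days long.
weekly = aggregate(list(range(15)), period=7)
```

Using `period=7` gives weekly totals; a larger period (say 28 or 30) gives a rough monthly view. Comparing the two is a cheap way to see at which time scale the structure in the series shows up.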

Maybe you can also take a look at the Hilbert Huang Transform. This will give you Intrinsic Mode Functions that are very handy for visual analyses.

| null | CC BY-SA 2.5 | null | 2010-07-22T09:24:52.503 | 2010-07-22T09:24:52.503 | null | null | 190 | null |

483 | 2 | null | 480 | 19 | null | The main idea is the bagging procedure, not making trees random. In detail, each tree is built on a sample of objects drawn with replacement from the original set; thus each tree has some objects that it hasn't seen, which is what makes the whole ensemble more heterogeneous and thus better at generalizing.

Furthermore, the trees are weakened in that at each split only M (or `mtry`) randomly selected attributes are considered; M is usually the square root of the number of attributes in the set. This makes the trees overfit less, which matters because they are not pruned. You can find more details [here](https://www.stat.berkeley.edu/%7Ebreiman/RandomForests/cc_home.htm).
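
The two sources of randomness described above can be sketched in a few lines. This is only an illustration of the sampling steps, not of tree growing itself; the function names and the sizes (1000 objects, 16 attributes) are made up for the example.

```python
import math
import random

def bootstrap_sample(n_objects, rng):
    """Draw n_objects indices with replacement; also report the unseen ones."""
    in_bag = [rng.randrange(n_objects) for _ in range(n_objects)]
    seen = set(in_bag)
    out_of_bag = [i for i in range(n_objects) if i not in seen]
    return in_bag, out_of_bag

def candidate_attributes(n_attributes, rng, mtry=None):
    """Pick the attributes considered at one split (default: about sqrt of all)."""
    if mtry is None:
        mtry = max(1, int(math.sqrt(n_attributes)))
    return rng.sample(range(n_attributes), mtry)

rng = random.Random(0)
in_bag, out_of_bag = bootstrap_sample(1000, rng)   # done once per tree
split_candidates = candidate_attributes(16, rng)   # done again at every split
```

Roughly a third of the objects end up out of bag for any given tree, which is what lets each tree be evaluated on data it has not seen.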

On the other hand, there is a variant of RF called Extremely Randomized Trees (Extra-Trees), in which trees are made in a random way (there is no optimization of splits) -- consult, I think, [this reference](https://doi.org/10.1007/s10994-006-6226-1).

| null | CC BY-SA 4.0 | null | 2010-07-22T09:53:52.400 | 2022-06-18T13:42:20.083 | 2022-06-18T13:42:20.083 | 361019 | null | null |

485 | 1 | null | null | 57 | 9120 | A question previously sought recommendations for [textbooks on mathematical statistics](https://stats.stackexchange.com/questions/414/intro-to-statistics-for-mathematicians).

Does anyone know of any good online video lectures on mathematical statistics?

The closest that I've found are:

- Machine Learning

- Econometrics

UPDATE: A number of the suggestions mentioned below are good statistics-101 type videos.

However, I'm specifically wondering whether there are any videos that provide a rigorous mathematical presentation of statistics.

i.e., videos that might accompany a course that uses a textbook mentioned in this [discussion on mathoverflow](https://mathoverflow.net/questions/31655/statistics-for-mathematicians).

| Mathematical Statistics Videos | CC BY-SA 2.5 | null | 2010-07-22T10:08:10.067 | 2022-12-31T07:32:40.610 | 2017-04-13T12:58:32.177 | -1 | 183 | [

"mathematical-statistics",

"references"

]

|

486 | 1 | 720 | null | 46 | 91129 | I have calculated AIC and AICc to compare two general linear mixed models; the AICs are positive, with model 1 having a lower AIC than model 2. However, the values for AICc are both negative (model 1 is still < model 2). Is it valid to use and compare negative AICc values?

| Negative values for AICc (corrected Akaike Information Criterion) | CC BY-SA 2.5 | null | 2010-07-22T10:11:15.210 | 2016-09-01T12:56:57.487 | 2011-01-30T18:09:40.193 | 449 | 266 | [

"mixed-model",

"model-selection",

"aic"

]

|

487 | 2 | null | 363 | 14 | null | Not a book, but I recently discovered an article by Jacob Cohen in American Psychologist entitled "Things I have learned (so far)." It's available as a pdf [here](https://pdfs.semanticscholar.org/fa77/0a7fb7c45a59abbc4c2bc7d174fa51e5d946.pdf).

| null | CC BY-SA 4.0 | null | 2010-07-22T10:15:28.313 | 2019-02-07T13:12:24.100 | 2019-02-07T13:12:24.100 | 77222 | 266 | null |

488 | 2 | null | 421 | 14 | null | A lot of Social Science / Psychology students with minimal mathematical background like Andy Field's book: [Discovering Statistics Using SPSS](http://rads.stackoverflow.com/amzn/click/0761944524). He also has a website that shares a [lot of material](http://www.statisticshell.com/html/woodofsuicides.html).

| null | CC BY-SA 3.0 | null | 2010-07-22T10:16:18.263 | 2013-09-28T00:27:23.313 | 2013-09-28T00:27:23.313 | 30807 | 183 | null |

489 | 2 | null | 396 | 3 | null | It also depends on where you want to publish your plots. You'll save yourself a lot of trouble by consulting the guide for authors before making any plots for a journal.

Also save the plots in a format that is easy to modify or save the code you have used to create them. Chances are that you need to make corrections.

| null | CC BY-SA 2.5 | null | 2010-07-22T11:05:55.197 | 2010-07-22T11:05:55.197 | null | null | 214 | null |

490 | 1 | 606 | null | 30 | 17314 | What variable/feature selection procedures do you prefer for binary classification when there are many more variables/features than observations in the learning set? The aim here is to discuss which feature selection procedure best reduces the classification error.

We can fix notations for consistency: for $i \in \{0, 1\}$, let $\{x_1^i,\dots, x_{n_i}^i\}$ be the learning set of observations from group $i$. So $n_0 + n_1 = n$ is the size of the learning set. We set $p$ to be the number of features (i.e. the dimension of the feature space). Let $x[i]$ denote the $i$-th coordinate of $x \in \mathbb{R}^p$.

Please give full references if you cannot give the details.

EDIT (updated continuously): Procedures proposed in the answers below

- Greedy forward selection

- Backward elimination

- Metropolis scanning / MCMC

- penalized logistic regression

As this is community wiki there can be more discussion and update

I have one remark: in a certain sense, you all give a procedure that permits ordering of variables but not variable selection (you are quite evasive on how to select the number of features; I guess you all use cross validation?). Can you improve the answers in this direction? (As this is community wiki, you don't need to be the answer writer to add information about how to select the number of variables. I have opened a question in this direction here: [Cross validation in very high dimension (to select the number of used variables in very high dimensional classification)](https://stats.stackexchange.com/questions/880/cross-validation-to-select-the-number-of-used-variables-in-very-high-dimensional).)

| Variable selection procedure for binary classification | CC BY-SA 3.0 | null | 2010-07-22T11:10:29.417 | 2016-04-05T12:33:34.737 | 2017-04-13T12:44:33.237 | -1 | 223 | [

"machine-learning",

"classification",

"multiple-comparisons",

"multivariate-analysis",

"feature-selection"

]

|

491 | 2 | null | 421 | 3 | null | I recently found [Even You Can Learn Statistics](http://rads.stackoverflow.com/amzn/click/0131467573) to be pretty useful.

| null | CC BY-SA 2.5 | null | 2010-07-22T11:19:10.587 | 2010-07-22T11:19:10.587 | null | null | 268 | null |

492 | 1 | null | null | 11 | 1214 | I am proposing to try and find a trend in some very noisy long term data. The data is basically weekly measurements of something which moved about 5mm over a period of about 8 months. The data is to 1mm accuracy and is very noisy, regularly changing +/-1 or 2mm in a week. We only have the data to the nearest mm.

We plan to use some basic signal processing with a fast Fourier transform to separate out the noise from the raw data. The basic assumption is that if we mirror our data set and add it to the end of our existing data set, we can create a full wavelength of the data, and therefore our data will show up in a fast Fourier transform and we can hopefully then separate it out.

Given that this sounds a little dubious to me, is this a method worth pursuing or is the method of mirroring and appending our data set somehow fundamentally flawed? We are looking at other approaches such as using a low pass filter as well.

| Dubious use of signal processing principles to identify a trend | CC BY-SA 2.5 | null | 2010-07-22T11:31:16.777 | 2015-04-23T14:38:18.937 | 2012-06-07T20:31:39.863 | 919 | 210 | [

"time-series",

"data-mining",

"signal-processing",

"trend"

]

|

493 | 2 | null | 170 | 7 | null | Statsoft's [Electronic Statistics Handbook](http://www.statsoft.com/textbook/) ('The only Internet Resource about Statistics Recommended by Encyclopedia Britannica') is worth checking out.

| null | CC BY-SA 2.5 | null | 2010-07-22T11:33:47.037 | 2010-07-22T11:33:47.037 | null | null | 268 | null |

494 | 2 | null | 486 | 14 | null | Generally, it is assumed that AIC (and so AICc) is defined up to an additive constant, so whether it is negative or positive is not meaningful at all. So the answer is yes, it is valid.

| null | CC BY-SA 2.5 | null | 2010-07-22T11:42:42.967 | 2010-07-22T20:16:08.967 | 2010-07-22T20:16:08.967 | null | null | null |

495 | 2 | null | 485 | 6 | null | There is one called [Math and probability for life sciences](http://www.academicearth.org/courses/math-and-proability-for-life-sciences), but I haven't followed it so I can't tell you if it's good or not.

| null | CC BY-SA 2.5 | null | 2010-07-22T11:43:42.560 | 2010-07-22T11:43:42.560 | null | null | 214 | null |

496 | 2 | null | 492 | 7 | null | I think you can get some distortion on the pasting point as not all the underlying waves will connect very well.

I would suggest using a Hilbert Huang transform for this. Just do the split into intrinsic mode functions and see what is left over as residue when calculating them.

| null | CC BY-SA 2.5 | null | 2010-07-22T11:44:13.230 | 2010-07-22T11:44:13.230 | null | null | 190 | null |

497 | 2 | null | 490 | 4 | null | Greedy forward selection.

The steps for this method are:

- Make sure you have a training set and a validation set

- Repeat the following:

  - Train a classifier for each not-yet-selected feature, using that feature together with all previously selected features

  - If the result improves, add the best-performing feature; otherwise stop the procedure
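
The loop above can be sketched as follows. The `score` argument stands for "train on the training set with these features and return the validation performance"; it is a placeholder you would supply yourself, and the toy scoring function at the bottom is purely hypothetical.

```python
def greedy_forward_selection(all_features, score):
    """Add one feature at a time while the validation score keeps improving.

    `score(features)` must return a number where higher is better.
    """
    selected = []
    remaining = list(all_features)
    best_score = float("-inf")
    while remaining:
        # Try each unused feature together with everything selected so far.
        trial_scores = {f: score(selected + [f]) for f in remaining}
        best_feature = max(trial_scores, key=trial_scores.get)
        if trial_scores[best_feature] <= best_score:
            break  # no candidate improves the result, so stop
        selected.append(best_feature)
        remaining.remove(best_feature)
        best_score = trial_scores[best_feature]
    return selected, best_score

# Toy stand-in for a real train/validate cycle (hypothetical gains per feature).
toy_gain = {"a": 3, "b": 2, "c": 0, "d": -1}
selected, best = greedy_forward_selection(toy_gain, lambda fs: sum(toy_gain[f] for f in fs))
```

With a real classifier, `score` dominates the cost: each pass trains one model per remaining feature, so the whole procedure needs on the order of p times the number of selected features model fits.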

| null | CC BY-SA 2.5 | null | 2010-07-22T11:53:51.010 | 2010-07-22T11:53:51.010 | null | null | 190 | null |

498 | 1 | null | null | 2 | 14808 | Sometimes, I just want to do a copy & paste from the output window in SAS. I can highlight text with a mouse-drag, but only SOMETIMES does that get copied to the clipboard. It doesn't matter if I use "CTRL-C" or right click -> copy, or edit -> copy

Any other SAS users experience this, and do you know a workaround/option/technique that can fix it?

Sometimes, I can fix it by clicking in another window, and coming back to the output window, but sometimes I just have to save the output window as a .lst and get the text from another editor.

| In SAS, how do you copy & paste from the output window? | CC BY-SA 3.0 | null | 2010-07-22T11:58:21.923 | 2015-07-08T21:53:12.013 | 2015-07-08T21:53:12.013 | 28666 | 62 | [

"sas"

]

|

499 | 1 | 3728 | null | 20 | 685 | I've heard that when many regression model specifications (say, in OLS) are considered as possibilities for a dataset, this causes multiple comparison problems and the p-values and confidence intervals are no longer reliable. One extreme example of this is stepwise regression.

When can I use the data itself to help specify the model, and when is this not a valid approach? Do you always need to have a subject-matter-based theory to form the model?

| When can you use data-based criteria to specify a regression model? | CC BY-SA 2.5 | null | 2010-07-22T12:06:28.817 | 2010-10-19T01:50:06.413 | 2010-07-22T13:26:10.600 | 267 | 267 | [

"regression",

"frequentist",

"multiple-comparisons"

]

|

500 | 2 | null | 499 | 2 | null | If I understand your question right, then the answer to your problem is to correct the p-values according to the number of hypotheses.

For example, the Holm-Bonferroni correction, where you sort the hypotheses (= your different models) by their p-value in ascending order and reject those with a p-value smaller than (desired significance level / (number of hypotheses - index + 1)), stopping at the first hypothesis that is not rejected.

More about the topic can be found on [Wikipedia](http://en.wikipedia.org/wiki/Multiple_comparisons)
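
A minimal sketch of the Holm-Bonferroni step-down procedure, assuming the usual convention that the smallest p-value is compared with alpha / m, the next with alpha / (m - 1), and so on. The function name `holm_reject` and the example p-values are made up for illustration.

```python
def holm_reject(p_values, alpha=0.05):
    """Return a list of booleans: True where the hypothesis is rejected."""
    m = len(p_values)
    # Indices of the hypotheses, ordered from smallest to largest p-value.
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, i in enumerate(order):  # rank 0 is the smallest p-value
        if p_values[i] <= alpha / (m - rank):
            reject[i] = True
        else:
            break  # stop at the first hypothesis that is not rejected
    return reject

# Hypothetical p-values for four competing model terms.
decisions = holm_reject([0.01, 0.04, 0.03, 0.005])
```

Note that 0.03 and 0.04 would both pass an uncorrected 0.05 threshold, but neither survives the correction here.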

| null | CC BY-SA 2.5 | null | 2010-07-22T12:16:29.217 | 2010-07-22T12:16:29.217 | null | null | 190 | null |

501 | 2 | null | 490 | 2 | null | Backward elimination.

Start with the full set, then iteratively train the classifier on the remaining features and remove the feature with the smallest importance; stop when the classifier error rapidly increases or becomes unacceptably high.

Importance can even be obtained by removing each feature in turn and checking the error increase, or taken from the classifier if it produces one (as in the case of Random Forest).
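
A sketch of the procedure using the "remove each feature in turn" notion of importance. The `error` argument is a placeholder for "train on these features and return the validation error", and the `tolerance` threshold for an unacceptable jump is an assumption of mine; the toy error function is hypothetical.

```python
def backward_elimination(features, error, tolerance=0.05):
    """Drop the least important feature until the error jumps by more than `tolerance`."""
    current = list(features)
    current_err = error(current)
    while len(current) > 1:
        # Importance of a feature = error obtained when it alone is removed.
        trial = {f: error([g for g in current if g != f]) for f in current}
        least_important = min(trial, key=trial.get)
        if trial[least_important] - current_err > tolerance:
            break  # removing even the least important feature hurts too much
        current.remove(least_important)
        current_err = trial[least_important]
    return current, current_err

# Toy error: 0.4 for every truly needed feature that is missing (hypothetical).
needed = {"a", "b"}
toy_error = lambda fs: 0.4 * len(needed - set(fs))
kept, err = backward_elimination(["a", "b", "c", "d"], toy_error)
```

If the classifier reports its own importances (as Random Forest does), the inner dictionary can be replaced by a single fit per round, which is much cheaper.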

| null | CC BY-SA 2.5 | null | 2010-07-22T12:17:20.900 | 2010-07-22T13:05:25.687 | 2010-07-22T13:05:25.687 | 223 | null | null |

502 | 2 | null | 485 | 5 | null | I do not know at what level you want the videos to be, but I have heard good things about Khan Academy: [http://www.khanacademy.org/#Statistics](http://www.khanacademy.org/#Statistics)

| null | CC BY-SA 2.5 | null | 2010-07-22T12:25:42.333 | 2010-07-22T12:25:42.333 | null | null | null | null |

503 | 2 | null | 498 | 2 | null | I have been using SAS a long time and have never had an issue with highlighting results from the output window.

However, since you are having an issue, there are a large number of solutions!

Perhaps the one I like most, and probably a good habit to get into, is to output your results into datasets or directly into Excel spreadsheets (using the ODS). You can also output directly into PDF or RTF with two lines of the simplest code you can imagine!

If your code produces a lot of output and you only want to copy one table, you can specify the name of the table and it alone will be output using the ODS output.

Usually you just need to wrap your procedure (Proc Means, for example) with:

ods PDF;

Proc Means Data = blah N NMISS MEAN STD;

class upto you;

var you name it;

run;

ods PDF close;

Of course, there are many ways to get fancy with the way the output looks, but that is a matter of trial and error and finding what you like or what meets your needs.

| null | CC BY-SA 2.5 | null | 2010-07-22T12:25:48.810 | 2010-07-22T12:25:48.810 | null | null | 256 | null |

504 | 2 | null | 485 | 5 | null | Many of the Berkeley introductory statistics courses are available online (and on iTunes). Here's an example: [Stats 2](http://webcast.berkeley.edu/course_details.php?seriesid=1906978493). You can [find more here](http://www.google.com/search?hl=en&rlz=1C1CHMP_en-USUS292US307&q=statistics+video+site%3awebcast.berkeley.edu+&aq=f&aqi=&aql=&oq=&gs_rfai=).

| null | CC BY-SA 2.5 | null | 2010-07-22T12:33:49.683 | 2010-07-22T12:33:49.683 | null | null | 5 | null |

505 | 2 | null | 490 | 7 | null | Metropolis scanning / MCMC

- Select a few features at random to start with, train a classifier only on them, and obtain the error.

- Make a random change to this working set: remove one feature, add another at random, or replace a feature with one not currently in use.

- Train a new classifier and get its error; store in `dE` the error on the new set minus the error on the previous set.

- With probability `min(1, exp(-beta*dE))` accept the change; otherwise reject it and try another random change.

- Repeat for a long time and finally return the working set that achieved the smallest error overall.

You may extend it with some wiser control of the `beta` parameter. A simpler way is to use simulated annealing, where you increase `beta` (lower the temperature, in the physical analogy) over time to reduce fluctuations and drive the algorithm towards a minimum. A harder way is to use [replica exchange](http://en.wikipedia.org/wiki/Replica_exchange).
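
Here is a compact sketch of the scan with a fixed `beta`. The `error` argument is a placeholder for "train a classifier on these features and return its error"; the function name, the default parameter values, and the toy error function are all assumptions made for the example.

```python
import math
import random

def metropolis_feature_search(n_features, error, n_start=3, beta=5.0,
                              n_steps=2000, seed=0):
    """Random walk over feature subsets with Metropolis acceptance."""
    rng = random.Random(seed)
    current = set(rng.sample(range(n_features), n_start))
    current_err = error(current)
    best, best_err = set(current), current_err
    for _ in range(n_steps):
        proposal = set(current)
        unused = [f for f in range(n_features) if f not in proposal]
        move = rng.choice(["add", "remove", "replace"])
        if move in ("remove", "replace") and len(proposal) > 1:
            proposal.remove(rng.choice(sorted(proposal)))
        if move in ("add", "replace") and unused:
            proposal.add(rng.choice(unused))
        new_err = error(proposal)
        d_e = new_err - current_err
        # Always accept improvements; accept a worse set with prob exp(-beta * dE).
        if d_e <= 0 or rng.random() < math.exp(-beta * d_e):
            current, current_err = proposal, new_err
            if current_err < best_err:
                best, best_err = set(current), current_err
    return best, best_err

# Toy error: 0.5 per feature that differs from a hidden "ideal" set (hypothetical).
ideal = {1, 4, 7}
toy_error = lambda features: 0.5 * len(set(features) ^ ideal)
best, best_err = metropolis_feature_search(10, toy_error)
```

For simulated annealing you would replace the constant `beta` with a schedule that grows with the step number. In real use the error is noisy (it comes from a validation set), so it is worth re-checking the returned subset on fresh data.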

| null | CC BY-SA 2.5 | null | 2010-07-22T12:42:13.893 | 2010-07-22T12:42:13.893 | null | null | null | null |

506 | 2 | null | 287 | 30 | null | Both MOM and GMM are very general methods for estimating parameters of statistical models. GMM is - as the name suggests - a generalisation of MOM. It was developed by Lars Peter Hansen and first published in Econometrica [1]. As there are numerous textbooks on the subject (e.g. [2]) I presume you want a non-technical answer here.

Traditional or Classical Method of Moments Estimator

The MOM estimator is a consistent but inefficient estimator. Assume a vector of data $y$ which were generated by a probability distribution indexed by a parameter vector $\theta$ with $k$ elements. In the method of moments, $\theta$ is estimated by computing $k$ sample moments of $y$, setting them equal to population moments derived from the assumed probability distribution, and solving for $\theta$. For example, the first population moment is the expectation of $y$, $\mu$, whereas the corresponding sample moment is the sample mean of $y$. You would repeat this for each of the $k$ elements of $\theta$. As sample moments are generally consistent estimators of population moments, $\hat\theta$ will be consistent for $\theta$.
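
As a small worked case, take a gamma distribution with shape $a$ and scale $s$; this choice of distribution is mine, purely for illustration. Its mean is $as$ and its variance is $as^2$, so matching the first two sample moments gives two equations in two unknowns with a closed-form solution:

```python
def gamma_method_of_moments(y):
    """Method-of-moments estimates (shape, scale) for a gamma distribution.

    Solves  mean = shape * scale  and  variance = shape * scale**2
    with the sample mean and the (biased) sample variance plugged in.
    """
    n = len(y)
    mean = sum(y) / n
    var = sum((v - mean) ** 2 for v in y) / n
    shape = mean ** 2 / var
    scale = var / mean
    return shape, scale

# Hypothetical data, chosen so the arithmetic is easy to check by hand.
shape, scale = gamma_method_of_moments([1, 2, 3, 4, 5])
```

Here $k = 2$ parameters are matched by exactly two moment conditions, which is the exactly identified situation described above.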

Generalised Method of Moments

In the example above, we had the same number of moment conditions as unknown parameters, so all we would have done is solved the $k$ equations in $k$ unknowns to obtain the parameter estimates. Hansen asked: What happens when we have more moment conditions than parameters as usually occurs in econometric models? How can we combine them optimally? That is the purpose of the GMM estimator. In GMM we estimate the parameter vector by minimising the sum of squares of the differences between the population moments and the sample moments, using the variance of the moments as a metric. This is the minimum variance estimator in the class of estimators that use these moment conditions.

[1] Hansen, L. P. (1982): Large Sample Properties of Generalized Method of Moments Estimators, Econometrica, 50, 1029-1054

[2] Hall, A. R. (2005). Generalized Method of Moments (Advanced Texts in Econometrics). Oxford University Press

| null | CC BY-SA 4.0 | null | 2010-07-22T13:10:57.267 | 2021-02-26T17:25:32.740 | 2021-02-26T17:25:32.740 | 53690 | 215 | null |

507 | 1 | 560 | null | 31 | 23717 | What is your preferred method of checking for convergence when using Markov chain Monte Carlo for Bayesian inference, and why?

| What is the best method for checking convergence in MCMC? | CC BY-SA 2.5 | null | 2010-07-22T13:40:30.237 | 2017-12-07T20:01:56.177 | null | null | 215 | [

"bayesian",

"markov-chain-montecarlo"

]

|

508 | 2 | null | 396 | 2 | null | I would add that the choice of plot should reflect the type of statistical test used to analyse the data. In other words, whatever characteristics of the data were used for analysis should be shown visually - so you would show means and standard errors if you used a t-test but boxplots if you used a Mann-Whitney test.

| null | CC BY-SA 2.5 | null | 2010-07-22T13:41:53.637 | 2010-07-22T13:41:53.637 | null | null | 266 | null |

509 | 2 | null | 507 | 2 | null | I like to do trace plots primarily and sometimes I use the Gelman-Rubin convergence diagnostic.

| null | CC BY-SA 2.5 | null | 2010-07-22T13:43:18.233 | 2010-07-22T13:43:18.233 | null | null | null | null |

510 | 2 | null | 423 | 65 | null | More about design and power than analysis, but I like this one

| null | CC BY-SA 2.5 | null | 2010-07-22T13:49:37.283 | 2010-07-22T13:49:37.283 | 2017-03-09T17:30:36.233 | -1 | 266 | null |

511 | 2 | null | 423 | 38 | null | From [xkcd](http://xkcd.com/701/):

If people who really believe that everything should be scientifically tested actually walked their talk, then this comic might even show an event that actually happens.

| null | CC BY-SA 2.5 | null | 2010-07-22T14:32:20.363 | 2010-07-22T14:32:20.363 | 2017-03-09T17:30:36.247 | -1 | 3807 | null |

512 | 2 | null | 492 | 8 | null | If you want to filter the long term trend out using signal processing, why not just use a low-pass filter?

The simplest thing I can think of would be an exponential moving average.
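
A minimal sketch of such a filter. The smoothing factor `alpha` (between 0 and 1) and the choice to start the filter at the first observation are assumptions; smaller `alpha` means heavier smoothing.

```python
def exponential_moving_average(values, alpha=0.2):
    """Low-pass filter: each output mixes the new value with the previous output."""
    smoothed = []
    for v in values:
        if not smoothed:
            smoothed.append(float(v))  # initialise with the first observation
        else:
            smoothed.append(alpha * v + (1 - alpha) * smoothed[-1])
    return smoothed

# Hypothetical weekly readings in mm.
trend = exponential_moving_average([0, 1, -1, 2, 1, 3, 2, 4], alpha=0.3)
```

With weekly data rounded to the nearest millimetre, it is worth trying a few values of `alpha` and checking that the apparent trend does not depend strongly on the choice.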

| null | CC BY-SA 2.5 | null | 2010-07-22T14:52:54.510 | 2010-07-22T14:52:54.510 | null | null | 33 | null |

513 | 2 | null | 276 | 3 | null | I like to use resampling: I repeat whatever method I used with a subsample of the data (say 80% or even 50% of the total). By doing this with many different subsamples, I get a feel for how robust the estimates are. For many estimation procedures this can be made into a real (meaning publishable) estimate of your errors.
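
A rough sketch of what I mean, assuming the data are a plain list and the method is any function of a list. The names, the 80% fraction, and the number of repeats are illustrative choices.

```python
import random
import statistics

def subsample_estimates(data, estimator, fraction=0.8, n_repeats=500, seed=0):
    """Re-run `estimator` on many random subsamples drawn without replacement."""
    rng = random.Random(seed)
    k = max(1, int(fraction * len(data)))
    return [estimator(rng.sample(data, k)) for _ in range(n_repeats)]

# Hypothetical data; the estimator here is just the mean.
data = list(range(100))
estimates = subsample_estimates(data, statistics.mean, n_repeats=200)
spread = statistics.stdev(estimates)
```

The raw spread of the subsample estimates is only a feel for robustness; turning it into a proper standard error needs a rescaling that depends on the subsample fraction and the estimator.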

| null | CC BY-SA 2.5 | null | 2010-07-22T15:22:20.527 | 2010-07-22T15:22:20.527 | null | null | 260 | null |

514 | 2 | null | 26 | 3 | null | Here's how I would answer this question using a diagram.

Let's say we weigh 30 cats and calculate the mean weight. Then we produce a scatter plot, with weight on the y axis and cat identity on the x axis. The mean weight can be drawn in as a horizontal line. We can then draw in vertical lines which connect each data point to the mean line - these are the deviations of each data point from the mean, and we call them residuals. Now, these residuals can be useful because they can tell us something about the spread of the data: if there are many big residuals, then cats vary a lot in mass. Conversely, if the residuals are mainly small, then cats are fairly closely clustered around the average weight. So if we could have some metric which tells us the average length of a residual in this data set, this would be a handy way of denoting how much spread there is in the data. The standard deviation is, effectively, the length of the average residual.

I would follow on from this by giving the calculation for s.d., explaining why we square and then square root (I like Vaibhav's short and sweet explanation). Then I would mention the problems of outliers, as Graham does in his last paragraph.
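
The calculation itself, written out step by step in the language of the diagram. I am assuming the sample version (dividing by n - 1); the weights are made up.

```python
import math

def standard_deviation(weights):
    """Sample standard deviation, built up from the residuals."""
    mean = sum(weights) / len(weights)
    residuals = [w - mean for w in weights]   # the vertical lines in the plot
    squared = [r ** 2 for r in residuals]     # squaring removes the signs
    return math.sqrt(sum(squared) / (len(weights) - 1))

# Hypothetical cat weights in kg.
cat_weights = [2, 4, 4, 4, 5, 5, 7, 9]
sd = standard_deviation(cat_weights)
mean_abs_residual = sum(abs(w - 5) for w in cat_weights) / len(cat_weights)
```

For these numbers the plain average length of a residual is 1.5 while the standard deviation is a little over 2, which is a handy way to show that squaring gives the big residuals extra weight.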

| null | CC BY-SA 2.5 | null | 2010-07-22T15:36:53.510 | 2010-07-22T15:36:53.510 | null | null | 266 | null |

515 | 2 | null | 155 | 10 | null | 1) A good demonstration of how "random" needs to be defined in order to work out probability of certain events:

What is the chance that a random line drawn across a circle will be longer than the radius?

The question totally depends how you draw your line. Possibilities which you can describe in a real-world way for a circle drawn on the ground might include:

Draw two random points inside the circle and draw a line through those. (See where two flies / stones fall...)

Choose a fixed point on the circumference, then a random one elsewhere in the circle and join those. (In effect this is laying a stick across the circle at a variable angle through a given point and a random one e.g. where a stone falls.)

Draw a diameter. Randomly choose a point along it and draw a perpendicular through that. (Roll a stick along in a straight line so it rests across the circle.)

It is relatively easy to show someone who can do some geometry (but not necessarily stats) that the answer to the question can vary quite widely (from about 2/3 to about 0.866 or so).

2) A reverse-engineered coin-toss: toss it (say) ten times and write down the result. Work out the probability of this exact sequence $\left(\frac{1}{2^{10}}\right)$. A tiny chance, but you just saw it happen with your own eyes!... Every sequence might come up, including ten heads in a row, but it is hard for lay people to get their head round it. As an encore, try to convince them they have just as good a chance of winning the lottery with the numbers 1 through 6 as any other combination.

3) Explaining why medical diagnosis may seem really flawed. A test for disease foo which is 99.9% accurate at identifying those who have it, but which falsely diagnoses 0.1% of those who don't, may seem to be wrong surprisingly often when the prevalence of the disease is very low (e.g. 1 in 1000) but many patients are tested for it.

This is one that is best explained with real numbers - imagine 1 million people are tested, so 1000 have the disease, 999 are correctly identified, but 0.1% of 999,000 is 999 who are told they have it but don't. So half those who are told they have it actually do not, despite the high level of accuracy (99.9%) and low level of false positives (0.1%). A second (ideally different) test will then separate these groups out.

[Incidentally, I chose the numbers because they are easy to work with, of course they do not have to add up to 100% as the accuracy / false positive rates are independent factors in the test.]
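
The arithmetic in example 3 can be checked with a few lines. The function and argument names are my own; the numbers are the ones used above.

```python
def positive_test_breakdown(population, prevalence, sensitivity, false_positive_rate):
    """Split positive results into true and false positives."""
    diseased = population * prevalence
    healthy = population - diseased
    true_positives = diseased * sensitivity
    false_positives = healthy * false_positive_rate
    # Share of positive results that belong to people who really have the disease.
    share_truly_ill = true_positives / (true_positives + false_positives)
    return true_positives, false_positives, share_truly_ill

true_pos, false_pos, share = positive_test_breakdown(
    population=1_000_000, prevalence=0.001,
    sensitivity=0.999, false_positive_rate=0.001,
)
```

Changing `prevalence` while leaving the test unchanged is a quick way to show a lay audience that the "half of positives are wrong" effect comes from the rarity of the disease rather than from the test.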

| null | CC BY-SA 3.0 | null | 2010-07-22T15:49:54.270 | 2013-10-23T15:29:05.390 | 2013-10-23T15:29:05.390 | 17230 | 270 | null |

516 | 2 | null | 486 | 6 | null | Yes, it's valid to compare negative AICc values, in the same way as you would negative AIC values. The correction factor in the AICc can become large with a small sample size and a relatively large number of parameters, and then penalizes more heavily than the AIC. Because the correction is positive whenever the sample size exceeds the number of parameters plus one, a negative AIC value can correspond to a positive AICc value.
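For reference, a minimal sketch of the usual small-sample correction, $\text{AICc} = \text{AIC} + \frac{2k(k+1)}{n-k-1}$, where $k$ is the number of estimated parameters and $n$ the sample size. The function name and the example numbers are assumptions chosen only to show a sign change.

```python
def aicc(aic, n, k):
    """Small-sample corrected AIC; requires n > k + 1."""
    if n - k - 1 <= 0:
        raise ValueError("AICc is undefined unless n > k + 1")
    return aic + (2 * k * (k + 1)) / (n - k - 1)


# Small sample, relatively many parameters: the correction is large
# enough to move a negative AIC to a positive AICc.
small_sample = aicc(aic=-5.0, n=12, k=4)

# Large sample: the correction is almost negligible.
large_sample = aicc(aic=-5.0, n=1000, k=4)

print(small_sample, large_sample)
```

Either way, only differences between models fitted to the same data matter, so the sign of an individual value carries no meaning.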

| null | CC BY-SA 2.5 | null | 2010-07-22T16:00:44.953 | 2010-07-22T16:00:44.953 | null | null | 251 | null |

517 | 1 | 522 | null | 29 | 30264 | In the context of machine learning, what is the difference between

- unsupervised learning

- supervised learning and

- semi-supervised learning?

And what are some of the main algorithmic approaches to look at?

| Unsupervised, supervised and semi-supervised learning | CC BY-SA 4.0 | null | 2010-07-22T16:21:06.090 | 2019-05-11T14:47:37.750 | 2019-03-20T16:30:26.923 | 128677 | 68 | [

"machine-learning",

"unsupervised-learning",

"supervised-learning",

"semi-supervised-learning"

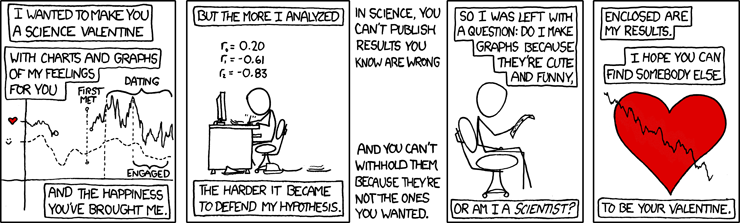

]

|

518 | 2 | null | 183 | 3 | null | Try [R](http://www.r-project.org/). [Here](http://cran.at.r-project.org/web/views/Cluster.html) you have a list of clustering packages available.

| null | CC BY-SA 2.5 | null | 2010-07-22T16:24:10.190 | 2010-07-22T16:24:10.190 | null | null | null | null |

519 | 2 | null | 36 | 5 | null | I read (a long time ago) of an interesting example about a decline in birth rates (or fertility rates if you prefer that measure), especially in the US, starting in the early 1960s, as nuclear weapons testing was at an all-time high (in 1961 the biggest nuclear bomb ever detonated was tested in the USSR). Rates continued to decline until towards the end of the twentieth century, when most countries finally stopped doing this.

I can't find a reference which combines these figures now, but this Wikipedia article has figures on [nuclear weapons test](http://en.wikipedia.org/wiki/Nuclear_weapons_testing) numbers by country.

Of course, it might make better sense to look at the correlation of birth rate with the introduction and legalisation of the contraceptive pill, 'coincidentally' starting in the early 1960s (in only some states first, then all states for married women only, then some for unmarried, then across the board). But even that could only be part of the cause; lots of other aspects of equality, economic changes and other factors play a significant part.

| null | CC BY-SA 2.5 | null | 2010-07-22T16:32:57.967 | 2010-07-22T16:32:57.967 | null | null | 270 | null |

520 | 2 | null | 183 | 2 | null | Python will give you all the flexibility you need. With NumPy and the [SciPy cluster module](http://docs.scipy.org/doc/scipy/reference/cluster.html) you have the tools you need, and the datatypes of NumPy give you a good insight into how much memory you will use.

| null | CC BY-SA 2.5 | null | 2010-07-22T16:33:43.240 | 2010-07-22T16:33:43.240 | null | null | 190 | null |

521 | 2 | null | 517 | 14 | null | Unsupervised Learning

Unsupervised learning is when you have no labeled data available for training. Examples of this are often clustering methods.

Supervised Learning

In this case your training data consists of labeled data. The problem you solve here is often predicting the labels for data points that have no label.

Semi-Supervised Learning

In this case both labeled and unlabeled data are used. This can be used, for example, in deep belief networks, where some layers learn the structure of the data (unsupervised) and one layer is used to make the classification (trained with supervised data).

| null | CC BY-SA 2.5 | null | 2010-07-22T16:39:05.870 | 2010-07-22T16:39:05.870 | null | null | 190 | null |

522 | 2 | null | 517 | 24 | null | Generally, the problems of machine learning may be considered variations on function estimation for classification, prediction or modeling.

In supervised learning, one is furnished with inputs ($x_1$, $x_2$, ...) and outputs ($y_1$, $y_2$, ...) and is challenged with finding a function that approximates this behavior in a generalizable fashion. The output could be a class label (in classification) or a real number (in regression); these are the "supervision" in supervised learning.

In the case of unsupervised learning, in the base case, you receive inputs $x_1$, $x_2$, ..., but neither target outputs nor rewards from the environment are provided. Based on the problem (classify or predict) and your background knowledge of the space sampled, you may use various methods: density estimation (estimating some underlying PDF for prediction), k-means clustering (classifying unlabeled real-valued data), k-modes clustering (classifying unlabeled categorical data), etc.
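As an illustration of the k-means case mentioned above, here is a minimal one-dimensional sketch of Lloyd's algorithm in plain Python. The function name, the toy data and the starting centers are all assumptions made for the example; a real analysis would normally use a library implementation.

```python
def kmeans_1d(points, centers, max_iter=20):
    """Cluster real-valued points by alternating assignment and mean updates."""
    centers = list(centers)

    def nearest(x):
        # Index of the center closest to x.
        return min(range(len(centers)), key=lambda i: abs(x - centers[i]))

    for _ in range(max_iter):
        clusters = [[] for _ in centers]
        for x in points:
            clusters[nearest(x)].append(x)
        # An empty cluster keeps its previous center.
        updated = [
            sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)
        ]
        if updated == centers:
            break
        centers = updated

    labels = [nearest(x) for x in points]
    return centers, labels


# No labels are supplied: the grouping is inferred from the inputs alone.
points = [1.0, 1.2, 0.8, 5.0, 5.3, 4.7]
centers, labels = kmeans_1d(points, centers=[0.0, 6.0])
print(centers, labels)
```

The contrast with the supervised case is that no $y_i$ appears anywhere: the cluster labels are an output of the procedure, not part of the training data.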

Semi-supervised learning involves function estimation on labeled and unlabeled data. This approach is motivated by the fact that labeled data is often costly to generate, whereas unlabeled data is generally not. The challenge here mostly involves the technical question of how to treat data mixed in this fashion. See this [Semi-Supervised Learning Literature Survey](http://pages.cs.wisc.edu/~jerryzhu/pub/ssl_survey.pdf) for more details on semi-supervised learning methods.

In addition to these kinds of learning, there are others, such as reinforcement learning, whereby the learning method interacts with its environment by producing actions $a_1$, $a_2$, ... that produce rewards or punishments $r_1$, $r_2$, ...

| null | CC BY-SA 4.0 | null | 2010-07-22T18:03:43.437 | 2019-03-20T16:29:31.593 | 2019-03-20T16:29:31.593 | 128677 | 39 | null |

523 | 2 | null | 517 | 8 | null | I don't think that supervised/unsupervised is the best way to think about it. For basic data mining, it's better to think about what you are trying to do. There are four main tasks:

- Prediction. If you are predicting a real number, it is called regression. If you are predicting a whole number or class, it is called classification.

- Modeling. Modeling is the same as prediction, but the model is comprehensible by humans. Neural networks and support vector machines work great, but do not produce comprehensible models [1]. Decision trees and classic linear regression are examples of easy-to-understand models.

- Similarity. If you are trying to find natural groups of attributes, it is called factor analysis. If you are trying to find natural groups of observations, it is called clustering.

- Association. It's much like correlation, but for enormous binary datasets.

[1] Apparently Goldman Sachs created tons of great neural networks for prediction, but then no one understood them, so they had to write other programs to try to explain the neural networks.

| null | CC BY-SA 2.5 | null | 2010-07-22T18:16:08.797 | 2010-08-13T17:59:07.270 | 2010-08-13T17:59:07.270 | 74 | 74 | null |

524 | 1 | 525 | null | 12 | 830 | Debugging MCMC programs is notoriously difficult. The difficulty arises because of several issues some of which are:

(a) Cyclic nature of the algorithm

We iteratively draw parameters conditional on all other parameters. Thus, if an implementation is not working properly, it is difficult to isolate the bug, as the issue can be anywhere in the iterative sampler.

(b) The correct answer is not necessarily known.

We have no way to tell if we have achieved convergence. To some extent this can be mitigated by testing the code on simulated data.

In light of the above issues, I was wondering if there is a standard technique that can be used to debug MCMC programs.

Edit

I wanted to share the approach I use to debug my own programs. I, of course, do all of the things that PeterR mentioned. Apart from those, I perform the following tests using simulated data:

- Start all parameters from true values and see if the sampler diverges too far from the true values.

- I have a flag for each parameter in my iterative sampler that determines whether I am drawing that parameter in the iterative sampler. For example, if a flag 'gen_param1' is set to true then I draw 'param1' from its full conditional in the iterative sampler. If this is set to false then 'param1' is set to its true value.

Once I finish writing up the sampler, I test the program using the following recipe:

- Set the generate flag for one parameter to true and everything else to false and assess convergence with respect to true value.

- Set the generate flag for another parameter in conjunction with the first one and again assess convergence.

The above steps have been incredibly helpful to me.
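A minimal sketch of the flag-based recipe, assuming a toy model that is not from my actual programs: normal data with unknown mean and variance, a flat prior on the mean and a $1/\sigma^2$ prior on the variance, so both full conditionals are standard. All names (`gibbs_normal`, `gen_mu`, `gen_sigma2`) are illustrative.

```python
import random
from statistics import fmean


def gibbs_normal(y, true_mu, true_sigma2, gen_mu=True, gen_sigma2=True,
                 n_iter=2000, seed=0):
    """Gibbs sampler with one 'generate' flag per parameter."""
    rng = random.Random(seed)
    n = len(y)
    ybar = fmean(y)
    mu, sigma2 = true_mu, true_sigma2  # start every parameter at its true value
    draws = []
    for _ in range(n_iter):
        if gen_mu:
            # mu | sigma2, y ~ Normal(ybar, sigma2 / n)
            mu = rng.gauss(ybar, (sigma2 / n) ** 0.5)
        else:
            mu = true_mu
        if gen_sigma2:
            # sigma2 | mu, y ~ Inverse-Gamma(n / 2, ss / 2);
            # gammavariate takes (shape, scale), so scale = 2 / ss.
            ss = sum((yi - mu) ** 2 for yi in y)
            sigma2 = 1.0 / rng.gammavariate(n / 2, 2.0 / ss)
        else:
            sigma2 = true_sigma2
        draws.append((mu, sigma2))
    return draws


# Simulated data with known true values.
data_rng = random.Random(42)
true_mu, true_sigma2 = 3.0, 4.0
y = [data_rng.gauss(true_mu, true_sigma2 ** 0.5) for _ in range(200)]

# Step 1: draw only mu, hold sigma2 at its true value.
only_mu = gibbs_normal(y, true_mu, true_sigma2, gen_mu=True, gen_sigma2=False)
# Step 2: switch on the second parameter as well.
both = gibbs_normal(y, true_mu, true_sigma2)

mean_mu_step1 = fmean(m for m, _ in only_mu)
mean_sigma2_step2 = fmean(s for _, s in both)
print(mean_mu_step1, mean_sigma2_step2)
```

If step 1 looks fine and step 2 does not, the bug is most likely in the conditional that was just switched on, which is the whole point of the flags.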

| Is there a standard technique to debug MCMC programs? | CC BY-SA 2.5 | null | 2010-07-22T18:25:54.250 | 2018-08-27T16:10:58.463 | 2018-08-27T16:10:58.463 | 11887 | null | [

"markov-chain-montecarlo"

]

|

525 | 2 | null | 524 | 11 | null | Standard programming practice:

- when debugging run the simulation with fixed sources of randomness (i.e. same seed) so that any changes are due to code changes and not different random numbers.

- try your code on a model (or several models) where the answer IS known.

- adopt good programming habits so that you introduce fewer bugs.

- think very hard & long about the answers you do get, whether they make sense, etc.
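The first two points can be combined in a small sketch: a random-walk Metropolis sampler with its own seeded generator, run on a target (the standard normal) whose mean and variance are known. The function name, step size and seed are arbitrary assumptions for illustration.

```python
import math
import random
from statistics import fmean, pvariance


def metropolis_standard_normal(n_iter, step=2.0, seed=123):
    """Random-walk Metropolis targeting N(0, 1), using a private seeded RNG."""
    rng = random.Random(seed)
    x = 0.0
    samples = []
    for _ in range(n_iter):
        proposal = x + rng.uniform(-step, step)
        # Log ratio of target densities: log N(proposal) - log N(x).
        log_ratio = 0.5 * (x * x - proposal * proposal)
        if rng.random() < math.exp(min(0.0, log_ratio)):
            x = proposal
        samples.append(x)
    return samples


# Same seed, same code -> identical chains, so any difference between
# two runs must come from a code change.
run_a = metropolis_standard_normal(20000)
run_b = metropolis_standard_normal(20000)

# Known answer: the target has mean 0 and variance 1.
chain_mean = fmean(run_a)
chain_var = pvariance(run_a)
print(run_a == run_b, chain_mean, chain_var)
```

Keeping the generator local to the function, rather than seeding the global one, avoids other code silently consuming random numbers and changing the chain.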

I wish you good luck, and plenty of coffee!

| null | CC BY-SA 3.0 | null | 2010-07-22T18:33:47.900 | 2016-10-27T19:03:15.123 | 2016-10-27T19:03:15.123 | 123561 | 247 | null |

526 | 1 | 585 | null | 9 | 551 | As you know, there are two popular types of cross-validation, K-fold and random subsampling (as described in [Wikipedia](http://en.wikipedia.org/wiki/Cross-validation_%28statistics%29)). Nevertheless, I know that some researchers publish papers in which something described as K-fold CV is in fact random subsampling, so in practice you never know what was really done in the article you're reading.

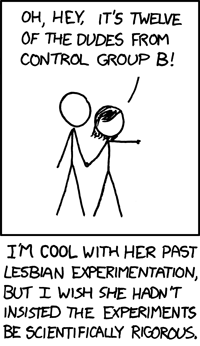

Usually of course the difference is unnoticeable, and so goes my question -- can you think of an example when the result of one type is significantly different from another?
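To make the distinction concrete, here is a minimal sketch of how the test sets are formed in each scheme. The function names and the sizes are assumptions for illustration; the random subsampling variant draws a fresh test set of size `n // k` in each of `k` rounds.

```python
import random
from collections import Counter


def k_fold_test_sets(n, k, seed=0):
    """Shuffle once, then split into k disjoint test sets."""
    rng = random.Random(seed)
    indices = list(range(n))
    rng.shuffle(indices)
    return [indices[i::k] for i in range(k)]


def random_subsampling_test_sets(n, k, seed=0):
    """Draw an independent random test set in each of k rounds."""
    rng = random.Random(seed)
    return [rng.sample(range(n), n // k) for _ in range(k)]


def times_tested(test_sets, n):
    """How many times each observation ends up in a test set."""
    counts = Counter(i for test in test_sets for i in test)
    return [counts[i] for i in range(n)]


n, k = 20, 5
kfold_counts = times_tested(k_fold_test_sets(n, k), n)
subsample_counts = times_tested(random_subsampling_test_sets(n, k), n)
print(kfold_counts)
print(subsample_counts)
```

In K-fold every observation is tested exactly once, whereas under random subsampling an observation may be tested several times or never, which is one place where the two schemes could in principle give different results.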

| Does the cross validation implementation influence its results? | CC BY-SA 2.5 | null | 2010-07-22T19:17:41.870 | 2010-07-24T22:54:13.430 | null | null | null | [

"machine-learning",

"cross-validation"

]

|

527 | 1 | 2834 | null | 12 | 12417 | I have two different analytical methods that can measure the concentration of a particular molecule in a matrix (for instance, measure the amount of salt in water).

The two methods are different, and each has its own error. What ways exist to show whether the two methods are equivalent (or not)?

I'm thinking that plotting the results from a number of samples measured by both methods on a scatter graph is a good first step, but are there any good statistical methods?

| What ways are there to show two analytical methods are equivalent? | CC BY-SA 2.5 | null | 2010-07-22T21:15:52.347 | 2016-07-13T08:11:43.100 | 2016-07-13T08:11:43.100 | 1352 | 114 | [

"hypothesis-testing",

"measurement-error",

"method-comparison",

"bland-altman-plot"

]

|

528 | 2 | null | 36 | 2 | null | Teaching "Correlation does not mean causation" doesn't really help anyone because at the end of the day all deductive arguments are based in part on correlation.

Humans are very bad at learning not to do something.

The goal should rather be constructive: Always think about alternatives to your starting assumptions that might produce the same data.

| null | CC BY-SA 2.5 | null | 2010-07-22T22:33:37.800 | 2010-07-22T22:33:37.800 | null | null | 3807 | null |

529 | 2 | null | 527 | 0 | null | Your use of the phrase 'analytical methods' is a bit confusing to me. I am going to assume that by 'analytical methods' you mean some specific model/estimation strategy.

Broadly speaking, there are two types of metrics you could use to choose between estimators.

In-sample Metrics

- Likelihood ratio / Wald test / Score test

- $R^2$

- In-sample Hit Rates (Percentage of correct predictions for sample data)

- (Lots of other metrics depending on model / estimation context)

Out-of-sample Metrics

- Out-of-sample Hit Rates (Percentage of correct predictions for out-of-sample data)

If the estimates are equivalent they would perform equally well on these metrics. You could also see if the estimates are not statistically different from one another (like the two-sample test of equality of means) but the methodology for this would depend on model and method specifics.

| null | CC BY-SA 2.5 | null | 2010-07-22T22:38:05.597 | 2010-07-22T22:38:05.597 | null | null | null | null |

530 | 2 | null | 481 | 12 | null | It's very common in forecasting to aggregate data in order to increase the signal/noise ratio. There are several papers on the effect of temporal aggregation on forecast accuracy in economics, for example. What you're probably seeing in the daily data is a weak signal that is being swamped by noise, whereas the weekly and monthly data are showing a stronger signal that is more visible.