licenses

sequencelengths 1

3

| version

stringclasses 677

values | tree_hash

stringlengths 40

40

| path

stringclasses 1

value | type

stringclasses 2

values | size

stringlengths 2

8

| text

stringlengths 25

67.1M

| package_name

stringlengths 2

41

| repo

stringlengths 33

86

|

|---|---|---|---|---|---|---|---|---|

[

"MIT"

] | 0.3.0 | e7f8a3e4bafabc4154558c1f3cd6f3e5915a534f | code | 2367 | # Author: Mathias Louboutin

# Date: June 2021

#

using JUDI, LinearAlgebra, Images, PyPlot, DSP, ImageGather, SlimPlotting

# Set up model structure

n = (601, 333) # (x,y,z) or (x,z)

d = (15., 15.)

o = (0., 0.)

# Velocity [km/s]

v = ones(Float32,n) .+ 0.5f0

for i=1:12

v[:,25*i+1:end] .= 1.5f0 + i*.25f0

end

v0 = imfilter(v, Kernel.gaussian(5))

# Slowness squared [s^2/km^2]

m = (1f0 ./ v).^2

m0 = (1f0 ./ v0).^2

# Setup info and model structure

nsrc = 1 # number of sources

model = Model(n, d, o, m; nb=40)

model0 = Model(n, d, o, m0; nb=40)

# Set up receiver geometry

nxrec = 401

xrec = range(0f0, stop=(n[1] -1)*d[1], length=nxrec)

yrec = 0f0

zrec = range(20f0, stop=20f0, length=nxrec)

# receiver sampling and recording time

timeR = 4000f0 # receiver recording time [ms]

dtR = 4f0 # receiver sampling interval [ms]

# Set up receiver structure

recGeometry = Geometry(xrec, yrec, zrec; dt=dtR, t=timeR, nsrc=nsrc)

# Set up source geometry (cell array with source locations for each shot)

xsrc = 4500f0

ysrc = 0f0

zsrc = 20f0

# source sampling and number of time steps

timeS = 4000f0 # ms

dtS = 4f0 # ms

# Set up source structure

srcGeometry = Geometry(xsrc, ysrc, zsrc; dt=dtS, t=timeS)

# setup wavelet

f0 = 0.015f0 # kHz

wavelet = ricker_wavelet(timeS, dtS, f0)

q = judiVector(srcGeometry, wavelet)

###################################################################################################

opt = Options(space_order=16, IC="as")

# Setup operators

F = judiModeling(model, srcGeometry, recGeometry; options=opt)

F0 = judiModeling(model0, srcGeometry, recGeometry; options=opt)

# Nonlinear modeling

dD = F*q

# Make rtms

J = judiJacobian(F0, q)

# Get offsets and mute data

offs = abs.(xrec .- xsrc)

res = deepcopy(dD)

mute!(res.data[1], offs)

reso = deepcopy(res)

I = inv(judiIllumination(J))

rtm = I*J'*res

omap = Array{Any}(undef, 2)

i = 1

# try a bunch of weighting functions

for (wf, iwf) = zip([x-> x .+ 5f3, x-> log.(x .+ 10)], [x -> x .- 5f3, x-> exp.(x) .- 10])

reso.data[1] .= res.data[1] .* wf(offs)'

rtmo = I*J'*reso

omap[i] = iwf(offset_map(rtm.data, rtmo.data))

global i+=1

end

figure()

for (i, name)=enumerate(["shift", "log"])

subplot(1,2,i)

plot_velocity(omap[i]', (1,1); cmap="jet", aspect="auto", perc=98, new_fig=false, vmax=5000)

colorbar()

title(name)

end

tight_layout()

| ImageGather | https://github.com/slimgroup/ImageGather.jl.git |

|

[

"MIT"

] | 0.3.0 | e7f8a3e4bafabc4154558c1f3cd6f3e5915a534f | code | 640 | module ImageGather

using JUDI

using JUDI.DSP, JUDI.PyCall

import Base: getindex, *

import JUDI: judiAbstractJacobian, judiMultiSourceVector, judiComposedPropagator, judiJacobian, make_input, propagate

import JUDI.LinearAlgebra: adjoint

const impl = PyNULL()

function __init__()

pushfirst!(PyVector(pyimport("sys")."path"),dirname(pathof(ImageGather)))

copy!(impl, pyimport("implementation"))

end

# Utility functions

include("utils.jl")

# Surface offset gathers

include("surface_gather.jl")

# Subsurface offset gathers

include("subsurface_gather.jl")

end # module

| ImageGather | https://github.com/slimgroup/ImageGather.jl.git |

|

[

"MIT"

] | 0.3.0 | e7f8a3e4bafabc4154558c1f3cd6f3e5915a534f | code | 6023 | export judiExtendedJacobian

struct judiExtendedJacobian{D, O, FT} <: judiAbstractJacobian{D, O, FT}

m::AbstractSize

n::AbstractSize

F::FT

q::judiMultiSourceVector

offsets::Vector{D}

dims::Vector{Symbol}

end

"""

J = judiExtendedJacobian(F, q, offsets; options::JUDIOptions, omni=false, dims=nothing)

Extended jacobian (extended Born modeling operator) for subsurface horsizontal offsets `offsets`. Its adjoint

comput the subsurface common offset volume. In succint way, the extened born modeling Operator can summarized in a linear algebra frmaework as:

Options structure for seismic modeling.

`omni`: If `true`, the extended jacobian will be computed for all dimensions.

`dims`: If `omni` is `false`, the extended jacobian will be computed for the dimension(s) specified in `dims`.

"""

function judiExtendedJacobian(F::judiComposedPropagator{D, O}, q::judiMultiSourceVector, offsets;

options=nothing, omni=false, dims=nothing) where {D, O}

JUDI.update!(F.options, options)

offsets = Vector{D}(offsets)

ndim = length(F.model.n)

if omni

dims = [:x, :y, :z][1:ndim]

else

if isnothing(dims)

dims = [:x]

else

dims = symvec(dims)

if ndim == 2

dims[dims .== :z] .= :y

end

end

end

return judiExtendedJacobian{D, :born, typeof(F)}(F.m, space(F.model.n), F, q, offsets, dims)

end

symvec(s::Symbol) = [s]

symvec(s::Tuple) = [symvec(ss)[1] for ss in s]::Vector{Symbol}

symvec(s::Vector) = [symvec(ss)[1] for ss in s]::Vector{Symbol}

adjoint(J::judiExtendedJacobian{D, O, FT}) where {D, O, FT} = judiExtendedJacobian{D, adjoint(O), FT}(J.n, J.m, J.F, J.q, J.offsets, J.dims)

getindex(J::judiExtendedJacobian{D, O, FT}, i) where {D, O, FT} = judiExtendedJacobian{D, O, FT}(J.m[i], J.n[i], J.F[i], J.q[i], J.offsets, J.dims)

function make_input(J::judiExtendedJacobian{D, :adjoint_born, FT}, q) where {D, FT}

srcGeom, srcData = JUDI.make_src(J.q, J.F.qInjection)

recGeom, recData = JUDI.make_src(q, J.F.rInterpolation)

return srcGeom, srcData, recGeom, recData, nothing

end

function make_input(J::judiExtendedJacobian{D, :born, FT}, dm) where {D<:Number, FT}

srcGeom, srcData = JUDI.make_src(J.q, J.F.qInjection)

return srcGeom, srcData, J.F.rInterpolation.data[1], nothing, dm

end

*(J::judiExtendedJacobian{T, :born, O}, dm::Array{T, 3}) where {T, O} = J*vec(dm)

*(J::judiExtendedJacobian{T, :born, O}, dm::Array{T, 4}) where {T, O} = J*vec(dm)

JUDI.process_input_data(::judiExtendedJacobian{D, :born, FT}, q::Vector{D}) where {D<:Number, FT} = q

############################################################

function propagate(J::judiExtendedJacobian{T, :born, O}, q::AbstractArray{T}, illum::Bool) where {T, O}

srcGeometry, srcData, recGeometry, _, dm = make_input(J, q)

# Load full geometry for out-of-core geometry containers

recGeometry = Geometry(recGeometry)

srcGeometry = Geometry(srcGeometry)

# Avoid useless propage without perturbation

if minimum(dm) == 0 && maximum(dm) == 0

return judiVector(recGeometry, zeros(Float32, recGeometry.nt[1], length(recGeometry.xloc[1])))

end

# Set up Python model structure

modelPy = devito_model(J.model, J.options)

nh = [length(J.offsets) for _=1:length(J.dims)]

dmd = reshape(dm, nh..., J.model.n...)

dtComp = convert(Float32, modelPy."critical_dt")

# Extrapolate input data to computational grid

qIn = time_resample(srcData, srcGeometry, dtComp)

# Set up coordinates

src_coords = setup_grid(srcGeometry, J.model.n) # shifts source coordinates by origin

rec_coords = setup_grid(recGeometry, J.model.n) # shifts rec coordinates by origin

# Devito interface

dD = JUDI.wrapcall_data(impl."cig_lin", modelPy, src_coords, qIn, rec_coords,

dmd, J.offsets, ic=J.options.IC, space_order=J.options.space_order, dims=J.dims)

dD = time_resample(dD, dtComp, recGeometry)

# Output shot record as judiVector

return judiVector{Float32, Matrix{Float32}}(1, recGeometry, [dD])

end

function propagate(J::judiExtendedJacobian{T, :adjoint_born, O}, q::AbstractArray{T}, illum::Bool) where {T, O}

srcGeometry, srcData, recGeometry, recData, _ = make_input(J, q)

# Load full geometry for out-of-core geometry containers

recGeometry = Geometry(recGeometry)

srcGeometry = Geometry(srcGeometry)

# Set up Python model

modelPy = devito_model(J.model, J.options)

dtComp = convert(Float32, modelPy."critical_dt")

# Extrapolate input data to computational grid

qIn = time_resample(srcData, srcGeometry, dtComp)

dObserved = time_resample(recData, recGeometry, dtComp)

# Set up coordinates

src_coords = setup_grid(srcGeometry, J.model.n) # shifts source coordinates by origin

rec_coords = setup_grid(recGeometry, J.model.n) # shifts rec coordinates by origin

# Devito

g = JUDI.pylock() do

pycall(impl."cig_grad", PyArray, modelPy, src_coords, qIn, rec_coords, dObserved, J.offsets,

illum=false, ic=J.options.IC, space_order=J.options.space_order, dims=J.dims)

end

g = remove_padding_cig(g, modelPy.padsizes; true_adjoint=J.options.sum_padding)

return g

end

function remove_padding_cig(gradient::AbstractArray{DT}, nb::NTuple{Nd, NTuple{2, Int64}}; true_adjoint::Bool=false) where {DT, Nd}

no = ndims(gradient) - length(nb)

N = size(gradient)[no+1:end]

hd = tuple([Colon() for _=1:no]...)

if true_adjoint

for (dim, (nbl, nbr)) in enumerate(nb)

diml = dim+no

selectdim(gradient, diml, nbl+1) .+= dropdims(sum(selectdim(gradient, diml, 1:nbl), dims=diml), dims=diml)

selectdim(gradient, diml, N[dim]-nbr) .+= dropdims(sum(selectdim(gradient, diml, N[dim]-nbr+1:N[dim]), dims=diml), dims=diml)

end

end

out = gradient[hd..., [nbl+1:nn-nbr for ((nbl, nbr), nn) in zip(nb, N)]...]

return out

end

| ImageGather | https://github.com/slimgroup/ImageGather.jl.git |

|

[

"MIT"

] | 0.3.0 | e7f8a3e4bafabc4154558c1f3cd6f3e5915a534f | code | 4124 | import JUDI: AbstractModel, rlock_pycall, devito

export surface_gather, double_rtm_cig

"""

surface_gather(model, q, data; offsets=nothing, options=Options())

Compute the surface offset gathers volume (nx (X ny) X nz X no) for via the double rtm method whith `no` offsets.

Parameters

* `model`: JUDI Model structure.

* `q`: Source, judiVector.

* `data`: Obeserved data, judiVector.

* `offsets`: List of offsets to compute the gather at. Optional (defaults to 0:10*model.d:model.extent)

* `options`: JUDI Options structure.

"""

function surface_gather(model::AbstractModel, q::judiVector, data::judiVector; offsets=nothing, mute=true, options=Options())

isnothing(offsets) && (offsets = 0f0:10*model.d[1]:(model.n[1]-1)*model.d[1])

offsets = collect(offsets)

pool = JUDI._worker_pool()

# Distribute source

arg_func = i -> (model, q[i], data[i], offsets, options[i], mute)

# Distribute source

ncig = (model.n..., length(offsets))

out = PhysicalParameter(ncig, (model.d..., 1f0), (model.o..., minimum(offsets)), zeros(Float32, ncig...))

out = out + JUDI.run_and_reduce(double_rtm_cig, pool, q.nsrc, arg_func)

return out.data

end

"""

double_rtm_cig(model, q, data, offsets, options)

Compute the single shot contribution to the surface offset gather via double rtm. This single source contribution consists of the following steps:

1. Mute direct arrival in the data.

2. Compute standard RTM ``R``.

3. Compute the offset RTM ``R_o`` for the the weighted data where each trace is weighted by its offset `(rec_x - src_x)`.

4. Compute the envelope ``R_e = \\mathcal{E}(R)`` and ``R_{oe} = \\mathcal{E}(R_o)``.

5. Compute the offset map ``\\frac{R_e \\odot R_{oe}}{R_e \\odot R_e + \\epsilon}``.

6. Apply illumination correction and laplace filter ``R_l = \\mathcal{D} \\Delta R``.

7. Compute each offset contribution ``\\mathcal{I}[:, h] = R_l \\odot \\delta[ha - h]_{tol}`` [`delta_h`](@ref).

8. Return ``\\mathcal{I}``.

"""

function double_rtm_cig(model_full, q::judiVector, data::judiVector, offs, options, mute)

GC.gc(true)

devito.clear_cache()

# Load full geometry for out-of-core geometry containers

data.geometry = Geometry(data.geometry)

q.geometry = Geometry(q.geometry)

# Limit model to area with sources/receivers

if options.limit_m == true

model = deepcopy(model_full)

model, _ = limit_model_to_receiver_area(q.geometry, data.geometry, model, options.buffer_size)

else

model = model_full

end

# Set up Python model

modelPy = devito_model(model, options)

dtComp = convert(Float32, modelPy."critical_dt")

# Extrapolate input data to computational grid

qIn = time_resample(make_input(q), q.geometry, dtComp)

res = time_resample(make_input(data), data.geometry, dtComp)

# Set up coordinates

src_coords = setup_grid(q.geometry, model.n) # shifts source coordinates by origin

rec_coords = setup_grid(data.geometry, model.n) # shifts rec coordinates by origin

# Src-rec offsets

scale = 1f1

off_r = log.(abs.(data.geometry.xloc[1] .- q.geometry.xloc[1]) .+ scale)

inv_off(x) = exp.(x) .- scale

# mute

if mute

mute!(res, off_r .- scale; dt=dtComp/1f3, t0=.25)

end

res_o = res .* off_r'

# Double rtm

rtm, rtmo, illum = rlock_pycall(impl."double_rtm", Tuple{PyArray, PyArray, PyArray},

modelPy, qIn, src_coords, res, res_o, rec_coords,

ic=options.IC)

rtm = remove_padding(rtm, modelPy.padsizes)

rtmo = remove_padding(rtmo, modelPy.padsizes)

illum = remove_padding(illum, modelPy.padsizes)

# offset map

h_map = inv_off(offset_map(rtm, rtmo))

rtm = laplacian(rtm)

rtm[illum .> 0] ./= illum[illum .> 0]

soffs = zeros(Float32, size(model)..., length(offs))

for (i, h) in enumerate(offs)

soffs[:, :, i] .+= rtm .* delta_h(h_map, h, 2*diff(offs)[1])

end

d = (spacing(model)..., 1f0)

n = size(soffs)

o = (origin(model)..., minimum(offs))

return PhysicalParameter(n, d, o, soffs)

end

| ImageGather | https://github.com/slimgroup/ImageGather.jl.git |

|

[

"MIT"

] | 0.3.0 | e7f8a3e4bafabc4154558c1f3cd6f3e5915a534f | code | 2857 | export mv_avg_2d, delta_h, envelope, mute, mute!, laplacian, offset_map

"""

mv_avg_2d(x; k=5)

2D moving average with a square window of width k

"""

function mv_avg_2d(x::AbstractArray{T, 2}; k=5) where T

out = 1f0 * x

nx, ny = size(x)

kl, kr = k÷2 + 1, k÷2

for i=kl:nx-kr, j=kl:ny-kr

out[i, j] = sum(x[i+k, j+l] for k=-kr:kr, l=-kr:kr)/k^2

end

out

end

"""

envelope(x)

Envelope of a vector or a 2D matrix. The envelope over the first dimension is taken for a 2D matrix (see DSP `hilbert`)

"""

envelope(x::AbstractArray{T, 1}) where T = abs.(hilbert(x))

"""

envelope(x)

Envelope of a vector or a 1D vector (see DSP `hilbert`)

"""

envelope(x::AbstractArray{T, 2}) where T = abs.(hilbert(x))

"""

delta_h(ha, h, tol)

Compute the binary mask where `ha` is within `tol` of `h`.

"""

delta_h(ha::AbstractArray{T, 2}, h::Number, tol::Number) where T = Float32.(abs.(h .- ha) .<= tol)

"""

mute!(shot, offsets;vp=1500, t0=1/10, dt=0.004)

In place direct wave muting of a shot record with water sound speed `vp`, time sampling `dt` and firing time `t0`.

"""

function mute!(shot::AbstractArray{Ts, 2}, offsets::Vector{To}; vp=1500, t0=1/10, dt=.004) where {To, Ts}

length(offsets) == size(shot, 2) || throw(DimensionMismatch("Number of offsets has to match the number of traces"))

inds = trunc.(Integer, (offsets ./ vp .+ t0) ./ dt)

inds = min.(max.(1, inds), size(shot, 1))

for (rx, i) = enumerate(inds)

shot[1:i, rx] .= 0f0

end

end

"""

mute(shot, offsets;vp=1500, t0=1/10, dt=0.004)

Direct wave muting of a shot record with water sound speed `vp`, time sampling `dt` and firing time `t0`.

"""

function mute(shot::AbstractArray{Ts, 2}, offsets::Vector{To}; vp=1500, t0=1/10, dt=.004) where {To, Ts}

out = Ts(1) .* shot

mute!(out, offsets; vp=vp, t0=t0, dt=dt)

out

end

"""

laplacian(image; hx=1, hy=1)

2D laplacian of an image with grid spacings (hx, hy)

"""

function laplacian(image::AbstractArray{T, 2}; hx=1, hy=1) where T

scale = 1/(hx*hy)

out = 1 .* image

@views begin

out[2:end-1, 2:end-1] .= -4 .* image[2:end-1, 2:end-1]

out[2:end-1, 2:end-1] .+= image[1:end-2, 2:end-1] + image[2:end-1, 1:end-2]

out[2:end-1, 2:end-1] .+= image[3:end, 2:end-1] + image[2:end-1, 3:end]

end

return scale .* out

end

"""

offset_map(rtm, rtmo; scale=0)

Return the regularized least-square division of rtm and rtmo. The regularization consists of the envelope and moving average

followed by the least-square division [`surface_gather`](@ref)

"""

function offset_map(rtm::AbstractArray{T, 2}, rtmo::AbstractArray{T, 2}; scale=0) where T

rtmn = mv_avg_2d(envelope(rtm))

rtmo = mv_avg_2d(envelope(rtmo))

offset_map = rtmn .* rtmo ./ (rtmn .* rtmn .+ eps(Float32)) .- scale

return offset_map

end

| ImageGather | https://github.com/slimgroup/ImageGather.jl.git |

|

[

"MIT"

] | 0.3.0 | e7f8a3e4bafabc4154558c1f3cd6f3e5915a534f | code | 2340 | using ImageGather, Test

using JUDI, LinearAlgebra

# Set up model structure

n = (301, 151) # (x,y,z) or (x,z)

d = (10., 10.)

o = (0., 0.)

# Velocity [km/s]

v = 1.5f0 .* ones(Float32,n)

v[:, 76:end] .= 2.5f0

v0 = 1.5f0 .* ones(Float32,n)

# Slowness squared [s^2/km^2]

m = (1f0 ./ v).^2

m0 = (1f0 ./ v0).^2

# Setup info and model structure

nsrc = 1 # number of sources

model = Model(n, d, o, m; nb=40)

model0 = Model(n, d, o, m0; nb=40)

dm = model.m - model0.m

# Set up receiver geometry

nxrec = 151

xrec = range(0f0, stop=(n[1] -1)*d[1], length=nxrec)

yrec = 0f0

zrec = range(20f0, stop=20f0, length=nxrec)

# receiver sampling and recording time

timeD = 2000f0 # receiver recording time [ms]

dtD = 4f0 # receiver sampling interval [ms]

# Set up receiver structure

recGeometry = Geometry(xrec, yrec, zrec; dt=dtD, t=timeD, nsrc=nsrc)

# Set up source geometry (cell array with source locations for each shot)

xsrc = 1500f0

ysrc = 0f0

zsrc = 20f0

# Set up source structure

srcGeometry = Geometry(xsrc, ysrc, zsrc; dt=dtD, t=timeD)

# setup wavelet

f0 = 0.015f0 # kHz

wavelet = ricker_wavelet(timeD, dtD, f0)

q = diff(judiVector(srcGeometry, wavelet))

###################################################################################################

opt = Options()

# Setup operators

F = judiModeling(model, srcGeometry, recGeometry; options=opt)

J0 = judiJacobian(F(model0), q)

# Nonlinear modeling

dD = J0*dm

rtm = J0'*dD

# Common surface offset image gather

offsets = -40f0:model.d[1]:40f0

nh = length(offsets)

for dims in ((:x, :z), :z, :x)

J = judiExtendedJacobian(F(model0), q, offsets, dims=dims)

ssodm = J'*dD

@show size(ssodm)

@test size(ssodm, 1) == nh

ssor = zeros(Float32, size(ssodm)...)

for h=1:size(ssor, 1)

if dims == (:x, :z)

for h2=1:size(ssor, 2)

ssor[h, h2, :, :] .= dm.data

end

else

ssor[h, :, :] .= dm.data

end

end

dDe = J*ssor

# @show norm(dDe - dD), norm(ssor[:] - dm[:])

a, b = dot(dD, dDe), dot(ssodm[:], ssor[:])

@test (a-b)/(a+b) ≈ 0 atol=1f-3 rtol=0

# Make sure zero offset is the rtm, remove the sumpadding

ih = div(nh, 2)+1

rtmc = dims == (:x, :z) ? ssodm[ih, ih, :, :] : ssodm[ih, :, :]

@test norm(rtm.data - rtmc, Inf) ≈ 0f0 atol=1f-4 rtol=0

end | ImageGather | https://github.com/slimgroup/ImageGather.jl.git |

|

[

"MIT"

] | 0.3.0 | e7f8a3e4bafabc4154558c1f3cd6f3e5915a534f | docs | 2381 |

[](https://slimgroup.github.io/ImageGather.jl/dev)

[](https://zenodo.org/badge/latestdoi/376881077)

# ImageGather.jl

This package implements image gather functions for seismic inversion and QC. We currently offer surface gathers via the double RTM method and subsurface offset image gather. The subsurface offset common image gather are implemented via the extended born modeling operator and its adjoint allowing for extended LSRTM.

# Example

A simple example of a surface image gather for a layered model can be found in `examples/layers_cig.jl`. This example produces the following image gathers:

: Offset gather for a good and bad background velocity model at a different position along X.

This first plot shows the expected behavior with respect to the offset. We clearly see the flat events with a correct velocity while we obtain the predicted upwards and downwards parabolic events for a respectively low and high velocity at large offset.

: Stack of offset gather along the X direction showing the difference in flatness and alignment for a good and bad background velocity model.

This second plot shows the stack along X of different gathers that shows the focusing onto the reflectors with a correct velocity while the high and low-velocity models produce unfocused and misplaced events.

# Contributions

Contributions are welcome.

# References

This work is inspired by the original [double RTM](https://library.seg.org/doi/pdfplus/10.1190/segam2012-1007.1) paper for the surface gather and the [Extended Born]() paper for extended jacobian for subsurface gathers.

- Matteo Giboli, Reda Baina, Laurence Nicoletis, and Bertrand Duquet, "Reverse Time Migration surface offset gathers part 1: a new method to produce ‘classical’ common image gathers", SEG Technical Program Expanded Abstracts 2012.

- Raanan Dafni, William W Symes, "Generalized reflection tomography formulation based on subsurface offset extended imaging",

Geophysical Journal International, Volume 216, Issue 2, February 2019, Pages 1025–1042, https://doi.org/10.1093/gji/ggy478

# Authors

This package is developed and maintained by Mathias Louboutin <[email protected]>

| ImageGather | https://github.com/slimgroup/ImageGather.jl.git |

|

[

"MIT"

] | 0.3.0 | e7f8a3e4bafabc4154558c1f3cd6f3e5915a534f | docs | 877 | # ImageGather.jl documentation

ImageGather.jl provides computational QC tools for wave-equation based inversion. Namely we provides two main widely used workflows:

- Surface offset gathers (also called surface common image gather). Surface gather compute images of (RTMs) for different offset to verify the accuracy of the background velocity. The method we implement here is based on the double-rtm method [Giboli](@cite) that allows the computation of the gather with two RTMs only instead of one per offset (or offset bin).

- Subsurface offset gathers (also called subsurface common image gather) [sscig](@cite).

## Surface offset gathers

```@docs

surface_gather

double_rtm_cig

```

## Subsurface offset gathers

```@docs

judiExtendedJacobian

```

## Utility functions

```@autodocs

Modules = [ImageGather]

Pages = ["utils.jl"]

```

# References

```@bibliography

```

| ImageGather | https://github.com/slimgroup/ImageGather.jl.git |

|

[

"MIT"

] | 0.2.1 | 06fdba84326a461861f836d352308d4a17f3370b | code | 1357 | using ClusterDepth

using Documenter

using Glob

using Literate

DocMeta.setdocmeta!(ClusterDepth, :DocTestSetup, :(using ClusterDepth); recursive=true)

GENERATED = joinpath(@__DIR__, "src")

for subfolder ∈ ["explanations", "HowTo", "tutorials", "reference"]

local SOURCE_FILES = Glob.glob(subfolder * "/*.jl", GENERATED)

#config=Dict(:repo_root_path=>"https://github.com/unfoldtoolbox/UnfoldSim")

foreach(fn -> Literate.markdown(fn, GENERATED * "/" * subfolder), SOURCE_FILES)

end

makedocs(;

modules=[ClusterDepth],

authors="Benedikt V. Ehinger, Maanik Marathe",

repo="https://github.com/s-ccs/ClusterDepth.jl/blob/{commit}{path}#{line}",

sitename="ClusterDepth.jl",

format=Documenter.HTML(;

prettyurls=get(ENV, "CI", "false") == "true",

canonical="https://s-ccs.github.io/ClusterDepth.jl",

edit_link="main",

assets=String[],

),

pages=[

"Home" => "index.md",

"Tutorials" => [

"An EEG Example" => "tutorials/eeg.md",

"EEG Example - Multichannel data" => "tutorials/eeg-multichannel.md",

],

"Reference" => [

"Clusterdepth FWER" => "reference/type1.md",

"Troendle FWER" => "reference/type1_troendle.md",

],

],

)

deploydocs(;

repo="github.com/s-ccs/ClusterDepth.jl",

devbranch="main",

)

| ClusterDepth | https://github.com/s-ccs/ClusterDepth.jl.git |

|

[

"MIT"

] | 0.2.1 | 06fdba84326a461861f836d352308d4a17f3370b | code | 4439 | using ClusterDepth

using Random

using CairoMakie

using UnfoldSim

using StatsBase

using Distributions

using DataFrames

# # Family Wise Error of ClusterDepth

# Here we calculate the Family Wise Error the ClusterDepth Correct

# That is, we want to check that pvalues we get, indeed return us a type-1 of 5% for all time-points

# The point being, that if you do tests on 113 timepoints, the chance that one is significant is not 5% but

(1 - (1 - 0.05)^113) * 100 ##%

# ## Setup Simulation

# Let's setup a simulation using UnfoldSim.jl. We simulate a simple 1x2 design with 20 subjects

n_subjects = 20

design = MultiSubjectDesign(n_subjects=n_subjects, n_items=2, items_between=Dict(:condition => ["small", "large"]))

first(generate_events(design), 5)

#

# Next we define a ground-truth signal + relation to events/design with Wilkinson Formulas.

# we want no condition effect, therefore β for the condition should be 0. We further add some inter-subject variability with the mixed models.

# We will use a simulated P300 signal, which at 250Hz has 113 samples.

signal = MixedModelComponent(;

basis=UnfoldSim.p300(; sfreq=250),

formula=@formula(0 ~ 1 + condition + (1 | subject)),

β=[1.0, 0.0],

σs=Dict(:subject => [1]),

);

#

# Let's move the actual simulation into a function, so we can call it many times.

# Note that we use (`RedNoise`)[https://unfoldtoolbox.github.io/UnfoldSim.jl/dev/literate/reference/noisetypes/] which has lot's of Autocorrelation between timepoints. nice!

function run_fun(r)

data, events = simulate(MersenneTwister(r), design, signal, UniformOnset(; offset=5, width=4), RedNoise(noiselevel=1); return_epoched=true)

data = reshape(data, size(data, 1), :)

data = data[:, events.condition.=="small"] .- data[:, events.condition.=="large"]

return data, clusterdepth(data'; τ=quantile(TDist(n_subjects - 1), 0.95), nperm=1000)

end;

# ## Understanding the simulation

# let's have a look at the actual data by running it once, plotting condition wise trials, the ERP and histograms of uncorrected and corrected p-values

data, pval = run_fun(5)

conditionSmall = data[:, 1:2:end]

conditionLarge = data[:, 2:2:end]

pval_uncorrected = 1 .- cdf.(TDist(n_subjects - 1), abs.(ClusterDepth.studentt(conditionSmall .- conditionLarge)))

sig = pval_uncorrected .<= 0.025;

# For the uncorrected p-values based on the t-distribution, we get a type1 error over "time":

mean(sig)

# this is the type 1 error of 5% we expected.

# !!! note

# Type-I error is not the FWER (family wise error rate). FWER is the property of a set of tests (in this case tests per time-point), we can calculate it by repeating such tests,

# and checking for each repetition whether any sample of a repetition is significant (e.g. `any(sig)` followed by a `mean(repetitions_anysig)`).

f = Figure();

series!(Axis(f[1, 1], title="condition==small"), conditionSmall', solid_color=:red)

series!(Axis(f[1, 2], title="condition==large"), conditionLarge', solid_color=:blue)

ax = Axis(f[2, 1:2], title="ERP (mean over trials)")

sig = allowmissing(sig)

sig[sig.==0] .= missing

@show sum(skipmissing(sig))

lines!(sig, color=:gray, linewidth=4)

lines!(ax, mean(conditionSmall, dims=2)[:, 1], solid_color=:red)

lines!(ax, mean(conditionLarge, dims=2)[:, 1], solid_color=:blue)

hist!(Axis(f[3, 1], title="uncorrected pvalues"), pval_uncorrected, bins=0:0.01:1.1)

hist!(Axis(f[3, 2], title="clusterdepth corrected pvalues"), pval, bins=0:0.01:1.1)

f

#----

# ## Run simulations

# This takes some seconds (depending on your infrastructure)

reps = 500

res = fill(NaN, reps, 2)

Threads.@threads for r = 1:reps

data, pvals = run_fun(r)

res[r, 1] = mean(pvals .<= 0.05)

res[r, 2] = mean(abs.(ClusterDepth.studentt(data)) .>= quantile(TDist(n_subjects - 1), 0.975))

end;

# Finally, let's calculate the percentage of simulations where we find a significant effect somewhere

mean(res .> 0, dims=1) |> x -> (:clusterdepth => x[1], :uncorrected => x[2])

# Nice, correction seems to work in principle :) Clusterdepth is not exactly 5%, but with more repetitions we should get there (e.g. with 5000 repetitions, we get 0.051%).

# !!! info

# if you look closely, the `:uncorrected` value (around 60%) is not as bad as the 99% promised in the introduction. This is due to the correlation between the tests introduced by the noise. Indeed, a good exercise is to repeat everything, but put `RedNoise` to `WhiteNoise` | ClusterDepth | https://github.com/s-ccs/ClusterDepth.jl.git |

|

[

"MIT"

] | 0.2.1 | 06fdba84326a461861f836d352308d4a17f3370b | code | 2456 | using ClusterDepth

using Random

using CairoMakie

using UnfoldSim

using StatsBase

using ProgressMeter

using Distributions

# ### Family Wise Error of Troendle

# Here we calculate the Family Wise Error of doing `ntests` at the same time.

# That is, we want to check that Troendle indeed returns us a type-1 of 5% for a _set_ of tests.

#

# The point being, that if you do 30 tests, the chance that one is significant is not 5% but actually

(1 - (1 - 0.05)^30) * 100 ##%

# Let's setup some simulation parameters

reps = 1000

perms = 1000

ntests = 30;

# we will use the student-t in it's 2-sided variant (abs of it)

fun = x -> abs.(ClusterDepth.studentt(x));

# this function simulates data without any effect (H0), then the permutations, and finally calls troendle

function run_fun(r, perms, fun, ntests)

rng = MersenneTwister(r)

data = randn(rng, ntests, 50)

perm = Matrix{Float64}(undef, size(data, 1), perms)

stat = fun(data)

for p = 1:perms

ClusterDepth.sign_permute!(rng, data)

perm[:, p] = fun(data)

end

return data, stat, troendle(perm, stat)

end;

# let's test it once

data, stats_t, pvals = run_fun(1, perms, fun, ntests);

println("data:", size(data), " t-stats:", size(stats_t), " pvals:", size(pvals))

# run the above function `reps=1000`` times - we also save the uncorrected t-based pvalue

pvals_all = fill(NaN, reps, 2, ntests)

Threads.@threads for r = 1:reps

data, stat, pvals = run_fun(r, perms, fun, ntests)

pvals_all[r, 1, :] = pvals

pvals_all[r, 2, :] = (1 .- cdf.(TDist(size(data, 2)), abs.(stat))) .* 2 # * 2 becaue of twosided. Troendle takes this into account already

end;

# Let's check in how many of our simlations we have a significant p-value =<0.05

res = any(pvals_all[:, :, :] .<= 0.05, dims=3)[:, :, 1]

mean(res .> 0, dims=1) |> x -> (:troendle => x[1], :uncorrected => x[2])

# Nice. Troendle fits perfectly and the uncorrected is pretty close to what we calculated above!

# Finally we end this with a short figure to get a better idea of how this data looks like and a histogram of the p-values

f = Figure()

ax = f[1, 1] = Axis(f)

lines!(ax, abs.(ClusterDepth.studentt(data)))

heatmap!(Axis(f[2, 1]), data)

series!(Axis(f[2, 2]), data[:, 1:7]')

h1 = scatter!(Axis(f[1, 2]; yscale=log10), pvals, label="troendle")

hlines!([0.05, 0.01])

hist!(Axis(f[3, 1]), pvals_all[:, 1, :][:], bins=0:0.01:1.1)

hist!(Axis(f[3, 2]), pvals_all[:, 2, :][:], bins=0:0.01:1.1)

f

| ClusterDepth | https://github.com/s-ccs/ClusterDepth.jl.git |

|

[

"MIT"

] | 0.2.1 | 06fdba84326a461861f836d352308d4a17f3370b | code | 746 | using ClusterDepth

using Random

using CairoMakie

n_t =40 # timepoints

n_sub = 50

n_perm = 5000

snr = 0.5 # signal to nois

## add a signal to the middle

signal = vcat(zeros(n_t÷4), sin.(range(0,π,length=n_t÷2)), zeros(n_t÷4))

## same signal for all subs

signal = repeat(signal,1,n_sub)

## add noise

data = randn(MersenneTwister(123),n_t,n_sub).+ snr .* signal

## by default assumes τ=2.3 (~alpha=0.05), and one-sample ttest

@time pvals = clusterdepth(data);

f = Figure()

ax = f[1,1] = Axis(f)

lines!(abs.(ClusterDepth.studentt(data)))

h1 = scatter(f[1,2],pvals;axis=(;yscale=log10),label="troendle")

pvals2 = clusterdepth(data;pval_type=:naive)

h2 = scatter!(1.2:40.2,pvals2,color="red",label="naive")

#hlines!(([0.05]))

axislegend()

f | ClusterDepth | https://github.com/s-ccs/ClusterDepth.jl.git |

|

[

"MIT"

] | 0.2.1 | 06fdba84326a461861f836d352308d4a17f3370b | code | 3245 | using ClusterDepth

using Random

using CairoMakie

using UnfoldSim

using Unfold

using UnfoldMakie

using Statistics

# ## How to use clusterDepth multiple comparison correction on multichannel data

# This tutorial is adapted from the first EEG example and uses the HArtMuT NYhead model (https://github.com/harmening/HArtMuT) to simulate multiple channels.

# First set up the EEG simulation as before, with one subject and 40 trials:

design = SingleSubjectDesign(conditions=Dict(:condition => ["car", "face"])) |> x -> RepeatDesign(x, 40);

p1 = LinearModelComponent(;

basis=p100(; sfreq=250),

formula=@formula(0 ~ 1),

β=[1.0]

);

n170 = LinearModelComponent(;

basis=UnfoldSim.n170(; sfreq=250),

formula=@formula(0 ~ 1 + condition),

β=[1.0, 0.5], # condition effect - faces are more negative than cars

);

p300 = LinearModelComponent(;

basis=UnfoldSim.p300(; sfreq=250),

formula=@formula(0 ~ 1 + condition),

β=[1.0, 0], # no p300 condition effect

);

# Now choose some source coordinates for each of the p100, n170, p300 that we want to simulate, and use the helper function closest_srcs to get the HArtMuT sources that are closest to these coordinates:

src_coords = [

[20, -78, -10], #p100

[-20, -78, -10], #p100

[50, -40, -25], #n170

[0, -50, 40], #p300

[0, 5, 20], #p300

];

headmodel_HArtMuT = headmodel()

get_closest = coord -> UnfoldSim.closest_src(coord, headmodel_HArtMuT.cortical["pos"]) |> pi -> magnitude(headmodel_HArtMuT; type="perpendicular")[:, pi]

p1_l = p1 |> c -> MultichannelComponent(c, get_closest([-20, -78, -10]))

p1_r = p1 |> c -> MultichannelComponent(c, get_closest([20, -78, -10]))

n170_r = n170 |> c -> MultichannelComponent(c, get_closest([50, -40, -25]))

p300_do = p300 |> c -> MultichannelComponent(c, get_closest([0, -50, -40]))

p300_up = p300 |> c -> MultichannelComponent(c, get_closest([0, 5, 20]))

data, events = simulate(MersenneTwister(1), design, [p1_l, p1_r, n170_r, p300_do, p300_up],

UniformOnset(; offset=0.5 * 250, width=100),

RedNoise(noiselevel=1); return_epoched=true);

# ## Plotting

# This is what the data looks like, for one channel/trial respectively:

f = Figure()

Axis(f[1, 1], title="Single channel, all trials", xlabel="time", ylabel="y")

series!(data[1, :, :]', solid_color=:black)

lines!(mean(data[1, :, :], dims=2)[:, 1], color=:red)

hlines!([0], color=:gray)

Axis(f[2, 1], title="All channels, average over trials", xlabel="time", ylabel="y")

series!(mean(data, dims=3)[:, :, 1], solid_color=:black)

hlines!([0], color=:gray)

f

# And some topoplots:

positions = [Point2f(p[1] + 0.5, p[2] + 0.5) for p in to_positions(headmodel_HArtMuT.electrodes["pos"]')]

df = UnfoldMakie.eeg_matrix_to_dataframe(mean(data, dims=3)[:, :, 1], string.(1:length(positions)));

Δbin = 20 # 20 samples / bin

plot_topoplotseries(df, Δbin; positions=positions, visual=(; enlarge=1, label_scatter=false))

# ## ClusterDepth

# Now that the simulation is done, let's try out ClusterDepth and plot our results

# Note that this is a simple test of "activity" vs. 0

pvals = clusterdepth(data; τ=1.6, nperm=100);

fig, ax, hm = heatmap(transpose(pvals))

ax.title = "pvals";

ax.xlabel = "time";

ax.ylabel = "channel";

Colorbar(fig[:, end+1], hm);

fig

| ClusterDepth | https://github.com/s-ccs/ClusterDepth.jl.git |

|

[

"MIT"

] | 0.2.1 | 06fdba84326a461861f836d352308d4a17f3370b | code | 3918 | using ClusterDepth

using Random

using CairoMakie

using UnfoldSim

using StatsBase

using Distributions

using DataFrames

using Unfold

using UnfoldMakie

# ## How to use clusterDepth multiple comparison correction

# !!! info

# This tutorial focuses on single-channel data. For multichannel data, see the tutorial "Further EEG Example".

# Let's setup an EEG simulation using UnfoldSim.jl. We simulate a simple 1x2 design with 20 subjects, each with 40 trials

n_subjects = 20

design = MultiSubjectDesign(n_subjects=n_subjects, n_items=40, items_between=Dict(:condition => ["car", "face"]))

first(generate_events(design), 5)

# next we define a ground-truth signal + relation to events/design with Wilkinson Formulas

# let's simulate a P100, a N170 and a P300 - but an effect only on the N170

p1 = MixedModelComponent(;

basis=UnfoldSim.p100(; sfreq=250),

formula=@formula(0 ~ 1 + (1 | subject)),

β=[1.0],

σs=Dict(:subject => [1]),

);

n170 = MixedModelComponent(;

basis=UnfoldSim.n170(; sfreq=250),

formula=@formula(0 ~ 1 + condition + (1 + condition | subject)),

β=[1.0, -0.5], # condition effect - faces are more negative than cars

σs=Dict(:subject => [1, 0.2]), # random slope yes please!

);

p300 = MixedModelComponent(;

basis=UnfoldSim.p300(; sfreq=250),

formula=@formula(0 ~ 1 + condition + (1 + condition | subject)),

β=[1.0, 0], ## no p300 condition effect

σs=Dict(:subject => [1, 1.0]), # but a random slope for condition

);

## Start the simulation

data, events = simulate(MersenneTwister(1), design, [p1, n170, p300], UniformOnset(; offset=500, width=100), RedNoise(noiselevel=1); return_epoched=true)

times = range(0, stop=size(data, 1) / 250, length=size(data, 1));

# let's fit an Unfold Model for each subject

# !!! note

# In principle, we do not need Unfold here - we could simply calculate (subjectwise) means of the conditions, and their time-resolved difference. Using Unfold.jl here simply generalizes it to more complex design, e.g. with continuous predictors etc.

models = map((d, ev) -> (fit(UnfoldModel, @formula(0 ~ 1 + condition), DataFrame(ev), d, times), ev.subject[1]),

eachslice(data; dims=3),

groupby(events, :subject))

# now we can inspect the data easily, and extract the face-effect

function add_subject!(df, s)

df[!, :subject] .= s

return df

end

allEffects = map((x) -> (effects(Dict(:condition => ["car", "face"]), x[1]), x[2]) |> (x) -> add_subject!(x[1], x[2]), models) |> e -> reduce(vcat, e)

plot_erp(allEffects; mapping=(color=:condition, group=:subject))

# extract the face-coefficient from the linear model

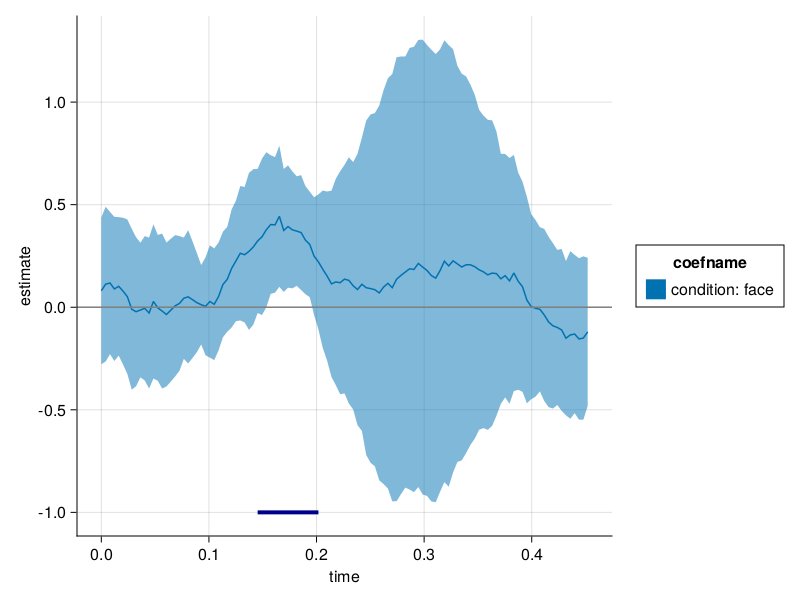

allCoefs = map(m -> (coeftable(m[1]), m[2]) |> (x) -> add_subject!(x[1], x[2]), models) |> e -> reduce(vcat, e)

plot_erp(allCoefs; mapping=(group=:subject, col=:coefname))

# let's unstack the tidy-coef table into a matrix and put it to clusterdepth for clusterpermutation testing

faceCoefs = allCoefs |> x -> subset(x, :coefname => x -> x .== "condition: face")

erpMatrix = unstack(faceCoefs, :subject, :time, :estimate) |> x -> Matrix(x[:, 2:end])' |> collect

summary(erpMatrix)

# ## Clusterdepth

pvals = clusterdepth(erpMatrix; τ=quantile(TDist(n_subjects - 1), 0.95), nperm=5000);

# well - that was fast, less than a second for a cluster permutation test. not bad at all!

# ## Plotting

# Some plotting, and we add the identified cluster

# first calculate the ERP

faceERP = groupby(faceCoefs, [:time, :coefname]) |>

x -> combine(x, :estimate => mean => :estimate,

:estimate => std => :stderror);

# put the pvalues into a nicer format

pvalDF = ClusterDepth.cluster(pvals .<= 0.05) |> x -> DataFrame(:from => x[1] ./ 250, :to => (x[1] .+ x[2]) ./ 250, :coefname => "condition: face")

plot_erp(faceERP; stderror=true, pvalue=pvalDF)

# Looks good to me! We identified the cluster :-)

# old unused code to use extra=(;pvalue=pvalDF) in the plotting function, but didnt work.

| ClusterDepth | https://github.com/s-ccs/ClusterDepth.jl.git |

|

[

"MIT"

] | 0.2.1 | 06fdba84326a461861f836d352308d4a17f3370b | code | 569 | module ClusterDepth

using Random

using Images

using SparseArrays

#using ExtendableSparse

using StatsBase

import Base.show

struct ClusterDepthMatrix{T} <: AbstractMatrix{T}

J::Any

ClusterDepthMatrix(x) = new{SparseMatrixCSC}(sparse(x))

end

Base.show(io::IO, x::ClusterDepthMatrix) = show(io, x.J)

Base.show(io::IO, m::MIME"text/plain", x::ClusterDepthMatrix) = show(io, m, x.J)

struct test{T} <: AbstractArray{T,2}

J::Any

end

include("cluster.jl")

include("pvals.jl")

include("utils.jl")

include("troendle.jl")

export troendle

export clusterdepth

end

| ClusterDepth | https://github.com/s-ccs/ClusterDepth.jl.git |

|

[

"MIT"

] | 0.2.1 | 06fdba84326a461861f836d352308d4a17f3370b | code | 7523 | """

clusterdepth(rng,data::AbstractArray;τ=2.3, statfun=x->abs.(studentt(x)),permfun=sign_permute!,nperm=5000,pval_type=:troendle)

calculate clusterdepth of given datamatrix.

- `data`: `statfun` will be applied on last dimension of data (typically this will be subjects)

Optional

- `τ`: Cluster-forming threshold

- `nperm`: number of permutations, default 5000

- `stat_type`: default the one-sample `t-test`, custom function can be specified (see `statfun!` and `statfun`)

- `side_type`: default: `:abs` - what function should be applied after the `statfun`? could be `:abs`, `:square`, `:positive` to test positive clusters, `:negative` to test negative clusters. Custom function can be provided, see `sidefun``

- `perm_type`: default `:sign` for one-sample data (e.g. differences), performs sign flips. custom function can be provided, see `permfun`

- `pval_type`: how to calculate pvalues within each cluster, default `:troendle`, see `?pvals`

- `statfun` / `statfun!` a function that either takes one or two arguments and aggregates over last dimension. in the two argument case we expect the first argument to be modified inplace and provide a suitable Vector/Matrix.

- `sidefun`: default `abs`. Provide a function to be applied on each element of the output of `statfun`.

- `permfun` function to permute the data, should accept an RNG-object and the data. can be inplace, the data is copied, but the same array is shared between permutations

"""

clusterdepth(data::AbstractArray, args...; kwargs...) =

clusterdepth(MersenneTwister(1), data, args...; kwargs...)

function clusterdepth(

rng,

data::AbstractArray;

τ = 2.3,

stat_type = :onesample_ttest,

perm_type = :sign,

side_type = :abs,

nperm = 5000,

pval_type = :troendle,

(statfun!) = nothing,

statfun = nothing,

)

if stat_type == :onesample_ttest

statfun! = studentt!

statfun = studentt

end

if perm_type == :sign

permfun = sign_permute!

end

if side_type == :abs

sidefun = abs

elseif side_type == :square

sidefun = x -> x^2

elseif side_type == :negative

sidefun = x -> -x

elseif side_type == :positive

sidefun = nothing # the default :)

else

@assert isnothing(side_type) "unknown side_type ($side_type) specified. Check your spelling and ?clusterdepth"

end

cdmTuple = perm_clusterdepths_both(

rng,

data,

permfun,

τ;

nₚ = nperm,

(statfun!) = statfun!,

statfun = statfun,

sidefun = sidefun,

)

return pvals(statfun(data), cdmTuple, τ; type = pval_type)

end

function perm_clusterdepths_both(

rng,

data,

permfun,

τ;

statfun = nothing,

(statfun!) = nothing,

nₚ = 1000,

sidefun = nothing,

)

@assert !(isnothing(statfun) && isnothing(statfun!)) "either statfun or statfun! has to be defined"

data_perm = deepcopy(data)

rows_h = Int[]

cols_h = Int[]

vals_h = Float64[]

rows_t = Int[]

cols_t = Int[]

vals_t = Float64[]

if ndims(data_perm) == 2

d0 = Array{Float64}(undef, size(data_perm, 1))

else

d0 = Array{Float64}(undef, size(data_perm)[[1, 2]])

end

#@debug size(d0)

#@debug size(data_perm)

for i = 1:nₚ

# permute

d_perm = permfun(rng, data_perm)

if isnothing(statfun!)

d0 = statfun(d_perm)

else

# inplace!

statfun!(d0, d_perm)

end

if !isnothing(sidefun)

d0 .= sidefun.(d0)

end

# get clusterdepth

(fromTo, head, tail) = calc_clusterdepth(d0, τ)

# save it

if !isempty(head)

append!(rows_h, fromTo)

append!(cols_h, fill(i, length(fromTo)))

append!(vals_h, head)

#Jₖ_head[fromTo,i] .+=head

end

if !isempty(tail)

append!(rows_t, fromTo)

append!(cols_t, fill(i, length(fromTo)))

append!(vals_t, tail)

#Jₖ_tail[fromTo,i] .+=tail

end

end

Jₖ_head = sparse(rows_h, cols_h, vals_h)#SparseMatrixCSC(nₚ,maximum(rows_h), cols_h,rows_h,vals_h)

Jₖ_tail = sparse(rows_t, cols_t, vals_t)#SparseMatrixCSC(nₚ,maximum(rows_t), cols_t,rows_t,vals_t)

return ClusterDepthMatrix((Jₖ_head)), ClusterDepthMatrix((Jₖ_tail))

end

"""

calc_clusterdepth(data,τ)

returns tuple with three entries:

1:maxLength, maximal clustervalue per clusterdepth head, same for tail

We assume data and τ have already been transformed for one/two sided testing, so that we can do d0.>τ for finding clusters

"""

function calc_clusterdepth(d0::AbstractArray{<:Real,2}, τ)

nchan = size(d0, 1)

# save all the results from calling calc_clusterdepth on individual channels

(allFromTo, allHead, allTail) = (

Array{Vector{Integer}}(undef, nchan),

Array{Vector{Float64}}(undef, nchan),

Array{Vector{Float64}}(undef, nchan),

)

fromTo = []

for i = 1:nchan

(a, b, c) = calc_clusterdepth(d0[i, :], τ)

allFromTo[i] = a

allHead[i] = b

allTail[i] = c

if (length(a) > length(fromTo)) # running check to find the length ('fromTo') of the largest cluster

fromTo = a

end

end

# for each clusterdepth value, select the largest cluster value found across all channels

(head, tail) = (zeros(length(fromTo)), zeros(length(fromTo)))

for i = 1:nchan

for j in allFromTo[i]

if allHead[i][j] > head[j]

head[j] = allHead[i][j]

end

if allTail[i][j] > tail[j]

tail[j] = allTail[i][j]

end

end

end

return fromTo, head, tail

end

function calc_clusterdepth(d0, τ)

startIX, len = cluster(d0 .> τ)

if isempty(len) # if nothing above threshold, just go on

return [], [], []

end

maxL = 1 + maximum(len) # go up only to max-depth

valCol_head = Vector{Float64}(undef, maxL)

valCol_tail = Vector{Float64}(undef, maxL)

fromTo = 1:maxL

for j in fromTo

# go over clusters implicitly

# select clusters that are larger (at least one)

selIX = len .>= (j - 1)

if !isempty(selIX)

ix = startIX[selIX] .+ (j - 1)

valCol_head[j] = maximum(d0[ix])

# potential optimization is that for j = 0 and maxL = 0, tail and head are identical

ix = startIX[selIX] .+ (len[selIX]) .- (j - 1)

valCol_tail[j] = maximum(d0[ix])

end

end

return fromTo, valCol_head, valCol_tail

end

"""

finds neighbouring clusters in the vector and returns start + length vectors

if the first and last cluster start on the first/last sample, we dont know their real depth

Input is assumed to be a thresholded Array with only 0/1

"""

function cluster(data)

label = label_components(data)

K = maximum(label)

start = fill(0, K)

stop = fill(0, K)

for k = 1:K

#length[k] = sum(label.==k)

start[k] = findfirst(==(k), label)

stop[k] = findlast(==(k), label)

end

len = stop .- start

# if the first and last cluster start on the first/last sample, we dont know their real depth

if length(start) > 0 && start[end] + len[end] == length(data)

start = start[1:end-1]

len = len[1:end-1]

end

if length(start) > 0 && start[1] == 1

start = start[2:end]

len = len[2:end]

end

return start, len

end

| ClusterDepth | https://github.com/s-ccs/ClusterDepth.jl.git |

|

[

"MIT"

] | 0.2.1 | 06fdba84326a461861f836d352308d4a17f3370b | code | 1071 | """

permute data and returns clusterdepth

returns for each cluster-depth, the maximum stat-value over all detected clusters, from both sides at the same time

Conversely, perm_clusterdepths_both, returns the maximum stat-value from head and tail separately (combined after calcuating pvalues)

"""

function perm_clusterdepths_combined(rng, data, statFun)

Jₖ = spzeros(m - 1, nₚ)

for i = 1:nₚ

d0 = perm(rng, data, statFun)

startIX, len = get_clusterdepths(d0, τ)

maxL = maximum(len)

# go up to max-depth

valCol = Vector{Float64}(undef, maxL)

fromTo = 1:maxL

for j in fromTo

# go over clusters implicitly

# select clusters that are larger (at least one)

selIX = len .>= j

if !isempty(selIX)

ix_head = startIX[selIX] .+ j

ix_tail = len[selIX] .+ startIX[selIX] .- j

valCol[j] = maximum(d0[vcat(ix_head, ix_tail)])

end

end

Jₖ[fromTo, i] = valCol

end

return Jₖ

end;

| ClusterDepth | https://github.com/s-ccs/ClusterDepth.jl.git |

|

[

"MIT"

] | 0.2.1 | 06fdba84326a461861f836d352308d4a17f3370b | code | 2697 |

"""

pvals(data;kwargs...) = pvals(data[2:end],data[1];kwargs...)

pvals(data::AbstractVector,stat::Real;type=:twosided)

calculates pvalues based on permutation results

if called with `stat`, first entry is assumed to be the observation

"""

pvals(data; kwargs...) = pvals(data[2:end], data[1]; kwargs...)

function pvals(data::AbstractVector, stat::Real; type = :twosided)

data = vcat(stat, data)

if type == :greater || type == :twosided

comp = >=

if type == :twosided

data = abs.(data)

stat = abs.(stat)

end

elseif type == :lesser

comp = <=

else

error("not implemented")

end

pvals = (sum(comp(stat[1]), data)) / (length(data))

return pvals

end

"""

Calculate pvals from cluster-depth permutation matrices

"""

pvals(stat::Matrix, args...; kwargs...) =

mapslices(x -> pvals(x, args...; kwargs...), stat; dims = (2))

pvals(stat, J::ClusterDepthMatrix, args...; kwargs...) =

pvals(stat, (J,), args...; kwargs...)

function pvals(

stat::AbstractVector,

Jₖ::NTuple{T,ClusterDepthMatrix},

τ;

type = :troendle,

) where {T}

start, len = cluster(stat .> τ) # get observed clusters

p = fill(1.0, size(stat, 1))

if type == :troendle

for k = 1:length(start) # go over clusters

s = start[k]

l = len[k]

forwardIX = s:(s+l)

@views t_head = troendle(Jₖ[1], sparsevec(1:(l+1), stat[forwardIX], l + 1))

@views p[forwardIX] = t_head[1:(l+1)]

if length(Jₖ) == 2

backwardIX = (s+l):-1:s

@views t_tail = troendle(Jₖ[2], sparsevec(1:(l+1), stat[backwardIX], l + 1))

@views p[backwardIX] = max.(p[backwardIX], t_tail[1:(l+1)])

end

end

elseif type == :naive

function getJVal(Jₖ, l)

if l >= size(Jₖ, 1)

valsNull = 0

else

valsNull = @view Jₖ[l+1, :]

end

return valsNull

end

for k = 1:length(start) # go over clusters

for ix = start[k]:(start[k]+len[k])

p[ix] =

(1 + sum(stat[ix] .<= getJVal(Jₖ[1].J, len[k]))) /

(size(Jₖ[1].J, 2) + 1)

if length(Jₖ) == 2

tail_p =

(1 + sum((stat[ix] .<= getJVal(Jₖ[2].J, len[k])))) ./

(size(Jₖ[2].J, 2) + 1)

p[ix] = max(p[ix], tail_p)

end

end

end

#p = p .+ 1/size(Jₖ[1].J,2)

else

error("unknown type")

end

# add conservative permutation fix

return p

end

| ClusterDepth | https://github.com/s-ccs/ClusterDepth.jl.git |

|

[

"MIT"

] | 0.2.1 | 06fdba84326a461861f836d352308d4a17f3370b | code | 5098 | """

pvals_rankbased(perm::AbstractMatrix,stat::AbstractVector;kwargs...)

pvals_rankbased(cdm::ClusterDepthMatrix,stat::AbstractSparseVector;kwargs...)

Takes a matrix filled with permutation results and a observed matrix (size ntests) and calculates the p-values for each entry along

the permutation dimension

`perm`: Matrix of permutations with dimensions `(ntests x permutations)`

`stat`: Vector of observed statistics size `ntests`

For `cdm::ClusterDepthMatrix` we can easily trim the permutation matrix towards the end (as it is actually a ragged Matrix).

That is, the permutation matrix might look like:

perm = [

x x x x x x x;

x x . x . x x;

x x . x . . x;

x x . . . . x;

. x . . . . .;

. . . . . . .;

. . . . . . .;

. . . . . . .;

]

Then the last three rows can simply be removed. rowwise-Ranks/pvalues would be identical anyway

The same will be checked for the stat-vector, if the stat vector is only "3" depths long, but the permutation has been calculated for "10" depths, we do not need to check the last 7 depths

of the permutation matrix.

**Output** will always be Dense Matrix (length(stat),nperm+1) with the first column being the pvalues of the observations

"""

function pvals_rankbased(cdm::ClusterDepthMatrix, stat::AbstractSparseVector; kwargs...)

perm = cdm.J

if length(stat) > size(perm, 1) # larger cluster in stat than perms

perm = sparse(findnz(perm)..., length(stat), size(perm, 2))

elseif length(stat) < size(perm, 1)

#stat = sparsevec(findnz(stat)...,size(perm,1))

i, j, v = findnz(perm)

ix = i .<= length(stat)

perm = sparse(i[ix], j[ix], v[ix], length(stat), size(perm, 2))

end

pvals = pvals_rankbased(perm, stat; kwargs...)

return pvals

end

function pvals_rankbased(perm::AbstractMatrix, stat::AbstractVector; type = :twosided)

# add stat to perm

d = hcat(stat, perm)

# fix specific testing

if type == :twosided

d = .-abs.(d)

elseif type == :greater

d = .-d

elseif type == :lesser

else

error("unknown type")

end

d = Matrix(d)

# potential improvement, competerank 1224 -> but should be modified competerank 1334, then we could skip the expensive ceil below

d = mapslices(tiedrank, d, dims = 2)

# rank & calc p-val

#@show(d[1:10,1:10])

d .= ceil.(d) ./ (size(d, 2))

return d

end

"""

in some sense: `argsort(argunique(x))`, returns the indices to get a sorted unique of x

"""

function ix_sortUnique(x)

uniqueidx(v) = unique(i -> v[i], eachindex(v))

un_ix = uniqueidx(x)

sort_ix = sortperm(x[un_ix])

sortUn_ix = un_ix[sort_ix]

return sortUn_ix

end

"""

calculates the minimum in `X` along `dims=2` in the columns specified by àrrayOfIndicearrays` which could be e.g. `[[1,2],[5,6],[3,4,7]]`

"""

function multicol_minimum(x::AbstractMatrix, arrayOfIndicearrays::AbstractVector)

min = fill(NaN, size(x, 2), length(arrayOfIndicearrays))

for to = 1:length(arrayOfIndicearrays)

@views min[:, to] = minimum(x[arrayOfIndicearrays[to], :], dims = 1)

end

return min

end

"""

function troendle(perm::AbstractMatrix,stat::AbstractVector;type=:twosided)

Multiple Comparison Correction as in Troendle 1995

`perm` with size ntests x nperms

`stat` with size ntests

`type` can be :twosided (default), :lesser, :greater

Heavily inspired by the R implementation in permuco from Jaromil Frossard

Note: While permuco is released under BSD, the author Jaromil Frossard gave us an MIT license for the troendle and the clusterdepth R-functions.

"""

function troendle(perm::AbstractMatrix, stat::AbstractVector; type = :twosided)

pAll = pvals_rankbased(perm, stat; type = type)

# get uncorrected pvalues of data

#@show size(pAll)

pD = pAll[:, 1] # get first observation

# rank the pvalues

pD_rank = tiedrank(pD)

# test in ascending order, same p-vals will be combined later

# the following two lines are

sortUn_ix = ix_sortUnique(pD_rank)

# these two lines would be identical

#testOrder = pD_rank[sortUn_ix]

#testOrder = sort(unique(pD_rank))

# as ranks can be tied, we have to take those columns, and run a "min" on them

testOrder_all = [findall(x .== pD_rank) for x in pD_rank[sortUn_ix]]

minPermPArray = multicol_minimum(pAll, testOrder_all)

# the magic happens here, per permutation

resortPermPArray = similar(minPermPArray)

# the following line can be made much faster by using views & reverse the arrays

#resortPermPArray = reverse(accumulate(min,reverse(minPermPArray),dims=2))

@views accumulate!(

min,

resortPermPArray[:, end:-1:1],

minPermPArray[:, end:-1:1],

dims = 2,

)

pval_testOrder = pvals.(eachcol(resortPermPArray); type = :lesser)

pval_testOrderMax = accumulate(max, pval_testOrder) # no idea why though

uniqueToNonUnique = vcat([fill(x, length(v)) for (x, v) in enumerate(testOrder_all)]...)

return pval_testOrderMax[uniqueToNonUnique][invperm(vcat(testOrder_all...))]

end

| ClusterDepth | https://github.com/s-ccs/ClusterDepth.jl.git |

|

[

"MIT"

] | 0.2.1 | 06fdba84326a461861f836d352308d4a17f3370b | code | 1827 | function studentt!(out::AbstractMatrix, x::AbstractArray{<:Real,3}; kwargs...)

for (x_ch, o_ch) in zip(eachslice(x, dims = 1), eachslice(out, dims = 1))

#@debug size(x_ch),size(o_ch)

studentt!(o_ch, x_ch; kwargs...)

end

return out

end

"""

studentt_test!(out,x;type=:abs)

strongly optimized one-sample t-test function.

Implements: t = mean(x) / ( sqrt(var(x))) / sqrt(size(x,2)-1)

Accepts 2D or 3D matrices, always aggregates over the last dimension

"""

function studentt_test!(out, x::AbstractMatrix)

mean!(out, x)

df = 1 ./ sqrt(size(x, 2) - 1)

#@debug size(out),size(x)

tmp = [1.0]

for k in eachindex(out)

std!(tmp, @view(x[k, :]), out[k])

@views out[k] /= (sqrt(tmp[1]) * df)

end

return out

end

function std!(tmp, x_slice, μ)

@views x_slice .= (x_slice .- μ) .^ 2

sum!(tmp, x_slice)

tmp .= sqrt.(tmp ./ (length(x_slice) - 1))

end

function studentt!(out, x)

#@debug size(out),size(x)

mean!(out, x)

out .= out ./ (std(x, mean = out, dims = 2)[:, 1] ./ sqrt(size(x, 2) - 1))

end

function studentt(x::AbstractMatrix)

# more efficient than this one liner

# studentt(x::AbstractMatrix) = (mean(x,dims=2)[:,1])./(std(x,dims=2)[:,1]./sqrt(size(x,2)-1))

μ = mean(x, dims = 2)[:, 1]

μ .= μ ./ (std(x, mean = μ, dims = 2)[:, 1] ./ sqrt(size(x, 2) - 1))

end

studentt(x::AbstractArray{<:Real,3}) =

dropdims(mapslices(studentt, x, dims = (2, 3)), dims = 3)

"""

Permutation via random sign-flip

Flips signs along the last dimension

"""

function sign_permute!(rng, x::AbstractArray)

n = ndims(x)

@assert n > 1 "vectors cannot be permuted"

fl = rand(rng, [-1, 1], size(x, n))

for (flip, xslice) in zip(fl, eachslice(x; dims = n))

xslice .= xslice .* flip

end

return x

end

| ClusterDepth | https://github.com/s-ccs/ClusterDepth.jl.git |

|

[

"MIT"

] | 0.2.1 | 06fdba84326a461861f836d352308d4a17f3370b | code | 562 | @testset "cluster" begin

s, l = ClusterDepth.cluster(

[4.0, 0.0, 10.0, 0.0, 3.0, 4.0, 0, 4.0, 4.0, 0.0, 0.0, 5.0] .> 0.9,

)

@test s == [3, 5, 8]

@test l == [0, 1, 1]

s, l = ClusterDepth.cluster([0.0, 0.0, 0.0, 0.0] .> 0.9)

@test s == []

@test l == []

end

@testset "Tests for 2D data" begin

data = randn(StableRNG(1), 4, 5)

@show ClusterDepth.calc_clusterdepth(data, 0)

end

@testset "Tests for 3D data" begin

data = randn(StableRNG(1), 3, 20, 5)

@show ClusterDepth.clusterdepth(data; τ = 0.4, nperm = 5)

end

| ClusterDepth | https://github.com/s-ccs/ClusterDepth.jl.git |

|

[

"MIT"

] | 0.2.1 | 06fdba84326a461861f836d352308d4a17f3370b | code | 1960 | @testset "pvals" begin

@testset "pvals-onePerm" begin

@test ClusterDepth.pvals(zeros(100), 1) == 1 / 101

@test ClusterDepth.pvals(vcat(1, zeros(100))) == 1 / 101

@test ClusterDepth.pvals(vcat(0, ones(100))) == 101 / 101

@test ClusterDepth.pvals(vcat(80, 0:99)) == 21 / 101

end

@testset "pvals-ClusterDepthMatrix" begin

cdm = ClusterDepth.ClusterDepthMatrix(sparse(ones(10, 1000)))

p = ClusterDepth.pvals([0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 2.0, 1.0, 0.0, 0.0], cdm, 0.1)

@test p[7] ≈ 1 / 1001

p = ClusterDepth.pvals([0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 2.0, 1.0, 0.0, 0.0], cdm, 2.1)

p .≈ 1000 / 1000

p = ClusterDepth.pvals(

[0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 2.0, 1.0, 0.0, 0.0],

cdm,

0.1,

type = :naive,

)

@test p[7] ≈ 1 / 1001

J = zeros(10, 1000)

J[1, :] .= 5

J[2, :] .= 3

J[3, :] .= 1

cdm = ClusterDepth.ClusterDepthMatrix(sparse(J))

p = ClusterDepth.pvals(

[4.0, 0.0, 10.0, 0.0, 3.0, 4.0, 0.0, 4.0, 4.0, 0.0, 0.0],

cdm,

0.9,

)

@test all((p .> 0.05) .== [1, 1, 0, 1, 1, 0, 1, 1, 0, 1, 1])

#two tailed

p = ClusterDepth.pvals(

[4.0, 0.0, 10.0, 0.0, 3.0, 4.0, 0.0, 4.0, 4.0, 0.0, 0.0],

(cdm, cdm),

0.9,

)

@test all((p .> 0.05) .== [1, 1, 0, 1, 1, 1, 1, 1, 1, 1, 1])

p = ClusterDepth.pvals([0.0, 1.0, 2.0, 3.0, 4.0, 5.1, 2.0, 6.0], (cdm, cdm), 0.9)

@test all((p .> 0.05) .== [1, 1, 1, 1, 1, 1, 1, 1])

p = ClusterDepth.pvals([0.0, 1.0, 2.0, 3.0, 4.0, 5.1, 2.0, 0], (cdm, cdm), 0.9)

@test all((p .> 0.05) .== [1, 1, 1, 0, 0, 0, 1, 1])

p = ClusterDepth.pvals([0.0, 1.0, 2.0, 3.0, 4.0, 2.0, 1.0, 0.0, 6], (cdm, cdm), 0.9)

@test all((p .> 0.05) .== [1, 1, 1, 0, 0, 1, 1, 1, 1])

end

end

| ClusterDepth | https://github.com/s-ccs/ClusterDepth.jl.git |

|

[

"MIT"

] | 0.2.1 | 06fdba84326a461861f836d352308d4a17f3370b | code | 179 | using ClusterDepth

include("setup.jl")

@testset "ClusterDepth.jl" begin

include("troendle.jl")

include("cluster.jl")

include("utils.jl")

include("pvals.jl")

end

| ClusterDepth | https://github.com/s-ccs/ClusterDepth.jl.git |

|

[

"MIT"

] | 0.2.1 | 06fdba84326a461861f836d352308d4a17f3370b | code | 96 | using Test

using Random

using LinearAlgebra

using StableRNGs

using StatsBase

using SparseArrays

| ClusterDepth | https://github.com/s-ccs/ClusterDepth.jl.git |

|

[

"MIT"

] | 0.2.1 | 06fdba84326a461861f836d352308d4a17f3370b | code | 2542 | @testset "troendle" begin

@testset "troendle()" begin

nperm = 900

ntests = 9

rng = StableRNG(1)

perm = randn(rng, ntests, nperm)

stat = [0.0, 0.0, -1, 1, -3.5, 3, 10, 10, -10]

p_cor = troendle(perm, stat)

@test length(p_cor) == ntests

@test all(p_cor[1:2] .== 1.0)

@test all(p_cor[7:9] .< 0.008)

#

statSig = [100.0, 100, 100, 100, 100, 100, 100, 100, 100]

p_cor = troendle(perm, statSig)

@test length(unique(p_cor)) == 1

@test all(p_cor .== (1 / (1 + nperm)))

# sidedness

h_cor = troendle(perm, stat; type = :greater) .< 0.05

@test h_cor == [0, 0, 0, 0, 0, 1, 1, 1, 0]

h_cor = troendle(perm, stat; type = :lesser) .< 0.05

@test h_cor == [0, 0, 0, 0, 1, 0, 0, 0, 1]

end

@testset "pvals_rankbased" begin

nperm = 9

ntests = 5

perm = randn(StableRNG(1), ntests, nperm)

perm[4:5, :] .= 0

J = sparse(perm)

stat_short = sparsevec(1:2, [3, 3])

stat_longer = sparsevec(1:2, [3, 3], ntests + 2)

stat = sparsevec(1:2, [3, 3], ntests)

cdm = ClusterDepth.ClusterDepthMatrix(J)

p = ClusterDepth.pvals_rankbased(perm, stat)

@test ClusterDepth.pvals_rankbased(cdm, stat) == p

# trimming shorter vec

p_short = ClusterDepth.pvals_rankbased(cdm, stat_short)

@test size(p_short) == (2, nperm + 1)

@test p_short == p[1:2, :]

# extending longer vec

p_long = ClusterDepth.pvals_rankbased(cdm, stat_longer)

@test size(p_long) == (ntests + 2, nperm + 1)

p = ClusterDepth.pvals_rankbased([1 2 3 4 5; 1 2 3 4 5], [3.5, 3])

@test p[:, 1] ≈ [0.5, 2.0 / 3]

@test p[1, 2:end] ≈ p[2, 2:end]

p = ClusterDepth.pvals_rankbased([1 2 3 4 5; 1 2 3 4 5], [0, 6])

@test p[:, 1] ≈ [1.0, 1.0 / 6]

p = ClusterDepth.pvals_rankbased(

ClusterDepth.ClusterDepthMatrix([1 2 3 4 5; 1 2 3 4 5]),

sparse([-6, -6, -6, -6, -6]),

)

@test all(p[:, 1] .== [1 / 6.0])

# test sidedness

p = ClusterDepth.pvals_rankbased([1 2 3 4 5; 1 2 3 4 5], [-6, 6])

@test p[:, 1] ≈ [1.0 / 6, 1.0 / 6]

p = ClusterDepth.pvals_rankbased([1 2 3 4 5; 1 2 3 4 5], [-6, 6]; type = :lesser)

@test p[:, 1] ≈ [1.0 / 6, 1.0]

p = ClusterDepth.pvals_rankbased([1 2 3 4 5; 1 2 3 4 5], [-6, 6]; type = :greater)

@test p[:, 1] ≈ [1.0, 1.0 / 6]

end

end

| ClusterDepth | https://github.com/s-ccs/ClusterDepth.jl.git |

|

[

"MIT"

] | 0.2.1 | 06fdba84326a461861f836d352308d4a17f3370b | code | 1587 | @testset "sign_permute" begin

m = [1 1 1; 2 2 2; 3 3 3; 4 4 4]

p = ClusterDepth.sign_permute!(StableRNG(2), deepcopy(m))

@test p[1, :] == [1, -1, 1]

# different seeds are different

@test p != ClusterDepth.sign_permute!(StableRNG(3), deepcopy(m))

# same seeds are the same

@test p == ClusterDepth.sign_permute!(StableRNG(2), deepcopy(m))

m = ones(1, 1000000)

@test abs(mean(ClusterDepth.sign_permute!(StableRNG(1), deepcopy(m)))) < 0.001

m = ones(1, 2, 3, 4, 5, 6, 7, 100)

o = ClusterDepth.sign_permute!(StableRNG(1), deepcopy(m))

@test sort(unique(mean(o, dims = 1:ndims(o)-1))) == [-1.0, 1.0]

end

@testset "studentt" begin

x = randn(StableRNG(1), 10000, 50)

t = ClusterDepth.studentt(x)

@test length(t) == 10000

@test maximum(abs.(t)) < 10 # we'd need to be super lucky ;)

@test mean(abs.(t) .> 2) < 0.06

#2D input data

data = randn(StableRNG(1), 4, 5)

@test size(ClusterDepth.studentt(data)) == (4,)

#3D input data

data = randn(StableRNG(1), 3, 4, 5)

@test size(ClusterDepth.studentt(data)) == (3, 4)

#

t = rand(10000)

ClusterDepth.studentt!(t, x)

@test t ≈ ClusterDepth.studentt(x)

@test length(t) == 10000

@test maximum(abs.(t)) < 10 # we'd need to be super lucky ;)

@test mean(abs.(t) .> 2) < 0.06

#2D input data

data = randn(StableRNG(1), 4, 5)

t = rand(4)

ClusterDepth.studentt!(t, data)

@test size(t) == (4,)

#3D input data

data = randn(StableRNG(1), 3, 4, 5)

@test size(ClusterDepth.studentt(data)) == (3, 4)

end

| ClusterDepth | https://github.com/s-ccs/ClusterDepth.jl.git |

|

[

"MIT"

] | 0.2.1 | 06fdba84326a461861f836d352308d4a17f3370b | docs | 2277 | # ClusterDepth

[](https://s-ccs.github.io/ClusterDepth.jl/dev/)

[](https://github.com/s-ccs/ClusterDepth.jl/actions/workflows/CI.yml?query=branch%3Amain)

[](https://codecov.io/gh/s-ccs/ClusterDepth.jl)

Fast implementation (~>100x faster than R/C++) of the ClusterDepth multiple comparison algorithm from Frossard and Renaud [Neuroimage 2022](https://doi.org/10.1016/j.neuroimage.2021.118824)

This is especially interesting to EEG signals. Currently only acts on a single channel/timeseries. Multichannel as discussed in the paper is the next step.

## Quickstart

```julia

using ClusterDepth

pval_corrected = clusterdepth(erpMatrix; τ=2.3,nperm=5000)

```

## FWER check

We checked FWER for `troendle(...)` and `clusterdepth(...)` [(link to docs)](https://www.s-ccs.de/ClusterDepth.jl/dev/reference/type1/)

For clustedepth we used 5000 repetitions, 5000 permutations, 200 tests.

|simulation|noise|uncorrected|type|

|---|---|---|---|

|clusterdepth|white|1.0|0.0554|

|clusterdepth|red*|0.835|0.0394|

|troendle|white|XX|XX|

|troendle|red*|XX|XX|

Uncorrected should be 1 - it is very improbable, than none of the 200 tests in one repetition is not significant (we expect 5% to be).

Corrected should be 0.05 (CI-95 [0.043,0.0564])

\* red noise introduces strong correlations between individual trials, thus makes the tests correlated while following the H0.

## Citing

Algorithm published in https://doi.org/10.1016/j.neuroimage.2021.118824 - Frossard & Renaud 2022, Neuroimage

Please also cite this toolbox: [](https://zenodo.org/badge/latestdoi/593411464)

Some functions are inspired by [R::permuco](https://cran.r-project.org/web/packages/permuco/index.html), written by Jaromil Frossard. Note: Permuco is GPL licensed, but Jaromil Frossard released the relevant clusteterdepth functions to me under MIT. Thereby, this repository can be licensed under MIT.

1

| ClusterDepth | https://github.com/s-ccs/ClusterDepth.jl.git |

|

[

"MIT"

] | 0.2.1 | 06fdba84326a461861f836d352308d4a17f3370b | docs | 627 | ```@meta

CurrentModule = ClusterDepth

```

# ClusterDepth

## Comparison to permuco R implementation

The implementation to Permuco is similar, but ClusterDepth.jl is more barebone - that is, we dont offer many permutation schemes, focus on the ClusterDepth Algorithm, and don't provide the nice wrappers like `clusterLM`.

Timing wise, a simple test on 50 subjects, 100 repetitions, 5000 permutations shows the following results:

|timepoints|ClusterDepth.jl|permuco|julia-speedup|

|---|---|---|---|

|40|0.03s|2.9s|~100x|

|400|0.14s|22s|~160x|

|4000|1.88s|240s|~120x|

```@index

```

```@autodocs

Modules = [ClusterDepth]

```

| ClusterDepth | https://github.com/s-ccs/ClusterDepth.jl.git |

|

[

"MIT"

] | 0.2.1 | 6c676e79f98abb6d33fa28122cad099f1e464afe | code | 4059 | module Bench

using IncompleteLU

using BenchmarkTools

using SparseArrays

using LinearAlgebra: axpy!, I

using Random: seed!

using Profile

function go()

seed!(1)

A = sprand(10_000, 10_000, 10 / 10_000) + 15I

LU = ilu(A)

Profile.clear_malloc_data()

@profile ilu(A)

end

function axpy_perf()

A = sprand(1_000, 1_000, 10 / 1_000) + 15I

y = SparseVectorAccumulator{Float64}(1_000)

axpy!(1.0, A, 1, A.colptr[1], y)

axpy!(1.0, A, 2, A.colptr[2], y)

axpy!(1.0, A, 3, A.colptr[3], y)

Profile.clear_malloc_data()

axpy!(1.0, A, 1, A.colptr[1], y)

axpy!(1.0, A, 2, A.colptr[2], y)

axpy!(1.0, A, 3, A.colptr[3], y)

end

function sum_values_row_wise(A::SparseMatrixCSC)

n = size(A, 1)

reader = RowReader(A)

sum = 0.0

for row = 1 : n

column = first_in_row(reader, row)

while is_column(column)

sum += nzval(reader, column)

column = next_column!(reader, column)

end

end

sum

end

function sum_values_column_wise(A::SparseMatrixCSC)

n = size(A, 1)

sum = 0.0

for col = 1 : n

for idx = A.colptr[col] : A.colptr[col + 1] - 1

sum += A.nzval[idx]

end

end

sum

end

function bench_alloc()

A = sprand(1_000, 1_000, 10 / 1_000) + 15I

sum_values_row_wise(A)

Profile.clear_malloc_data()

sum_values_row_wise(A)

end

function bench_perf()

A = sprand(10_000, 10_000, 10 / 10_000) + 15I

@show sum_values_row_wise(A)

@show sum_values_column_wise(A)

fst = @benchmark Bench.sum_values_row_wise($A)

snd = @benchmark Bench.sum_values_column_wise($A)

fst, snd

end

function bench_ILU()

seed!(1)

A = sprand(10_000, 10_000, 10 / 10_000) + 15I

LU = ilu(A, τ = 0.1)

@show nnz(LU.L) nnz(LU.U)

# nnz(LU.L) = 44836

# nnz(LU.U) = 54827

result = @benchmark ilu($A, τ = 0.1)

# BenchmarkTools.Trial:

# memory estimate: 16.24 MiB

# allocs estimate: 545238

# --------------

# minimum time: 116.923 ms (0.00% GC)

# median time: 127.514 ms (2.18% GC)

# mean time: 130.932 ms (1.75% GC)

# maximum time: 166.202 ms (3.05% GC)

# --------------

# samples: 39

# evals/sample: 1

# After switching to row reader.

# BenchmarkTools.Trial:

# memory estimate: 15.96 MiB

# allocs estimate: 545222

# --------------

# minimum time: 55.264 ms (0.00% GC)

# median time: 61.872 ms (4.73% GC)

# mean time: 61.906 ms (3.72% GC)

# maximum time: 74.615 ms (4.12% GC)

# --------------

# samples: 81

# evals/sample: 1

# After skipping off-diagonal elements in A

# BenchmarkTools.Trial:

# memory estimate: 15.96 MiB

# allocs estimate: 545222

# --------------

# minimum time: 51.187 ms (0.00% GC)

# median time: 55.767 ms (4.27% GC)

# mean time: 56.586 ms (3.50% GC)

# maximum time: 72.987 ms (7.53% GC)

# --------------

# samples: 89

# evals/sample: 1

# After moving L and U to Row Reader structs

# BenchmarkTools.Trial:

# memory estimate: 13.03 MiB

# allocs estimate: 495823

# --------------

# minimum time: 43.062 ms (0.00% GC)

# median time: 46.205 ms (2.83% GC)

# mean time: 47.076 ms (1.76% GC)

# maximum time: 65.956 ms (1.96% GC)

# --------------

# samples: 107

# evals/sample: 1

# After emptying the fill-in vecs during copy.

# BenchmarkTools.Trial:

# memory estimate: 13.03 MiB

# allocs estimate: 495823

# --------------

# minimum time: 41.930 ms (0.00% GC)

# median time: 44.583 ms (2.25% GC)

# mean time: 45.785 ms (1.38% GC)

# maximum time: 66.683 ms (1.59% GC)

# --------------

# samples: 110

# evals/sample: 1

end

end

# Bench.go() | IncompleteLU | https://github.com/haampie/IncompleteLU.jl.git |

|

[

"MIT"

] | 0.2.1 | 6c676e79f98abb6d33fa28122cad099f1e464afe | code | 1026 | module BenchInsertion

using IncompleteLU

using BenchmarkTools

function do_stuff(A)

n = size(A, 1)

U_row = IncompleteLU.InsertableSparseVector{Float64}(n);

A_reader = IncompleteLU.RowReader(A)

for k = 1 : n

col = first_in_row(A_reader, k)

while is_column(col)

IncompleteLU.add!(U_row, nzval(A_reader, col), col, n + 1)