licenses

sequencelengths 1

3

| version

stringclasses 677

values | tree_hash

stringlengths 40

40

| path

stringclasses 1

value | type

stringclasses 2

values | size

stringlengths 2

8

| text

stringlengths 25

67.1M

| package_name

stringlengths 2

41

| repo

stringlengths 33

86

|

|---|---|---|---|---|---|---|---|---|

[

"MIT"

] | 0.2.1 | 6512022b637fc17c297bb3050ba2e6cdd50b6fa0 | code | 753 | function Header(io::IOStream)

seek(io, 0)

offset = read(io, UInt32)

header_length = read(io, UInt32)

n_variants = read(io, UInt32)

n_samples = read(io, UInt32)

magic = read(io, 4)

# check magic number

@assert String(magic) == "bgen" || all(magic .== 0) "Magic number mismatch"

seek(io, header_length)

flags = read(io, UInt32)

compression = flags & 0x03

layout = (flags & 0x3c) >> 2

has_sample_ids = convert(Bool, flags & 0x80000000 >> 31)

Header(offset, header_length, n_variants, n_samples, compression, layout,

has_sample_ids)

end

function Header(filename::String)

io = open(filename)

Header(io)

end

@inline function offset_first_variant(h::Header)

return h.offset + 4

end

| BGEN | https://github.com/OpenMendel/BGEN.jl.git |

|

[

"MIT"

] | 0.2.1 | 6512022b637fc17c297bb3050ba2e6cdd50b6fa0 | code | 6752 | function Index(path::AbstractString)

db = SQLite.DB(path)

Index(path, db, [], [], [], [])

end

@inline function _check_idx(b::Bgen)

@assert b.idx !== nothing "bgen index (.bgi) is needed"

end

function select_region(idx::Index, chrom::AbstractString;

start=nothing, stop=nothing)

if start === nothing && stop === nothing

q = "SELECT file_start_position FROM Variant WHERE chromosome=?"

params = (chrom,)

elseif stop === nothing

q = "SELECT file_start_position FROM Variant" *

" WHERE chromosome=? AND position>=?"

params = (chrom, start)

else

q = "SELECT file_start_position FROM Variant" *

" WHERE chromosome=? AND position>=? AND position<=?"

params = (chrom, start, stop)

end

r = (DBInterface.execute(idx.db, q, params) |> columntable)[1]

end

"""

select_region(bgen, chrom; start=nothing, stop=nothing)

Select variants from a region. Returns variant start offsets on the file.

Returns a `BgenVariantIteratorFromOffsets` object.

"""

function select_region(b::Bgen, chrom::AbstractString;

start=nothing, stop=nothing)

_check_idx(b)

offsets = select_region(b.idx, chrom; start=start, stop=stop)

BgenVariantIteratorFromOffsets(b, offsets)

end

function variant_by_rsid(idx::Index, rsid::AbstractString; allele1=nothing, allele2=nothing, allow_multiple=false)

q = "SELECT file_start_position, allele1, allele2 FROM Variant WHERE rsid= ?"

params = (rsid,)

r = (DBInterface.execute(idx.db, q, params) |> columntable)#[1]

if length(r) == 0

error("variant with rsid $rsid not found")

end

if allow_multiple

return r[1]

end

if allele1 === nothing && allele2 === nothing

if length(r[1]) > 1

if !allow_multiple

error("multiple variant matches with $rsid. Try to specify allele1 and allele2, or set allow_multiple=true.")

end

end

return r[1][1]

else

firstidx = nothing

lastidx = nothing

if allele1 !== nothing && allele2 === nothing

firstidx = findall(x -> x == allele1, r.allele1)

if length(firstidx) > 1

error("Nonunique match with $rsid, allele1: $allele1.")

elseif length(firstidx) == 0

error("No match with $rsid, allele1: $allele1.")

end

return r.file_start_position[firstidx[1]]

elseif allele2 !== nothing && allele1 === nothing

lastidx = findall(x -> x == allele2, r.allele2)

if length(lastidx) > 1

error("Nonunique match with $rsid, allele2: $allele2.")

elseif length(lastidx) == 0

error("No match with $rsid, allele2: $allele2.")

end

return r.file_start_position[lastidx[1]]

else

firstidx = findall(x -> x == allele1, r.allele1)

lastidx = findall(x -> x == allele2, r.allele2)

jointidx = intersect(firstidx, lastidx)

if length(jointidx) > 1

error("Nonunique match with $rsid, allele1: $allele1, allele2: $allele2.")

elseif length(jointidx) == 0

error("No match with $rsid, allele1: $allele1, allele2: $allele2.")

end

return r.file_start_position[jointidx[1]]

end

end

end

"""

variant_by_rsid(bgen, rsid)

Find a variant by rsid

"""

function variant_by_rsid(b::Bgen, rsid::AbstractString; allele1=nothing, allele2=nothing, varid=nothing)

@assert !(varid !== nothing && (allele1 !== nothing || allele2 !== nothing)) "either define varid or alleles"

_check_idx(b)

offset = variant_by_rsid(b.idx, rsid; allele1=allele1, allele2=allele2, allow_multiple = varid !== nothing)

if varid !== nothing

vs = map(x -> BgenVariant(b, x), offset)

v_idxs = findall(x -> x.varid == varid, vs)

if length(v_idxs) > 1

error("Multiple matches.")

elseif length(v_idxs) == 0

error("no matches.")

else

return vs[v_idxs[1]]

end

end

return BgenVariant(b, offset)

end

function variant_by_pos(idx::Index, pos::Integer)

q = "SELECT file_start_position FROM Variant WHERE position= ?"

params = (pos,)

r = (DBInterface.execute(idx.db, q, params) |> columntable)[1]

if length(r) == 0

error("variant match at $pos not found")

elseif length(r) > 1

error("multiple variant matches at $pos")

end

return r[1]

end

"""

variant_by_pos(bgen, pos)

Get the variant of bgen variant given `pos` in the index file

"""

function variant_by_pos(b::Bgen, pos::Integer)

_check_idx(b)

offset = variant_by_pos(b.idx, pos)

return BgenVariant(b, offset)

end

function variant_by_index(idx::Index, first::Integer, last::Union{Nothing, Integer}=nothing)

if last === nothing

q = "SELECT file_start_position FROM Variant LIMIT 1 OFFSET ?"

params = (first - 1,)

else

q = "SELECT file_start_position FROM Variant LIMIT ? OFFSET ?"

params = (last - first + 1, first - 1)

end

r = (DBInterface.execute(idx.db, q, params) |> columntable)[1]

return r

end

"""

variant_by_index(bgen, n)

get the `n`-th variant (1-based).

"""

function variant_by_index(b::Bgen, first::Integer, last::Union{Nothing, Integer}=nothing)

_check_idx(b)

if last === nothing

offset = variant_by_index(b.idx, first)[1]

return BgenVariant(b, offset)

else

offsets = variant_by_index(b.idx, first, last)

return BgenVariantIteratorFromOffsets(b, offsets)

end

end

function offsets(idx::Index)

if length(idx.offsets) != 0

return idx.offsets

end

q = "SELECT file_start_position FROM Variant"

r = (DBInterface.execute(idx.db, q) |> columntable)[1]

resize!(idx.offsets, length(r))

idx.offsets .= r

return r

end

function rsids(idx::Index)

if length(idx.rsids) != 0

return idx.rsids

end

q = "SELECT rsid FROM Variant"

r = (DBInterface.execute(idx.db, q) |> columntable)[1]

resize!(idx.rsids, length(r))

idx.rsids .= r

return r

end

function chroms(idx::Index)

if length(idx.chroms) != 0

return idx.chroms

end

q = "SELECT chromosome FROM Variant"

r = (DBInterface.execute(idx.db, q) |> columntable)[1]

resize!(idx.chroms, length(r))

idx.chroms .= r

return r

end

function positions(idx::Index)

if length(idx.positions) != 0

return idx.positions

end

q = "SELECT position FROM Variant"

r = (DBInterface.execute(idx.db, q) |> columntable)[1]

resize!(idx.positions, length(r))

idx.positions .= r

return r

end

| BGEN | https://github.com/OpenMendel/BGEN.jl.git |

|

[

"MIT"

] | 0.2.1 | 6512022b637fc17c297bb3050ba2e6cdd50b6fa0 | code | 4889 | abstract type BgenVariantIterator <: VariantIterator end

@inline function Base.eltype(vi::BgenVariantIterator)

BgenVariant

end

"""

BgenVariantIteratorFromStart(b::Bgen)

Variant iterator that iterates from the beginning of the Bgen file

"""

struct BgenVariantIteratorFromStart <: BgenVariantIterator

b::Bgen

end

function Base.iterate(vi::BgenVariantIteratorFromStart,

state=offset_first_variant(vi.b))

if state >= vi.b.fsize

return nothing

else

v = BgenVariant(vi.b, state)

nextstate = v.next_var_offset

return (v, nextstate)

end

end

@inline function Base.length(vi::BgenVariantIteratorFromStart)

vi.b.header.n_variants

end

@inline function Base.size(vi::BgenVariantIteratorFromStart)

(vi.b.header.n_variants, )

end

"""

BgenVariantIteratorFromOffsets(b::Bgen, offsets::Vector{UInt})

BgenVariant iterator that iterates over a vector of offsets

"""

struct BgenVariantIteratorFromOffsets <: BgenVariantIterator

b::Bgen

offsets::Vector{UInt}

end

function Base.iterate(vi::BgenVariantIteratorFromOffsets, state=1)

state > length(vi.offsets) ? nothing :

(BgenVariant(vi.b, vi.offsets[state]), state + 1)

end

@inline function Base.length(vi::BgenVariantIteratorFromOffsets)

length(vi.offsets)

end

@inline function Base.size(vi::BgenVariantIteratorFromOffsets)

size(vi.offsets)

end

struct Filter{I, T} <: BgenVariantIterator

itr::I

min_maf::AbstractFloat

min_hwe_pval::AbstractFloat

min_info_score::AbstractFloat

min_success_rate_per_variant::AbstractFloat

rmask::Union{Nothing,BitVector}

cmask::Union{Nothing,BitVector}

decompressed::Union{Nothing, Vector{UInt8}}

end

"""

BGEN.filter(itr; min_maf=NaN, min_hwe_pval=NaN, min_success_rate_per_variant=NaN,

cmask=trues(n_variants(itr.b)), rmask=trues(n_variants(itr.b)))

"Filtered" iterator for variants based on min_maf, min_hwe_pval, min_success_rate_per_variant,

cmask, and rmask.

"""

filter(itr::BgenVariantIterator;

T=Float32,

min_maf=NaN, min_hwe_pval=NaN, min_info_score=NaN,

min_success_rate_per_variant=NaN,

cmask = trues(n_variants(itr.b)),

rmask = nothing,

decompressed = nothing) =

Filter{typeof(itr), T}(itr, min_maf, min_hwe_pval, min_info_score, min_success_rate_per_variant,

rmask, cmask, decompressed)

function Base.iterate(f::Filter{I,T}, state...) where {I,T}

io, h = f.itr.b.io, f.itr.b.header

if state !== ()

y = Base.iterate(f.itr, state[1][2:end]...)

cnt = state[1][1]

else

y = Base.iterate(f.itr)

cnt = 1

end

while y !== nothing

v, s = y

passed = true

if !f.cmask[cnt]

passed = false

end

if passed && (v.genotypes === nothing || v.genotypes.decompressed === nothing)

decompressed = decompress(io, v, h; decompressed=f.decompressed)

elseif passed

decompressed = v.genotypes.decompressed

end

startidx = 1

if passed && v.genotypes === nothing

p = parse_preamble(decompressed, h, v)

v.genotypes = Genotypes{T}(p, decompressed)

elseif passed

p = v.genotypes.preamble

end

if passed && h.layout == 2

startidx += 10 + h.n_samples

end

if passed && !isnan(f.min_maf)

current_maf = maf(p, v.genotypes.decompressed, startidx, h.layout, f.rmask)

if current_maf < f.min_maf && passed

passed = false

end

end

if passed && !isnan(f.min_hwe_pval)

hwe_pval = hwe(p, v.genotypes.decompressed, startidx, h.layout, f.rmask)

if hwe_pval < f.min_hwe_pval

passed = false

end

end

if passed && !isnan(f.min_info_score)

current_info_score = info_score(p, v.genotypes.decompressed, startidx, h.layout, f.rmask)

if current_info_score < f.min_info_score && passed

passed = false

end

end

if passed && !isnan(f.min_success_rate_per_variant)

successes = length(intersect(p.missings, (1:n_samples(f.itr.b)[f.rmask])))

success_rate = successes / count(f.rmask)

if success_rate < f.min_success_rate_per_variant && passed

passed = false

end

end

cnt += 1

if passed

return v, (cnt, s...)

end

y = iterate(f.itr, s...)

end

nothing

end

eltype(::Type{Filter{I,T}}) where {I,T} = BgenVariant

IteratorEltype(::Type{Filter{I,T}}) where {I,T} = IteratorEltype(I)

IteratorSize(::Type{<:Filter}) = SizeUnknown()

reverse(f::Filter) = Filter(reverse(f.itr), f.min_maf, f.min_hwe_pval,

f.min_info_score, f.min_success_rate_per_varinat, f.rmask, f.cmask, f.decompressed)

| BGEN | https://github.com/OpenMendel/BGEN.jl.git |

|

[

"MIT"

] | 0.2.1 | 6512022b637fc17c297bb3050ba2e6cdd50b6fa0 | code | 407 | """

minor_certain(freq, n_checked, z)

Check if minor allele is certain.

- `freq`: frequency of minor or major allele

- `n_checked`: number of individuals checked so far

- `z`: cutoff of "z" value, defaults to `5.0`

"""

function minor_certain(freq::Float64, n_checked::Integer, z::Float64=5.0)

delta = z * sqrt(freq * (1-freq) / n_checked)

return !(freq - delta < 0.5 && freq + delta > 0.5)

end

| BGEN | https://github.com/OpenMendel/BGEN.jl.git |

|

[

"MIT"

] | 0.2.1 | 6512022b637fc17c297bb3050ba2e6cdd50b6fa0 | code | 1087 | function get_samples(io::IOStream, n_samples::Integer)

sample_header_length = read(io, UInt32)

sample_n_check = read(io, UInt32)

if sample_n_check != 0

@assert n_samples == sample_n_check "Inconsistent number of samples"

else

@warn "Sample names unavailable. Do you have a separate '.sample' file?"

end

samples = String[]

for i in 1:n_samples

id_length = read(io, UInt16)

id = read(io, id_length)

push!(samples, String(id))

end

samples

end

function get_samples(path::String, n_samples::Integer)

@assert endswith(path, ".sample") "Extension of the file should be .sample"

io = open(path)

keys = split(readline(io)) # header

key_idx = ("ID_1" in keys && "ID_2" in keys) ? 2 : 1

readline(io) # types

samples = readlines(io)

samples = map(x -> split(x, " ")[key_idx], samples)

@assert length(samples) == n_samples "Inconsistent number of samples"

close(io)

samples

end

function get_samples(n_samples::Integer)

samples = [string(i) for i in 1:n_samples]

samples

end

| BGEN | https://github.com/OpenMendel/BGEN.jl.git |

|

[

"MIT"

] | 0.2.1 | 6512022b637fc17c297bb3050ba2e6cdd50b6fa0 | code | 1653 | struct Header

offset::UInt32

header_length::UInt32

n_variants::UInt32

n_samples::UInt32

compression::UInt8

layout::UInt8

has_sample_ids::Bool

end

const Samples = Vector{String}

struct Index

path::String

db::SQLite.DB

offsets::Vector{UInt64}

rsids::Vector{String}

chroms::Vector{String}

positions::Vector{UInt32}

end

struct Preamble

n_samples::UInt32

n_alleles::UInt16

phased::UInt8

min_ploidy::UInt8

max_ploidy::UInt8

ploidy::Union{UInt8, Vector{UInt8}}

bit_depth::UInt8

max_probs::Int

missings::Vector{Int}

end

mutable struct Genotypes{T}

preamble::Preamble # Once parsed, it will not be destroyed unless Genotypes is destroyed

decompressed::Union{Nothing,Vector{UInt8}}

probs::Union{Nothing, Vector{T}}

minor_idx::UInt8 # index of minor allele

dose::Union{Nothing, Vector{T}}

dose_mean_imputed::Bool

minor_allele_dosage::Bool

end

mutable struct BgenVariant <: Variant

offset::UInt64

geno_offset::UInt64 # to the start of genotype block

next_var_offset::UInt64

geno_block_size::UInt32

n_samples::UInt32

varid::String

rsid::String

chrom::String

pos::UInt32

n_alleles::UInt16

alleles::Vector{String}

# length-1 for parsed one, empty array for not parsed yet or destroyed,

genotypes::Union{Nothing, Genotypes}

end

struct Bgen <: GeneticData

io::IOStream

fsize::UInt64

header::Header

samples::Samples

idx::Union{Index, Nothing}

# note: detailed information of variables stored in Variable struct,

# accessed thru VariantIterator

ref_first::Bool

end

| BGEN | https://github.com/OpenMendel/BGEN.jl.git |

|

[

"MIT"

] | 0.2.1 | 6512022b637fc17c297bb3050ba2e6cdd50b6fa0 | code | 11050 | """

hwe(b::Bgen, v::BgenVariant; T=Float32, decompressed=nothing)

hwe(p::Preamble, d::Vector{UInt8}, idx::Vector{<:Integer}, layout::UInt8,

rmask::Union{Nothing, Vector{UInt16}})

Hardy-Weinberg equilibrium test for diploid biallelic case

"""

function hwe(p::Preamble, d::Vector{UInt8}, startidx::Integer, layout::UInt8,

rmask::Union{Nothing, Vector{UInt16}})

@assert layout == 2 "hwe only supported for layout 2"

@assert p.bit_depth == 8 && p.max_probs == 3 && p.max_ploidy == p.min_ploidy

idx1 = startidx

# "counts" times 255.

n00 = 0

n01 = 0

n11 = 0

if p.n_samples >= 16

@inbounds for n in 1:16:(p.n_samples - p.n_samples % 16)

idx_base = idx1 + ((n-1) >> 1) << 2

if rmask !== nothing

rs = vload(Vec{16,UInt16}, rmask, n)

if sum(rs) == 0

continue

end

end

r = reinterpret(Vec{16, UInt16}, vload(Vec{32, UInt8}, d, idx_base))

first = (r & mask_odd)

second = (r & mask_even) >> 8

third = 0x00ff - first - second

if rmask !== nothing

first = first * rs

second = second * rs

third = third * rs

end

n00 += sum(first)

n01 += sum(second)

n11 += sum(third)

end

end

rem = p.n_samples % 16

if rem != 0

@inbounds for n in ((p.n_samples - rem) + 1) : p.n_samples

rmask !== nothing && rmask[n] == 0 && continue

idx_base = idx1 + ((n - 1) << 1)

n00 += d[idx_base]

n01 += d[idx_base + 1]

n11 += 255 - d[idx_base] - d[idx_base + 1]

end

end

@inbounds for n in p.missings

rmask !== nothing && rmask[n] == 0 && continue

idx_base = idx1 + ((n - 1) << 1)

n00 -= d[idx_base]

n01 -= d[idx_base + 1]

n11 -= 255 - d[idx_base] - d[idx_base + 1]

end

n00 *= one_255th

n01 *= one_255th

n11 *= one_255th

return hwe(n00, n01, n11)

end

@inline ccdf_chisq_1(x) = gamma_inc(convert(typeof(x), 1/2), x/2, 0)[2]

"""

hwe(n00, n01, n11)

Hardy-Weinberg equilibrium test. `n00`, `n01`, `n11` are counts of homozygotes

and heterozygoes respectively. Output is the p-value of type Float64.

"""

function hwe(n00::Real, n01::Real, n11::Real)

n = n00 + n01 + n11

n == 0 && return 1.0

p0 = (n01 + 2n00) / 2n

(p0 ≤ 0.0 || p0 ≥ 1.0) && return 1.0

p1 = 1 - p0

# Pearson's Chi-squared test

e00 = n * p0 * p0

e01 = 2n * p0 * p1

e11 = n * p1 * p1

ts = (n00 - e00)^2 / e00 + (n01 - e01)^2 / e01 + (n11 - e11)^2 / e11

#pval = ccdf(Chisq(1), ts)

pval = ccdf_chisq_1(ts)

# TODO Fisher exact test

return pval

end

"""

maf(b::Bgen, v::BgenVariant; T=Float32, decompressed=nothing)

maf(p::Preamble, d::Vector{UInt8}, idx::Vector{<:Integer}, layout::UInt8,

rmask::Union{Nothing, Vector{UInt16}})

Minor-allele frequency for diploid biallelic case

"""

function maf(p::Preamble, d::Vector{UInt8}, startidx::Integer, layout::UInt8,

rmask::Union{Nothing, Vector{UInt16}})

@assert layout == 2 "maf only supported for layout 2"

@assert p.bit_depth == 8 && p.max_probs == 3 && p.max_ploidy == p.min_ploidy

idx1 = startidx

# "counts" times 255.

dosage_total = 0

if p.n_samples >= 16

@inbounds for n in 1:16:(p.n_samples - p.n_samples % 16)

idx_base = idx1 + ((n-1) >> 1) << 2

if rmask !== nothing

rs = vload(Vec{16,UInt16}, rmask, n)

if sum(rs) == 0

continue

end

end

r = reinterpret(Vec{16, UInt16}, vload(Vec{32, UInt8}, d, idx_base))

first = (r & mask_odd) << 1

second = (r & mask_even) >> 8

s = first + second

if rmask !== nothing

s = s * rs

end

dosage_total += sum(s)

end

end

rem = p.n_samples % 16

if rem != 0

@inbounds for n in ((p.n_samples - rem) + 1) : p.n_samples

rmask !== nothing && rmask[n] == 0 && continue

idx_base = idx1 + ((n - 1) << 1)

dosage_total += 2 * d[idx_base] + d[idx_base + 1]

end

end

@inbounds for n in p.missings

rmask !== nothing && rmask[n] == 0 && continue

idx_base = idx1 + ((n - 1) << 1)

dosage_total -= 2 * d[idx_base] + d[idx_base + 1]

end

dosage_total *= one_255th

dosage_total /= (rmask !== nothing ? sum(rmask) : p.n_samples - length(p.missings))

dosage_total < 1.0 ? dosage_total / 2 : 1 - dosage_total / 2

end

"""

info_score(b::Bgen, v::BgenVariant; T=Float32, decompressed=nothing)

info_score(p::Preamble, d::Vector{UInt8}, idx::Vector{<:Integer}, layout::UInt8,

rmask::Union{Nothing, Vector{UInt16}})

Information score of the variant.

"""

function info_score(p::Preamble, d::Vector{UInt8}, startidx::Integer, layout::UInt8,

rmask::Union{Nothing, Vector{UInt16}})

@assert layout == 2 "info_score only supported for layout 2"

@assert p.bit_depth == 8 && p.max_probs == 3 && p.max_ploidy == p.min_ploidy

@assert length(p.missings) == 0 "current implementation does not allow missingness"

idx1 = startidx

# "counts" times 255.

samples_cum = 0

mean_cum = 0.0

sumsq_cum = 0.0

dosage_sum = 0.0

dosage_sumsq = 0.0

rs = Vec{16,UInt16}((1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1))

rs_float = Vec{16, Float32}((1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1))

rs_float_ones = rs_float

rs_floatarr = ones(Float32, 16)

if p.n_samples >= 16

@inbounds for n in 1:16:(p.n_samples - p.n_samples % 16)

idx_base = idx1 + ((n-1) >> 1) << 2

if rmask !== nothing

rs = vload(Vec{16,UInt16}, rmask, n)

if sum(rs) == 0

continue

elseif sum(rs) == 16

rs_float = rs_float_ones

else

rs_floatarr .= @view(rmask[n:n+15])

rs_float = vload(Vec{16, Float32}, rs_floatarr, 1)

end

end

r = reinterpret(Vec{16, UInt16}, vload(Vec{32, UInt8}, d, idx_base))

first = (r & mask_odd) << 1

second = (r & mask_even) >> 8

s = first + second

if rmask !== nothing

s = s * rs

end

dosage_float = one_255th * convert(

Vec{16, Float32}, s)

samples_new = sum(rs)

sum_new = sum(dosage_float)

samples_prev = samples_cum

samples_cum = samples_cum + samples_new

mean_new = sum_new / samples_new

diff = dosage_float - mean_new

if rmask !== nothing

diff *= rs_float

end

sumsq_new = sum(diff ^ 2)

delta = mean_new - mean_cum

mean_cum += delta * samples_new / samples_cum

sumsq_cum += sumsq_new + delta ^ 2 * samples_prev * samples_new / samples_cum

end

end

rem = p.n_samples % 16

if rem != 0

@inbounds for n in ((p.n_samples - rem) + 1) : p.n_samples

rmask !== nothing && rmask[n] == 0 && continue

idx_base = idx1 + ((n - 1) << 1)

dosage = one_255th * (2 * d[idx_base] + d[idx_base + 1])

delta = dosage - mean_cum

samples_cum += 1

mean_cum += delta / samples_cum

delta2 = dosage - mean_cum

sumsq_cum += delta * delta2

end

end

n_samples = rmask !== nothing ? sum(rmask) : p.n_samples

p = mean_cum / 2

v = sumsq_cum / (n_samples - 1)

v / (2p * (1-p))

end

function counts!(p::Preamble, d::Vector{UInt8}, startidx::Integer, layout::UInt8,

rmask::Union{Nothing, Vector{UInt16}}; r::Union{Nothing,Vector{<:Integer}}=nothing, dosage::Bool=true)

if dosage

@assert layout == 2 "hwe only supported for layout 2"

@assert p.bit_depth == 8 && p.max_probs == 3 && p.max_ploidy == p.min_ploidy

idx1 = startidx

if r !== nothing

@assert length(r) == 512

fill!(r, 0)

else

r = zeros(UInt, 512)

end

rs = Vec{16,UInt16}((1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1))

if p.n_samples >= 16

@inbounds for n in 1:16:(p.n_samples - p.n_samples % 16)

idx_base = idx1 + ((n-1) >> 1) << 2

if rmask !== nothing

rs = vload(Vec{16,UInt16}, rmask, n)

if sum(rs) == 0

continue

end

end

q = reinterpret(Vec{16, UInt16}, vload(Vec{32, UInt8}, d, idx_base))

first = (q & mask_odd) << 1

second = (q & mask_even) >> 8

s = first + second

if rmask !== nothing

s = s * rs

end

@inbounds for i in 1:16

if rs[i] != 0

ss = s[i]

r[ss + 1] += 1

end

end

end

end

rem = p.n_samples % 16

if rem != 0

@inbounds for n in ((p.n_samples - rem) + 1) : p.n_samples

rmask !== nothing && rmask[n] == 0 && continue

idx_base = idx1 + ((n - 1) << 1)

r[2 * d[idx_base] + d[idx_base + 1] + 1] += 1

end

end

# subtract back counts for missings

@inbounds for n in p.missings

rmask !== nothing && rmask[n] == 0 && continue

idx_base = idx1 + ((n - 1) << 1)

r[2 * d[idx_base] + d[idx_base + 1] + 1] -= 1

end

r[end] = length(p.missings)

else

@error "counts for non-dosage not implemented yet"

end

r

end

for ftn in [:maf, :hwe, :info_score, :counts!]

@eval begin

function $(ftn)(b::Bgen, v::BgenVariant; T=Float32, decompressed=nothing,

is_decompressed=false, rmask=nothing, kwargs...)

io, h = b.io, b.header

if (decompressed !== nothing && !is_decompressed) ||

(decompressed === nothing && (v.genotypes === nothing ||

v.genotypes.decompressed === nothing))

decompressed = decompress(io, v, h; decompressed=decompressed)

else

decompressed = v.genotypes.decompressed

end

startidx = 1

if v.genotypes === nothing

p = parse_preamble(decompressed, h, v)

v.genotypes = Genotypes{T}(p, decompressed)

else

p = v.genotypes.preamble

end

if h.layout == 2

startidx += 10 + h.n_samples

end

$(ftn)(p, decompressed, startidx, h.layout, rmask; kwargs...)

end

end

end | BGEN | https://github.com/OpenMendel/BGEN.jl.git |

|

[

"MIT"

] | 0.2.1 | 6512022b637fc17c297bb3050ba2e6cdd50b6fa0 | code | 3197 | """

BgenVariant(b::Bgen, offset::Integer)

BgenVariant(io, offset, compression, layout, expected_n)

Parse information of a single variant beginning from `offset`.

"""

function BgenVariant(io::IOStream, offset::Integer,

compression::Integer, layout::Integer,

expected_n::Integer)

seek(io, offset)

if eof(io)

@error "reached end of file"

end

if layout == 1

n_samples = read(io, UInt32)

else

n_samples = expected_n

end

if n_samples != expected_n

@error "number of samples does not match"

end

varid_len = read(io, UInt16)

varid = String(read(io, varid_len))

rsid_len = read(io, UInt16)

rsid = String(read(io, rsid_len))

chrom_len = read(io, UInt16)

chrom = String(read(io, chrom_len))

pos = read(io, UInt32)

if layout == 1

n_alleles = 2

else

n_alleles = read(io, UInt16)

end

alleles = Array{String}(undef, n_alleles)

for i in 1:n_alleles

allele_len = read(io, UInt32)

alleles_bytes = read(io, allele_len)

alleles[i] = String(alleles_bytes)

end

if compression == 0 && layout == 1

geno_block_size = 6 * n_samples

else

geno_block_size = read(io, UInt32)

end

geno_offset = position(io)

next_var_offset = geno_offset + geno_block_size

BgenVariant(offset, geno_offset, next_var_offset, geno_block_size, n_samples,

varid, rsid, chrom, pos, n_alleles, alleles, nothing)

end

function BgenVariant(b::Bgen, offset::Integer)

h = b.header

if offset >= b.fsize

@error "reached end of file"

end

BgenVariant(b.io, offset, h.compression, h.layout, h.n_samples)

end

@inline n_samples(v::BgenVariant)::Int = v.n_samples

@inline varid(v::BgenVariant) = v.varid

@inline rsid(v::BgenVariant) = v.rsid

@inline chrom(v::BgenVariant) = v.chrom

@inline pos(v::BgenVariant)::Int = v.pos

@inline n_alleles(v::BgenVariant)::Int = v.n_alleles

@inline alleles(v::BgenVariant) = v.alleles

# The following functions are valid only after calling `probabilities!()`

# or `minor_allele_dosage!()`

@inline phased(v::BgenVariant) = v.genotypes.preamble.phased

@inline min_ploidy(v::BgenVariant) = v.genotypes.preamble.min_ploidy

@inline max_ploidy(v::BgenVariant) = v.genotypes.preamble.max_ploidy

@inline ploidy(v::BgenVariant) = v.genotypes.preamble.ploidy

@inline bit_depth(v::BgenVariant) = v.genotypes.preamble.bit_depth

@inline missings(v::BgenVariant) = v.genotypes.preamble.missings

# The below are valid after calling `minor_allele_dosage!()`

@inline function minor_allele(v::BgenVariant)

midx = v.genotypes.minor_idx

if midx == 0

@error "`minor_allele_dosage!()` must be called before `minor_allele()`"

else

v.alleles[v.genotypes.minor_idx]

end

end

@inline function major_allele(v::BgenVariant)

midx = v.genotypes.minor_idx

if midx == 0

@error "`minor_allele_dosage!()` must be called before `minor_allele()`"

else

v.alleles[3 - midx]

end

end

"""

destroy_genotypes!(v::BgenVariant)

Destroy any parsed genotype information.

"""

function clear!(v::BgenVariant)

v.genotypes = nothing

return

end

| BGEN | https://github.com/OpenMendel/BGEN.jl.git |

|

[

"MIT"

] | 0.2.1 | 6512022b637fc17c297bb3050ba2e6cdd50b6fa0 | code | 656 | using BGEN

using Statistics, Test, Printf

using GeneticVariantBase

const example_8bits = BGEN.datadir("example.8bits.bgen")

const example_10bits = BGEN.datadir("example.10bits.bgen")

const example_16bits = BGEN.datadir("example.16bits.bgen")

const example_sample = BGEN.datadir("example.sample")

include("utils.jl")

const gen_data = load_gen_data()

const vcf_data = load_vcf_data()

const haps_data = load_haps_data()

include("test_basics.jl")

include("test_getters.jl")

include("test_select_region.jl")

include("test_index.jl")

include("test_load_example_files.jl")

include("test_minor_allele_dosage.jl")

include("test_utils.jl")

include("test_filter.jl")

| BGEN | https://github.com/OpenMendel/BGEN.jl.git |

|

[

"MIT"

] | 0.2.1 | 6512022b637fc17c297bb3050ba2e6cdd50b6fa0 | code | 2107 | @testset "basics" begin

header_ref = BGEN.Header(example_10bits)

@testset "header" begin

header_test = BGEN.Header(example_10bits)

@test header_test.offset == 0x0000178c # 6028

@test header_test.header_length == 0x00000014 # 20

@test header_test.n_variants == 0x000000c7 # 199

@test header_test.n_samples == 0x000001f4 # 500

@test header_test.compression == 1

@test header_test.layout == 2

@test header_test.has_sample_ids == 1

end

@testset "samples_separate" begin

n_samples = 500

samples_test2 = BGEN.get_samples(example_sample, n_samples)

samples_correct = [(@sprintf "sample_%03d" i) for i in 1:n_samples]

@test all(samples_correct .== samples_test2)

samples_test3 = BGEN.get_samples(n_samples)

@test all([string(i) for i in 1:n_samples] .== samples_test3)

end

bgen = BGEN.Bgen(example_10bits)

@testset "bgen" begin

@test bgen.fsize == 223646

@test bgen.header == header_ref

n_samples = bgen.header.n_samples

samples_correct = [(@sprintf "sample_%03d" i) for i in 1:n_samples]

@test all(samples_correct .== bgen.samples)

variants = parse_variants(bgen)

var = variants[4]

@test length(variants) == 199

@test var.offset == 0x0000000000002488

@test var.geno_offset == 0x00000000000024b1

@test var.next_var_offset == 0x0000000000002902

@test var.geno_block_size == 0x00000451

@test var.n_samples == 0x000001f4

@test var.varid == "SNPID_5"

@test var.rsid == "RSID_5"

@test var.chrom == "01"

@test var.pos == 0x00001388

@test var.n_alleles == 2

@test all(var.alleles.== ["A", "G"])

end

@testset "preamble" begin

io, v, h = bgen.io, parse_variants(bgen)[1], bgen.header

decompressed = BGEN.decompress(io, v, h)

preamble = BGEN.parse_preamble(decompressed, h, v)

@test preamble.phased == 0

@test preamble.min_ploidy == 2

@test preamble.max_ploidy == 2

@test all(preamble.ploidy .== 2)

@test preamble.bit_depth == 10

@test preamble.max_probs == 3

@test length(preamble.missings) == 1

@test preamble.missings[1] == 1

end

end

| BGEN | https://github.com/OpenMendel/BGEN.jl.git |

|

[

"MIT"

] | 0.2.1 | 6512022b637fc17c297bb3050ba2e6cdd50b6fa0 | code | 2227 | @testset "filter" begin

b = Bgen(BGEN.datadir("example.8bits.bgen"))

vidx = falses(b.header.n_variants)

vidx[1:10] .= true

BGEN.filter("test.bgen", b, vidx)

b2 = Bgen("test.bgen"; sample_path="test.sample")

@test all(b.samples .== b2.samples)

for (v1, v2) in zip(iterator(b), iterator(b2)) # length of two iterators are different.

# it stops when the shorter one (b2) ends.

@test v1.varid == v2.varid

@test v1.rsid == v2.rsid

@test v1.chrom == v2.chrom

@test v1.pos == v2.pos

@test v1.n_alleles == v2.n_alleles

@test all(v1.alleles .== v2.alleles)

decompressed1 = BGEN.decompress(b.io, v1, b.header)

decompressed2 = BGEN.decompress(b2.io, v2, b2.header)

@test all(decompressed1 .== decompressed2)

end

sidx = falses(b.header.n_samples)

sidx[1:10] .= true

BGEN.filter("test2.bgen", b, trues(b.header.n_variants), sidx)

b3 = Bgen("test2.bgen"; sample_path="test2.sample")

for (v1, v3) in zip(iterator(b), iterator(b3))

@test v1.varid == v3.varid

@test v1.rsid == v3.rsid

@test v1.chrom == v3.chrom

@test v1.pos == v3.pos

@test v1.n_alleles == v3.n_alleles

@test all(v1.alleles .== v3.alleles)

@test isapprox(probabilities!(b, v1)[:, 1:10], probabilities!(b3, v3); nans=true)

end

b4 = Bgen(BGEN.datadir("complex.24bits.bgen"))

BGEN.filter("test3.bgen", b4, trues(b4.header.n_variants), BitVector([false, false, true, true]))

b5 = Bgen("test3.bgen"; sample_path = "test3.sample")

for (v4, v5) in zip(iterator(b4), iterator(b5))

@test v4.varid == v5.varid

@test v4.rsid == v5.rsid

@test v4.chrom == v5.chrom

@test v4.pos == v5.pos

@test v4.n_alleles == v5.n_alleles

@test all(v4.alleles .== v5.alleles)

@test isapprox(probabilities!(b4, v4)[:, 3:4], probabilities!(b5, v5); nans=true)

end

close(b)

close(b2)

close(b3)

close(b4)

close(b5)

rm("test.bgen", force=true)

rm("test.sample", force=true)

rm("test2.bgen", force=true)

rm("test2.sample", force=true)

rm("test3.bgen", force=true)

rm("test3.sample", force=true)

end | BGEN | https://github.com/OpenMendel/BGEN.jl.git |

|

[

"MIT"

] | 0.2.1 | 6512022b637fc17c297bb3050ba2e6cdd50b6fa0 | code | 792 | @testset "getters" begin

b = BGEN.Bgen(example_8bits)

@test fsize(b) == 128746

@test all(samples(b) .== b.samples)

@test n_samples(b) == 500

@test n_variants(b) == 199

@test compression(b) == "Zlib"

v = first(iterator(b; from_bgen_start=true))

@test n_samples(v::Variant) == 500

@test varid(v::Variant) == "SNPID_2"

@test rsid(v::Variant) == "RSID_2"

@test chrom(v::Variant) == "01"

@test pos(v::Variant) == 2000

@test n_alleles(v::Variant) == 2

@test length(alleles(v::Variant)) == 2

@test all(alleles(v) .== ["A", "G"])

minor_allele_dosage!(b, v)

@test phased(v) == 0

@test min_ploidy(v) == 2

@test max_ploidy(v) == 2

@test all(ploidy(v) .== 2)

@test bit_depth(v) == 8

@test length(missings(v)) == 1

@test missings(v)[1] == 1

@test minor_allele(v) == "A"

@test major_allele(v) == "G"

end

| BGEN | https://github.com/OpenMendel/BGEN.jl.git |

|

[

"MIT"

] | 0.2.1 | 6512022b637fc17c297bb3050ba2e6cdd50b6fa0 | code | 987 | @testset "index" begin

@testset "index_opens" begin

@test Bgen(BGEN.datadir("example.15bits.bgen")).idx === nothing

@test Bgen(BGEN.datadir("example.16bits.bgen")).idx !== nothing

end

@testset "index_region" begin

chrom = "01"

start = 5000

stop = 50000

b = Bgen(BGEN.datadir("example.16bits.bgen"))

idx = b.idx

@test length(select_region(b, chrom)) == length(gen_data)

@test length(select_region(b, "02")) == 0

@test length(select_region(b, chrom;

start=start * 100, stop=stop * 100)) == 0

after_pos_offsets = select_region(b, chrom; start=start)

@test length(after_pos_offsets) ==

length(filter(x -> x.pos >= start, gen_data))

in_region_offsets = select_region(b, chrom; start=start, stop=stop)

@test length(in_region_offsets) == length(filter(x -> start <= x.pos <=

stop, gen_data))

@test rsid(variant_by_rsid(b, "RSID_10")) == "RSID_10"

@test rsid(variant_by_index(b, 4)) == "RSID_3"

end

end

| BGEN | https://github.com/OpenMendel/BGEN.jl.git |

|

[

"MIT"

] | 0.2.1 | 6512022b637fc17c297bb3050ba2e6cdd50b6fa0 | code | 2840 | @testset "example files" begin

@testset "bit depths" begin

for i in 1:32

b = Bgen(BGEN.datadir("example.$(i)bits.bgen"); idx_path = nothing)

v = parse_variants(b)

for (j, (v1, v2)) in enumerate(zip(v, gen_data))

@test v1.chrom == v2.chrom

@test v1.varid == v2.varid

@test v1.rsid == v2.rsid

@test v1.pos == v2.pos

@test all(v1.alleles .== v2.alleles)

@test array_equal(v2.probs, probabilities!(b, v1), i)

end

end

end

@testset "zstd" begin

b = Bgen(BGEN.datadir("example.16bits.zstd.bgen"); idx_path = nothing)

v = parse_variants(b)

for (j, (v1, v2)) in enumerate(zip(v, gen_data))

@test v1.chrom == v2.chrom

@test v1.varid == v2.varid

@test v1.rsid == v2.rsid

@test v1.pos == v2.pos

@test all(v1.alleles .== v2.alleles)

@test array_equal(v2.probs, probabilities!(b, v1), 16)

end

end

@testset "v11" begin

b = Bgen(BGEN.datadir("example.v11.bgen"); idx_path = nothing)

v = parse_variants(b)

for (j, (v1, v2)) in enumerate(zip(v, gen_data))

@test v1.chrom == v2.chrom

@test v1.varid == v2.varid

@test v1.rsid == v2.rsid

@test v1.pos == v2.pos

@test all(v1.alleles .== v2.alleles)

@test array_equal(v2.probs, probabilities!(b, v1), 16)

end

end

@testset "haplotypes" begin

b = Bgen(BGEN.datadir("haplotypes.bgen"); idx_path = nothing)

v = parse_variants(b)

for (j, (v1, v2)) in enumerate(zip(v, haps_data))

@test v1.chrom == v2.chrom

@test v1.varid == v2.varid

@test v1.rsid == v2.rsid

@test v1.pos == v2.pos

@test all(v1.alleles .== v2.alleles)

@test array_equal(v2.probs, probabilities!(b, v1), 16)

end

end

@testset "complex" begin

b = Bgen(BGEN.datadir("complex.bgen"); idx_path = nothing)

v = parse_variants(b)

for (j, (v1, v2)) in enumerate(zip(v, vcf_data))

@test v1.chrom == v2.chrom

@test v1.varid == v2.varid

@test v1.rsid == v2.rsid

@test v1.pos == v2.pos

@test all(v1.alleles .== v2.alleles)

@test array_equal(v2.probs, probabilities!(b, v1), 16)

end

end

@testset "complex bit depths" begin

for i in 1:32

b = Bgen(BGEN.datadir("complex.$(i)bits.bgen"); idx_path = nothing)

v = parse_variants(b)

for (j, (v1, v2)) in enumerate(zip(v, vcf_data))

@test v1.chrom == v2.chrom

@test v1.varid == v2.varid

@test v1.rsid == v2.rsid

@test v1.pos == v2.pos

@test all(v1.alleles .== v2.alleles)

@test array_equal(v2.probs, probabilities!(b, v1), i)

end

end

end

@testset "null" begin

@test_throws SystemError Bgen(BGEN.datadir("Hello_World.bgen"))

end

end

| BGEN | https://github.com/OpenMendel/BGEN.jl.git |

|

[

"MIT"

] | 0.2.1 | 6512022b637fc17c297bb3050ba2e6cdd50b6fa0 | code | 3961 | @testset "minor allele dosage" begin

@testset "slow" begin

path = BGEN.datadir("example.16bits.zstd.bgen")

b = Bgen(path)

for v in iterator(b)

probs = probabilities!(b, v)

dose = minor_allele_dosage!(b, v)

# dosages for each allele

a1 = probs[1, :] .* 2 .+ probs[2, :]

a2 = probs[3, :] .* 2 .+ probs[2, :]

dose_correct = sum(a1[.!isnan.(a1)]) < sum(a2[.!isnan.(a2)]) ? a1 : a2

minor_allele_index = sum(a1[.!isnan.(a1)]) < sum(a2[.!isnan.(a2)]) ? 1 : 2

@test v.genotypes.minor_idx == minor_allele_index

@test all(isapprox.(dose, dose_correct; atol=2e-7, nans=true))

end

end

@testset "fast" begin

path = BGEN.datadir("example.8bits.bgen")

b = Bgen(path)

for v in iterator(b)

probs = probabilities!(b, v)

dose = minor_allele_dosage!(b, v)

# dosages for each allele

a1 = probs[1, :] .* 2 .+ probs[2, :]

a2 = probs[3, :] .* 2 .+ probs[2, :]

dose_correct = sum(a1[.!isnan.(a1)]) < sum(a2[.!isnan.(a2)]) ? a1 : a2

minor_allele_index = sum(a1[.!isnan.(a1)]) < sum(a2[.!isnan.(a2)]) ? 1 : 2

@test v.genotypes.minor_idx == minor_allele_index

@test all(isapprox.(dose, dose_correct; atol=2e-7, nans=true))

end

end

@testset "v11" begin

path = BGEN.datadir("example.v11.bgen")

b = Bgen(path)

for v in iterator(b)

probs = probabilities!(b, v)

dose = minor_allele_dosage!(b, v)

# dosages for each allele

a1 = probs[1, :] .* 2 .+ probs[2, :]

a2 = probs[3, :] .* 2 .+ probs[2, :]

dose_correct = sum(a1[.!isnan.(a1)]) < sum(a2[.!isnan.(a2)]) ? a1 : a2

minor_allele_index = sum(a1[.!isnan.(a1)]) < sum(a2[.!isnan.(a2)]) ? 1 : 2

@test v.genotypes.minor_idx == minor_allele_index

@test all(isapprox.(dose, dose_correct; atol=7e-5, nans=true))

end

end

@testset "multiple_calls" begin

b = Bgen(

BGEN.datadir("example.8bits.bgen");

sample_path=BGEN.datadir("example.sample"),

idx_path=BGEN.datadir("example.8bits.bgen.bgi")

)

# second allele minor

v = variant_by_rsid(b, "RSID_110")

@test isapprox(mean(minor_allele_dosage!(b, v)), 0.9621725f0)

@test isapprox(mean(minor_allele_dosage!(b, v)), 0.9621725f0)

@test isapprox(mean(first_allele_dosage!(b, v)), 2 - 0.9621725f0)

@test isapprox(mean(minor_allele_dosage!(b, v)), 0.9621725f0)

clear!(v)

@test isapprox(mean(first_allele_dosage!(b, v)), 2 - 0.9621725f0)

@test isapprox(mean(minor_allele_dosage!(b, v)), 0.9621725f0)

clear!(v)

@test isapprox(mean(BGEN.ref_allele_dosage!(b, v)), 2 - 0.9621725f0)

@test isapprox(mean(BGEN.alt_allele_dosage!(b, v)), 0.9621725f0)

# first allele minor

v = variant_by_rsid(b, "RSID_198")

@test isapprox(mean(minor_allele_dosage!(b, v)), 0.48411763f0)

@test isapprox(mean(minor_allele_dosage!(b, v)), 0.48411763f0)

@test isapprox(mean(first_allele_dosage!(b, v)), 0.48411763f0)

@test isapprox(mean(minor_allele_dosage!(b, v)), 0.48411763f0)

clear!(v)

@test isapprox(mean(first_allele_dosage!(b, v)), 0.48411763f0)

@test isapprox(mean(minor_allele_dosage!(b, v)), 0.48411763f0)

clear!(v)

@test isapprox(mean(BGEN.ref_allele_dosage!(b, v)), 0.48411763f0)

@test isapprox(mean(BGEN.alt_allele_dosage!(b, v)), 2 - 0.48411763f0)

clear!(v)

@test isapprox(mean(GeneticVariantBase.alt_dosages!(Vector{Float32}(undef, n_samples(b)), b, v)), 2 - 0.48411763f0)

end

@testset "mean_impute" begin

path = BGEN.datadir("example.8bits.bgen")

b = Bgen(path)

v = first(iterator(b; from_bgen_start=true))

m = minor_allele_dosage!(b, v; mean_impute=true)

@test isapprox(m[1], 0.3958112303037447)

end

@testset "haplotypes" begin

path = BGEN.datadir("haplotypes.bgen")

b = Bgen(path)

for v in iterator(b)

probs = probabilities!(b, v)

dose = first_allele_dosage!(b, v)

dose_correct = probs[1, :] .+ probs[3, :]

@test all(isapprox.(dose, dose_correct))

end

end

end

| BGEN | https://github.com/OpenMendel/BGEN.jl.git |

|

[

"MIT"

] | 0.2.1 | 6512022b637fc17c297bb3050ba2e6cdd50b6fa0 | code | 1480 | @testset "select_region" begin

@testset "select_region_null" begin

chrom, start, stop = "01", 5000, 50000

b = Bgen(example_16bits)

@test b.idx !== nothing

@test length(select_region(b, "02")) == 0

end

@testset "select_whole_chrom" begin

chrom, start, stop = "01", 5000, 50000

b = Bgen(example_16bits)

lt = (x, y) -> isless((x.chrom, x.pos), (y.chrom, y.pos))

variants = collect(select_region(b, chrom))

for (x, y) in zip(sort(variants; lt=lt), sort(gen_data; lt=lt))

@test (x.rsid, x.chrom, x.pos) == (y.rsid, y.chrom, y.pos)

end

end

@testset "select_after_position" begin

chrom, start, stop = "01", 5000, 50000

b = Bgen(example_16bits)

lt = (x, y) -> isless((x.chrom, x.pos), (y.chrom, y.pos))

variants = collect(select_region(b, chrom; start=start))

gen_data_f = filter(x -> x.pos >= start, gen_data)

for (x, y) in zip(sort(variants; lt=lt), sort(gen_data_f; lt=lt))

@test (x.rsid, x.chrom, x.pos) == (y.rsid, y.chrom, y.pos)

end

end

@testset "select_in_region" begin

chrom, start, stop = "01", 5000, 50000

b = Bgen(example_16bits)

lt = (x, y) -> isless((x.chrom, x.pos), (y.chrom, y.pos))

variants = collect(select_region(b, chrom; start=start, stop=stop))

gen_data_f = filter(x -> start <= x.pos <= stop, gen_data)

for (x, y) in zip(sort(variants; lt=lt), sort(gen_data_f; lt=lt))

@test (x.rsid, x.chrom, x.pos) == (y.rsid, y.chrom, y.pos)

end

end

end

| BGEN | https://github.com/OpenMendel/BGEN.jl.git |

|

[

"MIT"

] | 0.2.1 | 6512022b637fc17c297bb3050ba2e6cdd50b6fa0 | code | 582 | @testset "utils" begin

@testset "counts" begin

path = BGEN.datadir("example.8bits.bgen")

b = Bgen(path)

for v in iterator(b)

cnt = counts!(b, v)

dose = first_allele_dosage!(b, v)

correct_cnt = zeros(Int, 512)

for v in dose

if !isnan(v)

ind = convert(Int, round(v * 255)) + 1

correct_cnt[ind] += 1

end

end

correct_cnt[512] = count(isnan.(dose))

@test all(cnt .== correct_cnt)

end

end

end | BGEN | https://github.com/OpenMendel/BGEN.jl.git |

|

[

"MIT"

] | 0.2.1 | 6512022b637fc17c297bb3050ba2e6cdd50b6fa0 | code | 3922 | struct GenVariant

chrom::String

varid::String

rsid::String

pos::UInt

alleles::Vector{String}

probs::Matrix{Float64}

ploidy::Vector{UInt8}

end

"""

load_gen_data()

load data from "example.gen"

"""

function load_gen_data()

variants = GenVariant[]

open(BGEN.datadir("example.gen")) do io

for l in readlines(io)

tokens = split(l)

chrom, varid, rsid, pos, ref, alt = tokens[1:6]

pos = parse(UInt, pos)

probs = reshape(parse.(Float64, tokens[7:end]), 3, :)

nan_col_idx = transpose(sum(probs; dims=1)) .== 0

for (i, v) in enumerate(nan_col_idx)

if v

probs[:, i] .= NaN

end

end

push!(variants, GenVariant(chrom, varid, rsid, pos, [ref, alt], probs, []))

end

end

variants

end

"""

parse_vcf_samples(format, samples)

Parse sample data from VCF.

"""

function parse_vcf_samples(format, samples)

samples = [Dict(zip(split(format, ":"), split(x, ":"))) for x in samples]

ks = Dict([("GT", r"[/|]"), ("GP", ","), ("HP", ",")])

samples2 = [Dict{String, Vector{SubString{String}}}() for x in samples]

for (x, y) in zip(samples, samples2)

for k in keys(x)

y[k] = split(x[k], ks[k])

end

end

probs = [occursin("GP", format) ? x["GP"] : x["HP"] for x in samples2]

probs = map(x -> parse.(Float64, x), probs)

max_len = maximum(length(x) for x in probs)

probs_out = Matrix{Float64}(undef, max_len, length(samples))

fill!(probs_out, NaN)

for i in 1:length(probs)

probs_out[1:length(probs[i]), i] .= probs[i]

end

ploidy = [length(y["GT"]) for y in samples2]

probs_out, ploidy

end

"""

load_vcf_data()

Load data from "complex.vcf" for comparison

"""

function load_vcf_data()

variants = GenVariant[]

open(BGEN.datadir("complex.vcf")) do io

for l in readlines(io)

if startswith(l, "#")

continue

end

tokens = split(l)

chrom, pos, varid, ref, alts = tokens[1:5]

pos = parse(UInt, pos)

format = tokens[9]

samples = tokens[10:end]

varid = split(varid, ",")

if length(varid) > 1

rsid, varid = varid

else

rsid, varid = varid[1], ""

end

probs, ploidy = parse_vcf_samples(format, samples)

var = GenVariant(string(chrom), varid, string(rsid), pos, vcat(String[ref],

split(alts, ",")), probs, ploidy)

push!(variants, var)

end

end

variants

end

"""

load_haps_data()

Load data from "haplotypes.haps" for comparison.

"""

function load_haps_data()

variants = GenVariant[]

open(BGEN.datadir("haplotypes.haps")) do io

for l in readlines(io)

tokens = split(l)

chrom, varid, rsid, pos, ref, alt = tokens[1:6]

pos = parse(UInt, pos)

probs = tokens[7:end]

probs = [x == "0" ? [1.0, 0.0] : [0.0, 1.0] for x in probs]

probs = [probs[pos:pos+1] for pos in 1:2:length(probs)]

probs = [hcat(x[1], x[2]) for x in probs]

probs = hcat(probs...)

probs = reshape(probs, 4, :)

var = GenVariant(chrom, varid, rsid, pos, [ref, alt], probs, [])

push!(variants, var)

end

end

variants

end

"""

epsilon(bit_depth)

Max difference expected for the bit depth

"""

@inline function epsilon(bit_depth)

return 2 / (2 ^ (bit_depth - 1))

end

"""

array_equal(truth, parsed, bit_depth)

check if the two arrays are sufficiently equal

"""

@inline function array_equal(truth, parsed, bit_depth)

eps_abs = 3.2e-5

all(isapprox.(truth, parsed; atol=max(eps_abs, epsilon(bit_depth)),

nans=true))

end

| BGEN | https://github.com/OpenMendel/BGEN.jl.git |

|

[

"MIT"

] | 0.2.1 | 6512022b637fc17c297bb3050ba2e6cdd50b6fa0 | docs | 2381 | # BGEN.jl

[](https://OpenMendel.github.io/BGEN.jl/dev)

[](https://OpenMendel.github.io/BGEN.jl/stable)

[](https://codecov.io/gh/OpenMendel/BGEN.jl)

[](https://github.com/OpenMendel/BGEN.jl/actions)

Routines for reading compressed storage of genotyped or imputed markers

[*Genome-wide association studies (GWAS)*](https://en.wikipedia.org/wiki/Genome-wide_association_study) data with imputed markers are often saved in the [**BGEN format**](https://www.well.ox.ac.uk/~gav/bgen_format/) or `.bgen` file.

It can store both hard calls and imputed data, unphased genotypes and phased haplotypes. Each variant is compressed separately to make indexing simple. An index file (`.bgen.bgi`) may be provided to access each variant easily. [UK Biobank](https://www.ukbiobank.ac.uk/) uses this format for genome-wide imputed genotypes.

## Installation

This package requires Julia v1.0 or later, which can be obtained from

https://julialang.org/downloads/ or by building Julia from the sources in the

https://github.com/JuliaLang/julia repository.

This package is registered in the default Julia package registry, and can be installed through standard package installation procedure: e.g., running the following code in Julia REPL.

```julia

using Pkg

pkg"add BGEN"

```

## Citation

If you use [OpenMendel](https://openmendel.github.io) analysis packages in your research, please cite the following reference in the resulting publications:

*Zhou H, Sinsheimer JS, Bates DM, Chu BB, German CA, Ji SS, Keys KL, Kim J, Ko S, Mosher GD, Papp JC, Sobel EM, Zhai J, Zhou JJ, Lange K. OPENMENDEL: a cooperative programming project for statistical genetics. Hum Genet. 2020 Jan;139(1):61-71. doi: 10.1007/s00439-019-02001-z. Epub 2019 Mar 26. PMID: 30915546; PMCID: [PMC6763373](https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6763373/).*

## Acknowledgments

Current implementation incorporates ideas in a [bgen Python package](https://github.com/jeremymcrae/bgen).

This project has been supported by the National Institutes of Health under awards R01GM053275, R01HG006139, R25GM103774, and 1R25HG011845.

| BGEN | https://github.com/OpenMendel/BGEN.jl.git |

|

[

"MIT"

] | 0.2.1 | 6512022b637fc17c297bb3050ba2e6cdd50b6fa0 | docs | 33436 | # BGEN.jl

Routines for reading compressed storage of genotyped or imputed markers

## The BGEN Format

[*Genome-wide association studies (GWAS)*](https://en.wikipedia.org/wiki/Genome-wide_association_study) data with imputed markers are often saved in the [**BGEN format**](https://www.well.ox.ac.uk/~gav/bgen_format/) or `.bgen` file.

Used in:

* Wellcome Trust Case-Control Consortium 2

* the MalariaGEN project

* the ALSPAC study

* [__UK Biobank__](https://enkre.net/cgi-bin/code/bgen/wiki/?name=BGEN+in+the+UK+Biobank): for genome-wide imputed genotypes and phased haplotypes

### Features

* Can store both hard-calls and imputed data

* Can store both phased haplotypes and phased genotypes

* Efficient variable-precision bit reapresntations

* Per-variant compression $\rightarrow$ easy to index

* Supported compression method: [zlib](http://www.zlib.net/) and [Zstandard](https://facebook.github.io/zstd/).

* Index files are often provided as `.bgen.bgi` files, which are plain [SQLite3](http://www.sqlite.org) databases.

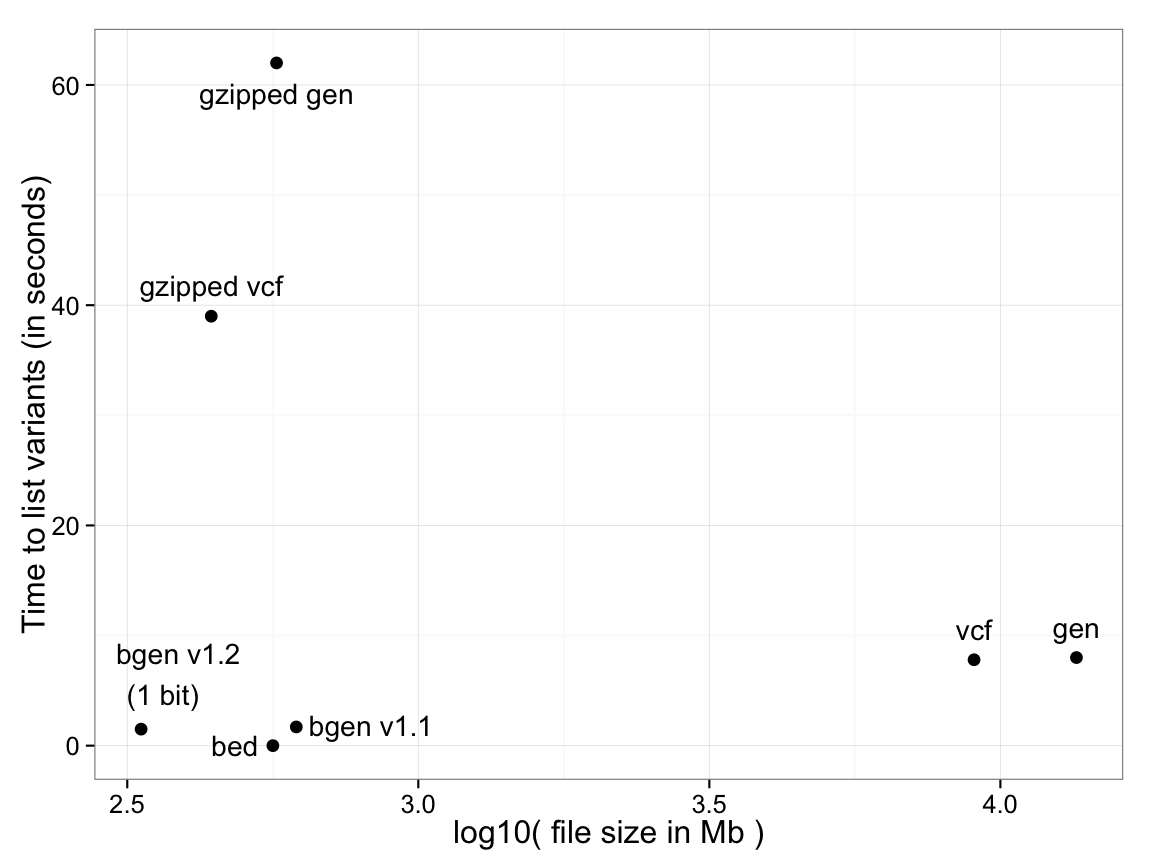

Time to list variant identifying information (genomic location, ID and alleles): 18,496 samples, 121,668 SNPs

(image source: https://www.well.ox.ac.uk/~gav/bgen_format/images/bgen_comparison.png)

_Plink 1 format (`.bed`/`.bim`/`.fam`) has the list of variants as a separate file (`.bim`), effectively zero time._

### Structure

A header block followed by a series of [variant data block - (compressed) genotype data block] pairs.

* Header block

* number of variants and samples

* compression method (none, zlib or zstandard)

* version of layout

* Only "layout 2" is discussed below. "Layout 1" is also supported.

* sample identifiers (optional)

* Variant data block

* variant id

* genomic position (chromosome, bp coordinate)

* list of alleles

* Genotype data block (often compressed)

* ploidy of each sample (may vary sample-by-sample)

* if the genotype data are phased

* precision ($B$, number of bits to represent probabilities)

* probabilitiy data (e.g. an unsigned $B$-bit integer $x$ represents the probability of ($\frac{x}{2^{B}-1}$)

_`BGEN.jl` provides tools for iterating over the variants and parsing genotype data efficiently. It has been optimized for UK Biobank's zlib-compressed, 8-bit byte-aligned, all-diploid, all-biallelic datafiles._

## Installation

This package requires Julia v1.0 or later, which can be obtained from

https://julialang.org/downloads/ or by building Julia from the sources in the

https://github.com/JuliaLang/julia repository.

The package can be installed by running the following code:

```julia

using Pkg

pkg"add BGEN"

```

In order to run the examples below, the `Glob` package is also needed.

```julia

pkg"add Glob"

```

```julia

versioninfo()

```

Julia Version 1.8.5

Commit 17cfb8e65ea (2023-01-08 06:45 UTC)

Platform Info:

OS: macOS (arm64-apple-darwin21.5.0)

CPU: 8 × Apple M2

WORD_SIZE: 64

LIBM: libopenlibm

LLVM: libLLVM-13.0.1 (ORCJIT, apple-m1)

Threads: 1 on 4 virtual cores

```julia

using BGEN, Glob

```

## Example Data

The example datafiles are stored in `/data` directory of this repository. It can be accessed through the function `BGEN.datadir()`. These files come from [the reference implementation](https://enkre.net/cgi-bin/code/bgen/dir?ci=trunk) for the BGEN format.

```julia

Glob.glob("*", BGEN.datadir())

```

79-element Vector{String}:

"/Users/kose/.julia/dev/BGEN/src/../data/LICENSE.md"

"/Users/kose/.julia/dev/BGEN/src/../data/complex.10bits.bgen"

"/Users/kose/.julia/dev/BGEN/src/../data/complex.11bits.bgen"

"/Users/kose/.julia/dev/BGEN/src/../data/complex.12bits.bgen"

"/Users/kose/.julia/dev/BGEN/src/../data/complex.13bits.bgen"

"/Users/kose/.julia/dev/BGEN/src/../data/complex.14bits.bgen"

"/Users/kose/.julia/dev/BGEN/src/../data/complex.15bits.bgen"

"/Users/kose/.julia/dev/BGEN/src/../data/complex.16bits.bgen"

"/Users/kose/.julia/dev/BGEN/src/../data/complex.17bits.bgen"

"/Users/kose/.julia/dev/BGEN/src/../data/complex.18bits.bgen"

"/Users/kose/.julia/dev/BGEN/src/../data/complex.19bits.bgen"

"/Users/kose/.julia/dev/BGEN/src/../data/complex.1bits.bgen"

"/Users/kose/.julia/dev/BGEN/src/../data/complex.20bits.bgen"

⋮

"/Users/kose/.julia/dev/BGEN/src/../data/example.6bits.bgen"

"/Users/kose/.julia/dev/BGEN/src/../data/example.7bits.bgen"

"/Users/kose/.julia/dev/BGEN/src/../data/example.8bits.bgen"

"/Users/kose/.julia/dev/BGEN/src/../data/example.8bits.bgen.bgi"

"/Users/kose/.julia/dev/BGEN/src/../data/example.9bits.bgen"

"/Users/kose/.julia/dev/BGEN/src/../data/example.gen"

"/Users/kose/.julia/dev/BGEN/src/../data/example.sample"

"/Users/kose/.julia/dev/BGEN/src/../data/example.v11.bgen"

"/Users/kose/.julia/dev/BGEN/src/../data/examples.16bits.bgen"

"/Users/kose/.julia/dev/BGEN/src/../data/haplotypes.bgen"

"/Users/kose/.julia/dev/BGEN/src/../data/haplotypes.bgen.bgi"

"/Users/kose/.julia/dev/BGEN/src/../data/haplotypes.haps"

There are three different datasets with different format versions, compressions, or number of bits to represent probability values.

- `example.*.bgen`: imputed genotypes.

- `haplotypes.bgen`: phased haplotypes.

- `complex.*.bgen`: includes imputed genotypes and phased haplotypes, and multiallelic genotypes.

Some of the `.bgen` files are indexed with `.bgen.bgi` files:

```julia

Glob.glob("*.bgen.bgi", BGEN.datadir())

```

4-element Vector{String}:

"/Users/kose/.julia/dev/BGEN/src/../data/complex.bgen.bgi"

"/Users/kose/.julia/dev/BGEN/src/../data/example.16bits.bgen.bgi"

"/Users/kose/.julia/dev/BGEN/src/../data/example.8bits.bgen.bgi"

"/Users/kose/.julia/dev/BGEN/src/../data/haplotypes.bgen.bgi"

Sample identifiers may be either contained in the `.bgen` file, or is listed in an external `.sample` file.

```julia

Glob.glob("*.sample", BGEN.datadir())

```

2-element Vector{String}:

"/Users/kose/.julia/dev/BGEN/src/../data/complex.sample"

"/Users/kose/.julia/dev/BGEN/src/../data/example.sample"

## Type `Bgen`

The type `Bgen` is the fundamental type for `.bgen`-formatted files. It can be created using the following line.

```julia

b = Bgen(BGEN.datadir("example.8bits.bgen");

sample_path=BGEN.datadir("example.sample"),

idx_path=BGEN.datadir("example.8bits.bgen.bgi"))

```

Bgen(IOStream(<file /Users/kose/.julia/dev/BGEN/src/../data/example.8bits.bgen>), 0x000000000001f6ea, BGEN.Header(0x0000178c, 0x00000014, 0x000000c7, 0x000001f4, 0x01, 0x02, true), ["sample_001", "sample_002", "sample_003", "sample_004", "sample_005", "sample_006", "sample_007", "sample_008", "sample_009", "sample_010" … "sample_491", "sample_492", "sample_493", "sample_494", "sample_495", "sample_496", "sample_497", "sample_498", "sample_499", "sample_500"], Index("/Users/kose/.julia/dev/BGEN/src/../data/example.8bits.bgen.bgi", SQLite.DB("/Users/kose/.julia/dev/BGEN/src/../data/example.8bits.bgen.bgi"), UInt64[], String[], String[], UInt32[]))

The first argument is the path to the `.bgen` file. The optional keyword argument `sample_path` defines the location of the `.sample` file. The second optional keyword argument `idx_path` determines the location of `.bgen.bgi` file.

When a `Bgen` object is created, information in the header is parsed, and the index files are loaded if provided. You may retrieve basic information as follows. Variants are not yet parsed, and will be discussed later.

- `io(b::Bgen)`: IOStream for the bgen file. You may also close this stream using `close(b::Bgen)`.

- `fsize(b::Bgen)`: the size of the bgen file.

- `samples(b::Bgen)`: the list of sample names.

- `n_samples(b::Bgen)`: number of samples in the file.

- `n_variants(b::Bgen)`: number of variants

- `compression(b::Bgen)`: the method each genotype block is compressed. It is either "None", "Zlib", or "Zstd".

```julia

io(b)

```

IOStream(<file /Users/kose/.julia/dev/BGEN/src/../data/example.8bits.bgen>)

```julia

fsize(b)

```

128746

```julia

samples(b)

```

500-element Vector{String}:

"sample_001"

"sample_002"

"sample_003"

"sample_004"

"sample_005"

"sample_006"

"sample_007"

"sample_008"

"sample_009"

"sample_010"

"sample_011"

"sample_012"

"sample_013"

⋮

"sample_489"

"sample_490"

"sample_491"

"sample_492"

"sample_493"

"sample_494"

"sample_495"

"sample_496"

"sample_497"

"sample_498"

"sample_499"

"sample_500"

```julia

n_samples(b)

```

500

```julia

n_variants(b)

```

199

```julia

compression(b)

```

"Zlib"

One may also access the list of RSIDs, chromosomes, and positions in chromosome of each variant stored using functions `rsids()`, `chroms()`, and `positions()`, respectively.

```julia

rsids(b)

```

199-element Vector{String}:

"RSID_101"

"RSID_2"

"RSID_102"

"RSID_3"

"RSID_103"

"RSID_4"

"RSID_104"

"RSID_5"

"RSID_105"

"RSID_6"

"RSID_106"

"RSID_7"

"RSID_107"

⋮

"RSID_194"

"RSID_95"

"RSID_195"

"RSID_96"

"RSID_196"

"RSID_97"

"RSID_197"

"RSID_98"

"RSID_198"

"RSID_99"

"RSID_199"

"RSID_200"

```julia

chroms(b)

```

199-element Vector{String}:

"01"

"01"

"01"

"01"

"01"

"01"

"01"

"01"

"01"

"01"

"01"

"01"

"01"

⋮

"01"

"01"

"01"

"01"

"01"

"01"

"01"

"01"

"01"

"01"

"01"

"01"

```julia

positions(b)

```

199-element Vector{Int64}:

1001

2000

2001

3000

3001

4000

4001

5000

5001

6000

6001

7000

7001

⋮

94001

95000

95001

96000

96001

97000

97001

98000

98001

99000

99001

100001

## `Variant` and `VariantIterator`

As noted earlier, genotype information of each variant is compressed separately in `.bgen` files. The offsets (starting points in bgen file) of the genotypes may or may not be indexed by an external `.bgen.bgi` file. Thus, two ways to iterate over variants is provided through the function `iterator(b; offsets=nothing, from_bgen_start=false)`.

- If `offsets` is provided, or `.bgen.bgi` is provided and

`from_bgen_start` is `false`, it returns a `VariantIteratorFromOffsets`, iterating over the list of offsets.

- Otherwise, it returns a `VariantIteratorFromStart`, iterating from the start of bgen file to the end of it sequentially.

`VariantIteratorFromOffsets` and `VariantIteratorFromStart` are the subtypes of `VariantIterator`.

Each element of `VariantIterator` is a `Variant`, containing the information of variants. We have following utility functions to access its information.

- `n_samples(v::Variant)`

- `varid(v::Variant)`

- `rsid(v::Variant)`

- `chrom(v::Variant)`

- `pos(v::Variant)`

- `n_alleles(v::Variant)`: number of alleles.

- `alleles(v::Variant)`: list of alleles.

Merely the basic information of a variant is parsed for creating a `Variant` object. Nothing is decompressed, and genotype probabilities are not yet parsed yet. Decompression happens lazily, and is delayed until when we try to compute genotype probabilites or minor allele dosages (to be discussed later).

Since `.bgen.bgi` file is provided, the following order is based on the index file, sorted by genomic location.

```julia

for v in iterator(b) #

println(rsid(v))

end

```

RSID_101

RSID_2

RSID_102

RSID_3

RSID_103

RSID_4

RSID_104

RSID_5

RSID_105

RSID_6

RSID_106

RSID_7

RSID_107

RSID_8

RSID_108

RSID_9

RSID_109

RSID_10

RSID_100

RSID_110

RSID_11

RSID_111

RSID_12

RSID_112

RSID_13

RSID_113

RSID_14

RSID_114

RSID_15

RSID_115

RSID_16

RSID_116

RSID_17

RSID_117

RSID_18

RSID_118

RSID_19

RSID_119

RSID_20

RSID_120

RSID_21

RSID_121

RSID_22

RSID_122

RSID_23

RSID_123

RSID_24

RSID_124

RSID_25

RSID_125

RSID_26

RSID_126

RSID_27

RSID_127

RSID_28

RSID_128

RSID_29

RSID_129

RSID_30

RSID_130

RSID_31

RSID_131

RSID_32

RSID_132

RSID_33

RSID_133

RSID_34

RSID_134

RSID_35

RSID_135

RSID_36

RSID_136

RSID_37

RSID_137

RSID_38

RSID_138

RSID_39

RSID_139

RSID_40

RSID_140

RSID_41

RSID_141

RSID_42

RSID_142

RSID_43

RSID_143

RSID_44

RSID_144

RSID_45

RSID_145

RSID_46

RSID_146

RSID_47

RSID_147

RSID_48

RSID_148

RSID_49

RSID_149

RSID_50

RSID_150

RSID_51

RSID_151

RSID_52

RSID_152

RSID_53

RSID_153

RSID_54

RSID_154

RSID_55

RSID_155

RSID_56

RSID_156

RSID_57

RSID_157

RSID_58

RSID_158

RSID_59

RSID_159

RSID_60

RSID_160

RSID_61

RSID_161

RSID_62

RSID_162

RSID_63

RSID_163

RSID_64

RSID_164

RSID_65

RSID_165

RSID_66

RSID_166

RSID_67

RSID_167

RSID_68

RSID_168

RSID_69

RSID_169

RSID_70

RSID_170

RSID_71

RSID_171

RSID_72

RSID_172

RSID_73

RSID_173

RSID_74

RSID_174

RSID_75

RSID_175

RSID_76

RSID_176

RSID_77

RSID_177

RSID_78

RSID_178

RSID_79

RSID_179

RSID_80

RSID_180

RSID_81

RSID_181

RSID_82

RSID_182

RSID_83

RSID_183

RSID_84

RSID_184

RSID_85

RSID_185

RSID_86

RSID_186

RSID_87

RSID_187

RSID_88

RSID_188

RSID_89

RSID_189

RSID_90

RSID_190

RSID_91

RSID_191

RSID_92

RSID_192

RSID_93

RSID_193

RSID_94

RSID_194

RSID_95

RSID_195

RSID_96

RSID_196

RSID_97

RSID_197

RSID_98

RSID_198

RSID_99

RSID_199

RSID_200

Setting `from_bgen_start=true` forces the iterator to iterate in the order of appearence in the bgen file. This may be different from the order in the index file.

```julia

for v in iterator(b; from_bgen_start=true)

println(rsid(v))

end

```

RSID_2

RSID_3

RSID_4

RSID_5

RSID_6

RSID_7

RSID_8

RSID_9

RSID_10

RSID_11

RSID_12

RSID_13

RSID_14

RSID_15

RSID_16

RSID_17

RSID_18

RSID_19

RSID_20

RSID_21

RSID_22

RSID_23

RSID_24

RSID_25

RSID_26

RSID_27

RSID_28

RSID_29

RSID_30

RSID_31

RSID_32

RSID_33

RSID_34

RSID_35

RSID_36

RSID_37

RSID_38

RSID_39

RSID_40

RSID_41

RSID_42

RSID_43

RSID_44

RSID_45

RSID_46

RSID_47

RSID_48

RSID_49

RSID_50

RSID_51

RSID_52

RSID_53

RSID_54

RSID_55

RSID_56

RSID_57

RSID_58

RSID_59

RSID_60

RSID_61

RSID_62

RSID_63

RSID_64

RSID_65

RSID_66

RSID_67

RSID_68

RSID_69

RSID_70

RSID_71

RSID_72

RSID_73

RSID_74

RSID_75

RSID_76

RSID_77

RSID_78

RSID_79

RSID_80

RSID_81

RSID_82

RSID_83

RSID_84

RSID_85

RSID_86

RSID_87

RSID_88

RSID_89

RSID_90

RSID_91

RSID_92

RSID_93

RSID_94

RSID_95

RSID_96

RSID_97

RSID_98

RSID_99

RSID_100

RSID_101

RSID_102

RSID_103

RSID_104

RSID_105

RSID_106

RSID_107

RSID_108

RSID_109

RSID_110

RSID_111

RSID_112

RSID_113

RSID_114

RSID_115

RSID_116

RSID_117

RSID_118

RSID_119

RSID_120

RSID_121

RSID_122

RSID_123

RSID_124

RSID_125

RSID_126

RSID_127

RSID_128

RSID_129

RSID_130

RSID_131

RSID_132

RSID_133

RSID_134

RSID_135

RSID_136

RSID_137

RSID_138

RSID_139

RSID_140

RSID_141

RSID_142

RSID_143

RSID_144

RSID_145

RSID_146

RSID_147

RSID_148

RSID_149

RSID_150

RSID_151

RSID_152

RSID_153

RSID_154

RSID_155

RSID_156

RSID_157

RSID_158

RSID_159

RSID_160

RSID_161

RSID_162

RSID_163

RSID_164

RSID_165

RSID_166

RSID_167

RSID_168

RSID_169

RSID_170

RSID_171

RSID_172

RSID_173

RSID_174

RSID_175

RSID_176

RSID_177

RSID_178

RSID_179

RSID_180

RSID_181

RSID_182

RSID_183

RSID_184

RSID_185

RSID_186

RSID_187

RSID_188

RSID_189

RSID_190

RSID_191

RSID_192

RSID_193

RSID_194

RSID_195

RSID_196

RSID_197

RSID_198

RSID_199

RSID_200

With the presence of `.bgen.bgi` index file, one may select variants on a certain region using the function `select_region(b, chrom; start=nothing, stop=nothing)`.

The following shows that all 199 variants in the bgen file are located on chromosome 01.

```julia

length(select_region(b, "01"))

```

199

We can see that the first variant since position 5000 at chromosome 01 is "RSID_5":

```julia

first(select_region(b, "01"; start=5000))

```

Variant(0x0000000000001ef8, 0x0000000000001f21, 0x0000000000002169, 0x00000248, 0x000001f4, "SNPID_5", "RSID_5", "01", 0x00001388, 0x0002, ["A", "G"], nothing)

And that the number of variants in chr01:5000-50000 is 92.

```julia

length(select_region(b, "01"; start=5000, stop=50000))

```

92

Finally, one may use the `parse_variants()` function to retrieve the variant information as a `Vector{Variant}`. This is equivalent to calling `collect()` on the corresponding `VariantIterator`. It takes the same arguments as `iterator()`. This keeps all the information of variants in-memory. If the size of bgen file is too large, you might want to avoid this.

```julia

variants = parse_variants(b; from_bgen_start=true)

```

199-element Vector{Variant}:

Variant(0x0000000000001790, 0x00000000000017b9, 0x0000000000001a82, 0x000002c9, 0x000001f4, "SNPID_2", "RSID_2", "01", 0x000007d0, 0x0002, ["A", "G"], nothing)

Variant(0x0000000000001a82, 0x0000000000001aab, 0x0000000000001ced, 0x00000242, 0x000001f4, "SNPID_3", "RSID_3", "01", 0x00000bb8, 0x0002, ["A", "G"], nothing)

Variant(0x0000000000001ced, 0x0000000000001d16, 0x0000000000001ef8, 0x000001e2, 0x000001f4, "SNPID_4", "RSID_4", "01", 0x00000fa0, 0x0002, ["A", "G"], nothing)

Variant(0x0000000000001ef8, 0x0000000000001f21, 0x0000000000002169, 0x00000248, 0x000001f4, "SNPID_5", "RSID_5", "01", 0x00001388, 0x0002, ["A", "G"], nothing)

Variant(0x0000000000002169, 0x0000000000002192, 0x0000000000002389, 0x000001f7, 0x000001f4, "SNPID_6", "RSID_6", "01", 0x00001770, 0x0002, ["A", "G"], nothing)

Variant(0x0000000000002389, 0x00000000000023b2, 0x00000000000025df, 0x0000022d, 0x000001f4, "SNPID_7", "RSID_7", "01", 0x00001b58, 0x0002, ["A", "G"], nothing)

Variant(0x00000000000025df, 0x0000000000002608, 0x00000000000027a4, 0x0000019c, 0x000001f4, "SNPID_8", "RSID_8", "01", 0x00001f40, 0x0002, ["A", "G"], nothing)

Variant(0x00000000000027a4, 0x00000000000027cd, 0x00000000000029de, 0x00000211, 0x000001f4, "SNPID_9", "RSID_9", "01", 0x00002328, 0x0002, ["A", "G"], nothing)

Variant(0x00000000000029de, 0x0000000000002a09, 0x0000000000002c43, 0x0000023a, 0x000001f4, "SNPID_10", "RSID_10", "01", 0x00002710, 0x0002, ["A", "G"], nothing)

Variant(0x0000000000002c43, 0x0000000000002c6e, 0x0000000000002e8a, 0x0000021c, 0x000001f4, "SNPID_11", "RSID_11", "01", 0x00002af8, 0x0002, ["A", "G"], nothing)

Variant(0x0000000000002e8a, 0x0000000000002eb5, 0x00000000000030e0, 0x0000022b, 0x000001f4, "SNPID_12", "RSID_12", "01", 0x00002ee0, 0x0002, ["A", "G"], nothing)

Variant(0x00000000000030e0, 0x000000000000310b, 0x0000000000003375, 0x0000026a, 0x000001f4, "SNPID_13", "RSID_13", "01", 0x000032c8, 0x0002, ["A", "G"], nothing)

Variant(0x0000000000003375, 0x00000000000033a0, 0x00000000000035dd, 0x0000023d, 0x000001f4, "SNPID_14", "RSID_14", "01", 0x000036b0, 0x0002, ["A", "G"], nothing)

⋮

Variant(0x000000000001d991, 0x000000000001d9be, 0x000000000001dc12, 0x00000254, 0x000001f4, "SNPID_189", "RSID_189", "01", 0x00015ba9, 0x0002, ["A", "G"], nothing)

Variant(0x000000000001dc12, 0x000000000001dc3f, 0x000000000001ddf2, 0x000001b3, 0x000001f4, "SNPID_190", "RSID_190", "01", 0x00015f91, 0x0002, ["A", "G"], nothing)

Variant(0x000000000001ddf2, 0x000000000001de1f, 0x000000000001e011, 0x000001f2, 0x000001f4, "SNPID_191", "RSID_191", "01", 0x00016379, 0x0002, ["A", "G"], nothing)

Variant(0x000000000001e011, 0x000000000001e03e, 0x000000000001e214, 0x000001d6, 0x000001f4, "SNPID_192", "RSID_192", "01", 0x00016761, 0x0002, ["A", "G"], nothing)

Variant(0x000000000001e214, 0x000000000001e241, 0x000000000001e407, 0x000001c6, 0x000001f4, "SNPID_193", "RSID_193", "01", 0x00016b49, 0x0002, ["A", "G"], nothing)

Variant(0x000000000001e407, 0x000000000001e434, 0x000000000001e6c9, 0x00000295, 0x000001f4, "SNPID_194", "RSID_194", "01", 0x00016f31, 0x0002, ["A", "G"], nothing)

Variant(0x000000000001e6c9, 0x000000000001e6f6, 0x000000000001e8e1, 0x000001eb, 0x000001f4, "SNPID_195", "RSID_195", "01", 0x00017319, 0x0002, ["A", "G"], nothing)

Variant(0x000000000001e8e1, 0x000000000001e90e, 0x000000000001ec86, 0x00000378, 0x000001f4, "SNPID_196", "RSID_196", "01", 0x00017701, 0x0002, ["A", "G"], nothing)