code

stringlengths 2.5k

150k

| kind

stringclasses 1

value |

|---|---|

```

%run ../setup/nb_setup

%matplotlib inline

```

# Compute a Galactic orbit for a star using Gaia data

Author(s): Adrian Price-Whelan

## Learning goals

In this tutorial, we will retrieve the sky coordinates, astrometry, and radial velocity for a star — [Kepler-444](https://en.wikipedia.org/wiki/Kepler-444) — and compute its orbit in the default Milky Way mass model implemented in Gala. We will compare the orbit of Kepler-444 to the orbit of the Sun and a random sample of nearby stars.

### Notebook Setup and Package Imports

```

import astropy.coordinates as coord

import astropy.units as u

import numpy as np

import matplotlib.pyplot as plt

from mpl_toolkits.mplot3d import Axes3D

from pyia import GaiaData

# Gala

import gala.dynamics as gd

import gala.potential as gp

```

## Define a Galactocentric Coordinate Frame

We will start by defining a Galactocentric coordinate system using `astropy.coordinates`. We will adopt the latest parameter set for the solar Galactocentric position and velocity as implemented in Astropy, but note that these parameters can be customized by passing arguments to the `Galactocentric` class below (e.g., you could change the Sun-Galactic center distance by setting `galcen_distance=...`).

```

with coord.galactocentric_frame_defaults.set("v4.0"):

    galcen_frame = coord.Galactocentric()

galcen_frame

```

## Define the Solar Position and Velocity

In this coordinate system, the Sun lies on the $x$-axis (at a negative $x$ value), and the Galactic rotation at this position is in the $+y$ direction. The 3D position of the Sun is therefore given by:

```

sun_xyz = u.Quantity(

[-galcen_frame.galcen_distance, 0 * u.kpc, galcen_frame.z_sun] # x,y,z

)

```

We can combine this with the solar velocity vector (defined in the `astropy.coordinates.Galactocentric` frame) to define the Sun's phase-space position, which we will use shortly as initial conditions to compute the orbit of the Sun:

```

sun_vxyz = galcen_frame.galcen_v_sun

sun_vxyz

sun_w0 = gd.PhaseSpacePosition(pos=sun_xyz, vel=sun_vxyz)

```

To compute the Sun's orbit, we need to specify a mass model for the Galaxy. We will use the default Milky Way mass model implemented in Gala, which is described in detail in the Gala documentation: [Defining a Milky Way model](define-milky-way-model.html). We initialize the potential model with its default parameters:

```

mw_potential = gp.MilkyWayPotential()

mw_potential

```

This potential is composed of four mass components meant to represent simple models of the different structural components of the Milky Way:

```

for k, pot in mw_potential.items():

    print(f"{k}: {pot!r}")

```

With a potential model for the Galaxy and initial conditions for the Sun, we can now compute the Sun's orbit using the default integrator (Leapfrog integration). We will compute the orbit for 4 Gyr, which is about 16 orbital periods.

```

sun_orbit = mw_potential.integrate_orbit(sun_w0, dt=0.5 * u.Myr, t1=0, t2=4 * u.Gyr)

```
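To make the integration step less of a black box, here is a minimal sketch of a kick-drift-kick leapfrog scheme in plain NumPy. It is not Gala's implementation: the toy point-mass potential with $GM = 1$, the function names, and the step size are all assumptions chosen for illustration.

```python
import numpy as np


def acceleration(pos):
    """Acceleration in a toy point-mass (Kepler) potential with G*M = 1."""
    r = np.linalg.norm(pos)
    return -pos / r**3


def leapfrog(pos, vel, dt, n_steps):
    """Kick-drift-kick leapfrog; returns positions and velocities at every step."""
    pos = np.array(pos, dtype=float)
    vel = np.array(vel, dtype=float)
    xs, vs = [pos.copy()], [vel.copy()]
    acc = acceleration(pos)
    for _ in range(n_steps):
        vel_half = vel + 0.5 * dt * acc   # half kick
        pos = pos + dt * vel_half         # full drift
        acc = acceleration(pos)
        vel = vel_half + 0.5 * dt * acc   # second half kick
        xs.append(pos.copy())
        vs.append(vel.copy())
    return np.array(xs), np.array(vs)


def energy(xs, vs):
    """Specific orbital energy (kinetic + potential) at each step."""
    return 0.5 * np.sum(vs**2, axis=1) - 1.0 / np.linalg.norm(xs, axis=1)


# Circular orbit at r = 1 in the toy potential (dimensionless units).
xs, vs = leapfrog([1.0, 0.0, 0.0], [0.0, 1.0, 0.0], dt=0.01, n_steps=5000)
E = energy(xs, vs)
print("max |dE|:", np.max(np.abs(E - E[0])))
```

The energy error stays small and bounded over many periods, which is the main reason symplectic schemes like leapfrog are a common default for long orbit integrations.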

Let's plot the Sun's orbit in 3D to get a feel for the geometry of the orbit:

```

fig, ax = sun_orbit.plot_3d()

lim = (-12, 12)

ax.set(xlim=lim, ylim=lim, zlim=lim)

```

## Retrieve Gaia Data for Kepler-444

As a comparison, we will compute the orbit of the exoplanet-hosting star "Kepler-444." To get Gaia data for this star, we first have to retrieve its sky coordinates so that we can do a positional cross-match query on the Gaia catalog. We can retrieve the sky position of Kepler-444 from Simbad using the `SkyCoord.from_name()` classmethod, which queries Simbad under the hood to resolve the name:

```

star_sky_c = coord.SkyCoord.from_name("Kepler-444")

star_sky_c

```

We happen to know a priori that Kepler-444 has a large proper motion, so the sky position reported by Simbad could differ from the Gaia sky position (epoch 2016) by many arcseconds. To run the query and retrieve the Gaia data, we will use the [pyia](http://pyia.readthedocs.io/) package: we pass in an ADQL query, which `pyia` runs against the Gaia science archive using `astroquery`, and the results are returned as a `pyia.GaiaData` object. We will do a sky position cross-match with a generous tolerance by setting the cross-match radius to 15 arcseconds, and take the brightest source within this region as our match:

```

star_gaia = GaiaData.from_query(

f"""

SELECT TOP 1 * FROM gaiaedr3.gaia_source

WHERE 1=CONTAINS(

POINT('ICRS', {star_sky_c.ra.degree}, {star_sky_c.dec.degree}),

CIRCLE('ICRS', ra, dec, {(15*u.arcsec).to_value(u.degree)})

)

ORDER BY phot_g_mean_mag

"""

)

star_gaia

```
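As a quick sanity check on the cross-match radius used in the query above, the following sketch converts 15 arcseconds to degrees and estimates how long a fast-moving star would take to drift that far. The proper motion value is a hypothetical round number for illustration only, not the measured value for Kepler-444, and the helper names are invented here.

```python
ARCSEC_PER_DEGREE = 3600.0


def arcsec_to_degree(value_arcsec):
    """Convert an angle from arcseconds to degrees."""
    return value_arcsec / ARCSEC_PER_DEGREE


def years_to_drift(radius_arcsec, pm_mas_per_yr):
    """Years for a star with total proper motion `pm_mas_per_yr` to move `radius_arcsec`."""
    return radius_arcsec * 1000.0 / pm_mas_per_yr


radius_deg = arcsec_to_degree(15.0)
# Hypothetical proper motion of 500 mas/yr (illustrative assumption only).
drift_years = years_to_drift(15.0, pm_mas_per_yr=500.0)
print(f"{radius_deg:.6f} deg, {drift_years:.0f} yr")
```

Under that assumed proper motion, a 15 arcsecond radius covers decades of epoch difference between catalogs, which is why a generous tolerance is reasonable here.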

We will assume (and hope!) that this source is Kepler-444, but we know that it is fairly bright compared to a typical Gaia source, so we should be safe.

We can now use the returned `pyia.GaiaData` object to retrieve an astropy `SkyCoord` object with all of the position and velocity measurements taken from the Gaia archive record for this source:

```

star_gaia_c = star_gaia.get_skycoord()

star_gaia_c

```

To compute this star's Galactic orbit, we need to convert its observed, Heliocentric (actually solar system barycentric) data into the Galactocentric coordinate frame we defined above. To do this, we will use the `astropy.coordinates` transformation framework using the `.transform_to()` method, and we will pass in the `Galactocentric` coordinate frame we defined above:

```

star_galcen = star_gaia_c.transform_to(galcen_frame)

star_galcen

```

Let's print out the Cartesian position and velocity for Kepler-444:

```

print(star_galcen.cartesian)

print(star_galcen.velocity)

```

Now with Galactocentric position and velocity components for Kepler-444, we can create Gala initial conditions and compute its orbit on the time grid used to compute the Sun's orbit above:

```

star_w0 = gd.PhaseSpacePosition(star_galcen.data)

star_orbit = mw_potential.integrate_orbit(star_w0, t=sun_orbit.t)

```

We can now compare the orbit of Kepler-444 to the solar orbit we computed above. We will plot the two orbits in two projections: First in the $x$-$y$ plane (Cartesian positions), then in the *meridional plane*, showing the cylindrical $R$ and $z$ position dependence of the orbits:

```

fig, axes = plt.subplots(1, 2, figsize=(10, 5), constrained_layout=True)

sun_orbit.plot(["x", "y"], axes=axes[0])

star_orbit.plot(["x", "y"], axes=axes[0])

axes[0].set_xlim(-10, 10)

axes[0].set_ylim(-10, 10)

sun_orbit.cylindrical.plot(

["rho", "z"],

axes=axes[1],

auto_aspect=False,

labels=["$R$ [kpc]", "$z$ [kpc]"],

label="Sun",

)

star_orbit.cylindrical.plot(

["rho", "z"],

axes=axes[1],

auto_aspect=False,

labels=["$R$ [kpc]", "$z$ [kpc]"],

label="Kepler-444",

)

axes[1].set_xlim(0, 10)

axes[1].set_ylim(-5, 5)

axes[1].set_aspect("auto")

axes[1].legend(loc="best", fontsize=15)

```

### Exercise: How does Kepler-444's orbit differ from the Sun's?

- What are the guiding center radii of the two orbits?

- What is the maximum $z$ height reached by each orbit?

- What are their eccentricities?

- Can you guess which star is older based on their kinematics?

- Which star do you think has a higher metallicity?
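For the eccentricity and maximum height questions, it can help to see how these quantities follow from the sampled orbit positions. The sketch below uses a synthetic, made-up orbit rather than the Gala orbits, and assumes the common definition $e = (r_\textrm{apo} - r_\textrm{per}) / (r_\textrm{apo} + r_\textrm{per})$; Gala's own `eccentricity()` and `zmax()` methods may refine the extrema, so treat this only as a rough cross-check.

```python
import numpy as np


def orbit_summary(xyz):
    """Return (pericenter, apocenter, eccentricity, zmax) for positions of shape (N, 3)."""
    r = np.linalg.norm(xyz, axis=1)
    r_per, r_apo = r.min(), r.max()
    ecc = (r_apo - r_per) / (r_apo + r_per)
    zmax = np.abs(xyz[:, 2]).max()
    return r_per, r_apo, ecc, zmax


# Synthetic orbit (illustrative only): cylindrical radius oscillates between
# 7 and 9 kpc while z oscillates with an amplitude of 0.3 kpc.
t = np.linspace(0.0, 2.0 * np.pi, 1001)
R = 8.0 + np.cos(2.0 * t)
xyz = np.stack([R * np.cos(t), R * np.sin(t), 0.3 * np.sin(t)], axis=1)

r_per, r_apo, ecc, zmax = orbit_summary(xyz)
print(f"r_per={r_per:.3f}, r_apo={r_apo:.3f}, e={ecc:.4f}, zmax={zmax:.3f}")
```

The same function could be applied to the Cartesian positions of an integrated orbit once they are converted to a plain `(N, 3)` array in kpc.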

### Exercise: Compute orbits for Monte Carlo sampled initial conditions using the Gaia error distribution

*Hint: Use the `pyia.GaiaData.get_error_samples()` method to generate samples from the Gaia error distribution*

- Generate 128 samples from the error distribution

- Construct a `SkyCoord` object with all of these Monte Carlo samples

- Transform the error sample coordinates to the Galactocentric frame and define Gala initial conditions (a `PhaseSpacePosition` object)

- Compute orbits for all error samples using the same time grid we used above

- Compute the eccentricity and $L_z$ for all samples: what is the standard deviation of the eccentricity and $L_z$ values?

- With what fractional precision can we measure this star's eccentricity and $L_z$? (i.e. what is $\textrm{std}(e) / \textrm{mean}(e)$ and the same for $L_z$)
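The last two steps of this exercise reduce to simple statistics over the samples. As a sketch of the idea, the code below draws 128 samples from a Gaussian with a hypothetical mean and covariance (stand-ins for two derived quantities, not real Gaia values) and computes the fractional precision as the standard deviation divided by the mean. Conceptually this mirrors drawing error samples from a reported mean and covariance, but it does not call `pyia`.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical mean and covariance for two quantities (illustrative only).
mean = np.array([10.0, 5.0])
cov = np.array([[0.04, 0.01],
                [0.01, 0.09]])

# 128 Monte Carlo samples, shape (128, 2).
samples = rng.multivariate_normal(mean, cov, size=128)


def fractional_precision(values):
    """Standard deviation divided by |mean|, computed per column."""
    return np.std(values, axis=0) / np.abs(np.mean(values, axis=0))


frac = fractional_precision(samples)
print(frac)
```

In the exercise itself, the columns would be the eccentricity and $L_z$ values computed from each sampled orbit.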

### Exercise: Comparing these orbits to the orbits of other Gaia stars

Retrieve Gaia data for a set of 100 random Gaia stars within 200 pc of the Sun that have measured radial velocities and well-measured parallaxes, using the query:

SELECT TOP 100 * FROM gaiaedr3.gaia_source

WHERE dr2_radial_velocity IS NOT NULL AND

parallax_over_error > 10 AND

ruwe < 1.2 AND

parallax > 5

ORDER BY random_index

```

# random_stars_g = ..

```

Compute orbits for these stars on the same time grid used above to compute the Sun's orbit:

```

# random_stars_c = ...

# random_stars_galcen = ...

# random_stars_w0 = ...

# random_stars_orbits = ...

```

Plot the initial (present-day) positions of all of these stars in Galactocentric Cartesian coordinates:

Now plot the orbits of these stars in the x-y and R-z planes:

```

fig, axes = plt.subplots(1, 2, figsize=(10, 5), constrained_layout=True)

random_stars_orbits.plot(["x", "y"], axes=axes[0])

axes[0].set_xlim(-15, 15)

axes[0].set_ylim(-15, 15)

random_stars_orbits.cylindrical.plot(

["rho", "z"],

axes=axes[1],

auto_aspect=False,

labels=["$R$ [kpc]", "$z$ [kpc]"],

)

axes[1].set_xlim(0, 15)

axes[1].set_ylim(-5, 5)

axes[1].set_aspect("auto")

```

Compute maximum $z$ heights ($z_\textrm{max}$) and eccentricities for all of these orbits. Compare the Sun, Kepler-444, and this random sampling of nearby stars. Where do the Sun and Kepler-444 sit relative to the random sample of nearby stars in terms of $z_\textrm{max}$ and eccentricity? (Hint: plot $z_\textrm{max}$ vs. eccentricity and highlight the Sun and Kepler-444!) Are either of them outliers in any way?

```

# rand_zmax = ...

# rand_ecc = ...

fig, ax = plt.subplots(figsize=(8, 6))

ax.scatter(

rand_ecc, rand_zmax, color="k", alpha=0.4, s=14, lw=0, label="random nearby stars"

)

ax.scatter(sun_orbit.eccentricity(), sun_orbit.zmax(), color="tab:orange", label="Sun")

ax.scatter(

star_orbit.eccentricity(), star_orbit.zmax(), color="tab:cyan", label="Kepler-444"

)

ax.legend(loc="best", fontsize=14)

ax.set_xlabel("eccentricity, $e$")

ax.set_ylabel(r"max. $z$ height, $z_{\rm max}$ [kpc]")

```

| github_jupyter |

```

import os

import tarfile

import urllib.request

DOWNLOAD_ROOT = "https://raw.githubusercontent.com/ageron/handson-ml2/master/"

HOUSING_PATH = os.path.join("datasets","housing")

HOUSING_URL = DOWNLOAD_ROOT + "datasets/housing/housing.tgz"

def fetch_housing_data(housing_url = HOUSING_URL,housing_path = HOUSING_PATH):

    os.makedirs(housing_path,exist_ok=True)

    tgz_path = os.path.join(housing_path,"housing.tgz")

    urllib.request.urlretrieve(housing_url, tgz_path)

    housing_tgz = tarfile.open(tgz_path)

    housing_tgz.extractall(path=housing_path)

    housing_tgz.close()

fetch_housing_data()

import pandas as pd

def load_housing_data(housing_path = HOUSING_PATH):

    csv_path = os.path.join(housing_path,"housing.csv")

    return pd.read_csv(csv_path)

housing = load_housing_data()

housing.head()

housing.info()

housing["ocean_proximity"].value_counts()

housing.describe()

import matplotlib.pyplot as plt

%matplotlib inline

housing.hist(bins=50, figsize=(20,15))

plt.show()

import numpy as np

def split_test_data(data,test_ratio):

    shuffled_indices = np.random.permutation(len(data))

    test_set_size = int(len(data)*test_ratio)

    test_indices = shuffled_indices[:test_set_size]

    train_indices = shuffled_indices[test_set_size:]

    return data.iloc[train_indices], data.iloc[test_indices]

train_set, test_set = split_test_data(housing,0.2)

len(train_set)

len(test_set)

from zlib import crc32

def test_set_check(identifier, test_ratio):

    return crc32(np.int64(identifier)) & 0xffffffff < test_ratio * 2**32

def split_train_test_by_id(data, test_ratio, id_column):

    ids = data[id_column]

    in_test_set = ids.apply(lambda id_ : test_set_check(id_ , test_ratio))

    return data.loc[~in_test_set], data.loc[in_test_set]

housing_with_id = housing.reset_index()

train_set, test_set = split_train_test_by_id(housing_with_id,0.2,"index")

housing_with_id["id"] = housing["longitude"] * 1000 + housing["latitude"]

train_set, test_set = split_train_test_by_id(housing_with_id,0.2,"id")

from sklearn.model_selection import train_test_split

train_set, test_set = train_test_split(housing,test_size = 0.2, random_state = 42)

housing["income_cat"] = pd.cut(housing["median_income"],bins=[0.,1.5,3.0,4.5,6.,np.inf],labels=[1,2,3,4,5])

housing["income_cat"].hist()

from sklearn.model_selection import StratifiedShuffleSplit

split = StratifiedShuffleSplit(n_splits = 1,test_size=0.2,random_state=42)

for train_index, test_index in split.split(housing,housing["income_cat"]):

    strat_train_set = housing.loc[train_index]

    strat_test_set = housing.loc[test_index]

strat_test_set["income_cat"].value_counts()/len(strat_test_set)

strat_train_set["income_cat"].value_counts()/len(strat_train_set)

for set_ in (strat_train_set,strat_test_set):

    set_.drop("income_cat",axis=1,inplace=True)

housing = strat_train_set.copy()

housing.plot(kind="scatter",x="longitude",y="latitude")

housing.plot(kind="scatter",x="longitude",y="latitude",alpha=0.1)

housing.plot(kind="scatter",x="longitude",y="latitude",alpha=0.4,s=housing["population"]/100,label = "population",figsize =(10,7),c="median_house_value",cmap=plt.get_cmap("jet"),colorbar=True)

plt.legend()


corr_matrix = housing.corr()

corr_matrix["median_house_value"].sort_values(ascending=False)

corr_matrix

from pandas.plotting import scatter_matrix

attributes = ["median_house_value","median_income","total_rooms","housing_median_age"]

scatter_matrix(housing[attributes],figsize=(12,8))

housing.plot(kind="scatter",x="median_income",y="median_house_value",alpha = 0.1)

housing["rooms_per_household"] = housing["total_rooms"]/housing["households"]

housing["bedrooms_per_room"] = housing["total_bedrooms"]/housing["total_rooms"]

housing["population_per_household"] = housing["population"]/housing["households"]

corr_matrix = housing.corr()

corr_matrix["median_house_value"].sort_values(ascending=False)

housing = strat_train_set.drop("median_house_value",axis=1)

housing_labels = strat_train_set["median_house_value"].copy()

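# housing.dropna(subset=["total_bedrooms"])  # option 1: drop rows with missing values

# housing.drop("total_bedrooms",axis=1)  # option 2: drop the whole attribute

median = housing["total_bedrooms"].median()  # option 3: fill missing values with the median

housing["total_bedrooms"] = housing["total_bedrooms"].fillna(median)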

from sklearn.impute import SimpleImputer

imputer = SimpleImputer(strategy="median")

housing_num = housing.drop("ocean_proximity",axis=1)

imputer.fit(housing_num)

imputer.statistics_

housing_num.median().values

X = imputer.transform(housing_num)

housing_tr = pd.DataFrame(X,columns=housing_num.columns,index=housing_num.index)

housing_cat = housing[["ocean_proximity"]]

housing_cat.head(10)

from sklearn.preprocessing import OrdinalEncoder

ordinal_encoder = OrdinalEncoder()

housing_cat_encoded = ordinal_encoder.fit_transform(housing_cat)

housing_cat_encoded[0:10]

ordinal_encoder.categories_

from sklearn.preprocessing import OneHotEncoder

cat_encoder = OneHotEncoder()

housing_cat_1hot = cat_encoder.fit_transform(housing_cat)

housing_cat_1hot

housing_cat_1hot.toarray()

cat_encoder.categories_

from sklearn.base import BaseEstimator, TransformerMixin

rooms_ix, bedrooms_ix, population_ix, households_ix = 3,4,5,6

class CombinedAttributesAdder(BaseEstimator,TransformerMixin):

    def __init__(self,add_bedrooms_per_room=True):

        self.add_bedrooms_per_room = add_bedrooms_per_room

    def fit(self,X,y=None):

        return self

    def transform(self,X,y=None):

        rooms_per_households = X[:,rooms_ix]/X[:,households_ix]

        population_per_household = X[:,population_ix]/X[:,households_ix]

        if self.add_bedrooms_per_room:

            bedrooms_per_room = X[:,bedrooms_ix]/X[:,rooms_ix]

            return np.c_[X,rooms_per_households,population_per_household,bedrooms_per_room]

        else:

            return np.c_[X,rooms_per_households,population_per_household]

attr_adder = CombinedAttributesAdder(add_bedrooms_per_room=False)

housing_extra_attribs = attr_adder.transform(housing.values)

from sklearn.pipeline import Pipeline

from sklearn.preprocessing import StandardScaler

num_pipeline = Pipeline([('imputer',SimpleImputer(strategy="median")),("attribs_adder",CombinedAttributesAdder()),("std_scaler",StandardScaler())])

housing_num_tr = num_pipeline.fit_transform(housing_num)

from sklearn.compose import ColumnTransformer

num_attribs = list(housing_num)

cat_attribs = ["ocean_proximity"]

full_pipeline = ColumnTransformer([("num",num_pipeline,num_attribs),("cat",OneHotEncoder(),cat_attribs)])

housing_prepared = full_pipeline.fit_transform(housing)

from sklearn.linear_model import LinearRegression

lin_reg = LinearRegression()

lin_reg.fit(housing_prepared, housing_labels)

some_data = housing.iloc[:5]

some_labels = housing_labels.iloc[:5]

some_data_prepared = full_pipeline.transform(some_data)

print("Predictions:",lin_reg.predict(some_data_prepared))

print("Labels:",list(some_labels))

from sklearn.metrics import mean_squared_error

housing_predictions = lin_reg.predict(housing_prepared)

lin_mse = mean_squared_error(housing_labels,housing_predictions)

lin_rmse = np.sqrt(lin_mse)

print(lin_rmse)

from sklearn.model_selection import cross_val_score

from sklearn.tree import DecisionTreeRegressor

tree_reg = DecisionTreeRegressor()

tree_reg.fit(housing_prepared,housing_labels)

housing_predictions = tree_reg.predict(housing_prepared)

tree_mse = mean_squared_error(housing_labels, housing_predictions)

tree_rmse = np.sqrt(tree_mse)

tree_rmse

scores = cross_val_score(tree_reg, housing_prepared,housing_labels,scoring="neg_mean_squared_error",cv=10)

tree_rmse_scores = np.sqrt(-scores)

def display_scores(scores):

    print("Scores:",scores)

    print("Mean:",scores.mean())

    print("Standard deviation:",scores.std())

display_scores(tree_rmse_scores)

lin_scores = cross_val_score(lin_reg,housing_prepared,housing_labels,scoring="neg_mean_squared_error",cv=10)

lin_rmse_scores = np.sqrt(-lin_scores)

display_scores(lin_rmse_scores)

from sklearn.ensemble import RandomForestRegressor

forest_reg = RandomForestRegressor()

forest_reg.fit(housing_prepared,housing_labels)

housing_predictions = forest_reg.predict(housing_prepared)

forest_mse = mean_squared_error(housing_labels,housing_predictions)

forest_rmse = np.sqrt(forest_mse)

print(forest_rmse)

forest_scores = cross_val_score(forest_reg,housing_prepared,housing_labels,scoring="neg_mean_squared_error",cv=10)

forest_rmse_scores = np.sqrt(-forest_scores)

display_scores(forest_rmse_scores)

# imp+

```

| github_jupyter |

# Image Denoising with Autoencoders

## Introduction and Importing Libraries

___

Note: If you are starting the notebook from this task, you can run cells from all previous tasks in the kernel by going to the top menu and then selecting Kernel > Restart and Run All

___

```

import numpy as np

from tensorflow.keras.datasets import mnist

from matplotlib import pyplot as plt

from tensorflow.keras.models import Sequential, Model

from tensorflow.keras.layers import Dense, Input

from tensorflow.keras.callbacks import EarlyStopping, LambdaCallback

from tensorflow.keras.utils import to_categorical

%matplotlib inline

```

## Data Preprocessing


```

(x_train, y_train), (x_test, y_test) = mnist.load_data()

x_train = x_train.astype('float')/255.

x_test = x_test.astype('float')/255.

x_train = np.reshape(x_train, (60000, 784))

x_test = np.reshape(x_test, (10000, 784))

```

## Adding Noise


```

x_train_noisy = x_train + np.random.rand(60000, 784)*0.9

x_test_noisy = x_test + np.random.rand(10000, 784)*0.9

x_train_noisy = np.clip(x_train_noisy, 0., 1.)

x_test_noisy = np.clip(x_test_noisy, 0., 1.)

def Plot(x, p, labels = False):

    plt.figure(figsize = (20, 2))

    for i in range(10):

        plt.subplot(1, 10, i + 1)

        plt.imshow(x[i].reshape(28,28), cmap = 'viridis')

        plt.xticks([])

        plt.yticks([])

        if labels:

            plt.xlabel(np.argmax(p[i]))

    plt.show()

Plot(x_train, None)

Plot(x_train_noisy, None)

```

## Building and Training a Classifier


```

classifier = Sequential([

    Dense(256, activation = 'relu', input_shape = (784,)),

    Dense(256, activation = 'relu'),

    Dense(10, activation = 'softmax')  # one output unit per MNIST digit class

])

classifier.compile(optimizer = 'adam',

loss = 'sparse_categorical_crossentropy',

metrics = ['accuracy'])

classifier.fit(x_train, y_train, batch_size = 512, epochs = 3)

loss, acc = classifier.evaluate(x_test, y_test)

print(acc)

loss, acc = classifier.evaluate(x_test_noisy, y_test)

print(acc)

```

## Building the Autoencoder


```

input_image = Input(shape = (784,))

encoded = Dense(64, activation = 'relu')(input_image)

decoded = Dense(784, activation = 'sigmoid')(encoded)

autoencoder = Model(input_image, decoded)

autoencoder.compile(loss = 'binary_crossentropy', optimizer = 'adam')

```

## Training the Autoencoder


```

autoencoder.fit(

x_train_noisy,

x_train,

epochs = 100,

batch_size = 512,

validation_split = 0.2,

verbose = False,

callbacks = [

EarlyStopping(monitor = 'val_loss', patience = 5),

LambdaCallback(on_epoch_end = lambda e,l: print('{:.3f}'.format(l['val_loss']), end = ' _ '))

]

)

print(' _ ')

print('Training is complete!')

```

## Denoised Images


```

preds = autoencoder.predict(x_test_noisy)

Plot(x_test_noisy, None)

Plot(preds, None)

loss, acc = classifier.evaluate(preds, y_test)

print(acc)

```

## Composite Model


```

input_image=Input(shape=(784,))

x=autoencoder(input_image)

y=classifier(x)

denoise_and_classify = Model(input_image, y)

predictions=denoise_and_classify.predict(x_test_noisy)

Plot(x_test_noisy, predictions, True)

Plot(x_test, to_categorical(y_test), True)

```

| github_jupyter |

# Lab 04 : Train vanilla neural network -- solution

# Training a one-layer net on FASHION-MNIST

```

# For Google Colaboratory

import sys, os

if 'google.colab' in sys.modules:

    from google.colab import drive

    drive.mount('/content/gdrive')

    file_name = 'train_vanilla_nn_solution.ipynb'

    import subprocess

    path_to_file = subprocess.check_output('find . -type f -name ' + str(file_name), shell=True).decode("utf-8")

    print(path_to_file)

    path_to_file = path_to_file.replace(file_name,"").replace('\n',"")

    os.chdir(path_to_file)

    !pwd

import torch

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

from random import randint

import utils

```

### Download the TRAINING SET (data+labels)

```

from utils import check_fashion_mnist_dataset_exists

data_path=check_fashion_mnist_dataset_exists()

train_data=torch.load(data_path+'fashion-mnist/train_data.pt')

train_label=torch.load(data_path+'fashion-mnist/train_label.pt')

print(train_data.size())

print(train_label.size())

```

### Download the TEST SET (data only)

```

test_data=torch.load(data_path+'fashion-mnist/test_data.pt')

print(test_data.size())

```

### Make a one layer net class

```

class one_layer_net(nn.Module):

    def __init__(self, input_size, output_size):

        super(one_layer_net , self).__init__()

        self.linear_layer = nn.Linear( input_size, output_size , bias=False)

    def forward(self, x):

        y = self.linear_layer(x)

        prob = F.softmax(y, dim=1)

        return prob

```

### Build the net

```

net=one_layer_net(784,10)

print(net)

```

### Take the image at index 4 of the test set:

```

im=test_data[4]

utils.show(im)

```

### And feed it to the UNTRAINED network:

```

p = net( im.view(1,784))

print(p)

```

### Display visually the confidence scores

```

utils.show_prob_fashion_mnist(p)

```

### Train the network (only 5000 iterations) on the train set

```

criterion = nn.NLLLoss()

optimizer=torch.optim.SGD(net.parameters() , lr=0.01 )

for iter in range(1,5000):

    # choose a random integer between 0 and 59,999

    # extract the corresponding picture and label

    # and reshape them to fit the network

    idx=randint(0, 60000-1)

    input=train_data[idx].view(1,784)

    label=train_label[idx].view(1)

    # feed the input to the net

    input.requires_grad_()

    prob=net(input)

    # update the weights (all the magic happens here -- we will discuss it later)

    log_prob=torch.log(prob)

    loss = criterion(log_prob, label)

    optimizer.zero_grad()

    loss.backward()

    optimizer.step()

```
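The training loop above takes `torch.log` of the softmax output and feeds it to `nn.NLLLoss`. As a framework-free sketch of what that computes, the NumPy code below shows that the negative log-likelihood of the log-softmax is the usual cross-entropy, and that computing the log-softmax in one fused step stays finite when the scores are large. The function names and example scores are illustrative assumptions; in PyTorch, `F.log_softmax` or `nn.CrossEntropyLoss` play the role of the fused version.

```python
import numpy as np


def softmax(scores):
    """Row-wise softmax, shifted by the row maximum for stability."""
    shifted = scores - scores.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def log_softmax(scores):
    """Row-wise log-softmax computed directly, without forming the probabilities."""
    shifted = scores - scores.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))


def nll(log_prob, label):
    """Mean negative log-likelihood of the true labels."""
    return -log_prob[np.arange(len(label)), label].mean()


scores = np.array([[2.0, 1.0, 0.1]])  # made-up scores for one sample, three classes
label = np.array([0])

loss_two_step = nll(np.log(softmax(scores)), label)  # log applied after softmax
loss_fused = nll(log_softmax(scores), label)         # fused log-softmax
print(loss_two_step, loss_fused)
```

Both routes agree for moderate scores; the fused form is the safer choice when a probability can underflow to zero, since the two-step version would then take the logarithm of zero.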

### Take the image at index 34 of the test set:

```

im=test_data[34]

utils.show(im)

```

### Feed it to the TRAINED net:

```

p = net( im.view(1,784))

print(p)

```

### Display visually the confidence scores

```

utils.show_prob_fashion_mnist(p)

```

### Choose an image at random from the test set and see how good or bad the predictions are

```

# choose a picture at random

idx=randint(0, 10000-1)

im=test_data[idx]

# display the picture

utils.show(im)

# feed it to the net and display the confidence scores

prob = net( im.view(1,784))

utils.show_prob_fashion_mnist(prob)

```

| github_jupyter |

<a href="https://colab.research.google.com/github/TerradasExatas/IA_e_Machine_Learning/blob/main/IA_ConvNN_classificacao_MNIST.ipynb" target="_parent"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/></a>

```

#https://machinelearningmastery.com/how-to-develop-a-convolutional-neural-network-from-scratch-for-mnist-handwritten-digit-classification/

#https://towardsdatascience.com/convolutional-neural-networks-for-beginners-using-keras-and-tensorflow-2-c578f7b3bf25

#https://github.com/jorditorresBCN/python-deep-learning/blob/master/08_redes_neuronales_convolucionales.ipynb

import tensorflow as tf

import numpy as np

import matplotlib.pyplot as plt

# import the dataset (the images from the "mnist" database)

mnist = tf.keras.datasets.mnist

(train_images, train_labels), (test_images, test_labels) = mnist.load_data()

# inspect the dataset

print('train images original shape:',train_images.shape)

print('train labels original shape:',train_labels.shape)

plt.rcParams.update({'font.size':14})

plt.figure(figsize=(8,4))

for i in range(2*4):

    plt.subplot(2,4,i+1)

    plt.xticks([]);plt.yticks([])

    plt.imshow(train_images[i],cmap=plt.cm.binary)

    plt.xlabel(str(train_labels[i]))

plt.show()

# prepare the dataset

train_images = train_images.reshape((60000, 28, 28, 1))

train_images = train_images.astype('float32') / 255

test_images = test_images.reshape((10000, 28, 28, 1))

test_images = test_images.astype('float32') / 255

# inspect the prepared data

print ('train images new shape:',train_images.shape)

N_class=10

# create the neural network

model = tf.keras.Sequential(name='rede_IF_CNN_MNIST')

# add the layers

model.add(tf.keras.layers.Conv2D(12, (5, 5),

activation='relu', input_shape=(28, 28, 1)))

model.add(tf.keras.layers.MaxPooling2D((2, 2)))

model.add(tf.keras.layers.Conv2D(24, (3, 3), activation='relu'))

model.add(tf.keras.layers.MaxPooling2D((2, 2)))

model.add(tf.keras.layers.Flatten())

model.add(tf.keras.layers.Dense(N_class, activation='softmax'))

# compile the network

opt=tf.keras.optimizers.Adam(learning_rate=0.002)

model.compile(optimizer=opt, loss='sparse_categorical_crossentropy',

metrics=['accuracy'])

model.summary()

# train the network

history=model.fit(train_images, train_labels,epochs=8,verbose=1)

# show the training performance of the network

plt.figure()

plt.subplot(2,1,1);plt.semilogy(history.history['loss'],'k')

plt.legend(['loss'])

plt.subplot(2,1,2);plt.plot(history.history['accuracy'],'k')

plt.legend(['accuracy'])

plt.tight_layout()

# test the network on the test data

pred = model.predict(test_images)

test_loss, test_acc = model.evaluate(test_images, test_labels, verbose=2)

print('\n test data accuracy: ', test_acc)

# find the class with the highest probability

labels_pred=np.argmax(pred,axis=1)

# show 15 expected labels and the predicted ones side by side

print('data and pred = \n',np.concatenate(

(test_labels[None].T[0:15], labels_pred[None].T[0:15]),axis=1))

```
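To see where the sizes reported by `model.summary()` come from, the following sketch traces the feature-map width through the layers defined above and counts the trainable parameters by hand. It assumes the Keras defaults used in the code (stride 1 and `'valid'` padding for the convolutions, non-overlapping 2x2 pooling); the helper names are invented for this example.

```python
def conv_out(size, kernel):
    """Output width of a 'valid' convolution with stride 1."""
    return size - kernel + 1


def pool_out(size, pool):
    """Output width of non-overlapping max pooling (floor division)."""
    return size // pool


def conv_params(kernel, in_ch, out_ch):
    """Weights plus biases of a square 2D convolution."""
    return kernel * kernel * in_ch * out_ch + out_ch


size = 28
size = pool_out(conv_out(size, 5), 2)  # 28 -> 24 -> 12
size = pool_out(conv_out(size, 3), 2)  # 12 -> 10 -> 5
flat = size * size * 24                # flattened features: 5 * 5 * 24

dense_params = flat * 10 + 10          # weights plus biases of the output layer
total = conv_params(5, 1, 12) + conv_params(3, 12, 24) + dense_params
print(size, flat, total)  # prints: 5 600 8938
```

Most of the parameters sit in the final dense layer, which is typical for small convolutional networks that flatten directly into the classifier.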

| github_jupyter |

```

from pandas import read_csv

import cv2

import glob

import os

import numpy as np

import logging

import coloredlogs

logger = logging.getLogger(__name__)

coloredlogs.install(level='DEBUG')

coloredlogs.install(level='DEBUG', logger=logger)

IM_EXTENSIONS = ['png', 'jpg', 'jpeg', 'bmp']

def read_img(img_path, img_shape=(128, 128)):

    """

    load image file and divide by 255.

    """

    img = cv2.imread(img_path)

    img = cv2.resize(img, img_shape)

    img = img.astype('float')

    img /= 255.

    return img

dataset_dir = './data/images/'

label_path = './data/label.csv'

batch_size = 32  # the stray trailing comma previously made this a tuple

img_shape=(128, 128)

label_df = read_csv(label_path)

# img_files = glob.glob(dataset_dir + '*')

# img_files = [f for f in img_files if f[-3:] in IM_EXTENSIONS]

label_idx = label_df.set_index('filename')

img_files = label_idx.index.unique().values

label_idx.loc['0_Parade_Parade_0_628.jpg'].head()

label_idx.iloc[0:5]

len(img_files)

def append_zero(arr):

    return np.append([0], arr)

# temp = label_idx.loc[img_files[0]].values[:, :4] #[0, 26, 299, 36, 315]

# np.apply_along_axis(append_zero, 1, temp)

"""

data loader

return image, [class_label, class_and_location_label]

"""

numofData = len(img_files)  # number of image files (png, jpg, ...)

data_idx = np.arange(numofData)

while True:

    batch_idx = np.random.choice(data_idx, size=batch_size, replace=False)

    batch_img = []

    batch_label = []

    batch_label_cls = []

    for i in batch_idx:

        img = read_img(dataset_dir + img_files[i], img_shape=img_shape)

        label_idx = label_df.set_index('filename')

        img_files = label_idx.index.unique().values

        label = label_idx.loc[img_files[i]].values

        label = np.array(label, ndmin=2)

        label = label[:, :4]

        cls_loc_label = np.apply_along_axis(append_zero, 1, label)

        batch_img.append(img)

        batch_label.append(label)

        batch_label_cls.append(0) # label[0:1]) ---> face

    break  # the yield below is commented out, so stop after building one batch

# yield ({'input_1': np.array(batch_img, dtype=np.float32)},

# {'clf_output': np.array(batch_label_cls, dtype=np.float32),

# 'bb_output': np.array(batch_label, dtype=np.float32)})

import tensorflow as tf

def dataloader(dataset_dir, label_path, batch_size=1000, img_shape=(128, 128)):

    """

    data loader

    return image, [class_label, class_and_location_label]

    """

    label_df = read_csv(label_path)

    label_idx = label_df.set_index('filename')

    img_files = label_idx.index.unique().values

    numofData = len(img_files)  # number of image files (png, jpg, ...)

    data_idx = np.arange(numofData)

    while True:

        batch_idx = np.random.choice(data_idx, size=batch_size, replace=False)

        batch_img = []

        batch_label = []

        batch_class = []

        for i in batch_idx:

            img = read_img(dataset_dir + img_files[i], img_shape=img_shape)

            label = label_idx.loc[img_files[i]].values

            label = np.array(label, ndmin=2)

            label = label[:, :4]

            cls_loc_label = np.apply_along_axis(append_zero, 1, label)

            batch_img.append(img)

            batch_label.append(cls_loc_label) # face + bb

            batch_class.append(cls_loc_label[:, 0:1]) # label[:, 0:1]) ---> face

        # yield {'input_1': np.array(batch_img, dtype=np.float32)}, {'clf_output': np.array(batch_class, dtype=np.float32),'bb_output': np.array(batch_label, dtype=np.float32)}

        yield np.array(batch_img, dtype=np.float32), [np.array(batch_class, dtype=np.float32), np.array(batch_label, dtype=np.float32)]

data_gen = dataloader(dataset_dir, label_path, batch_size=1, img_shape=(128, 128))

data = next(data_gen)

len(data)

```


<font color = "mediumblue">Note: Notebook was updated July 2, 2019 with bug fixes.</font>

#### If you were working on the older version:

* Please click on the "Coursera" icon in the top right to open up the folder directory.

* Navigate to the folder: Week 3/ Planar data classification with one hidden layer. You can see your prior work in version 5: Planar data classification with one hidden layer v5.ipynb

#### List of bug fixes and enhancements

* Clarifies that the classifier will learn to classify regions as either red or blue.

* compute_cost function fixes np.squeeze by casting it as a float.

* compute_cost instructions clarify the purpose of np.squeeze.

* compute_cost clarifies that "parameters" parameter is not needed, but is kept in the function definition until the auto-grader is also updated.

* nn_model removes extraction of parameter values, as the entire parameter dictionary is passed to the invoked functions.

# Planar data classification with one hidden layer

Welcome to your week 3 programming assignment. It's time to build your first neural network, which will have a hidden layer. You will see a big difference between this model and the one you implemented using logistic regression.

**You will learn how to:**

- Implement a 2-class classification neural network with a single hidden layer

- Use units with a non-linear activation function, such as tanh

- Compute the cross entropy loss

- Implement forward and backward propagation

## 1 - Packages ##

Let's first import all the packages that you will need during this assignment.

- [numpy](https://www.numpy.org/) is the fundamental package for scientific computing with Python.

- [sklearn](http://scikit-learn.org/stable/) provides simple and efficient tools for data mining and data analysis.

- [matplotlib](http://matplotlib.org) is a library for plotting graphs in Python.

- testCases provides some test examples to assess the correctness of your functions

- planar_utils provides various useful functions used in this assignment

```

# Package imports

import numpy as np

import matplotlib.pyplot as plt

from testCases_v2 import *

import sklearn

import sklearn.datasets

import sklearn.linear_model

from planar_utils import plot_decision_boundary, sigmoid, load_planar_dataset, load_extra_datasets

%matplotlib inline

np.random.seed(1) # set a seed so that the results are consistent

```

## 2 - Dataset ##

First, let's get the dataset you will work on. The following code will load a "flower" 2-class dataset into variables `X` and `Y`.

```

X, Y = load_planar_dataset()

```

Visualize the dataset using matplotlib. The data looks like a "flower" with some red (label y=0) and some blue (y=1) points. Your goal is to build a model to fit this data. In other words, we want the classifier to define regions as either red or blue.

```

# Visualize the data:

plt.scatter(X[0, :], X[1, :], c=Y, s=40, cmap=plt.cm.Spectral);

```

You have:

- a numpy-array (matrix) X that contains your features (x1, x2)

- a numpy-array (vector) Y that contains your labels (red:0, blue:1).

Let's first get a better sense of what our data is like.

**Exercise**: How many training examples do you have? In addition, what is the `shape` of the variables `X` and `Y`?

**Hint**: How do you get the shape of a numpy array? [(help)](https://docs.scipy.org/doc/numpy/reference/generated/numpy.ndarray.shape.html)
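As a small illustration of the hint (on a made-up array named `demo`, not on the assignment's `X` or `Y`), the `shape` attribute of a numpy array is a tuple with one entry per dimension:

```python
import numpy as np

# Hypothetical toy array: 2 rows (features) and 5 columns (examples)
demo = np.zeros((2, 5))

print(demo.shape)     # the full shape tuple: (2, 5)
print(demo.shape[1])  # the size of the second dimension: 5
```

Indexing the tuple, as in `demo.shape[1]`, is how a single dimension such as the number of examples can be read off.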

```

### START CODE HERE ### (≈ 3 lines of code)

shape_X = None

shape_Y = None

m = X.shape[1] # training set size

### END CODE HERE ###

print ('The shape of X is: ' + str(shape_X))

print ('The shape of Y is: ' + str(shape_Y))

print ('I have m = %d training examples!' % (m))

```

**Expected Output**:

<table style="width:20%">

<tr>

<td>**shape of X**</td>

<td> (2, 400) </td>

</tr>

<tr>

<td>**shape of Y**</td>

<td>(1, 400) </td>

</tr>

<tr>

<td>**m**</td>

<td> 400 </td>

</tr>

</table>

## 3 - Simple Logistic Regression

Before building a full neural network, let's first see how logistic regression performs on this problem. You can use sklearn's built-in functions to do that. Run the code below to train a logistic regression classifier on the dataset.

```

# Train the logistic regression classifier

clf = sklearn.linear_model.LogisticRegressionCV();

clf.fit(X.T, Y.T);

```

You can now plot the decision boundary of these models. Run the code below.

```

# Plot the decision boundary for logistic regression

plot_decision_boundary(lambda x: clf.predict(x), X, Y)

plt.title("Logistic Regression")

# Print accuracy

LR_predictions = clf.predict(X.T)

print ('Accuracy of logistic regression: %d ' % float((np.dot(Y,LR_predictions) + np.dot(1-Y,1-LR_predictions))/float(Y.size)*100) +

'% ' + "(percentage of correctly labelled datapoints)")

```

**Expected Output**:

<table style="width:20%">

<tr>

<td>**Accuracy**</td>

<td> 47% </td>

</tr>

</table>

**Interpretation**: The dataset is not linearly separable, so logistic regression doesn't perform well. Hopefully a neural network will do better. Let's try this now!

## 4 - Neural Network model

Logistic regression did not work well on the "flower dataset". You are going to train a Neural Network with a single hidden layer.

**Here is our model**:

<img src="images/classification_kiank.png" style="width:600px;height:300px;">

**Mathematically**:

For one example $x^{(i)}$:

$$z^{[1] (i)} = W^{[1]} x^{(i)} + b^{[1]}\tag{1}$$

$$a^{[1] (i)} = \tanh(z^{[1] (i)})\tag{2}$$

$$z^{[2] (i)} = W^{[2]} a^{[1] (i)} + b^{[2]}\tag{3}$$

$$\hat{y}^{(i)} = a^{[2] (i)} = \sigma(z^{ [2] (i)})\tag{4}$$

$$y^{(i)}_{prediction} = \begin{cases} 1 & \mbox{if } a^{[2](i)} > 0.5 \\ 0 & \mbox{otherwise } \end{cases}\tag{5}$$

Given the predictions on all the examples, you can also compute the cost $J$ as follows:

$$J = - \frac{1}{m} \sum\limits_{i = 1}^{m} \left( y^{(i)}\log\left(a^{[2] (i)}\right) + (1-y^{(i)})\log\left(1- a^{[2] (i)}\right) \right) \tag{6}$$

**Reminder**: The general methodology to build a Neural Network is to:

1. Define the neural network structure ( # of input units, # of hidden units, etc).

2. Initialize the model's parameters

3. Loop:

- Implement forward propagation

- Compute loss

- Implement backward propagation to get the gradients

- Update parameters (gradient descent)

You often build helper functions to compute steps 1-3 and then merge them into one function we call `nn_model()`. Once you've built `nn_model()` and learnt the right parameters, you can make predictions on new data.
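The three-step methodology can be sketched on a simpler model. The block below is only an illustration under stated assumptions: it trains plain logistic regression (not the one-hidden-layer network of this assignment) on made-up data, and every name ending in `_toy` or `_sketch`, the learning rate 0.5, and the 200 iterations are arbitrary choices. It uses its own random generator so the notebook's global seed is not disturbed.

```python
import numpy as np

def sigmoid_sketch(z):
    # Logistic function, defined locally so the sketch is self-contained
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(0)             # local generator, leaves np.random untouched
X_toy = rng.normal(size=(2, 50))           # shape (features, examples)
Y_toy = (X_toy[0:1, :] > 0).astype(float)  # shape (1, examples)
m_toy = X_toy.shape[1]

# Steps 1 and 2: fix the structure (2 inputs, 1 output) and initialize parameters
w = np.zeros((1, 2))
b = 0.0

costs = []
for _ in range(200):                       # Step 3: the loop
    a = sigmoid_sketch(w @ X_toy + b)      # forward propagation
    cost = -np.mean(Y_toy * np.log(a) + (1 - Y_toy) * np.log(1 - a))  # loss
    dz = a - Y_toy                         # backward propagation
    dw = dz @ X_toy.T / m_toy
    db = np.sum(dz) / m_toy
    w = w - 0.5 * dw                       # gradient descent update
    b = b - 0.5 * db
    costs.append(cost)

print(costs[0], costs[-1])                 # the cost should decrease over the iterations
```

The network built in this assignment follows the same skeleton, with the forward, cost, backward and update steps moved into their own helper functions.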

### 4.1 - Defining the neural network structure ####

**Exercise**: Define three variables:

- n_x: the size of the input layer

- n_h: the size of the hidden layer (set this to 4)

- n_y: the size of the output layer

**Hint**: Use shapes of X and Y to find n_x and n_y. Also, hard code the hidden layer size to be 4.

```

# GRADED FUNCTION: layer_sizes

def layer_sizes(X, Y):
    """
    Arguments:
    X -- input dataset of shape (input size, number of examples)
    Y -- labels of shape (output size, number of examples)

    Returns:
    n_x -- the size of the input layer
    n_h -- the size of the hidden layer
    n_y -- the size of the output layer
    """
    ### START CODE HERE ### (≈ 3 lines of code)
    n_x = None # size of input layer
    n_h = None
    n_y = None # size of output layer
    ### END CODE HERE ###
    return (n_x, n_h, n_y)

X_assess, Y_assess = layer_sizes_test_case()

(n_x, n_h, n_y) = layer_sizes(X_assess, Y_assess)

print("The size of the input layer is: n_x = " + str(n_x))

print("The size of the hidden layer is: n_h = " + str(n_h))

print("The size of the output layer is: n_y = " + str(n_y))

```

**Expected Output** (these are not the sizes you will use for your network, they are just used to assess the function you've just coded).

<table style="width:20%">

<tr>

<td>**n_x**</td>

<td> 5 </td>

</tr>

<tr>

<td>**n_h**</td>

<td> 4 </td>

</tr>

<tr>

<td>**n_y**</td>

<td> 2 </td>

</tr>

</table>

### 4.2 - Initialize the model's parameters ####

**Exercise**: Implement the function `initialize_parameters()`.

**Instructions**:

- Make sure your parameters' sizes are right. Refer to the neural network figure above if needed.

- You will initialize the weights matrices with random values.

- Use: `np.random.randn(a,b) * 0.01` to randomly initialize a matrix of shape (a,b).

- You will initialize the bias vectors as zeros.

- Use: `np.zeros((a,b))` to initialize a matrix of shape (a,b) with zeros.
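A minimal sketch of the two calls named above, on hypothetical sizes (3 units fed by 2 inputs) rather than the assignment's. It draws from a local `np.random.RandomState` instead of the global `np.random.randn`, which is an assumption made here only so that running the sketch does not shift the seeded values the graded function expects:

```python
import numpy as np

local_rng = np.random.RandomState(0)    # local generator; the global seed is untouched

W_demo = local_rng.randn(3, 2) * 0.01   # small random weights, shape (3, 2)
b_demo = np.zeros((3, 1))               # zero biases, shape (3, 1)

print(W_demo.shape, b_demo.shape)
```

Note the double parentheses in `np.zeros((3, 1))`: the shape is passed as a single tuple, whereas `randn` takes the dimensions as separate arguments.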

```

# GRADED FUNCTION: initialize_parameters

def initialize_parameters(n_x, n_h, n_y):
    """
    Argument:
    n_x -- size of the input layer
    n_h -- size of the hidden layer
    n_y -- size of the output layer

    Returns:
    params -- python dictionary containing your parameters:
                    W1 -- weight matrix of shape (n_h, n_x)
                    b1 -- bias vector of shape (n_h, 1)
                    W2 -- weight matrix of shape (n_y, n_h)
                    b2 -- bias vector of shape (n_y, 1)
    """

    np.random.seed(2) # we set up a seed so that your output matches ours although the initialization is random.

    ### START CODE HERE ### (≈ 4 lines of code)
    W1 = None
    b1 = None
    W2 = None
    b2 = None
    ### END CODE HERE ###

    assert (W1.shape == (n_h, n_x))
    assert (b1.shape == (n_h, 1))
    assert (W2.shape == (n_y, n_h))
    assert (b2.shape == (n_y, 1))

    parameters = {"W1": W1,
                  "b1": b1,
                  "W2": W2,
                  "b2": b2}

    return parameters

n_x, n_h, n_y = initialize_parameters_test_case()

parameters = initialize_parameters(n_x, n_h, n_y)

print("W1 = " + str(parameters["W1"]))

print("b1 = " + str(parameters["b1"]))

print("W2 = " + str(parameters["W2"]))

print("b2 = " + str(parameters["b2"]))

```

**Expected Output**:

<table style="width:90%">

<tr>

<td>**W1**</td>

<td> [[-0.00416758 -0.00056267]

[-0.02136196 0.01640271]

[-0.01793436 -0.00841747]

[ 0.00502881 -0.01245288]] </td>

</tr>

<tr>

<td>**b1**</td>

<td> [[ 0.]

[ 0.]

[ 0.]

[ 0.]] </td>

</tr>

<tr>

<td>**W2**</td>

<td> [[-0.01057952 -0.00909008 0.00551454 0.02292208]]</td>

</tr>

<tr>

<td>**b2**</td>

<td> [[ 0.]] </td>

</tr>

</table>

### 4.3 - The Loop ####

**Question**: Implement `forward_propagation()`.

**Instructions**:

- Look above at the mathematical representation of your classifier.

- You can use the function `sigmoid()`. It is built-in (imported) in the notebook.

- You can use the function `np.tanh()`. It is part of the numpy library.

- The steps you have to implement are:

1. Retrieve each parameter from the dictionary "parameters" (which is the output of `initialize_parameters()`) by using `parameters[".."]`.

2. Implement Forward Propagation. Compute $Z^{[1]}, A^{[1]}, Z^{[2]}$ and $A^{[2]}$ (the vector of all your predictions on all the examples in the training set).

- Values needed in the backpropagation are stored in "`cache`". The `cache` will be given as an input to the backpropagation function.

```

# GRADED FUNCTION: forward_propagation

def forward_propagation(X, parameters):
    """
    Argument:
    X -- input data of size (n_x, m)
    parameters -- python dictionary containing your parameters (output of initialization function)

    Returns:
    A2 -- The sigmoid output of the second activation
    cache -- a dictionary containing "Z1", "A1", "Z2" and "A2"
    """
    # Retrieve each parameter from the dictionary "parameters"
    ### START CODE HERE ### (≈ 4 lines of code)
    W1 = None
    b1 = None
    W2 = None
    b2 = None
    ### END CODE HERE ###

    # Implement Forward Propagation to calculate A2 (probabilities)
    ### START CODE HERE ### (≈ 4 lines of code)
    Z1 = None
    A1 = None
    Z2 = None
    A2 = None
    ### END CODE HERE ###

    assert(A2.shape == (1, X.shape[1]))

    cache = {"Z1": Z1,
             "A1": A1,
             "Z2": Z2,
             "A2": A2}

    return A2, cache

X_assess, parameters = forward_propagation_test_case()

A2, cache = forward_propagation(X_assess, parameters)

# Note: we use the mean here just to make sure that your output matches ours.

print(np.mean(cache['Z1']) ,np.mean(cache['A1']),np.mean(cache['Z2']),np.mean(cache['A2']))

```

**Expected Output**:

<table style="width:50%">

<tr>

<td> 0.262818640198 0.091999045227 -1.30766601287 0.212877681719 </td>

</tr>

</table>

Now that you have computed $A^{[2]}$ (in the Python variable "`A2`"), which contains $a^{[2](i)}$ for every example, you can compute the cost function as follows:

$$J = - \frac{1}{m} \sum\limits_{i = 1}^{m} \large{(} \small y^{(i)}\log\left(a^{[2] (i)}\right) + (1-y^{(i)})\log\left(1- a^{[2] (i)}\right) \large{)} \small\tag{13}$$

**Exercise**: Implement `compute_cost()` to compute the value of the cost $J$.

**Instructions**:

- There are many ways to implement the cross-entropy loss. To help you, here is how we would implement

$- \sum\limits_{i=1}^{m} y^{(i)}\log(a^{[2](i)})$:

```python

logprobs = np.multiply(np.log(A2),Y)

cost = - np.sum(logprobs) # no need to use a for loop!

```

(you can use either `np.multiply()` and then `np.sum()` or directly `np.dot()`).

Note that if you use `np.multiply` followed by `np.sum` the end result will be a type `float`, whereas if you use `np.dot`, the result will be a 2D numpy array. We can use `np.squeeze()` to remove redundant dimensions (in the case of single float, this will be reduced to a zero-dimension array). We can cast the array as a type `float` using `float()`.
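The difference between the two routes can be seen on a made-up example. This is a sketch only: `A2_demo` and `Y_demo` are hypothetical values, and only the first term of the cost is computed, as in the snippet above.

```python
import numpy as np

A2_demo = np.array([[0.8, 0.4, 0.9]])   # hypothetical activations, shape (1, 3)
Y_demo = np.array([[1, 0, 1]])          # hypothetical labels, shape (1, 3)

# Route 1: elementwise product, then sum -> a scalar
via_sum = -np.sum(np.multiply(np.log(A2_demo), Y_demo))

# Route 2: dot product of (1, 3) with (3, 1) -> a 2D array of shape (1, 1)
via_dot = -np.dot(np.log(A2_demo), Y_demo.T)

# np.squeeze drops the size-1 dimensions, and float() turns the result into a Python float
as_float = float(np.squeeze(via_dot))

print(np.ndim(via_sum), via_dot.shape, type(as_float))
```

Both routes give the same number; only the container differs, which is why the graded function applies `np.squeeze` and `float` before returning.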

```

# GRADED FUNCTION: compute_cost

def compute_cost(A2, Y, parameters):
    """
    Computes the cross-entropy cost given in equation (13)

    Arguments:
    A2 -- The sigmoid output of the second activation, of shape (1, number of examples)
    Y -- "true" labels vector of shape (1, number of examples)
    parameters -- python dictionary containing your parameters W1, b1, W2 and b2
    [Note that the parameters argument is not used in this function,
    but the auto-grader currently expects this parameter.
    A future version of this notebook will fix both the notebook
    and the auto-grader so that `parameters` is not needed.
    For now, please include `parameters` in the function signature,
    and also when invoking this function.]

    Returns:
    cost -- cross-entropy cost given equation (13)
    """

    m = Y.shape[1] # number of examples

    # Compute the cross-entropy cost
    ### START CODE HERE ### (≈ 2 lines of code)
    logprobs = None
    cost = None
    ### END CODE HERE ###

    cost = float(np.squeeze(cost))  # makes sure cost is the dimension we expect.
                                    # E.g., turns [[17]] into 17
    assert(isinstance(cost, float))

    return cost

A2, Y_assess, parameters = compute_cost_test_case()

print("cost = " + str(compute_cost(A2, Y_assess, parameters)))

```

**Expected Output**:

<table style="width:20%">

<tr>

<td>**cost**</td>

<td> 0.693058761... </td>

</tr>

</table>

Using the cache computed during forward propagation, you can now implement backward propagation.

**Question**: Implement the function `backward_propagation()`.

**Instructions**:

Backpropagation is usually the hardest (most mathematical) part in deep learning. To help you, here again is the slide from the lecture on backpropagation. You'll want to use the six equations on the right of this slide, since you are building a vectorized implementation.

<img src="images/grad_summary.png" style="width:600px;height:300px;">

<!--

$\frac{\partial \mathcal{J} }{ \partial z_{2}^{(i)} } = \frac{1}{m} (a^{[2](i)} - y^{(i)})$

$\frac{\partial \mathcal{J} }{ \partial W_2 } = \frac{\partial \mathcal{J} }{ \partial z_{2}^{(i)} } a^{[1] (i) T} $

$\frac{\partial \mathcal{J} }{ \partial b_2 } = \sum_i{\frac{\partial \mathcal{J} }{ \partial z_{2}^{(i)}}}$

$\frac{\partial \mathcal{J} }{ \partial z_{1}^{(i)} } = W_2^T \frac{\partial \mathcal{J} }{ \partial z_{2}^{(i)} } * ( 1 - a^{[1] (i) 2}) $

$\frac{\partial \mathcal{J} }{ \partial W_1 } = \frac{\partial \mathcal{J} }{ \partial z_{1}^{(i)} } X^T $

$\frac{\partial \mathcal{J} _i }{ \partial b_1 } = \sum_i{\frac{\partial \mathcal{J} }{ \partial z_{1}^{(i)}}}$

- Note that $*$ denotes elementwise multiplication.

- The notation you will use is common in deep learning coding:

- dW1 = $\frac{\partial \mathcal{J} }{ \partial W_1 }$

- db1 = $\frac{\partial \mathcal{J} }{ \partial b_1 }$

- dW2 = $\frac{\partial \mathcal{J} }{ \partial W_2 }$

- db2 = $\frac{\partial \mathcal{J} }{ \partial b_2 }$

!-->

- Tips:

- To compute dZ1 you'll need to compute $g^{[1]'}(Z^{[1]})$. Since $g^{[1]}(.)$ is the tanh activation function, if $a = g^{[1]}(z)$ then $g^{[1]'}(z) = 1-a^2$. So you can compute

$g^{[1]'}(Z^{[1]})$ using `(1 - np.power(A1, 2))`.
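As a quick sanity check of the tip above, the identity $g'(z) = 1 - a^2$ for tanh can be compared against a central finite difference. This is a sketch on made-up inputs; the sample points and the step `eps` are arbitrary assumptions.

```python
import numpy as np

z = np.linspace(-2.0, 2.0, 9)          # hypothetical pre-activations
a = np.tanh(z)

analytic = 1 - np.power(a, 2)          # the expression suggested in the tip

eps = 1e-6                             # finite-difference step (arbitrary choice)
numeric = (np.tanh(z + eps) - np.tanh(z - eps)) / (2 * eps)

max_gap = np.max(np.abs(analytic - numeric))
print(max_gap)                         # expected to be tiny
```

Because the derivative depends only on the activation `a`, backpropagation can reuse `A1` from the cache instead of recomputing `tanh`.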

```

# GRADED FUNCTION: backward_propagation

def backward_propagation(parameters, cache, X, Y):
    """
    Implement the backward propagation using the instructions above.

    Arguments:
    parameters -- python dictionary containing our parameters
    cache -- a dictionary containing "Z1", "A1", "Z2" and "A2".
    X -- input data of shape (2, number of examples)
    Y -- "true" labels vector of shape (1, number of examples)

    Returns:
    grads -- python dictionary containing your gradients with respect to different parameters
    """
    m = X.shape[1]

    # First, retrieve W1 and W2 from the dictionary "parameters".
    ### START CODE HERE ### (≈ 2 lines of code)
    W1 = None
    W2 = None
    ### END CODE HERE ###

    # Retrieve also A1 and A2 from dictionary "cache".
    ### START CODE HERE ### (≈ 2 lines of code)
    A1 = None
    A2 = None
    ### END CODE HERE ###

    # Backward propagation: calculate dW1, db1, dW2, db2.
    ### START CODE HERE ### (≈ 6 lines of code, corresponding to 6 equations on slide above)
    dZ2 = None
    dW2 = None
    db2 = None
    dZ1 = None
    dW1 = None
    db1 = None
    ### END CODE HERE ###

    grads = {"dW1": dW1,
             "db1": db1,
             "dW2": dW2,
             "db2": db2}

    return grads

parameters, cache, X_assess, Y_assess = backward_propagation_test_case()

grads = backward_propagation(parameters, cache, X_assess, Y_assess)

print ("dW1 = "+ str(grads["dW1"]))

print ("db1 = "+ str(grads["db1"]))

print ("dW2 = "+ str(grads["dW2"]))

print ("db2 = "+ str(grads["db2"]))

```

**Expected output**:

<table style="width:80%">

<tr>

<td>**dW1**</td>

<td> [[ 0.00301023 -0.00747267]

[ 0.00257968 -0.00641288]

[-0.00156892 0.003893 ]

[-0.00652037 0.01618243]] </td>

</tr>

<tr>

<td>**db1**</td>

<td> [[ 0.00176201]

[ 0.00150995]

[-0.00091736]

[-0.00381422]] </td>

</tr>

<tr>

<td>**dW2**</td>

<td> [[ 0.00078841 0.01765429 -0.00084166 -0.01022527]] </td>

</tr>

<tr>

<td>**db2**</td>

<td> [[-0.16655712]] </td>

</tr>

</table>

**Question**: Implement the update rule. Use gradient descent. You have to use (dW1, db1, dW2, db2) in order to update (W1, b1, W2, b2).

**General gradient descent rule**: $ \theta = \theta - \alpha \frac{\partial J }{ \partial \theta }$ where $\alpha$ is the learning rate and $\theta$ represents a parameter.

**Illustration**: The gradient descent algorithm with a good learning rate (converging) and a bad learning rate (diverging). Images courtesy of Adam Harley.

<img src="images/sgd.gif" style="width:400px;height:400px;"> <img src="images/sgd_bad.gif" style="width:400px;height:400px;">
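The converging and diverging behaviours can be reproduced on a one-parameter toy problem. The sketch below assumes the cost $J(\theta) = \theta^2$, whose gradient is $2\theta$; the function name `run_gd` and both learning rates are illustrative choices, not part of the assignment.

```python
def run_gd(theta0, learning_rate, steps):
    """Apply theta = theta - learning_rate * dJ/dtheta for J(theta) = theta**2."""
    theta = theta0
    for _ in range(steps):
        grad = 2 * theta                 # dJ/dtheta for J = theta^2
        theta = theta - learning_rate * grad
    return theta

good = run_gd(1.0, learning_rate=0.1, steps=20)   # each step multiplies theta by 0.8
bad = run_gd(1.0, learning_rate=1.1, steps=20)    # each step multiplies theta by -1.2

print(abs(good), abs(bad))   # the first shrinks toward 0, the second grows
```

With the small rate, every update moves $\theta$ closer to the minimum at 0. With the large rate, every update overshoots the minimum by more than the previous distance, so the iterates oscillate with growing magnitude.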

```

# GRADED FUNCTION: update_parameters

def update_parameters(parameters, grads, learning_rate = 1.2):
    """
    Updates parameters using the gradient descent update rule given above

    Arguments:
    parameters -- python dictionary containing your parameters
    grads -- python dictionary containing your gradients

    Returns:
    parameters -- python dictionary containing your updated parameters
    """
    # Retrieve each parameter from the dictionary "parameters"
    ### START CODE HERE ### (≈ 4 lines of code)
    W1 = None
    b1 = None
    W2 = None
    b2 = None
    ### END CODE HERE ###

    # Retrieve each gradient from the dictionary "grads"
    ### START CODE HERE ### (≈ 4 lines of code)
    dW1 = None
    db1 = None
    dW2 = None
    db2 = None
    ### END CODE HERE ###

    # Update rule for each parameter
    ### START CODE HERE ### (≈ 4 lines of code)
    W1 = None
    b1 = None
    W2 = None
    b2 = None
    ### END CODE HERE ###

    parameters = {"W1": W1,
                  "b1": b1,
                  "W2": W2,
                  "b2": b2}

    return parameters

parameters, grads = update_parameters_test_case()

parameters = update_parameters(parameters, grads)

print("W1 = " + str(parameters["W1"]))

print("b1 = " + str(parameters["b1"]))

print("W2 = " + str(parameters["W2"]))

print("b2 = " + str(parameters["b2"]))

```

**Expected Output**:

<table style="width:80%">

<tr>

<td>**W1**</td>

<td> [[-0.00643025 0.01936718]

[-0.02410458 0.03978052]

[-0.01653973 -0.02096177]

[ 0.01046864 -0.05990141]]</td>

</tr>

<tr>

<td>**b1**</td>

<td> [[ -1.02420756e-06]

[ 1.27373948e-05]

[ 8.32996807e-07]

[ -3.20136836e-06]]</td>

</tr>

<tr>

<td>**W2**</td>

<td> [[-0.01041081 -0.04463285 0.01758031 0.04747113]] </td>

</tr>

<tr>

<td>**b2**</td>

<td> [[ 0.00010457]] </td>

</tr>

</table>

### 4.4 - Integrate parts 4.1, 4.2 and 4.3 in nn_model() ####

**Question**: Build your neural network model in `nn_model()`.

**Instructions**: The neural network model has to use the previous functions in the right order.

```

# GRADED FUNCTION: nn_model

def nn_model(X, Y, n_h, num_iterations = 10000, print_cost=False):
    """
    Arguments:
    X -- dataset of shape (2, number of examples)
    Y -- labels of shape (1, number of examples)
    n_h -- size of the hidden layer
    num_iterations -- Number of iterations in gradient descent loop
    print_cost -- if True, print the cost every 1000 iterations

    Returns:
    parameters -- parameters learnt by the model. They can then be used to predict.
    """

    np.random.seed(3)
    n_x = layer_sizes(X, Y)[0]
    n_y = layer_sizes(X, Y)[2]

    # Initialize parameters
    ### START CODE HERE ### (≈ 1 line of code)
    parameters = None
    ### END CODE HERE ###

    # Loop (gradient descent)

    for i in range(0, num_iterations):

        ### START CODE HERE ### (≈ 4 lines of code)
        # Forward propagation. Inputs: "X, parameters". Outputs: "A2, cache".
        A2, cache = None

        # Cost function. Inputs: "A2, Y, parameters". Outputs: "cost".
        cost = None

        # Backpropagation. Inputs: "parameters, cache, X, Y". Outputs: "grads".
        grads = None

        # Gradient descent parameter update. Inputs: "parameters, grads". Outputs: "parameters".
        parameters = None

        ### END CODE HERE ###

        # Print the cost every 1000 iterations
        if print_cost and i % 1000 == 0:
            print ("Cost after iteration %i: %f" %(i, cost))

    return parameters

X_assess, Y_assess = nn_model_test_case()

parameters = nn_model(X_assess, Y_assess, 4, num_iterations=10000, print_cost=True)

print("W1 = " + str(parameters["W1"]))

print("b1 = " + str(parameters["b1"]))

print("W2 = " + str(parameters["W2"]))

print("b2 = " + str(parameters["b2"]))

```

**Expected Output**:

<table style="width:90%">

<tr>

<td>

**cost after iteration 0**

</td>

<td>

0.692739

</td>

</tr>

<tr>

<td>

<center> $\vdots$ </center>

</td>

<td>

<center> $\vdots$ </center>

</td>

</tr>

<tr>

<td>**W1**</td>

<td> [[-0.65848169 1.21866811]

[-0.76204273 1.39377573]

[ 0.5792005 -1.10397703]

[ 0.76773391 -1.41477129]]</td>

</tr>

<tr>

<td>**b1**</td>

<td> [[ 0.287592 ]

[ 0.3511264 ]

[-0.2431246 ]

[-0.35772805]] </td>

</tr>

<tr>

<td>**W2**</td>

<td> [[-2.45566237 -3.27042274 2.00784958 3.36773273]] </td>

</tr>

<tr>

<td>**b2**</td>

<td> [[ 0.20459656]] </td>

</tr>

</table>

### 4.5 Predictions

**Question**: Use your model to predict by building predict().

Use forward propagation to predict results.

**Reminder**: predictions = $y_{prediction} = \mathbb 1 \text{{activation > 0.5}} = \begin{cases}

1 & \text{if}\ activation > 0.5 \\

0 & \text{otherwise}

\end{cases}$

As an example, to set the entries of a matrix X to 0 and 1 based on a threshold, you would write `X_new = (X > threshold)`.
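A small sketch of that comparison on made-up probabilities (the name `probs` and its values are assumptions for illustration). The comparison itself produces booleans; `astype(int)` is one way to turn them into 0/1 integers if needed:

```python
import numpy as np

probs = np.array([[0.2, 0.5, 0.7, 0.91]])   # hypothetical activations

mask = probs > 0.5           # boolean array; 0.5 itself is not strictly greater than 0.5
as_int = mask.astype(int)    # the same information as 0/1 integers

print(mask)
print(as_int)
```

Boolean arrays already behave like 0 and 1 in arithmetic such as `np.mean` or `np.dot`, so the conversion is optional in many cases.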

```

# GRADED FUNCTION: predict

def predict(parameters, X):
    """
    Using the learned parameters, predicts a class for each example in X

    Arguments:
    parameters -- python dictionary containing your parameters
    X -- input data of size (n_x, m)

    Returns:
    predictions -- vector of predictions of our model (red: 0 / blue: 1)
    """

    # Computes probabilities using forward propagation, and classifies to 0/1 using 0.5 as the threshold.
    ### START CODE HERE ### (≈ 2 lines of code)
    A2, cache = None
    predictions = None
    ### END CODE HERE ###

    return predictions

parameters, X_assess = predict_test_case()

predictions = predict(parameters, X_assess)

print("predictions mean = " + str(np.mean(predictions)))

```

**Expected Output**:

<table style="width:40%">

<tr>

<td>**predictions mean**</td>

<td> 0.666666666667 </td>

</tr>

</table>

It is time to run the model and see how it performs on a planar dataset. Run the following code to test your model with a single hidden layer of $n_h$ hidden units.

```

# Build a model with a n_h-dimensional hidden layer

parameters = nn_model(X, Y, n_h = 4, num_iterations = 10000, print_cost=True)

# Plot the decision boundary

plot_decision_boundary(lambda x: predict(parameters, x.T), X, Y)

plt.title("Decision Boundary for hidden layer size " + str(4))

```

**Expected Output**:

<table style="width:40%">

<tr>

<td>**Cost after iteration 9000**</td>

<td> 0.218607 </td>

</tr>

</table>

```

# Print accuracy

predictions = predict(parameters, X)

print ('Accuracy: %d' % float((np.dot(Y,predictions.T) + np.dot(1-Y,1-predictions.T))/float(Y.size)*100) + '%')

```

**Expected Output**:

<table style="width:15%">

<tr>

<td>**Accuracy**</td>

<td> 90% </td>

</tr>

</table>

Accuracy is really high compared to Logistic Regression. The model has learnt the leaf patterns of the flower! Neural networks are able to learn even highly non-linear decision boundaries, unlike logistic regression.

Now, let's try out several hidden layer sizes.

### 4.6 - Tuning hidden layer size (optional/ungraded exercise) ###

Run the following code. It may take 1-2 minutes. You will observe different behaviors of the model for various hidden layer sizes.

```

# This may take about 2 minutes to run

plt.figure(figsize=(16, 32))

hidden_layer_sizes = [1, 2, 3, 4, 5, 20, 50]

for i, n_h in enumerate(hidden_layer_sizes):
    plt.subplot(5, 2, i+1)
    plt.title('Hidden Layer of size %d' % n_h)
    parameters = nn_model(X, Y, n_h, num_iterations = 5000)
    plot_decision_boundary(lambda x: predict(parameters, x.T), X, Y)
    predictions = predict(parameters, X)
    accuracy = float((np.dot(Y,predictions.T) + np.dot(1-Y,1-predictions.T))/float(Y.size)*100)
    print ("Accuracy for {} hidden units: {} %".format(n_h, accuracy))

```

**Interpretation**:

- The larger models (with more hidden units) are able to fit the training set better, until eventually the largest models overfit the data.

- The best hidden layer size seems to be around n_h = 5. Indeed, a value around here seems to fit the data well without incurring noticeable overfitting.

- You will also learn later about regularization, which lets you use very large models (such as n_h = 50) without much overfitting.

**Optional questions**:

**Note**: Remember to submit the assignment by clicking the blue "Submit Assignment" button at the upper-right.

Some optional/ungraded questions that you can explore if you wish:

- What happens when you change the tanh activation for a sigmoid activation or a ReLU activation?

- Play with the learning_rate. What happens?

- What if we change the dataset? (See part 5 below!)

<font color='blue'>

**You've learnt to:**

- Build a complete neural network with a hidden layer

- Make good use of a non-linear unit

- Implement forward propagation and backpropagation, and train a neural network

- See the impact of varying the hidden layer size, including overfitting.

Nice work!

## 5 - Performance on other datasets

If you want, you can rerun the whole notebook (minus the dataset part) for each of the following datasets.

```

# Datasets

noisy_circles, noisy_moons, blobs, gaussian_quantiles, no_structure = load_extra_datasets()

datasets = {"noisy_circles": noisy_circles,

"noisy_moons": noisy_moons,

"blobs": blobs,

"gaussian_quantiles": gaussian_quantiles}

### START CODE HERE ### (choose your dataset)

dataset = "noisy_moons"

### END CODE HERE ###

X, Y = datasets[dataset]

X, Y = X.T, Y.reshape(1, Y.shape[0])

# make blobs binary

if dataset == "blobs":
    Y = Y%2

# Visualize the data

plt.scatter(X[0, :], X[1, :], c=Y, s=40, cmap=plt.cm.Spectral);

```

Congrats on finishing this Programming Assignment!

Reference:

- http://scs.ryerson.ca/~aharley/neural-networks/

- http://cs231n.github.io/neural-networks-case-study/


<a href="https://cognitiveclass.ai/">

<img src="https://s3-api.us-geo.objectstorage.softlayer.net/cf-courses-data/CognitiveClass/PY0101EN/Ad/CCLog.png" width="200" align="center">

</a>

<h1>2D <code>Numpy</code> in Python</h1>

<p><strong>Welcome!</strong> This notebook will teach you about using <code>Numpy</code> in the Python Programming Language. By the end of this lab, you'll know what <code>Numpy</code> is and how to use basic <code>Numpy</code> operations on 2D arrays.</p>

<h2>Table of Contents</h2>

<div class="alert alert-block alert-info" style="margin-top: 20px">

<ul>

<li><a href="#create">Create a 2D Numpy Array</a></li>

<li><a href="#access">Accessing different elements of a Numpy Array</a></li>

<li><a href="#op">Basic Operations</a></li>

</ul>

<p>

Estimated time needed: <strong>20 min</strong>

</p>

</div>

<hr>

<h2 id="create">Create a 2D Numpy Array</h2>

```

# Import the libraries

import numpy as np

import matplotlib.pyplot as plt

```

Consider the list <code>a</code>, which contains three nested lists **each of equal size**.

```

# Create a list

a = [[11, 12, 13], [21, 22, 23], [31, 32, 33]]

a

```

We can cast the list to a Numpy Array as follows:

```

# Convert list to Numpy Array

# Every element is the same type

A = np.array(a)

A

```

We can use the attribute <code>ndim</code> to obtain the number of axes or dimensions, referred to as the rank.

```

# Show the numpy array dimensions

A.ndim

```

The attribute <code>shape</code> returns a tuple giving the size of each dimension.

```

# Show the numpy array shape

A.shape

```

The total number of elements in the array is given by the attribute <code>size</code>.

```

# Show the numpy array size

A.size

```

<hr>

<h2 id="access">Accessing different elements of a Numpy Array</h2>

We can use square brackets to access the different elements of the array. The correspondence between the square-bracket indices, the nested list, and the rectangular representation is shown in the following figure for a 3x3 array:

<img src="https://s3-api.us-geo.objectstorage.softlayer.net/cf-courses-data/CognitiveClass/PY0101EN/Chapter%205/Images/NumTwoEg.png" width="500" />

We can access the element in the 2nd row and 3rd column as shown in the following figure:

<img src="https://s3-api.us-geo.objectstorage.softlayer.net/cf-courses-data/CognitiveClass/PY0101EN/Chapter%205/Images/NumTwoFT.png" width="400" />

We simply use the square brackets and the indices corresponding to the element we would like:

```

# Access the element on the second row and third column

A[1, 2]

```

We can also use the following notation to obtain the elements:

```

# Access the element on the second row and third column

A[1][2]

```

Consider the elements shown in the following figure

<img src="https://s3-api.us-geo.objectstorage.softlayer.net/cf-courses-data/CognitiveClass/PY0101EN/Chapter%205/Images/NumTwoFF.png" width="400" />

We can access the element as follows

```

# Access the element on the first row and first column

A[0][0]

```

We can also use slicing in numpy arrays. Consider the following figure. We would like to obtain the first two columns in the first row:

<img src="https://s3-api.us-geo.objectstorage.softlayer.net/cf-courses-data/CognitiveClass/PY0101EN/Chapter%205/Images/NumTwoFSF.png" width="400" />

This can be done with the following syntax:

```

# Access the elements in the first row and the first and second columns

A[0][0:2]

```

Similarly, we can obtain the first two rows of the 3rd column as follows:

```

# Access the elements in the first and second rows and the third column

A[0:2, 2]

```

Corresponding to the following figure:

<img src="https://s3-api.us-geo.objectstorage.softlayer.net/cf-courses-data/CognitiveClass/PY0101EN/Chapter%205/Images/NumTwoTST.png" width="400" />
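The two slicing styles used above are not interchangeable once the first index is itself a slice. The following sketch, which uses our own variable names, shows the two slices from this section and one pitfall: chaining a second bracket after a row slice indexes rows again, not columns.

```python
import numpy as np

M = np.array([[11, 12, 13], [21, 22, 23], [31, 32, 33]])

first_row_two_cols = M[0, 0:2]   # row 0, columns 0 and 1
two_rows_third_col = M[0:2, 2]   # rows 0 and 1, column 2

# M[0:2] keeps only two rows, so asking it for row index 2 fails
# instead of returning the third column.
try:
    M[0:2][2]
    chained_ok = True
except IndexError:
    chained_ok = False

print(first_row_two_cols, two_rows_third_col, chained_ok)
```

For this reason the comma form, such as `M[0:2, 2]`, is the safer choice whenever a slice is involved.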

<hr>

<h2 id="op">Basic Operations</h2>

We can also add arrays. The process is identical to matrix addition. Matrix addition of <code>X</code> and <code>Y</code> is shown in the following figure:

<img src="https://s3-api.us-geo.objectstorage.softlayer.net/cf-courses-data/CognitiveClass/PY0101EN/Chapter%205/Images/NumTwoAdd.png" width="500" />

The numpy arrays are given by <code>X</code> and <code>Y</code>:

```

# Create a numpy array X

X = np.array([[1, 0], [0, 1]])

X

# Create a numpy array Y

Y = np.array([[2, 1], [1, 2]])

Y

```

We can add the numpy arrays as follows.

```

# Add X and Y

Z = X + Y

Z

```

Multiplying a numpy array by a scalar is identical to multiplying a matrix by a scalar. If we multiply the matrix <code>Y</code> by the scalar 2, we simply multiply every element in the matrix by 2, as shown in the figure.

<img src="https://s3-api.us-geo.objectstorage.softlayer.net/cf-courses-data/CognitiveClass/PY0101EN/Chapter%205/Images/NumTwoDb.png" width="500" />

We can perform the same operation in numpy as follows:

```

# Create a numpy array Y

Y = np.array([[2, 1], [1, 2]])

Y

# Multiply Y with 2

Z = 2 * Y

Z

```

Multiplication of two arrays corresponds to an element-wise product, also called the Hadamard product. Consider the matrices <code>X</code> and <code>Y</code>. The Hadamard product multiplies the elements that occupy the same position, i.e. the elements contained in the same color boxes. The result is a new matrix of the same size as <code>X</code> and <code>Y</code>, as shown in the following figure.

<img src="https://s3-api.us-geo.objectstorage.softlayer.net/cf-courses-data/CognitiveClass/PY0101EN/Chapter%205/Images/NumTwoMul.png" width="500" />

We can compute the element-wise product of the arrays <code>X</code> and <code>Y</code> as follows:

```

# Create a numpy array Y

Y = np.array([[2, 1], [1, 2]])

Y

# Create a numpy array X

X = np.array([[1, 0], [0, 1]])

X

# Multiply X with Y

Z = X * Y

Z

```
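As a small check on the element-wise product, the sketch below confirms that the `*` operator gives the same result as the function `np.multiply` and that the order of the operands does not matter (the names `elementwise` and `via_function` are ours):

```python
import numpy as np

X = np.array([[1, 0], [0, 1]])
Y = np.array([[2, 1], [1, 2]])

elementwise = X * Y                 # multiplies entries in matching positions
via_function = np.multiply(X, Y)    # function form of the same operation

# The element-wise product is commutative
commutes = np.array_equal(X * Y, Y * X)

print(elementwise)
print(np.array_equal(elementwise, via_function), commutes)
```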

We can also perform matrix multiplication with numpy arrays. First, we define the matrices <code>A</code> and <code>B</code>:

```

# Create a matrix A

A = np.array([[0, 1, 1], [1, 0, 1]])

A

# Create a matrix B

B = np.array([[1, 1], [1, 1], [-1, 1]])

B

```

We use the numpy function <code>dot</code> to compute the matrix product of the two arrays.

```

# Calculate the dot product

Z = np.dot(A,B)

Z

# Calculate the sine of Z

np.sin(Z)

```
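Matrix multiplication requires the inner dimensions to agree: a 2x3 array times a 3x2 array gives a 2x2 result. The sketch below rebuilds the same two matrices under our own names (`left`, `right`) and also shows the `@` operator, which for 2D arrays computes the same product as `np.dot`:

```python
import numpy as np

left = np.array([[0, 1, 1], [1, 0, 1]])        # shape (2, 3)
right = np.array([[1, 1], [1, 1], [-1, 1]])    # shape (3, 2)

# (2, 3) times (3, 2) gives (2, 2)
product = np.dot(left, right)
same_product = left @ right    # @ is the matrix-multiplication operator

# Reversing the order gives a different, 3x3 result
reversed_product = np.dot(right, left)

print(product.shape, reversed_product.shape)
print(np.array_equal(product, same_product))
```

Unlike the element-wise product, the matrix product depends on the order of its operands.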

We use the numpy attribute <code>T</code> to obtain the transposed matrix:

```

# Create a matrix C

C = np.array([[1,1],[2,2],[3,3]])

C

# Get the transpose of C

C.T

```
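Transposing swaps the two axes, so a 3x2 array becomes 2x3 and entry (i, j) of the result is entry (j, i) of the original. A minimal sketch that verifies both properties (the names `Ct`, `swapped` and `round_trip` are illustrative):

```python
import numpy as np

C = np.array([[1, 1], [2, 2], [3, 3]])   # shape (3, 2)
Ct = C.T                                  # shape (2, 3)

# Entry (i, j) of the transpose equals entry (j, i) of the original
swapped = all(Ct[i, j] == C[j, i] for i in range(2) for j in range(3))

# Transposing twice recovers the original array
round_trip = np.array_equal(Ct.T, C)

print(C.shape, Ct.shape, swapped, round_trip)
```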

<hr>

<h2>The last exercise!</h2>

<p>Congratulations, you have completed your first lesson and hands-on lab in Python. However, there is one more thing you need to do. The Data Science community encourages sharing work. The best way to share and showcase your work is to share it on GitHub. By sharing your notebook on GitHub you are not only building your reputation with fellow data scientists, but you can also show it off when applying for a job. Even though this was your first piece of work, it is never too early to start building good habits. So, please read and follow <a href="https://cognitiveclass.ai/blog/data-scientists-stand-out-by-sharing-your-notebooks/" target="_blank">this article</a> to learn how to share your work.</p>

<hr>

<h3>About the Authors:</h3>

<p><a href="https://www.linkedin.com/in/joseph-s-50398b136/" target="_blank">Joseph Santarcangelo</a> is a Data Scientist at IBM, and holds a PhD in Electrical Engineering. His research focused on using Machine Learning, Signal Processing, and Computer Vision to determine how videos impact human cognition. Joseph has been working for IBM since he completed his PhD.</p>

Other contributors: <a href="www.linkedin.com/in/jiahui-mavis-zhou-a4537814a">Mavis Zhou</a>

<hr>

<p>Copyright © 2018 IBM Developer Skills Network. This notebook and its source code are released under the terms of the <a href="https://cognitiveclass.ai/mit-license/">MIT License</a>.</p>

| github_jupyter |

```

import scipy.special as sps

import pyro

import pyro.distributions as dist

import torch

from torch.distributions import constraints

from pyro.infer import MCMC, NUTS

from scipy.stats import norm

from torch import nn

from pyro.infer.autoguide import AutoDiagonalNormal

from pyro.nn import PyroModule

from pyro import optim

from pyro.infer import SVI, Trace_ELBO

from pyro.nn import PyroSample

from pyro.infer import Predictive

pyro.enable_validation(True)

pyro.set_rng_seed(1)

import os

import pandas as pd

import numpy as np

from matplotlib import pyplot as plt

from mpl_toolkits.mplot3d import Axes3D

import statsmodels.api as sm

import statsmodels

#import HNSCC_analysis_pipeline_lib as lib

import pickle as pkl

import seaborn as sbn

print(pyro.__version__)

assert pyro.__version__.startswith('1.1.0')

import time

from __future__ import print_function

from ipywidgets import interact, interactive, fixed, interact_manual

import ipywidgets as widgets

from scipy.stats import norm, gamma, poisson, beta

%matplotlib inline

```

# Hill-Langmuir Bayesian Regression

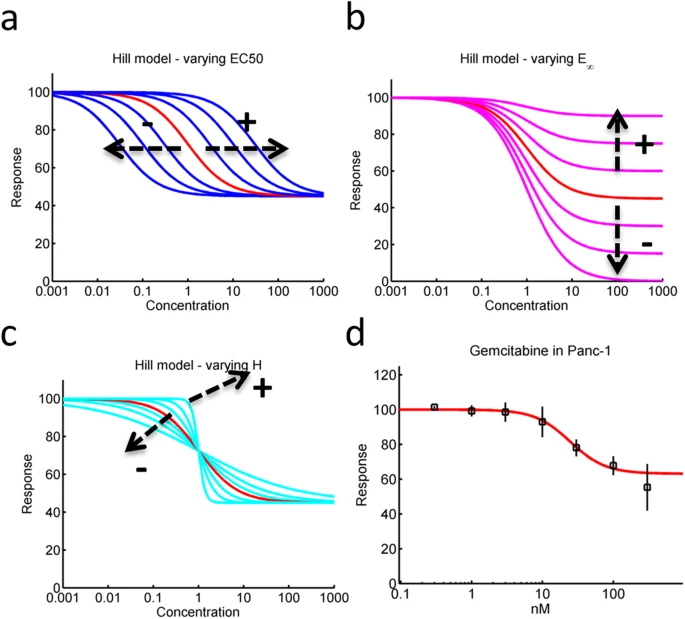

Goals similar to: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3773943/pdf/nihms187302.pdf

However, they use a different parameterization that does not include $E_{max}$.

# Bayesian Hill Model Regression

The Hill model is defined as:

$$ F(c, E_{max}, E_0, EC_{50}, H) = E_0 + \frac{E_{max} - E_0}{1 + \left(\frac{EC_{50}}{c}\right)^H} $$

where the concentration $c$ is in µM and is *not* in log space.
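To make the formula concrete, here is a minimal NumPy sketch of the Hill function as written above. The function name `hill`, the argument names, and the parameter values are illustrative assumptions, not taken from the notebook; the concentration is assumed to be strictly positive and on a linear scale.

```python
import numpy as np

def hill(c, e_max, e_0, ec_50, h):
    """Hill response at concentration c (linear scale, c > 0)."""
    c = np.asarray(c, dtype=float)
    return e_0 + (e_max - e_0) / (1.0 + (ec_50 / c) ** h)

# Illustrative values only: response falls from e_0 toward e_max
conc = np.array([0.01, 1.0, 100.0])
resp = hill(conc, e_max=0.1, e_0=1.0, ec_50=1.0, h=1.0)

# At c == ec_50 the response is halfway between e_0 and e_max
midpoint = hill(1.0, e_max=0.1, e_0=1.0, ec_50=1.0, h=1.0)

print(resp, midpoint)
```

As $c \to 0$ the response approaches $E_0$, and as $c \to \infty$ it approaches $E_{max}$, which is a quick sanity check for any implementation of this parameterization.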