text

stringlengths 2.5k

6.39M

| kind

stringclasses 3

values |

|---|---|

### Introduction

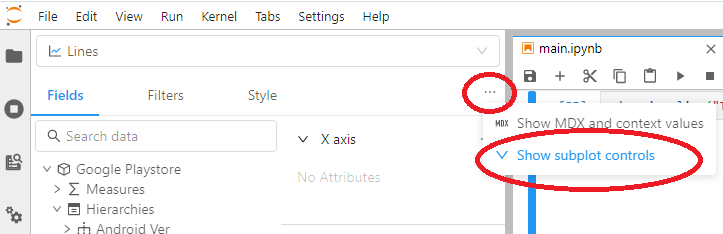

The `Lines` object provides the following features:

1. Ability to plot a single set or multiple sets of y-values as a function of a set or multiple sets of x-values

2. Ability to style the line object in different ways, by setting different attributes such as the `colors`, `line_style`, `stroke_width` etc.

3. Ability to specify a marker at each point passed to the line. The marker can be a shape which is at the data points between which the line is interpolated and can be set through the `markers` attribute

The `Lines` object has the following attributes

| Attribute | Description | Default Value |

|:-:|---|:-:|

| `colors` | Sets the color of each line, takes as input a list of any RGB, HEX, or HTML color name | `CATEGORY10` |

| `opacities` | Controls the opacity of each line, takes as input a real number between 0 and 1 | `1.0` |

| `stroke_width` | Real number which sets the width of all paths | `2.0` |

| `line_style` | Specifies whether a line is solid, dashed, dotted or both dashed and dotted | `'solid'` |

| `interpolation` | Sets the type of interpolation between two points | `'linear'` |

| `marker` | Specifies the shape of the marker inserted at each data point | `None` |

| `marker_size` | Controls the size of the marker, takes as input a non-negative integer | `64` |

|`close_path`| Controls whether to close the paths or not | `False` |

|`fill`| Specifies in which way the paths are filled. Can be set to one of `{'none', 'bottom', 'top', 'inside'}`| `None` |

|`fill_colors`| `List` that specifies the `fill` colors of each path | `[]` |

| **Data Attribute** | **Description** | **Default Value** |

|`x` |abscissas of the data points | `array([])` |

|`y` |ordinates of the data points | `array([])` |

|`color` | Data according to which the `Lines` will be colored. Setting it to `None` defaults the choice of colors to the `colors` attribute | `None` |

## pyplot's plot method can be used to plot lines with meaningful defaults

```

import numpy as np

from pandas import date_range

import bqplot.pyplot as plt

from bqplot import *

security_1 = np.cumsum(np.random.randn(150)) + 100.

security_2 = np.cumsum(np.random.randn(150)) + 100.

```

## Basic Line Chart

```

fig = plt.figure(title='Security 1')

axes_options = {'x': {'label': 'Index'}, 'y': {'label': 'Price'}}

# x values default to range of values when not specified

line = plt.plot(security_1, axes_options=axes_options)

fig

```

**We can explore the different attributes by changing each of them for the plot above:**

```

line.colors = ['DarkOrange']

```

In a similar way, we can also change any attribute after the plot has been displayed to change the plot. Run each of the cells below, and try changing the attributes to explore the different features and how they affect the plot.

```

# The opacity allows us to display the Line while featuring other Marks that may be on the Figure

line.opacities = [.5]

line.stroke_width = 2.5

```

To switch to an area chart, set the `fill` attribute, and control the look with `fill_opacities` and `fill_colors`.

```

line.fill = 'bottom'

line.fill_opacities = [0.2]

line.line_style = 'dashed'

line.interpolation = 'basis'

```

While a `Lines` plot allows the user to extract the general shape of the data being plotted, there may be a need to visualize discrete data points along with this shape. This is where the `markers` attribute comes in.

```

line.marker = 'triangle-down'

```

The `marker` attributes accepts the values `square`, `circle`, `cross`, `diamond`, `square`, `triangle-down`, `triangle-up`, `arrow`, `rectangle`, `ellipse`. Try changing the string above and re-running the cell to see how each `marker` type looks.

## Plotting a Time-Series

The `DateScale` allows us to plot time series as a `Lines` plot conveniently with most `date` formats.

```

# Here we define the dates we would like to use

dates = date_range(start='01-01-2007', periods=150)

fig = plt.figure(title='Time Series')

axes_options = {'x': {'label': 'Date'}, 'y': {'label': 'Security 1'}}

time_series = plt.plot(dates, security_1,

axes_options=axes_options)

fig

```

## Plotting multiples sets of data

The `Lines` mark allows the user to plot multiple `y`-values for a single `x`-value. This can be done by passing an `ndarray` or a list of the different `y`-values as the y-attribute of the `Lines` as shown below.

```

dates_new = date_range(start='06-01-2007', periods=150)

```

We pass each data set as an element of a `list`

```

fig = plt.figure()

axes_options = {'x': {'label': 'Date'}, 'y': {'label': 'Price'}}

line = plt.plot(dates, [security_1, security_2],

labels=['Security 1', 'Security 2'],

axes_options=axes_options,

display_legend=True)

fig

```

Similarly, we can also pass multiple `x`-values for multiple sets of `y`-values

```

line.x, line.y = [dates, dates_new], [security_1, security_2]

```

### Coloring Lines according to data

The `color` attribute of a `Lines` mark can also be used to encode one more dimension of data. Suppose we have a portfolio of securities and we would like to color them based on whether we have bought or sold them. We can use the `color` attribute to encode this information.

```

fig = plt.figure()

axes_options = {'x': {'label': 'Date'},

'y': {'label': 'Security 1'},

'color' : {'visible': False}}

# add a custom color scale to color the lines

plt.scales(scales={'color': ColorScale(colors=['Red', 'Green'])})

dates_color = date_range(start='06-01-2007', periods=150)

securities = 100. + np.cumsum(np.random.randn(150, 10), axis=0)

# we generate 10 random price series and 10 random positions

positions = np.random.randint(0, 2, size=10)

# We pass the color scale and the color data to the plot method

line = plt.plot(dates_color, securities.T, color=positions,

axes_options=axes_options)

fig

```

We can also reset the colors of the Line to their defaults by setting the `color` attribute to `None`.

```

line.color = None

```

## Patches

The `fill` attribute of the `Lines` mark allows us to fill a path in different ways, while the `fill_colors` attribute lets us control the color of the `fill`

```

fig = plt.figure(animation_duration=1000)

patch = plt.plot([],[],

fill_colors=['orange', 'blue', 'red'],

fill='inside',

axes_options={'x': {'visible': False}, 'y': {'visible': False}},

stroke_width=10,

close_path=True,

display_legend=True)

patch.x = [[0, 2, 1.2, np.nan, np.nan, np.nan, np.nan], [0.5, 2.5, 1.7 , np.nan, np.nan, np.nan, np.nan], [4, 5, 6, 6, 5, 4, 3]],

patch.y = [[0, 0, 1 , np.nan, np.nan, np.nan, np.nan], [0.5, 0.5, -0.5, np.nan, np.nan, np.nan, np.nan], [1, 1.1, 1.2, 2.3, 2.2, 2.7, 1.0]]

fig

patch.opacities = [0.1, 0.2]

patch.x = [[2, 3, 3.2, np.nan, np.nan, np.nan, np.nan], [0.5, 2.5, 1.7, np.nan, np.nan, np.nan, np.nan], [4,5,6, 6, 5, 4, 3]]

patch.close_path = False

```

|

github_jupyter

|

# Clean-Label Feature Collision Attacks on a Keras Classifier

In this notebook, we will learn how to use ART to run a clean-label feature collision poisoning attack on a neural network trained with Keras. We will be training our data on a subset of the CIFAR-10 dataset. The methods described are derived from [this paper](https://arxiv.org/abs/1804.00792) by Shafahi, Huang, et. al. 2018.

```

import os, sys

from os.path import abspath

module_path = os.path.abspath(os.path.join('..'))

if module_path not in sys.path:

sys.path.append(module_path)

import warnings

warnings.filterwarnings('ignore')

from keras.models import load_model

from art import config

from art.utils import load_dataset, get_file

from art.estimators.classification import KerasClassifier

from art.attacks.poisoning import FeatureCollisionAttack

import numpy as np

%matplotlib inline

import matplotlib.pyplot as plt

np.random.seed(301)

(x_train, y_train), (x_test, y_test), min_, max_ = load_dataset('cifar10')

num_samples_train = 1000

num_samples_test = 1000

x_train = x_train[0:num_samples_train]

y_train = y_train[0:num_samples_train]

x_test = x_test[0:num_samples_test]

y_test = y_test[0:num_samples_test]

class_descr = ['airplane', 'automobile', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']

```

## Load Model to be Attacked

In this example, we using a RESNET50 model pretrained on the CIFAR dataset.

```

path = get_file('cifar_alexnet.h5',extract=False, path=config.ART_DATA_PATH,

url='https://www.dropbox.com/s/ta75pl4krya5djj/cifar_alexnet.h5?dl=1')

classifier_model = load_model(path)

classifier = KerasClassifier(clip_values=(min_, max_), model=classifier_model, use_logits=False,

preprocessing=(0.5, 1))

```

## Choose Target Image from Test Set

```

target_class = "bird" # one of ['airplane', 'automobile', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']

target_label = np.zeros(len(class_descr))

target_label[class_descr.index(target_class)] = 1

target_instance = np.expand_dims(x_test[np.argmax(y_test, axis=1) == class_descr.index(target_class)][3], axis=0)

fig = plt.imshow(target_instance[0])

print('true_class: ' + target_class)

print('predicted_class: ' + class_descr[np.argmax(classifier.predict(target_instance), axis=1)[0]])

feature_layer = classifier.layer_names[-2]

```

## Poison Training Images to Misclassify Test

The attacker wants to make it such that whenever a prediction is made on this particular cat the output will be a horse.

```

base_class = "frog" # one of ['airplane', 'automobile', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']

base_idxs = np.argmax(y_test, axis=1) == class_descr.index(base_class)

base_instances = np.copy(x_test[base_idxs][:10])

base_labels = y_test[base_idxs][:10]

x_test_pred = np.argmax(classifier.predict(base_instances), axis=1)

nb_correct_pred = np.sum(x_test_pred == np.argmax(base_labels, axis=1))

print("New test data to be poisoned (10 images):")

print("Correctly classified: {}".format(nb_correct_pred))

print("Incorrectly classified: {}".format(10-nb_correct_pred))

plt.figure(figsize=(10,10))

for i in range(0, 9):

pred_label, true_label = class_descr[x_test_pred[i]], class_descr[np.argmax(base_labels[i])]

plt.subplot(330 + 1 + i)

fig=plt.imshow(base_instances[i])

fig.axes.get_xaxis().set_visible(False)

fig.axes.get_yaxis().set_visible(False)

fig.axes.text(0.5, -0.1, pred_label + " (" + true_label + ")", fontsize=12, transform=fig.axes.transAxes,

horizontalalignment='center')

```

The captions on the images can be read: `predicted label (true label)`

## Creating Poison Frogs

```

attack = FeatureCollisionAttack(classifier, target_instance, feature_layer, max_iter=10, similarity_coeff=256, watermark=0.3)

poison, poison_labels = attack.poison(base_instances)

poison_pred = np.argmax(classifier.predict(poison), axis=1)

plt.figure(figsize=(10,10))

for i in range(0, 9):

pred_label, true_label = class_descr[poison_pred[i]], class_descr[np.argmax(poison_labels[i])]

plt.subplot(330 + 1 + i)

fig=plt.imshow(poison[i])

fig.axes.get_xaxis().set_visible(False)

fig.axes.get_yaxis().set_visible(False)

fig.axes.text(0.5, -0.1, pred_label + " (" + true_label + ")", fontsize=12, transform=fig.axes.transAxes,

horizontalalignment='center')

```

Notice how the network classifies most of theses poison examples as frogs, and it's not incorrect to do so. The examples look mostly froggy. A slight watermark of the target instance is also added to push the poisons closer to the target class in feature space.

## Training with Poison Images

```

classifier.set_learning_phase(True)

print(x_train.shape)

print(base_instances.shape)

adv_train = np.vstack([x_train, poison])

adv_labels = np.vstack([y_train, poison_labels])

classifier.fit(adv_train, adv_labels, nb_epochs=5, batch_size=4)

```

## Fooled Network Misclassifies Bird

```

fig = plt.imshow(target_instance[0])

print('true_class: ' + target_class)

print('predicted_class: ' + class_descr[np.argmax(classifier.predict(target_instance), axis=1)[0]])

```

These attacks allow adversaries who can poison your dataset the ability to mislabel any particular target instance of their choosing without manipulating labels.

|

github_jupyter

|

# Lecture 12: Canonical Economic Models

[Download on GitHub](https://github.com/NumEconCopenhagen/lectures-2022)

[<img src="https://mybinder.org/badge_logo.svg">](https://mybinder.org/v2/gh/NumEconCopenhagen/lectures-2022/master?urlpath=lab/tree/12/Canonical_economic_models.ipynb)

1. [OverLapping Generations (OLG) model](#OverLapping-Generations-(OLG)-model)

2. [Ramsey model](#Ramsey-model)

3. [Further perspectives](#Further-perspectives)

You will learn how to solve **two canonical economic models**:

1. The **overlapping generations (OLG) model**

2. The **Ramsey model**

**Main take-away:** Hopefully inspiration to analyze such models on your own.

```

%load_ext autoreload

%autoreload 2

import numpy as np

from scipy import optimize

# plotting

import matplotlib.pyplot as plt

plt.style.use('seaborn-whitegrid')

plt.rcParams.update({'font.size': 12})

# models

from OLGModel import OLGModelClass

from RamseyModel import RamseyModelClass

```

<a id="OverLapping-Generations-(OLG)-model"></a>

# 1. OverLapping Generations (OLG) model

## 1.1 Model description

**Time:** Discrete and indexed by $t\in\{0,1,\dots\}$.

**Demographics:** Population is constant. A life consists of

two periods, *young* and *old*.

**Households:** As young a household supplies labor exogenously, $L_{t}=1$, and earns a after tax wage $(1-\tau_w)w_{t}$. Consumption as young and old

are denoted by $C_{1t}$ and $C_{2t+1}$. The after-tax return on saving is $(1-\tau_{r})r_{t+1}$. Utility is

$$

\begin{aligned}

U & =\max_{s_{t}\in[0,1]}\frac{C_{1t}^{1-\sigma}}{1-\sigma}+\beta\frac{C_{1t+1}^{1-\sigma}}{1-\sigma},\,\,\,\beta > -1, \sigma > 0\\

& \text{s.t.}\\

& S_{t}=s_{t}(1-\tau_{w})w_{t}\\

& C_{1t}=(1-s_{t})(1-\tau_{w})w_{t}\\

& C_{2t+1}=(1+(1-\tau_{r})r_{t+1})S_{t}

\end{aligned}

$$

The problem is formulated in terms of the saving rate $s_t\in[0,1]$.

**Firms:** Firms rent capital $K_{t-1}$ at the rental rate $r_{t}^{K}$,

and hires labor $E_{t}$ at the wage rate $w_{t}$. Firms have access

to the production function

$$

\begin{aligned}

Y_{t}=F(K_{t-1},E_{t})=(\alpha K_{t-1}^{-\theta}+(1-\alpha)E_{t}^{-\theta})^{\frac{1}{-\theta}},\,\,\,\theta>-1,\alpha\in(0,1)

\end{aligned}

$$

Profits are

$$

\begin{aligned}

\Pi_{t}=Y_{t}-w_{t}E_{t}-r_{t}^{K}K_{t-1}

\end{aligned}

$$

**Government:** Choose public consumption, $G_{t}$, and tax rates $\tau_w \in [0,1]$ and $\tau_r \in [0,1]$. Total tax revenue is

$$

\begin{aligned}

T_{t} &=\tau_r r_{t} (K_{t-1}+B_{t-1})+\tau_w w_{t}

\end{aligned}

$$

Government debt accumulates according to

$$

\begin{aligned}

B_{t} &=(1+r^b_{t})B_{t-1}-T_{t}+G_{t}

\end{aligned}

$$

A *balanced budget* implies $G_{t}=T_{t}-r_{t}B_{t-1}$.

**Capital:** Depreciates with a rate of $\delta \in [0,1]$.

**Equilibrium:**

1. Households maximize utility

2. Firms maximize profits

3. No-arbitrage between bonds and capital

$$

r_{t}=r_{t}^{K}-\delta=r_{t}^{b}

$$

4. Labor market clears: $E_{t}=L_{t}=1$

5. Goods market clears: $Y_{t}=C_{1t}+C_{2t}+G_{t}+I_{t}$

6. Asset market clears: $S_{t}=K_{t}+B_{t}$

7. Capital follows its law of motion: $K_{t}=(1-\delta)K_{t-1}+I_{t}$

**For more details on the OLG model:** See chapter 3-4 [here](https://web.econ.ku.dk/okocg/VM/VM-general/Material/Chapters-VM.htm).

## 1.2 Solution and simulation

**Implication of profit maximization:** From FOCs

$$

\begin{aligned}

r_{t}^{k} & =F_{K}(K_{t-1},E_{t})=\alpha K_{t-1}^{-\theta-1}Y_{t}^{1+\theta}\\

w_{t} & =F_{E}(K_{t-1},E_{t})=(1-\alpha)E_{t}^{-\theta-1}Y_{t}^{1+\theta}

\end{aligned}

$$

**Implication of utility maximization:** From FOC

$$

\begin{aligned}

C_{1t}^{-\sigma}=\beta (1+(1-\tau_r)r_{t+1})C_{2t+1}^{-\sigma}

\end{aligned}

$$

**Simulation algorithm:** At the beginning of period $t$, the

economy can be summarized in the state variables $K_{t-1}$ and $B_{t-1}$. *Before* $s_t$ is known, we can calculate:

$$

\begin{aligned}

Y_{t} & =F(K_{t-1},1)\\

r_{t}^{k} & =F_{K}(K_{t-1},1)\\

w_{t} & =F_{E}(K_{t-1},1)\\

r_{t} & =r^k_{t}-\delta\\

r_{t}^{b} & =r_{t}\\

\tilde{r}_{t} & =(1-\tau_{r})r_{t}\\

C_{2t} & =(1+\tilde{r}_{t})(K_{t-1}+B_{t-1})\\

T_{t} & =\tau_{r}r_{t}(K_{t-1}+B_{t-1})+\tau_{w}w_{t}\\

B_{t} & =(1+r^b_{t})B_{t-1}+T_{t}-G_{t}\\

\end{aligned}

$$

*After* $s_t$ is known we can calculate:

$$

\begin{aligned}

C_{1t} & = (1-s_{t})(1-\tau_{w})w_{t}\\

I_{t} & =Y_{t}-C_{1t}-C_{2t}-G_{t}\\

K_{t} & =(1-\delta)K_{t-1} + I_t

\end{aligned}

$$

**Solution algorithm:** Simulate forward choosing $s_{t}$ so

that we always have

$$

\begin{aligned}

C_{1t}^{-\sigma}=\beta(1+\tilde{r}_{t+1})C_{2t+1}^{-\sigma}

\end{aligned}

$$

**Implementation:**

1. Use a bisection root-finder to determine $s_t$

2. Low $s_t$: A lot of consumption today. Low marginal utility. LHS < RHS.

3. High $s_t$: Little consumption today. High marginal utility. LHS > RHS.

4. Problem: Too low $s_t$ might not be feasible if $B_t > 0$.

**Note:** Never errors in the Euler-equation due to *perfect foresight*.

**Question:** Are all the requirements for the equilibrium satisfied?

## 1.3 Test case

1. Production is Cobb-Douglas ($\theta = 0$)

2. Utility is logarithmic ($\sigma = 1$)

3. The government is not doing anything ($\tau_w=\tau_r=0$, $T_t = G_t = 0$ and $B_t = 0$)

**Analytical steady state:** It can be proven

$$ \lim_{t\rightarrow\infty} K_t = \left(\frac{1-\alpha}{1+1/\beta}\right)^{\frac{1}{1-\alpha}} $$

**Setup:**

```

model = OLGModelClass()

par = model.par # SimpeNamespace

sim = model.sim # SimpeNamespace

# a. production

par.production_function = 'cobb-douglas'

par.theta = 0.0

# b. households

par.sigma = 1.0

# c. government

par.tau_w = 0.0

par.tau_r = 0.0

sim.balanced_budget[:] = True # G changes to achieve this

# d. initial values

K_ss = ((1-par.alpha)/((1+1.0/par.beta)))**(1/(1-par.alpha))

par.K_lag_ini = 0.1*K_ss

```

### Simulate first period manually

```

from OLGModel import simulate_before_s, simulate_after_s, find_s_bracket, calc_euler_error

```

**Make a guess:**

```

s_guess = 0.41

```

**Evaluate first period:**

```

# a. initialize

sim.K_lag[0] = par.K_lag_ini

sim.B_lag[0] = par.B_lag_ini

simulate_before_s(par,sim,t=0)

print(f'{sim.C2[0] = : .4f}')

simulate_after_s(par,sim,s=s_guess,t=0)

print(f'{sim.C1[0] = : .4f}')

simulate_before_s(par,sim,t=1)

print(f'{sim.C2[1] = : .4f}')

print(f'{sim.rt[1] = : .4f}')

LHS_Euler = sim.C1[0]**(-par.sigma)

RHS_Euler = (1+sim.rt[1])*par.beta * sim.C2[1]**(-par.sigma)

print(f'euler-error = {LHS_Euler-RHS_Euler:.8f}')

```

**Implemented as function:**

```

euler_error = calc_euler_error(s_guess,par,sim,t=0)

print(f'euler-error = {euler_error:.8f}')

```

**Find bracket to search in:**

```

s_min,s_max = find_s_bracket(par,sim,t=0,do_print=True);

```

**Call root-finder:**

```

obj = lambda s: calc_euler_error(s,par,sim,t=0)

result = optimize.root_scalar(obj,bracket=(s_min,s_max),method='bisect')

print(result)

```

**Check result:**

```

euler_error = calc_euler_error(result.root,par,sim,t=0)

print(f'euler-error = {euler_error:.8f}')

```

### Full simulation

```

model.simulate()

```

**Check euler-errors:**

```

for t in range(5):

LHS_Euler = sim.C1[t]**(-par.sigma)

RHS_Euler = (1+sim.rt[t+1])*par.beta * sim.C2[t+1]**(-par.sigma)

print(f't = {t:2d}: euler-error = {LHS_Euler-RHS_Euler:.8f}')

```

**Plot and check with analytical solution:**

```

fig = plt.figure(figsize=(6,6/1.5))

ax = fig.add_subplot(1,1,1)

ax.plot(model.sim.K_lag,label=r'$K_{t-1}$')

ax.axhline(K_ss,ls='--',color='black',label='analytical steady state')

ax.legend(frameon=True)

fig.tight_layout()

K_lag_old = model.sim.K_lag.copy()

```

**Task:** Test if the starting point matters?

**Additional check:** Not much should change with only small parameter changes.

```

# a. production (close to cobb-douglas)

par.production_function = 'ces'

par.theta = 0.001

# b. household (close to logarithmic)

par.sigma = 1.1

# c. goverment (weakly active)

par.tau_w = 0.001

par.tau_r = 0.001

# d. simulate

model.simulate()

fig = plt.figure(figsize=(6,6/1.5))

ax = fig.add_subplot(1,1,1)

ax.plot(model.sim.K_lag,label=r'$K_{t-1}$')

ax.plot(K_lag_old,label=r'$K_{t-1}$ ($\theta = 0.0, \sigma = 1.0$, inactive government)')

ax.axhline(K_ss,ls='--',color='black',label='analytical steady state (wrong)')

ax.legend(frameon=True)

fig.tight_layout()

```

## 1.4 Active government

```

model = OLGModelClass()

par = model.par

sim = model.sim

```

**Baseline:**

```

model.simulate()

fig = plt.figure(figsize=(6,6/1.5))

ax = fig.add_subplot(1,1,1)

ax.plot(sim.K_lag/(sim.Y),label=r'$\frac{K_{t-1}}{Y_t}$')

ax.plot(sim.B_lag/(sim.Y),label=r'$\frac{B_{t-1}}{Y_t}$')

ax.legend(frameon=True)

fig.tight_layout()

```

**Remember steady state:**

```

K_ss = sim.K_lag[-1]

B_ss = sim.B_lag[-1]

G_ss = sim.G[-1]

```

**Spending spree of 5% in $T=3$ periods:**

```

# a. start from steady state

par.K_lag_ini = K_ss

par.B_lag_ini = B_ss

# b. spending spree

T0 = 0

dT = 3

sim.G[T0:T0+dT] = 1.05*G_ss

sim.balanced_budget[:T0] = True #G adjusts

sim.balanced_budget[T0:T0+dT] = False # B adjusts

sim.balanced_budget[T0+dT:] = True # G adjusts

```

**Simulate:**

```

model.simulate()

```

**Crowding-out of capital:**

```

fig = plt.figure(figsize=(6,6/1.5))

ax = fig.add_subplot(1,1,1)

ax.plot(sim.K/(sim.Y),label=r'$\frac{K_{t-1}}{Y_t}$')

ax.plot(sim.B/(sim.Y),label=r'$\frac{B_{t-1}}{Y_t}$')

ax.legend(frameon=True)

fig.tight_layout()

```

**Question:** Would the households react today if the spending spree is say 10 periods in the future?

## 1.5 Getting an overview

1. Spend 3 minutes looking at `OLGModel.py`

2. Write one question at [https://b.socrative.com/login/student/](https://b.socrative.com/login/student/) with `ROOM=NUMECON`

## 1.6 Potential analysis and extension

**Potential analysis:**

1. Over-accumulation of capital relative to golden rule?

2. Calibration to actual data

3. Generational inequality

4. Multiple equilibria

**Extensions:**

1. Add population and technology growth

2. More detailed tax and transfer system

3. Utility and productive effect of government consumption/investment

4. Endogenous labor supply

5. Bequest motive

6. Uncertain returns on capital

7. Additional assets (e.g. housing)

8. More than two periods in the life-cycle (life-cycle)

9. More than one dynasty (cross-sectional inequality dynamics)

<a id="Ramsey-model"></a>

# 2. Ramsey model

... also called the Ramsey-Cass-Koopman model.

## 2.1 Model descripton

**Time:** Discrete and indexed by $t\in\{0,1,\dots\}$.

**Demographics::** Population is constant. Everybody lives forever.

**Household:** Households supply labor exogenously, $L_{t}=1$, and earns a wage $w_{t}$. The return on saving is $r_{t+1}$. Utility is

$$

\begin{aligned}

U & =\max_{\{C_{t}\}_{t=0}^{\infty}}\sum_{t=0}^{\infty}\beta^{t}\frac{C_{t}^{1-\sigma}}{1-\sigma},\beta\in(0,1),\sigma>0\\

& \text{s.t.}\\

& M_{t}=(1+r_{t})N_{t-1}+w_{t}\\

& N_{t}=M_{t}-C_{t}

\end{aligned}

$$

where $M_{t}$ is cash-on-hand and $N_{t}$ is end-of-period assets.

**Firms:** Firms rent capital $K_{t-1}$ at the rental rate $r_{t}^{K}$

and hires labor $E_{t}$ at the wage rate $w_{t}$. Firms have access

to the production function

$$

\begin{aligned}

Y_{t}= F(K_{t-1},E_{t})=A_t(\alpha K_{t-1}^{-\theta}+(1-\alpha)E_{t}^{-\theta})^{\frac{1}{-\theta}},\,\,\,\theta>-1,\alpha\in(0,1),A_t>0

\end{aligned}

$$

Profits are

$$

\begin{aligned}

\Pi_{t}=Y_{t}-w_{t}E_{t}-r_{t}^{K}K_{t-1}

\end{aligned}

$$

**Equilibrium:**

1. Households maximize utility

2. Firms maximize profits

3. Labor market clear: $E_{t}=L_{t}=1$

4. Goods market clear: $Y_{t}=C_{t}+I_{t}$

5. Asset market clear: $N_{t}=K_{t}$ and $r_{t}=r_{t}^{k}-\delta$

6. Capital follows its law of motion: $K_{t}=(1-\delta)K_{t-1}+I_{t}$

**Implication of profit maximization:** From FOCs

$$

\begin{aligned}

r_{t}^{k} & = F_{K}(K_{t-1},E_{t})=A_t \alpha K_{t-1}^{-\theta-1}Y_{t}^{-1}\\

w_{t} & = F_{E}(K_{t-1},E_{t})=A_t (1-\alpha)E_{t}^{-\theta-1}Y_{t}^{-1}

\end{aligned}

$$

**Implication of utility maximization:** From FOCs

$$

\begin{aligned}

C_{t}^{-\sigma}=\beta(1+r_{t+1})C_{t+1}^{-\sigma}

\end{aligned}

$$

**Solution algorithm:**

We can summarize the model in the **non-linear equation system**

$$

\begin{aligned}

\boldsymbol{H}(\boldsymbol{K},\boldsymbol{C},K_{-1})=\left[\begin{array}{c}

H_{0}\\

H_{1}\\

\begin{array}{c}

\vdots\end{array}

\end{array}\right]=\left[\begin{array}{c}

0\\

0\\

\begin{array}{c}

\vdots\end{array}

\end{array}\right]

\end{aligned}

$$

where $\boldsymbol{K} = [K_0,K_1\dots]$, $\boldsymbol{C} = [C_0,C_1\dots]$, and

$$

\begin{aligned}

H_{t}

=\left[\begin{array}{c}

C_{t}^{-\sigma}-\beta(1+r_{t+1})C_{t+1}^{-\sigma}\\

K_{t}-[(1-\delta)K_{t-1}+Y_t-C_{t}]

\end{array}\right]

=\left[\begin{array}{c}

C_{t}^{-\sigma}-\beta(1+F_{K}(K_{t},1))C_{t+1}^{-\sigma}\\

K_{t}-[(1-\delta)K_{t-1} + F(K_{t-1},1)-C_{t}])

\end{array}\right]

\end{aligned}

$$

**Path:** We refer to $\boldsymbol{K}$ and $\boldsymbol{C}$ as *transition paths*.

**Implementation:** We solve this equation system in **two steps**:

1. Assume all variables are in steady state after some **truncation horizon**.

1. Calculate the numerical **jacobian** of $\boldsymbol{H}$ wrt. $\boldsymbol{K}$

and $\boldsymbol{C}$ around the steady state

2. Solve the equation system using a **hand-written Broyden-solver**

**Note:** The equation system can also be solved directly using `scipy.optimize.root`.

**Remember:** The jacobian is just a gradient. I.e. the matrix of what the implied errors are in $\boldsymbol{H}$ when a *single* $K_t$ or $C_t$ change.

## 2.2 Solution

```

model = RamseyModelClass()

par = model.par

ss = model.ss

path = model.path

```

**Find steady state:**

1. Target steady-state capital-output ratio, $K_{ss}/Y_{ss}$ of 4.0.

2. Force steady-state output $Y_{ss} = 1$.

3. Adjust $\beta$ and $A_{ss}$ to achieve this.

```

model.find_steady_state(KY_ss=4.0)

```

**Test that errors and the path are 0:**

```

# a. set initial value

par.K_lag_ini = ss.K

# b. set path

path.A[:] = ss.A

path.C[:] = ss.C

path.K[:] = ss.K

# c. check errors

errors_ss = model.evaluate_path_errors()

assert np.allclose(errors_ss,0.0)

model.calculate_jacobian()

```

**Solve:**

```

par.K_lag_ini = 0.50*ss.K # start away from steady state

model.solve() # find transition path

fig = plt.figure(figsize=(6,6/1.5))

ax = fig.add_subplot(1,1,1)

ax.plot(path.K_lag,label=r'$K_{t-1}$')

ax.legend(frameon=True)

fig.tight_layout()

```

## 2.3 Comparison with scipy solution

**Note:** scipy computes the jacobian internally

```

model_scipy = RamseyModelClass()

model_scipy.par.solver = 'scipy'

model_scipy.find_steady_state(KY_ss=4.0)

model_scipy.par.K_lag_ini = 0.50*model_scipy.ss.K

model_scipy.path.A[:] = model_scipy.ss.A

model_scipy.solve()

fig = plt.figure(figsize=(6,6/1.5))

ax = fig.add_subplot(1,1,1)

ax.plot(path.K_lag,label=r'$K_{t-1}$, broyden')

ax.plot(model_scipy.path.K_lag,ls='--',label=r'$K_{t-1}$, scipy')

ax.legend(frameon=True)

fig.tight_layout()

```

## 2.4 Persistent technology shock

**Shock:**

```

par.K_lag_ini = ss.K # start from steady state

path.A[:] = 0.95**np.arange(par.Tpath)*0.1*ss.A + ss.A # shock path

```

**Terminology:** This is called an MIT-shock. Households do not expect shocks. Know the full path of the shock when it arrives. Continue to believe no future shocks will happen.

**Solve:**

```

model.solve()

fig = plt.figure(figsize=(2*6,6/1.5))

ax = fig.add_subplot(1,2,1)

ax.set_title('Capital, $K_{t-1}$')

ax.plot(path.K_lag)

ax = fig.add_subplot(1,2,2)

ax.plot(path.A)

ax.set_title('Technology, $A_t$')

fig.tight_layout()

```

**Question:** Could a much more persistent shock be problematic?

## 2.5 Future persistent technology shock

**Shock happing after period $H$:**

```

par.K_lag_ini = ss.K # start from steady state

# shock

H = 50

path.A[:] = ss.A

path.A[H:] = 0.95**np.arange(par.Tpath-H)*0.1*ss.A + ss.A

```

**Solve:**

```

model.solve()

fig = plt.figure(figsize=(2*6,6/1.5))

ax = fig.add_subplot(1,2,1)

ax.set_title('Capital, $K_{t-1}$')

ax.plot(path.K_lag)

ax = fig.add_subplot(1,2,2)

ax.plot(path.A)

ax.set_title('Technology, $A_t$')

fig.tight_layout()

par.K_lag_ini = path.K[30]

path.A[:] = ss.A

model.solve()

```

**Take-away:** Households are forward looking and responds before the shock hits.

## 2.6 Getting an overview

1. Spend 3 minutes looking at `RamseyModel.py`

2. Write one question at [https://b.socrative.com/login/student/](https://b.socrative.com/login/student/) with `ROOM=NUMECON`

## 2.7 Potential analysis and extension

**Potential analysis:**

1. Different shocks (e.g. discount factor)

2. Multiple shocks

3. Permanent shocks ($\rightarrow$ convergence to new steady state)

4. Transition speed

**Extensions:**

1. Add a government and taxation

2. Endogenous labor supply

3. Additional assets (e.g. housing)

4. Add nominal rigidities (New Keynesian)

<a id="Further-perspectives"></a>

# 3. Further perspectives

**The next steps beyond this course:**

1. The **Bewley-Huggett-Aiyagari** model. A multi-period OLG model or Ramsey model with households making decisions *under uncertainty and borrowing constraints* as in lecture 11 under "dynamic optimization". Such heterogenous agent models are used in state-of-the-art research, see [Quantitative Macroeconomics with Heterogeneous Households](https://www.annualreviews.org/doi/abs/10.1146/annurev.economics.050708.142922).

2. Further adding nominal rigidities this is called a **Heterogenous Agent New Keynesian (HANK)** model. See [Macroeconomics with HANK models](https://drive.google.com/file/d/16Qq7NJ_AZh5NmjPFSrLI42mfT7EsCUeH/view).

3. This extends the **Representative Agent New Keynesian (RANK)** model, which itself is a Ramsey model extended with nominal rigidities.

4. The final frontier is including **aggregate risk**, which either requires linearization or using a **Krussell-Smith method**. Solving the model in *sequence-space* as we did with the Ramsey model is a frontier method (see [here](https://github.com/shade-econ/sequence-jacobian/#sequence-space-jacobian)).

**Next lecture:** Agent Based Models

|

github_jupyter

|

```

##### Copyright 2020 Google LLC.

#@title Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# https://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

```

# We are using NitroML on Kubeflow:

This notebook allows users to analyze NitroML benchmark results.

```

# This notebook assumes you have followed the following steps to setup port-forwarding:

# Step 1: Configure your cluster with gcloud

# `gcloud container clusters get-credentials <cluster_name> --zone <cluster-zone> --project <project-id>

# Step 2: Get the port where the gRPC service is running on the cluster

# `kubectl get configmap metadata-grpc-configmap -o jsonpath={.data}`

# Use `METADATA_GRPC_SERVICE_PORT` in the next step. The default port used is 8080.

# Step 3: Port forwarding

# `kubectl port-forward deployment/metadata-grpc-deployment 9898:<METADATA_GRPC_SERVICE_PORT>`

# Troubleshooting

# If getting error related to Metadata (For examples, Transaction already open). Try restarting the metadata-grpc-service using:

# `kubectl rollout restart deployment metadata-grpc-deployment`

import sys, os

PROJECT_DIR=os.path.join(sys.path[0], '..')

%cd {PROJECT_DIR}

from ml_metadata.proto import metadata_store_pb2

from ml_metadata.metadata_store import metadata_store

from nitroml.benchmark import results

```

## Connect to the ML Metadata (MLMD) database

First we need to connect to our MLMD database which stores the results of our

benchmark runs.

```

connection_config = metadata_store_pb2.MetadataStoreClientConfig()

connection_config.host = 'localhost'

connection_config.port = 9898

store = metadata_store.MetadataStore(connection_config)

```

## Display benchmark results

Next we load and visualize `pd.DataFrame` containing our benchmark results.

These results contain contextual features such as the pipeline ID, and

benchmark metrics as computed by the downstream Evaluators. If your

benchmark included an `EstimatorTrainer` component, its hyperparameters may also

display in the table below.

```

#@markdown ### Choose how to aggregate metrics:

mean = False #@param { type: "boolean" }

stdev = False #@param { type: "boolean" }

min_and_max = False #@param { type: "boolean" }

agg = []

if mean:

agg.append("mean")

if stdev:

agg.append("std")

if min_and_max:

agg += ["min", "max"]

df = results.overview(store, metric_aggregators=agg)

df.head()

```

### We can display an interactive table using qgrid

Please follow the latest instructions on downloading qqgrid package from here: https://github.com/quantopian/qgrid

```

import qgrid

qgid_wdget = qgrid.show_grid(df, show_toolbar=True)

qgid_wdget

```

|

github_jupyter

|

# Group Metrics

The `fairlearn` package contains algorithms which enable machine learning models to minimise disparity between groups. The `metrics` portion of the package provides the means required to verify that the mitigation algorithms are succeeding.

```

import numpy as np

import pandas as pd

import sklearn.metrics as skm

```

## Ungrouped Metrics

At their simplest, metrics take a set of 'true' values $Y_{true}$ (from the input data) and predicted values $Y_{pred}$ (by applying the model to the input data), and use these to compute a measure. For example, the _recall_ or _true positive rate_ is given by

\begin{equation}

P( Y_{pred}=1 | Y_{true}=1 )

\end{equation}

That is, a measure of whether the model finds all the positive cases in the input data. The `scikit-learn` package implements this in [sklearn.metrics.recall_score](https://scikit-learn.org/stable/modules/generated/sklearn.metrics.recall_score.html).

Suppose we have the following data:

```

Y_true = [0, 1, 1, 1, 1, 0, 1, 0, 1, 0, 0, 0, 1, 1, 1, 1]

Y_pred = [0, 0, 1, 0, 1, 1, 1, 0, 0, 1, 1, 1, 1, 0, 0, 1]

```

we can see that the prediction is 1 in five of the ten cases where the true value is 1, so we expect the recall to be 0.0.5:

```

skm.recall_score(Y_true, Y_pred)

```

## Metrics with Grouping

When considering fairness, each row of input data will have an associated group label $g \in G$, and we will want to know how the metric behaves for each $g$. To help with this, Fairlearn provides wrappers, which take an existing (ungrouped) metric function, and apply it to each group within a set of data.

Suppose in addition to the $Y_{true}$ and $Y_{pred}$ above, we had the following set of labels:

```

group_membership_data = ['d', 'a', 'c', 'b', 'b', 'c', 'c', 'c', 'b', 'd', 'c', 'a', 'b', 'd', 'c', 'c']

df = pd.DataFrame({ 'Y_true': Y_true, 'Y_pred': Y_pred, 'group_membership_data': group_membership_data})

df

```

```

import fairlearn.metrics as flm

group_metrics = flm.group_summary(skm.recall_score, Y_true, Y_pred, sensitive_features=group_membership_data, sample_weight=None)

print("Overall recall = ", group_metrics.overall)

print("recall by groups = ", group_metrics.by_group)

```

Note that the overall recall is the same as that calculated above in the Ungrouped Metric section, while the `by_group` dictionary matches the values we calculated by inspection from the table above.

In addition to these basic scores, `fairlearn.metrics` also provides convenience functions to recover the maximum and minimum values of the metric across groups and also the difference and ratio between the maximum and minimum:

```

print("min recall over groups = ", flm.group_min_from_summary(group_metrics))

print("max recall over groups = ", flm.group_max_from_summary(group_metrics))

print("difference in recall = ", flm.difference_from_summary(group_metrics))

print("ratio in recall = ", flm.ratio_from_summary(group_metrics))

```

## Supported Ungrouped Metrics

To be used by `group_summary`, the supplied Python function must take arguments of `y_true` and `y_pred`:

```python

my_metric_func(y_true, y_pred)

```

An additional argument of `sample_weight` is also supported:

```python

my_metric_with_weight(y_true, y_pred, sample_weight=None)

```

The `sample_weight` argument is always invoked by name, and _only_ if the user supplies a `sample_weight` argument.

## Convenience Wrapper

Rather than require a call to `group_summary` each time, we also provide a function which turns an ungrouped metric into a grouped one. This is called `make_metric_group_summary`:

```

recall_score_group_summary = flm.make_metric_group_summary(skm.recall_score)

results = recall_score_group_summary(Y_true, Y_pred, sensitive_features=group_membership_data)

print("Overall recall = ", results.overall)

print("recall by groups = ", results.by_group)

```

|

github_jupyter

|

# The importance of space

Agent based models are useful when the aggregate system behavior emerges out of local interactions amongst the agents. In the model of the evolution of cooperation, we created a set of agents and let all agents play against all other agents. Basically, we pretended as if all our agents were perfectly mixed. In practice, however, it is much more common that agents only interact with some, but not all, other agents. For example, in models of epidemiology, social interactions are a key factors. Thus, interactions are dependend on your social network. In other situations, our behavior might be based on what we see around us. Phenomena like fashion are at least partly driven by seeing what others are doing and mimicking this behavior. The same is true for many animals. Flocking dynamics as exhibited by starling, or shoaling behavior in fish, can be explained by the animal looking at its neirest neighbors and staying within a given distance of them. In agent based models, anything that structures the interaction amongst agents is typically called a space. This space can be a 2d or 3d space with euclidian distances (as in models of flocking and shoaling), it can also be a grid structure (as we will show below), or it can be a network structure.

MESA comes with several spaces that we can readily use. These are

* **SingleGrid;** an 'excel-like' space with each agent occopying a single grid cell

* **MultiGrid;** like grid, but with more than one agent per grid cell

* **HexGrid;** like grid, but on a hexagonal grid (*e.g.*, the board game Catan) thus changing who your neighbours are

* **ConinuousSpace;** a 2d continous space were agents can occupy any coordinate

* **NetworkGrid;** a network structure were one or more agents occupy a given node.

A key concern when using a none-networked space, is to think carefull about what happens at the edges of the space. In a basic implementation, agents in for example the top left corner has only 2 neighbors, while an agent in the middle has four neighbors. This can give rise to artifacts in the results. Basically, the dynamics at the edges are different from the behavior further away from the edges. It is therefore quite common to use a torus, or donut, shape for the space. In this way, there is no longer any edge and artifacts are thus removed.

# The emergence of cooperation in space

The documentation of MESA on the different spaces is quite limited. Therefore, this assignment is largely a tutorial continuing on the evolution of cooperation.

We make the following changes to the model

* Each agent gets a position, which is an x,y coordinate indicating the grid cell the agent occupies.

* The model has a grid, with an agent of random class. We initialize the model with equal probabilities for each type of class.

* All agents play against their neighbors. On a grid, neighborhood can be defined in various ways. For example, a Von Neumann neighborhood contains the four cells that share a border with the central cell. A Moore neighborhood with distance one contains 8 cells by also considering the diagonal. Below, we use a neighborhood distance of 1, and we do include diagonal neighbors. So we set Moore to True. Feel free to experiment with this model by setting it to False,

* The evolutionary dynamic, after all agents having played, is that each agent compares its scores to its neighbors. It will adopt whichever strategy within its neighborhood performed best.

* Next to using a SingleGrid from MESA, we also use a DataCollector to handle collecting statistics.

Below, I discuss in more detail the code containing the most important modifications.

```

from collections import deque, Counter, defaultdict

from enum import Enum

from itertools import combinations

from math import floor

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

from mesa import Model, Agent

from mesa.space import SingleGrid

from mesa.datacollection import DataCollector

class Move(Enum):

COOPERATE = 1

DEFECT = 2

class AxelrodAgent(Agent):

"""An Abstract class from which all strategies have to be derived

Attributes

----------

points : int

pos : tuple

"""

def __init__(self, unique_id, pos, model):

super().__init__(unique_id, model)

self.points = 0

self.pos = pos

def step(self):

'''

This function defines the move and any logic for deciding

on the move goes here.

Returns

-------

Move.COOPERATE or Move.DEFECT

'''

raise NotImplemetedError

def receive_payoff(self, payoff, my_move, opponent_move):

'''receive payoff and the two moves resulting in this payoff

Parameters

----------

payoff : int

my_move : {Move.COOPERATE, Move.DEFECT}

opponements_move : {Move.COOPERATE, Move.DEFECT}

'''

self.points += payoff

def reset(self):

'''

This function is called after playing N iterations against

another player.

'''

raise NotImplementedError

class TitForTat(AxelrodAgent):

"""This class defines the following strategy: play nice, unless,

in the previous move, the other player betrayed you."""

def __init__(self, unique_id, pos, model):

super().__init__(unique_id, pos, model)

self.opponent_last_move = Move.COOPERATE

def step(self):

return self.opponent_last_move

def receive_payoff(self, payoff, my_move, opponent_move):

super().receive_payoff(payoff, my_move, opponent_move)

self.opponent_last_move = opponent_move

def reset(self):

self.opponent_last_move = Move.COOPERATE

class ContriteTitForTat(AxelrodAgent):

"""This class defines the following strategy: play nice, unless,

in the previous two moves, the other player betrayed you."""

def __init__(self, unique_id, pos, model):

super().__init__(unique_id, pos, model)

self.opponent_last_two_moves = deque([Move.COOPERATE, Move.COOPERATE], maxlen=2)

def step(self):

if (self.opponent_last_two_moves[0] == Move.DEFECT) and\

(self.opponent_last_two_moves[1] == Move.DEFECT):

return Move.DEFECT

else:

return Move.COOPERATE

def receive_payoff(self, payoff, my_move, opponent_move):

super().receive_payoff(payoff, my_move, opponent_move)

self.opponent_last_two_moves.append(opponent_move)

def reset(self):

self.opponent_last_two_moves = deque([Move.COOPERATE, Move.COOPERATE], maxlen=2)

class NoisySpatialEvolutionaryAxelrodModel(Model):

def __init__(self, N, noise_level=0.01, seed=None,

height=20, width=20,):

super().__init__(seed=seed)

self.noise_level = noise_level

self.num_iterations = N

self.agents = set()

self.payoff_matrix = {}

self.payoff_matrix[(Move.COOPERATE, Move.COOPERATE)] = (2, 2)

self.payoff_matrix[(Move.COOPERATE, Move.DEFECT)] = (0, 3)

self.payoff_matrix[(Move.DEFECT, Move.COOPERATE)] = (3, 0)

self.payoff_matrix[(Move.DEFECT, Move.DEFECT)] = (1, 1)

self.grid = SingleGrid(width, height, torus=True)

strategies = AxelrodAgent.__subclasses__()

num_strategies = len(strategies)

self.agent_id = 0

for cell in self.grid.coord_iter():

_, x, y = cell

pos = (x, y)

self.agent_id += 1

strategy_index = int(floor(self.random.random()*num_strategies))

agent = strategies[strategy_index](self.agent_id, pos, self)

self.grid.position_agent(agent, (x, y))

self.agents.add(agent)

self.datacollector = DataCollector(model_reporters={klass.__name__:klass.__name__

for klass in strategies})

def count_agent_types(self):

counter = Counter()

for agent in self.agents:

counter[agent.__class__.__name__] += 1

for k,v in counter.items():

setattr(self, k, v)

def step(self):

'''Advance the model by one step.'''

self.count_agent_types()

self.datacollector.collect(self)

for (agent_a, x, y) in self.grid.coord_iter():

for agent_b in self.grid.neighbor_iter((x,y), moore=True):

for _ in range(self.num_iterations):

move_a = agent_a.step()

move_b = agent_b.step()

#insert noise in movement

if self.random.random() < self.noise_level:

if move_a == Move.COOPERATE:

move_a = Move.DEFECT

else:

move_a = Move.COOPERATE

if self.random.random() < self.noise_level:

if move_b == Move.COOPERATE:

move_b = Move.DEFECT

else:

move_b = Move.COOPERATE

payoff_a, payoff_b = self.payoff_matrix[(move_a, move_b)]

agent_a.receive_payoff(payoff_a, move_a, move_b)

agent_b.receive_payoff(payoff_b, move_b, move_a)

agent_a.reset()

agent_b.reset()

# evolution

# tricky, we need to determine for each grid cell

# is a change needed, if so, log position, agent, and type to change to

agents_to_change = []

for agent_a in self.agents:

neighborhood = self.grid.iter_neighbors(agent_a.pos, moore=True,

include_center=True)

neighborhood = ([n for n in neighborhood])

neighborhood.sort(key=lambda x:x.points, reverse=True)

best_strategy = neighborhood[0].__class__

# if best type of strategy in neighborhood is

# different from strategy type of agent, we need

# to change our strategy

if not isinstance(agent_a, best_strategy):

agents_to_change.append((agent_a, best_strategy))

for entry in agents_to_change:

agent, klass = entry

self.agents.remove(agent)

self.grid.remove_agent(agent)

pos = agent.pos

self.agent_id += 1

new_agent = klass(self.agent_id, pos, self)

self.grid.position_agent(new_agent, pos)

self.agents.add(new_agent)

```

In the `__init__`, we now instantiate a SingleGrid, with a specified width and height. We set the kwarg torus to True indicating we are using a donut shape grid to avoid edge effects. Next, we fill this grid with random agents of the different types. This can be implemented in various ways. What I do here is using a list with the different classes (*i.e.*, types of strategies). By drawing a random number from a unit interval, multiplying it with the lenght of the list of classes and flooring the resulting number to an integer, I now have a random index into this list with the different classes. Next, I can get the class from the list and instantiate the agent object.

Some minor points with instantiating the agents. First, we give the agent a position, called pos, this is a default attribute assumed by MESA. We also still need a unique ID for the agent, we do this with a simple counter (`self.agent_id`). `self.grid.coord_iter` is a method on the grid. It returns an iterator over the cells in the grid. This iterator returns the agent occupying the cell and the x and y coordinate. Since the first item is `null` because we are filling the grid, we can ignore this. We do this by using the underscore variable name (`_`). This is a python convention.

Once we have instantiated the agent, we place the agent in the grid and add it to our collection of agents. If you look in more detail at the model class, you will see that I use a set for agents, rather than a list. The reason for this is that we are going to remove agents in the evolutionary phase. Removing agents from a list is memory and compute intensive, while it is computationally easy and cheap when we have a set.

```python

self.grid = SingleGrid(width, height, torus=True)

strategies = AxelrodAgent.__subclasses__()

num_strategies = len(strategies)

self.agent_id = 0

for cell in self.grid.coord_iter():

_, x, y = cell

pos = (x, y)

self.agent_id += 1

strategy_index = int(floor(self.random.random()*num_strategies))

agent = strategies[strategy_index](self.agent_id, pos, self)

self.grid.position_agent(agent, (x, y))

self.agents.add(agent)

```

We also use a DataCollector. This is a default class provided by MESA that can be used for keeping track of relevant statistics. It can store both model level variables as well as agent level variables. Here we are only using model level variables (i.e. attributes of the model). Specifically, we are going to have an attribute on the model for each type of agent strategy (i.e. classes). This attribute is the current count of agents in the grid of the specific type. To implement this, we need to do several things.

1. initialize a data collector instance

2. at every step update the current count of agents of each strategy

3. collect the current counts with the data collector.

For step 1, we set a DataCollector as an attribute. This datacollector needs to know the names of the attributes on the model it needs to collect. So we pass a dict as kwarg to model_reporters. This dict has as key the name by which the variable will be known in the DataCollector. As value, I pass the name of the attribute on the model, but it can also be a function or method which returns a number. Note th at the ``klass`` misspelling is deliberate. The word ``class`` is protected in Python, so you cannot use it as a variable name. It is common practice to use ``klass`` instead in the rare cases were you are having variable refering to a specific class.

```python

self.datacollector = DataCollector(model_reporters={klass.__name__:klass.__name__

for klass in strategies})

```

For step 2, we need to count at every step the number of agents per strategy type. To help keep track of this, we define a new method, `count_agent_types`. The main magic is the use of `setattr` which is a standard python function for setting attributes to a particular value on a given object. This reason for writing our code this way is that we automatically adapt our attributes to the classes of agents we have, rather than hardcoding the agent classes as attributes on our model. If we now add new classes of agents, we don't need to change the model code itself. There is also a ``getattr`` function, which is used by for example the DataCollector to get the values for the specified attribute names.

```python

def count_agent_types(self):

counter = Counter()

for agent in self.agents:

counter[agent.__class__.__name__] += 1

for k,v in counter.items():

setattr(self, k, v)

```

For step 3, we modify the first part of the ``step`` method. We first count the types of agents and next collect this data with the datacollector.

```python

self.count_agent_types()

self.datacollector.collect(self)

```

The remainder of the ``step`` method has also been changed quite substantially. First, We have to change against whom each agent is playing. We do this by iterating over all agents in the model. Next, we use the grid to give us the neighbors of a given agent. By setting the kwarg ``moore`` to ``True``, we indicate that we include also our diagonal neighbors. Next, we play as we did before in the noisy version of the Axelrod model.

```python

for agent_a in self.agents:

for agent_b in self.grid.neighbor_iter(agent_a.pos, moore=True):

for _ in range(self.num_iterations):

```

Second, we have to add the evolutionary dynamic. This is a bit tricky. First, we loop again over all agents in the model. We check its neighbors and see which strategy performed best. If this is of a different type (``not isinstance(agent_a, best_strategy)``, we add it to a list of agents that needs to be changed and the type of agent to which it needs to be changed. Once we know all agents that need to be changed, we can make this change.

Making the change is quite straighforward. We remove the agent from the set of agents (`self.agents`) and from the grid. Next we get the position of the agent, we increment our unique ID counter, and create a new agent. This new agent is than added to the grid and to the set of agents.

```python

# evolution

agents_to_change = []

for agent_a in self.agents:

neighborhood = self.grid.iter_neighbors(agent_a.pos, moore=True,

include_center=True)

neighborhood = ([n for n in neighborhood])

neighborhood.sort(key=lambda x:x.points, reverse=True)

best_strategy = neighborhood[0].__class__

# if best type of strategy in neighborhood is

# different from strategy type of agent, we need

# to change our strategy

if not isinstance(agent_a, best_strategy):

agents_to_change.append((agent_a, best_strategy))

for entry in agents_to_change:

agent, klass = entry

self.agents.remove(agent)

self.grid.remove_agent(agent)

pos = agent.pos

self.agent_id += 1

new_agent = klass(self.agent_id, pos, self)

self.grid.position_agent(new_agent, pos)

self.agents.add(new_agent)

```

## Assignment 1

Can you explain why we need to first loop over all agents before we are changing a given agent to a different strategy?

## Assignment 2

Add all agents classes (i.e., strategie) from the previous assignment to this model. Note that you might have to update the ``__init__`` method to reflect the new pos keyword argument and attribute.

## Assignment 3

Run the model for 50 steps, and with 200 rounds of the iterated game. Use the defaults for all other keyword arguments.

Plot the results.

*hint: the DataCollector can return the statistics it has collected as a dataframe, which in turn you can plot directly.*

This new model is quite a bit noisier than previously. We have a random initialization of the grid and depending on the initial neighborhood, different evolutionary dynamics can happen. On top, we have the noise in game play, and the random agent.

## Assignment 4

Let's explore the model for 10 replications. Run the model 10 times, with 200 rounds of the iterated prisoners dilemma. Run each model for fifty steps. Plot the results for each run.

1. Can you say anything generalizable about the behavioral dynamics of the model?

2. What do you find striking in the results and why?

3. If you compare the results for this spatially explicit version of the Emergence of Cooperation with the none spatially explicit version, what are the most important differences in dynamics. Can you explain why adding local interactions results in these changes?

|

github_jupyter

|

## Getting Data

```

#import os

#import requests

#DATASET = (

# "https://archive.ics.uci.edu/ml/machine-learning-databases/abalone/abalone.data",

# "https://archive.ics.uci.edu/ml/machine-learning-databases/abalone/abalone.names"

#)

#def download_data(path='data', urls=DATASET):

# if not os.path.exists(path):

# os.mkdir(path)

#

# for url in urls:

# response = requests.get(url)

# name = os.path.basename(url)

# with open(os.path.join(path, name), 'wb') as f:

# f.write(response.content)

#download_data()

#DOWNLOAD AND LOAD IN DATA FROM URL!!!!!!!!!!!!!!!!!!!

#import requests

#import io

#data = io.BytesIO(requests.get('URL HERE'))

#whitedata = pd.read_csv(data.content)

#whitedata.head()

```

## Load Data

```

import pandas as pd

import yellowbrick

import matplotlib.pyplot as plt

import seaborn as sns

import numpy as np

import io

import sklearn

columns = [

"fixed acidity",

"volatile acidity",

"citric acid",

"residual sugar",

"chlorides",

"free sulfur dioxide",

"total sulfur dioxide",

"density",

"pH",

"sulphates",

"alcohol",

"quality"

]

reddata = pd.read_csv('data/winequality-red.csv', sep=";")

whitedata = pd.read_csv('data/winequality-white.csv', sep=";")

```

## Check it out

```

whitedata.head(10)

whitedata.describe()

whitedata.info()

whitedata.pH.describe()

whitedata['poor'] = np.where(whitedata['quality'] < 5, 1, 0)

whitedata['poor'].value_counts()

whitedata['expected'] = np.where(whitedata['quality'] > 4, 1, 0)

whitedata['expected'].value_counts()

whitedata['expected'].describe()

whitedata.head()

#set up the figure size

%matplotlib inline

plt.rcParams['figure.figsize'] = (30, 30)

#make the subplot

fig, axes = plt.subplots(nrows = 6, ncols = 2)

#specify the features of intersest

num_features = ['pH', 'alcohol', 'citric acid', 'chlorides', 'residual sugar', 'free sulfur dioxide', 'total sulfur dioxide', 'density', 'sulphates']

xaxes = num_features

yaxes = ['Counts', 'Counts', 'Counts', 'Counts', 'Counts', 'Counts', 'Counts', 'Counts', 'Counts']

#draw the histogram

axes = axes.ravel()

for idx, ax in enumerate(axes):

ax.hist(whitedata[num_features[idx]].dropna(), bins = 30)

ax.set_xlabel(xaxes[idx], fontsize = 20)

ax.set_ylabel(yaxes[idx], fontsize = 20)

ax.tick_params(axis = 'both', labelsize = 20)

features = ['pH', 'alcohol', 'citric acid', 'residual sugar', 'chlorides', 'free sulfur dioxide', 'total sulfur dioxide', 'density', 'sulphates']

classes = ['unexpected', 'expected']

X = whitedata[features].as_matrix()

y = whitedata['quality'].as_matrix()

from sklearn.naive_bayes import GaussianNB

viz = GaussianNB()

viz.fit(X, y)

viz.score(X, y)

from sklearn.naive_bayes import MultinomialNB

viz = MultinomialNB()

viz.fit(X, y)

viz.score(X, y)

from sklearn.naive_bayes import BernoulliNB

viz = BernoulliNB()

viz.fit(X, y)

viz.score(X, y)

```

## Features and Targets

```

y = whitedata["quality"]

y

X = whitedata.iloc[:,1:-1]

X

from sklearn.ensemble import RandomForestClassifier as rfc

estimator = rfc(n_estimators=7)

estimator.fit(X,y)

y_hat = estimator.predict(X)

print(y_hat)

%matplotlib notebook

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import cross_val_score

data = pd.read_csv(".../data/occupency.csv")

data.head()

X = data(['temperature', 'relative humidity', 'light', 'CO2', 'humidity'])

y = data('occupency')

def n_estimators_tuning(X, y, min_estimator=1, max_estimator=50, ax=None, save=None):

if ax is None:

_, ax = plt.subplots()

mean = []

stds = []

n_estimators = np.arrange(min_estimators, mx_estimators+1)

for n in n_estimators:

model = RandomForestClassifier(n_estimators=n)

scores = cross_val_score(model, X, y, cv=cv)

means.append(scores.mean())

stds.append(scores.std())

means = np.array(means)

stds = np.array(stds)

ax.plot(n_estimatrors, scores, label='CV+{} scores'.forest(cv))

ax.fill_between(n_estimators, means-stds, means+stds, alpha=0.3)

max_score = means.max()

max_score_idx = np.where(means==max_score)[0]

ax.hline#???????????????

ax.set_xlim(min_estimators, max_estimators)

ax.set_xlabel("n_estimators")

ax.set_ylabel("F1 Score")

ax.set_title("Random Forest Hyperparameter Tuning")

ax.legend(loc='best')

if save:

plt.savefig(save)

return ax

#print(scores)

n_estimators_tuning(X, y)

whitedata[winecolor] = 0

reddata[winecolor] = 1

df3 = [whitedata, reddata]

df = pd.concat(df3)

df.reset_index(drop = True, inplace = True)

df.isnull().sum()

whitedata.head()

reddata.head()

df = df.drop(columns=['poor', 'expected'])

df.isnull().sum()

df.head()

df.describe()

df['recommended'] = np.where(df['quality'] < 6, 0, 1)

df.head(50)

df["quality"].value_counts().sort_values(ascending = False)

df["recommended"].value_counts().sort_values(ascending = False)

df['recommended'] = np.where(df['quality'] < 6, 0, 1)

df["recommended"].value_counts().sort_values(ascending = False)

from pandas.plotting import radviz

plt.figure(figsize=(8,8))

radviz(df, 'recommended', color=['blue', 'red'])

plt.show()

features = ['pH', 'alcohol', 'citric acid', 'residual sugar', 'chlorides', 'free sulfur dioxide', 'total sulfur dioxide', 'density', 'sulphates']

#classes = ['unexpected', 'expected']

X = df[features].as_matrix()

y = df['recommended'].as_matrix()

viz = GaussianNB()

viz.fit(X, y)

viz.score(X, y)

viz = MultinomialNB()

viz.fit(X, y)

viz.score(X, y)

viz = BernoulliNB()

viz.fit(X, y)

viz.score(X, y)

from sklearn.ensemble import RandomForestClassifier

viz = RandomForestClassifier()

viz.fit(X, y)

viz.score(X, y)

from sklearn.metrics import (auc, roc_curve, recall_score, accuracy_score, confusion_matrix, classification_report, f1_score, precision_score)

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=11)

predicted = viz.predict(X_test)

cm = confusion_matrix(y_test, predicted)

fig = plt.figure(figsize=(7, 5))

ax = plt.subplot()

cm1 = (cm.astype(np.float64) / cm.sum(axis=1, keepdims=1))

cmap = sns.cubehelix_palette(light=1, as_cmap=True)

sns.heatmap(cm1, annot=True, ax = ax, cmap=cmap); #annot=True to annotate cells

# labels, title and ticks

ax.set_xlabel('Features');

ax.set_ylabel('Recommended');

ax.set_title('Normalized confusion matrix');

ax.xaxis.set_ticklabels(['Good', 'Bad']);

ax.yaxis.set_ticklabels(['Good', 'Bad']);

print(cm)

# Recursive Feature Elimination (RFE)

from sklearn.feature_selection import (chi2, RFE)

model = RandomForestClassifier()

rfe = RFE(model, 38)

fit = rfe.fit(X, y)

print("Num Features: ", fit.n_features_)

print("Selected Features: ", fit.support_)

print("Feature Ranking: ", fit.ranking_)

from sklearn.model_selection import StratifiedKFold

from sklearn.datasets import make_classification

from yellowbrick.features import RFECV

sns.set(font_scale=3)

cv = StratifiedKFold(5)

oz = RFECV(RandomForestClassifier(), cv=cv, scoring='f1')

oz.fit(X, y)

oz.poof()

# Ridge

# Create a new figure

#mpl.rcParams['axes.prop_cycle'] = cycler('color', ['red'])

from yellowbrick.features.importances import FeatureImportances

from sklearn.linear_model import (LogisticRegression, LogisticRegressionCV, RidgeClassifier, Ridge, Lasso, ElasticNet)

fig = plt.gcf()

fig.set_size_inches(10,10)

ax = plt.subplot(311)

labels = features

viz = FeatureImportances(Ridge(alpha=0.5), ax=ax, labels=labels, relative=False)

ax.spines['right'].set_visible(False)

ax.spines['top'].set_visible(False)

ax.grid(False) # Fit and display

viz.fit(X, y)

viz.poof()

estimator = RandomForestClassifier(class_weight='balanced')

y_pred_proba = RandomForestClassifier(X_test)

#y_pred_proba[:5]

def plot_roc_curve(y_test, y_pred_proba):

fpr, tpr, thresholds = roc_curve(y_test, y_pred_proba[:, 1])

roc_auc = auc(fpr, tpr)

plt.plot(fpr, tpr, label='ROC curve (area = %0.3f)' % roc_auc, color='darkblue')

plt.plot([0, 1], [0, 1], 'k--') # random predictions curve

plt.xlim([0.0, 1.0])

plt.ylim([0.0, 1.0])

plt.xlabel('False Positive Rate or (1 - Specifity)')

plt.ylabel('True Positive Rate or (Sensitivity)')

plt.title('Receiver Operating Characteristic')

plt.legend(loc="lower right")

plt.grid(False)

plot_roc_curve(y_test, y_pred_proba)

```

|

github_jupyter

|

# Draw an isochrone map with OSMnx

How far can you travel on foot in 15 minutes?

- [Overview of OSMnx](http://geoffboeing.com/2016/11/osmnx-python-street-networks/)

- [GitHub repo](https://github.com/gboeing/osmnx)

- [Examples, demos, tutorials](https://github.com/gboeing/osmnx-examples)

- [Documentation](https://osmnx.readthedocs.io/en/stable/)

- [Journal article/citation](http://geoffboeing.com/publications/osmnx-complex-street-networks/)

```

import geopandas as gpd

import matplotlib.pyplot as plt

import networkx as nx

import osmnx as ox

from descartes import PolygonPatch

from shapely.geometry import Point, LineString, Polygon

ox.config(log_console=True, use_cache=True)

ox.__version__

# configure the place, network type, trip times, and travel speed

place = 'Berkeley, CA, USA'

network_type = 'walk'

trip_times = [5, 10, 15, 20, 25] #in minutes

travel_speed = 4.5 #walking speed in km/hour

```

## Download and prep the street network

```

# download the street network

G = ox.graph_from_place(place, network_type=network_type)

# find the centermost node and then project the graph to UTM

gdf_nodes = ox.graph_to_gdfs(G, edges=False)

x, y = gdf_nodes['geometry'].unary_union.centroid.xy

center_node = ox.get_nearest_node(G, (y[0], x[0]))

G = ox.project_graph(G)

# add an edge attribute for time in minutes required to traverse each edge

meters_per_minute = travel_speed * 1000 / 60 #km per hour to m per minute

for u, v, k, data in G.edges(data=True, keys=True):

data['time'] = data['length'] / meters_per_minute

```

## Plots nodes you can reach on foot within each time

How far can you walk in 5, 10, 15, 20, and 25 minutes from the origin node? We'll use NetworkX to induce a subgraph of G within each distance, based on trip time and travel speed.

```

# get one color for each isochrone

iso_colors = ox.get_colors(n=len(trip_times), cmap='Reds', start=0.3, return_hex=True)

# color the nodes according to isochrone then plot the street network

node_colors = {}

for trip_time, color in zip(sorted(trip_times, reverse=True), iso_colors):

subgraph = nx.ego_graph(G, center_node, radius=trip_time, distance='time')

for node in subgraph.nodes():

node_colors[node] = color

nc = [node_colors[node] if node in node_colors else 'none' for node in G.nodes()]

ns = [20 if node in node_colors else 0 for node in G.nodes()]

fig, ax = ox.plot_graph(G, fig_height=8, node_color=nc, node_size=ns, node_alpha=0.8, node_zorder=2)

```

## Plot the time-distances as isochrones

How far can you walk in 5, 10, 15, 20, and 25 minutes from the origin node? We'll use a convex hull, which isn't perfectly accurate. A concave hull would be better, but shapely doesn't offer that.

```

# make the isochrone polygons

isochrone_polys = []

for trip_time in sorted(trip_times, reverse=True):

subgraph = nx.ego_graph(G, center_node, radius=trip_time, distance='time')

node_points = [Point((data['x'], data['y'])) for node, data in subgraph.nodes(data=True)]

bounding_poly = gpd.GeoSeries(node_points).unary_union.convex_hull

isochrone_polys.append(bounding_poly)

# plot the network then add isochrones as colored descartes polygon patches

fig, ax = ox.plot_graph(G, fig_height=8, show=False, close=False, edge_color='k', edge_alpha=0.2, node_color='none')

for polygon, fc in zip(isochrone_polys, iso_colors):

patch = PolygonPatch(polygon, fc=fc, ec='none', alpha=0.6, zorder=-1)

ax.add_patch(patch)

plt.show()

```

## Or, plot isochrones as buffers to get more faithful isochrones than convex hulls can offer

in the style of http://kuanbutts.com/2017/12/16/osmnx-isochrones/

```

def make_iso_polys(G, edge_buff=25, node_buff=50, infill=False):

isochrone_polys = []

for trip_time in sorted(trip_times, reverse=True):

subgraph = nx.ego_graph(G, center_node, radius=trip_time, distance='time')

node_points = [Point((data['x'], data['y'])) for node, data in subgraph.nodes(data=True)]

nodes_gdf = gpd.GeoDataFrame({'id': subgraph.nodes()}, geometry=node_points)

nodes_gdf = nodes_gdf.set_index('id')

edge_lines = []

for n_fr, n_to in subgraph.edges():

f = nodes_gdf.loc[n_fr].geometry

t = nodes_gdf.loc[n_to].geometry

edge_lines.append(LineString([f,t]))

n = nodes_gdf.buffer(node_buff).geometry

e = gpd.GeoSeries(edge_lines).buffer(edge_buff).geometry

all_gs = list(n) + list(e)

new_iso = gpd.GeoSeries(all_gs).unary_union

# try to fill in surrounded areas so shapes will appear solid and blocks without white space inside them

if infill:

new_iso = Polygon(new_iso.exterior)

isochrone_polys.append(new_iso)

return isochrone_polys

isochrone_polys = make_iso_polys(G, edge_buff=25, node_buff=0, infill=True)

fig, ax = ox.plot_graph(G, fig_height=8, show=False, close=False, edge_color='k', edge_alpha=0.2, node_color='none')

for polygon, fc in zip(isochrone_polys, iso_colors):

patch = PolygonPatch(polygon, fc=fc, ec='none', alpha=0.6, zorder=-1)

ax.add_patch(patch)

plt.show()

```

|

github_jupyter

|

# Raven annotations

Raven Sound Analysis Software enables users to inspect spectrograms, draw time and frequency boxes around sounds of interest, and label these boxes with species identities. OpenSoundscape contains functionality to prepare and use these annotations for machine learning.

## Download annotated data

We published an example Raven-annotated dataset here: https://doi.org/10.1002/ecy.3329

```

from opensoundscape.commands import run_command

from pathlib import Path

```

Download the zipped data here:

```

link = "https://esajournals.onlinelibrary.wiley.com/action/downloadSupplement?doi=10.1002%2Fecy.3329&file=ecy3329-sup-0001-DataS1.zip"

name = 'powdermill_data.zip'

out = run_command(f"wget -O powdermill_data.zip {link}")

```

Unzip the files to a new directory, `powdermill_data/`

```

out = run_command("unzip powdermill_data.zip -d powdermill_data")

```

Keep track of the files we have now so we can delete them later.

```

files_to_delete = [Path("powdermill_data"), Path("powdermill_data.zip")]

```

## Preprocess Raven data

The `opensoundscape.raven` module contains preprocessing functions for Raven data, including:

* `annotation_check` - for all the selections files, make sure they all contain labels

* `lowercase_annotations` - lowercase all of the annotations

* `generate_class_corrections` - create a CSV to see whether there are any weird names

* Modify the CSV as needed. If you need to look up files you can use `query_annotations`

* Can be used in `SplitterDataset`

* `apply_class_corrections` - replace incorrect labels with correct labels

* `query_annotations` - look for files that contain a particular species or a typo

```

import pandas as pd

import opensoundscape.raven as raven

import opensoundscape.audio as audio

raven_files_raw = Path("./powdermill_data/Annotation_Files/")

```

### Check Raven files have labels

Check that all selections files contain labels under one column name. In this dataset the labels column is named `"species"`.

```

raven.annotation_check(directory=raven_files_raw, col='species')

```

### Create lowercase files

Convert all the text in the files to lowercase to standardize them. Save these to a new directory. They will be saved with the same filename but with ".lower" appended.

```

raven_directory = Path('./powdermill_data/Annotation_Files_Standardized')

if not raven_directory.exists(): raven_directory.mkdir()

raven.lowercase_annotations(directory=raven_files_raw, out_dir=raven_directory)

```

Check that the outputs are saved as expected.

```

list(raven_directory.glob("*.lower"))[:5]

```

### Generate class corrections

This function generates a table that can be modified by hand to correct labels with typos in them. It identifies the unique labels in the provided column (here `"species"`) in all of the lowercase files in the directory `raven_directory`.

For instance, the generated table could be something like the following:

```

raw,corrected

sparrow,sparrow

sparow,sparow

goose,goose

```

```

print(raven.generate_class_corrections(directory=raven_directory, col='species'))

```