| repo | number | state | title | body | created_at | closed_at | comments |

|---|---|---|---|---|---|---|---|

transformers | 1,589 | closed | Fix architectures count | 10-22-2019 00:30:00 | 10-22-2019 00:30:00 | Hi! Actually, if we count DistilGPT-2 as a standalone architecture, it should be 10. Do you think you could update it to 10 before we merge? Thanks. |

|

transformers | 1,588 | closed | Using HuggingFace pre-trained transformer to tokenize and generate iterator for a different text than the one it was trained on | Hello,

I am trying to do NLP with HuggingFace transformers, and I have a question. Is it possible to use the pre-trained HuggingFace Transformer-XL and its pre-trained vocabulary to tokenize and generate a BPTTIterator for the WikiText2 dataset instead of WikiText103, which the model was originally trained on? If yes, could someone provide example code illustrating how to 1. tokenize and 2. generate a BPTTIterator to analyze WikiText2, based on the pre-trained HuggingFace Transformer-XL model and its vocabulary?

NOTE: WikiText2 can be obtained via

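```python
import torchtext

# Assumed definition (TEXT is not shown in the original snippet); legacy torchtext (< 0.9) API.
TEXT = torchtext.data.Field()

# load WikiText-2 dataset and split it into train, validation and test sets
train_Wiki2, val_Wiki2, test_Wiki2 = torchtext.datasets.WikiText2.splits(TEXT)
```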

or

```python
import lineflow as lf
import lineflow.datasets as lfds

# load WikiText-2 dataset
train_Wiki2 = lfds.WikiText2('train')
test_Wiki2 = lfds.WikiText2('test')
```

Thank you, | 10-21-2019 18:59:42 | 10-21-2019 18:59:42 | This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|
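For the Transformer-XL / WikiText-2 question above, the batching half can be sketched without torchtext. This assumes the WikiText-2 text has already been turned into one flat list of token ids, for example by calling the pre-trained tokenizer's `encode` on each line (`TransfoXLTokenizer.from_pretrained('transfo-xl-wt103')`). `batchify` and `bptt_batches` are hypothetical helpers, not torchtext or transformers APIs: they fold the stream into `batch_size` parallel streams and cut it into windows of `bptt_len` steps with targets shifted one token ahead, which is the idea behind a BPTT iterator. Windows here are batch-major (one inner list per stream).

```python
from typing import Iterator, List, Tuple


def batchify(token_ids: List[int], batch_size: int) -> List[List[int]]:
    """Hypothetical helper: split a flat token stream into batch_size equal, contiguous streams.

    Trailing tokens that do not fill a complete stream are dropped.
    """
    n_steps = len(token_ids) // batch_size
    return [token_ids[i * n_steps:(i + 1) * n_steps] for i in range(batch_size)]


def bptt_batches(
    streams: List[List[int]], bptt_len: int
) -> Iterator[Tuple[List[List[int]], List[List[int]]]]:
    """Hypothetical helper: yield (inputs, targets) windows; targets are inputs shifted by one token."""
    n_steps = len(streams[0])
    for start in range(0, n_steps - 1, bptt_len):
        seq_len = min(bptt_len, n_steps - 1 - start)  # the last window may be shorter
        inputs = [s[start:start + seq_len] for s in streams]
        targets = [s[start + 1:start + 1 + seq_len] for s in streams]
        yield inputs, targets


# Toy example: pretend these ids came from the pre-trained tokenizer.
ids = list(range(10))
streams = batchify(ids, batch_size=2)  # [[0, 1, 2, 3, 4], [5, 6, 7, 8, 9]]
windows = list(bptt_batches(streams, bptt_len=3))
```

Because the vocabulary is fixed by the pre-trained tokenizer, any WikiText-2 word missing from it would map to the tokenizer's unknown token before reaching this step.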

transformers | 1,587 | closed | Sequence to sequence with GPT model | Hi, I would really appreciate it if you could tell me whether I can build a seq2seq model with GPT-2 like this:

I am using the GPT-2 run_generation code, and I want to fine-tune it so that I give a sequence as context, generate another sequence with GPT-2, and then minimize the cross-entropy loss between the generated sequence and the expected output. I want to modify run_lm_finetuning to do this. I was wondering whether this way I can build a seq2seq model with GPT. Thank you. | 10-21-2019 08:31:25 | 10-21-2019 08:31:25 | We are currently working on implementing seq2seq for most models in the library (see https://github.com/huggingface/transformers/pull/1455). It won't be ready before a week or two.<|||||>I'm closing this issue, but feel free to reply in #1506, which we are leaving open for comments on this implementation. |
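One common way to use a decoder-only model such as GPT-2 for seq2seq-style fine-tuning is to concatenate source and target into one sequence and compute the language-modeling loss only on the target tokens. The sketch below is framework-free; `build_example`, the separator id and the token ids are hypothetical, and it assumes the loss ignores positions labelled -1 (as `CrossEntropyLoss(ignore_index=-1)` would). Labels are kept aligned with `input_ids` on the assumption that the model shifts them internally, as the `GPT2LMHeadModel` documentation notes for its LM labels. This is not the seq2seq implementation referenced in the replies.

```python
from typing import List, Tuple

IGNORE_INDEX = -1  # assumption: the loss skips positions carrying this label


def build_example(source_ids: List[int], target_ids: List[int], sep_id: int) -> Tuple[List[int], List[int]]:
    """Hypothetical helper: source + separator + target, with loss only on the target."""
    input_ids = source_ids + [sep_id] + target_ids
    # Context positions (source and separator) are masked out, so the model is
    # trained only to predict the target continuation.
    labels = [IGNORE_INDEX] * (len(source_ids) + 1) + target_ids
    return input_ids, labels


# Hypothetical ids: source "why did the chicken", target "cross the road".
input_ids, labels = build_example([11, 12, 13, 14], [21, 22, 23], sep_id=99)
```

At inference time you would instead feed `source_ids + [sep_id]` and let the model generate the continuation.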

transformers | 1,586 | closed | Add special tokens to documentation for bert examples to resolve issue: #1561 | **Currently the BERT examples only show the strings encoded without the inclusion of special tokens (e.g. [CLS] and [SEP]) as illustrated below:**

```python
from transformers import BertTokenizer

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')
sentence = "Hello there, General Kenobi!"
# At the time, encode() did not add special tokens unless asked to.
print(tokenizer.encode(sentence))
print(tokenizer.cls_token_id, tokenizer.sep_token_id)
# [7592, 2045, 1010, 2236, 6358, 16429, 2072, 999]
# 101 102
```

**In this pull request I set `add_special_tokens=True` in order to include special tokens in the documented examples, as illustrated below:**

```python
from transformers import BertTokenizer

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')
sentence = "Hello there, General Kenobi!"
print(tokenizer.encode(sentence, add_special_tokens=True))
print(tokenizer.cls_token_id, tokenizer.sep_token_id)
# [101, 7592, 2045, 1010, 2236, 6358, 16429, 2072, 999, 102]
# 101 102
``` | 10-21-2019 04:59:55 | 10-21-2019 04:59:55 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1586?src=pr&el=h1) Report

> Merging [#1586](https://codecov.io/gh/huggingface/transformers/pull/1586?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/82f6abd98aaa691ca0adfe21e85a17dc6f386497?src=pr&el=desc) will **not change** coverage.

> The diff coverage is `n/a`.


```diff
@@           Coverage Diff           @@
##           master    #1586   +/-   ##
=======================================
  Coverage   86.16%   86.16%
=======================================
  Files          91       91
  Lines       13593    13593
=======================================
  Hits        11713    11713
  Misses       1880     1880
```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/1586?src=pr&el=tree) | Coverage Δ | |
|---|---|---|
| [transformers/modeling\_gpt2.py](https://codecov.io/gh/huggingface/transformers/pull/1586/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX2dwdDIucHk=) | `84.19% <ø> (ø)` | :arrow_up: |
| [transformers/modeling\_tf\_openai.py](https://codecov.io/gh/huggingface/transformers/pull/1586/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3RmX29wZW5haS5weQ==) | `96.04% <ø> (ø)` | :arrow_up: |
| [transformers/modeling\_tf\_transfo\_xl.py](https://codecov.io/gh/huggingface/transformers/pull/1586/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3RmX3RyYW5zZm9feGwucHk=) | `92.21% <ø> (ø)` | :arrow_up: |
| [transformers/modeling\_roberta.py](https://codecov.io/gh/huggingface/transformers/pull/1586/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3JvYmVydGEucHk=) | `80.57% <ø> (ø)` | :arrow_up: |
| [transformers/modeling\_transfo\_xl.py](https://codecov.io/gh/huggingface/transformers/pull/1586/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3RyYW5zZm9feGwucHk=) | `75.16% <ø> (ø)` | :arrow_up: |
| [transformers/modeling\_tf\_ctrl.py](https://codecov.io/gh/huggingface/transformers/pull/1586/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3RmX2N0cmwucHk=) | `97.75% <ø> (ø)` | :arrow_up: |
| [transformers/modeling\_xlm.py](https://codecov.io/gh/huggingface/transformers/pull/1586/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3hsbS5weQ==) | `88.42% <ø> (ø)` | :arrow_up: |
| [transformers/modeling\_bert.py](https://codecov.io/gh/huggingface/transformers/pull/1586/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX2JlcnQucHk=) | `88.17% <ø> (ø)` | :arrow_up: |
| [transformers/modeling\_ctrl.py](https://codecov.io/gh/huggingface/transformers/pull/1586/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX2N0cmwucHk=) | `95.45% <ø> (ø)` | :arrow_up: |
| [transformers/modeling\_openai.py](https://codecov.io/gh/huggingface/transformers/pull/1586/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX29wZW5haS5weQ==) | `81.75% <ø> (ø)` | :arrow_up: |
| ... and [7 more](https://codecov.io/gh/huggingface/transformers/pull/1586/diff?src=pr&el=tree-more) | |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/1586?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1586?src=pr&el=footer). Last update [82f6abd...d36680d](https://codecov.io/gh/huggingface/transformers/pull/1586?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>This is great thanks. Actually we should be adding this to all the examples for all the models...<|||||>@thomwolf Would be happy to make the changes for the rest of the models.<|||||>@thomwolf added changes for the rest of the pytorch model examples and all the tensorflow model examples

I used the two commands below to identify the files to edit:

```shell
# PyTorch model examples
grep -iR "input_ids = torch.tensor(tokenizer.encode(" .

# TensorFlow model examples
grep -iR "input_ids = tf.constant(tokenizer.encode(" .
```

**UPDATE:** Example documentation changes were implemented for all TensorFlow models except `modeling_tf_distilbert.py`, since `TFDistilBertModelTest.test_pt_tf_model_equivalence` would fail under **build_py3_torch_and_tf** (details in the error [logs](https://circleci.com/gh/huggingface/transformers/5245?utm_campaign=vcs-integration-link&utm_medium=referral&utm_source=github-build-link) for commit: ec276d6abad7eae800f1a1a039ddc78fde406009)<|||||>Thanks for that.

@LysandreJik even though we will have special tokens added by default in the coming release, maybe we still want to update the doc for the current release with this? (not sure this is possible)<|||||>I agree that the need to add special tokens should be explicit. However, the documentation is built from previous commits, so changing the previous documentation would require changing the commit history of the repo (which we should not do).

We might need to think of a way to work around that to update the misleading documentation of previous versions like in this case. |
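The effect of `add_special_tokens=True` on a single BERT sentence can be checked by hand: the ids are simply wrapped as `[CLS] ... [SEP]`. The helper below is hypothetical and hard-codes the ids printed in the examples above (101 and 102 for `bert-base-uncased`); in real code the tokenizer does this for you.

```python
from typing import List

CLS_ID, SEP_ID = 101, 102  # values printed for bert-base-uncased in the PR examples


def wrap_single_sentence(token_ids: List[int]) -> List[int]:
    """Hypothetical helper mimicking BERT's single-sentence format: [CLS] tokens [SEP]."""
    return [CLS_ID] + token_ids + [SEP_ID]


plain = [7592, 2045, 1010, 2236, 6358, 16429, 2072, 999]
with_special = wrap_single_sentence(plain)
```

For a sentence pair, BERT's format is `[CLS] A [SEP] B [SEP]`, which is another reason to let the tokenizer build the sequence.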

transformers | 1,585 | closed | AdamW requires torch>=1.2.0 | ## 🐛 Bug

<!-- Important information -->

AdamW requires torch>=1.2.0; with torch<1.2.0, importing it fails with `ImportError: cannot import name 'AdamW'`.

Model I am using (Bert, XLNet....):

Language I am using the model on (English, Chinese....):

The problem arises when using:

* [ ] the official example scripts: (give details)

* [ ] my own modified scripts: (give details)

The task I am working on is:

* [x] an official GLUE/SQUaD task: (give the name)

* [ ] my own task or dataset: (give details)

## To Reproduce

Steps to reproduce the behavior:

1.

2.

3.

<!-- If you have a code sample, error messages, stack traces, please provide it here as well. -->

## Expected behavior

<!-- A clear and concise description of what you expected to happen. -->

## Environment

* OS:

* Python version:

* PyTorch version:

* PyTorch Transformers version (or branch):

* Using GPU ?

* Distributed or parallel setup ?

* Any other relevant information:

## Additional context

<!-- Add any other context about the problem here. -->

| 10-21-2019 03:52:13 | 10-21-2019 03:52:13 | I don't think that's right. AdamW is implemented in transformers.optimization

https://github.com/huggingface/transformers/blob/82f6abd98aaa691ca0adfe21e85a17dc6f386497/transformers/optimization.py#L107

As far as I can see that does not require anything specific to torch 1.2. _However_, if you are trying to import [AdamW from torch ](https://pytorch.org/docs/stable/optim.html#torch.optim.AdamW), you may indeed be required to use torch 1.2.

I haven't compared the implementation in torch vs. transformers, but I'd go with torch's native implementation if you can and otherwise fall back to transformers' implementation.<|||||>Sorry, I didn't show the details:

The error comes from line 29 of transformers/examples/distillation/distiller.py:

`from torch.optim import AdamW`

This AdamW is imported from torch.optim.<|||||>Does this really need torch>=1.2? I ran into this problem.<|||||>My torch version is 1.1.<|||||>No. Importing AdamW from transformers should work with earlier versions. If you're trying to import it directly from torch, then you'll need 1.2+.<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

<|||||>Unstale. <|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|
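The advice in this thread (prefer PyTorch's native `AdamW` when it exists, otherwise fall back to the implementation in `transformers`) can be written as an import fallback. `import_first` is a hypothetical helper: it tries each (module, attribute) pair in order and returns the first one that imports, catching both a missing module and a module that lacks the attribute (the torch 1.1 case).

```python
import importlib
from typing import Any, Sequence, Tuple


def import_first(candidates: Sequence[Tuple[str, str]]) -> Any:
    """Hypothetical helper: return the first attribute importable from the (module, name) pairs."""
    errors = []
    for module_name, attr in candidates:
        try:
            module = importlib.import_module(module_name)
            return getattr(module, attr)
        except (ImportError, AttributeError) as exc:
            # Missing module (ImportError) or older version without the attribute (AttributeError).
            errors.append(f"{module_name}.{attr}: {exc}")
    raise ImportError("none of the candidates could be imported: " + "; ".join(errors))
```

For this issue the call would be `AdamW = import_first([("torch.optim", "AdamW"), ("transformers", "AdamW")])`.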

transformers | 1,584 | closed | Add special tokens to documentation for bert examples to resolve issue: #1561 | **Currently the BERT examples only show the strings encoded without the inclusion of special tokens (e.g. [CLS] and [SEP]) as illustrated below:**

```python
from transformers import BertTokenizer

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')
sentence = "Hello there, General Kenobi!"
# At the time, encode() did not add special tokens unless asked to.
print(tokenizer.encode(sentence))
print(tokenizer.cls_token_id, tokenizer.sep_token_id)
# [7592, 2045, 1010, 2236, 6358, 16429, 2072, 999]
# 101 102
```

**In this pull request I set ```add_special_tokens=True``` in order to include special tokens in the documented examples, as illustrated below:**

```python
from transformers import BertTokenizer

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')
sentence = "Hello there, General Kenobi!"
print(tokenizer.encode(sentence, add_special_tokens=True))
print(tokenizer.cls_token_id, tokenizer.sep_token_id)
# [101, 7592, 2045, 1010, 2236, 6358, 16429, 2072, 999, 102]
# 101 102
``` | 10-21-2019 03:51:09 | 10-21-2019 03:51:09 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1584?src=pr&el=h1) Report

> Merging [#1584](https://codecov.io/gh/huggingface/transformers/pull/1584?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/82f6abd98aaa691ca0adfe21e85a17dc6f386497?src=pr&el=desc) will **not change** coverage.

> The diff coverage is `n/a`.


```diff
@@           Coverage Diff           @@
##           master    #1584   +/-   ##
=======================================
  Coverage   86.16%   86.16%
=======================================
  Files          91       91
  Lines       13593    13593
=======================================
  Hits        11713    11713
  Misses       1880     1880
```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/1584?src=pr&el=tree) | Coverage Δ | |
|---|---|---|
| [transformers/modeling\_bert.py](https://codecov.io/gh/huggingface/transformers/pull/1584/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX2JlcnQucHk=) | `88.17% <ø> (ø)` | :arrow_up: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/1584?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1584?src=pr&el=footer). Last update [82f6abd...1972e0e](https://codecov.io/gh/huggingface/transformers/pull/1584?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

|

transformers | 1,583 | closed | Question answering for SQuAD with XLNet | ## ❓ Questions & Help

Dear huggingface,

Thank you very much for your great implementation of NLP architectures! I'm currently trying to train an XLNet model for question answering in French.

I studied your code to understand how question answering is done with XLNet, but I am struggling to follow how it works. In particular, I would like to understand the reasoning behind `PoolerStartLogits`, `PoolerEndLogits` and `PoolerAnswerClass`.

I also don't quite understand how the answer indices are predicted at inference time.

I know this is a lot of questions, I appreciate any help you can give me!

Thank you very much!

| 10-21-2019 02:25:08 | 10-21-2019 02:25:08 | This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|
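As a rough intuition for inference (a generic sketch, not a transcription of the library's XLNet code): keep the `start_n_top` most likely start positions, score end positions conditioned on each chosen start and keep the `end_n_top` best ends, then return the highest-scoring valid pair. Here `end_log_probs_given_start` is a hypothetical callable standing in for the conditional end scorer, and `start_n_top` / `end_n_top` mirror the settings of the same name in the XLNet config. `PoolerAnswerClass` appears to add a separate answerable/unanswerable score for SQuAD 2.0, which is omitted here.

```python
import math
from typing import Callable, List, Optional, Tuple


def log_softmax(logits: List[float]) -> List[float]:
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def top_k(values: List[float], k: int) -> List[int]:
    """Indices of the k largest values, best first (ties keep their original order)."""
    return sorted(range(len(values)), key=lambda i: values[i], reverse=True)[:k]


def decode_span(
    start_logits: List[float],
    end_log_probs_given_start: Callable[[int], List[float]],  # hypothetical end scorer
    start_n_top: int = 5,
    end_n_top: int = 5,
    max_answer_len: int = 30,
) -> Optional[Tuple[int, int, float]]:
    """Beam-style search: top starts, then top ends conditioned on each start."""
    start_log_probs = log_softmax(start_logits)
    best = None
    for s in top_k(start_log_probs, start_n_top):
        end_log_probs = end_log_probs_given_start(s)
        for e in top_k(end_log_probs, end_n_top):
            # Discard spans that end before they start or are too long.
            if e < s or e - s + 1 > max_answer_len:
                continue
            score = start_log_probs[s] + end_log_probs[e]
            if best is None or score > best[2]:
                best = (s, e, score)
    return best


# Toy usage: the hypothetical end scorer prefers the token right after the start.
start_logits = [0.0, 3.0, 1.0, 0.0]


def toy_end_scorer(start: int) -> List[float]:
    return log_softmax([5.0 if i == start + 1 else 0.0 for i in range(len(start_logits))])


best_span = decode_span(start_logits, toy_end_scorer, start_n_top=2, end_n_top=2)
```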

transformers | 1,582 | closed | How does arg --vocab_transform help in extract_distilbert.py? | ## ❓ Questions & Help

<!-- A clear and concise description of the question. -->

Hi everyone, I'm new to experimenting with BERT model distillation. When running extract_distilbert.py on my fine-tuned BERT model, I came across the argument vocab_transform:

```python
# Excerpt from extract_distilbert.py: with --vocab_transform, also copy BERT's
# LM-head transform (dense layer + LayerNorm) into the compressed state dict.
if args.vocab_transform:
    for w in ['weight', 'bias']:
        compressed_sd[f'vocab_transform.{w}'] = state_dict[f'cls.predictions.transform.dense.{w}']
        compressed_sd[f'vocab_layer_norm.{w}'] = state_dict[f'cls.predictions.transform.LayerNorm.{w}']
```

When should we use this argument when running extract_distilbert? Is there any scenario where we would benefit from doing so?

Thanks! | 10-21-2019 01:01:33 | 10-21-2019 01:01:33 | Hello @evehsu,

BERT uses an additional non-linearity before the vocabulary projection (see [here](https://github.com/huggingface/transformers/blob/master/transformers/modeling_bert.py#L381)).

It's a design choice; as far as I know, XLM doesn't use a non-linearity right before the vocab projection (the language modeling head).

I left this option because I experimented with it, but if you want to keep the BERT architecture as unchanged as possible, you should use `--vocab_transform` to ensure you also extract the pre-trained weights for this non-linearity.

Victor<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|
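To make the extra non-linearity concrete: in BERT's LM head the hidden state goes through a dense layer, an activation (GELU) and a LayerNorm before the projection onto the vocabulary; these correspond to the `cls.predictions.transform.dense` and `cls.predictions.transform.LayerNorm` weights that `--vocab_transform` copies. The pure-Python sketch below uses toy weights and the erf form of GELU; the function names are hypothetical and it is illustrative only, not the library's implementation.

```python
import math
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def linear(w: Matrix, b: Vector, x: Vector) -> Vector:
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]


def gelu(x: float) -> float:
    # Exact (erf-based) GELU.
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def layer_norm(x: Vector, gamma: Vector, beta: Vector, eps: float = 1e-12) -> Vector:
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [g * (v - mean) / math.sqrt(var + eps) + b for v, g, b in zip(x, gamma, beta)]


def bert_style_lm_head(h: Vector, dense_w: Matrix, dense_b: Vector, gamma: Vector,
                       beta: Vector, vocab_w: Matrix, vocab_b: Vector) -> Vector:
    """dense -> GELU -> LayerNorm (the "vocab transform") -> vocabulary logits."""
    t = [gelu(v) for v in linear(dense_w, dense_b, h)]
    t = layer_norm(t, gamma, beta)
    return linear(vocab_w, vocab_b, t)


def plain_lm_head(h: Vector, vocab_w: Matrix, vocab_b: Vector) -> Vector:
    """Without the transform: project the hidden state straight onto the vocabulary."""
    return linear(vocab_w, vocab_b, h)


# Toy weights: identity matrices, zero biases, unit LayerNorm scale.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
zeros, ones = [0.0, 0.0, 0.0], [1.0, 1.0, 1.0]
h = [1.0, 2.0, 3.0]
with_transform = bert_style_lm_head(h, identity, zeros, ones, zeros, identity, zeros)
without_transform = plain_lm_head(h, identity, zeros)
```

Without `--vocab_transform`, the student's corresponding layers would start from fresh weights rather than BERT's pre-trained ones.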

transformers | 1,581 | closed | Is there a computation/speed advantage to batching inputs into `TransformerModel` to reduce its number of calls | ## ❓ Questions & Help

<!-- A clear and concise description of the question. -->

For my particular application, I need to have several `output = TransformerModel(inputIDs)` calls per step, from different datasets.

so

```python
output1 = TransformerModel(inputIDs_dataset1)
output2 = TransformerModel(inputIDs_dataset2)
output3 = TransformerModel(inputIDs_dataset3)
```

Initially I preferred to keep these calls separate, as each dataset has a different average sequence length and length distribution, so keeping them separate reduces the amount of padding needed within each batch.

On the other hand, I imagine that the TransformerModel objects have some optimizations that would make it more computationally efficient overall to concatenate all the datasets and make only one call to `TransformerModel`.

My intuition favors the latter approach, but I would like to hear from those who designed it. | 10-20-2019 23:07:27 | 10-20-2019 23:07:27 | This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|
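The padding side of this trade-off can be quantified with a back-of-the-envelope sketch. Assuming each call pads every sequence to the longest one in its batch, it counts pad tokens for separate per-dataset calls versus one concatenated call. The lengths and helper names are hypothetical, and the sketch says nothing about any speed-up from fewer, larger calls, which would have to be measured.

```python
from typing import Iterable, List


def pad_tokens(lengths: List[int]) -> int:
    """Pad tokens needed when every sequence is padded to the batch maximum."""
    return sum(max(lengths) - n for n in lengths) if lengths else 0


def compare(batches: Iterable[List[int]]) -> dict:
    batches = list(batches)
    separate = sum(pad_tokens(b) for b in batches)
    merged = pad_tokens([n for b in batches for n in b])
    return {"separate_calls": separate, "one_call": merged}


# Hypothetical sequence lengths for three datasets with different length profiles.
stats = compare([[10, 12, 11], [50, 48, 52], [200, 190, 210]])
```

With these made-up lengths, three separate calls process 822 token positions (39 of them padding), while one merged call processes 1890 (1107 of them padding).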

transformers | 1,580 | closed | Gradient norm clipping should be done right before calling the optimiser | Right now it's done after each step in the gradient accumulation. What do you think? | 10-20-2019 21:36:20 | 10-20-2019 21:36:20 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1580?src=pr&el=h1) Report

> Merging [#1580](https://codecov.io/gh/huggingface/transformers/pull/1580?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/82f6abd98aaa691ca0adfe21e85a17dc6f386497?src=pr&el=desc) will **not change** coverage.

> The diff coverage is `n/a`.


```diff
@@           Coverage Diff           @@
##           master    #1580   +/-   ##
=======================================
  Coverage   86.16%   86.16%
=======================================
  Files          91       91
  Lines       13593    13593
=======================================
  Hits        11713    11713
  Misses       1880     1880
```

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/1580?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1580?src=pr&el=footer). Last update [82f6abd...abd7110](https://codecov.io/gh/huggingface/transformers/pull/1580?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>Oh yes, great, thanks, Pasquale. Would you mind fixing the `run_glue` and `run_ner` examples as well?<|||||>@thomwolf Done! What's the best way to check this code before merging?<|||||>Thanks a lot!

It should be fine: we have continuous integration tests on `run_glue` and `run_squad`, so if they pass, at least the code runs. |
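Why the placement matters: clipping each micro-batch gradient and then summing is not the same as clipping the accumulated gradient once, which is what a single large batch would see. The pure-Python sketch below clips by global L2 norm (the same rule as `torch.nn.utils.clip_grad_norm_`, minus its small epsilon) and compares the two orders on made-up gradients; the helper names are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def clip_by_global_norm(grad: Vector, max_norm: float) -> Vector:
    """Rescale grad so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    if norm <= max_norm:
        return list(grad)
    return [g * max_norm / norm for g in grad]


def add(a: Vector, b: Vector) -> Vector:
    return [x + y for x, y in zip(a, b)]


micro_grads = [[3.0, 4.0], [3.0, 4.0]]  # two accumulation steps, each with norm 5
max_norm = 5.0

# Clipping inside the accumulation loop (the behaviour described in the PR).
per_step = [0.0, 0.0]
for g in micro_grads:
    per_step = add(per_step, clip_by_global_norm(g, max_norm))

# Accumulate first, clip once right before the optimizer step (the PR's proposal).
accumulated = [0.0, 0.0]
for g in micro_grads:
    accumulated = add(accumulated, g)
clipped_once = clip_by_global_norm(accumulated, max_norm)
```

Per-step clipping leaves an update of norm 10 here, twice `max_norm`. In a PyTorch loop the fix amounts to calling `torch.nn.utils.clip_grad_norm_(model.parameters(), max_grad_norm)` once, inside the branch that runs every `gradient_accumulation_steps` steps, just before `optimizer.step()`.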

transformers | 1,579 | closed | seq2seq with gpt2 | Hi,

I want to build a seq2seq model from GPT-2. If I change the script "run_lm_finetuning.py" so that it takes a sequence, turns it into context ids, lets the model generate another sequence (like the "run_generation.py" code), and then minimizes the cross-entropy loss, would this create a seq2seq model? I greatly appreciate your help. Thanks a lot. | 10-20-2019 17:40:48 | 10-20-2019 17:40:48 | Merging with #1506 |

transformers | 1,578 | closed | distilled gpt2 to be added to run_generation and run_lm_finetuning | Hi

I would greatly appreciate it if distilled GPT-2 were also added to the scripts above, thanks | 10-20-2019 15:37:12 | 10-20-2019 15:37:12 | Hi, DistilGPT-2 is considered to be a checkpoint of GPT-2 in our library (unlike DistilBERT). You can already use DistilGPT-2 for both of these scripts with the following:

```bash

python run_generation.py --model_type=gpt2 --model_name_or_path=distilgpt2

``` |

transformers | 1,577 | closed | Add feature #1572 which gives support for multiple candidate sequences | **Multiple candidate sequences can be generated by setting ```num_samples > 1``` (still 1 by default).**

EXAMPLE with ```num_samples == 2``` for a GPT2 model:

```

INPUT:

Why did the chicken

OUTPUT:

cross the road <eoq> To go to the other side. <eoa>

eat food <eoq> Because it was hungry <eoa>

```

(the above is illustrative, with some words changed from the actual output)

**UPDATE:** Multiple candidate sequences can now be generated with _repetition penalty_ and ```top_k_top_p_filtering``` applied separately to each candidate. This allows for independent probability distributions across candidate sequences.

~Samples are generated with replacement to allow for sequences that have similar tokens at the same index (e.g. [CLS], stopwords, punctuations).~

~When ```temperature == 0```, the tokens returned are the top ```num_samples``` logits (first sample gets top 1, second gets top 2, and so on). I realize this might not be the best implementation because it doesn't allow for similar tokens at the same index across samples. I will consider later changing this to just returning ```num_samples``` copies of the top 1 logits (argmax).~ | 10-20-2019 15:27:47 | 10-20-2019 15:27:47 | Please note that main() now returns a list with ```num_samples``` elements inside.

Because of this, the test for run_generation.py should be updated to test for ```length``` for each element within the list. This explains why **build_py3_torch** test failed.

I will update ```ExamplesTests.test_generation``` to reflect the new output format.<|||||># [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1577?src=pr&el=h1) Report

> Merging [#1577](https://codecov.io/gh/huggingface/transformers/pull/1577?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/ef1b8b2ae5ad1057154a126879f7eb8de685f862?src=pr&el=desc) will **not change** coverage.

> The diff coverage is `n/a`.


```diff
@@           Coverage Diff           @@
##           master    #1577   +/-   ##
=======================================
  Coverage   86.17%   86.17%
=======================================
  Files          91       91
  Lines       13595    13595
=======================================
  Hits        11715    11715
  Misses       1880     1880
```

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/1577?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1577?src=pr&el=footer). Last update [ef1b8b2...17dd64e](https://codecov.io/gh/huggingface/transformers/pull/1577?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>Latest commit 17dd64e now applies repetition penalty and ```top_k_top_p_filtering``` to each candidate sequence separately.<|||||>Thanks @enzoampil

Superseded by https://github.com/huggingface/transformers/pull/1333 which was just merged to master.

Let me know if this works for your use case. |
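The per-candidate behaviour described in the update above can be sketched without torch: every candidate keeps its own history, so the repetition penalty and the top-k filter are applied to its own logits before sampling. The function names are hypothetical, top-p filtering is omitted for brevity, and the penalty uses the sign-aware rule (divide positive logits, multiply negative ones) so that it always lowers a seen token's score; this may differ in detail from the code in the PR.

```python
import math
import random
from typing import List, Set


def apply_repetition_penalty(logits: List[float], seen: Set[int], penalty: float) -> List[float]:
    """Lower the score of every token this candidate has already produced."""
    out = list(logits)
    for tok in seen:
        out[tok] = out[tok] / penalty if out[tok] > 0 else out[tok] * penalty
    return out


def top_k_filter(logits: List[float], k: int) -> List[float]:
    """Keep the k highest logits (ties with the k-th are kept); set the rest to -inf."""
    if k <= 0 or k >= len(logits):
        return list(logits)
    kth = sorted(logits, reverse=True)[k - 1]
    return [x if x >= kth else -math.inf for x in logits]


def sample(logits: List[float], rng: random.Random) -> int:
    m = max(logits)
    weights = [math.exp(x - m) for x in logits]  # exp(-inf) == 0.0, so filtered tokens are never drawn
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


def step_all_candidates(candidates: List[List[int]], logits_per_candidate: List[List[float]],
                        penalty: float, k: int, rng: random.Random) -> None:
    """Extend each candidate by one token, using only that candidate's own history."""
    for seq, logits in zip(candidates, logits_per_candidate):
        filtered = top_k_filter(apply_repetition_penalty(logits, set(seq), penalty), k)
        seq.append(sample(filtered, rng))


rng = random.Random(0)
candidates = [[0], [1]]  # hypothetical token ids already generated by two candidates
step_all_candidates(candidates, [[10.0, 10.0, -100.0], [10.0, 10.0, -100.0]],
                    penalty=100.0, k=1, rng=rng)
```

With identical logits, each candidate is pushed away from its own previous token, so the two candidates diverge.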

transformers | 1,576 | closed | evaluating on race dataset with checkpoints fine tuned on roberta with fairseq | ## ❓ Questions & Help

<!-- A clear and concise description of the question. -->

I fine-tuned a model on the RACE dataset with RoBERTa, following the fairseq instructions, and got this result:

| epoch 004 | valid on 'valid' subset: | loss 0.913 | nll_loss 0.003 | ppl 1.00 | num_updates 21849 | best_accuracy 0.846563 | accuracy 0.836129


| saved checkpoint checkpoints/checkpoint4.pt (epoch 4 @ 21849 updates) (writing took 145.8246190547943 seconds)

| done training in 76377.9 seconds

But when I loaded the weights into transformers with the convert_roberta_original_pytorch_checkpoint_to_pytorch script:

python convert_roberta_original_pytorch_checkpoint_to_pytorch.py --roberta_checkpoint_path ../pytorch-transformers-master/data/roberta-best-checkpoint/ --pytorch_dump_folder_path ../pytorch-transformers-master/data/roberta-best-checkpoint/

and then evaluated on the RACE dataset, I got terrible results on the dev set:

model =data/models_roberta_race/

total batch size=8

train num epochs=5

fp16 =False

max seq length =512

eval_acc = 0.4808676079394311

eval_loss = 1.352066347319484

and on test set:

model =data/models_roberta_race/

total batch size=8

train num epochs=5

fp16 =False

max seq length =512

eval_acc = 0.6015403323875153

eval_loss = 1.3087183478393092

I don't know why. Could anyone help? Thank you! | 10-20-2019 13:37:25 | 10-20-2019 13:37:25 | Did you get any improvement?

There is a huge gap between the eval and test accuracy.

<|||||>Use `--classification-head` when converting the models.<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

<|||||>I am unable to reproduce the results on the RACE dataset. If anyone has been able to reproduce them, could you kindly share the weights of the fine-tuned model? |

transformers | 1,575 | closed | use gpt2 as a seq2seq model | Hi

could you please assist me and show me an example of how I can use the GPT-2 language model's decoding method to train a seq2seq model? Thanks a lot | 10-20-2019 12:04:19 | 10-20-2019 12:04:19 | Merging with #1506 |

transformers | 1,574 | closed | Why the output is same within a batch use BertForSequenceClassification? | ## ❓ Questions & Help

I use BertForSequenceClassification for a classification task, but the outputs within a batch become identical after just 2 or 3 batches, while the values differ between batches. Really strange.

batch 1 output:

[-0.5966, 0.6081],

[-0.4659, 0.3766],

[-0.3595, 0.1334],

[-0.4178, 0.6873],

[-0.3884, 0.2640],

[-0.5017, 0.3465],

[-0.5978, 0.4961],

[-0.3146, 0.6879],

[-0.6525, 0.2702],

[-0.2500, 0.1232],

[-0.3137, 0.4212],

[-0.2663, 0.5169],

[-0.5225, 0.7992],

[-0.4844, 0.1942],

[-0.1459, 0.4033],

[-0.9007, 0.5122],

[-0.5833, 0.8187],

[-0.5552, 0.1253],

[-0.5420, -0.1123]], device='cuda:0', grad_fn=<AddmmBackward>))

2:

(tensor(1.2256, device='cuda:0', grad_fn=<NllLossBackward>), tensor([[ 0.7105, -0.8978],

[ 0.7925, -0.9382],

[ 0.6098, -0.9100],

[ 0.7522, -0.9534],

[ 0.7706, -0.9142],

[ 0.7778, -0.9246],

[ 0.7703, -0.8327],

[ 0.5850, -0.8817],

[ 0.6266, -0.9271],

[ 0.8061, -0.8157],

[ 0.8036, -0.9927],

[ 0.7619, -0.9277],

[ 0.7773, -0.7931],

[ 0.8458, -0.8186],

[ 0.6291, -0.8925],

[ 0.5919, -0.8709],

[ 0.6222, -0.9173],

[ 0.8290, -0.9817],

[ 0.7155, -0.9171],

[ 0.8107, -0.9364]], device='cuda:0', grad_fn=<AddmmBackward>))

3

(tensor(0.7688, device='cuda:0', grad_fn=<NllLossBackward>), tensor([[-0.7892, 0.5464],

[-0.7873, 0.5431],

[-0.7914, 0.5424],

[-0.7938, 0.5448],

[-0.7934, 0.5449],

[-0.7876, 0.5430],

[-0.7973, 0.5446],

[-0.7905, 0.5430],

[-0.7924, 0.5451],

[-0.7900, 0.5438],

[-0.7879, 0.5449],

[-0.7869, 0.5408],

[-0.7924, 0.5458],

[-0.7928, 0.5436],

[-0.7954, 0.5469],

[-0.7900, 0.5429],

[-0.7945, 0.5453],

[-0.8027, 0.5492],

[-0.7937, 0.5437],

[-0.7934, 0.5506]], device='cuda:0', grad_fn=<AddmmBackward>))

(tensor(1.1733, device='cuda:0', grad_fn=<NllLossBackward>), tensor([[ 1.3647, -0.3074],

[ 1.3588, -0.2927],

[ 1.3581, -0.2915],

[ 1.3628, -0.3009],

[ 1.3625, -0.3001],

[ 1.3630, -0.3016],

[ 1.3666, -0.3157],

[ 1.3604, -0.2953],

[ 1.3655, -0.3108],

[ 1.3604, -0.2942],

[ 1.3623, -0.3041],

[ 1.3555, -0.2866],

[ 1.3600, -0.2943],

[ 1.3654, -0.3091],

[ 1.3628, -0.3004],

[ 1.3658, -0.3080],

[ 1.3643, -0.3041],

[ 1.3599, -0.2967],

[ 1.3629, -0.3024],

[ 1.3688, -0.3206]], device='cuda:0', grad_fn=<AddmmBackward>))

<!-- A clear and concise description of the question. -->

| 10-20-2019 07:54:24 | 10-20-2019 07:54:24 | Hello, could you provide a script so that we may better understand the problem here?<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|
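A quick way to quantify the symptom in the batch-output question above is to measure how much the logits vary across the examples of a batch: in the third printed batch the per-class spread is tiny compared with the first. The sketch below (pure Python, hypothetical helper name, using rows copied from the printed tensors) computes the per-column standard deviation of a batch of logits; it only detects the collapse and does not explain its cause.

```python
import statistics
from typing import List


def per_class_spread(logits: List[List[float]]) -> List[float]:
    """Population standard deviation of each logit column across the batch."""
    return [statistics.pstdev(column) for column in zip(*logits)]


# First three rows of the first and third batches printed in the issue.
batch_1 = [[-0.5966, 0.6081], [-0.4659, 0.3766], [-0.3595, 0.1334]]
batch_3 = [[-0.7892, 0.5464], [-0.7873, 0.5431], [-0.7914, 0.5424]]
spread_1 = per_class_spread(batch_1)
spread_3 = per_class_spread(batch_3)
```

If the spread stays near zero for every batch, the classifier is effectively ignoring its input; things worth checking include the learning rate and whether the inputs and attention masks actually differ across examples.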

transformers | 1,573 | closed | GPT2 attention mask and output masking | ## ❓ Questions & Help

<!-- A clear and concise description of the question. -->

I have a couple of questions:

1. The original GPT-2 did not pad sequences, so it didn't need an attention mask. But in other cases, where our input sequences are short and we pad them, don't we need an attention mask?

2. I have left-padded the labels with -1. In the cost function, how do I skip the padded label positions? And likewise, how do I skip the padded positions in the logits? | 10-20-2019 05:31:35 | 10-20-2019 05:31:35 | Closing this because I found my answer<|||||>Hi, would you mind sharing the answer you found? Thank you so much!<|||||>Sorry for the delay. GPT-2 was trained as a causal language model (CLM) on fixed-size blocks of data, so there was no need for an attention mask (that is what I understood). |
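For the second question, the usual approach is to leave label positions equal to the padding label (-1 here) out of the cross-entropy average; for padded inputs, an attention mask with 1 for real tokens and 0 for padding is the usual companion. A pure-Python sketch of both, with hypothetical helper names and toy logits, assuming logits and labels are already aligned position by position:

```python
import math
from typing import List

PAD_LABEL = -1  # the padding label used in the question


def attention_mask(input_ids: List[int], pad_id: int) -> List[int]:
    """1 for real tokens, 0 for padding (pad_id is whatever id the inputs were padded with)."""
    return [0 if t == pad_id else 1 for t in input_ids]


def masked_cross_entropy(logits: List[List[float]], labels: List[int]) -> float:
    """Mean negative log-likelihood over positions whose label is not PAD_LABEL."""
    total, count = 0.0, 0
    for row, label in zip(logits, labels):
        if label == PAD_LABEL:
            continue  # padded position: contributes neither loss nor count
        m = max(row)
        log_z = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_z - row[label]
        count += 1
    return total / count if count else 0.0
```

Because padded positions are skipped entirely, whatever the model outputs there (for example the extreme first row in the test) has no effect on the loss.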

transformers | 1,572 | closed | Can we generate multiple possible sentences using GPT? | Hi,

Is there any way to generate multiple candidate text sequences using the pretrained generators? | 10-20-2019 04:46:35 | 10-20-2019 04:46:35 | @zhaoxy92 I happen to have a use case for this as well. I'll add this feature to ```run_generation.py```.<|||||>@zhaoxy92 Added this functionality in ```run_generation.py```. You can set the number of candidates generated with the argument ```num_samples```, which is set to 1 by default.<|||||>I think you need to change `top_k_top_p_filtering()` as well.<|||||>@s-js not sure why we'd have to change ```top_k_top_p_filtering()```, since the sampling only happens in ```sample_sequence()```. ```top_k_top_p_filtering()``` only filters the logits, so we can still generate multiple candidate sequences (independently of the filtered distribution).<|||||>@enzoampil Sorry, I meant the repetition penalty (https://github.com/enzoampil/transformers/blob/7facbbe9871fe458b530ae8ce1b4bfefabd47c74/examples/run_generation.py#L142). Each sample has a different set of seen tokens.

At first I thought you were doing it inside `top_k_top_p_filtering()`.<|||||>Hi, thanks very much for adding this functionality – I'm trying to implement this into my own notebook and hitting a tensor mismatch error I can't figure out. I hope this is the right forum to post this question, since I'm using the new functionality you created.

At line 150: `generated = torch.cat((generated, next_token.unsqueeze(0)), dim=1)`

I'm getting this error:

```RuntimeError: invalid argument 0: Sizes of tensors must match except in dimension 1. Got 1 and 3 in dimension 0 at /opt/conda/conda-bld/pytorch_1556653114079/work/aten/src/THC/generic/THCTensorMath.cu:71```

The debugger shows me the sizes:

generated = (3,37)

next_token.unsqueeze(0) = (1,3)

So I figure that next_token tensor shape ought to be (3,1) instead, so I tried changing the line to `next_token.unsqueeze(1)` instead. When I do that I get a `CUDA error: device-side assert triggered`. Did that change fix my problem or just cause a new one?

Any ideas are greatly appreciated, thank you!<|||||>hi @buttchurch , did you run ```run_generation.py``` (with the multiple sentence functionality) as a CLI? It should work if you run it from my [fork](https://github.com/enzoampil/transformers/blob/7facbbe9871fe458b530ae8ce1b4bfefabd47c74/examples/run_generation.py#L142). Can you please post here the exact script you ran and the complete error message.

Also, my pull [request](https://github.com/enzoampil/transformers/blob/7facbbe9871fe458b530ae8ce1b4bfefabd47c74/examples/run_generation.py#L142) shows ```generated = torch.cat((generated, next_token.unsqueeze(1)), dim=1)``` in the last line of ```sample_sequence``` so I'm not sure where you got that line 150 code snippet.<|||||>@s-js noted on repetition penalty support. I'll try to find time for this within the next week.<|||||>hi @enzoampil, thanks for such a quick response! I still don't understand navigating git forks and branches and the different versions of git projects very well, so I have been just going off the main code I find in the transformers github.

It's probably not the 'right' way to do it, but I've pulled my own jupyter notebook together from a couple of the transformer example.py files, rather than using the run_generation.py. I think it might be way too long to post here, but I will now try implementing the changes in your fork to my notebook. I'll report back – thanks again for your help, and for creating this new functionality :)

Edit: It works! Seems like the important bit I was missing was `replacement=True` on the previous line.<|||||>@buttchurch glad it works for you :) Very welcome!<|||||>@s-js Latest [commit](https://github.com/huggingface/transformers/commit/17dd64ed939e09c1c9b1fa666390dd69a4731387) now implements _repetition penalty_ and ```top_k_top_p_filtering``` separately per candidate sequence generated.<|||||>We just merged https://github.com/huggingface/transformers/pull/1333 to master (+ subsequent fixes), can you check that it does what you guys want?

I'll close the issue for now, re-open if needed. |
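The repetition-penalty point raised in this thread is easy to get wrong when several candidates are sampled at once: each row should be penalised only for the tokens *that row* has generated. Below is a minimal, dependency-free sketch. It assumes a batch of logit rows and one token history per row. `apply_repetition_penalty` and `sample_next_tokens` are illustrative names, not the script's functions.

The sketch uses the sign-aware rule from the CTRL paper (divide positive logits, multiply negative ones), so the penalty never makes a negative logit more likely. It draws one token per row, which matches the `(num_samples, 1)` shape that concatenating with `generated` along `dim=1` expects.

```python
import math
import random
from typing import List

def apply_repetition_penalty(logits: List[List[float]], histories: List[List[int]], penalty: float) -> List[List[float]]:
    """Penalise, row by row, only the tokens that row has already produced."""
    out = []
    for row, seen in zip(logits, histories):
        row = list(row)  # copy so the caller's logits are untouched
        for tok in set(seen):
            row[tok] = row[tok] / penalty if row[tok] > 0 else row[tok] * penalty
        out.append(row)
    return out

def sample_next_tokens(logits: List[List[float]], rng: random.Random) -> List[List[int]]:
    """Draw one token per row from softmax(row); result has shape (num_samples, 1)."""
    next_tokens = []
    for row in logits:
        m = max(row)
        weights = [math.exp(x - m) for x in row]
        next_tokens.append(rng.choices(range(len(row)), weights=weights, k=1))
    return next_tokens

logits = [[2.0, 1.0, -1.0], [2.0, 1.0, -1.0]]
histories = [[0], [2]]  # row 0 already emitted token 0, row 1 emitted token 2
penalised = apply_repetition_penalty(logits, histories, penalty=2.0)
print(penalised)  # [[1.0, 1.0, -1.0], [2.0, 1.0, -2.0]]
generated = [h + t for h, t in zip(histories, sample_next_tokens(penalised, random.Random(0)))]
```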

transformers | 1,571 | closed | Pytorch Transformers no longer loads SciBert weights, getting `UnicodeDecodeError`. Worked in pytorch_pretrained_bert | ## 🐛 Bug

<!-- Important information -->

When using the old pytorch_pretrained_bert library, I could point the model with `from_pretrained` to the SciBert weights.tar.gz file, and it would load it just fine. However, if I try this with PyTorch Transformers, I get this error.

```

UnicodeDecodeError: 'utf-8' codec can't decode byte 0x8b in position 1: invalid start byte

```

Model I am using: Bert

Language I am using the model on (English, Chinese....): English

The problem arise when using:

* [ X] my own modified scripts: (give details)

I have a colab notebook that loads the SciBert weights using the old pytorch_pretrained_bert library, and the new Transformers library.

## To Reproduce

Steps to reproduce the behavior:

Here is the code

```

import requests

import os

import tarfile

import zipfile

import multiprocess

import json

if not os.path.exists('TempDir'):
    os.makedirs('TempDir')

#Download SciBert weights and vocab file

import urllib.request

# Download the file from `url` and save it locally under `file_name`:

urllib.request.urlretrieve('https://s3-us-west-2.amazonaws.com/ai2-s2-research/scibert/pytorch_models/scibert_scivocab_uncased.tar', 'scibert.tar')

#Untar weights

import tarfile

tar = tarfile.open('scibert.tar', "r:")

tar.extractall()

tar.close()

#Extract weights

tar = tarfile.open('scibert_scivocab_uncased/weights.tar.gz', "r:gz")

tar.extractall('scibert_scivocab_uncased')

tar.close()

os.listdir('scibert_scivocab_uncased')

!pip install pytorch-pretrained-bert

from pytorch_pretrained_bert import BertModel as OldBertModel

#Works

oldBert = OldBertModel.from_pretrained('/content/scibert_scivocab_uncased/weights.tar.gz', cache_dir= 'TempDir')

!pip install transformers

from transformers import BertModel as NewBertModel

#Doesn't work

newBert = NewBertModel.from_pretrained('/content/scibert_scivocab_uncased/weights.tar.gz', cache_dir= 'TempDir')

```

<!-- If you have a code sample, error messages, stack traces, please provide it here as well. -->

Here is the error

```

---------------------------------------------------------------------------

UnicodeDecodeError Traceback (most recent call last)

<ipython-input-14-7e88a8c51c18> in <module>()

----> 1 newBert = NewBertModel.from_pretrained('/content/scibert_scivocab_uncased/weights.tar.gz', cache_dir= 'TempDir')

3 frames

/usr/local/lib/python3.6/dist-packages/transformers/modeling_utils.py in from_pretrained(cls, pretrained_model_name_or_path, *model_args, **kwargs)

285 cache_dir=cache_dir, return_unused_kwargs=True,

286 force_download=force_download,

--> 287 **kwargs

288 )

289 else:

/usr/local/lib/python3.6/dist-packages/transformers/configuration_utils.py in from_pretrained(cls, pretrained_model_name_or_path, **kwargs)

152

153 # Load config

--> 154 config = cls.from_json_file(resolved_config_file)

155

156 if hasattr(config, 'pruned_heads'):

/usr/local/lib/python3.6/dist-packages/transformers/configuration_utils.py in from_json_file(cls, json_file)

184 """Constructs a `BertConfig` from a json file of parameters."""

185 with open(json_file, "r", encoding='utf-8') as reader:

--> 186 text = reader.read()

187 return cls.from_dict(json.loads(text))

188

/usr/lib/python3.6/codecs.py in decode(self, input, final)

319 # decode input (taking the buffer into account)

320 data = self.buffer + input

--> 321 (result, consumed) = self._buffer_decode(data, self.errors, final)

322 # keep undecoded input until the next call

323 self.buffer = data[consumed:]

UnicodeDecodeError: 'utf-8' codec can't decode byte 0x8b in position 1: invalid start byte

```

For convenience, here is a colab notebook with the code that you can run

https://colab.research.google.com/drive/1xzYYM1_Vo4wRMicBfnfzfAg_47SitwQi

## Expected behavior

pretrained weights should load just fine.

## Environment

* OS: Google Colab

* Python version:

* PyTorch version:

* PyTorch Transformers version (or branch): Current

* Using GPU ?

* Distributed of parallel setup ?

* Any other relevant information:

| 10-19-2019 22:11:59 | 10-19-2019 22:11:59 | `from_pretrained` expects the following files: `vocab.txt`, `config.json` and `pytorch_model.bin`.

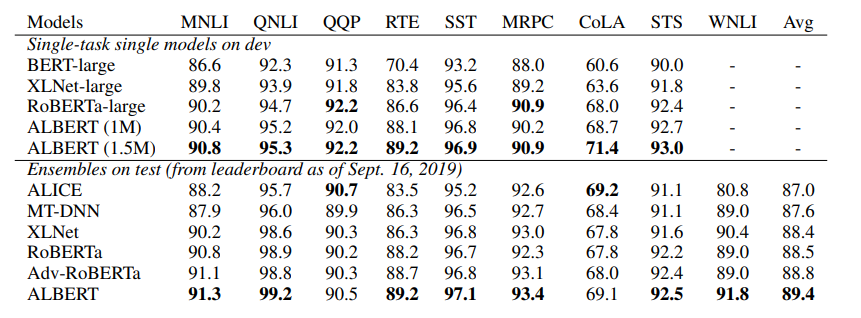

Thus, you only need to extract the `weights.tar.gz` archive.

Then rename `bert_config.json` to `config.json` and pass the path name to the `from_pretrained` method: this should be `/content/scibert_scivocab_uncased` in your example :) |
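The steps in the answer above can be scripted. Below is a minimal sketch using only the standard library. It assumes the archive layout described in this thread: a `weights.tar.gz` containing `bert_config.json` and `pytorch_model.bin`, with `vocab.txt` already sitting next to the archive. `prepare_bert_dir` is a hypothetical helper.

```python
import os
import tarfile

def prepare_bert_dir(model_dir: str) -> str:
    """Unpack weights.tar.gz in place and rename bert_config.json to config.json."""
    archive = os.path.join(model_dir, "weights.tar.gz")
    if os.path.exists(archive):
        with tarfile.open(archive, "r:gz") as tar:
            tar.extractall(model_dir)
    old_cfg = os.path.join(model_dir, "bert_config.json")
    new_cfg = os.path.join(model_dir, "config.json")
    if os.path.exists(old_cfg) and not os.path.exists(new_cfg):
        os.rename(old_cfg, new_cfg)
    return model_dir  # pass this directory, not the .tar.gz, to from_pretrained

# Usage (assuming the layout above):
# model = BertModel.from_pretrained(prepare_bert_dir("scibert_scivocab_uncased"))
```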

transformers | 1,570 | closed | Fix Roberta on TPU | Fixes #1569

- Revert tf.print() to logger, since tf.print() is an unsupported op on TPU. | 10-19-2019 21:38:10 | 10-19-2019 21:38:10 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1570?src=pr&el=h1) Report

> Merging [#1570](https://codecov.io/gh/huggingface/transformers/pull/1570?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/82f6abd98aaa691ca0adfe21e85a17dc6f386497?src=pr&el=desc) will **not change** coverage.

> The diff coverage is `100%`.


```diff

@@ Coverage Diff @@

## master #1570 +/- ##

=======================================

Coverage 86.16% 86.16%

=======================================

Files 91 91

Lines 13593 13593

=======================================

Hits 11713 11713

Misses 1880 1880

```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/1570?src=pr&el=tree) | Coverage Δ | |
|---|---|---|
| [transformers/modeling\_tf\_roberta.py](https://codecov.io/gh/huggingface/transformers/pull/1570/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3RmX3JvYmVydGEucHk=) | `100% <100%> (ø)` | :arrow_up: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/1570?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1570?src=pr&el=footer). Last update [82f6abd...55c3ae1](https://codecov.io/gh/huggingface/transformers/pull/1570?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>Hum this situation is a bit annoying because we switched from `logger.error` to `tf.print` to solve #1350<|||||>Is there a specific reason why we have such a warning message for Roberta but not for other models? All models based on BERT require the special tokens.

I was having the same issues as #1350 on my end using the logger (lots of zmq, operationnotallowed errors). The solution for me was to remove the entire warning message altogether.

Is that viable in this scenario? <|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|

transformers | 1,569 | closed | TFRobertaForSequenceClassification fails on TPU on Transformers >2.0.0 | ## 🐛 Bug

<!-- Important information -->

Model I am using (TFRobertaForSequenceClassification):

Language I am using the model on (English):

The problem arise when using:

* [ ] the official example scripts: (give details)

* [x] my own modified scripts: (give details)

The tasks I am working on is:

* [ ] an official GLUE/SQUaD task: (give the name)

* [x] my own task or dataset: (give details)

## To Reproduce

Steps to reproduce the behavior:

1. Use a TPU runtime on colab

2. Run the following code:

```python
import numpy as np
import tensorflow as tf
from transformers import TFRobertaForSequenceClassification

resolver = tf.distribute.cluster_resolver.TPUClusterResolver()
tf.config.experimental_connect_to_cluster(resolver)
tf.tpu.experimental.initialize_tpu_system(resolver)
strategy = tf.distribute.experimental.TPUStrategy(resolver)

with tf.device('/job:worker'):
    with strategy.scope():
        # model = TFRobertaForSequenceClassification.from_pretrained('bert-large-uncased-whole-word-masking',num_labels = (len(le.classes_)))
        model = TFRobertaForSequenceClassification.from_pretrained('roberta-large', num_labels=2)
        print('model loaded')
        # Random token ids, with <s> (id 0) first and </s> (id 2) last in every sequence.
        inp = np.random.randint(10, 100, size=(12800, 64))
        inp[:, 0] = 0
        inp[:, 63] = 2
        labels = np.random.randint(2, size=(12800, 1))
        print('data generated')
        optimizer = tf.keras.optimizers.Adam(learning_rate=3e-5, epsilon=1e-08, clipnorm=1.0)
        loss = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)
        metric = tf.keras.metrics.SparseCategoricalAccuracy('accuracy')
        model.compile(optimizer=optimizer, loss=loss, metrics=[metric])
        print('starting fitting')
        # model.fit([train_input_ids,train_input_masks],y_train,epochs = 3,batch_size = 64,validation_data=([test_input_ids,test_input_masks], y_test),verbose=1)
        model.fit(inp, labels, epochs=2, batch_size=64, verbose=1)
```

## Environment

* OS: Google Colab TPU runtime

* Python version:3.6

* PyTorch version:NA

* PyTorch Transformers version (or branch):2.1.1

* Using GPU ? No

* Distributed of parallel setup ? Yes

## Additional context

The following error gets thrown when calling model.fit()

```

InvalidArgumentError Traceback (most recent call last)

<ipython-input-4-b77065bb89ae> in <module>()

15 print('starting fitting')

16 # model.fit([train_input_ids,train_input_masks],y_train,epochs = 3,batch_size = 64,validation_data=([test_input_ids,test_input_masks], y_test),verbose=1)

---> 17 model.fit(inp,labels,epochs = 2,batch_size = 64,verbose=1)

11 frames

/usr/local/lib/python3.6/dist-packages/six.py in raise_from(value, from_value)

InvalidArgumentError: Compilation failure: Detected unsupported operations when trying to compile graph tf_roberta_for_sequence_classification_roberta_cond_true_122339[] on XLA_TPU_JIT: PrintV2 (No registered 'PrintV2' OpKernel for XLA_TPU_JIT devices compatible with node {{node PrintV2}}

. Registered: device='CPU'

){{node PrintV2}}

[[tf_roberta_for_sequence_classification/roberta/cond]]

TPU compilation failed

[[tpu_compile_succeeded_assert/_5504150486074133972/_3]]

Additional GRPC error information:

{"created":"@1571518085.015232162","description":"Error received from peer","file":"external/grpc/src/core/lib/surface/call.cc","file_line":1039,"grpc_message":" Compilation failure: Detected unsupported operations when trying to compile graph tf_roberta_for_sequence_classification_roberta_cond_true_122339[] on XLA_TPU_JIT: PrintV2 (No registered 'PrintV2' OpKernel for XLA_TPU_JIT devices compatible with node {{node PrintV2}}\n\t. Registered: device='CPU'\n){{node PrintV2}}\n\t [[tf_roberta_for_sequence_classification/roberta/cond]]\n\tTPU compilation failed\n\t [[tpu_compile_succeeded_assert/_5504150486074133972/_3]]","grpc_status":3} [Op:__inference_distributed_function_154989]

Function call stack:

distributed_function -> distributed_function

```

The cause of this error seems to be the tf.print() call in the following code, which is not supported on TPU.

https://github.com/huggingface/transformers/blob/82f6abd98aaa691ca0adfe21e85a17dc6f386497/transformers/modeling_tf_roberta.py#L78-L80

| 10-19-2019 21:30:37 | 10-19-2019 21:30:37 | This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|
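The linked PR and its discussion revolve around keeping the special-token warning out of the compiled graph. One way to do that, sketched below as an assumption rather than the library's actual fix, is to validate the input ids in plain Python on the host before calling `model.fit`. That way nothing like `tf.print` has to run on the TPU.

The sketch assumes RoBERTa's `<s>` token has id 0, which matches the `inp[:,0]=0` used in the reproduction above. `check_bos` is a hypothetical helper; call it as `check_bos(inp.tolist())` before fitting.

```python
import logging
from typing import Sequence

logger = logging.getLogger(__name__)

def check_bos(batch: Sequence[Sequence[int]], bos_id: int = 0) -> bool:
    """Return True if every sequence starts with bos_id; log a warning otherwise.

    Runs eagerly on the host, so no print op ends up in the TPU graph.
    """
    bad = [i for i, seq in enumerate(batch) if not seq or seq[0] != bos_id]
    if bad:
        logger.warning(
            "%d of %d sequences do not start with the <s> token (id %d); "
            "RoBERTa expects inputs encoded with special tokens.",
            len(bad), len(batch), bos_id,
        )
    return not bad
```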

transformers | 1,568 | closed | Fix hanging when loading pretrained models | - Fix hanging when loading pretrained models from the cache without having internet access. This is a widespread issue on supercomputers whose internal compute nodes are firewalled. | 10-19-2019 20:20:02 | 10-19-2019 20:20:02 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1568?src=pr&el=h1) Report

> Merging [#1568](https://codecov.io/gh/huggingface/transformers/pull/1568?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/82f6abd98aaa691ca0adfe21e85a17dc6f386497?src=pr&el=desc) will **decrease** coverage by `0.02%`.

> The diff coverage is `100%`.


```diff

@@ Coverage Diff @@

## master #1568 +/- ##

==========================================

- Coverage 86.16% 86.14% -0.03%

==========================================

Files 91 91

Lines 13593 13593

==========================================

- Hits 11713 11710 -3

- Misses 1880 1883 +3

```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/1568?src=pr&el=tree) | Coverage Δ | |
|---|---|---|
| [transformers/file\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/1568/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL2ZpbGVfdXRpbHMucHk=) | `74.17% <100%> (ø)` | :arrow_up: |
| [transformers/tests/modeling\_tf\_common\_test.py](https://codecov.io/gh/huggingface/transformers/pull/1568/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL3Rlc3RzL21vZGVsaW5nX3RmX2NvbW1vbl90ZXN0LnB5) | `95.21% <0%> (-1.6%)` | :arrow_down: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/1568?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1568?src=pr&el=footer). Last update [82f6abd...a2c8c8e](https://codecov.io/gh/huggingface/transformers/pull/1568?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>Ok, LGTM thanks |
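The general pattern behind this kind of fix is to bound the network check with a timeout and fall back to the local cache when the check fails. Below is a minimal standard-library sketch, assuming a cache directory keyed by file name. `resolve_with_cache` is a hypothetical function, not the code in `file_utils.py`. A real implementation would also compare ETags to detect stale cached files; that step is omitted here.

```python
import os
import urllib.request

def resolve_with_cache(url: str, cache_dir: str, timeout: float = 10.0) -> str:
    """Return a local path for url, preferring the cache when the network is unreachable."""
    cached = os.path.join(cache_dir, os.path.basename(url))
    try:
        # A HEAD request with a timeout: on a firewalled node this fails fast
        # instead of hanging indefinitely.
        req = urllib.request.Request(url, method="HEAD")
        with urllib.request.urlopen(req, timeout=timeout):
            pass
    except OSError:  # URLError and socket timeouts are both OSError subclasses
        if os.path.exists(cached):
            return cached  # offline: reuse what was downloaded earlier
        raise
    if not os.path.exists(cached):
        os.makedirs(cache_dir, exist_ok=True)
        urllib.request.urlretrieve(url, cached)
    return cached
```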

transformers | 1,567 | closed | Added mixed precision (AMP) to inference benchmark | I added a mixed precision option to the benchmark script and ran it on a DGX Station to get the results. As you can see, we get inference speedups of between 1.2x and 4.5x, depending on model, batch size and sequence length.

**Summary**

| Batch Size | Speedup (XLA only) | Speedup (XLA + AMP) | Min. Seq Len* |
| -------------- | --------------------------- | ------------------------------- | ------------------ |
| 1 | 1.1 ~ 1.9 | 1.4 ~ 2.9 | 512 |
| 2 | 1.1 ~ 1.9 | 1.4 ~ 3.4 | 256 |
| 4 | 1.1 ~ 2.1 | 1.2 ~ 3.8 | 128 |
| 8 | 1.1 ~ 3.1 | 1.2 ~ 4.5 | 64 |

*Min. Seq Len refers to minimum sequence length required to not see **any** performance regression at all. For example, at batch size 1:

* Sequences of 512 tokens see a speedup of 1.4~2.1x, depending on the model

* Sequences of 256 tokens see a speedup of 0.8~1.2x, depending on the model

Google Sheets with the results [here](https://docs.google.com/spreadsheets/d/1IW7Xbv-yfE8j-T0taqdyoSehca4mNcsyx6u0IXTzSJ4/edit#gid=0). GPU used is a single V100 (16GB). | 10-19-2019 09:02:26 | 10-19-2019 09:02:26 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1567?src=pr&el=h1) Report

> Merging [#1567](https://codecov.io/gh/huggingface/transformers/pull/1567?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/079bfb32fba4f2b39d344ca7af88d79a3ff27c7c?src=pr&el=desc) will **not change** coverage.

> The diff coverage is `n/a`.


```diff

@@ Coverage Diff @@

## master #1567 +/- ##

======================================

Coverage 85.9% 85.9%

======================================

Files 91 91

Lines 13653 13653

======================================

Hits 11728 11728

Misses 1925 1925

```

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/1567?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1567?src=pr&el=footer). Last update [079bfb3...079bfb3](https://codecov.io/gh/huggingface/transformers/pull/1567?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>Any reason why you kept the batch sizes so small? With a V100, you should be able to easily pull off a batch size of 64 for seq len 64. (Perhaps that's what the benchmark script uses as default; I don't know. EDIT: yes, those are the values from the benchmark script. Not sure why, though.) I'm a bit surprised by the relatively small speedup. I've experienced **much** greater speedups when using AMP, but that was on PyTorch with apex.<|||||>@BramVanroy this is for **inference**, hence the emphasis on low batch size.<|||||>Oh, my bad. I was under the impression that the benchmark script included training profiling with PyProf. |

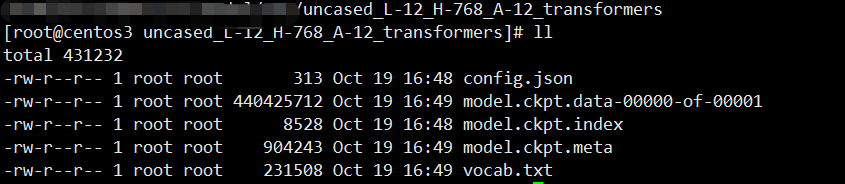

transformers | 1,566 | closed | error load bert model :not found model file | Error content:

OSError: Error no file named ['pytorch_model.bin', 'tf_model.h5', 'model.ckpt.index'] found in directory ./uncased_L-12_H-768_A-12_transformers or `from_tf` set to False

but file exists

<|||||>I don't understand: when do you get this error?<|||||>In order to understand when you've encountered this bug, as suggested by @iedmrc, you need to share the source code that triggers it! And please show your environment (Python, Transformers, PyTorch, TensorFlow versions) too!

> Error content:

> OSError: Error no file named ['pytorch_model.bin', 'tf_model.h5', 'model.ckpt.index'] found in directory ./uncased_L-12_H-768_A-12_transformers or `from_tf` set to False

> but file exists

> <|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

<|||||>I got the same error when loading a TF BERT model:

```

from transformers import BertTokenizer, BertConfig, TFBertForMaskedLM

dir = "/Users/danielk/ideaProjects/farsi-language-models/src/models/perbert_L-12_H-768_A-12/"

tokenizer = BertTokenizer.from_pretrained(dir)

config = BertConfig.from_json_file(dir + '/bert_config.json')

model = TFBertForMaskedLM.from_pretrained(dir, config=config)

```

The error happens in the last line.

```

Traceback (most recent call last):

File "6.2.try_tf_bert_transformers.py", line 8, in <module>

model = TFBertForMaskedLM.from_pretrained(dir, config=config)

File "/Users/danielk/opt/anaconda3/lib/python3.7/site-packages/transformers/modeling_tf_utils.py", line 353, in from_pretrained

[WEIGHTS_NAME, TF2_WEIGHTS_NAME], pretrained_model_name_or_path

OSError: Error no file named ['pytorch_model.bin', 'tf_model.h5'] found in directory /Users/danielk/ideaProjects/farsi-language-models/src/models/perbert_L-12_H-768_A-12/ or `from_pt` set to False

``` |
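When `from_pretrained` raises this `OSError`, it helps to check what the directory actually contains. The file names below are taken from the error messages quoted in this thread, and `describe_model_dir` is a hypothetical helper.

Roughly:
- A PyTorch class looks for `pytorch_model.bin`, or a TF checkpoint named `model.ckpt.index` when `from_tf=True`.
- A TF 2.0 class looks for `tf_model.h5`, or `pytorch_model.bin` when `from_pt=True`.

An original Google checkpoint directory usually holds `bert_model.ckpt.*` files and `bert_config.json`, which match none of these names.

```python
import os
from typing import Dict

# File names quoted in the OSError messages above.
EXPECTED = {
    "pytorch": ["pytorch_model.bin"],
    "pytorch (from_tf=True)": ["model.ckpt.index"],
    "tensorflow 2": ["tf_model.h5"],
    "tensorflow 2 (from_pt=True)": ["pytorch_model.bin"],
}

def describe_model_dir(path: str) -> Dict[str, bool]:
    """Report, for each loading mode, whether its expected weight file is present."""
    present = set(os.listdir(path))
    report = {mode: all(n in present for n in names) for mode, names in EXPECTED.items()}
    report["config.json"] = "config.json" in present
    return report
```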

transformers | 1,565 | closed | How to add the output word vector of bert to my model | ## ❓ Questions & Help

Hello, I am a student who is learning nlp.

Now I want to feed the word vectors output by BERT into my model, but **I can't connect the word vectors to the network**. Could you give me an example program or tutorial that uses a TextCNN or LSTM? You can send an e-mail to **[email protected]** or reply here, please.

Thank you for your kind cooperation!

<!-- A clear and concise description of the question. -->

| 10-19-2019 03:14:58 | 10-19-2019 03:14:58 | This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

<|||||>I think you can find an example [here](https://jalammar.github.io/a-visual-guide-to-using-bert-for-the-first-time/). In more detail, this tutorial uses **BERT** as a **feature extractor**, with a **Logistic Regression** model from [Scikit-learn](https://scikit-learn.org/stable/) on top for the **sentiment analysis** task.

Question: what exactly is the problem? Are you unable to connect the feature vector extracted by BERT to a custom classifier on top? Is the shape of the feature vector fixed?

> ## Questions & Help

> Hello, I am a student who is learning nlp.

> Now I want to use the word vector output by bert to apply to my model, but **I can't connect the word vector to the network**. Could you give me an example program or tutorial about this which use textCNN or LSTM. You can sent e-mail to **[[email protected]](mailto:[email protected])** or reply me, please.

> Thank you for your kind cooperation!<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|
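On the question of connecting BERT's token vectors to a TextCNN: BERT's last hidden state gives one vector per token, shape `(seq_len, hidden_size)` per sentence. A text CNN slides filters over the token axis and max-pools over time, producing one fixed-size feature per filter. The sketch below shows that forward pass on plain lists so the shapes are explicit. It is an illustration only, not a trainable model, and the toy numbers are made up.

In PyTorch the same idea is usually written with `nn.Conv1d`, which expects `(batch, channels, length)`, so BERT's `(batch, seq_len, hidden)` output has to be transposed first.

```python
from typing import List

Matrix = List[List[float]]  # (seq_len, hidden_size): one row per token

def conv_maxpool(tokens: Matrix, filt: Matrix, bias: float = 0.0) -> float:
    """Slide a (width, hidden_size) filter over the tokens, apply ReLU, max-pool over time."""
    width = len(filt)
    best = 0.0  # ReLU outputs are >= 0; also the result if the sequence is shorter than the filter
    for start in range(len(tokens) - width + 1):
        window = tokens[start:start + width]
        score = bias + sum(
            w * x for f_row, t_row in zip(filt, window) for w, x in zip(f_row, t_row)
        )
        best = max(best, max(score, 0.0))
    return best

def textcnn_features(tokens: Matrix, filters: List[Matrix]) -> List[float]:
    """One pooled feature per filter; feed this fixed-size vector to a linear classifier."""
    return [conv_maxpool(tokens, f) for f in filters]

# Toy "BERT output" for 4 tokens with hidden_size 3.
tokens = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 1.0, 1.0]]
filters = [
    [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]],                   # width-2 filter
    [[0.0, 0.0, 1.0], [1.0, 1.0, 1.0], [0.0, 0.0, 0.0]],  # width-3 filter
]
print(textcnn_features(tokens, filters))
```

An LSTM plugs in the same way: it consumes the `(seq_len, hidden_size)` sequence directly, and its final hidden state plays the role of the pooled feature vector.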

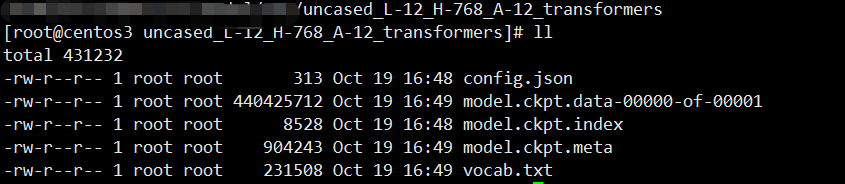

transformers | 1,564 | closed | ALBERT: will it be supported? | will you release an ALBERT model?

It sets a new state of the art.

# 🌟New model addition

ALBERT: A LITE BERT FOR SELF-SUPERVISED LEARNING OF LANGUAGE REPRESENTATIONS

https://arxiv.org/pdf/1909.11942.pdf

## Model description

<!-- Important information -->

## Open Source status

* [ ] the model implementation is available: (give details)

* [ ] the model weights are available: (give details)

## Additional context

<!-- Add any other context about the problem here. -->

| 10-19-2019 02:42:16 | 10-19-2019 02:42:16 | https://github.com/brightmart/albert_zh<|||||>Please direct all your questions to the main albert topic. https://github.com/huggingface/transformers/issues/1370

Please close this current topic. It does not add anything.<|||||>... We should extend the issue template and redirect all ALBERT questions to #1370 😂 |

transformers | 1,563 | closed | The implementation of grad clipping is not correct when gradient accumulation is enabled | torch.nn.utils.clip_grad_norm_ should be applied once, right before optimizer.step(), not after each backward() call.

## 🐛 Bug

<!-- Important information -->

Model I am using (Bert, XLNet....):

Language I am using the model on (English, Chinese....):

The problem arise when using:

* [ ] the official example scripts: (give details)

* [ ] my own modified scripts: (give details)

The tasks I am working on is:

* [ ] an official GLUE/SQUaD task: (give the name)

* [ ] my own task or dataset: (give details)

## To Reproduce

Steps to reproduce the behavior:

1.

2.

3.

<!-- If you have a code sample, error messages, stack traces, please provide it here as well. -->

## Expected behavior

<!-- A clear and concise description of what you expected to happen. -->

## Environment

* OS:

* Python version:

* PyTorch version:

* PyTorch Transformers version (or branch):

* Using GPU ?

* Distributed of parallel setup ?

* Any other relevant information:

## Additional context

<!-- Add any other context about the problem here. -->

| 10-19-2019 00:12:56 | 10-19-2019 00:12:56 | This should be fixed in https://github.com/huggingface/transformers/pull/1580<|||||>yeah @yangyiben please let me know if that merge fixes it<|||||>#1580 is now merged |
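The ordering the issue asks for is:
1. Call `backward()` on every micro-batch.
2. Clip (`torch.nn.utils.clip_grad_norm_`) only once per optimizer update, immediately before `optimizer.step()`, so the norm is computed on the fully accumulated gradient.

Below is a dependency-free sketch of that loop on lists of made-up scalar gradients, clipping by global L2 norm. The function names are illustrative.

```python
import math
from typing import List

def clip_by_global_norm(grads: List[float], max_norm: float) -> List[float]:
    """Scale grads so their global L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm or norm == 0.0:
        return grads
    scale = max_norm / norm
    return [g * scale for g in grads]

def train(micro_batch_grads: List[List[float]], accumulation_steps: int,
          max_norm: float, lr: float, params: List[float]) -> List[float]:
    accumulated = [0.0] * len(params)
    for step, grads in enumerate(micro_batch_grads, start=1):
        # "loss.backward()": add this micro-batch's scaled gradient to the buffer
        accumulated = [a + g / accumulation_steps for a, g in zip(accumulated, grads)]
        if step % accumulation_steps == 0:
            clipped = clip_by_global_norm(accumulated, max_norm)  # clip once, on the full gradient
            params = [p - lr * g for p, g in zip(params, clipped)]  # "optimizer.step()"
            accumulated = [0.0] * len(params)  # "model.zero_grad()"
    return params

print(train([[3.0, 4.0], [3.0, 4.0]], accumulation_steps=2, max_norm=1.0, lr=1.0, params=[0.0, 0.0]))
```

With these numbers, clipping once yields an update of norm 1.0. Clipping each micro-batch separately would instead clip each half-gradient to norm 1.0 and then sum them, giving an update of norm 2.0, which is larger than `max_norm` allows.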

transformers | 1,562 | closed | training BERT on coreference resolution | Hi

I would really appreciate it if you could add code to train BERT on the CoNLL-2012 coreference resolution dataset, thanks | 10-18-2019 14:32:57 | 10-18-2019 14:32:57 | This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

<|||||>There's a newer [approach](https://github.com/mandarjoshi90/coref) using BERT, but it uses TensorFlow 1.14. I wish we could get this into Hugging Face Transformers.

|

transformers | 1,561 | closed | [CLS] & [SEP] tokens missing in documentation | https://github.com/huggingface/transformers/blob/fd97761c5a977fd22df789d2851cf57c7c9c0930/transformers/modeling_bert.py#L1017-L1023

In this example of bert for token classification input sentence is encoded, but [CLS] & [SEP] tokens are not added. Is this intentional or just a typo?

Do I need to add [CLS] & [SEP] tokens when I fine tune base bert for sequence classification or token classification? | 10-18-2019 14:12:41 | 10-18-2019 14:12:41 | @hawkeoni [CLS] and [SEP] tokens are added automatically as long as you use the tokenizer, ```BertTokenizer```<|||||>@enzoampil It doesn't seem to work.

The following code

```python

from transformers import BertTokenizer

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

sentence = "Hello there, General Kenobi!"

print(tokenizer.encode(sentence))

print(tokenizer.cls_token_id, tokenizer.sep_token_id)

```

produces the next output:

[7592, 2045, 1010, 2236, 6358, 16429, 2072, 999]

101 102

As you can see, cls and sep tokens are not in the list.<|||||>@hawkeoni please try ```print(tokenizer.encode(sentence, add_special_tokens=True))```<|||||>@enzoampil I'm sorry, but you're missing the point that the documentation is plainly wrong and misleading. That's why I asked whether the tokens should be added at all.<|||||>@hawkeoni Apologies, yes I did miss your point.

Is this intentional or just a typo? **Looks like a typo since special tokens weren't added. Setting ```add_special_tokens=True``` should make this correct (will add this in).**

Do I need to add [CLS] & [SEP] tokens when I fine tune base bert for sequence classification or token classification? **Yes, I believe this is currently handled by ```load_and_cache_examples``` in the sample training scripts (e.g. ```run_ner.py```)**<|||||>@enzoampil Thanks for your answer! If you plan on fixing this typo, please, fix it everywhere, so this issue never occurs again.

You can find it with

```bash

grep -iR "input_ids = torch.tensor(tokenizer.encode(" .

```

<|||||>@hawkeoni thanks for the bash script reco. Ended up using it :)<|||||>Thank you both for that.

Please note that the special tokens will be added by default from now on (already on master and in the coming release). |
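For reference, BERT's single-sentence layout is `[CLS] tokens [SEP]`. A sentence pair is `[CLS] A [SEP] B [SEP]`, with token type id 0 for the first segment (including `[CLS]` and its `[SEP]`) and 1 for the second.

The sketch below reproduces that layout by hand using the ids printed earlier in this thread (`101` for `[CLS]`, `102` for `[SEP]`). `with_special_tokens` is a hypothetical helper; in practice `encode(..., add_special_tokens=True)` does this for you.

```python
from typing import List, Optional, Tuple

CLS_ID, SEP_ID = 101, 102  # values printed by tokenizer.cls_token_id / sep_token_id above

def with_special_tokens(a: List[int], b: Optional[List[int]] = None) -> Tuple[List[int], List[int]]:
    """Return (input_ids, token_type_ids) in BERT's [CLS] A [SEP] (B [SEP]) layout."""
    ids = [CLS_ID] + a + [SEP_ID]
    types = [0] * len(ids)
    if b is not None:
        ids += b + [SEP_ID]
        types += [1] * (len(b) + 1)
    return ids, types

ids, types = with_special_tokens([7592, 2045, 1010, 2236, 6358, 16429, 2072, 999])
print(ids)  # [101, 7592, 2045, 1010, 2236, 6358, 16429, 2072, 999, 102]
```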

transformers | 1,560 | closed | Finetuning OpenAI GPT-2 for another language. | ## ❓ Questions & Help

Hi,

Is there any option to finetune and use OpenAI GPT-2 for another language except English? | 10-18-2019 11:54:33 | 10-18-2019 11:54:33 | Hello, if you want to try and fine-tune GPT-2 to another language, you can just give the `run_lm_finetuning` script your text in the other language on which you want to fine-tune your model.

However, please be aware that according to the language and its distance to the English language (language on which GPT-2 was pre-trained), you may find it hard to obtain good results. <|||||>@0x01h

GPT-2 can produce great results given a proper vocabulary. If you just run `run_lm_finetuning` on your dataset in another language, it will give you poor results regardless of the language's distance from English, because of the vocabulary.

I'd suggest that you train your tokenizer model first and then fine-tune GPT-2 with it. I'm doing that here

https://github.com/mgrankin/ru_transformers

<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|
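To make the tokenizer suggestion concrete: a BPE vocabulary is learned by repeatedly merging the most frequent adjacent symbol pair in the training corpus. A vocabulary learned on English text will therefore split text in another language into many short, rare pieces.

Below is a toy character-level BPE learner for illustration only. GPT-2 actually uses a byte-level BPE, and real training would use a dedicated tokenizer library. `learn_bpe` is a hypothetical name, and ties between equally frequent pairs are broken by first occurrence.

```python
from collections import Counter
from typing import Dict, List, Tuple

def learn_bpe(words: Dict[str, int], num_merges: int) -> List[Tuple[str, str]]:
    """Learn merges from a {word: frequency} dict, starting from single characters."""
    vocab = {tuple(w): f for w, f in words.items()}
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break  # every word is already a single symbol
        best = max(pairs, key=pairs.get)  # most frequent adjacent pair
        merges.append(best)
        new_vocab: Dict[Tuple[str, ...], int] = {}
        for symbols, freq in vocab.items():
            out, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    out.append(symbols[i] + symbols[i + 1])  # apply the merge
                    i += 2
                else:
                    out.append(symbols[i])
                    i += 1
            new_vocab[tuple(out)] = new_vocab.get(tuple(out), 0) + freq
        vocab = new_vocab
    return merges

print(learn_bpe({"low": 5, "lower": 2, "lowest": 3}, 2))
```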

transformers | 1,559 | closed | Compatibility between DistilBert and Bert models | ## ❓ Questions & Help

I have a regular classification task for sentences in russian language.

I used to train `BertForSequenceClassification` with pretrained Bert from [DeepPavlov](http://docs.deeppavlov.ai/en/master/features/models/bert.html) [RuBERT](http://files.deeppavlov.ai/deeppavlov_data/bert/rubert_cased_L-12_H-768_A-12_v2.tar.gz) (using PyTorch). Then I switched to `DistilBertForSequenceClassification`, but still using pretrained RuBert (because there is no pretrained DistilBert with russian language). And it worked.

Then, after [this change](https://github.com/huggingface/transformers/pull/1203/commits/465870c33fe4ade66863ca0edfe13616f9d24da5#diff-9dc1f6db4a89dbf13c19d02a9f27093dL178), it is no longer possible to load `DistilBertConfig` from a `BertConfig` config.json. `DistilBertConfig` uses the property decorator for compatibility between `DistilBertConfig` and `BertConfig`, which is why calling setattr() raises `AttributeError: can't set attribute`.

So my question is the following: is this a bug or a feature? Is it OK to load DistilBert from a pretrained Bert or not? Or is the best option for me to distill RuBERT myself using your distillation script? | 10-18-2019 11:05:16 | 10-18-2019 11:05:16 | This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|
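The `AttributeError: can't set attribute` comes from a general Python rule: a `@property` defined without a setter is read-only. A loader that calls `setattr(config, key, value)` for every key of a BERT `config.json` therefore fails on keys such as `hidden_size` that the config exposes only as a property alias. The toy class below reproduces that.

`bert_to_distilbert_kwargs` sketches one workaround: translate BERT key names to DistilBERT's before building the config. The key mapping is an assumption based on `DistilBertConfig`'s parameter names (`dim`, `n_layers`, `n_heads`, `hidden_dim`, `dropout`, `attention_dropout`, `activation`), so check it against your installed version.

```python
from typing import Any, Dict

class ToyDistilConfig:
    """Mimics a config that exposes hidden_size as a read-only alias of dim."""
    def __init__(self, dim: int = 768):
        self.dim = dim

    @property
    def hidden_size(self) -> int:
        return self.dim  # no setter is defined, so assignment raises AttributeError

# Assumed mapping from BERT config keys to DistilBERT config keys.
BERT_TO_DISTIL = {
    "hidden_size": "dim",
    "num_hidden_layers": "n_layers",
    "num_attention_heads": "n_heads",
    "intermediate_size": "hidden_dim",
    "hidden_dropout_prob": "dropout",
    "attention_probs_dropout_prob": "attention_dropout",
    "hidden_act": "activation",
}

def bert_to_distilbert_kwargs(bert_cfg: Dict[str, Any]) -> Dict[str, Any]:
    """Rename BERT keys; keys without a mapping (e.g. vocab_size) pass through unchanged."""
    return {BERT_TO_DISTIL.get(k, k): v for k, v in bert_cfg.items()}

cfg = ToyDistilConfig()
try:
    setattr(cfg, "hidden_size", 1024)
except AttributeError as err:
    print("AttributeError:", err)
```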

transformers | 1,558 | closed | unable to parse E:/litao/bert/bert-base-cased\config.json as a URL or as a local path | ## ❓ Questions & Help

<!-- A clear and concise description of the question. -->

train_file = 'E:/litao/bert/SQuAD 1.1/train-v1.1.json'

predict_file = 'E:/litao/bert/SQuAD 1.1/dev-v1.1.json'

model_type = 'bert'

model_name_or_path = 'E:/litao/bert/bert-base-cased'

output_dir = 'E:/litao/bert/transformers-master/examples/output'

| 10-18-2019 10:06:42 | 10-18-2019 10:06:42 | This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

<|||||>Did you find a solution?<|||||>> Did you find a solution?

Rename "bert_config.json" to "config.json". |

transformers | 1,557 | closed | Tuning BERT on our own data set for multi-class classification problem | I want to fine-tune pre-trained BERT for multi-class classification with **6 million classes, 30 million rows and a highly imbalanced dataset.**

Can we fine-tune BERT on batches of classes?

For example, I would take 15 classes (so the last layer has only 15 neurons) and train my BERT model, then use that trained model to train on the next batch of 15 classes, and so on. I just want to understand the drawbacks of this approach. | 10-18-2019 06:53:23 | 10-18-2019 06:53:23 | This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|

transformers | 1,556 | closed | Does the function of 'evaluate()' change the result? | ## ❓ Questions & Help


When I run the RTE task with logging_steps=50, the results are:

global_step 50: 0.8953

global_step 100: 0.8953

global_step 150: 0.8916

global_step 200: 0.8736

But with logging_steps=100:

global_step 100: 0.8953

global_step 200: 0.8880

At global_step 200 the two runs differ. What causes the difference? I didn't modify any code.
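One possible explanation is offered here as an assumption, not something established in this thread. Suppose evaluation draws even one number from the same global random generator that training uses, for example for dropout or data shuffling. Then evaluating at different steps shifts every later random draw, so runs with the same seed but different `logging_steps` diverge after the first evaluation they don't share. The sketch below reproduces that effect with Python's `random` module. `train_run`, its one-draw-per-step model of training, and the single extra draw per evaluation are hypothetical simplifications, not what run_glue.py does.

```python
import random


def train_run(seed, eval_every, total_steps=200):
    """Return the random draws seen by training steps (hypothetical model).

    Each training step consumes one number (standing in for dropout or
    shuffling); each evaluation consumes one extra number from the same
    global generator.
    """
    random.seed(seed)
    draws = []
    for step in range(1, total_steps + 1):
        draws.append(random.random())  # training randomness
        if step % eval_every == 0:
            random.random()            # extra draw made by "evaluation"
    return draws


run_50 = train_run(seed=99, eval_every=50)
run_100 = train_run(seed=99, eval_every=100)

print(run_50[:50] == run_100[:50])  # True: identical up to step 50
print(run_50[50:] == run_100[50:])  # False: the extra draw at step 50 shifts the stream
```

If this is the cause, giving evaluation its own generator (for example a separate `random.Random` instance) would leave the training stream untouched.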

| 10-18-2019 05:10:50 | 10-18-2019 05:10:50 | Could you specify what script you're running, with which parameters? Did you set a random seed?<|||||>> Could you specify what script you're running, with which parameters? Did you set a random seed?

for SEEDS in 99
do
  CUDA_VISIBLE_DEVICES=2 python run_glue.py \
    --data_dir '/data/transformers/data/RTE/' \
    --model_type 'roberta' \
    --model_name_or_path '/data/transformers/examples/pretrained_model/roberta-mnli/' \
    --task_name 'rte' \
    --output_dir ./$SEEDS \
    --overwrite_output_dir \
    --max_seq_length 128 \
    --do_train \
    --do_eval \
    --evaluate_during_training \
    --per_gpu_train_batch_size 8 \
    --per_gpu_eval_batch_size 8 \
    --gradient_accumulation_steps 2 \
    --learning_rate 1e-5 \
    --num_train_epochs 10 \
    --logging_steps 50 \
    --save_steps -1 \
    --seed $SEEDS
done

<|||||>> Could you specify what script you're running, with which parameters? Did you set a random seed?

I changed 'roberta' to 'bert' and set the same seed, and the results are still different. Is there anything wrong with my shell script? |

transformers | 1,555 | closed | Sample a constant number of tokens for masking in LM finetuning | For masked LM fine-tuning, I think both the original BERT and RoBERTa implementations uniformly sample a fixed number of tokens in *each* sequence for masking: x% of the sequence_length tokens, where x = mlm_probability * 100.

However, the current logic in run_lm_finetuning.py samples each token independently (from a Bernoulli distribution). This leads to variance in the number of masked tokens per sequence, although the average is still close to x%.

The example below illustrates an extreme case of the current logic, in which no token in the input sequence is masked.

```
In [1]: import torch
   ...: from transformers import BertTokenizer
   ...:
   ...: mlm_probability = 0.15
   ...: tokenizer = BertTokenizer.from_pretrained('bert-large-uncased')
   ...: input_ids = tokenizer.encode('please mask me, o lord!', add_special_tokens=True)
   ...: inputs = torch.tensor([input_ids])  # integer token ids, shape (1, seq_len)
   ...: labels = inputs.clone()
   ...: # every non-special token is masked independently with probability mlm_probability
   ...: probability_matrix = torch.full(labels.shape, mlm_probability)
   ...: special_tokens_mask = [tokenizer.get_special_tokens_mask(val, already_has_special_tokens=True) for val in labels.tolist()]
   ...: probability_matrix.masked_fill_(torch.tensor(special_tokens_mask, dtype=torch.bool), value=0.0)
   ...: masked_indices = torch.bernoulli(probability_matrix).bool()

In [2]: masked_indices
Out[2]: tensor([[False, False, False, False, False, False, False, False, False]])
```
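To put the extreme case in numbers: with independent Bernoulli sampling at `mlm_probability = 0.15` over the 7 non-special tokens of this 9-token example, the number of masked tokens follows a Binomial(7, 0.15) distribution. Nothing is masked with probability 0.85^7 ≈ 0.32, so roughly one run in three. This plain-Python sketch (no PyTorch needed; `masked_count_distribution` is a hypothetical helper) computes that distribution:

```python
from math import comb


def masked_count_distribution(n_tokens, p):
    """P(k tokens masked) for k = 0..n_tokens under independent Bernoulli(p) masking."""
    return [comb(n_tokens, k) * p**k * (1 - p) ** (n_tokens - k) for k in range(n_tokens + 1)]


dist = masked_count_distribution(n_tokens=7, p=0.15)
print(f"P(no token masked)     = {dist[0]:.3f}")  # 0.321
print(f"P(exactly 1 masked)    = {dist[1]:.3f}")  # 0.396
print(f"expected masked tokens = {7 * 0.15:.2f}")  # 1.05
```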

This PR modifies the logic so that the percentage of masked tokens in each sequence is constant (at x%).
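Here is a minimal sketch of fixed-count masking in plain Python. It assumes the count is `round(mlm_probability * number_of_non_special_tokens)`, with a floor of one token, and it draws positions uniformly without replacement. `sample_mask_positions` is a hypothetical helper, not the code in this PR. A PyTorch version would apply the same idea to build the boolean `masked_indices` tensor.

```python
import random


def sample_mask_positions(special_tokens_mask, mlm_probability, rng=random):
    """Pick a fixed number of maskable positions uniformly without replacement.

    special_tokens_mask: list of 0/1 flags, 1 marking [CLS]/[SEP]-style tokens
    that must never be masked. Returns a sorted list of positions to mask.
    """
    candidates = [i for i, is_special in enumerate(special_tokens_mask) if not is_special]
    if not candidates:
        return []
    # Same count for every sequence of this length (at least one token).
    num_to_mask = max(1, round(mlm_probability * len(candidates)))
    return sorted(rng.sample(candidates, num_to_mask))


# [CLS] please mask me , o lord ! [SEP]  ->  special-token flags
special = [1, 0, 0, 0, 0, 0, 0, 0, 1]
for seed in range(3):
    print(sample_mask_positions(special, 0.15, rng=random.Random(seed)))  # always one position in 1..7
```

Unlike the Bernoulli version, this can never produce an all-`False` mask for a sequence that has at least one maskable token.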

Separately, both the existing and the new masking logic rely on PyTorch boolean tensors.

So this PR also updates the README to state the minimum PyTorch version needed (1.2.0). | 10-18-2019 01:54:02 | 10-18-2019 01:54:02 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1555?src=pr&el=h1) Report

> Merging [#1555](https://codecov.io/gh/huggingface/transformers/pull/1555?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/fd97761c5a977fd22df789d2851cf57c7c9c0930?src=pr&el=desc) will **increase** coverage by `1.42%`.

> The diff coverage is `n/a`.


```diff
@@            Coverage Diff             @@
##           master    #1555      +/-   ##
==========================================
+ Coverage   84.74%   86.16%   +1.42%
==========================================
  Files          91       91
  Lines       13593    13593
==========================================
+ Hits        11519    11713     +194
+ Misses       2074     1880     -194
```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/1555?src=pr&el=tree) | Coverage Δ | |
|---|---|---|
| [transformers/modeling\_openai.py](https://codecov.io/gh/huggingface/transformers/pull/1555/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX29wZW5haS5weQ==) | `81.75% <0%> (+1.35%)` | :arrow_up: |
| [transformers/modeling\_ctrl.py](https://codecov.io/gh/huggingface/transformers/pull/1555/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX2N0cmwucHk=) | `95.45% <0%> (+2.27%)` | :arrow_up: |
| [transformers/modeling\_xlnet.py](https://codecov.io/gh/huggingface/transformers/pull/1555/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3hsbmV0LnB5) | `73.28% <0%> (+2.46%)` | :arrow_up: |
| [transformers/modeling\_roberta.py](https://codecov.io/gh/huggingface/transformers/pull/1555/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3JvYmVydGEucHk=) | `80.57% <0%> (+15.1%)` | :arrow_up: |
| [transformers/tests/modeling\_tf\_common\_test.py](https://codecov.io/gh/huggingface/transformers/pull/1555/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL3Rlc3RzL21vZGVsaW5nX3RmX2NvbW1vbl90ZXN0LnB5) | `96.8% <0%> (+17.02%)` | :arrow_up: |
| [transformers/modeling\_tf\_pytorch\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/1555/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3RmX3B5dG9yY2hfdXRpbHMucHk=) | `92.95% <0%> (+83.09%)` | :arrow_up: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/1555?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1555?src=pr&el=footer). Last update [fd97761...090cbd6](https://codecov.io/gh/huggingface/transformers/pull/1555?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>Thanks @rakeshchada. Closing as superseded by #1814 |

transformers | 1,554 | closed | GPT2 not in modeltype | ## 🐛 Bug


Model I am using (Bert, XLNet....): GPT2

Language I am using the model on (English, Chinese....): ENGLISH

The problem arise when using:

* [x] the official example scripts: run_glue.py

* [ ] my own modified scripts: (give details)

The tasks I am working on is:

* [x] an official GLUE/SQuAD task: MRPC (GLUE)

* [ ] my own task or dataset: (give details)

## To Reproduce

Steps to reproduce the behavior:


python ./examples/run_glue.py --model_type gpt2 --model_name_or_path gpt2 --task_name MRPC --do_train --do_eval --do_lower_case --data_dir ./fake --max_seq_length 512 --per_gpu_eval_batch_size=8 --per_gpu_train_batch_size=8 --learning_rate 2e-5 --num_train_epochs 3.0 --output_dir /tmp/bot/

10/18/2019 00:22:28 - WARNING - __main__ - Process rank: -1, device: cuda, n_gpu: 1, distributed training: False, 16-bits training: False

Traceback (most recent call last):

File "./examples/run_glue.py", line 541, in <module>

main()

File "./examples/run_glue.py", line 476, in main

config_class, model_class, tokenizer_class = MODEL_CLASSES[args.model_type]

KeyError: 'gpt2'

## Environment

* OS: UBUNTU LINUX

* Python version: 3.7

* PyTorch version: LATEST

* PyTorch Transformers version (or branch): LATEST

* Using GPU? YES

* Distributed or parallel setup? NO

* Any other relevant information:


| 10-18-2019 00:24:40 | 10-18-2019 00:24:40 | Hey @tuhinjubcse, gpt2 is a text generation model. If you look in the run_glue.py file, you will see the model types you can choose from when using that script.

```
MODEL_CLASSES = {
    'bert': (BertConfig, BertForSequenceClassification, BertTokenizer),
    'xlnet': (XLNetConfig, XLNetForSequenceClassification, XLNetTokenizer),
    'xlm': (XLMConfig, XLMForSequenceClassification, XLMTokenizer),
    'roberta': (RobertaConfig, RobertaForSequenceClassification, RobertaTokenizer),
    'distilbert': (DistilBertConfig, DistilBertForSequenceClassification, DistilBertTokenizer)
}
```<|||||>gpt2 is a transformer model. Why would it be limited to generation only?<|||||>It's not that you can't use it for classification, etc. It's that you would need to make a few changes to the code and the model (see #1248). Right now those changes have not been made for gpt2. Generally speaking, people use gpt2 for text generation.<|||||>Autoregressive models are not as good as MLMs at classification tasks, so you should look at masked language models (MLMs) or similar models. But it's not impossible: OpenAI has shown some example use cases in the original [GPT paper](https://s3-us-west-2.amazonaws.com/openai-assets/research-covers/language-unsupervised/language_understanding_paper.pdf). Check Figure 1 and page 6 for more details.
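To illustrate the kind of change involved, here is a minimal sketch in plain Python, with made-up numbers, of the classification head the GPT paper describes. The final-layer hidden state of the last input token is fed to an added linear layer followed by a softmax. The paper appends a special extract token at the end of the input; here the last real token plays that role. The function names, shapes and weights are hypothetical, and this is not transformers code.

```python
import math


def last_token_states(hidden_states, attention_mask):
    """Return each sequence's hidden vector at its last non-padding token.

    hidden_states: [batch][seq_len][hidden] nested lists
    attention_mask: [batch][seq_len] of 1 (real token) / 0 (padding),
    assuming right padding.
    """
    pooled = []
    for states, mask in zip(hidden_states, attention_mask):
        last = sum(mask) - 1  # index of the last real token
        pooled.append(states[last])
    return pooled


def classify(vector, weights, bias):
    """Linear layer (weights: [num_labels][hidden]) followed by softmax."""
    logits = [sum(w * x for w, x in zip(row, vector)) + b for row, b in zip(weights, bias)]
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]  # subtract max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


# Toy batch: 2 sequences of length 3, hidden size 2; the second one is right-padded.
hidden = [[[0.1, 0.2], [0.3, 0.4], [1.0, -1.0]],
          [[0.5, 0.5], [2.0, 0.0], [9.9, 9.9]]]
mask = [[1, 1, 1], [1, 1, 0]]
W, b = [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]  # 2 labels

for vec in last_token_states(hidden, mask):
    print(classify(vec, W, b))
```

Picking the last *real* token matters for GPT-style models because, being left-to-right, only that position has attended to the whole input.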

Also, this is not a bug; it just isn't implemented. <|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|

transformers | 1,553 | closed | Add speed log to examples/run_squad.py | Add a speed estimate log (time per example) for evaluation to examples/run_squad.py | 10-17-2019 21:44:08 | 10-17-2019 21:44:08 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1553?src=pr&el=h1) Report

> Merging [#1553](https://codecov.io/gh/huggingface/transformers/pull/1553?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/fd97761c5a977fd22df789d2851cf57c7c9c0930?src=pr&el=desc) will **increase** coverage by `1.42%`.

> The diff coverage is `n/a`.


```diff
@@            Coverage Diff             @@
##           master    #1553      +/-   ##
==========================================
+ Coverage   84.74%   86.16%   +1.42%
==========================================
  Files          91       91
  Lines       13593    13593
==========================================
+ Hits        11519    11713     +194
+ Misses       2074     1880     -194
```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/1553?src=pr&el=tree) | Coverage Δ | |
|---|---|---|
| [transformers/modeling\_openai.py](https://codecov.io/gh/huggingface/transformers/pull/1553/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX29wZW5haS5weQ==) | `81.75% <0%> (+1.35%)` | :arrow_up: |