| repo | number | state | title | body | created_at | closed_at | comments |

|---|---|---|---|---|---|---|---|

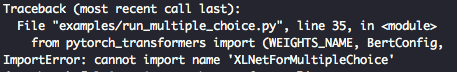

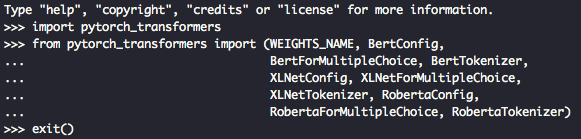

transformers | 1,389 | closed | Fix compatibility issue with PyTorch 1.2 | Using PyTorch 1.2.0 gives an error when running XLNet.

We should use the new way to invert the mask: instead of `1 - mask`, we should use `~mask`. | 10-01-2019 07:44:23 | 10-01-2019 07:44:23 | Hi,

We can't accept this since it breaks lower versions of PyTorch.

You can just feed your mask as a FloatTensor (as indicated in the docstrings I think). |

transformers | 1,388 | closed | Add Roberta SQuAD model | This is an implementation of RoBERTa fine-tuning on SQuAD.

With RoBERTa Base on 2x 1080 Ti, it gives:

python3 run_squad.py \

--model_type roberta \

--model_name_or_path roberta-base \

--do_train \

--do_eval \

--train_file $SQUAD_DIR/train-v1.1.json \

--predict_file $SQUAD_DIR/dev-v1.1.json \

--per_gpu_train_batch_size 8 \

--per_gpu_eval_batch_size 8 \

--learning_rate 3e-5 \

--num_train_epochs 2.0 \

--max_seq_length 384 \

--doc_stride 128 \

--save_steps 2000 \

--overwrite_output_dir \

--verbose_logging \

--output_dir /tmp/debug_squad/

Results: {'exact': 85.80889309366131, 'f1': 92.09291402361669, 'total': 10570, 'HasAns_exact': 85.80889309366131, 'HasAns_f1': 92.09291402361669, 'HasAns_total': 10570}

On RoBERTa Large:

python3 run_squad.py \

--model_type roberta \

--model_name_or_path roberta-large \

--do_train \

--do_eval \

--train_file $SQUAD_DIR/train-v1.1.json \

--predict_file $SQUAD_DIR/dev-v1.1.json \

--per_gpu_train_batch_size 2 \

--per_gpu_eval_batch_size 2 \

--learning_rate 3e-5 \

--num_train_epochs 2.0 \

--max_seq_length 384 \

--doc_stride 128 \

--save_steps 2000 \

--overwrite_output_dir \

--verbose_logging \

--output_dir /tmp/debug_squad/

Results: {'exact': 87.04824976348155, 'f1': 93.14253401654709, 'total': 10570, 'HasAns_exact': 87.04824976348155, 'HasAns_f1': 93.14253401654709, 'HasAns_total': 10570} | 10-01-2019 04:50:09 | 10-01-2019 04:50:09 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1388?src=pr&el=h1) Report

> Merging [#1388](https://codecov.io/gh/huggingface/transformers/pull/1388?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/5c3b32d44d0164aaa9b91405f48e53cf53a82b35?src=pr&el=desc) will **decrease** coverage by `0.16%`.

> The diff coverage is `23.52%`.

[](https://codecov.io/gh/huggingface/transformers/pull/1388?src=pr&el=tree)

```diff

@@ Coverage Diff @@

## master #1388 +/- ##

==========================================

- Coverage 84.69% 84.52% -0.17%

==========================================

Files 84 84

Lines 12596 12627 +31

==========================================

+ Hits 10668 10673 +5

- Misses 1928 1954 +26

```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/1388?src=pr&el=tree) | Coverage Δ | |

|---|---|---|

| [transformers/modeling\_roberta.py](https://codecov.io/gh/huggingface/transformers/pull/1388/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3JvYmVydGEucHk=) | `61.17% <23.52%> (-10.05%)` | :arrow_down: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/1388?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1388?src=pr&el=footer). Last update [5c3b32d...1ba42ca](https://codecov.io/gh/huggingface/transformers/pull/1388?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>I am also working on reproducing the results reported in the Roberta paper and found two issues in this PR. One issue is explained in the comment above. The other issue is that it is required to insert two sep_tokens between question tokens and answer tokens for Roberta as implemented [here](https://github.com/huggingface/transformers/blob/master/transformers/tokenization_roberta.py#L101). Therefore, `max_tokens_for_doc` should be `max_seq_length - len(query_tokens) - 4`.<|||||>In the Fairseq realisation of RoBERTa on Commonsense QA:

https://github.com/pytorch/fairseq/tree/master/examples/roberta/commonsense_qa

https://github.com/pytorch/fairseq/blob/master/examples/roberta/commonsense_qa/commonsense_qa_task.py

There is the only one sep_token between question and answer:

`<s> Q: Where would I not want a fox? </s> A: hen house </s>`<|||||>> In the Fairseq realisation of RoBERTa on Commonsense QA:

> There is the only one sep_token between question and answer:

> `<s> Q: Where would I not want a fox? </s> A: hen house </s>`

Thank you very much for your prompt reply. I did not know this. It seems to be appropriate to use single `sep_token` here because Commonsense QA is somewhat more similar to SQuAD than other tasks (e.g., GLUE).<|||||>Thanks for this @vlarine! (and @ikuyamada)

Would you agree to share the weights on our S3 as well?

Also, did you try with the same separators encoding scheme as the other RoBERTa models?

`<s> Q: Where would I not want a fox? </s> </s> A: hen house </s>` – did the results differ significantly?<|||||>No, I have not tried. But why are there two `</s>` tokens? I think a more natural way is:

`<s> Q: Where would I not want a fox? </s> <s> A: hen house </s>`<|||||>@vlarine See this docstring in `fairseq`: https://github.com/pytorch/fairseq/pull/969/files

Do you think you could try with this sep encoding scheme? Otherwise I'll do it in the next couple of days.

I would like to merge your PR soon. Any way you can give me write access to your fork, cf. https://help.github.com/en/articles/committing-changes-to-a-pull-request-branch-created-from-a-fork – so that i can add commits on top of your PR?

<|||||>@vlarine @julien-c thanks for the amazing work! I can try it on SQuAD 2.0 and let you know if anything pops up there<|||||>Nice work. I also tried to add roberta into run_squad.py several days ago. Hope that my implementation would be useful. [run_squad.py with roberta](https://github.com/erenup/pytorch-transformers/pull/4) <|||||>Folding this PR into #1386, which is close to being ready to be merged.

@vlarine @ikuyamada @pminervini @erenup Can you guys please check it out?<|||||>Closing in favor of #1386. |
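The separator question in this thread comes down to arithmetic on the sequence budget. A minimal sketch, assuming the two layouts quoted in the comments (`<s> Q </s> </s> A </s>` with four special tokens, `<s> Q </s> A </s>` with three). The name `max_tokens_for_doc` mirrors the variable mentioned above, but the function signature and the `build_pair` helper are hypothetical and not taken from `run_squad.py`:

```python
def max_tokens_for_doc(max_seq_length, num_query_tokens, num_special_tokens):
    """Tokens left for the document span after the query and special tokens."""
    budget = max_seq_length - num_query_tokens - num_special_tokens
    if budget <= 0:
        raise ValueError("query and special tokens already fill the sequence")
    return budget


def build_pair(query, doc, double_sep):
    """Lay out a question/document pair with one or two separators in between."""
    middle = ["</s>", "</s>"] if double_sep else ["</s>"]
    return ["<s>"] + query + middle + doc + ["</s>"]


query = ["Where", "would", "I", "not", "want", "a", "fox", "?"]
doc = ["hen", "house"]

roberta_style = build_pair(query, doc, double_sep=True)   # 4 special tokens
single_sep = build_pair(query, doc, double_sep=False)     # 3 special tokens

print(len(roberta_style) - len(query) - len(doc))  # 4
print(max_tokens_for_doc(384, len(query), 4))      # 372
print(max_tokens_for_doc(384, len(query), 3))      # 373
```

With `max_seq_length=384`, the choice of layout shifts the document budget by a single token, so the more important point is that the subtraction matches whatever layout the tokenizer actually produces.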

transformers | 1,387 | closed | TFTransfoXLLMHeadModel doesn't accept lm_labels parameter | ## 🐛 Bug

<!-- Important information -->

Model I am using (Bert, XLNet....): TFTransfoXLLMHeadModel

Language I am using the model on (English, Chinese....): Other

The problem arises when using:

* [ ] the official example scripts: (give details)

* [ X ] my own modified scripts: I have a script that trains a new TransformerXL

The task I am working on is:

* [ ] an official GLUE/SQUaD task: (give the name)

* [ X ] my own task or dataset: The dataset is a language modeling dataset of novel symbolic data.

## To Reproduce

Steps to reproduce the behavior:

Call the TFTransfoXLLMHeadModel as such:

mems = transformer(data,

lm_labels = lm_labels,

mems = mems,

training=True)

File "/home/tom/.local/lib/python3.7/site-packages/tensorflow_core/python/keras/engine/base_layer.py", line 891, in __call__

outputs = self.call(cast_inputs, *args, **kwargs)

TypeError: call() got an unexpected keyword argument 'lm_labels'

If I instead include lm_labels in a dict, it is simply ignored.

## Expected behavior

The model documentation says that including lm_labels is recommended for training because it allows the adaptive softmax to be calculated more efficiently

## Environment

* OS: Ubuntu 19

* PyTorch Transformers version (or branch): 2.0.0

* Using GPU Yes

| 09-30-2019 23:19:16 | 09-30-2019 23:19:16 | I see now that I missed something. The documentation uses the parameter 'lm_labels' but the correct parameter is just 'labels'. The documentation says that when this parameter is present, prediction logits will not be output, but this is incorrect. They are output regardless of the presence of 'labels'.<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|

transformers | 1,386 | closed | Add RoBERTa question answering & Update SQuAD runner to support RoBERTa | 09-30-2019 22:26:33 | 09-30-2019 22:26:33 | @thomwolf / @LysandreJik / @VictorSanh / @julien-c Could you help review this PR? Thanks!<|||||># [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1386?src=pr&el=h1) Report

> Merging [#1386](https://codecov.io/gh/huggingface/transformers/pull/1386?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/be916cb3fb4579e278ceeaec11a6524662797d7f?src=pr&el=desc) will **decrease** coverage by `0.15%`.

> The diff coverage is `21.21%`.

[](https://codecov.io/gh/huggingface/transformers/pull/1386?src=pr&el=tree)

```diff

@@ Coverage Diff @@

## master #1386 +/- ##

==========================================

- Coverage 86.16% 86.01% -0.16%

==========================================

Files 91 91

Lines 13593 13626 +33

==========================================

+ Hits 11713 11720 +7

- Misses 1880 1906 +26

```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/1386?src=pr&el=tree) | Coverage Δ | |

|---|---|---|

| [transformers/modeling\_roberta.py](https://codecov.io/gh/huggingface/transformers/pull/1386/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3JvYmVydGEucHk=) | `69.18% <21.21%> (-11.39%)` | :arrow_down: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/1386?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1386?src=pr&el=footer). Last update [be916cb...ee83f98](https://codecov.io/gh/huggingface/transformers/pull/1386?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>Hi @thomwolf / @LysandreJik / @VictorSanh / @julien-c

I have also run experiments using the RoBERTa large settings from the original paper and reproduced their results:

- **SQuAD v1.1**

{

"exact": 88.25922421948913,

"f1": 94.43790487416292,

"total": 10570,

"HasAns_exact": 88.25922421948913,

"HasAns_f1": 94.43790487416292,

"HasAns_total": 10570

}

- **SQuAD v2.0**

{

"exact": 86.05238777057188,

"f1": 88.99602665148535,

"total": 11873,

"HasAns_exact": 83.38394062078272,

"HasAns_f1": 89.27965999208608,

"HasAns_total": 5928,

"NoAns_exact": 88.71320437342304,

"NoAns_f1": 88.71320437342304,

"NoAns_total": 5945,

"best_exact": 86.5914259243662,

"best_exact_thresh": -2.146007537841797,

"best_f1": 89.43104312625539,

"best_f1_thresh": -2.146007537841797

}<|||||>Awesome @stevezheng23. Can I push on top of your PR to change a few things before we merge?

(We refactored the tokenizer to handle the encoding of sequence pairs, including special tokens. So we don't need to do it inside each example script anymore)<|||||>@julien-c sure, please add changes in this PR if needed 👍 <|||||>@julien-c I've also uploaded the roberta large model finetuned on squad v2.0 data together with its prediction & evaluation results to public cloud storage https://storage.googleapis.com/mrc_data/squad/roberta.large.squad.v2.zip<|||||>Can you check my latest commit @stevezheng23? Main change is that I removed the `add_prefix_space` for RoBERTa (which the RoBERTa authors don't use, as far as I know) which doesn't seem to make a significant difference.

@thomwolf @LysandreJik this is ready for review.<|||||>Everything looks good.

As for the `add_prefix_space` flag,

- For `add_prefix_space=True`, I have run the experiment, the F1 score is around 89.4

- For `add_prefix_space=False`, I have also run the experiment, the F1 score is around 88.2<|||||>Great! Good job on reimplementing the cross-entropy loss when start/end positions are given.<|||||>Looks good to me.

We'll probably be able to simplify `utils_squad` a lot soon but that will be fine for now.

Do you want to add your experimental results with RoBERTa in `examples/readme`, with a recommendation to use `add_prefix_space=True` (fyi it's the opposite for NER)?<|||||>@julien-c do you want to add the roberta model finetuned on squad by @stevezheng23 in our library?<|||||>Yep @thomwolf <|||||>@thomwolf I have updated README file as you suggested, you can merge this PR when you think it's good to go. BTW, it seems CI build is broken<|||||>Ok thanks, I'll let @julien-c finish to handle this PR when he's back.<|||||>> @julien-c I've also upload the roberta large model finetuned on squad v2.0 data together with its prediction & evaluation results to public cloud storage https://storage.googleapis.com/mrc_data/squad/roberta.large.squad.v2.zip

Hey @stevezheng23 !

I just tried to reproduce your model with slightly different hyperparameters (`batch_size=2` and `gradient_accumulation=6` instead of `batch_size=12`), and I am currently getting worse results.

Results with your model:

```

{

"exact": 86.05238777057188,

"f1": 88.99602665148535,

"total": 11873,

"HasAns_exact": 83.38394062078272,

"HasAns_f1": 89.27965999208608,

"HasAns_total": 5928,

"NoAns_exact": 88.71320437342304,

"NoAns_f1": 88.71320437342304,

"NoAns_total": 5945

}

```

Results with the model I trained, on the best checkpoint I was able to obtain after training for 8 epochs:

```

{

"exact": 82.85184873241809,

"f1": 85.85477834702593,

"total": 11873,

"HasAns_exact": 77.80026990553306,

"HasAns_f1": 83.8147407750069,

"HasAns_total": 5928,

"NoAns_exact": 87.88898233809924,

"NoAns_f1": 87.88898233809924,

"NoAns_total": 5945

}

```

Your hyperparameters:

```

Namespace(adam_epsilon=1e-08, cache_dir='', config_name='', device=device(type='cuda', index=0), do_eval=True, do_lower_case=False, do_train=True, doc_stride=128, eval_all_checkpoints=False, evaluate_during_training=False, fp16=False, fp16_opt_level='O1', gradient_accumulation_steps=1, learning_rate=1.5e-05, local_rank=0, logging_steps=50, max_answer_length=30, max_grad_norm=1.0, max_query_length=64, max_seq_length=512, max_steps=-1, model_name_or_path='roberta-large', model_type='roberta', n_best_size=20, n_gpu=1, no_cuda=False, null_score_diff_threshold=0.0, num_train_epochs=2.0, output_dir='output/squad/v2.0/roberta.large', overwrite_cache=False, overwrite_output_dir=False, per_gpu_eval_batch_size=12, per_gpu_train_batch_size=12, predict_file='data/squad/v2.0/dev-v2.0.json', save_steps=500, seed=42, server_ip='', server_port='', tokenizer_name='', train_batch_size=12, train_file='data/squad/v2.0/train-v2.0.json', verbose_logging=False, version_2_with_negative=True, warmup_steps=500, weight_decay=0.01)

```

My hyperparameters:

```

Namespace(adam_epsilon=1e-08, cache_dir='', config_name='', device=device(type='cuda'), do_eval=True, do_lower_case=False, do_train=True, doc_stride=128, eval_all_checkpoints=False, evaluate_during_training=False, fp16=False, fp16_opt_level='O1', gradient_accumulation_steps=6, learning_rate=1.5e-05, local_rank=-1, logging_steps=50, max_answer_length=30, max_grad_norm=1.0, max_query_length=64, max_seq_length=512, max_steps=-1, model_name_or_path='roberta-large', model_type='roberta', n_best_size=20, n_gpu=1, no_cuda=False, null_score_diff_threshold=0.0, num_train_epochs=8.0, output_dir='../roberta.large.squad2.v1p', overwrite_cache=False, overwrite_output_dir=False, per_gpu_eval_batch_size=2, per_gpu_train_batch_size=2, predict_file='/home/testing/drive/invariance//workspace/data/squad/dev-v2.0.json', save_steps=500, seed=42, server_ip='', server_port='', tokenizer_name='', train_batch_size=2, train_file='/home/testing/drive/invariance//workspace/data/squad/train-v2.0.json', verbose_logging=False, version_2_with_negative=True, warmup_steps=500, weight_decay=0.01)

```

Do you have any ideas why this is happening ?

One thing that may be happening is that, when using `max_grad_norm` and `gradient_accumulation=n`, the clipping of the gradient norm seems to be done `n` times rather than just 1, but I need to look deeper into this.

I'd like to see what happens without the need of gradient accumulation - anyone with a spare TPU to share? 😬<|||||>> Ok thanks, I'll let @julien-c finish to handle this PR when he's back.

thanks, @thomwolf <|||||>@pminervini I haven't tried out using `max_grad_norm` and `gradient_accumulation=n` combination before. One thing you could pay attention to is that the checkpoint is trained with `add_prefix_space=True` for RoBERTa tokenizer.<|||||>@stevezheng23 if you look at it, the `max_grad_norm` is performed on all the gradients in the accumulation - I think it should be done just before the `optimizer.step()` call.

https://github.com/huggingface/transformers/blob/master/examples/run_squad.py#L163

@thomwolf what do you think ? should I go and do a PR ?<|||||>@LysandreJik just significantly rewrote our SQuAD integration in https://github.com/huggingface/transformers/pull/1984 so we were holding out on merging this.

Does anyone here want to revisit this PR with the changes from #1984? Otherwise, we'll do it, time permitting.<|||||>cool, I'm willing to revisit it. I will take a look at your changes and transformers' recent updates today (have been away from the Master branch for some time😊).<|||||>> > @julien-c I've also upload the roberta large model finetuned on squad v2.0 data together with its prediction & evaluation results to public cloud storage https://storage.googleapis.com/mrc_data/squad/roberta.large.squad.v2.zip

>

> Hey @stevezheng23 !

>

> I just tried to reproduce your model with slightly different hyperparameters (`batch_size=2` and `gradient_accumulation=6` instead of `batch_size=12`), and I am currently getting worse results.

> Your hyperparameters:

>

> ```

> Namespace(adam_epsilon=1e-08, cache_dir='', config_name='', device=device(type='cuda', index=0), do_eval=True, do_lower_case=False, do_train=True, doc_stride=128, eval_all_checkpoints=False, evaluate_during_training=False, fp16=False, fp16_opt_level='O1', gradient_accumulation_steps=1, learning_rate=1.5e-05, local_rank=0, logging_steps=50, max_answer_length=30, max_grad_norm=1.0, max_query_length=64, max_seq_length=512, max_steps=-1, model_name_or_path='roberta-large', model_type='roberta', n_best_size=20, n_gpu=1, no_cuda=False, null_score_diff_threshold=0.0, num_train_epochs=2.0, output_dir='output/squad/v2.0/roberta.large', overwrite_cache=False, overwrite_output_dir=False, per_gpu_eval_batch_size=12, per_gpu_train_batch_size=12, predict_file='data/squad/v2.0/dev-v2.0.json', save_steps=500, seed=42, server_ip='', server_port='', tokenizer_name='', train_batch_size=12, train_file='data/squad/v2.0/train-v2.0.json', verbose_logging=False, version_2_with_negative=True, warmup_steps=500, weight_decay=0.01)

> ```

>

> My hyperparameters:

>

> ```

> Namespace(adam_epsilon=1e-08, cache_dir='', config_name='', device=device(type='cuda'), do_eval=True, do_lower_case=False, do_train=True, doc_stride=128, eval_all_checkpoints=False, evaluate_during_training=False, fp16=False, fp16_opt_level='O1', gradient_accumulation_steps=6, learning_rate=1.5e-05, local_rank=-1, logging_steps=50, max_answer_length=30, max_grad_norm=1.0, max_query_length=64, max_seq_length=512, max_steps=-1, model_name_or_path='roberta-large', model_type='roberta', n_best_size=20, n_gpu=1, no_cuda=False, null_score_diff_threshold=0.0, num_train_epochs=8.0, output_dir='../roberta.large.squad2.v1p', overwrite_cache=False, overwrite_output_dir=False, per_gpu_eval_batch_size=2, per_gpu_train_batch_size=2, predict_file='/home/testing/drive/invariance//workspace/data/squad/dev-v2.0.json', save_steps=500, seed=42, server_ip='', server_port='', tokenizer_name='', train_batch_size=2, train_file='/home/testing/drive/invariance//workspace/data/squad/train-v2.0.json', verbose_logging=False, version_2_with_negative=True, warmup_steps=500, weight_decay=0.01)

> ```

>

> Do you have any ideas why this is happening ?

You're using num_train_epochs=8 instead of 2, which makes the learning rate decay more slowly. Maybe that is causing the difference?<|||||>Regarding `max_grad_norm` - RoBERTa doesn't use gradient clipping, so the `max_grad_norm` changes aren't strictly necessary here

RoBERTa also uses `adam_epsilon=1e-06` as I understand, but I'm not sure if it would change the results here<|||||>Hi @stevezheng23 @julien-c @thomwolf @ethanjperez , I updated the run squad with roberta in #2173

based on #1984 and #1386. Could you please help to review it? Thank you very much.<|||||>Closed in favor of #2173 which should be merged soon.

|

|
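The gradient-clipping concern raised in this thread (clipping on every accumulation step versus once before `optimizer.step()`) can be illustrated without any framework. A minimal sketch in plain Python, assuming gradients accumulate as a running sum and that clipping rescales that sum to a maximum L2 norm. The helper names are hypothetical and this is not the code in `run_squad.py`:

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Scale a list of gradient values so their L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]


def accumulate(micro_batch_grads, max_norm, clip_every_micro_step):
    """Return the gradient the optimizer step would see after accumulation."""
    total = [0.0] * len(micro_batch_grads[0])
    for grads in micro_batch_grads:
        total = [t + g for t, g in zip(total, grads)]
        if clip_every_micro_step:
            # Clip the running sum after every backward pass.
            total = clip_by_global_norm(total, max_norm)
    if not clip_every_micro_step:
        # Clip once, right before the optimizer step.
        total = clip_by_global_norm(total, max_norm)
    return total


micro_batches = [[3.0, 0.0], [0.0, 0.4]]
per_step = accumulate(micro_batches, max_norm=1.0, clip_every_micro_step=True)
once = accumulate(micro_batches, max_norm=1.0, clip_every_micro_step=False)
print(per_step)
print(once)
```

Both results have norm 1.0, but they point in different directions: clipping after every micro-batch shrinks the earlier, larger gradient before the later one is added, which changes the relative weight of the micro-batches. Whether this explains the gap in scores reported above is not established in the thread.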

transformers | 1,385 | closed | [multiple-choice] Simplify and use tokenizer.encode_plus | Our base tokenizer `PreTrainedTokenizer` now has the ability to encode a sentence pair up to a `max_length`, adding special tokens for each model and returning a mask of `token_type_ids`.

In this PR we upgrade `run_multiple_choice` by adopting this factorized tokenizer API.

To ensure the results are strictly the same as before, we implement a new `TruncatingStrategy` (ideally this could be an enum).

@erenup as you spent a lot of time on this script, would you be able to review this PR?

Result of eval with parameters from [examples/readme](https://github.com/huggingface/transformers/blob/julien_multiple-choice/examples/README.md#multiple-choice):

```

eval_acc = 0.8352494251724483

eval_loss = 0.42866929549320487

``` | 09-30-2019 20:10:23 | 09-30-2019 20:10:23 | Great addition. I feel like using enums would be especially helpful for the truncating strategy, indeed.<|||||># [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1385?src=pr&el=h1) Report

> Merging [#1385](https://codecov.io/gh/huggingface/transformers/pull/1385?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/5c3b32d44d0164aaa9b91405f48e53cf53a82b35?src=pr&el=desc) will **decrease** coverage by `0.07%`.

> The diff coverage is `41.66%`.

[](https://codecov.io/gh/huggingface/transformers/pull/1385?src=pr&el=tree)

```diff

@@ Coverage Diff @@

## master #1385 +/- ##

==========================================

- Coverage 84.69% 84.61% -0.08%

==========================================

Files 84 84

Lines 12596 12610 +14

==========================================

+ Hits 10668 10670 +2

- Misses 1928 1940 +12

```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/1385?src=pr&el=tree) | Coverage Δ | |

|---|---|---|

| [transformers/tokenization\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/1385/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL3Rva2VuaXphdGlvbl91dGlscy5weQ==) | `87.73% <41.66%> (-2.46%)` | :arrow_down: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/1385?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1385?src=pr&el=footer). Last update [5c3b32d...9e136ff](https://codecov.io/gh/huggingface/transformers/pull/1385?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>I have reviewed this PR and it looks good to me. Thank you, @julien-c! I added two lines of comments above. Hope they are useful. Thank you. <|||||>Merged in, and superseded by, #1384 |
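Since the PR description and the first reply both suggest an enum for the truncating strategy, here is a minimal sketch of what that could look like. The class name `TruncationStrategy`, its members, and `truncate_pair` are illustrative assumptions, not the API added in this PR, and `max_length` here counts only sequence tokens (no special tokens):

```python
from enum import Enum


class TruncationStrategy(Enum):
    LONGEST_FIRST = "longest_first"
    ONLY_FIRST = "only_first"
    ONLY_SECOND = "only_second"


def truncate_pair(first, second, max_length, strategy):
    """Drop tokens from the end until len(first) + len(second) <= max_length."""
    first, second = list(first), list(second)
    while len(first) + len(second) > max_length:
        if strategy is TruncationStrategy.ONLY_FIRST:
            target = first
        elif strategy is TruncationStrategy.ONLY_SECOND:
            target = second
        else:
            # Remove from whichever sequence is currently longer.
            target = first if len(first) > len(second) else second
        if not target:
            raise ValueError("cannot truncate further with this strategy")
        target.pop()
    return first, second


a, b = list(range(6)), list(range(3))
print(truncate_pair(a, b, 5, TruncationStrategy.LONGEST_FIRST))  # ([0, 1, 2], [0, 1])
print(truncate_pair(a, b, 5, TruncationStrategy.ONLY_FIRST))     # ([0, 1], [0, 1, 2])
```

An enum makes an invalid strategy fail at the call site instead of silently falling through a string comparison.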

transformers | 1,384 | closed | Quality of life enhancements in encoding + patch MLM masking | This PR aims to add quality of life features to the encoding mechanism and patches an issue with the masked language modeling masking function.

1 - ~It introduces an `always_truncate` argument to the `encode` method.~ The `always_truncate` argument is now used as default, with no option to set it to `False` when a `max_length` is specified. Currently, if a `max_length` is specified to the `encode` method with a sequence pair, with both sequences being longer than the max length, then the sequence pair won't be truncated. This may then result in a sequence longer than the specified max length, which may crash the preprocessing mechanism (see current `run_glue.py` with the QNLI task). This argument may be further improved by truncating according to the pair of sequences length ratio.

2 - It adds a new return to the `encode_plus` return dictionary: `sequence_ids`. This is a list of numbers corresponding to the position of special/sequence ids. As an example:

```py

sequence = "This is a sequence"

input_ids_no_special = tok.encode(sequence) # [1188, 1110, 170, 4954]

input_ids = tok.encode(sequence, add_special_tokens=True) # [101, 1188, 1110, 170, 4954, 102]

# Special tokens ─────────────────────────────────────────────┴───────────────────────────┘

```

The new method offers several choices: single sequence (with or without special tokens), sequence pairs, and already existing special tokens:

```py

tok.get_sequence_ids(input_ids_no_special) # [0, 1, 1, 1, 1, 0]

tok.get_sequence_ids(input_ids, special_tokens_present=True) # [0, 1, 1, 1, 1, 0]

```

This offers several quality of life changes:

1 - The users are now aware of the location of the encoded sequences in their input ids: they can have custom truncating methods while leveraging model agnostic encoding

2 - Being aware of the location of special tokens is essential in the case of masked language modeling: we do not want to mask special tokens. An example of this is shown in the modified `run_lm_finetuning.py` script.

Considering sequence ids, the naming may not be optimal, therefore I'm especially open to propositions @thomwolf. Furthermore, I'm not sure it is necessary to consider the cases where no special tokens are currently in the sequence. | 09-30-2019 18:43:32 | 09-30-2019 18:43:32 | I think we should drop the `always_truncate` param, and just set it to `True` iff `max_length is not None`<|||||>Other than that I like it.<|||||>As seen with @julien-c , `always_truncate` really should be enabled by default when a `max_length` is specified. |
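To make the masked-language-modeling motivation concrete, here is a minimal sketch in plain Python. It assumes the convention shown in the PR description (0 for a special token, 1 for a sequence token) and that 101 and 102 are the special-token ids in the example above. The functions `sequence_ids` and `choose_mlm_positions` are hypothetical stand-ins, not the methods added by this PR:

```python
import random


def sequence_ids(input_ids, special_ids):
    """0 marks a special token, 1 marks a sequence token."""
    return [0 if i in special_ids else 1 for i in input_ids]


def choose_mlm_positions(input_ids, special_ids, mlm_probability, rng):
    """Sample positions to mask, never selecting a special token."""
    ids = sequence_ids(input_ids, special_ids)
    return [
        pos
        for pos, is_sequence in enumerate(ids)
        if is_sequence and rng.random() < mlm_probability
    ]


input_ids = [101, 1188, 1110, 170, 4954, 102]  # ids quoted in the PR description
special_ids = {101, 102}                       # assumed special-token ids

print(sequence_ids(input_ids, special_ids))  # [0, 1, 1, 1, 1, 0]
print(choose_mlm_positions(input_ids, special_ids, 1.0, random.Random(0)))  # [1, 2, 3, 4]
```

Even with a masking probability of 1.0, positions 0 and 5 are never chosen, which is the behavior the PR wants for `run_lm_finetuning.py`.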

transformers | 1,383 | closed | Adding CTRL | EDIT 10/04

Almost complete (tests pass / generation makes sense).

Please comment with issues if you find them.

**Incomplete - Adding to facilitate collaboration**

This PR would add functionality to perform inference on CTRL (https://github.com/salesforce/ctrl) in the `🤗/transformers` repo.

Commits will be squashed later before merging. | 09-30-2019 18:25:55 | 09-30-2019 18:25:55 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1383?src=pr&el=h1) Report

> Merging [#1383](https://codecov.io/gh/huggingface/transformers/pull/1383?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/1c5079952f5f10eeac4cb6801b4fd1f36b0eff73?src=pr&el=desc) will **increase** coverage by `1.63%`.

> The diff coverage is `92.38%`.

[](https://codecov.io/gh/huggingface/transformers/pull/1383?src=pr&el=tree)

```diff

@@ Coverage Diff @@

## master #1383 +/- ##

==========================================

+ Coverage 83.79% 85.42% +1.63%

==========================================

Files 84 91 +7

Lines 12587 13464 +877

==========================================

+ Hits 10547 11502 +955

+ Misses 2040 1962 -78

```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/1383?src=pr&el=tree) | Coverage Δ | |

|---|---|---|

| [transformers/modeling\_roberta.py](https://codecov.io/gh/huggingface/transformers/pull/1383/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3JvYmVydGEucHk=) | `80.57% <ø> (+15.1%)` | :arrow_up: |

| [transformers/tests/modeling\_tf\_gpt2\_test.py](https://codecov.io/gh/huggingface/transformers/pull/1383/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL3Rlc3RzL21vZGVsaW5nX3RmX2dwdDJfdGVzdC5weQ==) | `94.73% <0%> (ø)` | :arrow_up: |

| [transformers/tests/modeling\_tf\_common\_test.py](https://codecov.io/gh/huggingface/transformers/pull/1383/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL3Rlc3RzL21vZGVsaW5nX3RmX2NvbW1vbl90ZXN0LnB5) | `95.38% <100%> (+7.88%)` | :arrow_up: |

| [transformers/modeling\_openai.py](https://codecov.io/gh/huggingface/transformers/pull/1383/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX29wZW5haS5weQ==) | `81.75% <100%> (+1.35%)` | :arrow_up: |

| [transformers/file\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/1383/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL2ZpbGVfdXRpbHMucHk=) | `74.17% <100%> (ø)` | :arrow_up: |

| [transformers/modeling\_auto.py](https://codecov.io/gh/huggingface/transformers/pull/1383/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX2F1dG8ucHk=) | `51.85% <20%> (-2.1%)` | :arrow_down: |

| [transformers/configuration\_auto.py](https://codecov.io/gh/huggingface/transformers/pull/1383/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL2NvbmZpZ3VyYXRpb25fYXV0by5weQ==) | `58.82% <33.33%> (-2.47%)` | :arrow_down: |

| [transformers/tokenization\_auto.py](https://codecov.io/gh/huggingface/transformers/pull/1383/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL3Rva2VuaXphdGlvbl9hdXRvLnB5) | `67.64% <33.33%> (-3.33%)` | :arrow_down: |

| [transformers/tokenization\_ctrl.py](https://codecov.io/gh/huggingface/transformers/pull/1383/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL3Rva2VuaXphdGlvbl9jdHJsLnB5) | `83.6% <83.6%> (ø)` | |

| [transformers/configuration\_ctrl.py](https://codecov.io/gh/huggingface/transformers/pull/1383/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL2NvbmZpZ3VyYXRpb25fY3RybC5weQ==) | `88.88% <88.88%> (ø)` | |

| ... and [18 more](https://codecov.io/gh/huggingface/transformers/pull/1383/diff?src=pr&el=tree-more) | |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/1383?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1383?src=pr&el=footer). Last update [1c50799...d9e60f4](https://codecov.io/gh/huggingface/transformers/pull/1383?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>Ok for merge<|||||>Thanks for adding this! I'm currently doing some experiments with the CTRL model, and I've a question about the tokenization:

```py

tokenizer.tokenize("Munich and Berlin are nice cities.")

Out[6]: ['m@@', 'unic@@', 'h', 'and', 'ber@@', 'lin', 'are', 'nice', 'cities', '.']

```

Do you have any idea, why the output returns lowercased tokens only - `Berlin` and `Munich` do both appear in the vocab file (cased, and the splitting of `Munich` looks really weird 😅).<|||||>Yes, we are aware of the issue.

We are fixing this problem in #1480. |
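For anyone reading the tokenizer output quoted in this thread: a minimal sketch of how the pieces fit back together, assuming `@@` marks a piece that continues into the next token, as the output suggests. The helper `merge_bpe_tokens` is hypothetical and not part of the CTRL tokenizer:

```python
def merge_bpe_tokens(tokens, continuation="@@"):
    """Join subword pieces, gluing each piece that ends with the marker to the next one."""
    words, current = [], ""
    for token in tokens:
        if token.endswith(continuation):
            current += token[: -len(continuation)]
        else:
            words.append(current + token)
            current = ""
    if current:  # trailing piece that was never closed
        words.append(current)
    return words


tokens = ['m@@', 'unic@@', 'h', 'and', 'ber@@', 'lin', 'are', 'nice', 'cities', '.']
print(merge_bpe_tokens(tokens))
# ['munich', 'and', 'berlin', 'are', 'nice', 'cities', '.']
```

The merged words come back lowercased, so the casing was already lost before the subword split. That is consistent with cased vocabulary entries such as `Berlin` never being matched.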

transformers | 1,382 | closed | Issue with `decode` in the presence of special tokens | ## 🐛 Bug

<!-- Important information -->

Model I am using (Bert, XLNet....): GPT-2

Language I am using the model on (English, Chinese....): English

The problem arises when using:

* [ ] the official example scripts: (give details)

* [x] my own modified scripts: (give details)

The task I am working on is:

* [ ] an official GLUE/SQUaD task: (give the name)

* [x] my own task or dataset: (give details)

## To Reproduce

Steps to reproduce the behavior:

Run the following:

```py

from transformers.tokenization_gpt2 import GPT2Tokenizer

tokenizer = GPT2Tokenizer.from_pretrained('gpt2')

tokenizer.add_special_tokens({"sep_token": "[SEP]"})

# this works, outputting "[SEP]"

tokenizer.convert_tokens_to_string(tokenizer.convert_ids_to_tokens(tokenizer.encode("[SEP]")))

# this fails

tokenizer.decode(tokenizer.encode("[SEP]"))

```

The last command gives this error:

```

miniconda3/envs/deepnlg/lib/python3.7/site-packages/transformers/tokenization_utils.py", line 937, in decode

text = text.replace(self._cls_token, self._sep_token)

TypeError: replace() argument 1 must be str, not None

```
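The traceback above can be reproduced without the library. This is only a minimal sketch of the failure mode, assuming the unset `cls_token` reaches `str.replace` as `None`; `safe_replace` is a hypothetical helper written for illustration, not the fix that was applied on master.

```python
def safe_replace(text, old, new):
    """Replace `old` with `new` only when both are real strings (hypothetical helper)."""
    if old is None or new is None:
        # A special token that was never set: leave the text untouched.
        return text
    return text.replace(old, new)


# Unguarded call: the first argument is None because no cls_token was added.
try:
    "[SEP]".replace(None, "[SEP]")
except TypeError as err:
    print("unguarded call failed:", err)

# Guarded call: the text passes through unchanged.
print("guarded call:", safe_replace("[SEP]", None, "[SEP]"))
```

With a guard of this kind, a tokenizer that only has a `sep_token` set would skip the replacement instead of raising.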

<!-- If you have a code sample, error messages, stack traces, please provide it here as well. -->

## Expected behavior

The expectation is that [SEP] is output from the `decode` function.

## Environment

* OS: OSX

* Python version: 3.7

* PyTorch version: 1.1.0

* PyTorch Transformers version (or branch): Master (2dc8cb87341223e86220516951bb4ad84f880b4a)

* Using GPU ? No

* Distributed or parallel setup ? No

* Any other relevant information:

## Additional context

<!-- Add any other context about the problem here. --> | 09-30-2019 14:18:37 | 09-30-2019 14:18:37 | Can't reproduce this on master now. Seems to be fixed.<|||||>Thanks a lot. It seems to be fixed. Now I get `'[SEP]'` and `' [SEP]'` consecutively with the first and the second command above. So we can close this issue. |

transformers | 1,381 | closed | how to train RoBERTa from scratch | ## ❓ Questions & Help

<!-- A clear and concise description of the question. -->

I want to train a RoBERTa model from scratch on a different language. Is there any implementation available here to do this? | 09-30-2019 14:09:17 | 09-30-2019 14:09:17 | https://github.com/pytorch/fairseq/blob/master/examples/roberta/README.pretraining.md<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

<|||||>You can now leave `--model_name_or_path` to None in `run_language_modeling.py` to train a model from scratch.

See also https://huggingface.co/blog/how-to-train<|||||>When I pass a new --config_name and --tokenizer_name, it shows me this error:

json.decoder.JSONDecodeError: Expecting value: line 1 column 1 (char 0)

Can anyone help me? |

transformers | 1,380 | closed | Confusing tokenizer result on single word | Not sure if this is expected, but it seems confusing to me:

```python

import transformers

t=transformers.AutoTokenizer.from_pretrained('roberta-base')

t.tokenize("mystery")

```

yields two tokens, `['my', 'stery']`.

Yet

```

t.tokenize("a mystery")

```

*also* yields two tokens, `['a', 'Ġmystery']`. I would have thought this should yield one more token than tokenizing "mystery" alone.

| 09-30-2019 04:38:54 | 09-30-2019 04:38:54 | Hey @malmaud I think #1196 can help you. The RoBERTa/GPT-2 tokenizer expects a space at the start. Without that, it sounds like you'll get strange behaviors.

To get the same output, in your first example, change it to

```

t.tokenize("mystery", add_prefix_space=True)

['Ġmystery']

```<|||||>That does work, thanks. I'm still confused why this doesn't work, though:

```

t.tokenize("<s> mystery </s>")

```

gives `['<s>', 'my', 'stery', '</s>']`<|||||>Hey @malmaud, spent some time going through the source code. So like above this gives the correct result:

```

t.tokenize("mystery", add_prefix_space=True)

['Ġmystery']

```

However

```

t.tokenize(" mystery")

['my', 'stery']

```

I thought these should be doing the same thing. In the tokenization_gpt2.py file, it says:

```

if add_prefix_space:

text = ' ' + text

```

This should give the same results in both cases. However, when I added a print(text) statement before and after that, I got these results (using your example now):

```

t.tokenize("<s> mystery")

mystery

mystery

['<s>', 'my', 'stery']

t.tokenize("<s> mystery", add_prefix_space=True)

mystery

mystery

['<s>', 'Ġmystery']

```
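The role of the leading space can be sketched without the library. This is a rough illustration only: `PATTERN` is an ASCII-only stand-in for the GPT-2 pre-tokenization regex, `pre_tokenize` is a hypothetical name, and the BPE merge step that splits `mystery` into `my` and `stery` is not modelled.

```python
import re

# Simplified, ASCII-only stand-in for the GPT-2 pre-tokenization pattern.
# An optional leading space is kept attached to the word that follows it.
PATTERN = re.compile(r" ?[A-Za-z]+| ?\d+| ?[^\sA-Za-z\d]+|\s+")


def pre_tokenize(text, add_prefix_space=False):
    """Split text into pre-tokens, rendering the space byte as 'Ġ' (hypothetical helper)."""
    if add_prefix_space:
        text = " " + text
    return [piece.replace(" ", "Ġ") for piece in PATTERN.findall(text)]


print(pre_tokenize("mystery"))                         # no leading space
print(pre_tokenize("mystery", add_prefix_space=True))  # leading space becomes part of the word
print(pre_tokenize("a mystery"))                       # the space belongs to the second word
```

In this sketch `mystery` and ` mystery` are different pre-tokens, so a vocabulary can hold the second as a single entry while the first still needs to be split.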

This means that even though we are putting a single word in with a leading space, something in the preprocessing is getting rid of the initial space(s). So we need to use the add_prefix_space=True in order to get the space back or else the function won't be using the string we are expecting it will be using.<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|

transformers | 1,379 | closed | TransfoXLCorpus requires pytorch to tokenize files | ## 🐛 Bug

The current TransfoXLCorpus code requires pytorch, and fails if it is not installed.

Model I am using (Bert, XLNet....): Transformer-XL

Language I am using the model on (English, Chinese....): Other

The problem arises when using:

* [ X ] my own modified scripts: I'm using a very simple script to read in text files, see code below

The task I am working on is:

* [ X ] my own task or dataset: I am attempting to build a corpus from my own dataset of long text sentences.

## To Reproduce

Steps to reproduce the behavior:

corpus = TransfoXLCorpus(lower_case=True, delimiter=" ")

corpus.build_corpus(EXAMPLE_DIR, "text8")

Traceback (most recent call last):

File "build_xl_corpus.py", line 26, in <module>

corpus.build_corpus(EXAMPLE_DIR, "text8")

File "/home/tom/.local/lib/python3.7/site-packages/transformers/tokenization_transfo_xl.py", line 521, in build_corpus

os.path.join(path, 'train.txt'), ordered=True, add_eos=False)

File "/home/tom/.local/lib/python3.7/site-packages/transformers/tokenization_transfo_xl.py", line 187, in encode_file

encoded.append(self.convert_to_tensor(symbols))

File "/home/tom/.local/lib/python3.7/site-packages/transformers/tokenization_transfo_xl.py", line 246, in convert_to_tensor

return torch.LongTensor(self.convert_tokens_to_ids(symbols))

NameError: name 'torch' is not defined

## Expected behavior

I did not expect this behavior to require pytorch
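One way the tokenizer could degrade gracefully is sketched below. This is an assumption about a possible design, not the library's behaviour: `ids_to_container` is a hypothetical helper that returns a `LongTensor` when PyTorch is importable and a plain list otherwise.

```python
try:
    import torch  # optional dependency
except ImportError:
    torch = None


def ids_to_container(ids):
    """Return a LongTensor when PyTorch is installed, else a plain list (hypothetical helper)."""
    ids = list(ids)
    if torch is None:
        # No PyTorch available: keep the ids as Python integers.
        return ids
    return torch.LongTensor(ids)


encoded = ids_to_container([3, 1, 2])
print(type(encoded).__name__)
```

Callers that only need the ids (for example, to write them to disk) could then run without PyTorch installed.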

## Environment

* OS: Ubuntu

* Python version:

* PyTorch version: None

* PyTorch Transformers version (or branch): 2.0.0

* Using GPU ? Yes

* Distributed or parallel setup ? No

* Any other relevant information:

| 09-30-2019 00:23:32 | 09-30-2019 00:23:32 | This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|

transformers | 1,378 | closed | TFDistilBertForSequenceClassification - TypeError: len is not well defined for symbolic Tensors during model.fit() | ## 🐛 Bug

<!-- Important information -->

Model I am using (TFDistilBertForSequenceClassification):

Language I am using the model on (English):

The problem arises when using: model.fit()

* [ ] the official example scripts:

* [x] my own modified scripts:

The task I am working on is:

* [ ] an official GLUE/SQuAD task:

* [x] my own task or dataset:

## To Reproduce

Steps to reproduce the behavior:

1. create a random classification train,test set

2. get the pretrained TFDistilBertForSequenceClassification model

3. call fit() on the model for finetuning

```python

x_train = np.random.randint(2000, size=(100, 12))

x_train[:,0]=101

x_train[:,11]=102

y_train = np.random.randint(2, size=100)

model = TFDistilBertForSequenceClassification.from_pretrained('distilbert-base-uncased',num_labels = 2)

model.compile()

model.fit(x_train,y_train,epochs = 1,batch_size = 32,verbose=1)

```

```

TypeError: in converted code:

relative to /usr/local/lib/python3.6/dist-packages:

transformers/modeling_tf_distilbert.py:680 call *

distilbert_output = self.distilbert(inputs, **kwargs)

tensorflow_core/python/keras/engine/base_layer.py:842 __call__

outputs = call_fn(cast_inputs, *args, **kwargs)

transformers/modeling_tf_distilbert.py:447 call *

tfmr_output = self.transformer([embedding_output, attention_mask, head_mask], training=training)

tensorflow_core/python/keras/engine/base_layer.py:891 __call__

outputs = self.call(cast_inputs, *args, **kwargs)

transformers/modeling_tf_distilbert.py:382 call

layer_outputs = layer_module([hidden_state, attn_mask, head_mask[i]], training=training)

tensorflow_core/python/keras/engine/base_layer.py:891 __call__

outputs = self.call(cast_inputs, *args, **kwargs)

transformers/modeling_tf_distilbert.py:324 call

sa_output = self.attention([x, x, x, attn_mask, head_mask], training=training)

tensorflow_core/python/keras/engine/base_layer.py:891 __call__

outputs = self.call(cast_inputs, *args, **kwargs)

transformers/modeling_tf_distilbert.py:229 call

assert 2 <= len(tf.shape(mask)) <= 3

tensorflow_core/python/framework/ops.py:741 __len__

"shape information.".format(self.name))

TypeError: len is not well defined for symbolic Tensors. (tf_distil_bert_for_sequence_classification/distilbert/transformer/layer_._0/attention/Shape_2:0) Please call `x.shape` rather than `len(x)` for shape information.

```

## Expected behavior

<!-- A clear and concise description of what you expected to happen. -->

## Environment

* OS: Colab Notebook

* Python version:3.6.8

* PyTorch version:N/A

* Tensorflow version:tf-nightly-gpu-2.0-preview

* PyTorch Transformers version (or branch): 2.0.0

* Using GPU ? yes

* Distributed or parallel setup ? No

## Additional context

Calling the model directly with the input as mentioned in the example model doc works fine | 09-29-2019 18:44:18 | 09-29-2019 18:44:18 | so, how to solve this problem?<|||||>Should be solved on master and the latest release. |

transformers | 1,377 | closed | Error when calculate tokens_id and Mask LM | ## 🐛 Bug

<!-- Important information -->

Model I am using (DistilBert):

Language I am using the model on (English):

The problem arises when using: Distiller.prepare_batch()

An error occurs when token_ids is masked by the masked LM matrix

* the official example scripts:

_token_ids_real = token_ids[pred_mask]

* my own modified scripts:

_token_ids_real=torch.mul(token_ids, pred_mask)

The task I am working on is:

* [GLUE ] an official GLUE/SQuAD task: (give the name)

* [ ] my own task or dataset: (give details)

## To Reproduce

Steps to reproduce the behavior:

1. `pred_mask` is a matrix of 0s and 1s.

The operation `token_ids[pred_mask]` seems to produce a matrix of repeated values instead of masking `token_ids`.
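The three behaviours that can be confused here are sketched below in plain Python lists. This is one possible reading of the report, assuming `pred_mask` might be an integer tensor rather than a byte/bool mask. The helper names are hypothetical and the lists stand in for tensors.

```python
token_ids = [101, 2023, 2003, 102]
pred_mask = [0, 1, 1, 0]


def select_by_mask(values, mask):
    """Boolean-mask semantics: keep only the positions where the mask is set."""
    return [v for v, m in zip(values, mask) if m]


def index_by_int(values, indices):
    """Integer-index semantics: treat every mask entry as a position to look up."""
    return [values[i] for i in indices]


def multiply_by_mask(values, mask):
    """Element-wise product: same length, unmasked positions become 0."""
    return [v * m for v, m in zip(values, mask)]


print(select_by_mask(token_ids, pred_mask))    # only the masked-LM targets
print(index_by_int(token_ids, pred_mask))      # repeats entries 0 and 1
print(multiply_by_mask(token_ids, pred_mask))  # zeros elsewhere
```

If the mask is treated as integer indices, only the first two entries of `token_ids` are ever returned, which would match the repeated values described above. The element-wise product keeps the shape but returns zeros rather than a compact list of targets, so it is not equivalent to boolean selection.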

<!-- If you have a code sample, error messages, stack traces, please provide it here as well. -->

## Expected behavior

<!-- A clear and concise description of what you expected to happen. -->

## Environment

* OS: Win10

* Python version: 3.6

* PyTorch version: 1.1

* PyTorch Transformers version (or branch): 2.0.0

* Using GPU ? Yes

* Distributed or parallel setup ? No

* Any other relevant information:

## Additional context

<!-- Add any other context about the problem here. --> | 09-29-2019 15:28:18 | 09-29-2019 15:28:18 | This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|

transformers | 1,376 | closed | Is it save the best model when used example like run_glue? | ## ❓ Questions & Help

<!-- A clear and concise description of the question. -->

I read the code of `run_glue.py`, and I think it only saves model checkpoints and the model at the last step.

Am I wrong, or do I have to do something else to save the best model? | 09-29-2019 13:21:15 | 09-29-2019 13:21:15 | |

transformers | 1,375 | closed | cannot import name 'TFBertForSequenceClassification' | I am unable to import TFBertForSequenceClassification.

from transformers import TFBertForSequenceClassification shows an error of cannot import name 'TFBertForSequenceClassification' | 09-29-2019 12:43:32 | 09-29-2019 12:43:32 | Hi! The TensorFlow components are only available when you have TF2 installed on your system. Could you please check that you have it in the environment in which you're running your code?<|||||>It worked. Thanks |

transformers | 1,374 | closed | Fix run_glue.py on QNLI part | In the QNLI task, the ids that should be truncated are those of the pair, because that is the long one. Otherwise we can't load the QNLI dataset successfully. | 09-29-2019 12:39:19 | 09-29-2019 12:39:19 | This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|

transformers | 1,373 | closed | Fixed critical css font-family issues | Fixed critical css font-family issues to ensure compatibility with multiple web browsers | 09-29-2019 11:51:32 | 09-29-2019 11:51:32 | Amazing! |

transformers | 1,372 | closed | Simplify code by using six.string_types | https://six.readthedocs.io/#six.string_types | 09-29-2019 08:40:51 | 09-29-2019 08:40:51 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1372?src=pr&el=h1) Report

> Merging [#1372](https://codecov.io/gh/huggingface/transformers/pull/1372?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/fd97761c5a977fd22df789d2851cf57c7c9c0930?src=pr&el=desc) will **increase** coverage by `1.42%`.

> The diff coverage is `83.33%`.


```diff

@@ Coverage Diff @@

## master #1372 +/- ##

==========================================

+ Coverage 84.74% 86.16% +1.42%

==========================================

Files 91 91

Lines 13593 13593

==========================================

+ Hits 11519 11713 +194

+ Misses 2074 1880 -194

```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/1372?src=pr&el=tree) | Coverage Δ | |

|---|---|---|

| [transformers/tokenization\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/1372/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL3Rva2VuaXphdGlvbl91dGlscy5weQ==) | `91.43% <83.33%> (ø)` | :arrow_up: |

| [transformers/modeling\_openai.py](https://codecov.io/gh/huggingface/transformers/pull/1372/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX29wZW5haS5weQ==) | `81.75% <0%> (+1.35%)` | :arrow_up: |

| [transformers/modeling\_ctrl.py](https://codecov.io/gh/huggingface/transformers/pull/1372/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX2N0cmwucHk=) | `95.45% <0%> (+2.27%)` | :arrow_up: |

| [transformers/modeling\_xlnet.py](https://codecov.io/gh/huggingface/transformers/pull/1372/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3hsbmV0LnB5) | `73.28% <0%> (+2.46%)` | :arrow_up: |

| [transformers/modeling\_roberta.py](https://codecov.io/gh/huggingface/transformers/pull/1372/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3JvYmVydGEucHk=) | `80.57% <0%> (+15.1%)` | :arrow_up: |

| [transformers/tests/modeling\_tf\_common\_test.py](https://codecov.io/gh/huggingface/transformers/pull/1372/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL3Rlc3RzL21vZGVsaW5nX3RmX2NvbW1vbl90ZXN0LnB5) | `96.8% <0%> (+17.02%)` | :arrow_up: |

| [transformers/modeling\_tf\_pytorch\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/1372/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3RmX3B5dG9yY2hfdXRpbHMucHk=) | `92.95% <0%> (+83.09%)` | :arrow_up: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/1372?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1372?src=pr&el=footer). Last update [fd97761...ba6f2d6](https://codecov.io/gh/huggingface/transformers/pull/1372?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

<|||||>We'll handle this by dropping python2 support in the next release (and using flake8) cc @aaugustin |

transformers | 1,371 | closed | Make activation functions available from modeling_utils (PyTorch) | * This commit replaces references to PyTorch activation functions/modules by a dict of functions that lives in `modeling_utils`. This ensures that all activation functions are available to all modules, particularly custom functions such as swish and new_gelu.

* In addition, when available (PT1.2) the native PyTorch gelu function will be used - it supports a CPP/CUDA implementation.
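The idea of a single name-to-function mapping can be sketched as follows. This is an illustration under stated assumptions, not the code in this PR: the functions operate on Python floats rather than tensors, and the key names (`gelu`, `gelu_new`, `swish`, `relu`) are assumed for the example.

```python
import math


def gelu(x):
    """Exact GELU using the Gaussian error function."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu_new(x):
    """Tanh approximation of GELU."""
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))


def swish(x):
    """Swish: x * sigmoid(x)."""
    return x / (1.0 + math.exp(-x))


def relu(x):
    return max(0.0, x)


# Single lookup table: every module resolves its activation by name from here.
ACT2FN = {"gelu": gelu, "gelu_new": gelu_new, "swish": swish, "relu": relu}

activation = ACT2FN["gelu_new"]
print(activation(1.0))
```

Resolving activations by name in one place means a config string such as `"swish"` would behave the same in every model file.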

**NOTE** that this replaces all `nn.Module`'s by bare functions except for one which was required for testing to be of the type `nn.Module`. If requested, this can be reverted so that only function calls are replaced by ACT2FN functions, and that existing `nn.Module`s are untouched.

**NOTE** that one would thus also expect that _all_ usages of activation functions are taken from `ACT2FN` for consistency's sake.

**NOTE** since the Module counter-part of PyTorch's GeLU [isn't available (yet)](https://github.com/pytorch/pytorch/pull/20665#issuecomment-536359684), it might be worth waiting to implement this pull, and then use Modules and functions in the right places where one would expect, i.e. `Module` when part of architecture, function when processing other kinds of data. | 09-29-2019 07:40:10 | 09-29-2019 07:40:10 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1371?src=pr&el=h1) Report

> Merging [#1371](https://codecov.io/gh/huggingface/transformers/pull/1371?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/ae50ad91ea2fedb64ecd2e7c8e2d0d4778dc03aa?src=pr&el=desc) will **increase** coverage by `0.97%`.

> The diff coverage is `85.71%`.


```diff

@@ Coverage Diff @@

## master #1371 +/- ##

==========================================

+ Coverage 83.76% 84.74% +0.97%

==========================================

Files 84 84

Lines 12596 12559 -37

==========================================

+ Hits 10551 10643 +92

+ Misses 2045 1916 -129

```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/1371?src=pr&el=tree) | Coverage Δ | |

|---|---|---|

| [transformers/modeling\_openai.py](https://codecov.io/gh/huggingface/transformers/pull/1371/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX29wZW5haS5weQ==) | `80.41% <100%> (ø)` | :arrow_up: |

| [transformers/modeling\_xlnet.py](https://codecov.io/gh/huggingface/transformers/pull/1371/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3hsbmV0LnB5) | `72.02% <100%> (+0.77%)` | :arrow_up: |

| [transformers/modeling\_gpt2.py](https://codecov.io/gh/huggingface/transformers/pull/1371/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX2dwdDIucHk=) | `83.88% <100%> (-0.11%)` | :arrow_down: |

| [transformers/modeling\_roberta.py](https://codecov.io/gh/huggingface/transformers/pull/1371/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3JvYmVydGEucHk=) | `71.01% <100%> (+5.54%)` | :arrow_up: |

| [transformers/modeling\_distilbert.py](https://codecov.io/gh/huggingface/transformers/pull/1371/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX2Rpc3RpbGJlcnQucHk=) | `95.8% <100%> (-0.03%)` | :arrow_down: |

| [transformers/modeling\_transfo\_xl.py](https://codecov.io/gh/huggingface/transformers/pull/1371/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3RyYW5zZm9feGwucHk=) | `75.16% <100%> (ø)` | :arrow_up: |

| [transformers/modeling\_bert.py](https://codecov.io/gh/huggingface/transformers/pull/1371/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX2JlcnQucHk=) | `88.41% <100%> (+0.23%)` | :arrow_up: |

| [transformers/modeling\_xlm.py](https://codecov.io/gh/huggingface/transformers/pull/1371/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3hsbS5weQ==) | `88.36% <100%> (-0.07%)` | :arrow_down: |

| [transformers/modeling\_transfo\_xl\_utilities.py](https://codecov.io/gh/huggingface/transformers/pull/1371/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3RyYW5zZm9feGxfdXRpbGl0aWVzLnB5) | `54.16% <37.5%> (+0.27%)` | :arrow_up: |

| [transformers/modeling\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/1371/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3V0aWxzLnB5) | `92.57% <90%> (-0.12%)` | :arrow_down: |

| ... and [7 more](https://codecov.io/gh/huggingface/transformers/pull/1371/diff?src=pr&el=tree-more) | |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/1371?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1371?src=pr&el=footer). Last update [ae50ad9...716d783](https://codecov.io/gh/huggingface/transformers/pull/1371?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

<|||||>Unstale. <|||||>Would feel syntactically cleaner if we could do `ACT2FN.gelu()` instead of a dict (also gives some IDE goodness like autocomplete) (I guess through a class or namespace or something), what do you guys think?<|||||>> Would feel syntactically cleaner if we could do `ACT2FN.gelu()` instead of a dict (also gives some IDE goodness like autocomplete) (I guess through a class or namespace or something), what do you guys think?

Sounds good but note that this is not something I introduced. The ACT2FN dict already existed, but wasn't used consistently it seemed.<|||||>Ah yeah, I see. Would you want to do this change, if you have the time/bandwidth? (+ rebasing on current master so we can merge easily?)<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

<|||||>AFAICT, this has been done by @sshleifer on master. Re-open if necessary! |

transformers | 1,370 | closed | considerd to add albert? | ## 🚀 Feature

<!-- A clear and concise description of the feature proposal. Please provide a link to the paper and code in case they exist. -->

## Motivation

<!-- Please outline the motivation for the proposal. Is your feature request related to a problem? e.g., I'm always frustrated when [...]. If this is related to another GitHub issue, please link here too. -->

## Additional context

<!-- Add any other context or screenshots about the feature request here. --> | 09-29-2019 02:21:47 | 09-29-2019 02:21:47 | Would definitely love to see an implementation of ALBERT added to this repository. Just for completeness:

* paper: https://arxiv.org/abs/1909.11942

* reddit: https://www.reddit.com/r/MachineLearning/comments/d9tdfo/albert_a_lite_bert_for_selfsupervised_learning_of/

* medium: https://medium.com/syncedreview/googles-albert-is-a-leaner-bert-achieves-sota-on-3-nlp-benchmarks-f64466dd583

That said, it could be even more interesting to implement the core improvements (factorized embedding parameterization, cross-layer parameter sharing) from ALBERT in (some?/all?) other transformers as optional features?

<|||||>Knowing how fast the team works, I would expect ALBERT to be implemented quite soon. That being said, I haven't had time to read the ALBERT paper yet, so it might be more difficult than previous BERT iterations such as distilbert and RoBERTa.<|||||>I think ALBERT is very cool! Expect...<|||||>And in pytorch (using code from this repo and weights from brightmart) https://github.com/lonePatient/albert_pytorch<|||||>Any Update on the progress?<|||||>The ALBERT paper will be presented at ICLR in April 2020. From what I last heard, the huggingface team has been talking with the people over at Google AI to share the details of the model, but I can imagine that the researchers rather wait until the paper has been presented. One of those reasons being that they want to get citations from their ICLR talk rather than an arXiv citation which, in the field, is "worth less" than a big conference proceeding.

For now, just be patient. I am sure that the huggingface team will have a big announcement (follow their Twitter/LinkedIn channels) with a new version bump. No need to keep bumping this topic.<|||||>https://github.com/interviewBubble/Google-ALBERT<|||||>The official code and models got released :slightly_smiling_face:

https://github.com/google-research/google-research/tree/master/albert <|||||>[WIP]

ALBERT in tensorflow 2.0

https://github.com/kamalkraj/ALBERT-TF2.0

<|||||>https://github.com/lonePatient/albert_pytorch

Dataset: MNLI

Model: ALBERT_BASE_V2

Dev accuracy : 0.8418

Dataset: SST-2

Model: ALBERT_BASE_V2

Dev accuracy :0.926<|||||>PR was created, see here:

https://github.com/huggingface/transformers/pull/1683<|||||>> [WIP]

> ALBERT in tensorflow 2.0

> https://github.com/kamalkraj/ALBERT-TF2.0

Version 2 weights added.

Support for SQuAD 1.1 and 2.0 added.

Reproduces the same results as the paper. From my experiments, the ALBERT model is very sensitive to hyperparameters such as batch size. Fine-tuning uses AdamW by default, as in the original repo. AdamW performs better than LAMB for model fine-tuning.<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|

transformers | 1,369 | closed | Update README.md | Lines 183 - 200, fixed indentation. Line 198, replaced `tokenizer_class` with `BertTokenizer`, since `tokenizer_class` is not defined in the loop it belongs to. | 09-28-2019 23:37:05 | 09-28-2019 23:37:05 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1369?src=pr&el=h1) Report

> Merging [#1369](https://codecov.io/gh/huggingface/transformers/pull/1369?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/ae50ad91ea2fedb64ecd2e7c8e2d0d4778dc03aa?src=pr&el=desc) will **increase** coverage by `0.92%`.

> The diff coverage is `n/a`.


```diff

@@ Coverage Diff @@

## master #1369 +/- ##

==========================================

+ Coverage 83.76% 84.69% +0.92%

==========================================

Files 84 84

Lines 12596 12596

==========================================

+ Hits 10551 10668 +117

+ Misses 2045 1928 -117

```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/1369?src=pr&el=tree) | Coverage Δ | |

|---|---|---|

| [transformers/modeling\_xlnet.py](https://codecov.io/gh/huggingface/transformers/pull/1369/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3hsbmV0LnB5) | `72.14% <0%> (+0.89%)` | :arrow_up: |

| [transformers/modeling\_roberta.py](https://codecov.io/gh/huggingface/transformers/pull/1369/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3JvYmVydGEucHk=) | `71.22% <0%> (+5.75%)` | :arrow_up: |

| [transformers/tests/modeling\_tf\_common\_test.py](https://codecov.io/gh/huggingface/transformers/pull/1369/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL3Rlc3RzL21vZGVsaW5nX3RmX2NvbW1vbl90ZXN0LnB5) | `95% <0%> (+7.5%)` | :arrow_up: |

| [transformers/modeling\_tf\_pytorch\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/1369/diff?src=pr&el=tree#diff-dHJhbnNmb3JtZXJzL21vZGVsaW5nX3RmX3B5dG9yY2hfdXRpbHMucHk=) | `76.92% <0%> (+66.43%)` | :arrow_up: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/1369?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1369?src=pr&el=footer). Last update [ae50ad9...d1176d5](https://codecov.io/gh/huggingface/transformers/pull/1369?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>Great, thanks for updating the README! |

transformers | 1,368 | closed | Tried to import TFBertForPreTraining in google colab | Tried to import TFBertForPreTraining and received an error

from transformers import BertTokenizer, TFBertForPreTraining

---------------------------------------------------------------------------

ImportError Traceback (most recent call last)

<ipython-input-24-91f8709e090f> in <module>()

----> 1 from transformers import BertTokenizer, TFBertForPreTraining

ImportError: cannot import name 'TFBertForPreTraining'

---------------------------------------------------------------------------

NOTE: If your import is failing due to a missing package, you can

manually install dependencies using either !pip or !apt.

To view examples of installing some common dependencies, click the

"Open Examples" button below.

--------------------------------------------------------------------------- | 09-28-2019 23:34:22 | 09-28-2019 23:34:22 | Hey @mandavachetana its not just a google colab thing. Take a look here #1375 You need to make sure you are using tensorflow 2.0 and it should work.<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|

transformers | 1,367 | closed | Model does not train when using new BertModel, but does with old BertModel | ## 📚 Migration

I am currently working on using Transformers with Snorkel's classification library [https://github.com/snorkel-team/snorkel](https://github.com/snorkel-team/snorkel) (for MTL learning in the future). I currently am trying to troubleshoot why the model is not learning, and so have my experiment set up such that the Snorkel library learns one task, essentially training a BERT model and linear layer.

The code for this experiment can be found at [https://github.com/Peter-Devine/test_cls_snorkel_mtl]( https://github.com/Peter-Devine/test_cls_snorkel_mtl ). To run it, you will need torch, snorkel, numpy, pytorch_pretrained_bert and transformers.

My problem is as follows.

When I run the code in `test_cls_snorkel_mtl/tutorials/ISEAR_pretrain_tutorial.py`, my code runs fine and the model's validation accuracy scores are good. This is because I am using the old pytorch_pretrained_bert BertModel in `test_cls_snorkel_mtl/modules/bert_module.py`. If you uncomment line 6 of `test_cls_snorkel_mtl/modules/bert_module.py` and use the new transformers BertModel, then running `test_cls_snorkel_mtl/tutorials/ISEAR_pretrain_tutorial.py` will result in a model that never converges and bad validation accuracy.

From reading the Snorkel code, I cannot find the reason why this would be. What are the major changes in training a model between pytorch_pretrained_bert and transformers? Do backward passes etc. work the same way in both models?

Thanks | 09-28-2019 20:04:12 | 09-28-2019 20:04:12 | You can check the two migration guides, they explain all the differences:

- https://github.com/huggingface/transformers#Migrating-from-pytorch-transformers-to-transformers

- https://github.com/huggingface/transformers#migrating-from-pytorch-pretrained-bert-to-transformers <|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|

transformers | 1,366 | closed | fix redundant initializations of Embeddings in RobertaEmbeddings | Based on the discussion with @julien-c in #1258, this PR fixes the issue of redundant multiple initializations of the embeddings in the constructor of `RobertaEmbeddings` by removing the constructor call of its parent class (i.e., `BertEmbeddings`) and creating `token_type_embeddings`, `LayerNorm`, and `dropout` in the constructor. | 09-28-2019 16:29:23 | 09-28-2019 16:29:23 | Sorry, I will fix this |

transformers | 1,365 | closed | Why add the argument 'head_mask' and when to use this argument | ## ❓ Questions & Help

<!-- A clear and concise description of the question. -->

**head_mask**: (`optional`) ``torch.FloatTensor`` of shape ``(num_heads,)`` or ``(num_layers, num_heads)``:

Mask to nullify selected heads of the self-attention modules.

Mask values selected in ``[0, 1]``:

``1`` indicates the head is **not masked**, ``0`` indicates the head is **masked**. | 09-28-2019 13:40:38 | 09-28-2019 13:40:38 | This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|
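
For the `head_mask` question in issue 1,365 above, the docstring says a value of ``0`` nullifies a head and ``1`` keeps it. The following is a minimal pure-Python sketch of that idea, assuming the mask is applied by multiplying each head's attention probabilities by its mask value. The function name `apply_head_mask` and the toy numbers are hypothetical and only for illustration; they are not part of the library. Masking heads this way is typically useful for ablation or pruning experiments, where you want to see how much a given head contributes.

```python
def apply_head_mask(attention_probs, head_mask):
    """Scale each head's attention probabilities by its mask value.

    attention_probs: list of heads, each a (seq_len x seq_len) nested list.
    head_mask: one float per head; 1.0 keeps the head, 0.0 nullifies it.
    Hypothetical helper for illustration only.
    """
    if len(attention_probs) != len(head_mask):
        raise ValueError("head_mask needs exactly one value per head")
    return [
        [[prob * mask_value for prob in row] for row in head]
        for head, mask_value in zip(attention_probs, head_mask)
    ]


# Two heads, each attending over a 2-token sequence (toy values).
attention_probs = [
    [[0.9, 0.1], [0.4, 0.6]],  # head 0
    [[0.5, 0.5], [0.2, 0.8]],  # head 1
]
head_mask = [1.0, 0.0]  # keep head 0, nullify head 1

masked = apply_head_mask(attention_probs, head_mask)
```

With this mask, head 0 is unchanged and every attention weight of head 1 becomes zero, so head 1 contributes nothing to the layer output.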

transformers | 1,364 | closed | Is there any plan for Roberta in SQuAD? | ## 🚀 Feature

<!-- A clear and concise description of the feature proposal. Please provide a link to the paper and code in case they exist. -->

Hello, thanks for the RoBERTa implementation. I would like to know whether there is any plan to support RoBERTa on SQuAD, because adapting it is complex. I simply changed the run_squad code in the same way as the run_glue code, and I got some bugs. fairseq doesn't provide official code for this either. I would really like to know how to use RoBERTa on SQuAD with transformers.

## Motivation

<!-- Please outline the motivation for the proposal. Is your feature request related to a problem? e.g., I'm always frustrated when [...]. If this is related to another GitHub issue, please link here too. -->

## Additional context

<!-- Add any other context or screenshots about the feature request here. --> | 09-28-2019 12:56:27 | 09-28-2019 12:56:27 | |

transformers | 1,363 | closed | Why is RoBERTa's max_position_embeddings size 512+2=514? | ## ❓ Questions & Help

<!-- A clear and concise description of the question. -->

When I read the RoBERTa code, I have a question about `padding_idx = 1` that I don't understand well, and the comment in the code still confuses me. | 09-28-2019 11:52:45 | 09-28-2019 11:52:45 | What's your precise question?<|||||>> What's your precise question?

The meaning of `self.padding_idx` in modeling_roberta.py.<|||||>It's the position of the padding vector. It's not unique to RoBERTa but far more general, especially for embeddings. Take a look at [the PyTorch documentation](https://pytorch.org/docs/stable/nn.html#embedding).<|||||>> It's the position of the padding vector. It's not unique to RoBERTa but far more general, especially for embeddings. Take a look at [the PyTorch documentation](https://pytorch.org/docs/stable/nn.html#embedding).

I know that, but I am confused about why it is 1 while \<s\> is 0. Is it ignored? And why is the max_position_embeddings size 512+2=514?<|||||>Because that's their index [in the vocab](https://s3.amazonaws.com/models.huggingface.co/bert/roberta-base-vocab.json). The max_position_embeddings size is indeed 514, I'm not sure why. The tokenizer seems to handle text correctly with a max of 512. Perhaps one of the developers can help with that. I would advise you to change the title of your topic.

https://github.com/huggingface/transformers/blob/ae50ad91ea2fedb64ecd2e7c8e2d0d4778dc03aa/transformers/tokenization_roberta.py#L84-L85<|||||>@LysandreJik can chime in if I’m wrong, but afaik `max_position_embeddings` is just the name of the variable that we use to encode the size of the embedding matrix. Max_len is correctly set to 512.<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

<|||||>Answer here in case anyone from the future is curious: https://github.com/pytorch/fairseq/issues/1187<|||||>> Answer here in case anyone from the future is curious: [pytorch/fairseq#1187](https://github.com/pytorch/fairseq/issues/1187)

@morganmcg1 Thanks for this. I was getting all kinds of CUDA errors because I had set `max_position_embeddings=512`; now that I have set it to 514 it's running OK... |
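
As a rough illustration of the 512+2=514 arithmetic discussed in this thread, here is a small sketch. It assumes, following the explanation linked in pytorch/fairseq#1187, that position ids for real tokens start at `padding_idx + 1` and that padding tokens keep `padding_idx` as their position. The function name `position_ids` and the placeholder token id `100` are hypothetical.

```python
PADDING_IDX = 1   # index of the padding token in the RoBERTa vocab
MAX_TOKENS = 512  # maximum number of tokens the tokenizer produces


def position_ids(token_ids, padding_idx=PADDING_IDX):
    """Assign positions padding_idx+1, padding_idx+2, ... to non-pad tokens.

    Padding tokens keep padding_idx as their position.
    Hypothetical helper written for illustration, not library code.
    """
    ids = []
    next_position = padding_idx + 1
    for token in token_ids:
        if token == padding_idx:
            ids.append(padding_idx)
        else:
            ids.append(next_position)
            next_position += 1
    return ids


# A short padded sequence: <s>=0, a word, </s>=2, then two pads.
short_positions = position_ids([0, 100, 2, 1, 1])

# A full-length sequence of 512 non-pad tokens.
full_positions = position_ids([0] + [100] * (MAX_TOKENS - 2) + [2])

# The embedding table must be able to index the largest position id.
required_table_size = max(full_positions) + 1
```

Under these assumptions the largest position id for a 512-token input is 513, so the position embedding table needs 514 rows, which is consistent with the CUDA indexing errors reported above when the table only had 512 rows.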

transformers | 1,362 | closed | fix link | 09-28-2019 08:22:16 | 09-28-2019 08:22:16 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1362?src=pr&el=h1) Report

> Merging [#1362](https://codecov.io/gh/huggingface/transformers/pull/1362?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/a6a6d9e6382961dc92a1a08d1bab05a52dc815f9?src=pr&el=desc) will **not change** coverage.

> The diff coverage is `n/a`.


```diff

@@ Coverage Diff @@

## master #1362 +/- ##

=======================================

Coverage 84.69% 84.69%

=======================================

Files 84 84

Lines 12596 12596

=======================================

Hits 10668 10668

Misses 1928 1928

```

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/1362?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1362?src=pr&el=footer). Last update [a6a6d9e...60f7916](https://codecov.io/gh/huggingface/transformers/pull/1362?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>👍 |

|

transformers | 1,361 | closed | distil-finetuning in run_squad | - Add the option for a double loss: fine-tuning + distillation from a larger model fine-tuned on SQuAD.

- Fix `inputs` for `DistilBERT` (also see fix in `run_glue.py` 702f589848baba97ea4897aa3f0bb937e1ec3bcf) | 09-27-2019 21:47:59 | 09-27-2019 21:47:59 | cf https://github.com/huggingface/transformers/issues/1193#issuecomment-534740929<|||||>Ok, as discussed let's copy this script to the `examples/distillation` folder and keep `run_squad` barebone for now as it's going to evolve in the short term.<|||||># [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1361?src=pr&el=h1) Report

> Merging [#1361](https://codecov.io/gh/huggingface/transformers/pull/1361?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/2dc8cb87341223e86220516951bb4ad84f880b4a?src=pr&el=desc) will **not change** coverage.

> The diff coverage is `n/a`.


```diff

@@ Coverage Diff @@

## master #1361 +/- ##

=======================================

Coverage 84.69% 84.69%

=======================================

Files 84 84

Lines 12596 12596

=======================================

Hits 10668 10668

Misses 1928 1928

```

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/1361?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1361?src=pr&el=footer). Last update [2dc8cb8...b4df865](https://codecov.io/gh/huggingface/transformers/pull/1361?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>no squash @VictorSanh? 😬 |
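
The "double loss" described in PR 1,361 above combines the usual fine-tuning loss with a distillation term from a larger teacher. The sketch below shows one common way such a combination is written, assuming a temperature-scaled KL divergence between teacher and student distributions. The names `combined_loss`, `alpha_ce`, `alpha_task`, and `temperature`, as well as the toy logits, are assumptions for illustration and may not match the script in the PR.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax, shifted by the max for numerical stability."""
    scaled = [x / temperature for x in logits]
    largest = max(scaled)
    exps = [math.exp(x - largest) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def combined_loss(student_logits, teacher_logits, task_loss,
                  temperature=2.0, alpha_ce=0.5, alpha_task=0.5):
    """Weighted sum of a distillation term and the supervised task loss.

    All parameter names and default weights are hypothetical.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # Multiplying by temperature**2 keeps the gradient scale comparable
    # across temperatures, a common convention in distillation.
    distill = kl_divergence(p_teacher, p_student) * temperature ** 2
    return alpha_ce * distill + alpha_task * task_loss


# Toy start-position logits over three tokens.
same = combined_loss([1.0, 2.0, 3.0], [1.0, 2.0, 3.0], task_loss=0.8)
different = combined_loss([3.0, 2.0, 1.0], [1.0, 2.0, 3.0], task_loss=0.8)
```

When the student already matches the teacher, the distillation term vanishes and only the weighted task loss remains; any disagreement adds a positive penalty on top of it.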

transformers | 1,360 | closed | Chunking Long Documents for Classification Tasks | ## 🚀 Feature

A way to process long documents for downstream classification tasks. One approach is to chunk long sequences with a specific stride similar to what is done in the run_squad example.

## Motivation

For classification tasks using datasets that are on average longer than 512 tokens, I believe it would improve performance.

## Additional context

https://github.com/google-research/bert/issues/27#issuecomment-435265194 | 09-27-2019 20:03:14 | 09-27-2019 20:03:14 | I'm not sure that I understand. As you say, you can see it implemented in the run_squad example. What else would you like? <|||||>Hello Bram,

I mean I want to apply it with a sequence classification task like BertForSequenceClassification, for example, versus what is being done in squad.

I don't think it should be too hard but I'm not exactly sure how a long document that is being chunked gets trained. Do we ignore the fact that these are chunks of the same document and just treat them as independent docs? Or do we do some sort of trick to join the tokens/embeddings with the first chunk?

How would this be implemented for sequence classification?<|||||>I quickly glanced over the `convert_examples_to_features` function, and it seems that, given some stride, different parts of the document are used as input. So, yes, as far as I can see they are treated as independent docs.

https://github.com/huggingface/transformers/blob/ae50ad91ea2fedb64ecd2e7c8e2d0d4778dc03aa/examples/utils_squad.py#L189-L397

<|||||>Isn't there a way to deal with long documents without ignoring the fact that the chunks represent the same doc?

Maybe something along the lines of https://finetune.indico.io/chunk.html?highlight=long or https://explosion.ai/blog/spacy-pytorch-transformers#batching<|||||>After a first look, I don't see how `spacy-pytorch-transformers` does anything special other than processing a document sentence by sentence. `finetune`'s approach might be what you are after (taking the mean over all the sliding windows), but as always: "a mean is just a mean", so the question remains how representative it is of the whole document. I am not saying that slicing is _better_ by any means, but averaging can distort "real" values greatly.<|||||>Yeah I see your point. I'm starting to think that maybe trying out chunking with a couple of different strides and maybe at inference time taking a voting approach would be a better option.

In any case, thank you for your feedback!<|||||>I agree that that might be the more efficient approach. No worries, thanks for the interesting question. If you think the question has been answered, please close it so it's easy to keep track of all open issues.<|||||>Hi, just to let you know that there is an option to manage strides in the `encode_plus` method. It handles special tokens and returns the overflowing elements in the `overflowing_tokens` field of the returned dictionary. |
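
The two options discussed in this thread (averaging over sliding windows versus voting across chunks) can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: `stride` here means the step between window starts, as in the run_squad example, and the helper names `chunk_tokens`, `majority_vote`, and `mean_scores` are hypothetical. The per-chunk predictions would come from a classifier such as BertForSequenceClassification, which is not shown.

```python
from collections import Counter


def chunk_tokens(tokens, max_len, stride):
    """Split tokens into windows of at most max_len, advancing by stride."""
    if not 0 < stride <= max_len:
        raise ValueError("stride must be in (0, max_len]")
    chunks = []
    start = 0
    while True:
        chunks.append(tokens[start:start + max_len])
        if start + max_len >= len(tokens):
            break  # this window already reaches the end of the document
        start += stride
    return chunks


def majority_vote(labels):
    """Return the most frequent per-chunk label (ties broken by first seen)."""
    return Counter(labels).most_common(1)[0][0]


def mean_scores(chunk_scores):
    """Average per-class scores over all chunks of one document."""
    n = len(chunk_scores)
    return [sum(column) / n for column in zip(*chunk_scores)]


# A 10-token "document" split into windows of 4 tokens with a step of 2.
chunks = chunk_tokens(list(range(10)), max_len=4, stride=2)

# Placeholder per-chunk predictions standing in for real model outputs.
voted = majority_vote(["pos", "neg", "pos", "pos"])
averaged = mean_scores([[0.25, 0.75], [0.5, 0.5]])
```

Voting keeps each chunk's decision discrete, while averaging keeps the score magnitudes; as noted above, neither is guaranteed to represent the whole document well, so it is worth comparing both on held-out data.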

transformers | 1,359 | closed | Update run_lm_finetuning.py | The previous method, just as phrased, did not exist in the class. | 09-27-2019 18:19:34 | 09-27-2019 18:19:34 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1359?src=pr&el=h1) Report

> Merging [#1359](https://codecov.io/gh/huggingface/transformers/pull/1359?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/ca559826c4188be8713e46f191ddf5f379c196e7?src=pr&el=desc) will **not change** coverage.

> The diff coverage is `n/a`.


```diff

@@ Coverage Diff @@

## master #1359 +/- ##

=======================================

Coverage 84.73% 84.73%

=======================================

Files 84 84

Lines 12573 12573

=======================================

Hits 10654 10654

Misses 1919 1919

```

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/1359?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/1359?src=pr&el=footer). Last update [ca55982...9478590](https://codecov.io/gh/huggingface/transformers/pull/1359?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>Thanks @dennymarcels! |