modelId (string, lengths 5 to 139) | author (string, lengths 2 to 42) | last_modified (timestamp[us, tz=UTC], 2020-02-15 11:33:14 to 2025-08-01 12:29:10) | downloads (int64, 0 to 223M) | likes (int64, 0 to 11.7k) | library_name (string, 547 classes) | tags (list, lengths 1 to 4.05k) | pipeline_tag (string, 55 classes) | createdAt (timestamp[us, tz=UTC], 2022-03-02 23:29:04 to 2025-08-01 12:28:04) | card (string, lengths 11 to 1.01M) |

|---|---|---|---|---|---|---|---|---|---|

Elitay/Reptilian

|

Elitay

| 2022-11-20T15:12:39Z | 0 | 0 | null |

[

"license:creativeml-openrail-m",

"region:us"

] | null | 2022-11-20T02:22:58Z |

---

license: creativeml-openrail-m

---

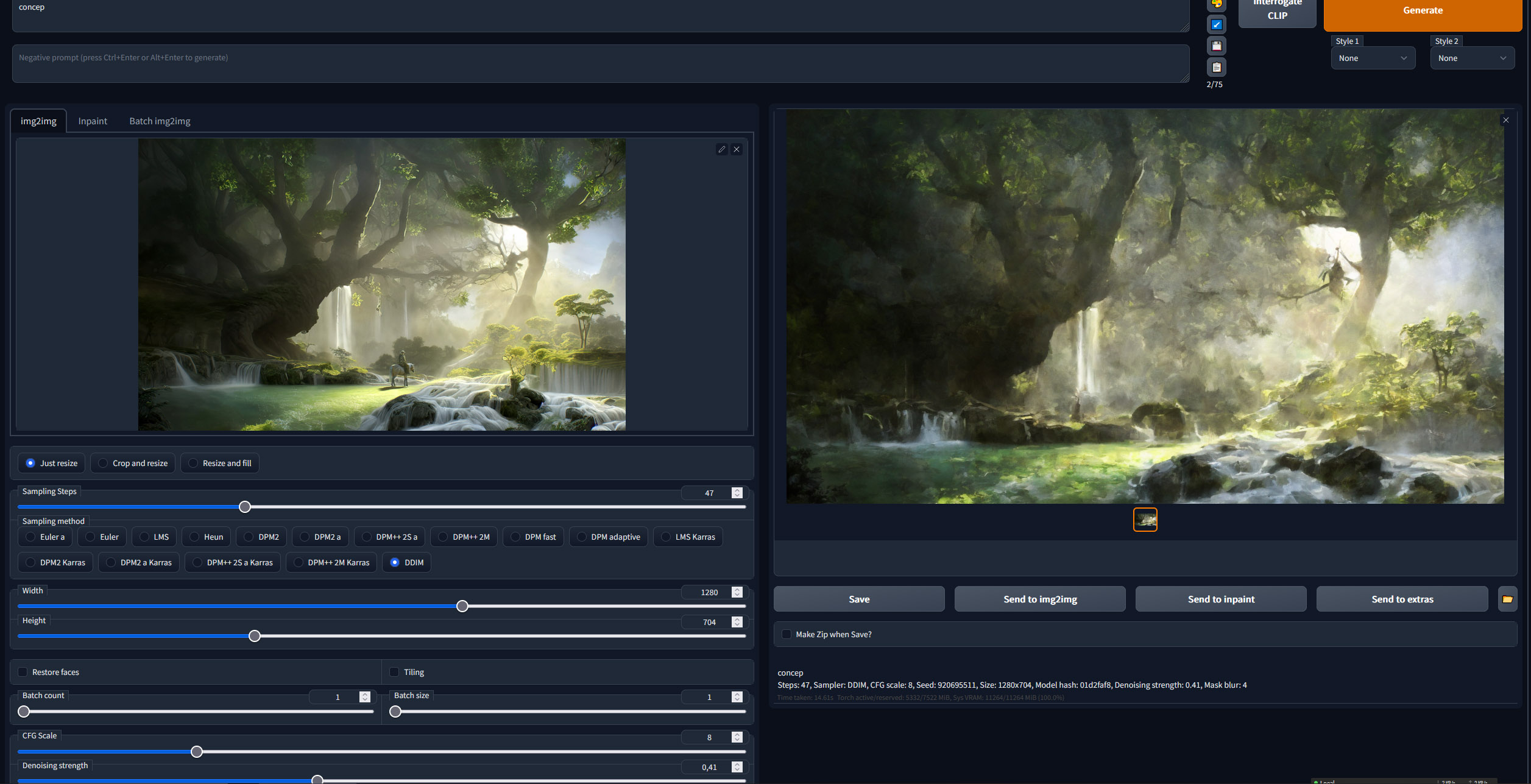

Trained with Dreambooth on "kobold", "lizardfolk", and "dragonborn" for 6000, 10000, or 14000 steps. I recommend the 14000-step model with a CFG of 4-8. If you have difficulty getting certain elements into the image (e.g. hats), try the models that were trained for fewer steps.

You can also use a higher CFG when generating inked images, e.g. CFG 9 with "photo octane 3d render" in the negative prompt.

|

dpkmnit/bert-finetuned-squad

|

dpkmnit

| 2022-11-20T14:58:13Z | 61 | 0 |

transformers

|

[

"transformers",

"tf",

"bert",

"question-answering",

"generated_from_keras_callback",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-11-18T06:19:21Z |

---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: dpkmnit/bert-finetuned-squad

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# dpkmnit/bert-finetuned-squad

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.7048

- Epoch: 1

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 66549, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: mixed_float16
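With `power: 1.0` and `cycle: False`, the `PolynomialDecay` entry above amounts to a straight-line decay from 2e-05 to 0.0 over 66549 steps. Below is a minimal pure-Python sketch of that schedule, assuming the standard Keras polynomial-decay formula; the function name `polynomial_decay` is hypothetical and is not the code that was used for training.

```python
def polynomial_decay(step, initial_lr=2e-05, decay_steps=66549,
                     end_lr=0.0, power=1.0):
    """Learning rate at `step` for a non-cycling polynomial decay (sketch)."""
    # Without cycling, steps past decay_steps are clamped to the end value.
    step = min(step, decay_steps)
    remaining = 1.0 - step / decay_steps
    return (initial_lr - end_lr) * remaining ** power + end_lr


# Print the rate at the start, near the midpoint, at the end, and beyond.
for s in (0, 33274, 66549, 100000):
    print(s, polynomial_decay(s))
```

The defaults mirror the values listed in the optimizer config; any other argument values would be assumptions.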

### Training results

| Train Loss | Epoch |

|:----------:|:-----:|

| 1.2092 | 0 |

| 0.7048 | 1 |

### Framework versions

- Transformers 4.24.0

- TensorFlow 2.9.1

- Datasets 2.7.0

- Tokenizers 0.13.2

|

blkpst/ddpm-butterflies-128

|

blkpst

| 2022-11-20T14:36:54Z | 4 | 0 |

diffusers

|

[

"diffusers",

"tensorboard",

"en",

"dataset:huggan/smithsonian_butterflies_subset",

"license:apache-2.0",

"diffusers:DDPMPipeline",

"region:us"

] | null | 2022-11-20T13:20:58Z |

---

language: en

license: apache-2.0

library_name: diffusers

tags: []

datasets: huggan/smithsonian_butterflies_subset

metrics: []

---

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# ddpm-butterflies-128

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library

on the `huggan/smithsonian_butterflies_subset` dataset.

## Intended uses & limitations

#### How to use

```python

# TODO: add an example code snippet for running this diffusion pipeline

```

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training data

[TODO: describe the data used to train the model]

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 16

- gradient_accumulation_steps: 1

- optimizer: AdamW with betas=(None, None), weight_decay=None and epsilon=None

- lr_scheduler: None

- lr_warmup_steps: 500

- ema_inv_gamma: None


- mixed_precision: fp16

### Training results

📈 [TensorBoard logs](https://huggingface.co/blackpansuto/ddpm-butterflies-128/tensorboard?#scalars)

|

Bauyrjan/wav2vec2-kazakh

|

Bauyrjan

| 2022-11-20T14:31:30Z | 192 | 1 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-11-11T05:35:48Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: wav2vec2-kazakh

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-kazakh

This model is a fine-tuned version of [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 30
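The `total_train_batch_size` of 32 listed above follows from the per-device batch size and the gradient accumulation steps. A small sketch of that arithmetic, assuming a single training device (the card does not state the device count, and the helper name is hypothetical):

```python
def total_train_batch_size(per_device_batch_size, gradient_accumulation_steps,
                           num_devices=1):
    """Number of examples that contribute to one optimizer update (sketch)."""
    return per_device_batch_size * gradient_accumulation_steps * num_devices


# train_batch_size=16 and gradient_accumulation_steps=2, as listed above.
print(total_train_batch_size(16, 2))
```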

### Training results

### Framework versions

- Transformers 4.11.3

- Pytorch 1.13.0+cu117

- Datasets 1.13.3

- Tokenizers 0.10.3

|

akreal/mbart-large-50-finetuned-media

|

akreal

| 2022-11-20T13:32:58Z | 101 | 0 |

transformers

|

[

"transformers",

"pytorch",

"mbart",

"text2text-generation",

"mbart-50",

"fr",

"dataset:MEDIA",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-11-20T13:14:49Z |

---

language:

- fr

tags:

- mbart-50

license: apache-2.0

datasets:

- MEDIA

metrics:

- cer

- cver

---

This model is the `mbart-large-50-many-to-many-mmt` model fine-tuned on the text part of the [MEDIA](https://catalogue.elra.info/en-us/repository/browse/ELRA-S0272/) spoken language understanding dataset.

The scores on the test set are 16.50% and 19.09% for CER and CVER respectively.

|

Western1234/Modelop

|

Western1234

| 2022-11-20T12:55:18Z | 0 | 0 | null |

[

"license:openrail",

"region:us"

] | null | 2022-11-20T12:53:42Z |

---

license: openrail

---

git lfs install

git clone https://huggingface.co/Western1234/Modelop

|

hungngocphat01/Checkpoint_zaloAI_11_19_2022

|

hungngocphat01

| 2022-11-20T11:59:05Z | 161 | 0 |

transformers

|

[

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"license:cc-by-nc-4.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-11-20T11:53:29Z |

---

license: cc-by-nc-4.0

tags:

- generated_from_trainer

model-index:

- name: Checkpoint_zaloAI_11_19_2022

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Checkpoint_zaloAI_11_19_2022

This model is a fine-tuned version of [nguyenvulebinh/wav2vec2-base-vietnamese-250h](https://huggingface.co/nguyenvulebinh/wav2vec2-base-vietnamese-250h) on an unknown dataset.

It achieves the following results on the evaluation set:

- eval_loss: 1.3926

- eval_wer: 0.6743

- eval_runtime: 23.1283

- eval_samples_per_second: 39.865

- eval_steps_per_second: 5.016

- epoch: 25.07

- step: 26000

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- num_epochs: 30

- mixed_precision_training: Native AMP

### Framework versions

- Transformers 4.17.0

- Pytorch 1.12.1+cu113

- Datasets 2.7.0

- Tokenizers 0.13.2

|

youa/CreatTitle

|

youa

| 2022-11-20T11:54:27Z | 1 | 0 | null |

[

"pytorch",

"license:bigscience-bloom-rail-1.0",

"region:us"

] | null | 2022-11-07T13:56:12Z |

---

license: bigscience-bloom-rail-1.0

---

|

zhiguoxu/bert-base-chinese-finetuned-ner-split_food

|

zhiguoxu

| 2022-11-20T09:32:56Z | 110 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"token-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-11-20T08:25:39Z |

---

tags:

- generated_from_trainer

metrics:

- f1

model-index:

- name: bert-base-chinese-finetuned-ner-split_food

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-chinese-finetuned-ner-split_food

This model is a fine-tuned version of [bert-base-chinese](https://huggingface.co/bert-base-chinese) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0077

- F1: 1.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 30

- eval_batch_size: 30

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 3.6798 | 1.0 | 1 | 1.6743 | 0.0 |

| 1.8172 | 2.0 | 2 | 0.6580 | 0.0 |

| 0.746 | 3.0 | 3 | 0.4864 | 0.0 |

| 0.4899 | 4.0 | 4 | 0.3927 | 0.0 |

| 0.401 | 5.0 | 5 | 0.2753 | 0.0 |

| 0.2963 | 6.0 | 6 | 0.2160 | 0.0 |

| 0.2452 | 7.0 | 7 | 0.1848 | 0.5455 |

| 0.2188 | 8.0 | 8 | 0.1471 | 0.7692 |

| 0.1775 | 9.0 | 9 | 0.1131 | 0.7692 |

| 0.1469 | 10.0 | 10 | 0.0864 | 0.8293 |

| 0.1145 | 11.0 | 11 | 0.0621 | 0.9333 |

| 0.0881 | 12.0 | 12 | 0.0432 | 1.0 |

| 0.0702 | 13.0 | 13 | 0.0329 | 1.0 |

| 0.0531 | 14.0 | 14 | 0.0268 | 1.0 |

| 0.044 | 15.0 | 15 | 0.0184 | 1.0 |

| 0.0321 | 16.0 | 16 | 0.0129 | 1.0 |

| 0.0255 | 17.0 | 17 | 0.0101 | 1.0 |

| 0.0236 | 18.0 | 18 | 0.0087 | 1.0 |

| 0.0254 | 19.0 | 19 | 0.0080 | 1.0 |

| 0.0185 | 20.0 | 20 | 0.0077 | 1.0 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.12.0+cu102

- Datasets 1.18.4

- Tokenizers 0.12.1

|

OpenMatch/cocodr-base-msmarco-warmup

|

OpenMatch

| 2022-11-20T08:26:41Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"fill-mask",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2022-11-20T08:20:01Z |

---

license: mit

---


This model was pretrained on the BEIR corpus and then fine-tuned on MS MARCO with BM25 warmup only, following the approach described in the paper **COCO-DR: Combating Distribution Shifts in Zero-Shot Dense Retrieval with Contrastive and Distributionally Robust Learning**. The associated GitHub repository is available at https://github.com/OpenMatch/COCO-DR.

The model uses BERT-base as its backbone, with 110M parameters.

|

zhiguoxu/bert-base-chinese-finetuned-ner-food

|

zhiguoxu

| 2022-11-20T08:20:01Z | 122 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"token-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-11-19T05:47:41Z |

---

tags:

- generated_from_trainer

metrics:

- f1

model-index:

- name: bert-base-chinese-finetuned-ner-food

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-chinese-finetuned-ner-food

This model is a fine-tuned version of [bert-base-chinese](https://huggingface.co/bert-base-chinese) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0039

- F1: 1.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 3.0829 | 1.0 | 3 | 1.6749 | 0.0 |

| 1.5535 | 2.0 | 6 | 1.0327 | 0.6354 |

| 1.0573 | 3.0 | 9 | 0.6295 | 0.7097 |

| 0.5854 | 4.0 | 12 | 0.3763 | 0.8271 |

| 0.4292 | 5.0 | 15 | 0.2165 | 0.9059 |

| 0.2235 | 6.0 | 18 | 0.1121 | 0.9836 |

| 0.1535 | 7.0 | 21 | 0.0597 | 0.9975 |

| 0.0846 | 8.0 | 24 | 0.0337 | 0.9975 |

| 0.0613 | 9.0 | 27 | 0.0214 | 1.0 |

| 0.0365 | 10.0 | 30 | 0.0144 | 1.0 |

| 0.0302 | 11.0 | 33 | 0.0103 | 1.0 |

| 0.0182 | 12.0 | 36 | 0.0078 | 1.0 |

| 0.0175 | 13.0 | 39 | 0.0064 | 1.0 |

| 0.0115 | 14.0 | 42 | 0.0055 | 1.0 |

| 0.0124 | 15.0 | 45 | 0.0049 | 1.0 |

| 0.0117 | 16.0 | 48 | 0.0045 | 1.0 |

| 0.0111 | 17.0 | 51 | 0.0042 | 1.0 |

| 0.0102 | 18.0 | 54 | 0.0041 | 1.0 |

| 0.0096 | 19.0 | 57 | 0.0040 | 1.0 |

| 0.0095 | 20.0 | 60 | 0.0039 | 1.0 |
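The table shows 3 optimizer steps per epoch at a batch size of 64, which bounds the size of the training set. A rough sketch of that arithmetic, assuming a single device, no gradient accumulation, and that the last partial batch is kept (none of which the card confirms; the helper name is hypothetical):

```python
def dataset_size_bounds(steps_per_epoch, batch_size):
    """Smallest and largest example counts that yield `steps_per_epoch` batches."""
    smallest = (steps_per_epoch - 1) * batch_size + 1
    largest = steps_per_epoch * batch_size
    return smallest, largest


# 3 steps per epoch with train_batch_size=64, as in the table above.
print(dataset_size_bounds(3, 64))
```

Under these assumptions the training set holds between 129 and 192 examples, which is worth keeping in mind when reading the reported F1 of 1.0.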

### Framework versions

- Transformers 4.18.0

- Pytorch 1.12.0+cu102

- Datasets 1.18.4

- Tokenizers 0.12.1

|

huggingtweets/iwriteok

|

huggingtweets

| 2022-11-20T06:14:50Z | 109 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"huggingtweets",

"en",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-03-02T23:29:05Z |

---

language: en

thumbnail: http://www.huggingtweets.com/iwriteok/1668924855688/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/598663964340301824/im3Wzn-o_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Robert Evans (The Only Robert Evans)</div>

<div style="text-align: center; font-size: 14px;">@iwriteok</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Robert Evans (The Only Robert Evans).

| Data | Robert Evans (The Only Robert Evans) |

| --- | --- |

| Tweets downloaded | 3218 |

| Retweets | 1269 |

| Short tweets | 142 |

| Tweets kept | 1807 |
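The counts in the table are consistent with retweets and short tweets being dropped before training, a reading the card itself does not spell out. A quick check of the arithmetic:

```python
# Counts copied from the table above.
downloaded = 3218
retweets = 1269
short_tweets = 142

# Assumption: "Tweets kept" is what remains after removing both groups.
kept = downloaded - retweets - short_tweets
print(kept)
```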

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/3hjcp2ib/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @iwriteok's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/wq4n95ia) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/wq4n95ia/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation', model='huggingtweets/iwriteok')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[Follow @borisdayma on Twitter](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[huggingtweets on GitHub](https://github.com/borisdayma/huggingtweets)

|

amitjohn007/mpnet-finetuned

|

amitjohn007

| 2022-11-20T05:51:01Z | 61 | 0 |

transformers

|

[

"transformers",

"tf",

"mpnet",

"question-answering",

"generated_from_keras_callback",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-11-20T04:59:44Z |

---

tags:

- generated_from_keras_callback

model-index:

- name: amitjohn007/mpnet-finetuned

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# amitjohn007/mpnet-finetuned

This model is a fine-tuned version of [shaina/covid_qa_mpnet](https://huggingface.co/shaina/covid_qa_mpnet) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.5882

- Epoch: 2

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 16602, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: mixed_float16

### Training results

| Train Loss | Epoch |

|:----------:|:-----:|

| 1.0499 | 0 |

| 0.7289 | 1 |

| 0.5882 | 2 |

### Framework versions

- Transformers 4.24.0

- TensorFlow 2.9.2

- Datasets 2.7.0

- Tokenizers 0.13.2

|

andreaschandra/unifiedqa-v2-t5-base-1363200-finetuned-causalqa-squad

|

andreaschandra

| 2022-11-20T05:42:57Z | 115 | 0 |

transformers

|

[

"transformers",

"pytorch",

"t5",

"text2text-generation",

"generated_from_trainer",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2022-11-16T13:33:49Z |

---

tags:

- generated_from_trainer

model-index:

- name: unifiedqa-v2-t5-base-1363200-finetuned-causalqa-squad

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# unifiedqa-v2-t5-base-1363200-finetuned-causalqa-squad

This model is a fine-tuned version of [allenai/unifiedqa-v2-t5-base-1363200](https://huggingface.co/allenai/unifiedqa-v2-t5-base-1363200) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.2574

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 0.7378 | 0.05 | 73 | 1.1837 |

| 0.6984 | 0.1 | 146 | 0.8918 |

| 0.4511 | 0.15 | 219 | 0.8342 |

| 0.4696 | 0.2 | 292 | 0.7642 |

| 0.295 | 0.25 | 365 | 0.7996 |

| 0.266 | 0.3 | 438 | 0.7773 |

| 0.2372 | 0.35 | 511 | 0.8592 |

| 0.2881 | 0.39 | 584 | 0.8440 |

| 0.2578 | 0.44 | 657 | 0.8306 |

| 0.2733 | 0.49 | 730 | 0.8228 |

| 0.2073 | 0.54 | 803 | 0.8419 |

| 0.2683 | 0.59 | 876 | 0.8241 |

| 0.2693 | 0.64 | 949 | 0.8573 |

| 0.355 | 0.69 | 1022 | 0.8204 |

| 0.2246 | 0.74 | 1095 | 0.8530 |

| 0.2468 | 0.79 | 1168 | 0.8410 |

| 0.3102 | 0.84 | 1241 | 0.8035 |

| 0.2115 | 0.89 | 1314 | 0.8262 |

| 0.1855 | 0.94 | 1387 | 0.8560 |

| 0.1772 | 0.99 | 1460 | 0.8747 |

| 0.1509 | 1.04 | 1533 | 0.9132 |

| 0.1871 | 1.09 | 1606 | 0.8920 |

| 0.1624 | 1.14 | 1679 | 0.9085 |

| 0.1404 | 1.18 | 1752 | 0.9460 |

| 0.1639 | 1.23 | 1825 | 0.9812 |

| 0.0983 | 1.28 | 1898 | 0.9790 |

| 0.1395 | 1.33 | 1971 | 0.9843 |

| 0.1439 | 1.38 | 2044 | 0.9877 |

| 0.1397 | 1.43 | 2117 | 1.0338 |

| 0.1095 | 1.48 | 2190 | 1.0589 |

| 0.1228 | 1.53 | 2263 | 1.0498 |

| 0.1246 | 1.58 | 2336 | 1.0923 |

| 0.1438 | 1.63 | 2409 | 1.0995 |

| 0.1305 | 1.68 | 2482 | 1.0867 |

| 0.1077 | 1.73 | 2555 | 1.1013 |

| 0.2104 | 1.78 | 2628 | 1.0765 |

| 0.1633 | 1.83 | 2701 | 1.0796 |

| 0.1658 | 1.88 | 2774 | 1.0314 |

| 0.1358 | 1.92 | 2847 | 0.9823 |

| 0.1571 | 1.97 | 2920 | 0.9826 |

| 0.1127 | 2.02 | 2993 | 1.0324 |

| 0.0927 | 2.07 | 3066 | 1.0679 |

| 0.0549 | 2.12 | 3139 | 1.1069 |

| 0.0683 | 2.17 | 3212 | 1.1624 |

| 0.0677 | 2.22 | 3285 | 1.1174 |

| 0.0615 | 2.27 | 3358 | 1.1431 |

| 0.0881 | 2.32 | 3431 | 1.1721 |

| 0.0807 | 2.37 | 3504 | 1.1885 |

| 0.0955 | 2.42 | 3577 | 1.1991 |

| 0.0779 | 2.47 | 3650 | 1.1999 |

| 0.11 | 2.52 | 3723 | 1.1774 |

| 0.0852 | 2.57 | 3796 | 1.2095 |

| 0.0616 | 2.62 | 3869 | 1.1824 |

| 0.072 | 2.67 | 3942 | 1.2397 |

| 0.1055 | 2.71 | 4015 | 1.2181 |

| 0.0806 | 2.76 | 4088 | 1.2159 |

| 0.0684 | 2.81 | 4161 | 1.1864 |

| 0.0869 | 2.86 | 4234 | 1.1816 |

| 0.1023 | 2.91 | 4307 | 1.1717 |

| 0.0583 | 2.96 | 4380 | 1.1477 |

| 0.0684 | 3.01 | 4453 | 1.1662 |

| 0.0319 | 3.06 | 4526 | 1.2174 |

| 0.0609 | 3.11 | 4599 | 1.1947 |

| 0.0435 | 3.16 | 4672 | 1.1821 |

| 0.0417 | 3.21 | 4745 | 1.1964 |

| 0.0502 | 3.26 | 4818 | 1.2140 |

| 0.0844 | 3.31 | 4891 | 1.2028 |

| 0.0692 | 3.36 | 4964 | 1.2215 |

| 0.0366 | 3.41 | 5037 | 1.2136 |

| 0.0615 | 3.46 | 5110 | 1.2224 |

| 0.0656 | 3.5 | 5183 | 1.2468 |

| 0.0469 | 3.55 | 5256 | 1.2554 |

| 0.0475 | 3.6 | 5329 | 1.2804 |

| 0.0998 | 3.65 | 5402 | 1.2035 |

| 0.0505 | 3.7 | 5475 | 1.2095 |

| 0.0459 | 3.75 | 5548 | 1.2064 |

| 0.0256 | 3.8 | 5621 | 1.2164 |

| 0.0831 | 3.85 | 5694 | 1.2154 |

| 0.0397 | 3.9 | 5767 | 1.2126 |

| 0.0449 | 3.95 | 5840 | 1.2174 |

| 0.0322 | 4.0 | 5913 | 1.2288 |

| 0.059 | 4.05 | 5986 | 1.2274 |

| 0.0382 | 4.1 | 6059 | 1.2228 |

| 0.0202 | 4.15 | 6132 | 1.2177 |

| 0.0328 | 4.2 | 6205 | 1.2305 |

| 0.0407 | 4.24 | 6278 | 1.2342 |

| 0.0356 | 4.29 | 6351 | 1.2448 |

| 0.0414 | 4.34 | 6424 | 1.2537 |

| 0.0448 | 4.39 | 6497 | 1.2540 |

| 0.0545 | 4.44 | 6570 | 1.2552 |

| 0.0492 | 4.49 | 6643 | 1.2570 |

| 0.0293 | 4.54 | 6716 | 1.2594 |

| 0.0498 | 4.59 | 6789 | 1.2562 |

| 0.0349 | 4.64 | 6862 | 1.2567 |

| 0.0497 | 4.69 | 6935 | 1.2550 |

| 0.0194 | 4.74 | 7008 | 1.2605 |

| 0.0255 | 4.79 | 7081 | 1.2590 |

| 0.0212 | 4.84 | 7154 | 1.2571 |

| 0.0231 | 4.89 | 7227 | 1.2583 |

| 0.0399 | 4.94 | 7300 | 1.2580 |

| 0.0719 | 4.99 | 7373 | 1.2574 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.7.0

- Tokenizers 0.13.2

|

yip-i/wav2vec2-demo-F03

|

yip-i

| 2022-11-20T04:56:47Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"generated_from_trainer",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-11-15T03:43:04Z |

---

tags:

- generated_from_trainer

model-index:

- name: wav2vec2-demo-F03

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-demo-F03

This model is a fine-tuned version of [yip-i/uaspeech-pretrained](https://huggingface.co/yip-i/uaspeech-pretrained) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 2.8742

- Wer: 1.2914

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- num_epochs: 30
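A `linear` scheduler with 1000 warmup steps typically ramps the learning rate up from 0 and then decays it linearly back to 0. The sketch below illustrates that shape in plain Python. The function name is hypothetical, and `total_steps=15450` is only an estimate (roughly 515 steps per epoch over 30 epochs) rather than a value stated in the card.

```python
def linear_schedule_lr(step, base_lr=1e-4, warmup_steps=1000, total_steps=15450):
    """Linear warmup to base_lr, then linear decay to zero (sketch)."""
    if step < warmup_steps:
        # Warmup phase: scale the rate up in proportion to the step count.
        return base_lr * step / warmup_steps
    # Decay phase: scale down in proportion to the steps that remain.
    remaining = (total_steps - step) / (total_steps - warmup_steps)
    return base_lr * max(0.0, remaining)


for s in (0, 500, 1000, 8225, 15450):
    print(s, linear_schedule_lr(s))
```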

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:-----:|:---------------:|:------:|

| 6.4808 | 0.97 | 500 | 3.0628 | 1.1656 |

| 2.9947 | 1.94 | 1000 | 3.0334 | 1.1523 |

| 2.934 | 2.91 | 1500 | 3.0520 | 1.1648 |

| 2.9317 | 3.88 | 2000 | 3.3808 | 1.0 |

| 3.0008 | 4.85 | 2500 | 3.0342 | 1.2559 |

| 3.112 | 5.83 | 3000 | 3.1228 | 1.1258 |

| 2.8972 | 6.8 | 3500 | 2.9885 | 1.2914 |

| 2.8911 | 7.77 | 4000 | 3.2586 | 1.2754 |

| 2.9884 | 8.74 | 4500 | 3.0487 | 1.2090 |

| 2.873 | 9.71 | 5000 | 2.9382 | 1.2914 |

| 3.3551 | 10.68 | 5500 | 3.2607 | 1.2844 |

| 3.6426 | 11.65 | 6000 | 3.0053 | 1.0242 |

| 2.9184 | 12.62 | 6500 | 2.9219 | 1.2828 |

| 2.8384 | 13.59 | 7000 | 2.9530 | 1.2816 |

| 2.8855 | 14.56 | 7500 | 2.9978 | 1.0121 |

| 2.8479 | 15.53 | 8000 | 2.9722 | 1.0977 |

| 2.8241 | 16.5 | 8500 | 2.9670 | 1.3082 |

| 2.807 | 17.48 | 9000 | 2.9841 | 1.2914 |

| 2.8115 | 18.45 | 9500 | 2.9484 | 1.2977 |

| 2.8123 | 19.42 | 10000 | 2.9310 | 1.2914 |

| 3.0291 | 20.39 | 10500 | 2.9665 | 1.2902 |

| 2.8735 | 21.36 | 11000 | 2.9245 | 1.1160 |

| 2.8164 | 22.33 | 11500 | 2.9137 | 1.2914 |

| 2.8084 | 23.3 | 12000 | 2.9543 | 1.1891 |

| 2.8079 | 24.27 | 12500 | 2.9179 | 1.4516 |

| 2.7916 | 25.24 | 13000 | 2.8971 | 1.2926 |

| 2.7824 | 26.21 | 13500 | 2.8990 | 1.2914 |

| 2.7555 | 27.18 | 14000 | 2.9004 | 1.2914 |

| 2.7803 | 28.16 | 14500 | 2.8747 | 1.2910 |

| 2.753 | 29.13 | 15000 | 2.8742 | 1.2914 |
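Several WER values in the table exceed 1. That is possible because word error rate divides the number of substitutions, deletions, and insertions by the length of the reference, so a hypothesis with many extra words can score above 1. The sketch below is a plain-Python illustration with a made-up sentence pair. It is not the metric implementation used for this run and does not explain what went wrong in training.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the reference length (sketch).

    Assumes a non-empty reference.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    # prev[j] holds the edit distance between the reference prefix
    # processed so far and the first j words of the hypothesis.
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # match or substitution
        prev = curr
    return prev[-1] / len(ref)


# Hypothetical example: three inserted words against a two-word reference.
print(word_error_rate("the cat", "a the big cat sat"))
```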

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 1.18.3

- Tokenizers 0.13.2

|

Alred/t5-small-finetuned-summarization-cnn-ver3

|

Alred

| 2022-11-20T03:41:44Z | 108 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"t5",

"text2text-generation",

"summarization",

"generated_from_trainer",

"dataset:cnn_dailymail",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

summarization

| 2022-11-20T02:50:30Z |

---

license: apache-2.0

tags:

- summarization

- generated_from_trainer

datasets:

- cnn_dailymail

model-index:

- name: t5-small-finetuned-summarization-cnn-ver3

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-small-finetuned-summarization-cnn-ver3

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on the cnn_dailymail dataset.

It achieves the following results on the evaluation set:

- Loss: 2.1072

- Bertscore-mean-precision: 0.8861

- Bertscore-mean-recall: 0.8592

- Bertscore-mean-f1: 0.8723

- Bertscore-median-precision: 0.8851

- Bertscore-median-recall: 0.8582

- Bertscore-median-f1: 0.8719

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0003

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Bertscore-mean-precision | Bertscore-mean-recall | Bertscore-mean-f1 | Bertscore-median-precision | Bertscore-median-recall | Bertscore-median-f1 |

|:-------------:|:-----:|:----:|:---------------:|:------------------------:|:---------------------:|:-----------------:|:--------------------------:|:-----------------------:|:-------------------:|

| 2.0168 | 1.0 | 718 | 2.0528 | 0.8870 | 0.8591 | 0.8727 | 0.8864 | 0.8578 | 0.8724 |

| 1.8387 | 2.0 | 1436 | 2.0610 | 0.8863 | 0.8591 | 0.8723 | 0.8848 | 0.8575 | 0.8712 |

| 1.7302 | 3.0 | 2154 | 2.0659 | 0.8856 | 0.8588 | 0.8719 | 0.8847 | 0.8569 | 0.8717 |

| 1.6459 | 4.0 | 2872 | 2.0931 | 0.8860 | 0.8592 | 0.8722 | 0.8850 | 0.8570 | 0.8718 |

| 1.5907 | 5.0 | 3590 | 2.1072 | 0.8861 | 0.8592 | 0.8723 | 0.8851 | 0.8582 | 0.8719 |
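In the table above, training loss falls every epoch while validation loss rises, so the checkpoint with the lowest validation loss is not the final one. A small sketch that picks it out, using only the numbers from the table (the variable names are illustrative):

```python
# (epoch, training loss, validation loss) copied from the table above.
history = [
    (1.0, 2.0168, 2.0528),
    (2.0, 1.8387, 2.0610),
    (3.0, 1.7302, 2.0659),
    (4.0, 1.6459, 2.0931),
    (5.0, 1.5907, 2.1072),
]

# Select the row with the smallest validation loss.
best_epoch, _, best_val_loss = min(history, key=lambda row: row[2])
print(best_epoch, best_val_loss)
```

The card reports the epoch-5 numbers as its evaluation results; whether an earlier checkpoint would be preferable depends on which metric matters, since the BERTScore columns barely change across epochs.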

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.7.0

- Tokenizers 0.13.2

|

Jellywibble/dalio-convo-finetune-restruct

|

Jellywibble

| 2022-11-20T02:39:45Z | 6 | 0 |

transformers

|

[

"transformers",

"pytorch",

"opt",

"text-generation",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-11-19T19:41:56Z |

---

tags:

- text-generation

library_name: transformers

---

## Model description

Based on Jellywibble/dalio-pretrained-book-bs4-seed1, which was pre-trained on the Dalio Principles book.

Fine-tuned on the handwritten conversations in Jellywibble/dalio_handwritten-conversations.

## Dataset Used

Jellywibble/dalio_handwritten-conversations

## Training Parameters

- Deepspeed on 4xA40 GPUs

- Ensuring EOS token `<s>` appears only at the beginning of each 'This is a conversation where Ray ...'

- Gradient Accumulation steps = 1 (Effective batch size of 4)

- 2e-6 Learning Rate, AdamW optimizer

- Block size of 1000

- Trained for 1 Epoch (additional epochs yielded worse Hellaswag result)

## Metrics

- Hellaswag Perplexity: 29.83

- Eval accuracy: 58.1%

- Eval loss: 1.883

- Checkpoint 9 uploaded

- Wandb run: https://wandb.ai/jellywibble/huggingface/runs/157eehn9?workspace=user-jellywibble

|

Jellywibble/dalio-principles-pretrain-v2

|

Jellywibble

| 2022-11-20T01:55:33Z | 6 | 0 |

transformers

|

[

"transformers",

"pytorch",

"opt",

"text-generation",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-11-19T19:42:56Z |

---

tags:

- text-generation

library_name: transformers

---

## Model description

Based on the facebook/opt-30b model, fine-tuned on chunked Dalio responses.

## Dataset Used

Jellywibble/dalio-pretrain-book-dataset-v2

## Training Parameters

- Deepspeed on 4xA40 GPUs

- Ensuring EOS token `<s>` appears only at the beginning of each chunk

- Gradient Accumulation steps = 1 (Effective batch size of 4)

- 3e-6 Learning Rate, AdamW optimizer

- Block size of 800

- Trained for 1 Epoch (additional epochs yielded worse Hellaswag result)

## Metrics

- Hellaswag Perplexity: 30.2

- Eval accuracy: 49.8%

- Eval loss: 2.283

- Checkpoint 16 uploaded

- wandb run: https://wandb.ai/jellywibble/huggingface/runs/2vtr39rk?workspace=user-jellywibble

|

Deepthoughtworks/gpt-neo-2.7B__low-cpu

|

Deepthoughtworks

| 2022-11-19T23:20:13Z | 44 | 1 |

transformers

|

[

"transformers",

"pytorch",

"jax",

"rust",

"gpt_neo",

"text-generation",

"text generation",

"causal-lm",

"en",

"arxiv:2101.00027",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2022-11-11T11:35:56Z |

---

language:

- en

tags:

- text generation

- pytorch

- causal-lm

license: apache-2.0

---

# GPT-Neo 2.7B

## Model Description

GPT-Neo 2.7B is a transformer model designed using EleutherAI's replication of the GPT-3 architecture. GPT-Neo refers to the class of models, while 2.7B represents the number of parameters of this particular pre-trained model.

## Training data

GPT-Neo 2.7B was trained on the Pile, a large scale curated dataset created by EleutherAI for the purpose of training this model.

## Training procedure

This model was trained for 420 billion tokens over 400,000 steps. It was trained as a masked autoregressive language model, using cross-entropy loss.

## Intended Use and Limitations

This way, the model learns an inner representation of the English language that can then be used to extract features useful for downstream tasks. The model is best, however, at what it was pretrained for: generating text from a prompt.

### How to use

You can use this model directly with a pipeline for text generation. This example generates a different sequence each time it's run:

```py

>>> from transformers import pipeline

>>> generator = pipeline('text-generation', model='EleutherAI/gpt-neo-2.7B')

>>> generator("EleutherAI has", do_sample=True, min_length=50)

[{'generated_text': 'EleutherAI has made a commitment to create new software packages for each of its major clients and has'}]

```

### Limitations and Biases

GPT-Neo was trained as an autoregressive language model. This means that its core functionality is taking a string of text and predicting the next token. While language models are widely used for tasks other than this, there are a lot of unknowns with this work.
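
The next-token loop described above can be illustrated with a toy example. This is only a sketch: the lookup table is hypothetical data standing in for a trained model, and it conditions on the last token alone, whereas GPT-Neo conditions on the whole preceding context.

```python
# Toy next-token table standing in for a trained model (hypothetical data).
NEXT_TOKEN = {
    "EleutherAI": "has",
    "has": "released",
    "released": "a",
    "a": "model",
    "model": "<eos>",
}


def generate(prompt: list[str], max_new_tokens: int = 10) -> list[str]:
    """Greedy autoregressive decoding: repeatedly append the predicted next token."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        next_token = NEXT_TOKEN.get(tokens[-1], "<eos>")
        if next_token == "<eos>":
            break
        tokens.append(next_token)
    return tokens


print(" ".join(generate(["EleutherAI"])))
```

Each generated token is fed back in as input for the next prediction, which is what makes the process autoregressive.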

GPT-Neo was trained on the Pile, a dataset known to contain profanity, lewd, and otherwise abrasive language. Depending on your use case, GPT-Neo may produce socially unacceptable text. See Sections 5 and 6 of the Pile paper for a more detailed analysis of the biases in the Pile.

As with all language models, it is hard to predict in advance how GPT-Neo will respond to particular prompts and offensive content may occur without warning. We recommend having a human curate or filter the outputs before releasing them, both to censor undesirable content and to improve the quality of the results.
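
One simple way to support such a review step is to flag generations automatically before a human looks at them. The sketch below is an illustration only: the function name and the blocked-term list are hypothetical, and a word list is a coarse filter that does not replace human curation.

```python
def needs_human_review(text: str, blocked_terms: set[str]) -> bool:
    """Flag a generation if any blocked term (lowercase) appears as a whole word."""
    words = {word.strip(".,!?;:\"'").lower() for word in text.split()}
    return not words.isdisjoint(blocked_terms)


BLOCKED_TERMS = {"badword"}  # hypothetical placeholder list

generations = ["EleutherAI has released a model.", "This contains a badword!"]
for text in generations:
    status = "REVIEW" if needs_human_review(text, BLOCKED_TERMS) else "ok"
    print(f"[{status}] {text}")
```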

## Eval results

All evaluations were done using our [evaluation harness](https://github.com/EleutherAI/lm-evaluation-harness). Some results for GPT-2 and GPT-3 are inconsistent with the values reported in the respective papers. We are currently looking into why, and would greatly appreciate feedback and further testing of our eval harness. If you would like to contribute evaluations you have done, please reach out on our [Discord](https://discord.gg/vtRgjbM).

### Linguistic Reasoning

| Model and Size | Pile BPB | Pile PPL | Wikitext PPL | Lambada PPL | Lambada Acc | Winogrande | Hellaswag |

| ---------------- | ---------- | ---------- | ------------- | ----------- | ----------- | ---------- | ----------- |

| GPT-Neo 1.3B | 0.7527 | 6.159 | 13.10 | 7.498 | 57.23% | 55.01% | 38.66% |

| GPT-2 1.5B | 1.0468 | ----- | 17.48 | 10.634 | 51.21% | 59.40% | 40.03% |

| **GPT-Neo 2.7B** | **0.7165** | **5.646** | **11.39** | **5.626** | **62.22%** | **56.50%** | **42.73%** |

| GPT-3 Ada | 0.9631 | ----- | ----- | 9.954 | 51.60% | 52.90% | 35.93% |

### Physical and Scientific Reasoning

| Model and Size | MathQA | PubMedQA | Piqa |

| ---------------- | ---------- | ---------- | ----------- |

| GPT-Neo 1.3B | 24.05% | 54.40% | 71.11% |

| GPT-2 1.5B | 23.64% | 58.33% | 70.78% |

| **GPT-Neo 2.7B** | **24.72%** | **57.54%** | **72.14%** |

| GPT-3 Ada | 24.29% | 52.80% | 68.88% |

### Down-Stream Applications

TBD

### BibTeX entry and citation info

To cite this model, use

```bibtex

@software{gpt-neo,

author = {Black, Sid and

Gao, Leo and

Wang, Phil and

Leahy, Connor and

Biderman, Stella},

title = {{GPT-Neo: Large Scale Autoregressive Language

Modeling with Mesh-Tensorflow}},

month = mar,

year = 2021,

note = {{If you use this software, please cite it using

these metadata.}},

publisher = {Zenodo},

version = {1.0},

doi = {10.5281/zenodo.5297715},

url = {https://doi.org/10.5281/zenodo.5297715}

}

@article{gao2020pile,

title={The Pile: An 800GB Dataset of Diverse Text for Language Modeling},

author={Gao, Leo and Biderman, Stella and Black, Sid and Golding, Laurence and Hoppe, Travis and Foster, Charles and Phang, Jason and He, Horace and Thite, Anish and Nabeshima, Noa and others},

journal={arXiv preprint arXiv:2101.00027},

year={2020}

}

```

|

cahya/t5-base-indonesian-summarization-cased

|

cahya

| 2022-11-19T20:41:24Z | 497 | 5 |

transformers

|

[

"transformers",

"pytorch",

"tf",

"jax",

"t5",

"text2text-generation",

"pipeline:summarization",

"summarization",

"id",

"dataset:id_liputan6",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

summarization

| 2022-03-02T23:29:05Z |

---

language: id

tags:

- pipeline:summarization

- summarization

- t5

datasets:

- id_liputan6

---

# Indonesian T5 Summarization Base Model

Finetuned T5 base summarization model for Indonesian.

## Finetuning Corpus

`t5-base-indonesian-summarization-cased` model is based on `t5-base-bahasa-summarization-cased` by [huseinzol05](https://huggingface.co/huseinzol05), finetuned using [id_liputan6](https://huggingface.co/datasets/id_liputan6) dataset.

## Load Finetuned Model

```python

from transformers import T5Tokenizer, T5ForConditionalGeneration

tokenizer = T5Tokenizer.from_pretrained("cahya/t5-base-indonesian-summarization-cased")

model = T5ForConditionalGeneration.from_pretrained("cahya/t5-base-indonesian-summarization-cased")

```

## Code Sample

```python

from transformers import T5Tokenizer, T5ForConditionalGeneration

tokenizer = T5Tokenizer.from_pretrained("cahya/t5-base-indonesian-summarization-cased")

model = T5ForConditionalGeneration.from_pretrained("cahya/t5-base-indonesian-summarization-cased")

# Replace the empty string with the Indonesian article text to summarize

ARTICLE_TO_SUMMARIZE = ""

# generate summary

input_ids = tokenizer.encode(ARTICLE_TO_SUMMARIZE, return_tensors='pt')

summary_ids = model.generate(input_ids,

min_length=20,

max_length=80,

num_beams=10,

repetition_penalty=2.5,

length_penalty=1.0,

early_stopping=True,

no_repeat_ngram_size=2,

use_cache=True,

do_sample = True,

temperature = 0.8,

top_k = 50,

top_p = 0.95)

summary_text = tokenizer.decode(summary_ids[0], skip_special_tokens=True)

print(summary_text)

```

Output:

```

```

|

ocm/xlm-roberta-base-finetuned-panx-de

|

ocm

| 2022-11-19T20:26:55Z | 113 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"xlm-roberta",

"token-classification",

"generated_from_trainer",

"dataset:xtreme",

"license:mit",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2022-11-19T20:02:31Z |

---

license: mit

tags:

- generated_from_trainer

datasets:

- xtreme

metrics:

- f1

model-index:

- name: xlm-roberta-base-finetuned-panx-de

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: xtreme

type: xtreme

args: PAN-X.de

metrics:

- name: F1

type: f1

value: 0.8648740833380706

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-de

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the xtreme dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1365

- F1: 0.8649

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3
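
With `lr_scheduler_type: linear`, the learning rate decays from its initial value to zero over the course of training. A minimal sketch of that schedule, assuming no warmup (the card lists none) and the 1575 total optimizer steps reported in this card's training results; the function name is illustrative:

```python
def linear_lr(step: int, base_lr: float = 5e-5, total_steps: int = 1575, warmup_steps: int = 0) -> float:
    """Linear warmup (if any) followed by linear decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Learning rate at the start and at the end of each of the three epochs.
for step in (0, 525, 1050, 1575):
    print(step, linear_lr(step))
```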

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.2553 | 1.0 | 525 | 0.1575 | 0.8279 |

| 0.1284 | 2.0 | 1050 | 0.1386 | 0.8463 |

| 0.0813 | 3.0 | 1575 | 0.1365 | 0.8649 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.12.1+cu113

- Datasets 1.16.1

- Tokenizers 0.10.3

|

fernanda-dionello/good-reads-string

|

fernanda-dionello

| 2022-11-19T20:16:34Z | 99 | 0 |

transformers

|

[

"transformers",

"pytorch",

"autotrain",

"text-classification",

"en",

"dataset:fernanda-dionello/autotrain-data-autotrain_goodreads_string",

"co2_eq_emissions",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-11-19T20:11:24Z |

---

tags:

- autotrain

- text-classification

language:

- en

widget:

- text: "I love AutoTrain 🤗"

datasets:

- fernanda-dionello/autotrain-data-autotrain_goodreads_string

co2_eq_emissions:

emissions: 0.04700680417595474

---

# Model Trained Using AutoTrain

- Problem type: Multi-class Classification

- Model ID: 2164069744

- CO2 Emissions (in grams): 0.0470

## Validation Metrics

- Loss: 0.806

- Accuracy: 0.686

- Macro F1: 0.534

- Micro F1: 0.686

- Weighted F1: 0.678

- Macro Precision: 0.524

- Micro Precision: 0.686

- Weighted Precision: 0.673

- Macro Recall: 0.551

- Micro Recall: 0.686

- Weighted Recall: 0.686
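
The macro, micro, and weighted variants above differ only in how per-class scores are averaged. The sketch below computes them from a confusion matrix; the matrix is made-up illustrative data, not this model's predictions. It also shows why micro F1 equals accuracy for single-label multi-class classification, as in the metrics above.

```python
def f1_scores(confusion: list[list[int]]) -> dict[str, float]:
    """Macro, micro, and weighted F1 from a confusion matrix (rows = true class)."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    per_class_f1, supports = [], []
    tp_sum = 0
    for k in range(n):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp
        fp = sum(confusion[r][k] for r in range(n)) - tp
        denom = 2 * tp + fp + fn
        per_class_f1.append(2 * tp / denom if denom else 0.0)
        supports.append(tp + fn)
        tp_sum += tp
    return {
        "macro": sum(per_class_f1) / n,
        # Every error is one FP and one FN, so micro F1 reduces to TP / total.
        "micro": tp_sum / total,
        "weighted": sum(f * s for f, s in zip(per_class_f1, supports)) / total,
        "accuracy": tp_sum / total,
    }


# Hypothetical 3-class confusion matrix with imbalanced classes.
scores = f1_scores([[50, 5, 5], [10, 20, 10], [2, 3, 5]])
print({name: round(value, 3) for name, value in scores.items()})
```

Macro F1 weights every class equally, so weak performance on a rare class pulls it below the micro and weighted scores.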

## Usage

You can use cURL to access this model:

```

$ curl -X POST -H "Authorization: Bearer YOUR_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/models/fernanda-dionello/autotrain-autotrain_goodreads_string-2164069744

```

Or Python API:

```

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("fernanda-dionello/autotrain-autotrain_goodreads_string-2164069744", use_auth_token=True)

tokenizer = AutoTokenizer.from_pretrained("fernanda-dionello/autotrain-autotrain_goodreads_string-2164069744", use_auth_token=True)

inputs = tokenizer("I love AutoTrain", return_tensors="pt")

outputs = model(**inputs)

```
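
The snippet above stops at the raw model outputs. A sketch of the usual post-processing follows, shown in plain Python on hypothetical logit values; with the real model the logits would come from `outputs.logits` and the label names from `model.config.id2label`.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(logits)
    exps = [math.exp(x - highest) for x in logits]  # subtract max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


logits = [0.2, 1.5, -0.7]  # hypothetical values for a 3-class model
probs = softmax(logits)
predicted_class = max(range(len(probs)), key=probs.__getitem__)
print(predicted_class, [round(p, 3) for p in probs])
```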

|

chieunq/XLM-R-base-finetuned-uit-vquad-1

|

chieunq

| 2022-11-19T20:02:14Z | 108 | 0 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"question-answering",

"vi",

"dataset:uit-vquad",

"arxiv:2009.14725",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-11-19T19:00:55Z |

---

language: vi

tags:

- vi

- xlm-roberta

widget:

- text: 3 thành viên trong nhóm gồm những ai ?

context: "Nhóm của chúng tôi là sinh viên năm 4 trường ĐH Công Nghệ - ĐHQG Hà Nội. Nhóm gồm 3 thành viên: Nguyễn Quang Chiều, Nguyễn Quang Huy và Nguyễn Trần Anh Đức . Đây là pha Reader trong dự án cuồi kì môn Các vấn đề hiện đại trong CNTT của nhóm ."

datasets:

- uit-vquad

metrics:

- EM (exact match) : 60.63

- F1 : 79.63

---

We fine-tuned the XLM-RoBERTa-base model on the UIT-vquad dataset (https://arxiv.org/pdf/2009.14725.pdf).

### Performance

- EM (exact match) : 60.63

- F1 : 79.63

### How to run

```

from transformers import pipeline

# Replace this with your own checkpoint

model_checkpoint = "chieunq/XLM-R-base-finetuned-uit-vquad-1"

question_answerer = pipeline("question-answering", model=model_checkpoint)

context = """

Nhóm của chúng tôi là sinh viên năm 4 trường ĐH Công Nghệ - ĐHQG Hà Nội. Nhóm gồm 3 thành viên : Nguyễn Quang Chiều, Nguyễn Quang Huy và Nguyễn Trần Anh Đức . Đây là pha Reader trong dự án cuồi kì môn Các vấn đề hiện đại trong CNTT của nhóm .

"""

question = "3 thành viên trong nhóm gồm những ai ?"

question_answerer(question=question, context=context)

```

### Output

```

{'score': 0.9928902387619019,

'start': 98,

'end': 158,

'answer': 'Nguyễn Quang Chiều, Nguyễn Quang Huy và Nguyễn Trần Anh Đức.'}

```
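
The `start` and `end` fields are character offsets into the context, with `end` exclusive, so the answer is the slice `context[start:end]`. A toy illustration with a hypothetical English context, not the model's real output:

```python
context = "The team has three members: Ann, Bob and Eve."
answer = "Ann, Bob and Eve"

# Build a result dict shaped like the pipeline output (score omitted).
start = context.index(answer)
result = {"start": start, "end": start + len(answer), "answer": answer}

# `end` is exclusive, so a plain slice recovers the answer text.
recovered = context[result["start"]:result["end"]]
print(result, recovered == result["answer"])
```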

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.7.0

- Tokenizers 0.13.2

|

Froddan/furiostyle

|

Froddan

| 2022-11-19T19:28:35Z | 0 | 3 | null |

[

"stable-diffusion",

"text-to-image",

"en",

"license:cc0-1.0",

"region:us"

] |

text-to-image

| 2022-11-19T19:10:50Z |

---

license: cc0-1.0

inference: false

language:

- en

tags:

- stable-diffusion

- text-to-image

---

# Stable Diffusion fine tuned on art by [Furio Tedeschi](https://www.furiotedeschi.com/)

### Usage

Use by adding the keyword "furiostyle" to the prompt. The model was trained with the "demon" classname, which can also be added to the prompt.
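
As a small illustration, a prompt can be assembled from the keyword, the optional class name, and a subject. The helper name and the word order are assumptions made for this sketch; the card only says that the keyword and class name should appear in the prompt.

```python
from typing import Optional


def build_prompt(subject: str, keyword: str = "furiostyle", classname: Optional[str] = None) -> str:
    """Prefix the subject with the style keyword and, optionally, the class name."""
    parts = [keyword]
    if classname:
        parts.append(classname)
    parts.append(subject)
    return " ".join(parts)


print(build_prompt("a dog in a mushroom cave"))
print(build_prompt("guarding a gate", classname="demon"))
```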

## Samples

For this model I made two checkpoints. The "furiostyle demon x2" model is trained for twice as long as the regular checkpoint, meaning it should be more fine tuned on the style but also more rigid. The top 4 images are from the regular version, the rest are from the x2 version. I hope it gives you an idea of what kind of styles can be created with this model. I think the x2 model got better results this time around; compare the dog and the mushroom images.

<img src="https://huggingface.co/Froddan/furiostyle/resolve/main/1000_2.png" width="256px"/>

<img src="https://huggingface.co/Froddan/furiostyle/resolve/main/1000_4.png" width="256px"/>

<img src="https://huggingface.co/Froddan/furiostyle/resolve/main/dog_1000_2.png" width="256px"/>

<img src="https://huggingface.co/Froddan/furiostyle/resolve/main/mushroom_1000_2.png" width="256px"/>

<img src="https://huggingface.co/Froddan/furiostyle/resolve/main/2000_1.png" width="256px"/>

<img src="https://huggingface.co/Froddan/furiostyle/resolve/main/2000_4.png" width="256px"/>

<img src="https://huggingface.co/Froddan/furiostyle/resolve/main/mushroom_cave_4.png" width="256px"/>

<img src="https://huggingface.co/Froddan/furiostyle/resolve/main/mushroom_cave_ornate.png" width="256px"/>

<img src="https://huggingface.co/Froddan/furiostyle/resolve/main/dog_2.png" width="256px"/>

### 🧨 Diffusers

This model can be used just like any other Stable Diffusion model. For more information,

please have a look at the [Stable Diffusion](https://huggingface.co/docs/diffusers/api/pipelines/stable_diffusion).

|

Froddan/bulgarov

|

Froddan

| 2022-11-19T19:23:36Z | 0 | 1 | null |

[

"stable-diffusion",

"text-to-image",

"en",

"license:cc0-1.0",

"region:us"

] |

text-to-image

| 2022-11-19T16:11:02Z |

---

license: cc0-1.0

inference: false

language:

- en

tags:

- stable-diffusion

- text-to-image

---

# Stable Diffusion fine tuned on art by [Vitaly Bulgarov](https://www.artstation.com/vbulgarov)

### Usage

Use by adding the keyword "bulgarovstyle" to the prompt. The model was trained with the "knight" classname, which can also be added to the prompt.

## Samples

For this model I made two checkpoints. The "bulgarovstyle knight x2" model is trained for twice as long as the regular checkpoint, meaning it should be more fine tuned on the style but also more rigid. The top 3 images are from the regular version, the rest are from the x2 version (I think). I hope it gives you an idea of what kind of styles can be created with this model.

<img src="https://huggingface.co/Froddan/bulgarov/resolve/main/dog_v1_1.png" width="256px"/>

<img src="https://huggingface.co/Froddan/bulgarov/resolve/main/greg_v1.png" width="256px"/>

<img src="https://huggingface.co/Froddan/bulgarov/resolve/main/greg3.png" width="256px"/>

<img src="https://huggingface.co/Froddan/bulgarov/resolve/main/index4.png" width="256px"/>

<img src="https://huggingface.co/Froddan/bulgarov/resolve/main/index_1600_2.png" width="256px"/>

<img src="https://huggingface.co/Froddan/bulgarov/resolve/main/index_1600_4.png" width="256px"/>

<img src="https://huggingface.co/Froddan/bulgarov/resolve/main/tmp1zir5pbb.png" width="256px"/>

<img src="https://huggingface.co/Froddan/bulgarov/resolve/main/tmp6lk0vp7p.png" width="256px"/>

<img src="https://huggingface.co/Froddan/bulgarov/resolve/main/tmpgabti6yx.png" width="256px"/>

<img src="https://huggingface.co/Froddan/bulgarov/resolve/main/tmpgvytng2n.png" width="256px"/>

### 🧨 Diffusers

This model can be used just like any other Stable Diffusion model. For more information,

please have a look at the [Stable Diffusion](https://huggingface.co/docs/diffusers/api/pipelines/stable_diffusion).

|

stephenhbarlow/biobert-base-cased-v1.2-multiclass-finetuned-PET2

|

stephenhbarlow

| 2022-11-19T18:53:28Z | 119 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"text-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-11-19T16:45:29Z |

---

tags:

- generated_from_trainer

metrics:

- accuracy

- f1

model-index:

- name: biobert-base-cased-v1.2-multiclass-finetuned-PET2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# biobert-base-cased-v1.2-multiclass-finetuned-PET2

This model is a fine-tuned version of [dmis-lab/biobert-base-cased-v1.2](https://huggingface.co/dmis-lab/biobert-base-cased-v1.2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.8075

- Accuracy: 0.5673

- F1: 0.4253

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 1.0175 | 1.0 | 14 | 0.8446 | 0.5625 | 0.4149 |

| 0.8634 | 2.0 | 28 | 0.8075 | 0.5673 | 0.4253 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.13.0

- Datasets 2.7.0

- Tokenizers 0.13.2

|

kormilitzin/en_core_med7_trf

|

kormilitzin

| 2022-11-19T18:51:54Z | 375 | 12 |

spacy

|

[

"spacy",

"token-classification",

"en",

"license:mit",

"model-index",

"region:us"

] |

token-classification

| 2022-03-02T23:29:05Z |

---

tags:

- spacy

- token-classification

language:

- en

license: mit

model-index:

- name: en_core_med7_trf

results:

- task:

name: NER

type: token-classification

metrics:

- name: NER Precision

type: precision

value: 0.8822157434

- name: NER Recall

type: recall

value: 0.925382263

- name: NER F Score

type: f_score

value: 0.9032835821

---

| Feature | Description |

| --- | --- |

| **Name** | `en_core_med7_trf` |

| **Version** | `3.4.2.1` |

| **spaCy** | `>=3.4.2,<3.5.0` |

| **Default Pipeline** | `transformer`, `ner` |

| **Components** | `transformer`, `ner` |

| **Vectors** | 514157 keys, 514157 unique vectors (300 dimensions) |

| **Sources** | n/a |

| **License** | `MIT` |

| **Author** | [Andrey Kormilitzin](https://www.kormilitzin.com/) |

### Label Scheme

<details>

<summary>View label scheme (7 labels for 1 components)</summary>

| Component | Labels |

| --- | --- |

| **`ner`** | `DOSAGE`, `DRUG`, `DURATION`, `FORM`, `FREQUENCY`, `ROUTE`, `STRENGTH` |

</details>

### Accuracy

| Type | Score |

| --- | --- |

| `ENTS_F` | 90.33 |

| `ENTS_P` | 88.22 |

| `ENTS_R` | 92.54 |

| `TRANSFORMER_LOSS` | 2502627.06 |

| `NER_LOSS` | 114576.77 |
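
The F score is the harmonic mean of precision and recall. A short sketch that recomputes it from the precision and recall values listed in this card's metadata:

```python
def f_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (F1)."""
    return 2 * precision * recall / (precision + recall)


ner_f = f_score(precision=0.8822157434, recall=0.925382263)
print(round(100 * ner_f, 2))
```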

### BibTeX entry and citation info

```bibtex

@article{kormilitzin2021med7,

title={Med7: A transferable clinical natural language processing model for electronic health records},

author={Kormilitzin, Andrey and Vaci, Nemanja and Liu, Qiang and Nevado-Holgado, Alejo},

journal={Artificial Intelligence in Medicine},

volume={118},

pages={102086},

year={2021},

publisher={Elsevier}

}

```

|

Froddan/hurrishiny

|

Froddan

| 2022-11-19T18:34:05Z | 0 | 1 | null |

[

"stable-diffusion",

"text-to-image",

"en",

"license:cc0-1.0",

"region:us"

] |

text-to-image

| 2022-11-19T15:14:11Z |

---

license: cc0-1.0

inference: false

language:

- en

tags:

- stable-diffusion

- text-to-image

---

# Stable Diffusion fine tuned on art by [Björn Hurri](https://www.artstation.com/bjornhurri)

This model is fine tuned on some of his "shiny"-style paintings. I also have a version for his "matte" works.

### Usage

Use by adding the keyword "hurrishiny" to the prompt. The model was trained with the "monster" classname, which can also be added to the prompt.

## Samples

For this model I made two checkpoints. The "hurrishiny monster x2" model is trained for twice as long as the regular checkpoint, meaning it should be more fine tuned on the style but also more rigid. The top 4 images are from the regular version, the rest are from the x2 version. I hope it gives you an idea of what kind of styles can be created with this model.

<img src="https://huggingface.co/Froddan/hurrishiny/resolve/main/1700_1.png" width="256px"/>

<img src="https://huggingface.co/Froddan/hurrishiny/resolve/main/1700_2.png" width="256px"/>

<img src="https://huggingface.co/Froddan/hurrishiny/resolve/main/1700_3.png" width="256px"/>

<img src="https://huggingface.co/Froddan/hurrishiny/resolve/main/1700_4.png" width="256px"/>

<img src="https://huggingface.co/Froddan/hurrishiny/resolve/main/3400_1.png" width="256px"/>

<img src="https://huggingface.co/Froddan/hurrishiny/resolve/main/3400_2.png" width="256px"/>

<img src="https://huggingface.co/Froddan/hurrishiny/resolve/main/3400_3.png" width="256px"/>

<img src="https://huggingface.co/Froddan/hurrishiny/resolve/main/3400_4.png" width="256px"/>

<img src="https://huggingface.co/Froddan/hurrishiny/resolve/main/index1.png" width="256px"/>

<img src="https://huggingface.co/Froddan/hurrishiny/resolve/main/index3.png" width="256px"/>

<img src="https://huggingface.co/Froddan/hurrishiny/resolve/main/index5.png" width="256px"/>

<img src="https://huggingface.co/Froddan/hurrishiny/resolve/main/index6.png" width="256px"/>

### 🧨 Diffusers

This model can be used just like any other Stable Diffusion model. For more information,

please have a look at the [Stable Diffusion](https://huggingface.co/docs/diffusers/api/pipelines/stable_diffusion).

|

yunseokj/ddpm-butterflies-128

|

yunseokj

| 2022-11-19T18:20:57Z | 0 | 0 |

diffusers

|

[

"diffusers",

"tensorboard",

"en",

"dataset:huggan/smithsonian_butterflies_subset",

"license:apache-2.0",

"diffusers:DDPMPipeline",

"region:us"

] | null | 2022-11-19T17:31:45Z |

---

language: en

license: apache-2.0

library_name: diffusers

tags: []

datasets: huggan/smithsonian_butterflies_subset

metrics: []

---

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# ddpm-butterflies-128

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library

on the `huggan/smithsonian_butterflies_subset` dataset.

## Intended uses & limitations

#### How to use

```python

# TODO: add an example code snippet for running this diffusion pipeline

```
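
The card leaves the usage snippet as a TODO. The following is a sketch only, assuming the repository loads with `DDPMPipeline` as its `diffusers:DDPMPipeline` tag suggests; it has not been verified against this checkpoint, and the function name is illustrative.

```python
def sample_butterflies(model_id: str = "yunseokj/ddpm-butterflies-128", batch_size: int = 1, seed: int = 0):
    """Download the pipeline from the Hub and return a list of PIL images."""
    # Imported lazily so the function can be defined without the heavy dependencies.
    import torch
    from diffusers import DDPMPipeline

    pipeline = DDPMPipeline.from_pretrained(model_id)
    generator = torch.manual_seed(seed)  # fixed seed for reproducible samples
    return pipeline(batch_size=batch_size, generator=generator).images
```

Calling `sample_butterflies()[0].save("butterfly.png")` would write the first sample to disk; the file name is arbitrary.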

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training data

[TODO: describe the data used to train the model]

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 16

- gradient_accumulation_steps: 1

- optimizer: AdamW with betas=(None, None), weight_decay=None and epsilon=None

- lr_scheduler: None

- lr_warmup_steps: 500

- ema_inv_gamma: None

- mixed_precision: fp16

### Training results

📈 [TensorBoard logs](https://huggingface.co/yunseokj/ddpm-butterflies-128/tensorboard?#scalars)

|

Froddan/hurrimatte

|

Froddan

| 2022-11-19T18:11:55Z | 0 | 1 | null |

[

"stable-diffusion",

"text-to-image",

"en",

"license:cc0-1.0",

"region:us"

] |

text-to-image

| 2022-11-19T15:10:08Z |

---

license: cc0-1.0

inference: false

language:

- en

tags:

- stable-diffusion

- text-to-image

---

# Stable Diffusion fine tuned on art by [Björn Hurri](https://www.artstation.com/bjornhurri)

This model is fine tuned on some of his matte-style paintings. I also have a version for his "shinier" works.

### Usage

Use by adding the keyword "hurrimatte" to the prompt. The model was trained with the "monster" classname, which can also be added to the prompt.

## Samples

For this model I made two checkpoints. The "hurrimatte monster x2" model is trained for twice as long as the regular checkpoint, meaning it should be more fine tuned on the style but also more rigid. The top 3 images are from the regular version, the rest are from the x2 version. I hope it gives you an idea of what kind of styles can be created with this model.

<img src="https://huggingface.co/Froddan/hurrimatte/resolve/main/index_1200_3.png" width="256px"/>

<img src="https://huggingface.co/Froddan/hurrimatte/resolve/main/index_1200_4.png" width="256px"/>

<img src="https://huggingface.co/Froddan/hurrimatte/resolve/main/1200_4.png" width="256px"/>

<img src="https://huggingface.co/Froddan/hurrimatte/resolve/main/index2.png" width="256px"/>

<img src="https://huggingface.co/Froddan/hurrimatte/resolve/main/index3.png" width="256px"/>

<img src="https://huggingface.co/Froddan/hurrimatte/resolve/main/index_2400_5.png" width="256px"/>

<img src="https://huggingface.co/Froddan/hurrimatte/resolve/main/index_2400_6.png" width="256px"/>

<img src="https://huggingface.co/Froddan/hurrimatte/resolve/main/index_2400_7.png" width="256px"/>

### 🧨 Diffusers

This model can be used just like any other Stable Diffusion model. For more information,

please have a look at the [Stable Diffusion](https://huggingface.co/docs/diffusers/api/pipelines/stable_diffusion).

|

LaurentiuStancioiu/distilbert-base-uncased-finetuned-emotion

|

LaurentiuStancioiu

| 2022-11-19T18:09:47Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:emotion",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-11-19T17:54:45Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- emotion

metrics:

- accuracy

- f1

model-index:

- name: distilbert-base-uncased-finetuned-emotion

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: emotion

type: emotion

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.902

- name: F1

type: f1

value: 0.9000722917492663

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3554

- Accuracy: 0.902

- F1: 0.9001

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 128

- eval_batch_size: 128

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2
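
The batch size and epoch count determine the step counts reported in this card's training results. A sketch of that arithmetic, assuming a 16,000-example training split for `emotion`, a single device, and no gradient accumulation (none of which the card states):

```python
import math


def steps_per_epoch(num_examples: int, batch_size: int) -> int:
    """Optimizer steps needed to see every example once (last batch may be partial)."""
    return math.ceil(num_examples / batch_size)


# 16,000 training examples is an assumption about the `emotion` train split.
per_epoch = steps_per_epoch(num_examples=16_000, batch_size=128)
print(per_epoch, per_epoch * 2)
```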

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 1.0993 | 1.0 | 125 | 0.5742 | 0.8045 | 0.7747 |

| 0.4436 | 2.0 | 250 | 0.3554 | 0.902 | 0.9001 |

### Framework versions

- Transformers 4.13.0

- Pytorch 1.12.1+cu113

- Datasets 1.16.1

- Tokenizers 0.10.3

|

sd-concepts-library/ghibli-face

|

sd-concepts-library

| 2022-11-19T17:52:39Z | 0 | 4 | null |

[

"license:mit",

"region:us"

] | null | 2022-11-19T17:52:35Z |

---

license: mit

---

### ghibli-face on Stable Diffusion

This is the `<ghibli-face>` concept taught to Stable Diffusion via Textual Inversion. You can load this concept into the [Stable Conceptualizer](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb) notebook. You can also train your own concepts and load them into the concept libraries using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb).

Here is the new concept you will be able to use as a `style`:

|

monakth/distilbert-base-cased-finetuned-squadv2

|

monakth

| 2022-11-19T17:02:46Z | 106 | 0 |

transformers

|

[

"transformers",

"pytorch",

"distilbert",

"question-answering",

"generated_from_trainer",

"dataset:squad_v2",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2022-11-19T17:01:53Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- squad_v2

model-index:

- name: distilbert-base-cased-finetuned-squadv

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-cased-finetuned-squadv

This model is a fine-tuned version of [monakth/distilbert-base-cased-finetuned-squad](https://huggingface.co/monakth/distilbert-base-cased-finetuned-squad) on the squad_v2 dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0

- Datasets 2.1.0

- Tokenizers 0.12.1

|

4eJIoBek/green_elephant_jukebox1b

|

4eJIoBek

| 2022-11-19T16:22:09Z | 0 | 0 | null |

[

"license:openrail",

"region:us"

] | null | 2022-10-01T09:08:50Z |

---

license: openrail

---

This is a very poor fine-tune of the 1b Jukebox model on ~25 minutes of "Green Elephant" remixes, or more precisely on the parts that use the moment where Pakhom tried to sing ("ta-tratarutaru ta", something like that). Demos are in the files. I lost the dataset.

HOW TO USE?

Unpack the archive and move the `juke` folder to the root of your Google Drive. Then open inference.ipynb in Colab.

|

Harrier/dqn-SpaceInvadersNoFrameskip-v4

|

Harrier

| 2022-11-19T15:53:13Z | 2 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"SpaceInvadersNoFrameskip-v4",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2022-11-19T15:52:33Z |

---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: DQN

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: SpaceInvadersNoFrameskip-v4

type: SpaceInvadersNoFrameskip-v4

metrics:

- type: mean_reward

value: 615.50 +/- 186.61

name: mean_reward

verified: false

---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3) and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3 reinforcement learning agents, with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

```

# Download model and save it into the logs/ folder

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga Harrier -f logs/

python enjoy.py --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

If you installed the RL Zoo3 via pip (`pip install rl_zoo3`), from anywhere you can do:

```

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga Harrier -f logs/

rl_zoo3 enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

## Training (with the RL Zoo)

```

python train.py --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)

python -m rl_zoo3.push_to_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/ -orga Harrier

```

## Hyperparameters

```python

OrderedDict([('batch_size', 32),
             ('buffer_size', 100000),
             ('env_wrapper',
              ['stable_baselines3.common.atari_wrappers.AtariWrapper']),
             ('exploration_final_eps', 0.01),
             ('exploration_fraction', 0.1),
             ('frame_stack', 4),
             ('gradient_steps', 1),
             ('learning_rate', 0.0001),
             ('learning_starts', 100000),
             ('n_timesteps', 1000000.0),
             ('optimize_memory_usage', False),
             ('policy', 'CnnPolicy'),
             ('target_update_interval', 1000),
             ('train_freq', 4),
             ('normalize', False)])

```
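
The exploration settings above can be read as a linear epsilon schedule. The sketch below is a plain-Python approximation, not the Stable Baselines3 implementation. It assumes epsilon starts at 1.0 (the card does not list an initial value) and decays linearly to `exploration_final_eps` over the first `exploration_fraction` of `n_timesteps`. The function name `epsilon_at` is hypothetical.

```python
def epsilon_at(step: int,
               n_timesteps: int = 1_000_000,
               exploration_fraction: float = 0.1,
               final_eps: float = 0.01,
               initial_eps: float = 1.0) -> float:
    """Epsilon for epsilon-greedy action selection at a given timestep.

    initial_eps=1.0 is an assumption; the other defaults come from the card.
    """
    progress = step / n_timesteps
    if progress >= exploration_fraction:
        # Decay phase is over: stay at the final exploration rate.
        return final_eps
    # Linear interpolation between initial_eps and final_eps.
    return initial_eps + progress * (final_eps - initial_eps) / exploration_fraction


for step in (0, 50_000, 100_000, 1_000_000):
    print(step, epsilon_at(step))
```

Read this way, epsilon reaches its final value at step 100,000, which coincides with `learning_starts`, so under these assumptions gradient updates would begin only once the exploration rate has fully decayed.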

|

katboi01/rare-puppers

|

katboi01

| 2022-11-19T15:04:01Z | 186 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"vit",

"image-classification",

"huggingpics",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

image-classification

| 2022-11-19T15:03:49Z |

---

tags:

- image-classification

- pytorch

- huggingpics

metrics:

- accuracy

model-index:

- name: rare-puppers

results:

- task:

name: Image Classification

type: image-classification

metrics:

- name: Accuracy

type: accuracy

value: 0.89552241563797

---

# rare-puppers

Autogenerated by HuggingPics🤗🖼️

Create your own image classifier for **anything** by running [the demo on Google Colab](https://colab.research.google.com/github/nateraw/huggingpics/blob/main/HuggingPics.ipynb).

Report any issues with the demo at the [github repo](https://github.com/nateraw/huggingpics).

## Example Images

#### corgi

#### samoyed

#### shiba inu

|

nypnop/distilbert-base-uncased-finetuned-bbc-news

|

nypnop

| 2022-11-19T14:09:27Z | 104 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"license:apache-2.0",