modelId | author | last_modified | downloads | likes | library_name | tags | pipeline_tag | createdAt | card |

|---|---|---|---|---|---|---|---|---|---|

yodayo-ai/holodayo-xl-2.1 | yodayo-ai | 2024-06-07T08:07:33Z | 16,466 | 54 | diffusers | [

"diffusers",

"safetensors",

"text-to-image",

"stable-diffusion",

"stable-diffusion-xl",

"en",

"base_model:cagliostrolab/animagine-xl-3.1",

"license:other",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionXLPipeline",

"region:us"

] | text-to-image | 2024-06-02T11:57:15Z | ---

license: other

license_name: faipl-1.0-sd

license_link: https://freedevproject.org/faipl-1.0-sd/

language:

- en

tags:

- text-to-image

- stable-diffusion

- safetensors

- stable-diffusion-xl

base_model: cagliostrolab/animagine-xl-3.1

widget:

- text: 1girl, green hair, sweater, looking at viewer, upper body, beanie, outdoors, night, turtleneck, masterpiece, best quality, very aesthetic, absurdres

parameter:

negative_prompt: nsfw, low quality, worst quality, very displeasing, 3d, watermark, signature, ugly, poorly drawn

example_title: 1girl

- text: 1boy, male focus, green hair, sweater, looking at viewer, upper body, beanie, outdoors, night, turtleneck, masterpiece, best quality, very aesthetic, absurdres

parameter:

negative_prompt: nsfw, low quality, worst quality, very displeasing, 3d, watermark, signature, ugly, poorly drawn

example_title: 1boy

---

<style>

body {

display: flex;

align-items: center;

justify-content: center;

height: 100vh;

margin: 0;

font-family: Arial, sans-serif;

background-color: #f4f4f9;

overflow: auto;

}

.container {

display: flex;

flex-direction: column;

align-items: center;

justify-content: center;

width: 100%;

padding: 20px;

}

.title-container {

display: flex;

flex-direction: column;

justify-content: center;

align-items: center;

padding: 1em;

border-radius: 10px;

}

.title {

font-size: 3em;

font-family: 'Montserrat', sans-serif;

text-align: center;

font-weight: bold;

}

.title span {

background: -webkit-linear-gradient(45deg, #ff8e8e, #ffb6c1, #ff69b4);

-webkit-background-clip: text;

-webkit-text-fill-color: transparent;

}

.gallery {

display: grid;

grid-template-columns: repeat(5, 1fr);

gap: 10px;

}

.gallery img {

width: 100%;

height: auto;

margin-top: 0px;

margin-bottom: 0px;

border-radius: 10px;

box-shadow: 0 4px 8px rgba(0, 0, 0, 0.2);

transition: transform 0.3s;

}

.gallery img:hover {

transform: scale(1.05);

}

.note {

font-size: 1em;

opacity: 50%;

text-align: center;

margin-top: 20px;

color: #555;

}

</style>

<div class="container">

<div class="title-container">

<div class="title"><span>Holodayo XL 2.1</span></div>

</div>

<div class="gallery">

<img src="https://huggingface.co/yodayo-ai/holodayo-xl-2.1/resolve/main/samples/sample-001.png" alt="Image 1">

<img src="https://huggingface.co/yodayo-ai/holodayo-xl-2.1/resolve/main/samples/sample-002.png" alt="Image 2">

<img src="https://huggingface.co/yodayo-ai/holodayo-xl-2.1/resolve/main/samples/sample-003.png" alt="Image 3">

<img src="https://huggingface.co/yodayo-ai/holodayo-xl-2.1/resolve/main/samples/sample-004.png" alt="Image 4">

<img src="https://huggingface.co/yodayo-ai/holodayo-xl-2.1/resolve/main/samples/sample-005.png" alt="Image 5">

<img src="https://huggingface.co/yodayo-ai/holodayo-xl-2.1/resolve/main/samples/sample-006.png" alt="Image 6">

<img src="https://huggingface.co/yodayo-ai/holodayo-xl-2.1/resolve/main/samples/sample-007.png" alt="Image 7">

<img src="https://huggingface.co/yodayo-ai/holodayo-xl-2.1/resolve/main/samples/sample-008.png" alt="Image 8">

<img src="https://huggingface.co/yodayo-ai/holodayo-xl-2.1/resolve/main/samples/sample-009.png" alt="Image 9">

<img src="https://huggingface.co/yodayo-ai/holodayo-xl-2.1/resolve/main/samples/sample-010.png" alt="Image 10">

</div>

<div class="note">

Drag and drop each image to <a href="https://huggingface.co/spaces/Linaqruf/pnginfo" target="_blank">this link</a> or use ComfyUI to get the metadata.

</div>

</div>

## Overview

**Holodayo XL 2.1** is the latest version of the [Yodayo Holodayo XL](https://yodayo.com/models/1cafd6f8-8fc6-4282-b8f8-843935acbfe8) series, following the previous iteration, [Holodayo XL 1.0](https://yodayo.com/models/1cafd6f8-8fc6-4282-b8f8-843935acbfe8/?modelversion=2349b302-a726-44ba-933b-e3dc4631a95b). This open-source model is built upon Animagine XL V3, a specialized SDXL model designed for generating high-quality anime-style artwork. Holodayo XL 2.1 has undergone additional fine-tuning and optimization to focus specifically on generating images that accurately represent the visual style and aesthetics of the Virtual Youtuber franchise.

Holodayo XL 2.1 was trained to fix the known problems in [Holodayo XL 2.0](https://yodayo.com/models/1cafd6f8-8fc6-4282-b8f8-843935acbfe8/?modelversion=ca4bf1aa-0baf-44cd-8ee9-8f4c6bba89c8): bad hands, bad anatomy, catastrophic forgetting caused by training the text encoder during the fine-tuning phase, and an overexposed art style, which was addressed by reducing the aesthetic datasets.

## Model Details

- **Developed by**: [Linaqruf](https://github.com/Linaqruf)

- **Model type**: Diffusion-based text-to-image generative model

- **Model Description**: Holodayo XL 2.1, the latest in the Yodayo Holodayo XL series, is an open-source model built on Animagine XL V3. Fine-tuned for high-quality Virtual Youtuber anime-style art generation.

- **License**: [Fair AI Public License 1.0-SD](https://freedevproject.org/faipl-1.0-sd/)

- **Finetuned from model**: [Animagine XL 3.1](https://huggingface.co/cagliostrolab/animagine-xl-3.1)

## Supported Platform

1. Use this model on our platform: [Yodayo](https://yodayo.com/models/1cafd6f8-8fc6-4282-b8f8-843935acbfe8/?modelversion=6862d809-0cbc-4fe0-83dc-9206d60b0698)

2. Use it in [`ComfyUI`](https://github.com/comfyanonymous/ComfyUI) or [`Stable Diffusion Webui`](https://github.com/AUTOMATIC1111/stable-diffusion-webui)

3. Use it with 🧨 `diffusers`

## 🧨 Diffusers Installation

First install the required libraries:

```bash

pip install diffusers transformers accelerate safetensors --upgrade

```

Then run image generation with the following example code:

```python
import torch
from diffusers import StableDiffusionXLPipeline

# Load the fp16 weights; the community "lpw_stable_diffusion_xl" pipeline
# adds long-prompt support and (tag:weight) emphasis syntax.
pipe = StableDiffusionXLPipeline.from_pretrained(
    "yodayo-ai/holodayo-xl-2.1",
    torch_dtype=torch.float16,
    use_safetensors=True,
    custom_pipeline="lpw_stable_diffusion_xl",
    add_watermarker=False,
    variant="fp16",
)
pipe.to("cuda")

# Raw string so the escaped parentheses \( \) reach the pipeline unchanged.
prompt = r"1girl, nakiri ayame, nakiri ayame \(1st costume\), hololive, solo, upper body, v, smile, looking at viewer, outdoors, night, masterpiece, best quality, very aesthetic, absurdres"
negative_prompt = "nsfw, (low quality, worst quality:1.2), very displeasing, 3d, watermark, signature, ugly, poorly drawn"

# Generate one portrait image at a supported resolution (832 x 1216).
image = pipe(
    prompt,
    negative_prompt=negative_prompt,
    width=832,
    height=1216,
    guidance_scale=7,
    num_inference_steps=28,
).images[0]

image.save("./waifu.png")
```

## Usage Guidelines

### Tag Ordering

For optimal results, follow this structured prompt template, which matches how the model was trained:

```

1girl/1boy, character name, from which series, by which artists, everything else in any order.

```
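The template above can be sketched as a small helper that assembles tags in the trained order. This is only an illustration: `build_prompt` and its parameter names are hypothetical, and appending the quality tags at the end follows the example prompts in this card rather than any stated rule.

```python
def build_prompt(subject, character=None, series=None, artists=(), extra_tags=(),
                 quality_tags=("masterpiece", "best quality", "very aesthetic", "absurdres")):
    """Join tags as: subject, character, series, artists, everything else, quality tags."""
    parts = [subject]
    if character:
        parts.append(character)
    if series:
        parts.append(series)
    parts.extend(artists)       # optional "by which artists" tags
    parts.extend(extra_tags)    # everything else, in any order
    parts.extend(quality_tags)  # assumption: quality tags go last, as in the card's examples
    return ", ".join(parts)


prompt = build_prompt(
    "1girl",
    character="nakiri ayame",
    series="hololive",
    extra_tags=["solo", "upper body", "smile", "looking at viewer"],
)
print(prompt)
```

The resulting string can be passed as `prompt` to the pipeline call shown in the Diffusers section.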

### Special Tags

Holodayo XL 2.1 inherits special tags from Animagine XL 3.1 to enhance image generation by steering results toward quality, rating, creation date, and aesthetic. This inheritance ensures that Holodayo XL 2.1 can produce high-quality, relevant, and aesthetically pleasing images. While the model can generate images without these tags, using them helps achieve better results.

- **Quality tags**: masterpiece, best quality, great quality, good quality, normal quality, low quality, worst quality

- **Rating tags**: safe, sensitive, nsfw, explicit

- **Year tags**: newest, recent, mid, early, oldest

- **Aesthetic tags**: very aesthetic, aesthetic, displeasing, very displeasing

### Recommended Settings

To guide the model towards generating high-aesthetic images, use the following recommended settings:

- **Negative prompts**:

```

nsfw, (low quality, worst quality:1.2), very displeasing, 3d, watermark, signature, ugly, poorly drawn

```

- **Positive prompts**:

```

masterpiece, best quality, very aesthetic, absurdres

```

- **Classifier-Free Guidance (CFG) Scale**: should be around 5 to 7; 10 is fried, >12 is deep-fried.

- **Sampling steps**: should be around 25 to 30; 28 is the sweet spot.

- **Sampler**: Euler Ancestral (Euler a) is highly recommended.

- **Supported resolutions**:

```

1024 x 1024, 1152 x 896, 896 x 1152, 1216 x 832, 832 x 1216, 1344 x 768, 768 x 1344, 1536 x 640, 640 x 1536

```
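When a target size is not in the supported list, one option is to snap to the supported resolution with the closest aspect ratio. The sketch below assumes the list is written as width x height (consistent with `width=832, height=1216` in the Diffusers example); `closest_resolution` is a hypothetical helper, not part of any library.

```python
import math

# Supported resolutions from the list above, read as (width, height).
SUPPORTED_RESOLUTIONS = [
    (1024, 1024), (1152, 896), (896, 1152), (1216, 832), (832, 1216),
    (1344, 768), (768, 1344), (1536, 640), (640, 1536),
]


def closest_resolution(width, height):
    """Return the supported (width, height) whose aspect ratio is nearest the request."""
    target = math.log(width / height)
    # Compare log ratios so that wide and tall shapes are treated symmetrically.
    return min(SUPPORTED_RESOLUTIONS, key=lambda wh: abs(math.log(wh[0] / wh[1]) - target))


print(closest_resolution(1920, 1080))
print(closest_resolution(1080, 1920))
```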

## Training

These are the key hyperparameters used during training:

| Feature | Pretraining | Finetuning |

|-------------------------------|----------------------------|---------------------------------|

| **Hardware** | 2x H100 80GB PCIe | 2x A100 80GB PCIe |

| **Batch Size** | 32 | 48 |

| **Gradient Accumulation Steps** | 2 | 1 |

| **Noise Offset** | None | 0.0357 |

| **Epochs** | 10 | 10 |

| **UNet Learning Rate** | 5e-6 | 2e-6 |

| **Text Encoder Learning Rate** | 2.5e-6 | None |

| **Optimizer** | Adafactor | Adafactor |

| **Optimizer Args** | Scale Parameter: False, Relative Step: False, Warmup Init: False | Scale Parameter: False, Relative Step: False, Warmup Init: False |

| **Scheduler** | Constant with Warmups | Constant with Warmups |

| **Warmup Steps** | 0.05% | 0.05% |
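To compare the two stages, it can help to work out the effective batch size, i.e. batch size times gradient accumulation steps. The table does not say whether the batch size is per device or global, so `num_devices` below is a hypothetical parameter that defaults to 1 (treating the listed value as global).

```python
def effective_batch_size(batch_size, grad_accum_steps, num_devices=1):
    """Samples contributing to one optimizer step."""
    return batch_size * grad_accum_steps * num_devices


# Values copied from the hyperparameter table above.
stages = {
    "pretraining": {"batch_size": 32, "grad_accum_steps": 2},
    "finetuning": {"batch_size": 48, "grad_accum_steps": 1},
}

for name, cfg in stages.items():
    print(name, effective_batch_size(**cfg))
```

Under that assumption, pretraining uses 64 samples per optimizer step and finetuning uses 48.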

## License

Holodayo XL 2.1 is released under the [Fair AI Public License 1.0-SD](https://freedevproject.org/faipl-1.0-sd/), which is compatible with the Stable Diffusion models' license. Key points:

1. **Modification Sharing:** If you modify Holodayo XL 2.1, you must share both your changes and the original license.

2. **Source Code Accessibility:** If your modified version is network-accessible, provide a way (like a download link) for others to get the source code. This applies to derived models too.

3. **Distribution Terms:** Any distribution must be under this license or another with similar rules.

|

dataautogpt3/ProteusV0.4 | dataautogpt3 | 2024-02-22T19:01:41Z | 16,465 | 73 | diffusers | [

"diffusers",

"text-to-image",

"license:gpl-3.0",

"endpoints_compatible",

"diffusers:StableDiffusionXLPipeline",

"region:us"

] | text-to-image | 2024-02-22T13:50:29Z | ---

pipeline_tag: text-to-image

widget:

- text: >-

3 fish in a fish tank wearing adorable outfits, best quality, hd

output:

url: GGuziQaXYAAudCW.png

- text: >-

a woman sitting in a wooden chair in the middle of a grass field on a farm, moonlight, best quality, hd, anime art

output:

url: upscaled_image (1).webp

- text: >-

Masterpiece, glitch, holy holy holy, fog, by DarkIncursio

output:

url: GGvDC_qWUAAcuQA.jpeg

- text: >-

jpeg Full Body Photo of a weird imaginary Female creatures captured on celluloid film, (((ghost))),heavy rain, thunder, snow, water's surface, night, expressionless, Blood, Japan God,(school), Ultra Realistic, ((Scary)),looking at camera, screem, plaintive cries, Long claws, fangs, scales,8k, HDR, 500px, mysterious and ornate digital art, photic, intricate, fantasy aesthetic.

output:

url: upscaled_image2.png

- text: >-

The divine tree of knowledge, an interplay between purple and gold, floats in the void of the sea of quanta, the tree is made of crystal, the void is made of nothingness, strong contrast, dim lighting, beautiful and surreal scene. wide shot

output:

url: upscaled_image.png

- text: >-

The image features an older man, a long white beard and mustache, He has a stern expression, giving the impression of a wise and experienced individual. The mans beard and mustache are prominent, adding to his distinguished appearance. The close-up shot of the mans face emphasizes his facial features and the intensity of his gaze.

output:

url: old.png

- text: >-

Ghost in the Shell Stand Alone Complex

output:

url: upscaled_image4.png

- text: >-

(impressionistic realism by csybgh), a 50 something male, working in banking, very short dyed dark curly balding hair, Afro-Asiatic ancestry, talks a lot but listens poorly, stuck in the past, wearing a suit, he has a certain charm, bronze skintone, sitting in a bar at night, he is smoking and feeling cool, drunk on plum wine, masterpiece, 8k, hyper detailed, smokey ambiance, perfect hands AND fingers

output:

url: collage.png

- text: >-

black fluffy gorgeous dangerous cat animal creature, large orange eyes, big fluffy ears, piercing gaze, full moon, dark ambiance, best quality, extremely detailed

output:

url: collage2.png

license: gpl-3.0

---

<Gallery />

## ProteusV0.4: The Style Update

This update enhances stylistic capabilities, similar to Midjourney's approach, rather than advancing prompt comprehension. Methods used do not infringe on any copyrighted material.

## Proteus

Proteus serves as a sophisticated enhancement over OpenDalleV1.1, leveraging its core functionalities to deliver superior outcomes. Key areas of advancement include heightened responsiveness to prompts and augmented creative capacities. To achieve this, it was fine-tuned using approximately 220,000 GPTV captioned images from copyright-free stock images (with some anime included), which were then normalized. Additionally, DPO (Direct Preference Optimization) was employed through a collection of 10,000 carefully selected high-quality, AI-generated image pairs.

In pursuit of optimal performance, numerous LORA (Low-Rank Adaptation) models are trained independently before being selectively incorporated into the principal model via dynamic application methods. These techniques involve targeting particular segments within the model while avoiding interference with other areas during the learning phase. Consequently, Proteus exhibits marked improvements in portraying intricate facial characteristics and lifelike skin textures, all while sustaining commendable proficiency across various aesthetic domains, notably surrealism, anime, and cartoon-style visualizations.

Proteus has been fine-tuned/trained on a total of 400k+ images at this point.

## Settings for ProteusV0.4

Use these settings for the best results with ProteusV0.4:

CFG Scale: Use a CFG scale of 4 to 6

Steps: 20 to 60 steps for more detail, 20 steps for faster results.

Sampler: DPM++ 2M SDE

Scheduler: Karras

Resolution: 1280x1280 or 1024x1024

Please also consider using these keywords to improve your prompts:

best quality, HD, `~*~aesthetic~*~`.

If you are having trouble coming up with prompts, you can use this GPT I put together to help you refine them: https://chat.openai.com/g/g-RziQNoydR-diffusion-master

## Use it with 🧨 diffusers

```python

import torch

from diffusers import (

StableDiffusionXLPipeline,

KDPM2AncestralDiscreteScheduler,

AutoencoderKL

)

# Load VAE component

vae = AutoencoderKL.from_pretrained(

"madebyollin/sdxl-vae-fp16-fix",

torch_dtype=torch.float16

)

# Configure the pipeline

pipe = StableDiffusionXLPipeline.from_pretrained(

"dataautogpt3/ProteusV0.4",

vae=vae,

torch_dtype=torch.float16

)

pipe.scheduler = KDPM2AncestralDiscreteScheduler.from_config(pipe.scheduler.config)

pipe.to('cuda')

# Define prompts and generate image

prompt = "black fluffy gorgeous dangerous cat animal creature, large orange eyes, big fluffy ears, piercing gaze, full moon, dark ambiance, best quality, extremely detailed"

negative_prompt = "nsfw, bad quality, bad anatomy, worst quality, low quality, low resolutions, extra fingers, blur, blurry, ugly, wrongs proportions, watermark, image artifacts, lowres, ugly, jpeg artifacts, deformed, noisy image"

image = pipe(

prompt,

negative_prompt=negative_prompt,

width=1024,

height=1024,

guidance_scale=4,

num_inference_steps=20

).images[0]

```

please support the work I do through donating to me on:

https://www.buymeacoffee.com/DataVoid

or following me on

https://twitter.com/DataPlusEngine |

mradermacher/Med-LLaMA3-8B-i1-GGUF | mradermacher | 2024-06-29T22:50:29Z | 16,455 | 0 | transformers | [

"transformers",

"gguf",

"en",

"base_model:YBXL/Med-LLaMA3-8B",

"endpoints_compatible",

"region:us"

] | null | 2024-06-29T19:59:36Z | ---

base_model: YBXL/Med-LLaMA3-8B

language:

- en

library_name: transformers

quantized_by: mradermacher

tags: []

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: nicoboss -->

weighted/imatrix quants of https://huggingface.co/YBXL/Med-LLaMA3-8B

<!-- provided-files -->

static quants are available at https://huggingface.co/mradermacher/Med-LLaMA3-8B-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/Med-LLaMA3-8B-i1-GGUF/resolve/main/Med-LLaMA3-8B.i1-IQ1_S.gguf) | i1-IQ1_S | 2.1 | for the desperate |

| [GGUF](https://huggingface.co/mradermacher/Med-LLaMA3-8B-i1-GGUF/resolve/main/Med-LLaMA3-8B.i1-IQ1_M.gguf) | i1-IQ1_M | 2.3 | mostly desperate |

| [GGUF](https://huggingface.co/mradermacher/Med-LLaMA3-8B-i1-GGUF/resolve/main/Med-LLaMA3-8B.i1-IQ2_XXS.gguf) | i1-IQ2_XXS | 2.5 | |

| [GGUF](https://huggingface.co/mradermacher/Med-LLaMA3-8B-i1-GGUF/resolve/main/Med-LLaMA3-8B.i1-IQ2_XS.gguf) | i1-IQ2_XS | 2.7 | |

| [GGUF](https://huggingface.co/mradermacher/Med-LLaMA3-8B-i1-GGUF/resolve/main/Med-LLaMA3-8B.i1-IQ2_S.gguf) | i1-IQ2_S | 2.9 | |

| [GGUF](https://huggingface.co/mradermacher/Med-LLaMA3-8B-i1-GGUF/resolve/main/Med-LLaMA3-8B.i1-IQ2_M.gguf) | i1-IQ2_M | 3.0 | |

| [GGUF](https://huggingface.co/mradermacher/Med-LLaMA3-8B-i1-GGUF/resolve/main/Med-LLaMA3-8B.i1-Q2_K.gguf) | i1-Q2_K | 3.3 | IQ3_XXS probably better |

| [GGUF](https://huggingface.co/mradermacher/Med-LLaMA3-8B-i1-GGUF/resolve/main/Med-LLaMA3-8B.i1-IQ3_XXS.gguf) | i1-IQ3_XXS | 3.4 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/Med-LLaMA3-8B-i1-GGUF/resolve/main/Med-LLaMA3-8B.i1-IQ3_XS.gguf) | i1-IQ3_XS | 3.6 | |

| [GGUF](https://huggingface.co/mradermacher/Med-LLaMA3-8B-i1-GGUF/resolve/main/Med-LLaMA3-8B.i1-Q3_K_S.gguf) | i1-Q3_K_S | 3.8 | IQ3_XS probably better |

| [GGUF](https://huggingface.co/mradermacher/Med-LLaMA3-8B-i1-GGUF/resolve/main/Med-LLaMA3-8B.i1-IQ3_S.gguf) | i1-IQ3_S | 3.8 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/Med-LLaMA3-8B-i1-GGUF/resolve/main/Med-LLaMA3-8B.i1-IQ3_M.gguf) | i1-IQ3_M | 3.9 | |

| [GGUF](https://huggingface.co/mradermacher/Med-LLaMA3-8B-i1-GGUF/resolve/main/Med-LLaMA3-8B.i1-Q3_K_M.gguf) | i1-Q3_K_M | 4.1 | IQ3_S probably better |

| [GGUF](https://huggingface.co/mradermacher/Med-LLaMA3-8B-i1-GGUF/resolve/main/Med-LLaMA3-8B.i1-Q3_K_L.gguf) | i1-Q3_K_L | 4.4 | IQ3_M probably better |

| [GGUF](https://huggingface.co/mradermacher/Med-LLaMA3-8B-i1-GGUF/resolve/main/Med-LLaMA3-8B.i1-IQ4_XS.gguf) | i1-IQ4_XS | 4.5 | |

| [GGUF](https://huggingface.co/mradermacher/Med-LLaMA3-8B-i1-GGUF/resolve/main/Med-LLaMA3-8B.i1-Q4_0.gguf) | i1-Q4_0 | 4.8 | fast, low quality |

| [GGUF](https://huggingface.co/mradermacher/Med-LLaMA3-8B-i1-GGUF/resolve/main/Med-LLaMA3-8B.i1-Q4_K_S.gguf) | i1-Q4_K_S | 4.8 | optimal size/speed/quality |

| [GGUF](https://huggingface.co/mradermacher/Med-LLaMA3-8B-i1-GGUF/resolve/main/Med-LLaMA3-8B.i1-Q4_K_M.gguf) | i1-Q4_K_M | 5.0 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/Med-LLaMA3-8B-i1-GGUF/resolve/main/Med-LLaMA3-8B.i1-Q5_K_S.gguf) | i1-Q5_K_S | 5.7 | |

| [GGUF](https://huggingface.co/mradermacher/Med-LLaMA3-8B-i1-GGUF/resolve/main/Med-LLaMA3-8B.i1-Q5_K_M.gguf) | i1-Q5_K_M | 5.8 | |

| [GGUF](https://huggingface.co/mradermacher/Med-LLaMA3-8B-i1-GGUF/resolve/main/Med-LLaMA3-8B.i1-Q6_K.gguf) | i1-Q6_K | 6.7 | practically like static Q6_K |
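As a rough way to choose from the table, the sketch below picks the largest file that fits a memory budget and builds its download URL from the link pattern used in the table. `largest_quant_that_fits` and the `headroom_gb` margin (space left for context and runtime overhead) are hypothetical, and ranking by size alone ignores the quality notes in the last column.

```python
# Type -> file size in GB, copied from the table above.
QUANT_SIZES_GB = {
    "i1-IQ1_S": 2.1, "i1-IQ1_M": 2.3, "i1-IQ2_XXS": 2.5, "i1-IQ2_XS": 2.7,
    "i1-IQ2_S": 2.9, "i1-IQ2_M": 3.0, "i1-Q2_K": 3.3, "i1-IQ3_XXS": 3.4,
    "i1-IQ3_XS": 3.6, "i1-Q3_K_S": 3.8, "i1-IQ3_S": 3.8, "i1-IQ3_M": 3.9,
    "i1-Q3_K_M": 4.1, "i1-Q3_K_L": 4.4, "i1-IQ4_XS": 4.5, "i1-Q4_0": 4.8,
    "i1-Q4_K_S": 4.8, "i1-Q4_K_M": 5.0, "i1-Q5_K_S": 5.7, "i1-Q5_K_M": 5.8,
    "i1-Q6_K": 6.7,
}

BASE_URL = "https://huggingface.co/mradermacher/Med-LLaMA3-8B-i1-GGUF/resolve/main"


def largest_quant_that_fits(budget_gb, headroom_gb=1.0):
    """Return the biggest quant type whose file fits in budget minus headroom, or None."""
    limit = budget_gb - headroom_gb
    candidates = [(size, name) for name, size in QUANT_SIZES_GB.items() if size <= limit]
    return max(candidates)[1] if candidates else None


def file_url(quant_type):
    """Build the download URL following the link pattern in the table."""
    return f"{BASE_URL}/Med-LLaMA3-8B.{quant_type}.gguf"


choice = largest_quant_that_fits(6.0)
print(choice, file_url(choice))
```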

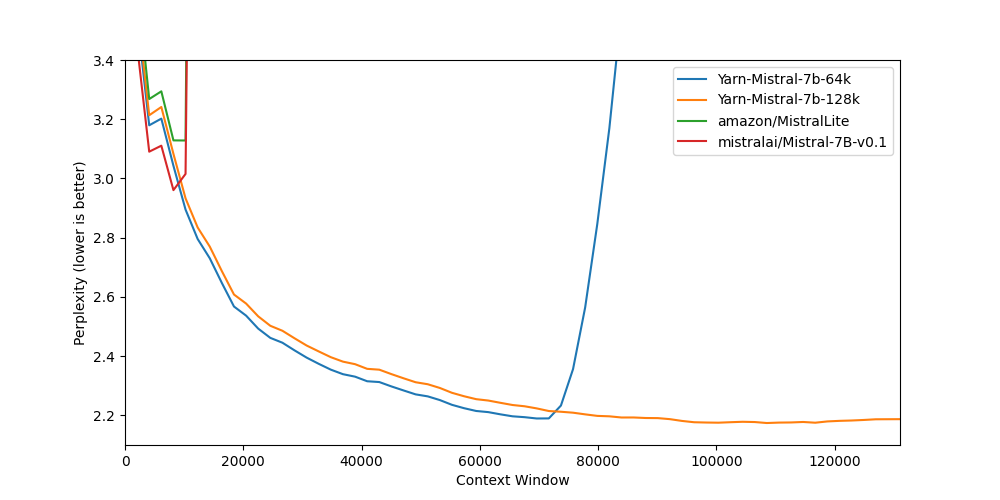

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time. Additional thanks to [@nicoboss](https://huggingface.co/nicoboss) for giving me access to his hardware for calculating the imatrix for these quants.

<!-- end -->

|

Yntec/ChickFlick | Yntec | 2024-05-27T22:36:56Z | 16,454 | 3 | diffusers | [

"diffusers",

"safetensors",

"Film",

"Artistic",

"Girls",

"LEOSAM",

"text-to-image",

"stable-diffusion",

"stable-diffusion-diffusers",

"license:creativeml-openrail-m",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2024-05-27T16:16:17Z | ---

license: creativeml-openrail-m

library_name: diffusers

pipeline_tag: text-to-image

tags:

- Film

- Artistic

- Girls

- LEOSAM

- text-to-image

- stable-diffusion

- stable-diffusion-diffusers

- diffusers

---

# Chick Flick

LEOSAMsFilmGirlUltra merged with artistic models to achieve this style.

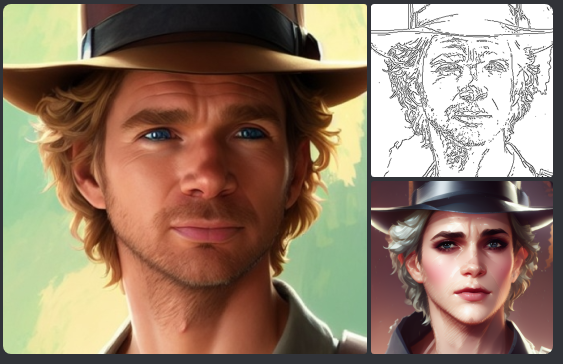

Samples and prompts:

(Click for larger)

Top left: (young cute girl sitting with a siamese cat, in a large house, tea cup, closeup), (5mm dramatic pose or action pose), (Artist design by (Marta Bevaqua) and (Richard Anderson)), detailed face (disaster scene from a movie, natural, dark pastel colors, HDR), (comfycore, cluttercore)

Top right: Elsa from Frozen wearing yellow towel, taking bubble bath in tub

Bottom left: cute curly little girl in bronze dress skirt and little boy in suit tie,kid, ballroom tango dance, 70s, Fireworks, detailed faces and eyes, beautiful dynamic color background, blurred bokeh background, 8k, depth of field, studio photo, ultra quality

Bottom right: girl in a classroom bored and uninterested

Original page: https://civitai.com/models/33208/leosams-filmgirl-ultra |

prajjwal1/bert-medium | prajjwal1 | 2021-10-27T18:30:16Z | 16,443 | 3 | transformers | [

"transformers",

"pytorch",

"BERT",

"MNLI",

"NLI",

"transformer",

"pre-training",

"en",

"arxiv:1908.08962",

"arxiv:2110.01518",

"license:mit",

"endpoints_compatible",

"region:us"

] | null | 2022-03-02T23:29:05Z | ---

language:

- en

license:

- mit

tags:

- BERT

- MNLI

- NLI

- transformer

- pre-training

---

The following model is a PyTorch pre-trained model obtained by converting the TensorFlow checkpoint found in the [official Google BERT repository](https://github.com/google-research/bert).

This is one of the smaller pre-trained BERT variants, together with [bert-tiny](https://huggingface.co/prajjwal1/bert-tiny), [bert-mini](https://huggingface.co/prajjwal1/bert-mini) and [bert-small](https://huggingface.co/prajjwal1/bert-small). They were introduced in the study `Well-Read Students Learn Better: On the Importance of Pre-training Compact Models` ([arXiv](https://arxiv.org/abs/1908.08962)), and ported to HF for the study `Generalization in NLI: Ways (Not) To Go Beyond Simple Heuristics` ([arXiv](https://arxiv.org/abs/2110.01518)). These models are intended to be fine-tuned on a downstream task.

If you use the model, please consider citing both papers:

```

@misc{bhargava2021generalization,

title={Generalization in NLI: Ways (Not) To Go Beyond Simple Heuristics},

author={Prajjwal Bhargava and Aleksandr Drozd and Anna Rogers},

year={2021},

eprint={2110.01518},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

@article{DBLP:journals/corr/abs-1908-08962,

author = {Iulia Turc and

Ming{-}Wei Chang and

Kenton Lee and

Kristina Toutanova},

title = {Well-Read Students Learn Better: The Impact of Student Initialization

on Knowledge Distillation},

journal = {CoRR},

volume = {abs/1908.08962},

year = {2019},

url = {http://arxiv.org/abs/1908.08962},

eprinttype = {arXiv},

eprint = {1908.08962},

timestamp = {Thu, 29 Aug 2019 16:32:34 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-1908-08962.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

```

Config of this model:

- `prajjwal1/bert-medium` (L=8, H=512) [Model Link](https://huggingface.co/prajjwal1/bert-medium)

Other models to check out:

- `prajjwal1/bert-tiny` (L=2, H=128) [Model Link](https://huggingface.co/prajjwal1/bert-tiny)

- `prajjwal1/bert-mini` (L=4, H=256) [Model Link](https://huggingface.co/prajjwal1/bert-mini)

- `prajjwal1/bert-small` (L=4, H=512) [Model Link](https://huggingface.co/prajjwal1/bert-small)
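To get a feel for what L (number of layers) and H (hidden size) imply, the sketch below estimates encoder parameter counts for the four configurations listed above. Everything beyond L and H is an assumption not stated in this card: a 30,522-token vocabulary, 512 positions, 2 token types, a feed-forward size of 4H, and a pooler layer. Treat the numbers as rough estimates only.

```python
def estimate_bert_params(num_layers, hidden, vocab=30522, max_pos=512, type_vocab=2):
    """Rough BERT encoder parameter count (assumed sizes; see the note above)."""
    ffn = 4 * hidden  # assumption: intermediate size is 4H
    # Word, position and token-type embeddings plus their LayerNorm (weight + bias).
    embeddings = (vocab + max_pos + type_vocab) * hidden + 2 * hidden
    # Query, key, value and output projections, each H x H with a bias.
    attention = 4 * (hidden * hidden + hidden)
    # Two dense layers: H -> 4H and 4H -> H, each with a bias.
    feed_forward = (hidden * ffn + ffn) + (ffn * hidden + hidden)
    layer_norms = 2 * (2 * hidden)  # two LayerNorms per layer
    per_layer = attention + feed_forward + layer_norms
    pooler = hidden * hidden + hidden
    return embeddings + num_layers * per_layer + pooler


# (L, H) pairs copied from the lists above.
configs = {
    "bert-tiny": (2, 128),
    "bert-mini": (4, 256),
    "bert-small": (4, 512),
    "bert-medium": (8, 512),
}

for name, (layers, hidden) in configs.items():
    print(f"{name}: ~{estimate_bert_params(layers, hidden) / 1e6:.1f}M parameters")
```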

The original implementation and more information can be found in [this GitHub repository](https://github.com/prajjwal1/generalize_lm_nli).

Twitter: [@prajjwal_1](https://twitter.com/prajjwal_1)

|

google/tapas-base | google | 2021-11-29T10:03:33Z | 16,440 | 6 | transformers | [

"transformers",

"pytorch",

"tf",

"tapas",

"feature-extraction",

"TapasModel",

"en",

"arxiv:2004.02349",

"arxiv:2010.00571",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | feature-extraction | 2022-03-02T23:29:05Z | ---

language: en

tags:

- tapas

- TapasModel

license: apache-2.0

---

# TAPAS base model

Two versions of this model are available. The latest version, which is the default one, corresponds to the `tapas_inter_masklm_base_reset` checkpoint of the [original GitHub repository](https://github.com/google-research/tapas).

This model was pre-trained on MLM and an additional step which the authors call intermediate pre-training. It uses relative position embeddings by default (i.e. resetting the position index at every cell of the table).

The other (non-default) version which can be used is the one with absolute position embeddings:

- `revision="no_reset"`, which corresponds to `tapas_inter_masklm_base`

Disclaimer: The team releasing TAPAS did not write a model card for this model so this model card has been written by

the Hugging Face team and contributors.

## Model description

TAPAS is a BERT-like transformers model pretrained on a large corpus of English data from Wikipedia in a self-supervised fashion.

This means it was pretrained on the raw tables and associated texts only, with no humans labelling them in any way (which is why it

can use lots of publicly available data) with an automatic process to generate inputs and labels from those texts. More precisely, it

was pretrained with two objectives:

- Masked language modeling (MLM): taking a (flattened) table and associated context, the model randomly masks 15% of the words in

the input, then runs the entire (partially masked) sequence through the model. The model then has to predict the masked words.

This is different from traditional recurrent neural networks (RNNs) that usually see the words one after the other,

or from autoregressive models like GPT which internally mask the future tokens. It allows the model to learn a bidirectional

representation of a table and associated text.

- Intermediate pre-training: to encourage numerical reasoning on tables, the authors additionally pre-trained the model by creating

a balanced dataset of millions of syntactically created training examples. Here, the model must predict (classify) whether a sentence

is supported or refuted by the contents of a table. The training examples are created based on synthetic as well as counterfactual statements.

This way, the model learns an inner representation of the English language used in tables and associated texts, which can then be used

to extract features useful for downstream tasks such as answering questions about a table, or determining whether a sentence is entailed

or refuted by the contents of a table. Fine-tuning is done by adding one or more classification heads on top of the pre-trained model, and then

jointly training these randomly initialized classification heads with the base model on a downstream task.

## Intended uses & limitations

You can use the raw model for getting hidden representations of table-question pairs, but it's mostly intended to be fine-tuned on a downstream task such as question answering or sequence classification. See the [model hub](https://huggingface.co/models?filter=tapas) to look for fine-tuned versions on a task that interests you.

## Training procedure

### Preprocessing

The texts are lowercased and tokenized using WordPiece and a vocabulary size of 30,000. The inputs of the model are

then of the form:

```

[CLS] Sentence [SEP] Flattened table [SEP]

```
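The input layout above can be sketched at the string level as follows. This is only an illustration: `flatten_table` and `build_input` are hypothetical helpers, row-by-row flattening is an assumption, and the real model works on WordPiece tokens with additional table-aware embeddings that are not shown here.

```python
def flatten_table(header, rows):
    """Flatten a table row by row into one lowercased string (assumed order)."""
    cells = list(header)
    for row in rows:
        cells.extend(row)
    return " ".join(str(cell).lower() for cell in cells)


def build_input(sentence, header, rows):
    """Lay out the text as: [CLS] Sentence [SEP] Flattened table [SEP]."""
    return f"[CLS] {sentence.lower()} [SEP] {flatten_table(header, rows)} [SEP]"


text = build_input(
    "How many goals did Ann score?",
    header=["Player", "Goals"],
    rows=[["Ann", 3], ["Bob", 5]],
)
print(text)
```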

### Pre-training

The model was pre-trained on 32 Cloud TPU v3 cores for 1,000,000 steps with maximum sequence length 512 and batch size of 512.

In this setup, pre-training on MLM only takes around 3 days. Additionally, the model has been further pre-trained on a second task (table entailment). See the original TAPAS [paper](https://www.aclweb.org/anthology/2020.acl-main.398/) and the [follow-up paper](https://www.aclweb.org/anthology/2020.findings-emnlp.27/) for more details.

The optimizer used is Adam with a learning rate of 5e-5, and a warmup

ratio of 0.01.
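With 1,000,000 steps and a warmup ratio of 0.01, the warmup phase would cover the first 10,000 steps. The sketch below works this out and shows one possible schedule; the linear ramp and the constant rate after warmup are assumptions, since the card only gives the peak learning rate and the warmup ratio.

```python
TOTAL_STEPS = 1_000_000
WARMUP_RATIO = 0.01
PEAK_LR = 5e-5

# Number of optimizer steps spent warming up.
warmup_steps = round(TOTAL_STEPS * WARMUP_RATIO)


def learning_rate(step):
    """Assumed schedule: linear ramp to PEAK_LR, then held constant."""
    if step < warmup_steps:
        return PEAK_LR * step / warmup_steps
    return PEAK_LR  # assumption: any decay after warmup is not modeled here


print(warmup_steps)
for step in (0, 5_000, 10_000, 500_000):
    print(step, learning_rate(step))
```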

### BibTeX entry and citation info

```bibtex

@misc{herzig2020tapas,

title={TAPAS: Weakly Supervised Table Parsing via Pre-training},

author={Jonathan Herzig and Paweł Krzysztof Nowak and Thomas Müller and Francesco Piccinno and Julian Martin Eisenschlos},

year={2020},

eprint={2004.02349},

archivePrefix={arXiv},

primaryClass={cs.IR}

}

```

```bibtex

@misc{eisenschlos2020understanding,

title={Understanding tables with intermediate pre-training},

author={Julian Martin Eisenschlos and Syrine Krichene and Thomas Müller},

year={2020},

eprint={2010.00571},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

``` |

01-ai/Yi-1.5-9B | 01-ai | 2024-06-26T10:41:21Z | 16,424 | 39 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"arxiv:2403.04652",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-05-11T08:34:14Z | ---

license: apache-2.0

---

<div align="center">

<picture>

<img src="https://raw.githubusercontent.com/01-ai/Yi/main/assets/img/Yi_logo_icon_light.svg" width="150px">

</picture>

</div>

<p align="center">

<a href="https://github.com/01-ai">🐙 GitHub</a> •

<a href="https://discord.gg/hYUwWddeAu">👾 Discord</a> •

<a href="https://twitter.com/01ai_yi">🐤 Twitter</a> •

<a href="https://github.com/01-ai/Yi-1.5/issues/2">💬 WeChat</a>

<br/>

<a href="https://arxiv.org/abs/2403.04652">📝 Paper</a> •

<a href="https://01-ai.github.io/">💪 Tech Blog</a> •

<a href="https://github.com/01-ai/Yi/tree/main?tab=readme-ov-file#faq">🙌 FAQ</a> •

<a href="https://github.com/01-ai/Yi/tree/main?tab=readme-ov-file#learning-hub">📗 Learning Hub</a>

</p>

# Intro

Yi-1.5 is an upgraded version of Yi. It is continuously pre-trained on Yi with a high-quality corpus of 500B tokens and fine-tuned on 3M diverse fine-tuning samples.

Compared with Yi, Yi-1.5 delivers stronger performance in coding, math, reasoning, and instruction-following capability, while still maintaining excellent capabilities in language understanding, commonsense reasoning, and reading comprehension.

<div align="center">

| Model | Context Length | Pre-trained Tokens |
| :------------: | :------------: | :------------: |
| Yi-1.5 | 4K, 16K, 32K | 3.6T |

</div>

# Models

- Chat models

<div align="center">

| Name | Download |

| --------------- | ------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

| Yi-1.5-34B-Chat | • [🤗 Hugging Face](https://huggingface.co/collections/01-ai/yi-15-2024-05-663f3ecab5f815a3eaca7ca8) • [🤖 ModelScope](https://www.modelscope.cn/organization/01ai) • [🟣 wisemodel](https://wisemodel.cn/organization/01.AI)|

| Yi-1.5-34B-Chat-16K | • [🤗 Hugging Face](https://huggingface.co/collections/01-ai/yi-15-2024-05-663f3ecab5f815a3eaca7ca8) • [🤖 ModelScope](https://www.modelscope.cn/organization/01ai) • [🟣 wisemodel](https://wisemodel.cn/organization/01.AI) |

| Yi-1.5-9B-Chat | • [🤗 Hugging Face](https://huggingface.co/collections/01-ai/yi-15-2024-05-663f3ecab5f815a3eaca7ca8) • [🤖 ModelScope](https://www.modelscope.cn/organization/01ai) • [🟣 wisemodel](https://wisemodel.cn/organization/01.AI) |

| Yi-1.5-9B-Chat-16K | • [🤗 Hugging Face](https://huggingface.co/collections/01-ai/yi-15-2024-05-663f3ecab5f815a3eaca7ca8) • [🤖 ModelScope](https://www.modelscope.cn/organization/01ai) • [🟣 wisemodel](https://wisemodel.cn/organization/01.AI) |

| Yi-1.5-6B-Chat | • [🤗 Hugging Face](https://huggingface.co/collections/01-ai/yi-15-2024-05-663f3ecab5f815a3eaca7ca8) • [🤖 ModelScope](https://www.modelscope.cn/organization/01ai) • [🟣 wisemodel](https://wisemodel.cn/organization/01.AI) |

</div>

- Base models

<div align="center">

| Name | Download |

| ---------- | ------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

| Yi-1.5-34B | • [🤗 Hugging Face](https://huggingface.co/collections/01-ai/yi-15-2024-05-663f3ecab5f815a3eaca7ca8) • [🤖 ModelScope](https://www.modelscope.cn/organization/01ai) • [🟣 wisemodel](https://wisemodel.cn/organization/01.AI) |

| Yi-1.5-34B-32K | • [🤗 Hugging Face](https://huggingface.co/collections/01-ai/yi-15-2024-05-663f3ecab5f815a3eaca7ca8) • [🤖 ModelScope](https://www.modelscope.cn/organization/01ai) • [🟣 wisemodel](https://wisemodel.cn/organization/01.AI) |

| Yi-1.5-9B | • [🤗 Hugging Face](https://huggingface.co/collections/01-ai/yi-15-2024-05-663f3ecab5f815a3eaca7ca8) • [🤖 ModelScope](https://www.modelscope.cn/organization/01ai) • [🟣 wisemodel](https://wisemodel.cn/organization/01.AI) |

| Yi-1.5-9B-32K | • [🤗 Hugging Face](https://huggingface.co/collections/01-ai/yi-15-2024-05-663f3ecab5f815a3eaca7ca8) • [🤖 ModelScope](https://www.modelscope.cn/organization/01ai) • [🟣 wisemodel](https://wisemodel.cn/organization/01.AI) |

| Yi-1.5-6B | • [🤗 Hugging Face](https://huggingface.co/collections/01-ai/yi-15-2024-05-663f3ecab5f815a3eaca7ca8) • [🤖 ModelScope](https://www.modelscope.cn/organization/01ai) • [🟣 wisemodel](https://wisemodel.cn/organization/01.AI) |

</div>

# Benchmarks

- Chat models

Yi-1.5-34B-Chat is on par with or excels beyond larger models in most benchmarks.

Yi-1.5-9B-Chat is the top performer among similarly sized open-source models.

- Base models

Yi-1.5-34B is on par with or excels beyond larger models in some benchmarks.

Yi-1.5-9B is the top performer among similarly sized open-source models.

# Quick Start

For getting up and running with Yi-1.5 models quickly, see [README](https://github.com/01-ai/Yi-1.5).

|

ratchet-community/ratchet-moondream-2 | ratchet-community | 2024-06-21T03:34:42Z | 16,420 | 0 | null | [

"gguf",

"license:apache-2.0",

"region:us"

] | null | 2024-05-25T03:53:25Z | ---

license: apache-2.0

---

https://huggingface.co/vikhyatk/moondream2 adapted to work with the Hugging Face Ratchet framework for on-device inference. |

timm/edgenext_small.usi_in1k | timm | 2023-04-23T22:43:14Z | 16,390 | 0 | timm | [

"timm",

"pytorch",

"safetensors",

"image-classification",

"dataset:imagenet-1k",

"arxiv:2206.10589",

"arxiv:2204.03475",

"license:mit",

"region:us"

] | image-classification | 2023-04-23T22:43:00Z | ---

tags:

- image-classification

- timm

library_name: timm

license: mit

datasets:

- imagenet-1k

---

# Model card for edgenext_small.usi_in1k

An EdgeNeXt image classification model. Trained on ImageNet-1k by paper authors using distillation (`USI` as per `Solving ImageNet`).

## Model Details

- **Model Type:** Image classification / feature backbone

- **Model Stats:**

- Params (M): 5.6

- GMACs: 1.3

- Activations (M): 9.1

- Image size: train = 256 x 256, test = 320 x 320

- **Papers:**

- EdgeNeXt: Efficiently Amalgamated CNN-Transformer Architecture for Mobile Vision Applications: https://arxiv.org/abs/2206.10589

- Solving ImageNet: a Unified Scheme for Training any Backbone to Top Results: https://arxiv.org/abs/2204.03475

- **Dataset:** ImageNet-1k

- **Original:** https://github.com/mmaaz60/EdgeNeXt

## Model Usage

### Image Classification

```python

from urllib.request import urlopen

from PIL import Image

import timm

import torch  # needed for torch.topk below

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model('edgenext_small.usi_in1k', pretrained=True)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # unsqueeze single image into batch of 1

top5_probabilities, top5_class_indices = torch.topk(output.softmax(dim=1) * 100, k=5)

```
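
The last line of the snippet above turns raw logits into percentages and keeps the five most likely classes. As a rough illustration of that step only, here is a dependency-free sketch; the function names and the toy logits are made up for this example and are not part of `timm` or `torch`.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(probabilities, k):
    """Return (percentage, class_index) pairs for the k most likely classes."""
    ranked = sorted(enumerate(probabilities), key=lambda pair: pair[1], reverse=True)
    return [(p * 100, idx) for idx, p in ranked[:k]]


# Hypothetical logits for a 6-class toy problem (the real model emits 1000 values).
toy_logits = [0.1, 2.0, -1.0, 3.5, 0.0, 1.2]
top3 = top_k(softmax(toy_logits), k=3)
for pct, idx in top3:
    print(f"class {idx}: {pct:.1f}%")
```

With the real model, the indices would be looked up in the ImageNet-1k label list to get human-readable class names.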

### Feature Map Extraction

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model(

'edgenext_small.usi_in1k',

pretrained=True,

features_only=True,

)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # unsqueeze single image into batch of 1

for o in output:

    # print shape of each feature map in output

    # e.g.:

    # torch.Size([1, 48, 64, 64])

    # torch.Size([1, 96, 32, 32])

    # torch.Size([1, 160, 16, 16])

    # torch.Size([1, 304, 8, 8])

    print(o.shape)

```
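
The example shapes in the comments above are consistent with a 256 x 256 input being downsampled by factors of 4, 8, 16 and 32. The sketch below reproduces that arithmetic; the stride tuple is an assumption inferred from those printed shapes, not a value read from the model configuration.

```python
def feature_map_sizes(height, width, strides=(4, 8, 16, 32)):
    """Spatial size of each feature map, assuming the given downsampling strides."""
    return [(height // s, width // s) for s in strides]


# 256 x 256 is the training resolution listed in the model stats.
sizes = feature_map_sizes(256, 256)
for h, w in sizes:
    print(f"{h} x {w}")
```

If the transform resolved by `timm` uses a different input size, the spatial dimensions should scale accordingly while the channel counts stay the same.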

### Image Embeddings

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model(

'edgenext_small.usi_in1k',

pretrained=True,

num_classes=0, # remove classifier nn.Linear

)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # output is (batch_size, num_features) shaped tensor

# or equivalently (without needing to set num_classes=0)

output = model.forward_features(transforms(img).unsqueeze(0))

# output is unpooled, a (1, 304, 8, 8) shaped tensor

output = model.forward_head(output, pre_logits=True)

# output is a (1, num_features) shaped tensor

```
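
A common use of such `(1, num_features)` embeddings is comparing two images by cosine similarity. The following is a minimal sketch of that comparison on plain Python lists; the short vectors are invented stand-ins for real embeddings, and the helper name is hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy 4-dimensional "embeddings"; real ones come from the model output.
emb_a = [1.0, 0.0, 2.0, 0.0]
emb_b = [2.0, 0.0, 4.0, 0.0]   # same direction as emb_a
emb_c = [0.0, 3.0, 0.0, 1.0]   # orthogonal to emb_a

print(cosine_similarity(emb_a, emb_b))
print(cosine_similarity(emb_a, emb_c))
```

With tensors from the model, the row of the output tensor would first be converted to a list (or the same formula applied with tensor operations).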

## Citation

```bibtex

@inproceedings{Maaz2022EdgeNeXt,

title={EdgeNeXt: Efficiently Amalgamated CNN-Transformer Architecture for Mobile Vision Applications},

author={Muhammad Maaz and Abdelrahman Shaker and Hisham Cholakkal and Salman Khan and Syed Waqas Zamir and Rao Muhammad Anwer and Fahad Shahbaz Khan},

booktitle={International Workshop on Computational Aspects of Deep Learning at 17th European Conference on Computer Vision (CADL2022)},

year={2022},

organization={Springer}

}

```

```bibtex

@misc{https://doi.org/10.48550/arxiv.2204.03475,

doi = {10.48550/ARXIV.2204.03475},

url = {https://arxiv.org/abs/2204.03475},

author = {Ridnik, Tal and Lawen, Hussam and Ben-Baruch, Emanuel and Noy, Asaf},

keywords = {Computer Vision and Pattern Recognition (cs.CV), Machine Learning (cs.LG), FOS: Computer and information sciences, FOS: Computer and information sciences},

title = {Solving ImageNet: a Unified Scheme for Training any Backbone to Top Results},

publisher = {arXiv},

year = {2022},

}

```

|

lidiya/bart-large-xsum-samsum | lidiya | 2023-03-16T22:44:01Z | 16,385 | 36 | transformers | [

"transformers",

"pytorch",

"safetensors",

"bart",

"text2text-generation",

"seq2seq",

"summarization",

"en",

"dataset:samsum",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | summarization | 2022-03-02T23:29:05Z | ---

language: en

tags:

- bart

- seq2seq

- summarization

license: apache-2.0

datasets:

- samsum

widget:

- text: |

Hannah: Hey, do you have Betty's number?

Amanda: Lemme check

Amanda: Sorry, can't find it.

Amanda: Ask Larry

Amanda: He called her last time we were at the park together

Hannah: I don't know him well

Amanda: Don't be shy, he's very nice

Hannah: If you say so..

Hannah: I'd rather you texted him

Amanda: Just text him 🙂

Hannah: Urgh.. Alright

Hannah: Bye

Amanda: Bye bye

model-index:

- name: bart-large-xsum-samsum

results:

- task:

name: Abstractive Text Summarization

type: abstractive-text-summarization

dataset:

name: "SAMSum Corpus: A Human-annotated Dialogue Dataset for Abstractive Summarization"

type: samsum

metrics:

- name: Validation ROUGE-1

type: rouge-1

value: 54.3921

- name: Validation ROUGE-2

type: rouge-2

value: 29.8078

- name: Validation ROUGE-L

type: rouge-l

value: 45.1543

- name: Test ROUGE-1

type: rouge-1

value: 53.3059

- name: Test ROUGE-2

type: rouge-2

value: 28.355

- name: Test ROUGE-L

type: rouge-l

value: 44.0953

---

## `bart-large-xsum-samsum`

This model was obtained by fine-tuning `facebook/bart-large-xsum` on the [SAMSum](https://huggingface.co/datasets/samsum) dataset.

## Usage

```python

from transformers import pipeline

summarizer = pipeline("summarization", model="lidiya/bart-large-xsum-samsum")

conversation = '''Hannah: Hey, do you have Betty's number?

Amanda: Lemme check

Amanda: Sorry, can't find it.

Amanda: Ask Larry

Amanda: He called her last time we were at the park together

Hannah: I don't know him well

Amanda: Don't be shy, he's very nice

Hannah: If you say so..

Hannah: I'd rather you texted him

Amanda: Just text him 🙂

Hannah: Urgh.. Alright

Hannah: Bye

Amanda: Bye bye

'''

summarizer(conversation)

```
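
The summarization pipeline returns a list with one dictionary per input, each holding the text under the `summary_text` key. Below is a small sketch of pulling the strings out; the helper name is hypothetical and the sample output is a hand-written placeholder showing only the structure, not a real model result.

```python
def extract_summaries(pipeline_output):
    """Collect the summary strings from a summarization pipeline result."""
    return [item["summary_text"] for item in pipeline_output]


# Placeholder with the same structure as the pipeline's return value.
placeholder_output = [{"summary_text": "<summary of the conversation>"}]

summaries = extract_summaries(placeholder_output)
print(summaries[0])
```

In practice, `placeholder_output` would be replaced by the value returned from `summarizer(conversation)`.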

## Training procedure

- Colab notebook: https://colab.research.google.com/drive/1dul0Sg-TTMy9xZCJzmDRajXbyzDwtYx6?usp=sharing

## Results

| key | value |

| --- | ----- |

| eval_rouge1 | 54.3921 |

| eval_rouge2 | 29.8078 |

| eval_rougeL | 45.1543 |

| eval_rougeLsum | 49.942 |

| test_rouge1 | 53.3059 |

| test_rouge2 | 28.355 |

| test_rougeL | 44.0953 |

| test_rougeLsum | 48.9246 | |

RichardErkhov/arnavgrg_-_llama-2-7b-nf4-fp16-upscaled-gguf | RichardErkhov | 2024-06-30T22:40:52Z | 16,385 | 0 | null | [

"gguf",

"region:us"

] | null | 2024-06-30T20:38:52Z | Quantization made by Richard Erkhov.

[Github](https://github.com/RichardErkhov)

[Discord](https://discord.gg/pvy7H8DZMG)

[Request more models](https://github.com/RichardErkhov/quant_request)

llama-2-7b-nf4-fp16-upscaled - GGUF

- Model creator: https://huggingface.co/arnavgrg/

- Original model: https://huggingface.co/arnavgrg/llama-2-7b-nf4-fp16-upscaled/

| Name | Quant method | Size |

| ---- | ---- | ---- |

| [llama-2-7b-nf4-fp16-upscaled.Q2_K.gguf](https://huggingface.co/RichardErkhov/arnavgrg_-_llama-2-7b-nf4-fp16-upscaled-gguf/blob/main/llama-2-7b-nf4-fp16-upscaled.Q2_K.gguf) | Q2_K | 2.36GB |

| [llama-2-7b-nf4-fp16-upscaled.IQ3_XS.gguf](https://huggingface.co/RichardErkhov/arnavgrg_-_llama-2-7b-nf4-fp16-upscaled-gguf/blob/main/llama-2-7b-nf4-fp16-upscaled.IQ3_XS.gguf) | IQ3_XS | 2.6GB |

| [llama-2-7b-nf4-fp16-upscaled.IQ3_S.gguf](https://huggingface.co/RichardErkhov/arnavgrg_-_llama-2-7b-nf4-fp16-upscaled-gguf/blob/main/llama-2-7b-nf4-fp16-upscaled.IQ3_S.gguf) | IQ3_S | 2.75GB |

| [llama-2-7b-nf4-fp16-upscaled.Q3_K_S.gguf](https://huggingface.co/RichardErkhov/arnavgrg_-_llama-2-7b-nf4-fp16-upscaled-gguf/blob/main/llama-2-7b-nf4-fp16-upscaled.Q3_K_S.gguf) | Q3_K_S | 2.75GB |

| [llama-2-7b-nf4-fp16-upscaled.IQ3_M.gguf](https://huggingface.co/RichardErkhov/arnavgrg_-_llama-2-7b-nf4-fp16-upscaled-gguf/blob/main/llama-2-7b-nf4-fp16-upscaled.IQ3_M.gguf) | IQ3_M | 2.9GB |

| [llama-2-7b-nf4-fp16-upscaled.Q3_K.gguf](https://huggingface.co/RichardErkhov/arnavgrg_-_llama-2-7b-nf4-fp16-upscaled-gguf/blob/main/llama-2-7b-nf4-fp16-upscaled.Q3_K.gguf) | Q3_K | 3.07GB |

| [llama-2-7b-nf4-fp16-upscaled.Q3_K_M.gguf](https://huggingface.co/RichardErkhov/arnavgrg_-_llama-2-7b-nf4-fp16-upscaled-gguf/blob/main/llama-2-7b-nf4-fp16-upscaled.Q3_K_M.gguf) | Q3_K_M | 3.07GB |

| [llama-2-7b-nf4-fp16-upscaled.Q3_K_L.gguf](https://huggingface.co/RichardErkhov/arnavgrg_-_llama-2-7b-nf4-fp16-upscaled-gguf/blob/main/llama-2-7b-nf4-fp16-upscaled.Q3_K_L.gguf) | Q3_K_L | 3.35GB |

| [llama-2-7b-nf4-fp16-upscaled.IQ4_XS.gguf](https://huggingface.co/RichardErkhov/arnavgrg_-_llama-2-7b-nf4-fp16-upscaled-gguf/blob/main/llama-2-7b-nf4-fp16-upscaled.IQ4_XS.gguf) | IQ4_XS | 3.4GB |

| [llama-2-7b-nf4-fp16-upscaled.Q4_0.gguf](https://huggingface.co/RichardErkhov/arnavgrg_-_llama-2-7b-nf4-fp16-upscaled-gguf/blob/main/llama-2-7b-nf4-fp16-upscaled.Q4_0.gguf) | Q4_0 | 3.56GB |

| [llama-2-7b-nf4-fp16-upscaled.IQ4_NL.gguf](https://huggingface.co/RichardErkhov/arnavgrg_-_llama-2-7b-nf4-fp16-upscaled-gguf/blob/main/llama-2-7b-nf4-fp16-upscaled.IQ4_NL.gguf) | IQ4_NL | 3.58GB |

| [llama-2-7b-nf4-fp16-upscaled.Q4_K_S.gguf](https://huggingface.co/RichardErkhov/arnavgrg_-_llama-2-7b-nf4-fp16-upscaled-gguf/blob/main/llama-2-7b-nf4-fp16-upscaled.Q4_K_S.gguf) | Q4_K_S | 3.59GB |

| [llama-2-7b-nf4-fp16-upscaled.Q4_K.gguf](https://huggingface.co/RichardErkhov/arnavgrg_-_llama-2-7b-nf4-fp16-upscaled-gguf/blob/main/llama-2-7b-nf4-fp16-upscaled.Q4_K.gguf) | Q4_K | 3.8GB |

| [llama-2-7b-nf4-fp16-upscaled.Q4_K_M.gguf](https://huggingface.co/RichardErkhov/arnavgrg_-_llama-2-7b-nf4-fp16-upscaled-gguf/blob/main/llama-2-7b-nf4-fp16-upscaled.Q4_K_M.gguf) | Q4_K_M | 3.8GB |

| [llama-2-7b-nf4-fp16-upscaled.Q4_1.gguf](https://huggingface.co/RichardErkhov/arnavgrg_-_llama-2-7b-nf4-fp16-upscaled-gguf/blob/main/llama-2-7b-nf4-fp16-upscaled.Q4_1.gguf) | Q4_1 | 3.95GB |

| [llama-2-7b-nf4-fp16-upscaled.Q5_0.gguf](https://huggingface.co/RichardErkhov/arnavgrg_-_llama-2-7b-nf4-fp16-upscaled-gguf/blob/main/llama-2-7b-nf4-fp16-upscaled.Q5_0.gguf) | Q5_0 | 4.33GB |

| [llama-2-7b-nf4-fp16-upscaled.Q5_K_S.gguf](https://huggingface.co/RichardErkhov/arnavgrg_-_llama-2-7b-nf4-fp16-upscaled-gguf/blob/main/llama-2-7b-nf4-fp16-upscaled.Q5_K_S.gguf) | Q5_K_S | 4.33GB |

| [llama-2-7b-nf4-fp16-upscaled.Q5_K.gguf](https://huggingface.co/RichardErkhov/arnavgrg_-_llama-2-7b-nf4-fp16-upscaled-gguf/blob/main/llama-2-7b-nf4-fp16-upscaled.Q5_K.gguf) | Q5_K | 4.45GB |

| [llama-2-7b-nf4-fp16-upscaled.Q5_K_M.gguf](https://huggingface.co/RichardErkhov/arnavgrg_-_llama-2-7b-nf4-fp16-upscaled-gguf/blob/main/llama-2-7b-nf4-fp16-upscaled.Q5_K_M.gguf) | Q5_K_M | 4.45GB |

| [llama-2-7b-nf4-fp16-upscaled.Q5_1.gguf](https://huggingface.co/RichardErkhov/arnavgrg_-_llama-2-7b-nf4-fp16-upscaled-gguf/blob/main/llama-2-7b-nf4-fp16-upscaled.Q5_1.gguf) | Q5_1 | 4.72GB |

| [llama-2-7b-nf4-fp16-upscaled.Q6_K.gguf](https://huggingface.co/RichardErkhov/arnavgrg_-_llama-2-7b-nf4-fp16-upscaled-gguf/blob/main/llama-2-7b-nf4-fp16-upscaled.Q6_K.gguf) | Q6_K | 5.15GB |

| [llama-2-7b-nf4-fp16-upscaled.Q8_0.gguf](https://huggingface.co/RichardErkhov/arnavgrg_-_llama-2-7b-nf4-fp16-upscaled-gguf/blob/main/llama-2-7b-nf4-fp16-upscaled.Q8_0.gguf) | Q8_0 | 6.67GB |
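
When choosing among the files above, one simple heuristic is to take the largest quantization whose file fits a given size budget. The sketch below does that for a subset of the sizes listed in the table; the function name is hypothetical, and file size is only a rough proxy for memory use at inference time.

```python
# File sizes in GB, copied from a subset of the table rows above.
QUANT_SIZES_GB = {
    "Q2_K": 2.36,
    "Q3_K_M": 3.07,
    "Q4_K_M": 3.8,
    "Q5_K_M": 4.45,
    "Q6_K": 5.15,
    "Q8_0": 6.67,
}


def largest_quant_within(budget_gb, sizes=QUANT_SIZES_GB):
    """Return the name of the largest file that fits the budget, or None."""
    fitting = {name: size for name, size in sizes.items() if size <= budget_gb}
    if not fitting:
        return None
    return max(fitting, key=fitting.get)


print(largest_quant_within(4.0))
```

Larger files generally preserve more of the original weights' precision, so this picks the least aggressive quantization that still fits.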

Original model description:

---

license: apache-2.0

tags:

- text-generation-inference

---

This is an upscaled fp16 variant of the original Llama-2-7b base model by Meta after it has been loaded with nf4 4-bit quantization via bitsandbytes.

The main idea here is to upscale the linear4bit layers to fp16 so that the quantization/dequantization cost doesn't have to be paid for each forward pass at inference time.

_Note: The quantization operation to nf4 is not lossless, so the model weights for the linear layers are lossy, which means that this model will not work as well as the official base model._
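
To make the note about lossiness concrete, here is a toy round trip through 4-bit quantization. This is a simplified sketch that uses 16 evenly spaced levels with absmax scaling; it is not the actual NF4 scheme from bitsandbytes (which uses non-uniform levels and block-wise scaling), and the weights are invented.

```python
def quantize_4bit(weights):
    """Map each weight to one of 16 evenly spaced levels (codes 0..15)."""
    scale = max(abs(w) for w in weights) or 1.0
    codes = [round((w / scale + 1.0) / 2.0 * 15) for w in weights]
    return codes, scale


def dequantize_4bit(codes, scale):
    """Map 4-bit codes back to floating-point values."""
    return [(c / 15 * 2.0 - 1.0) * scale for c in codes]


weights = [0.82, -0.31, 0.05, -0.77, 0.40]
codes, scale = quantize_4bit(weights)
restored = dequantize_4bit(codes, scale)
errors = [abs(w - r) for w, r in zip(weights, restored)]

for w, r in zip(weights, restored):
    print(f"{w:+.4f} -> {r:+.4f}")
```

The restored values differ slightly from the originals, and storing them in fp16 afterwards does not recover the lost information; it only removes the need to dequantize on every forward pass.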

To use this model, you can just load it via `transformers` in fp16:

```python

import torch

from transformers import AutoModelForCausalLM

model = AutoModelForCausalLM.from_pretrained(

"arnavgrg/llama-2-7b-nf4-fp16-upscaled",

device_map="auto",

torch_dtype=torch.float16,

)

```

|

RichardErkhov/wons_-_mistral-7B-test-v0.3-gguf | RichardErkhov | 2024-06-30T11:31:40Z | 16,381 | 0 | null | [

"gguf",

"region:us"

] | null | 2024-06-30T08:38:59Z | Quantization made by Richard Erkhov.

[Github](https://github.com/RichardErkhov)

[Discord](https://discord.gg/pvy7H8DZMG)

[Request more models](https://github.com/RichardErkhov/quant_request)

mistral-7B-test-v0.3 - GGUF

- Model creator: https://huggingface.co/wons/

- Original model: https://huggingface.co/wons/mistral-7B-test-v0.3/

| Name | Quant method | Size |

| ---- | ---- | ---- |

| [mistral-7B-test-v0.3.Q2_K.gguf](https://huggingface.co/RichardErkhov/wons_-_mistral-7B-test-v0.3-gguf/blob/main/mistral-7B-test-v0.3.Q2_K.gguf) | Q2_K | 2.53GB |

| [mistral-7B-test-v0.3.IQ3_XS.gguf](https://huggingface.co/RichardErkhov/wons_-_mistral-7B-test-v0.3-gguf/blob/main/mistral-7B-test-v0.3.IQ3_XS.gguf) | IQ3_XS | 2.81GB |

| [mistral-7B-test-v0.3.IQ3_S.gguf](https://huggingface.co/RichardErkhov/wons_-_mistral-7B-test-v0.3-gguf/blob/main/mistral-7B-test-v0.3.IQ3_S.gguf) | IQ3_S | 2.96GB |

| [mistral-7B-test-v0.3.Q3_K_S.gguf](https://huggingface.co/RichardErkhov/wons_-_mistral-7B-test-v0.3-gguf/blob/main/mistral-7B-test-v0.3.Q3_K_S.gguf) | Q3_K_S | 2.95GB |

| [mistral-7B-test-v0.3.IQ3_M.gguf](https://huggingface.co/RichardErkhov/wons_-_mistral-7B-test-v0.3-gguf/blob/main/mistral-7B-test-v0.3.IQ3_M.gguf) | IQ3_M | 3.06GB |

| [mistral-7B-test-v0.3.Q3_K.gguf](https://huggingface.co/RichardErkhov/wons_-_mistral-7B-test-v0.3-gguf/blob/main/mistral-7B-test-v0.3.Q3_K.gguf) | Q3_K | 3.28GB |

| [mistral-7B-test-v0.3.Q3_K_M.gguf](https://huggingface.co/RichardErkhov/wons_-_mistral-7B-test-v0.3-gguf/blob/main/mistral-7B-test-v0.3.Q3_K_M.gguf) | Q3_K_M | 3.28GB |

| [mistral-7B-test-v0.3.Q3_K_L.gguf](https://huggingface.co/RichardErkhov/wons_-_mistral-7B-test-v0.3-gguf/blob/main/mistral-7B-test-v0.3.Q3_K_L.gguf) | Q3_K_L | 3.56GB |

| [mistral-7B-test-v0.3.IQ4_XS.gguf](https://huggingface.co/RichardErkhov/wons_-_mistral-7B-test-v0.3-gguf/blob/main/mistral-7B-test-v0.3.IQ4_XS.gguf) | IQ4_XS | 3.67GB |

| [mistral-7B-test-v0.3.Q4_0.gguf](https://huggingface.co/RichardErkhov/wons_-_mistral-7B-test-v0.3-gguf/blob/main/mistral-7B-test-v0.3.Q4_0.gguf) | Q4_0 | 3.83GB |

| [mistral-7B-test-v0.3.IQ4_NL.gguf](https://huggingface.co/RichardErkhov/wons_-_mistral-7B-test-v0.3-gguf/blob/main/mistral-7B-test-v0.3.IQ4_NL.gguf) | IQ4_NL | 3.87GB |

| [mistral-7B-test-v0.3.Q4_K_S.gguf](https://huggingface.co/RichardErkhov/wons_-_mistral-7B-test-v0.3-gguf/blob/main/mistral-7B-test-v0.3.Q4_K_S.gguf) | Q4_K_S | 3.86GB |

| [mistral-7B-test-v0.3.Q4_K.gguf](https://huggingface.co/RichardErkhov/wons_-_mistral-7B-test-v0.3-gguf/blob/main/mistral-7B-test-v0.3.Q4_K.gguf) | Q4_K | 4.07GB |

| [mistral-7B-test-v0.3.Q4_K_M.gguf](https://huggingface.co/RichardErkhov/wons_-_mistral-7B-test-v0.3-gguf/blob/main/mistral-7B-test-v0.3.Q4_K_M.gguf) | Q4_K_M | 4.07GB |

| [mistral-7B-test-v0.3.Q4_1.gguf](https://huggingface.co/RichardErkhov/wons_-_mistral-7B-test-v0.3-gguf/blob/main/mistral-7B-test-v0.3.Q4_1.gguf) | Q4_1 | 4.24GB |

| [mistral-7B-test-v0.3.Q5_0.gguf](https://huggingface.co/RichardErkhov/wons_-_mistral-7B-test-v0.3-gguf/blob/main/mistral-7B-test-v0.3.Q5_0.gguf) | Q5_0 | 4.65GB |

| [mistral-7B-test-v0.3.Q5_K_S.gguf](https://huggingface.co/RichardErkhov/wons_-_mistral-7B-test-v0.3-gguf/blob/main/mistral-7B-test-v0.3.Q5_K_S.gguf) | Q5_K_S | 4.65GB |

| [mistral-7B-test-v0.3.Q5_K.gguf](https://huggingface.co/RichardErkhov/wons_-_mistral-7B-test-v0.3-gguf/blob/main/mistral-7B-test-v0.3.Q5_K.gguf) | Q5_K | 4.78GB |

| [mistral-7B-test-v0.3.Q5_K_M.gguf](https://huggingface.co/RichardErkhov/wons_-_mistral-7B-test-v0.3-gguf/blob/main/mistral-7B-test-v0.3.Q5_K_M.gguf) | Q5_K_M | 4.78GB |

| [mistral-7B-test-v0.3.Q5_1.gguf](https://huggingface.co/RichardErkhov/wons_-_mistral-7B-test-v0.3-gguf/blob/main/mistral-7B-test-v0.3.Q5_1.gguf) | Q5_1 | 5.07GB |

| [mistral-7B-test-v0.3.Q6_K.gguf](https://huggingface.co/RichardErkhov/wons_-_mistral-7B-test-v0.3-gguf/blob/main/mistral-7B-test-v0.3.Q6_K.gguf) | Q6_K | 5.53GB |

| [mistral-7B-test-v0.3.Q8_0.gguf](https://huggingface.co/RichardErkhov/wons_-_mistral-7B-test-v0.3-gguf/blob/main/mistral-7B-test-v0.3.Q8_0.gguf) | Q8_0 | 7.17GB |

Original model description:

Entry not found

|

microsoft/Florence-2-base | microsoft | 2024-07-01T09:36:41Z | 16,380 | 94 | transformers | [

"transformers",

"pytorch",

"florence2",

"text-generation",

"vision",

"image-text-to-text",

"custom_code",

"arxiv:2311.06242",

"license:mit",

"autotrain_compatible",

"region:us"

] | image-text-to-text | 2024-06-15T00:57:24Z | ---

license: mit

license_link: https://huggingface.co/microsoft/Florence-2-base/resolve/main/LICENSE

pipeline_tag: image-text-to-text

tags:

- vision

---

# Florence-2: Advancing a Unified Representation for a Variety of Vision Tasks

## Model Summary

This Hub repository contains the Hugging Face `transformers` implementation of the Florence-2 model from Microsoft.

Florence-2 is an advanced vision foundation model that uses a prompt-based approach to handle a wide range of vision and vision-language tasks. Florence-2 can interpret simple text prompts to perform tasks like captioning, object detection, and segmentation. It leverages our FLD-5B dataset, containing 5.4 billion annotations across 126 million images, to master multi-task learning. The model's sequence-to-sequence architecture enables it to excel in both zero-shot and fine-tuned settings, proving to be a competitive vision foundation model.

Resources and Technical Documentation:

+ [Florence-2 technical report](https://arxiv.org/abs/2311.06242).

+ [Jupyter Notebook for inference and visualization of Florence-2-large model](https://huggingface.co/microsoft/Florence-2-large/blob/main/sample_inference.ipynb)

| Model | Model size | Model Description |

| ------- | ------------- | ------------- |

| Florence-2-base[[HF]](https://huggingface.co/microsoft/Florence-2-base) | 0.23B | Pretrained model with FLD-5B |

| Florence-2-large[[HF]](https://huggingface.co/microsoft/Florence-2-large) | 0.77B | Pretrained model with FLD-5B |

| Florence-2-base-ft[[HF]](https://huggingface.co/microsoft/Florence-2-base-ft) | 0.23B | Finetuned model on a collection of downstream tasks |

| Florence-2-large-ft[[HF]](https://huggingface.co/microsoft/Florence-2-large-ft) | 0.77B | Finetuned model on a collection of downstream tasks |

## How to Get Started with the Model

Use the code below to get started with the model.

```python

import requests

from PIL import Image

from transformers import AutoProcessor, AutoModelForCausalLM

model = AutoModelForCausalLM.from_pretrained("microsoft/Florence-2-base", trust_remote_code=True)

processor = AutoProcessor.from_pretrained("microsoft/Florence-2-base", trust_remote_code=True)

prompt = "<OD>"

url = "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/transformers/tasks/car.jpg?download=true"

image = Image.open(requests.get(url, stream=True).raw)

inputs = processor(text=prompt, images=image, return_tensors="pt")

generated_ids = model.generate(

input_ids=inputs["input_ids"],

pixel_values=inputs["pixel_values"],

max_new_tokens=1024,

do_sample=False,

num_beams=3,

)

generated_text = processor.batch_decode(generated_ids, skip_special_tokens=False)[0]

parsed_answer = processor.post_process_generation(generated_text, task="<OD>", image_size=(image.width, image.height))

print(parsed_answer)

```

## Tasks

This model can perform different tasks depending on the prompt.

First, let's define a function to run a prompt.

<details>

<summary> Click to expand </summary>

```python

import requests

from PIL import Image

from transformers import AutoProcessor, AutoModelForCausalLM

model = AutoModelForCausalLM.from_pretrained("microsoft/Florence-2-base", trust_remote_code=True)

processor = AutoProcessor.from_pretrained("microsoft/Florence-2-base", trust_remote_code=True)

url = "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/transformers/tasks/car.jpg?download=true"

image = Image.open(requests.get(url, stream=True).raw)

def run_example(task_prompt, text_input=None):

    # Build the prompt: the task token, optionally followed by extra text.

    if text_input is None:

        prompt = task_prompt

    else:

        prompt = task_prompt + text_input

    inputs = processor(text=prompt, images=image, return_tensors="pt")

    generated_ids = model.generate(

        input_ids=inputs["input_ids"],

        pixel_values=inputs["pixel_values"],

        max_new_tokens=1024,

        num_beams=3

    )

    generated_text = processor.batch_decode(generated_ids, skip_special_tokens=False)[0]

    parsed_answer = processor.post_process_generation(generated_text, task=task_prompt, image_size=(image.width, image.height))

    print(parsed_answer)

    # Return the parsed result so callers can assign it, e.g. results = run_example(...)

    return parsed_answer

```

</details>

Here are the tasks `Florence-2` can perform:

<details>

<summary> Click to expand </summary>

### Caption

```python

prompt = "<CAPTION>"

run_example(prompt)

```

### Detailed Caption

```python

prompt = "<DETAILED_CAPTION>"

run_example(prompt)

```

### More Detailed Caption

```python

prompt = "<MORE_DETAILED_CAPTION>"

run_example(prompt)

```

### Caption to Phrase Grounding

The caption to phrase grounding task requires an additional text input, i.e., the caption.

Caption to phrase grounding results format:

{'\<CAPTION_TO_PHRASE_GROUNDING>': {'bboxes': [[x1, y1, x2, y2], ...], 'labels': ['', '', ...]}}

```python

task_prompt = "<CAPTION_TO_PHRASE_GROUNDING>"

results = run_example(task_prompt, text_input="A green car parked in front of a yellow building.")

```

### Object Detection

OD results format:

{'\<OD>': {'bboxes': [[x1, y1, x2, y2], ...],

'labels': ['label1', 'label2', ...]} }

```python

prompt = "<OD>"

run_example(prompt)

```
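
Given the OD results format shown above, detections can be read by pairing each label with its box. The sketch below assumes that dictionary layout; the helper names and the sample values are invented for illustration and are not real model output.

```python
def pair_detections(parsed_answer, task="<OD>"):
    """Pair each label with its [x1, y1, x2, y2] box."""
    result = parsed_answer[task]
    return list(zip(result["labels"], result["bboxes"]))


def box_area(bbox):
    """Area in pixels of an [x1, y1, x2, y2] box."""
    x1, y1, x2, y2 = bbox
    return (x2 - x1) * (y2 - y1)


# Hand-written sample in the documented format.
sample = {
    "<OD>": {
        "bboxes": [[10.0, 20.0, 110.0, 220.0], [50.0, 60.0, 90.0, 100.0]],
        "labels": ["car", "wheel"],
    }
}

detections = pair_detections(sample)
for label, bbox in detections:
    print(label, bbox, box_area(bbox))
```

The same pairing should apply to other tasks that share the `bboxes`/`labels` layout, such as `<DENSE_REGION_CAPTION>`, by passing a different `task` key.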

### Dense Region Caption

Dense region caption results format:

{'\<DENSE_REGION_CAPTION>' : {'bboxes': [[x1, y1, x2, y2], ...],

'labels': ['label1', 'label2', ...]} }

```python

prompt = "<DENSE_REGION_CAPTION>"

run_example(prompt)

```

### Region proposal

Region proposal results format:

{'\<REGION_PROPOSAL>': {'bboxes': [[x1, y1, x2, y2], ...],

'labels': ['', '', ...]}}

```python

prompt = "<REGION_PROPOSAL>"

run_example(prompt)

```

### OCR

```python

prompt = "<OCR>"

run_example(prompt)

```

### OCR with Region

OCR with region output format:

{'\<OCR_WITH_REGION>': {'quad_boxes': [[x1, y1, x2, y2, x3, y3, x4, y4], ...], 'labels': ['text1', ...]}}

```python

prompt = "<OCR_WITH_REGION>"

run_example(prompt)

```
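
Each entry in `quad_boxes` lists four corner points as eight numbers. If an axis-aligned rectangle is easier to work with (for cropping, say), it can be derived by taking the extremes of the corner coordinates. This is a small sketch with a hypothetical helper name and an invented sample quad.

```python
def quad_to_bbox(quad):
    """Convert [x1, y1, x2, y2, x3, y3, x4, y4] to an enclosing [x_min, y_min, x_max, y_max]."""
    xs = quad[0::2]  # every even position is an x coordinate
    ys = quad[1::2]  # every odd position is a y coordinate
    return [min(xs), min(ys), max(xs), max(ys)]


# A slightly rotated text region, invented for illustration.
sample_quad = [10, 5, 60, 8, 58, 30, 9, 27]
bbox = quad_to_bbox(sample_quad)
print(bbox)
```

The enclosing rectangle is at least as large as the quadrilateral, so it may include some background around rotated text.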

For more detailed examples, please refer to the [notebook](https://huggingface.co/microsoft/Florence-2-large/blob/main/sample_inference.ipynb).

</details>

# Benchmarks

## Florence-2 Zero-shot performance

The following table presents the zero-shot performance of generalist vision foundation models on image captioning and object detection evaluation tasks. These models have not been exposed to the training data of the evaluation tasks during their training phase.

| Method | #params | COCO Cap. test CIDEr | NoCaps val CIDEr | TextCaps val CIDEr | COCO Det. val2017 mAP |

|--------|---------|----------------------|------------------|--------------------|-----------------------|

| Flamingo | 80B | 84.3 | - | - | - |

| Florence-2-base| 0.23B | 133.0 | 118.7 | 70.1 | 34.7 |

| Florence-2-large| 0.77B | 135.6 | 120.8 | 72.8 | 37.5 |

The following table continues the comparison with performance on other vision-language evaluation tasks.

| Method | Flickr30k test R@1 | Refcoco val Accuracy | Refcoco test-A Accuracy | Refcoco test-B Accuracy | Refcoco+ val Accuracy | Refcoco+ test-A Accuracy | Refcoco+ test-B Accuracy | Refcocog val Accuracy | Refcocog test Accuracy | Refcoco RES val mIoU |

|--------|----------------------|----------------------|-------------------------|-------------------------|-----------------------|--------------------------|--------------------------|-----------------------|------------------------|----------------------|

| Kosmos-2 | 78.7 | 52.3 | 57.4 | 47.3 | 45.5 | 50.7 | 42.2 | 60.6 | 61.7 | - |

| Florence-2-base | 83.6 | 53.9 | 58.4 | 49.7 | 51.5 | 56.4 | 47.9 | 66.3 | 65.1 | 34.6 |

| Florence-2-large | 84.4 | 56.3 | 61.6 | 51.4 | 53.6 | 57.9 | 49.9 | 68.0 | 67.0 | 35.8 |

## Florence-2 finetuned performance

We finetune Florence-2 models with a collection of downstream tasks, resulting in two generalist models, *Florence-2-base-ft* and *Florence-2-large-ft*, that can perform a wide range of downstream tasks.

The table below compares the performance of specialist and generalist models on various captioning and Visual Question Answering (VQA) tasks. Specialist models are fine-tuned specifically for each task, whereas generalist models are fine-tuned in a task-agnostic manner across all tasks. The symbol "▲" indicates the usage of external OCR as input.

| Method | # Params | COCO Caption Karpathy test CIDEr | NoCaps val CIDEr | TextCaps val CIDEr | VQAv2 test-dev Acc | TextVQA test-dev Acc | VizWiz VQA test-dev Acc |

|----------------|----------|-----------------------------------|------------------|--------------------|--------------------|----------------------|-------------------------|

| **Specialist Models** | | | | | | | |

| CoCa | 2.1B | 143.6 | 122.4 | - | 82.3 | - | - |

| BLIP-2 | 7.8B | 144.5 | 121.6 | - | 82.2 | - | - |

| GIT2 | 5.1B | 145.0 | 126.9 | 148.6 | 81.7 | 67.3 | 71.0 |

| Flamingo | 80B | 138.1 | - | - | 82.0 | 54.1 | 65.7 |

| PaLI | 17B | 149.1 | 127.0 | 160.0▲ | 84.3 | 58.8 / 73.1▲ | 71.6 / 74.4▲ |

| PaLI-X | 55B | 149.2 | 126.3 | 147.0 / 163.7▲ | 86.0 | 71.4 / 80.8▲ | 70.9 / 74.6▲ |

| **Generalist Models** | | | | | | | |

| Unified-IO | 2.9B | - | 100.0 | - | 77.9 | - | 57.4 |

| Florence-2-base-ft | 0.23B | 140.0 | 116.7 | 143.9 | 79.7 | 63.6 | 63.6 |

| Florence-2-large-ft | 0.77B | 143.3 | 124.9 | 151.1 | 81.7 | 73.5 | 72.6 |

| Method | # Params | COCO Det. val2017 mAP | Flickr30k test R@1 | RefCOCO val Accuracy | RefCOCO test-A Accuracy | RefCOCO test-B Accuracy | RefCOCO+ val Accuracy | RefCOCO+ test-A Accuracy | RefCOCO+ test-B Accuracy | RefCOCOg val Accuracy | RefCOCOg test Accuracy | RefCOCO RES val mIoU |

|----------------------|----------|-----------------------|--------------------|----------------------|-------------------------|-------------------------|------------------------|---------------------------|---------------------------|------------------------|-----------------------|------------------------|

| **Specialist Models** | | | | | | | | | | | | |

| SeqTR | - | - | - | 83.7 | 86.5 | 81.2 | 71.5 | 76.3 | 64.9 | 74.9 | 74.2 | - |

| PolyFormer | - | - | - | 90.4 | 92.9 | 87.2 | 85.0 | 89.8 | 78.0 | 85.8 | 85.9 | 76.9 |

| UNINEXT | 0.74B | 60.6 | - | 92.6 | 94.3 | 91.5 | 85.2 | 89.6 | 79.8 | 88.7 | 89.4 | - |

| Ferret | 13B | - | - | 89.5 | 92.4 | 84.4 | 82.8 | 88.1 | 75.2 | 85.8 | 86.3 | - |

| **Generalist Models** | | | | | | | | | | | | |

| UniTAB | - | - | - | 88.6 | 91.1 | 83.8 | 81.0 | 85.4 | 71.6 | 84.6 | 84.7 | - |

| Florence-2-base-ft | 0.23B | 41.4 | 84.0 | 92.6 | 94.8 | 91.5 | 86.8 | 91.7 | 82.2 | 89.8 | 82.2 | 78.0 |

| Florence-2-large-ft| 0.77B | 43.4 | 85.2 | 93.4 | 95.3 | 92.0 | 88.3 | 92.9 | 83.6 | 91.2 | 91.7 | 80.5 |

## BibTeX and citation info

```

@article{xiao2023florence,

title={Florence-2: Advancing a unified representation for a variety of vision tasks},

author={Xiao, Bin and Wu, Haiping and Xu, Weijian and Dai, Xiyang and Hu, Houdong and Lu, Yumao and Zeng, Michael and Liu, Ce and Yuan, Lu},

journal={arXiv preprint arXiv:2311.06242},

year={2023}

}

``` |

ControlNet-1-1-preview/control_v11p_sd15_lineart | ControlNet-1-1-preview | 2023-04-14T19:11:45Z | 16,377 | 22 | diffusers | [

"diffusers",

"safetensors",

"art",

"controlnet",

"stable-diffusion",

"arxiv:2302.05543",

"base_model:runwayml/stable-diffusion-v1-5",

"license:openrail",

"region:us"

] | null | 2023-04-13T09:18:01Z | ---

license: openrail

base_model: runwayml/stable-diffusion-v1-5

tags:

- art

- controlnet

- stable-diffusion

---

# Controlnet - v1.1 - *lineart Version*

**Controlnet v1.1** is the successor model of [Controlnet v1.0](https://huggingface.co/lllyasviel/ControlNet) and was released in [lllyasviel/ControlNet-v1-1](https://huggingface.co/lllyasviel/ControlNet-v1-1) by [Lvmin Zhang](https://huggingface.co/lllyasviel).

This checkpoint is a conversion of [the original checkpoint](https://huggingface.co/lllyasviel/ControlNet-v1-1/blob/main/control_v11p_sd15_lineart.pth) into `diffusers` format.

It can be used in combination with **Stable Diffusion**, such as [runwayml/stable-diffusion-v1-5](https://huggingface.co/runwayml/stable-diffusion-v1-5).

For more details, please also have a look at the [🧨 Diffusers docs](https://huggingface.co/docs/diffusers/api/pipelines/stable_diffusion/controlnet).

ControlNet is a neural network structure to control diffusion models by adding extra conditions.

This checkpoint corresponds to the ControlNet conditioned on **lineart images**.

## Model Details

- **Developed by:** Lvmin Zhang, Maneesh Agrawala

- **Model type:** Diffusion-based text-to-image generation model

- **Language(s):** English

- **License:** [The CreativeML OpenRAIL M license](https://huggingface.co/spaces/CompVis/stable-diffusion-license) is an [Open RAIL M license](https://www.licenses.ai/blog/2022/8/18/naming-convention-of-responsible-ai-licenses), adapted from the work that [BigScience](https://bigscience.huggingface.co/) and [the RAIL Initiative](https://www.licenses.ai/) are jointly carrying out in the area of responsible AI licensing. See also [the article about the BLOOM Open RAIL license](https://bigscience.huggingface.co/blog/the-bigscience-rail-license) on which our license is based.

- **Resources for more information:** [GitHub Repository](https://github.com/lllyasviel/ControlNet), [Paper](https://arxiv.org/abs/2302.05543).

- **Cite as:**

@misc{zhang2023adding,

title={Adding Conditional Control to Text-to-Image Diffusion Models},

author={Lvmin Zhang and Maneesh Agrawala},

year={2023},

eprint={2302.05543},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

## Introduction

Controlnet was proposed in [*Adding Conditional Control to Text-to-Image Diffusion Models*](https://arxiv.org/abs/2302.05543) by Lvmin Zhang and Maneesh Agrawala.

The abstract reads as follows:

*We present a neural network structure, ControlNet, to control pretrained large diffusion models to support additional input conditions.

The ControlNet learns task-specific conditions in an end-to-end way, and the learning is robust even when the training dataset is small (< 50k).

Moreover, training a ControlNet is as fast as fine-tuning a diffusion model, and the model can be trained on a personal devices.

Alternatively, if powerful computation clusters are available, the model can scale to large amounts (millions to billions) of data.

We report that large diffusion models like Stable Diffusion can be augmented with ControlNets to enable conditional inputs like edge maps, segmentation maps, keypoints, etc.

This may enrich the methods to control large diffusion models and further facilitate related applications.*

## Example

It is recommended to use this checkpoint with [Stable Diffusion v1-5](https://huggingface.co/runwayml/stable-diffusion-v1-5), since it was trained on that model.

Experimentally, the checkpoint can also be used with other diffusion models, such as DreamBooth fine-tuned versions of Stable Diffusion.

**Note**: If you want to process an image to create the auxiliary conditioning, external dependencies are required as shown below:

1. Install https://github.com/patrickvonplaten/controlnet_aux

```sh

$ pip install controlnet_aux==0.3.0

```

2. Install `diffusers` and related packages:

```sh
$ pip install diffusers transformers accelerate
```

3. Run the code:

```python

from pathlib import Path

import torch
from controlnet_aux import LineartDetector
from diffusers import (
    ControlNetModel,
    StableDiffusionControlNetPipeline,
    UniPCMultistepScheduler,
)
from diffusers.utils import load_image

checkpoint = "ControlNet-1-1-preview/control_v11p_sd15_lineart"

# Create the output directory up front so the save calls below do not fail.
Path("images").mkdir(exist_ok=True)

# Load the input image and resize it to the resolution used for generation.
image = load_image(
    "https://huggingface.co/ControlNet-1-1-preview/control_v11p_sd15_lineart/resolve/main/images/input.png"
)
image = image.resize((512, 512))

prompt = "michael jackson concert"

# Extract the lineart conditioning image from the input image.
processor = LineartDetector.from_pretrained("lllyasviel/Annotators")
control_image = processor(image)
control_image.save("./images/control.png")

# Load the ControlNet and attach it to the Stable Diffusion v1-5 pipeline.
controlnet = ControlNetModel.from_pretrained(checkpoint, torch_dtype=torch.float16)
pipe = StableDiffusionControlNetPipeline.from_pretrained(
    "runwayml/stable-diffusion-v1-5", controlnet=controlnet, torch_dtype=torch.float16
)

pipe.scheduler = UniPCMultistepScheduler.from_config(pipe.scheduler.config)
# Offload idle submodules to the CPU to reduce GPU memory use (needs `accelerate`).
pipe.enable_model_cpu_offload()

# Fix the seed so the result is reproducible.
generator = torch.manual_seed(0)
image = pipe(prompt, num_inference_steps=30, generator=generator, image=control_image).images[0]

image.save("images/image_out.png")

```
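The example above resizes the input to a fixed 512×512, which distorts images that are not square. As a rough sketch of one alternative, the helper below computes an aspect-preserving target size whose sides are multiples of 8, which the Stable Diffusion v1-5 pipeline expects for its height and width. The function name `fit_control_size` and its `target` and `multiple` parameters are hypothetical and not part of `diffusers` or `controlnet_aux`.

```python
from typing import Tuple


def fit_control_size(
    width: int, height: int, target: int = 512, multiple: int = 8
) -> Tuple[int, int]:
    """Scale (width, height) so the shorter side is about `target`.

    Both sides are rounded to the nearest multiple of `multiple`.
    Hypothetical helper for illustration only.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    scale = target / min(width, height)

    def snap(value: int) -> int:
        # Round the scaled side to the nearest multiple, never below one multiple.
        return max(multiple, int(round(value * scale / multiple)) * multiple)

    return snap(width), snap(height)


print(fit_control_size(1024, 768))
```

With a PIL image, this could replace the fixed resize as `image = image.resize(fit_control_size(*image.size))`, since `Image.size` and `Image.resize` both use `(width, height)` order. Larger outputs need more GPU memory, so the 512×512 resize remains the simpler default.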

## Other released checkpoints v1-1

The authors released 14 different checkpoints, each trained with [Stable Diffusion v1-5](https://huggingface.co/runwayml/stable-diffusion-v1-5) on a different type of conditioning:

| Model Name | Control Image Overview| Control Image Example | Generated Image Example |

|---|---|---|---|

TODO

### Training

TODO

### Blog post

For more information, please also have a look at the [Diffusers ControlNet Blog Post](https://huggingface.co/blog/controlnet).

|

alirezamsh/quip-512-mocha | alirezamsh | 2024-03-21T11:22:19Z | 16,377 | 4 | transformers | [

"transformers",

"pytorch",

"safetensors",

"roberta",

"text-classification",

"en",

"dataset:mocha",

"license:bsd-3-clause",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2023-06-01T12:09:39Z | ---

license: bsd-3-clause

datasets:

- mocha

language:

- en

---

# Answer Overlap Module of QAFactEval Metric

This is the span scorer module used in the [RQUGE paper](https://aclanthology.org/2023.findings-acl.428/) to evaluate the questions generated in the question generation task.

The model was originally used in [QAFactEval](https://aclanthology.org/2022.naacl-main.187/) to compute the semantic similarity between a generated answer span and the reference answer, given the context and question, in the question answering task.

It outputs a 1-5 answer overlap score. The scorer is trained on the MOCHA dataset (initialized from [Jia et al. (2021)](https://aclanthology.org/2020.emnlp-main.528/)), which consists of 40k crowdsourced judgments on QA model outputs.

The input to the model is defined as follows (the script in the next section writes the separators as `<q>`, `<r>`, and `<c>`):

```

[CLS] question [q] gold answer [r] pred answer [c] context

```
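As a minimal sketch of how this input string can be assembled, the helper below joins the four fields in the order given above. It assumes the `<q>`, `<r>`, and `<c>` separator spelling used in this card's own script and leaves the leading classification token to the tokenizer. The function name `build_scorer_input` is hypothetical and not part of any library.

```python
def build_scorer_input(
    question: str, gold_answer: str, pred_answer: str, context: str
) -> str:
    """Join the fields in the order: question, gold answer, predicted answer, context.

    Hypothetical helper; the separators follow the script in this card.
    """
    return f"{question} <q> {gold_answer} <r> {pred_answer} <c> {context}"


example = build_scorer_input(
    question="Where was the conference held?",
    gold_answer="Seattle",
    pred_answer="in Seattle",
    context="The conference was held in Seattle in July 2022.",
)
print(example)
```

Keeping the formatting in one function makes it harder to swap the gold and predicted answers by accident when scoring many examples.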

# Generation

You can use the following script to get the semantic similarity of the predicted answer given the gold answer, context, and question.

```python
import torch
from transformers import AutoModelForSequenceClassification, AutoTokenizer

sp_scorer = AutoModelForSequenceClassification.from_pretrained('alirezamsh/quip-512-mocha')
tokenizer_sp = AutoTokenizer.from_pretrained('alirezamsh/quip-512-mocha')
sp_scorer.eval()

# Fill in the four fields for the example to score.
pred_answer = ""
gold_answer = ""
question = ""
context = ""

# Join the fields with the <q>, <r>, and <c> separators.
input_sp = f"{question} <q> {gold_answer} <r> {pred_answer} <c> {context}"

inputs = tokenizer_sp(
    input_sp, max_length=512, truncation=True, padding="max_length", return_tensors="pt"
)

# Inference only, so gradient tracking is not needed.
with torch.no_grad():
    outputs = sp_scorer(input_ids=inputs["input_ids"], attention_mask=inputs["attention_mask"])

# The raw model output is available in `outputs.logits`.
print(outputs)
```

# Citations

```

@inproceedings{fabbri-etal-2022-qafacteval,

title = "{QAF}act{E}val: Improved {QA}-Based Factual Consistency Evaluation for Summarization",

author = "Fabbri, Alexander and

Wu, Chien-Sheng and

Liu, Wenhao and

Xiong, Caiming",

booktitle = "Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies",

month = jul,

year = "2022",

address = "Seattle, United States",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2022.naacl-main.187",