index

int64 0

0

| repo_id

stringclasses 179

values | file_path

stringlengths 26

186

| content

stringlengths 1

2.1M

| __index_level_0__

int64 0

9

|

|---|---|---|---|---|

0 | hf_public_repos/blog | hf_public_repos/blog/zh/dynamic_speculation_lookahead.md | ---

title: "更快的辅助生成: 动态推测"

thumbnail: /blog/assets/optimum_intel/intel_thumbnail.png

authors:

- user: jmamou

guest: true

org: Intel

- user: orenpereg

guest: true

org: Intel

- user: joaogante

- user: lewtun

- user: danielkorat

guest: true

org: Intel

- user: Nadav-Timor

guest: true

org: weizmannscience

- user: moshew

guest: true

org: Intel

translators:

- user: Zipxuan

- user: zhongdongy

proofreader: true

---

⭐ 在这篇博客文章中,我们将探讨 _动态推测解码_ ——这是由英特尔实验室和 Hugging Face 开发的一种新方法,可以加速文本生成高达 2.7 倍,具体取决于任务。从 [Transformers🤗](https://github.com/huggingface/transformers) 发布的版本 [4.45.0](https://github.com/huggingface/transformers/releases/tag/v4.45.0) 开始,这种方法是辅助生成的默认模式⭐

## 推测解码

[推测解码](https://arxiv.org/abs/2211.17192) 技术十分流行,其用于加速大型语言模型的推理过程,与此同时保持其准确性。如下图所示,推测解码通过将生成过程分为两个阶段来工作。在第一阶段,一个快速但准确性较低的 _草稿_ 模型 (Draft,也称为助手) 自回归地生成一系列标记。在第二阶段,一个大型但更准确的 _目标_ 模型 (Target) 对生成的草稿标记进行并行验证。这个过程允许目标模型在单个前向传递中生成多个标记,从而加速自回归解码。推测解码的成功在很大程度上取决于 _推测前瞻_ (Speculative Lookahead,下文用 SL 表示),即草稿模型在每次迭代中生成的标记数量。在实践中,SL 要么是一个静态值,要么基于启发式方法,这两者都不是在推理过程中发挥最大性能的最优选择。

<p align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/dynamic_speculation_lookahead/spec_dec_diagram.png" width="250"><br>

<em>推测解码的单次迭代</em>

</figure>

## 动态推测解码

[Transformers🤗](https://github.com/huggingface/transformers) 库提供了两种不同的方法来确定在推理过程中调整草稿 (助手) 标记数量的计划。基于 [Leviathan 等人](https://arxiv.org/pdf/2211.17192) 的直接方法使用推测前瞻的静态值,并涉及在每个推测迭代中生成恒定数量的候选标记。另一种 [基于启发式方法的方法](https://huggingface.co/blog/assisted-generation) 根据当前迭代的接受率调整下一次迭代的候选标记数量。如果所有推测标记都是正确的,则候选标记的数量增加; 否则,数量减少。

我们预计,通过增强优化策略来管理生成的草稿标记数量,可以进一步减少延迟。为了测试这个论点,我们利用一个预测器来确定每个推测迭代的最佳推测前瞻值 (SL)。该预测器利用草稿模型自回归的生成标记,直到草稿模型和目标模型之间的预测标记出现不一致。该过程在每个推测迭代中重复进行,最终确定每次迭代接受的草稿标记的最佳 (最大) 数量。草稿/目标标记不匹配是通过在零温度下 Leviathan 等人提出的拒绝抽样算法 (rejection sampling algorithm) 来识别的。该预测器通过在每一步生成最大数量的有效草稿标记,并最小化对草稿和目标模型的调用次数,实现了推测解码的全部潜力。我们称使用该预测器得到 SL 值的推测解码过程为预知 (orcale) 的推测解码。

下面的左图展示了来自 [MBPP](https://huggingface.co/datasets/google-research-datasets/mbpp) 数据集的代码生成示例中的预知和静态推测前瞻值在推测迭代中的变化。可以观察到预知的 SL 值 (橙色条) 存在很高的变化。

静态 SL 值 (蓝色条) 中,生成的草稿标记数量固定为 5,执行了 38 次目标前向传播和 192 次草稿前向传播,而预知的 SL 值只执行了 27 次目标前向传播和 129 次草稿前向传播 - 减少了很多。右图展示了整个 [Alpaca](https://huggingface.co/datasets/tatsu-lab/alpaca) 数据集中的预知和静态推测前瞻值。

<p align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/dynamic_speculation_lookahead/oracle_K_2.png" style="width: 400px; height: auto;"><br>

<em>在 MBPP 的一个例子上的预知和静态推测前瞻值 (SL)。</em>

</p>

<p align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/dynamic_speculation_lookahead/Alpaca.png" style="width: 400px; height: auto;"><br>

<em>在整个 Alpaca 数据集上平均的预知 SL 值。</em>

上面的两个图表展示了预知推测前瞻值的多变性,这说明静态的推测解码可能使次优的。

为了更接近预知的推测解码并获得额外的加速,我们开发了一种简单的方法来在每次迭代中动态调整推测前瞻值。在生成每个草稿令牌后,我们确定草稿模型是否应继续生成下一个令牌或切换到目标模型进行验证。这个决定基于草稿模型对其预测的信心,通过 logits 的 softmax 估计。如果草稿模型对当前令牌预测的信心低于预定义的阈值,即 `assistant_confidence_threshold` ,它将在该迭代中停止令牌生成过程,即使尚未达到最大推测令牌数 `num_assistant_tokens` 。一旦停止,当前迭代中生成的草稿令牌将被发送到目标模型进行验证。

## 基准测试

我们在一系列任务和模型组合中对动态方法与启发式方法进行了基准测试。动态方法在所有测试中表现出更好的性能。

值得注意的是,使用动态方法将 `Llama3.2-1B` 作为 `Llama3.1-8B` 的助手时,我们观察到速度提升高达 1.52 倍,而使用相同设置的启发式方法则没有显著的速度提升。另一个观察结果是, `codegen-6B-mono` 在使用启发式方法时表现出速度下降,而使用动态方法则表现出速度提升。

| 目标模型 | 草稿模型 | 任务类型 | 加速比 - 启发式策略 | 加速比 - 动态策略 |

|----------------------|---------------------|---------------------------|---------------------------|---------------------------|

| `facebook/opt-6.7b` | `facebook/opt-125m` | summarization | 1.82x | **2.71x** |

| `facebook/opt-6.7b` | `facebook/opt-125m` | open-ended generation | 1.23x | **1.59x** |

| `Salesforce/codegen-6B-mono` | `Salesforce/codegen-350M-mono` | code generation (python) | 0.89x | **1.09x** |

| `google/flan-t5-xl` | `google/flan-t5-small` | summarization | 1.18x | **1.31x** |

| `meta-llama/Llama-3.1-8B` | `meta-llama/Llama-3.2-1B` | summarization | 1.00x | **1.52x** |

| `meta-llama/Llama-3.1-8B` | `meta-llama/Llama-3.2-1B` | open-ended generation | 1.00x | **1.18x** |

| `meta-llama/Llama-3.1-8B` | `meta-llama/Llama-3.2-1B` | code generation (python) | 1.09x | **1.15x** |

- 表格中的结果反映了贪婪解码 (temperature = 0)。在使用采样 (temperature > 0) 时也观察到了类似的趋势。

- 所有测试均在 RTX 4090 上进行。

- 我们的基准测试是公开的,允许任何人评估进一步的改进: https://github.com/gante/huggingface-demos/tree/main/experiments/faster_generation

## 代码

动态推测已经整合到 Hugging Face Transformers 库的 4.45.0 版本中,并且现在作为辅助解码的默认操作模式。要使用带有动态推测的辅助生成,无需进行任何代码更改,只需像平常一样执行代码即可:

```python

from transformers import AutoModelForCausalLM, AutoTokenizer

import torch

prompt = "Alice and Bob"

checkpoint = "EleutherAI/pythia-1.4b-deduped"

assistant_checkpoint = "EleutherAI/pythia-160m-deduped"

device = "cuda" if torch.cuda.is_available() else "cpu"

tokenizer = AutoTokenizer.from_pretrained(checkpoint)

inputs = tokenizer(prompt, return_tensors="pt").to(device)

model = AutoModelForCausalLM.from_pretrained(checkpoint).to(device)

assistant_model = AutoModelForCausalLM.from_pretrained(assistant_checkpoint).to(device)

outputs = model.generate(**inputs, assistant_model=assistant_model)

```

默认的动态推测前瞻的参数反应了最优的值,但是可以使用下面的代码进行调整来在特定模型和数据上获得更好的性能:

```python

# confidence threshold

assistant_model.generation_config.assistant_confidence_threshold=0.4

# 'constant' means that num_assistant_tokens stays unchanged during generation

assistant_model.generation_config.num_assistant_tokens_schedule='constant'

# the maximum number of tokens generated by the assistant model.

# after 20 tokens the draft halts even if the confidence is above the threshold

assistant_model.generation_config.num_assistant_tokens=20

```

要恢复到 **启发式** 或 **静态** 方法 (如 [Leviathan 等人](https://arxiv.org/pdf/2211.17192) 中所述),只需分别将 `num_assistant_tokens_schedule` 设置为 `'heuristic'` 或 `'constant'` ,将 `assistant_confidence_threshold=0` 和 `num_assistant_tokens=5` 设置如下:

```python

# Use 'heuristic' or 'constant' or 'dynamic'

assistant_model.generation_config.num_assistant_tokens_schedule='heuristic'

assistant_model.generation_config.assistant_confidence_threshold=0

assistant_model.generation_config.num_assistant_tokens=5

```

## 接下来是什么?

我们介绍了一种更快的辅助生成策略,名为动态推测解码,它优于启发式方法以及固定数量候选标记的方法。

在即将发布的博客文章中,我们将展示一种新的辅助生成方法: 将任何目标模型与任何助手模型结合起来!这将为在 Hugging Face Hub 上加速无法获得足够小的助手变体的无数模型打开大门。例如, `Phi 3` 、 `Gemma 2` 、 `CodeLlama` 等等都将有资格进行推测解码。敬请关注!

## 参考资料

- [Dynamic Speculation Lookahead Accelerates Speculative Decoding of Large Language Models](https://arxiv.org/abs/2405.04304)。

> 在这篇论文中,我们介绍了 DISCO,一种动态推测前瞻优化方法,利用分类器决定草稿模型是否应该继续生成下一个标记,还是暂停,并切换到目标模型进行验证,而不是仅仅使用对预测概率的简单阈值。

- [Assisted Generation: a new direction toward low-latency text generation](https://huggingface.co/blog/assisted-generation)

- [Fast Inference from Transformers via Speculative Decoding](https://arxiv.org/pdf/2211.17192) | 0 |

0 | hf_public_repos/blog | hf_public_repos/blog/zh/daily-papers.md | ---

title: "Hugging Face 论文平台 Daily Papers 功能全解析"

thumbnail: /blog/assets/daily-papers/thumbnail.png

authors:

- user: AdinaY

---

# Hugging Face 论文平台 Daily Papers 功能全解析

在快速发展的研究领域,保持对最新进展的关注至关重要。为了帮助开发者和研究人员跟踪 AI 领域的前沿动态,Hugging Face 推出了 [Daily Papers](https://huggingface.co/papers) 页面。自发布以来,Daily Papers 已展示了由 [AK](https://huggingface.co/akhaliq) 和社区研究人员精心挑选的高质量研究。在过去一年里,已有超过 3700 篇论文被发布,页面订阅用户也增长至超过 1.2 万!

然而,许多人可能还不了解 Daily Papers 页面的全部功能。本文将介绍一些论文页面的隐藏功能,帮助你充分利用这个平台。

## 📑 认领论文

在 Daily Papers 页面中,每篇论文标题下方会有作者名单。如果你是其中的一位作者,并且拥有 Hugging Face 账号,即可以通过点击 [认领论文](https://huggingface.co/docs/hub/paper-pages#claiming-authorship-to-a-paper) 一键认领论文!认领后,该论文将自动关联到你的 Hugging Face 账户,这有助于在社区中建立个人品牌并提高工作的影响力。

这一功能使得社区成员了解你的研究及背景,创造更多合作和互动的机会。

## ⏫ 提交论文

论文提交功能向所有已认领论文的用户开放。用户不仅限于提交自己的作品,也可以分享其他有益于社区的有趣研究论文。

这有助于 Hugging Face Papers 维持一个由社区策划、持续更新的 AI 研究文库!

## 💬 与作者零距离交流

每篇论文下都有讨论区,用户可以在这里留言并与作者进行直接对话。通过 @ 作者的用户名,就可以及时向作者提问或讨论研究内容,并及时获得作者的反馈。

这一功能促进了互动,旨在将整个社区的研究人员聚集在一起。无论是初学者还是专家都可以在这里畅所欲言,使全球 AI 社区更加紧密和包容。

无论你是提出问题还是分享建设性意见,这都为有意义的对话打开了大门,甚至可能激发出新的想法或合作。

## 🔗 一页汇总全部内容

在每篇论文页面的右侧,可以找到与论文相关的[资源](https://huggingface.co/docs/hub/paper-pages#linking-a-paper-to-a-model-dataset-or-space),例如模型、数据集、演示和其他有用的集合。

作者可以通过将论文的 arXiv URL 添加到他们资源的 README.md 文件中,轻松将他们的模型或数据集与论文关联起来。此功能允许作者展示他们的工作,并帮助用户在一个页面上可以获取所有相关信息。

## 🗳 点赞支持

你可以通过点击页面右上角的点赞按钮来支持该论文,这样做可以帮助将论文推向社区并支持作者的工作。点赞功能能够突出有影响力和创新的研究,帮助更多人发现并关注优秀的论文。

对于作者来说,每个点赞都是对其努力的认可,也是他们继续进行高质量研究的动力源泉。

## 🙋 推荐类似论文

在评论区中输入 @librarian-bot,系统将自动推荐相关论文。对于那些想深入研究某个主题或探索类似想法的用户来说,这个功能非常有用。就像拥有一个 AI 驱动的个人研究助理!

## 🔠 多语言评论和翻译

在 Hugging Face,我们重视多样性,这也体现在语言使用方面。在 Daily Papers 页面,用户可以用任何语言发表评论,我们内置的翻译功能会确保所有人都能理解并参与讨论。

无论您是提供反馈、讨论问题还是想要和社区或作者进行交流,这一功能都有助于打破语言障碍,使全球合作变得更加容易。

## ✅ 订阅功能

你可以通过点击页面顶部的“订阅”按钮来订阅 Daily Papers。订阅后,将每天(周末除外)收到最新论文的更新,它会直接发送到你 Hugging Face 注册邮箱📩。

此功能使您能够一目了然地浏览最新的论文标题,并可以直接点击进入感兴趣的论文页面。

## 💡 与 arXiv 的互动功能

Paper Pages 和 arXiv 之间还有一些有趣的集成功能。例如,你可以轻松查看 arXiv 上的论文是否已经被 Hugging Face 的 Daily Papers 页面展示。如果在页面上看到熟悉的表情符号 🤗,点击它就可以直接跳转到 Daily Papers 上的论文页面,探索上述所有功能。

要使用 arXiv 到 HF Paper Pages 的功能,需要安装一个 Chrome 扩展程序 👉:https://chromewebstore.google.com/detail/arxiv-to-hf/icfbnjkijgggnhmlikeppnoehoalpcpp。

在 arXiv 上,你还可以查看某篇论文是否在 Hugging Face Spaces 上托管了 demo 演示。如果作者添加了链接,你可以点击链接直接跳转到 Hugging Face Space 尝试 demo!

我们希望这份指南能帮助你充分利用 Hugging Face 上的 [Daily Papers](https://huggingface.co/docs/hub/paper-pages) 页面。通过利用这些功能,你可以时刻关注最新的研究成果,与作者互动,并为不断发展的 AI 社区做出贡献。无论你是研究人员、开发者还是初学者,希望 Daily Papers 能帮助你紧密联结全球顶尖的 AI 研究前沿!

| 1 |

0 | hf_public_repos/blog | hf_public_repos/blog/zh/langchain.md | ---

title: "Hugging Face x LangChain:全新 LangChain 合作伙伴包"

thumbnail: /blog/assets/langchain_huggingface/thumbnail.png

authors:

- user: jofthomas

- user: kkondratenko

guest: true

- user: efriis

guest: true

org: langchain-ai

translators:

- user: MatrixYao

- user: zhongdongy

proofreader: true

---

# Hugging Face x LangChain: 全新 LangChain 合作伙伴包

我们很高兴官宣发布 **`langchain_huggingface`**,这是一个由 Hugging Face 和 LangChain 共同维护的 LangChain 合作伙伴包。这个新的 Python 包旨在将 Hugging Face 最新功能引入 LangChain 并保持同步。

# 源自社区,服务社区

目前,LangChain 中所有与 Hugging Face 相关的类都是由社区贡献的。虽然我们以此为基础蓬勃发展,但随着时间的推移,其中一些类在设计时由于缺乏来自 Hugging Face 的内部视角而在后期被废弃。

通过 Langchain 合作伙伴包这个方式,我们的目标是缩短将 Hugging Face 生态系统中的新功能带给 LangChain 用户所需的时间。

**`langchain-huggingface`** 与 LangChain 无缝集成,为在 LangChain 生态系统中使用 Hugging Face 模型提供了一种可用且高效的方法。这种伙伴关系不仅仅涉及到技术贡献,还展示了双方对维护和不断改进这一集成的共同承诺。

## **起步**

**`langchain-huggingface`** 的起步非常简单。以下是安装该 [软件包](https://github.com/langchain-ai/langchain/tree/master/libs/partners/huggingface) 的方法:

```python

pip install langchain-huggingface

```

现在,包已经安装完毕,我们来看看里面有什么吧!

## LLM 文本生成

### HuggingFacePipeline

`transformers` 中的 [Pipeline](https://huggingface.co/docs/transformers/main_classes/pipelines) 类是 Hugging Face 工具箱中最通用的工具。LangChain 的设计主要是面向 RAG 和 Agent 应用场景,因此,在 Langchain 中流水线被简化为下面几个以文本为中心的任务: `文本生成` 、 `文生文` 、 `摘要` 、 `翻译` 等。

用户可以使用 `from_model_id` 方法直接加载模型:

```python

from langchain_huggingface import HuggingFacePipeline

llm = HuggingFacePipeline.from_model_id(

model_id="microsoft/Phi-3-mini-4k-instruct",

task="text-generation",

pipeline_kwargs={

"max_new_tokens": 100,

"top_k": 50,

"temperature": 0.1,

},

)

llm.invoke("Hugging Face is")

```

也可以自定义流水线,再传给 `HuggingFacePipeline` 类:

```python

from transformers import AutoModelForCausalLM, AutoTokenizer,pipeline

model_id = "microsoft/Phi-3-mini-4k-instruct"

tokenizer = AutoTokenizer.from_pretrained(model_id)

model = AutoModelForCausalLM.from_pretrained(

model_id,

load_in_4bit=True,

#attn_implementation="flash_attention_2", # if you have an ampere GPU

)

pipe = pipeline("text-generation", model=model, tokenizer=tokenizer, max_new_tokens=100, top_k=50, temperature=0.1)

llm = HuggingFacePipeline(pipeline=pipe)

llm.invoke("Hugging Face is")

```

使用 `HuggingFacePipeline` 时,模型是加载至本机并在本机运行的,因此你可能会受到本机可用资源的限制。

### HuggingFaceEndpoint

该类也有两种方法。你可以使用 `repo_id` 参数指定模型。也可以使用 `endpoint_url` 指定服务终端,这些终端使用 [无服务器 API](https://huggingface.co/inference-api/serverless),这对于有 Hugging Face [专业帐户](https://huggingface.co/subscribe/pro) 或 [企业 hub](https://huggingface.co/enterprise) 的用户大有好处。普通用户也可以通过在代码环境中设置自己的 HF 令牌从而在免费请求数配额内使用终端。

```python

from langchain_huggingface import HuggingFaceEndpoint

llm = HuggingFaceEndpoint(

repo_id="meta-llama/Meta-Llama-3-8B-Instruct",

task="text-generation",

max_new_tokens=100,

do_sample=False,

)

llm.invoke("Hugging Face is")

```

```python

llm = HuggingFaceEndpoint(

endpoint_url="<endpoint_url>",

task="text-generation",

max_new_tokens=1024,

do_sample=False,

)

llm.invoke("Hugging Face is")

```

该类在底层实现时使用了 [InferenceClient](https://huggingface.co/docs/huggingface_hub/en/package_reference/inference_client),因此能够为已部署的 TGI 实例提供面向各种用例的无服务器 API。

### ChatHuggingFace

每个模型都有最适合自己的特殊词元。如果没有将这些词元添加到提示中,将大大降低模型的表现。

为了把用户的消息转成 LLM 所需的提示,大多数 LLM 分词器中都提供了一个名为 [chat_template](https://huggingface.co/docs/transformers/chat_templated) 的成员属性。

要了解不同模型的 `chat_template` 的详细信息,可访问我创建的 [space](https://huggingface.co/spaces/Jofthomas/Chat_template_viewer)!

`ChatHuggingFace` 类对 LLM 进行了包装,其接受用户消息作为输入,然后用 `tokenizer.apply_chat_template` 方法构造出正确的提示。

```python

from langchain_huggingface import ChatHuggingFace, HuggingFaceEndpoint

llm = HuggingFaceEndpoint(

endpoint_url="<endpoint_url>",

task="text-generation",

max_new_tokens=1024,

do_sample=False,

)

llm_engine_hf = ChatHuggingFace(llm=llm)

llm_engine_hf.invoke("Hugging Face is")

```

上述代码等效于:

```python

# with mistralai/Mistral-7B-Instruct-v0.2

llm.invoke("<s>[INST] Hugging Face is [/INST]")

# with meta-llama/Meta-Llama-3-8B-Instruct

llm.invoke("""<|begin_of_text|><|start_header_id|>user<|end_header_id|>Hugging Face is<|eot_id|><|start_header_id|>assistant<|end_header_id|>""")

```

## 嵌入

Hugging Face 里有很多非常强大的嵌入模型,你可直接把它们用于自己的流水线。

首先,选择你想要的模型。关于如何选择嵌入模型,一个很好的参考是 [MTEB 排行榜](https://huggingface.co/spaces/mteb/leaderboard)。

### HuggingFaceEmbeddings

该类使用 [sentence-transformers](https://sbert.net/) 来计算嵌入。其计算是在本机进行的,因此需要使用你自己的本机资源。

```python

from langchain_huggingface.embeddings import HuggingFaceEmbeddings

model_name = "mixedbread-ai/mxbai-embed-large-v1"

hf_embeddings = HuggingFaceEmbeddings(

model_name=model_name,

)

texts = ["Hello, world!", "How are you?"]

hf_embeddings.embed_documents(texts)

```

### HuggingFaceEndpointEmbeddings

`HuggingFaceEndpointEmbeddings` 与 `HuggingFaceEndpoint` 对 LLM 所做的非常相似,其在实现上也是使用 InferenceClient 来计算嵌入。它可以与 hub 上的模型以及 TEI 实例一起使用,TEI 实例无论是本地部署还是在线部署都可以。

```python

from langchain_huggingface.embeddings import HuggingFaceEndpointEmbeddings

hf_embeddings = HuggingFaceEndpointEmbeddings(

model= "mixedbread-ai/mxbai-embed-large-v1",

task="feature-extraction",

huggingfacehub_api_token="<HF_TOKEN>",

)

texts = ["Hello, world!", "How are you?"]

hf_embeddings.embed_documents(texts)

```

## 总结

我们致力于让 **`langchain-huggingface`** 变得越来越好。我们将积极监控反馈和问题,并努力尽快解决它们。我们还将不断添加新的特性和功能,以拓展该软件包使其支持更广泛的社区应用。我们强烈推荐你尝试 `langchain-huggingface` 软件包并提出宝贵意见,有了你的支持,这个软件包的未来道路才会越走越宽。

| 2 |

0 | hf_public_repos/blog | hf_public_repos/blog/zh/how-to-generate.md | ---

title: "如何生成文本:通过 Transformers 用不同的解码方法生成文本"

thumbnail: /blog/assets/02_how-to-generate/thumbnail.png

authors:

- user: patrickvonplaten

translators:

- user: MatrixYao

- user: zhongdongy

proofreader: true

---

# 如何生成文本: 通过 Transformers 用不同的解码方法生成文本

<a target="_blank" href="https://colab.research.google.com/github/huggingface/blog/blob/main/notebooks/02_how_to_generate.ipynb">

<img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/>

</a>

### 简介

近年来,随着以 OpenAI [GPT2 模型](https://openai.com/blog/better-language-models/) 为代表的基于数百万网页数据训练的大型 Transformer 语言模型的兴起,开放域语言生成领域吸引了越来越多的关注。开放域中的条件语言生成效果令人印象深刻,典型的例子有: [GPT2 在独角兽话题上的精彩续写](https://openai.com/blog/better-language-models/#samples),[XLNet](https://medium.com/@amanrusia/xlnet-speaks-comparison-to-gpt-2-ea1a4e9ba39e) 以及 [使用 CTRL 模型生成受控文本](https://blog.einstein.ai/introducing-a-conditional-transformer-language-model-for-controllable-generation/) 等。促成这些进展的除了 transformer 架构的改进和大规模无监督训练数据外,*更好的解码方法* 也发挥了不可或缺的作用。

本文简述了不同的解码策略,同时向读者展示了如何使用流行的 `transformers` 库轻松实现这些解码策略!

下文中的所有功能均可用于 *自回归* 语言生成任务 (点击 [此处](http://jalammar.github.io/illustrated-gpt2/) 回顾)。简单复习一下, *自回归* 语言生成是基于如下假设: 一个文本序列的概率分布可以分解为每个词基于其上文的条件概率的乘积。

$$ P(w_{1:T} | W_0 ) = \prod_{t=1}^T P(w_{t} | w_{1: t-1}, W_0) \text{ , 其中 } w_{1: 0} = \emptyset, $$

上式中,$W_0$ 是初始 *上下文* 单词序列。文本序列的长度 $T$ 通常时变的,并且对应于时间步 $t=T$。$P(w_{t} | w_{1: t- 1}, W_{0})$ 的词表中已包含 终止符 (End Of Sequence,EOS)。`transformers` 目前已支持的自回归语言生成任务包括 `GPT2`、`XLNet`、`OpenAi-GPT`、`CTRL`、`TransfoXL`、`XLM`、`Bart`、`T5` 模型,并支持 PyTorch 和 TensorFlow (>= 2.0) 两种框架!

我们会介绍目前最常用的解码方法,主要有 *贪心搜索 (Greedy search)*、*波束搜索 (Beam search)*、*Top-K 采样 (Top-K sampling)* 以及 *Top-p 采样 (Top-p sampling)*。

在此之前,我们先快速安装一下 `transformers` 并把模型加载进来。本文我们用 GPT2 模型在 TensorFlow 2.1 中进行演示,但 API 和使用 PyTorch 框架是一一对应的。

```python

!pip install -q git+https://github.com/huggingface/transformers.git

!pip install -q tensorflow==2.1

```

```python

import tensorflow as tf

from transformers import TFGPT2LMHeadModel, GPT2Tokenizer

tokenizer = GPT2Tokenizer.from_pretrained("gpt2")

# add the EOS token as PAD token to avoid warnings

model = TFGPT2LMHeadModel.from_pretrained("gpt2",pad_token_id=tokenizer.eos_token_id)

```

### 贪心搜索

贪心搜索在每个时间步 $t$ 都简单地选择概率最高的词作为当前输出词: $w_t = argmax_{w}P(w | w_{1:t-1})$ ,如下图所示。

<img src="/blog/assets/02_how-to-generate/greedy_search.png" alt="greedy search" style="margin: auto; display: block;">

从单词 $\text{“The”}$ 开始,算法在第一步贪心地选择条件概率最高的词 $\text{“nice”}$ 作为输出,依此往后。最终生成的单词序列为 $(\text{“The”}, \text{“nice”}, \text{“woman”})$,其联合概率为 $0.5 \times 0.4 = 0.2$。

下面,我们输入文本序列 $(\text{“I”}, \text{“enjoy”}, \text{“walking”}, \text{“with”}, \text{“my”}, \text{“cute”}, \text{“dog”})$ 给 GPT2 模型,让模型生成下文。我们以此为例看看如何在 `transformers` 中使用贪心搜索:

```python

# encode context the generation is conditioned on

input_ids = tokenizer.encode('I enjoy walking with my cute dog', return_tensors='tf')

# generate text until the output length (which includes the context length) reaches 50

greedy_output = model.generate(input_ids, max_length=50)

print("Output:\n" + 100 * '-')

print(tokenizer.decode(greedy_output[0], skip_special_tokens=True))

```

<div class="output stream stdout">

Output:

----------------------------------------------------------------------------------------------------

I enjoy walking with my cute dog, but I'm not sure if I'll ever be able to walk with my dog. I'm not sure if I'll ever be able to walk with my dog.

I'm not sure if I'll

</div>

好,我们已经用 GPT2 生成了第一个短文本😊。根据上文生成的单词是合理的,但模型很快开始输出重复的文本!这在语言生成中是一个非常普遍的问题,在贪心搜索和波束搜索中似乎更是如此 - 详见 [Vijayakumar 等人,2016](https://arxiv.org/abs/1610.02424) 和 [Shao 等人,2017](https://arxiv.org/abs/1701.03185) 的论文。

贪心搜索的主要缺点是它错过了隐藏在低概率词后面的高概率词,如上图所示:

条件概率为 $0.9$ 的单词 $\text{“has”}$ 隐藏在单词 $\text{“dog”}$ 后面,而 $\text{“dog”}$ 因为在 `t=1` 时条件概率值只排第二所以未被选择,因此贪心搜索会错过序列 $\text{“The”}, \text {“dog”}, \text{“has”}$ 。

幸好我们可以用波束搜索来缓解这个问题!

### 波束搜索

波束搜索通过在每个时间步保留最可能的 `num_beams` 个词,并从中最终选择出概率最高的序列来降低丢失潜在的高概率序列的风险。以 `num_beams=2` 为例:

<img src="/blog/assets/02_how-to-generate/beam_search.png" alt="beam search" style="margin: auto; display: block;">

在时间步 1,除了最有可能的假设 $(\text{“The”}, \text{“nice”})$,波束搜索还跟踪第二可能的假设 $(\text{“The”}, \text{“dog”})$。在时间步 2,波束搜索发现序列 $(\text{“The”}, \text{“dog”}, \text{“has”})$ 概率为$0.36$,比 $(\text{“The”}, \text{“nice”}, \text{“woman”})$ 的 $0.2$ 更高。太棒了,在我们的例子中它已经找到了最有可能的序列!

波束搜索一般都会找到比贪心搜索概率更高的输出序列,但仍不保证找到全局最优解。

让我们看看如何在 `transformers` 中使用波束搜索。我们设置 `num_beams > 1` 和 `early_stopping=True` 以便在所有波束达到 EOS 时直接结束生成。

```python

# activate beam search and early_stopping

beam_output = model.generate(

input_ids,

max_length=50,

num_beams=5,

early_stopping=True

)

print("Output:\n" + 100 * '-')

print(tokenizer.decode(beam_output[0], skip_special_tokens=True))

```

<div class="output stream stdout">

Output:

----------------------------------------------------------------------------------------------------

I enjoy walking with my cute dog, but I'm not sure if I'll ever be able to walk with him again.

I'm not sure if I'll ever be able to walk with him again. I'm not sure if I'll

</div>

虽然结果比贪心搜索更流畅,但输出中仍然包含重复。一个简单的补救措施是引入 *n-grams* (即连续 n 个词的词序列) 惩罚,该方法是由 [Paulus 等人 (2017)](https://arxiv.org/abs/1705.04304) 和 [Klein 等人 (2017)](https://arxiv.org/abs/1701.02810) 引入的。最常见的 *n-grams* 惩罚是确保每个 *n-gram* 都只出现一次,方法是如果看到当前候选词与其上文所组成的 *n-gram* 已经出现过了,就将该候选词的概率设置为 0。

我们可以通过设置 `no_repeat_ngram_size=2` 来试试,这样任意 *2-gram* 不会出现两次:

```python

# set no_repeat_ngram_size to 2

beam_output = model.generate(

input_ids,

max_length=50,

num_beams=5,

no_repeat_ngram_size=2,

early_stopping=True

)

print("Output:\n" + 100 * '-')

print(tokenizer.decode(beam_output[0], skip_special_tokens=True))

```

<div class="output stream stdout">

Output:

----------------------------------------------------------------------------------------------------

I enjoy walking with my cute dog, but I'm not sure if I'll ever be able to walk with him again.

I've been thinking about this for a while now, and I think it's time for me to take a break

</div>

不错,看起来好多了!我们看到生成的文本已经没有重复了。但是,*n-gram* 惩罚使用时必须谨慎,如一篇关于 *纽约* 这个城市的文章就不应使用 *2-gram* 惩罚,否则,城市名称在整个文本中将只出现一次!

波束搜索的另一个重要特性是我们能够比较概率最高的几个波束,并选择最符合我们要求的波束作为最终生成文本。

在 `transformers` 中,我们只需将参数 `num_return_sequences` 设置为需返回的概率最高的波束的数量,记得确保 `num_return_sequences <= num_beams`!

```python

# set return_num_sequences > 1

beam_outputs = model.generate(

input_ids,

max_length=50,

num_beams=5,

no_repeat_ngram_size=2,

num_return_sequences=5,

early_stopping=True

)

# now we have 3 output sequences

print("Output:\n" + 100 * '-')

for i, beam_output in enumerate(beam_outputs):

print("{}: {}".format(i, tokenizer.decode(beam_output, skip_special_tokens=True)))

```

<div class="output stream stdout">

Output:

----------------------------------------------------------------------------------------------------

0: I enjoy walking with my cute dog, but I'm not sure if I'll ever be able to walk with him again.

I've been thinking about this for a while now, and I think it's time for me to take a break

1: I enjoy walking with my cute dog, but I'm not sure if I'll ever be able to walk with him again.

I've been thinking about this for a while now, and I think it's time for me to get back to

2: I enjoy walking with my cute dog, but I'm not sure if I'll ever be able to walk with her again.

I've been thinking about this for a while now, and I think it's time for me to take a break

3: I enjoy walking with my cute dog, but I'm not sure if I'll ever be able to walk with her again.

I've been thinking about this for a while now, and I think it's time for me to get back to

4: I enjoy walking with my cute dog, but I'm not sure if I'll ever be able to walk with him again.

I've been thinking about this for a while now, and I think it's time for me to take a step

</div>

如我们所见,五个波束彼此之间仅有少量差别 —— 这在仅使用 5 个波束时不足为奇。

开放域文本生成的研究人员最近提出了几个理由来说明对该领域而言波束搜索可能不是最佳方案:

- 在机器翻译或摘要等任务中,因为所需生成的长度或多或少都是可预测的,所以波束搜索效果比较好 - 参见 [Murray 等人 (2018)](https://arxiv.org/abs/1808.10006) 和 [Yang 等人 (2018)](https://arxiv.org/abs/1808.09582) 的工作。但开放域文本生成情况有所不同,其输出文本长度可能会有很大差异,如对话和故事生成的输出文本长度就有很大不同。

- 我们已经看到波束搜索已被证明存在重复生成的问题。在故事生成这样的场景中,很难用 *n-gram* 或其他惩罚来控制,因为在“不重复”和最大可重复 *n-grams* 之间找到一个好的折衷需要大量的微调。

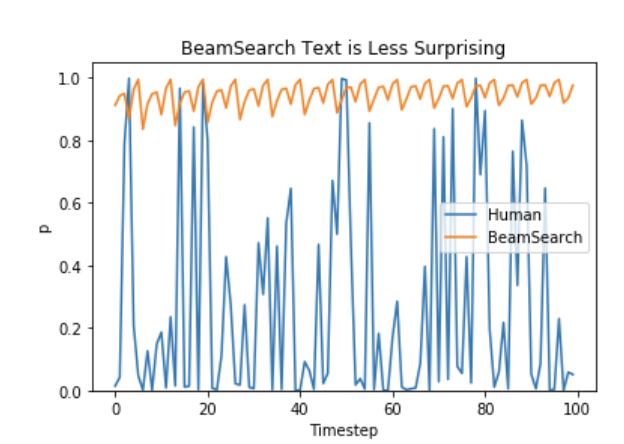

- 正如 [Ari Holtzman 等人 (2019)](https://arxiv.org/abs/1904.09751) 所论证的那样,高质量的人类语言并不遵循最大概率法则。换句话说,作为人类,我们希望生成的文本能让我们感到惊喜,而可预测的文本使人感觉无聊。论文作者画了一个概率图,很好地展示了这一点,从图中可以看出人类文本带来的惊喜度比波束搜索好不少。

因此,让我们开始玩点刺激的,引入一些随机性🤪。

### 采样

在其最基本的形式中,采样意味着根据当前条件概率分布随机选择输出词 $w_t$:

$$ w_t \sim P(w|w_{1:t-1}) $$

继续使用上文中的例子,下图可视化了使用采样生成文本的过程。

<img src="/blog/assets/02_how-to-generate/sampling_search.png" alt="sampling search" style="margin: auto; display: block;">

很明显,使用采样方法时文本生成本身不再是 *确定性的*。单词 $\text{“car”}$ 从条件概率分布 $P(w | \text{“The”})$ 中采样而得,而 $\text{“drives”}$ 则采样自 $P(w | \text{“The”}, \text{“car”})$。

在 `transformers` 中,我们设置 `do_sample=True` 并通过设置 `top_k=0` 停用 *Top-K* 采样 (稍后详细介绍)。在下文中,为便于复现,我们会固定 `random_seed=0`,但你可以在自己的模型中随意更改 `random_seed`。

```python

# set seed to reproduce results. Feel free to change the seed though to get different results

tf.random.set_seed(0)

# activate sampling and deactivate top_k by setting top_k sampling to 0

sample_output = model.generate(

input_ids,

do_sample=True,

max_length=50,

top_k=0

)

print("Output:\n" + 100 * '-')

print(tokenizer.decode(sample_output[0], skip_special_tokens=True))

```

<div class="output stream stdout">

Output:

----------------------------------------------------------------------------------------------------

I enjoy walking with my cute dog. He just gave me a whole new hand sense."

But it seems that the dogs have learned a lot from teasing at the local batte harness once they take on the outside.

"I take

</div>

有意思!生成的文本看起来不错 - 但仔细观察会发现它不是很连贯。*3-grams* *new hand sense* 和 *local batte harness* 非常奇怪,看起来不像是人写的。这就是对单词序列进行采样时的大问题: 模型通常会产生不连贯的乱码,*参见* [Ari Holtzman 等人 (2019)](https://arxiv.org/abs/1904.09751) 的论文。

缓解这一问题的一个技巧是通过降低所谓的 [softmax](https://en.wikipedia.org/wiki/Softmax_function#Smooth_arg_max) 的“温度”使分布 $P(w|w_{1:t-1})$ 更陡峭。而降低“温度”,本质上是增加高概率单词的似然并降低低概率单词的似然。

将温度应用到于我们的例子中后,结果如下图所示。

<img src="/blog/assets/02_how-to-generate/sampling_search_with_temp.png" alt="sampling temp search" style="margin: auto; display: block;">

$t=1$ 时刻单词的条件分布变得更加陡峭,几乎没有机会选择单词 $\text{“car”}$ 了。

让我们看看如何通过设置 `temperature=0.7` 来冷却生成过程:

```python

# set seed to reproduce results. Feel free to change the seed though to get different results

tf.random.set_seed(0)

# use temperature to decrease the sensitivity to low probability candidates

sample_output = model.generate(

input_ids,

do_sample=True,

max_length=50,

top_k=0,

temperature=0.7

)

print("Output:\n" + 100 * '-')

print(tokenizer.decode(sample_output[0], skip_special_tokens=True))

```

<div class="output stream stdout">

Output:

----------------------------------------------------------------------------------------------------

I enjoy walking with my cute dog, but I don't like to be at home too much. I also find it a bit weird when I'm out shopping. I am always away from my house a lot, but I do have a few friends

</div>

好,奇怪的 n-gram 变少了,现在输出更连贯了!虽然温度可以使分布的随机性降低,但极限条件下,当“温度”设置为 $0$ 时,温度缩放采样就退化成贪心解码了,因此会遇到与贪心解码相同的问题。

### Top-K 采样

[Fan 等人 (2018)](https://arxiv.org/pdf/1805.04833.pdf) 的论文介绍了一种简单但非常强大的采样方案,称为 ***Top-K*** 采样。在 *Top-K* 采样中,概率最大的 *K* 个词会被选出,然后这 *K* 个词的概率会被重新归一化,最后就在这重新被归一化概率后的 *K* 个词中采样。 GPT2 采用了这种采样方案,这也是它在故事生成这样的任务上取得成功的原因之一。

我们将上文例子中的候选单词数从 3 个单词扩展到 10 个单词,以更好地说明 *Top-K* 采样。

<img src="/blog/assets/02_how-to-generate/top_k_sampling.png" alt="Top K sampling" style="margin: auto; display: block;">

设 $K = 6$,即我们将在两个采样步的采样池大小限制为 6 个单词。我们定义 6 个最有可能的词的集合为 $V_{\text{top-K}}$。在第一步中,$V_{\text{top-K}}$ 仅占总概率的大约三分之二,但在第二步,它几乎占了全部的概率。同时,我们可以看到在第二步该方法成功地消除了那些奇怪的候选词 $(\text{“not”}, \text{“the”}, \text{“small”}, \text{“told”})$。

我们以设置 `top_k=50` 为例看下如何在 `transformers` 库中使用 *Top-K*:

```python

# set seed to reproduce results. Feel free to change the seed though to get different results

tf.random.set_seed(0)

# set top_k to 50

sample_output = model.generate(

input_ids,

do_sample=True,

max_length=50,

top_k=50

)

print("Output:\n" + 100 * '-')

print(tokenizer.decode(sample_output[0], skip_special_tokens=True))

```

<div class="output stream stdout">

Output:

----------------------------------------------------------------------------------------------------

I enjoy walking with my cute dog. It's so good to have an environment where your dog is available to share with you and we'll be taking care of you.

We hope you'll find this story interesting!

I am from

</div>

相当不错!该文本可以说是迄今为止生成的最 "*像人*" 的文本。现在还有一个问题,*Top-K* 采样不会动态调整从需要概率分布 $P(w|w_{1:t-1})$ 中选出的单词数。这可能会有问题,因为某些分布可能是非常尖锐 (上图中右侧的分布),而另一些可能更平坦 (上图中左侧的分布),所以对不同的分布使用同一个绝对数 *K* 可能并不普适。

在 $t=1$ 时,*Top-K* 将 $(\text{“people”}, \text{“big”}, \text{“house”}, \text{“cat”})$ 排出了采样池,而这些词似乎是合理的候选词。另一方面,在$t=2$ 时,该方法却又把不太合适的 $(\text{“down”}, \text{“a”})$ 纳入了采样池。因此,将采样池限制为固定大小 *K* 可能会在分布比较尖锐的时候产生胡言乱语,而在分布比较平坦的时候限制模型的创造力。这一发现促使 [Ari Holtzman 等人 (2019)](https://arxiv.org/abs/1904.09751) 发明了 **Top-p**- 或 **核**- 采样。

### Top-p (核) 采样

在 *Top-p* 中,采样不只是在最有可能的 *K* 个单词中进行,而是在累积概率超过概率 *p* 的最小单词集中进行。然后在这组词中重新分配概率质量。这样,词集的大小 (*又名* 集合中的词数) 可以根据下一个词的概率分布动态增加和减少。好吧,说的很啰嗦,一图胜千言。

<img src="/blog/assets/02_how-to-generate/top_p_sampling.png" alt="Top p sampling" style="margin: auto; display: block;">

假设 $p=0.92$,*Top-p* 采样对单词概率进行降序排列并累加,然后选择概率和首次超过 $p=92%$ 的单词集作为采样池,定义为 $V_{\text{top-p}}$。在 $t=1$ 时 $V_{\text{top-p}}$ 有 9 个词,而在 $t=2$ 时它只需要选择前 3 个词就超过了 92%。其实很简单吧!可以看出,在单词比较不可预测时,它保留了更多的候选词,*如* $P(w | \text{“The”})$,而当单词似乎更容易预测时,只保留了几个候选词,*如* $P(w | \text{“The”}, \text{“car”})$。

好的,是时候看看它在 `transformers` 里怎么用了!我们可以通过设置 `0 < top_p < 1` 来激活 *Top-p* 采样:

```python

# set seed to reproduce results. Feel free to change the seed though to get different results

tf.random.set_seed(0)

# deactivate top_k sampling and sample only from 92% most likely words

sample_output = model.generate(

input_ids,

do_sample=True,

max_length=50,

top_p=0.92,

top_k=0

)

print("Output:\n" + 100 * '-')

print(tokenizer.decode(sample_output[0], skip_special_tokens=True))

```

```

Output:

----------------------------------------------------------------------------------------------------

I enjoy walking with my cute dog. He will never be the same. I watch him play.

Guys, my dog needs a name. Especially if he is found with wings.

What was that? I had a lot o

```

太好了,这看起来跟人类写的差不多了,虽然还不算完全是。

虽然从理论上讲, *Top-p* 似乎比 *Top-K* 更优雅,但这两种方法在实践中都很有效。 *Top-p* 也可以与 *Top-K* 结合使用,这样可以避免排名非常低的词,同时允许进行一些动态选择。

最后,如果想要获得多个独立采样的输出,我们可以 *再次* 设置参数 `num_return_sequences > 1`:

```python

# set seed to reproduce results. Feel free to change the seed though to get different results

tf.random.set_seed(0)

# set top_k = 50 and set top_p = 0.95 and num_return_sequences = 3

sample_outputs = model.generate(

input_ids,

do_sample=True,

max_length=50,

top_k=50,

top_p=0.95,

num_return_sequences=3

)

print("Output:\n" + 100 * '-')

for i, sample_output in enumerate(sample_outputs):

print("{}: {}".format(i, tokenizer.decode(sample_output, skip_special_tokens=True)))

```

```

Output:

----------------------------------------------------------------------------------------------------

0: I enjoy walking with my cute dog. It's so good to have the chance to walk with a dog. But I have this problem with the dog and how he's always looking at us and always trying to make me see that I can do something

1: I enjoy walking with my cute dog, she loves taking trips to different places on the planet, even in the desert! The world isn't big enough for us to travel by the bus with our beloved pup, but that's where I find my love

2: I enjoy walking with my cute dog and playing with our kids," said David J. Smith, director of the Humane Society of the US.

"So as a result, I've got more work in my time," he said.

```

很酷,现在你拥有了所有可以在 `transformers` 里用模型来帮你写故事的工具了!

### 总结

在开放域语言生成场景中,作为最新的解码方法, *top-p* 和 *top-K* 采样于传统的 *贪心* 和 *波束* 搜索相比,似乎能产生更流畅的文本。但,最近有更多的证据表明 *贪心* 和 *波束* 搜索的明显缺陷 - 主要是生成重复的单词序列 - 是由模型 (特别是模型的训练方式) 引起的,而不是解码方法, *参见* [Welleck 等人 (2019)](https://arxiv.org/pdf/1908.04319.pdf) 的论文。此外,如 [Welleck 等人 (2020)](https://arxiv.org/abs/2002.02492) 的论文所述,看起来 *top-K* 和 *top-p* 采样也会产生重复的单词序列。

在 [Welleck 等人 (2019)](https://arxiv.org/pdf/1908.04319.pdf) 的论文中,作者表明,根据人类评估,在调整训练目标后,波束搜索相比 *Top-p* 采样能产生更流畅的文本。

开放域语言生成是一个快速发展的研究领域,而且通常情况下这里没有放之四海而皆准的方法,因此必须了解哪种方法最适合自己的特定场景。

好的方面是, *你* 可以在 `transfomers` 中尝试所有不同的解码方法 🤗。

以上是对如何在 `transformers` 中使用不同的解码方法以及开放域语言生成的最新趋势的简要介绍。

非常欢迎大家在 [Github 代码库](https://github.com/huggingface/transformers) 上提供反馈和问题。

如果想要体验下用模型生成故事的乐趣,可以访问我们的 web 应用 [Writing with Transformers](https://transformer.huggingface.co/)。

感谢为本文做出贡献的所有人: Alexander Rush、Julien Chaumand、Thomas Wolf、Victor Sanh、Sam Shleifer、Clément Delangue、Yacine Jernite、Oliver Åstrand 和 John de Wasseige。

### 附录

`generate` 方法还有几个正文未提及的参数,这里我们简要解释一下它们!

- `min_length` 用于强制模型在达到 `min_length` 之前不生成 EOS。这在摘要场景中使用得比较多,但如果用户想要更长的文本输出,也会很有用。

- `repetition_penalty` 可用于对生成重复的单词这一行为进行惩罚。它首先由 [Keskar 等人 (2019)](https://arxiv.org/abs/1909.05858) 引入,在 [Welleck 等人 (2019)](https://arxiv.org/pdf/1908.04319.pdf) 的工作中,它是训练目标的一部分。它可以非常有效地防止重复,但似乎对模型和用户场景非常敏感,其中一个例子见 Github 上的 [讨论](https://github.com/huggingface/transformers/pull/2303)。

- `attention_mask` 可用于屏蔽填充符。

- `pad_token_id`、`bos_token_id`、`eos_token_id`: 如果模型默认没有这些 token,用户可以手动选择其他 token id 来表示它们。

更多信息,请查阅 `generate` 函数 [手册](https://huggingface.co/transformers/main_classes/model.html?highlight=generate#transformers.TFPreTrainedModel.generate)。 | 3 |

0 | hf_public_repos/blog | hf_public_repos/blog/zh/stable-diffusion-inference-intel.md | ---

title: "在英特尔 CPU 上加速 Stable Diffusion 推理"

thumbnail: /blog/assets/136_stable_diffusion_inference_intel/01.png

authors:

- user: juliensimon

- user: echarlaix

translators:

- user: MatrixYao

---

# 在英特尔 CPU 上加速 Stable Diffusion 推理

前一段时间,我们向大家介绍了最新一代的 [英特尔至强](https://www.intel.com/content/www/us/en/products/details/processors/xeon/scalable.html) CPU(代号 Sapphire Rapids),包括其用于加速深度学习的新硬件特性,以及如何使用它们来加速自然语言 transformer 模型的[分布式微调](https://huggingface.co/blog/intel-sapphire-rapids)和[推理](https://huggingface.co/blog/intel-sapphire-rapids-inference)。

本文将向你展示在 Sapphire Rapids CPU 上加速 Stable Diffusion 模型推理的各种技术。后续我们还计划发布对 Stable Diffusion 进行分布式微调的文章。

在撰写本文时,获得 Sapphire Rapids 服务器的最简单方法是使用 Amazon EC2 [R7iz](https://aws.amazon.com/ec2/instance-types/r7iz/) 系列实例。由于它仍处于预览阶段,你需要[注册](https://pages.awscloud.com/R7iz-Preview.html)才能获得访问权限。与之前的文章一样,我使用的是 `r7iz.metal-16xl` 实例(64 个 vCPU,512GB RAM),操作系统镜像为 Ubuntu 20.04 AMI (`ami-07cd3e6c4915b2d18`)。

本文的代码可从 [Gitlab](https://gitlab.com/juliensimon/huggingface-demos/-/tree/main/optimum/stable_diffusion_intel) 上获取。我们开始吧!

## Diffusers 库

[Diffusers](https://huggingface.co/docs/diffusers/index) 库使得用 Stable Diffusion 模型生成图像变得极其简单。如果你不熟悉 Stable Diffusion 模型,这里有一个很棒的 [图文介绍](https://jalammar.github.io/illustrated-stable-diffusion/)。

首先,我们创建一个包含以下库的虚拟环境:Transformers、Diffusers、Accelerate 以及 PyTorch。

```

virtualenv sd_inference

source sd_inference/bin/activate

pip install pip --upgrade

pip install transformers diffusers accelerate torch==1.13.1

```

然后,我们写一个简单的基准测试函数,重复推理多次,最后返回单张图像生成的平均延迟。

```python

import time

def elapsed_time(pipeline, prompt, nb_pass=10, num_inference_steps=20):

# warmup

images = pipeline(prompt, num_inference_steps=10).images

start = time.time()

for _ in range(nb_pass):

_ = pipeline(prompt, num_inference_steps=num_inference_steps, output_type="np")

end = time.time()

return (end - start) / nb_pass

```

现在,我们用默认的 `float32` 数据类型构建一个 `StableDiffusionPipeline`,并测量其推理延迟。

```python

from diffusers import StableDiffusionPipeline

model_id = "runwayml/stable-diffusion-v1-5"

pipe = StableDiffusionPipeline.from_pretrained(model_id)

prompt = "sailing ship in storm by Rembrandt"

latency = elapsed_time(pipe, prompt)

print(latency)

```

平均延迟为 **32.3 秒**。正如这个英特尔开发的 [Hugging Face Space](https://huggingface.co/spaces/Intel/Stable-Diffusion-Side-by-Side) 所展示的,相同的代码在上一代英特尔至强(代号 Ice Lake)上运行需要大约 45 秒。

开箱即用,我们可以看到 Sapphire Rapids CPU 在没有任何代码更改的情况下速度相当快!

现在,让我们继续加速它吧!

## Optimum Intel 与 OpenVINO

[Optimum Intel](https://huggingface.co/docs/optimum/intel/index) 用于在英特尔平台上加速 Hugging Face 的端到端流水线。它的 API 和 [Diffusers](https://huggingface.co/docs/diffusers/index) 原始 API 极其相似,因此所需代码改动很小。

Optimum Intel 支持 [OpenVINO](https://docs.openvino.ai/latest/index.html),这是一个用于高性能推理的英特尔开源工具包。

Optimum Intel 和 OpenVINO 安装如下:

```

pip install optimum[openvino]

```

相比于上文的代码,我们只需要将 `StableDiffusionPipeline` 替换为 `OVStableDiffusionPipeline` 即可。如需加载 PyTorch 模型并将其实时转换为 OpenVINO 格式,你只需在加载模型时设置 `export=True`。

```python

from optimum.intel.openvino import OVStableDiffusionPipeline

...

ov_pipe = OVStableDiffusionPipeline.from_pretrained(model_id, export=True)

latency = elapsed_time(ov_pipe, prompt)

print(latency)

# Don't forget to save the exported model

ov_pipe.save_pretrained("./openvino")

```

OpenVINO 会自动优化 `bfloat16` 模型,优化后的平均延迟下降到了 **16.7 秒**,相当不错的 2 倍加速。

上述 pipeline 支持动态输入尺寸,对输入图像 batch size 或分辨率没有任何限制。但在使用 Stable Diffusion 时,通常你的应用程序仅限于输出一种(或几种)不同分辨率的图像,例如 512x512 或 256x256。因此,通过固定 pipeline 的输出分辨率来解锁更高的性能增益有其实际意义。如果你需要不止一种输出分辨率,您可以简单地维护几个 pipeline 实例,每个分辨率一个。

```python

ov_pipe.reshape(batch_size=1, height=512, width=512, num_images_per_prompt=1)

latency = elapsed_time(ov_pipe, prompt)

```

固定输出分辨率后,平均延迟进一步降至 **4.7 秒**,又获得了额外的 3.5 倍加速。

如你所见,OpenVINO 是加速 Stable Diffusion 推理的一种简单有效的方法。与 Sapphire Rapids CPU 结合使用时,和至强 Ice Lake 的最初性能的相比,推理性能加速近 10 倍。

如果你不能或不想使用 OpenVINO,本文下半部分会展示一系列其他优化技术。系好安全带!

## 系统级优化

扩散模型是数 GB 的大模型,图像生成是一种内存密集型操作。通过安装高性能内存分配库,我们能够加速内存操作并使之能在 CPU 核之间并行处理。请注意,这将更改系统的默认内存分配库。你可以通过卸载新库来返回默认库。

[jemalloc](https://jemalloc.net/) 和 [tcmalloc](https://github.com/gperftools/gperftools) 是两个很有意思的内存优化库。这里,我们使用 `jemalloc`,因为我们测试下来,它的性能比 `tcmalloc` 略好。`jemalloc` 还可以用于针对特定工作负载进行调优,如最大化 CPU 利用率。详情可参考 [`jemalloc` 调优指南](https://github.com/jemalloc/jemalloc/blob/dev/TUNING.md)。

```

sudo apt-get install -y libjemalloc-dev

export LD_PRELOAD=$LD_PRELOAD:/usr/lib/x86_64-linux-gnu/libjemalloc.so

export MALLOC_CONF="oversize_threshold:1,background_thread:true,metadata_thp:auto,dirty_decay_ms: 60000,muzzy_decay_ms:60000"

```

接下来,我们安装 `libiomp` 库来优化多核并行,这个库是 [英特尔 OpenMP* 运行时库](https://www.intel.com/content/www/us/en/docs/cpp-compiler/developer-guide-reference/2021-8/openmp-run-time-library-routines.html) 的一部分。

```

sudo apt-get install intel-mkl

export LD_PRELOAD=$LD_PRELOAD:/usr/lib/x86_64-linux-gnu/libiomp5.so

export OMP_NUM_THREADS=32

```

最后,我们安装 [numactl](https://github.com/numactl/numactl) 命令行工具。它让我们可以把我们的 Python 进程绑定到指定的核,并避免一些上下文切换开销。

```

numactl -C 0-31 python sd_blog_1.py

```

使用这些优化后,原始的 Diffusers 代码只需 **11.8 秒** 就可以完成推理,快了几乎 3 倍,而且无需任何代码更改。这些工具在我们的 32 核至强 CPU 上运行得相当不错。

我们还有招。现在我们把 `英特尔 PyTorch 扩展`(Intel Extension for PyTorch,`IPEX`)引入进来。

## IPEX 与 BF16

[IPEX](https://intel.github.io/intel-extension-for-pytorch/) 扩展了 PyTorch 使之可以进一步充分利用英特尔 CPU 上的硬件加速功能,包括 [AVX-512](https://en.wikipedia.org/wiki/AVX-512) 、矢量神经网络指令(Vector Neural Network Instructions,AVX512 VNNI) 以及 [先进矩阵扩展](https://en.wikipedia.org/wiki/Advanced_Matrix_Extensions) (AMX)。

我们先安装 `IPEX`。

```

pip install intel_extension_for_pytorch==1.13.100

```

装好后,我们需要修改部分代码以将 `IPEX` 优化应用到 `pipeline` 的每个模块(你可以通过打印 `pipe` 对象罗列出它有哪些模块),其中之一的优化就是把数据格式转换为 channels-last 格式。

```python

import torch

import intel_extension_for_pytorch as ipex

...

pipe = StableDiffusionPipeline.from_pretrained(model_id)

# to channels last

pipe.unet = pipe.unet.to(memory_format=torch.channels_last)

pipe.vae = pipe.vae.to(memory_format=torch.channels_last)

pipe.text_encoder = pipe.text_encoder.to(memory_format=torch.channels_last)

pipe.safety_checker = pipe.safety_checker.to(memory_format=torch.channels_last)

# Create random input to enable JIT compilation

sample = torch.randn(2,4,64,64)

timestep = torch.rand(1)*999

encoder_hidden_status = torch.randn(2,77,768)

input_example = (sample, timestep, encoder_hidden_status)

# optimize with IPEX

pipe.unet = ipex.optimize(pipe.unet.eval(), dtype=torch.bfloat16, inplace=True, sample_input=input_example)

pipe.vae = ipex.optimize(pipe.vae.eval(), dtype=torch.bfloat16, inplace=True)

pipe.text_encoder = ipex.optimize(pipe.text_encoder.eval(), dtype=torch.bfloat16, inplace=True)

pipe.safety_checker = ipex.optimize(pipe.safety_checker.eval(), dtype=torch.bfloat16, inplace=True)

```

我们使用了 `bloat16` 数据类型,以利用 Sapphire Rapids CPU 上的 AMX 加速器。

```python

with torch.cpu.amp.autocast(enabled=True, dtype=torch.bfloat16):

latency = elapsed_time(pipe, prompt)

print(latency)

```

经过此番改动,推理延迟从 11.9 秒进一步减少到 **5.4 秒**。感谢 IPEX 和 AMX,推理速度提高了 2 倍以上。

还能榨点性能出来吗?能,我们将目光转向调度器(scheduler)!

## 调度器

Diffusers 库支持为每个Stable Diffusion pipiline 配置 [调度器(scheduler)](https://huggingface.co/docs/diffusers/using-diffusers/schedulers),用于在去噪速度和去噪质量之间找到最佳折衷。

根据文档所述:“*截至本文档撰写时,DPMSolverMultistepScheduler 能实现最佳的速度/质量权衡,只需 20 步即可运行。*” 我们可以试一下 `DPMSolverMultistepScheduler`。

```python

from diffusers import StableDiffusionPipeline, DPMSolverMultistepScheduler

...

dpm = DPMSolverMultistepScheduler.from_pretrained(model_id, subfolder="scheduler")

pipe = StableDiffusionPipeline.from_pretrained(model_id, scheduler=dpm)

```

最终,推理延迟降至 **5.05 秒**。与我们最初的 Sapphire Rapids 基线(32.3 秒)相比,几乎快了 6.5 倍!

<kbd>

<img src="/blog/assets/136_stable_diffusion_inference_intel/01.png">

</kbd>

*运行环境: Amazon EC2 r7iz.metal-16xl, Ubuntu 20.04, Linux 5.15.0-1031-aws, libjemalloc-dev 5.2.1-1, intel-mkl 2020.0.166-1, PyTorch 1.13.1, Intel Extension for PyTorch 1.13.1, transformers 4.27.2, diffusers 0.14, accelerate 0.17.1, openvino 2023.0.0.dev20230217, optimum 1.7.1, optimum-intel 1.7*

## 总结

在几秒钟内生成高质量图像的能力可用于许多场景,如 2C 的应用程序、营销和媒体领域的内容生成,或生成合成数据以扩充数据集。

如你想要在这方面起步,以下是一些有用的资源:

* Diffusers [文档](https://huggingface.co/docs/diffusers)

* Optimum Intel [文档](https://huggingface.co/docs/optimum/main/en/intel/inference)

* [英特尔 IPEX](https://github.com/intel/intel-extension-for-pytorch) on GitHub

* [英特尔和 Hugging Face联合出品的开发者资源网站](https://www.intel.com/content/www/us/en/developer/partner/hugging-face.html)

如果你有任何问题或反馈,请通过 [Hugging Face 论坛](https://discuss.huggingface.co/) 告诉我们。

感谢垂阅! | 4 |

0 | hf_public_repos/blog | hf_public_repos/blog/zh/tgi-benchmarking.md | ---

title: "TGI 基准测试"

thumbnail: /blog/assets/tgi-benchmarking/tgi-benchmarking-thumbnail.png

authors:

- user: derek-thomas

translators:

- user: MatrixYao

- user: zhongdongy

proofreader: true

---

# TGI 基准测试

本文主要探讨 [TGI](https://github.com/huggingface/text-generation-inference) 的小兄弟 - [TGI 基准测试工具](https://github.com/huggingface/text-generation-inference/blob/main/benchmark/README.md)。它能帮助我们超越简单的吞吐量指标,对 TGI 进行更全面的性能剖析,以更好地了解如何根据实际需求对服务进行调优并按需作出最佳的权衡及决策。如果你曾觉得 LLM 服务部署成本太高,或者你想对部署进行调优,那么本文很适合你!

我将向大家展示如何轻松通过 [Hugging Face 空间](https://huggingface.co/spaces) 进行服务性能剖析。你可以把获得的分析结果用于 [推理端点](https://huggingface.co/inference-endpoints/dedicated) 或其他相同硬件的平台的部署。

## 动机

为了更好地理解性能剖析的必要性,我们先讨论一些背景信息。

大语言模型 (LLM) 从根子上来说效率就比较低,这主要源自其基于 [解码器的工作方式](https://huggingface.co/learn/nlp-course/chapter1/6?fw=pt),每次前向传播只能生成一个新词元。随着 LLM 规模的扩大以及企业 [采用率的激增](https://a16z.com/generative-ai-enterprise-2024/),AI 行业围绕优化手段创新以及性能提优技术做了非常出色的工作。

在 LLM 推理服务优化的各个方面,业界积累了数十项改进技术。各种技术层出不穷,如: [Flash Attention](https://huggingface.co/docs/text-generation-inference/en/conceptual/flash_attention)、[Paged Attention](https://huggingface.co/docs/text-generation-inference/en/conceptual/paged_attention)、[流式响应](https://huggingface.co/docs/text-generation-inference/en/conceptual/streaming)、[批处理改进](https://huggingface.co/docs/text-generation-inference/en/basic_tutorials/launcher#maxwaitingtokens)、[投机解码](https://huggingface.co/docs/text-generation-inference/en/conceptual/speculation)、各种各样的 [量化](https://huggingface.co/docs/text-generation-inference/en/conceptual/quantization) 技术、[前端网络服务改进](https://github.com/huggingface/text-generation-inference?tab=readme-ov-file#architecture),使用 [更快的语言](https://github.com/search?q=repo%3Ahuggingface%2Ftext-generation-inference++language%3ARust&type=code) (抱歉,Python 🐍!) 等等。另外还有不少用例层面的改进,如 [结构化生成](https://huggingface.co/docs/text-generation-inference/en/conceptual/guidance) 以及 [水印](https://huggingface.co/blog/watermarking) 等都在当今的 LLM 推理世界中占据了一席之地。我们深深知道,LLM 推理服务优化没有万能灵丹,一个快速高效的推理服务需要整合越来越多的细分技术 [[1]](#1)。

[TGI](https://github.com/huggingface/text-generation-inference) 是 Hugging Face 的高性能 LLM 推理服务,其宗旨就是拥抱、整合、开发那些可用于优化 LLM 部署和使用的最新技术。由于 Hugging Face 的强大的开源生态,大多数 (即使不是全部) 主要开源 LLM 甫一发布即可以在 TGI 中使用。

一般来讲,实际应用的不同会导致用户需求迥异。以 **RAG 应用** 的提示和生成为例:

- 指令/格式

- 通常很短,<200 个词元

- 用户查询

- 通常很短,<200 个词元

- 多文档

- 中等大小,每文档 500-1000 个词元,

- 文档个数为 N,且 N<10

- 响应

- 中等长度 , ~500-1000 个词元

在 RAG 应用中,将正确的文档包含于提示中对于获得高质量的响应非常重要,用户可以通过包含更多文档 (即增加 N) 来提高这种概率。也就是说,RAG 应用通常会尝试最大化 LLM 的上下文窗口以提高任务性能。而一般的聊天应用则相反,典型 **聊天场景** 的词元比 RAG 少得多:

- 多轮对话

- 2xTx50-200 词元,T 轮

- 2x 的意思是每轮包括一次用户输入和一次助理输出

鉴于应用场景如此多样,我们应确保根据场景需求来相应配置我们的 LLM 服务。为此,Hugging Face 提供了一个 [基准测试工具](https://github.com/huggingface/text-generation-inference/blob/main/benchmark/README.md),以帮助我们探索哪些配置更适合目标应用场景。下文,我将解释如何在 [Hugging Face 空间](https://huggingface.co/docs/hub/en/spaces-overview) 上使用该基准测试工具。

## Pre-requisites

在深入研究基准测试工具之前,我们先对齐一下关键概念。

### 延迟与吞吐

<video style="width: auto; height: auto;" controls autoplay muted loop>

<source src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/tgi-benchmarking/LatencyThroughputVisualization.webm" type="video/webm">

当前浏览器不支持视频标签。

</video>

| |

|-------------------------------------------------|

| *图 1: 延迟与吞吐量的可视化解释* |

- 词元延迟 – 生成一个词元并将其返回给用户所需的时间

- 请求延迟 – 完全响应请求所需的时间

- 首词元延迟 - 从请求发送到第一个词元返回给用户的时间。这是处理预填充输入的时间和生成第一个词元的时间的和

- 吞吐量 – 给定时间内服务返回的词元数 (在本例中,吞吐量为每秒 4 个词元)

延迟是一个比较微妙的测量指标,它无法反应全部情况。你的生成延迟可能比较长也可能比较短,但长也好短也罢,并不能完整刻画实际的服务性能。

我们需要知道的重要事实是: 吞吐和延迟是相互正交的测量指标,我们可以通过适当的服务配置,针对其中之一进行优化。我们的基准测试工具可以对测量数据进行可视化,从而帮助大家理解折衷之道。

### 预填充与解码

|:--:|

|*图 2: 预填充与解码图解,灵感来源 [[2]](#2)*|

以上给出了 LLM 如何生成文本的简图。一般,模型每次前向传播生成一个词元。在 **预填充阶段** (用橙色表示),模型会收到完整的提示 (What is the capital of the US?) 并依此生成首个词元 (Washington)。在 **解码阶段** (用蓝色表示),先前生成的词元被添加进输入 (What is the capital of the US? Washington),并馈送给模型以进行新一轮前向传播。如此往复: 向模型馈送输入 -> 生成词元 -> 将词元添加进输入,直至生成序列结束词元 (<EOS>)。

<br>

<div style="background-color: #e6f9e6; padding: 16px 32px; outline: 2px solid; border-radius: 10px;">

思考题: 为何预填充阶段我们馈送了很多词元作为输入,却仅需做一轮前向?

<details>

<summary> 点击揭晓答案 </summary>

因为我们无需生成 “What is the” 的下一个词元,我们已经知道它是 “capital” 了。

</details>

</div>

为了易于说明,上图仅选择了一个短文本生成示例,但注意,预填充仅需要模型进行一次前向传播,但解码可能需要数百次或更多的前向传播,即使在上述短文本示例中,我们也可以蓝色箭头多于橙色箭头。我们现在可以明白为什么要花这么多时间才能等到 LLM 的输出了!由于前向次数较多,解码阶段通常是我们花心思更多的地方。

## 基准测试工具

### 动机

在对工具、新算法或模型进行比较时,吞吐量是大家常用的指标。虽然这是 LLM 推理故事的重要组成部分,但单靠吞吐量还是缺少一些关键信息。一般来讲,我们至少需要知道吞吐量和延迟两个指标才能作出正确的决策 (当然你增加更多指标,以进行更深入的研究)。TGI 基准测试工具就支持你同时得到延迟和吞吐量两个指标。

另一个重要的考量是你希望用户拥有什么体验。你更关心为许多用户提供服务,还是希望每个用户在使用你的系统后都能得到快速响应?你想要更快的首词元延迟 (TTFT,Time To First Token),还是你能接受首词元延迟,但希望后续词元的速度要快?

下表列出了对应于不同目标的不同关注点。请记住,天下没有免费的午餐。但只要有足够的 GPU 和适当的配置,“居天下有甚难”?

<table>

<tr>

<td><strong>我关心 ......</strong>

</td>

<td><strong>我应专注于 ......</strong>

</td>

</tr>

<tr>

<td>处理更多的用户

</td>

<td>最大化吞吐量

</td>

</tr>

<tr>

<td>我的网页/应用正在流失用户

</td>

<td>最小化 TTFT

</td>

</tr>

<tr>

<td>中等体量用户的用户体验

</td>

<td>最小化延迟

</td>

</tr>

<tr>

<td>全面的用户体验

</td>

<td>在给定延迟内最大化吞吐量

</td>

</tr>

</table>

### 环境搭建

基准测试工具是随着 TGI 一起安装的,但你需要先启动服务才能运行它。为了简单起见,我设计了一个空间 - [derek-thomas/tgi-benchmark-space](https://huggingface.co/spaces/derek-thomas/tgi-benchmark-space),其把 TGI docker 镜像 (固定使用最新版) 和一个 jupyter lab 工作空间组合起来,从而允许我们部署选定的模型,并通过命令行轻松运行基准测试工具。这个空间是可复制的,所以如果它休眠了,请不要惊慌,复制一个到你的名下就可以了。我还在空间里添加了一些 notebook,你可以参照它们轻松操作。如果你想对 [Dockerfile](https://huggingface.co/spaces/derek-thomas/tgi-benchmark-space/blob/main/Dockerfile) 进行调整,请随意研究,以了解其构建方式。

### 起步

请注意,由于其交互性,在 jupyter lab 终端中运行基准测试工具比在 notebook 中运行要好得多,但我还是把命令放在 notebook 中,这样易于注释,并且很容易照着做。

1. 点击 <a class="duplicate-button" style="display:inline-block" target="_blank" href="https://huggingface.co/spaces/derek-thomas/tgi-benchmark-space?duplicate=true"><img style="margin-top:0;margin-bottom:0" src="https://huggingface.co/datasets/huggingface/badges/raw/main/duplicate-this-space-sm.svg" alt=" 复制 space"></a>

- 在 [空间密令](https://huggingface.co/docs/hub/spaces-sdks-docker#secrets) 中设置你自己的 `JUPYTER_TOKEN` 默认密码 (系统应该会在你复制空间时提示你)

- 选择硬件,注意它应与你的最终部署硬件相同或相似

2. 进入你的空间并使用密码登录

3. 启动 `01_1_TGI-launcher.ipynb`

- 其会用 jupyter notebook 以默认设置启动 TGI

4. 启动 `01_2_TGI-benchmark.ipynb`

- 其会按照指定设置启动 TGI 基准测试工具

### 主要区块

|:--:|

|*图 3:基准测试报告区块*|

- **区块 1**: batch size 选项卡及其他信息。

- 使用箭头选择不同的 batch size

- **区块 2** 及 **区块 4**: 预填充/解码阶段的统计信息及直方图

- 基于 `--runs` 的数量计算的统计数据/直方图

- **区块 3** 及 **区块 5**: 预填充/解码阶段的 `延迟 - 吞吐量` 散点图

- X 轴是延迟 (越小越好)

- Y 轴是吞吐量 (越大越好)

- 图例是 batch size

- “ _理想_ ”点位于左上角 (低延迟、高吞吐)

### 理解基准测试工具

|:--:|

|*图 4:基准测试工具散点图*|

如果你的硬件和设置与我相同,应该会得到与图 4 类似的图。基准测试工具向我们展示了: 在当前设置和硬件下,不同 batch size (代表用户请求数,与我们启动 TGI 时使用的术语略有不同) 下的吞吐量和延迟。理解这一点很重要,因为我们应该根据基准测试工具的结果来调整 TGI 的启动设置。

如果我们的应用像 RAG 那样预填充较长的话, **区块 3** 的图往往会更有用。因为,上下文长度的确会影响 TTFT (即 X 轴),而 TTFT 是用户体验的重要组成部分。请记住,虽然在预填充阶段我们必须从头开始构建 KV 缓存,但好处是所有输入词元的处理可以在一次前向传播中完成。因此,在许多情况下,就每词元延迟而言,预填充确实比解码更快。

**区块 5** 中的图对应于解码阶段。我们看一下数据点的形状,可以看到,当 batch size 处于 1~32 的范围时,形状基本是垂直的,大约为 5.3 秒。这种状态就相当不错,因为这意味着在不降低延迟的情况下,我们可以显著提高吞吐量!64 和 128 会怎么样呢?我们可以看到,虽然吞吐量在增加,但延迟也开始增加了,也就是说出现了折衷。

对于同样的 batch size,我们再看看 **区块 3** 图的表现。对 batch size 32,我们可以看到 TTFT 的时间仍然约为 1 秒。但我们也看到从 32 -> 64 -> 128 延迟出现了线性增长,2 倍的 batch size 的延迟也是 2 倍。此外,没有吞吐量增益!这意味着我们并没有真正从这种折衷中获得太多好处。

<br>

<div style="background-color: #e6f9e6; padding: 16px 32px; outline: 2px solid; border-radius: 10px;">

思考题:

<ul>

<li>如果添加更多的数据点,你觉得其形状会如何呢?</li>

<li>如果词元数增加,你举得这些散点 (预填充抑或解码) 的形状会如何变化呢?</li>

</ul>

</div>

如果你的 batch size 落在垂直区,很好,你可以获得更多的吞吐量并免费处理更多的用户。如果你的 batch size 处于水平区,这意味着你受到算力的限制,每增加一个用户都会损害每个人的延迟,且不会带来任何吞吐量的好处。你应该优化你的 TGI 配置或扩展你的硬件。

现在我们已经了解了 TGI 在各种场景中的行为,我们可以多尝试几个不同的 TGI 设置并对其进行基准测试。在选定一个好的配置之前,最好先多试几次。如果大家有兴趣的话,或许我们可以写个续篇,深入探讨针对聊天或 RAG 等不同用例的优化。

### 尾声

追踪实际用户的行为非常重要。当我们估计用户行为时,我们必须从某个地方开始并作出有根据的猜测。这些数字的选择将对我们的剖析质量有重大影响。幸运的是,TGI 会在日志中告诉我们这些信息,所以请务必检查日志。

一旦探索结束,请务必停止运行所有程序,以免产生进一步的费用。

- 终止 `TGI-launcher.ipynb` jupyter notebook 中正在运行的单元

- 在终端中点击 `q` 以终止运行分析工具

- 在空间设置中点击暂停

## 总结

LLM 规模庞大且昂贵,但有多种方法可以降低成本。像 TGI 这样的 LLM 推理服务已经为我们完成了大部分工作,我们只需善加利用其功能即可。首要工作是了解现状以及你可以做出哪些折衷。通过本文,我们已经了解如何使用 TGI 基准测试工具来做到这一点。我们可以获取这些结果并将其用于 AWS、GCP 或推理终端中的任何同等硬件。

感谢 Nicolas Patry 和 Olivier Dehaene 创建了 [TGI](https://github.com/huggingface/text-generation-inference) 及其 [基准测试工具](https://github.com/huggingface/text-generation-inference/blob/main/benchmark/README.md)。还要特别感谢 Nicholas Patry、Moritz Laurer、Nicholas Broad、Diego Maniloff 以及 Erik Rignér 帮忙校对本文。

## 参考文献

<a id="1">[1]</a> : Sara Hooker, [The Hardware Lottery](https://arxiv.org/abs/1911.05248), 2020<a id="2">[2]</a> : Pierre Lienhart, [LLM Inference Series: 2. The two-phase process behind LLMs’ responses](https://medium.com/@plienhar/llm-inference-series-2-the-two-phase-process-behind-llms-responses-1ff1ff021cd5), 2023 | 5 |

0 | hf_public_repos/blog | hf_public_repos/blog/zh/intel-protein-language-model-protst.md | ---

title: "在英特尔 Gaudi 2 上加速蛋白质语言模型 ProtST"

thumbnail: /blog/assets/intel-protein-language-model-protst/01.jpeg

authors:

- user: juliensimon

- user: Jiqing

guest: true

org: Intel

- user: Santiago Miret

guest: true

- user: katarinayuan

guest: true

- user: sywangyi

guest: true

org: Intel

- user: MatrixYao

guest: true

org: Intel

- user: ChrisAllenMing

guest: true

- user: kding1

guest: true

org: Intel

translators:

- user: MatrixYao

- user: zhongdongy

proofreader: false

---

# 在英特尔 Gaudi 2 上加速蛋白质语言模型 ProtST

<p align="center">

<img src="https://huggingface.co/blog/assets/intel-protein-language-model-protst/01.jpeg" alt="A teenage scientist creating molecules with computers and artificial intelligence" width="512"><br>

</p>

## 引言

蛋白质语言模型 (Protein Language Models, PLM) 已成为蛋白质结构与功能预测及设计的有力工具。在 2023 年国际机器学习会议 (ICML) 上,MILA 和英特尔实验室联合发布了 [ProtST](https://proceedings.mlr.press/v202/xu23t.html) 模型,该模型是个可基于文本提示设计蛋白质的多模态模型。此后,ProtST 在研究界广受好评,不到一年的时间就积累了 40 多次引用,彰显了该工作的影响力。

PLM 最常见的任务之一是预测氨基酸序列的亚细胞位置。此时,用户输入一个氨基酸序列给模型,模型会输出一个标签,以指示该序列所处的亚细胞位置。论文表明,ProtST-ESM-1b 的零样本亚细胞定位性能优于最先进的少样本分类器 (如下图)。

<kbd>

<img src="https://huggingface.co/blog/assets/intel-protein-language-model-protst/02.png">

</kbd>

为了使 ProtST 更民主化,英特尔和 MILA 对模型进行了重写,以使大家可以通过 Hugging Face Hub 来使用模型。大家可于 [此处](https://huggingface.co/mila-intel) 下载模型及数据集。

本文将展示如何使用英特尔 Gaudi 2 加速卡及 `optimum-habana` 开源库高效运行 ProtST 推理和微调。[英特尔 Gaudi 2](https://habana.ai/products/gaudi2/) 是英特尔设计的第二代 AI 加速卡。感兴趣的读者可参阅我们 [之前的博文](https://huggingface.co/blog/zh/habana-gaudi-2-bloom#habana-gaudi2),以深入了解该加速卡以及如何通过 [英特尔开发者云](https://cloud.intel.com) 使用它。得益于 [`optimum-habana`](https://github.com/huggingface/optimum-habana),仅需少量的代码更改,用户即可将基于 transformers 的代码移植至 Gaudi 2。

## 对 ProtST 进行推理

常见的亚细胞位置包括细胞核、细胞膜、细胞质、线粒体等,你可从 [此数据集](https://huggingface.co/datasets/mila-intel/subloc_template) 中获取全面详细的位置介绍。

我们使用 `ProtST-SubcellularLocalization` 数据集的测试子集来比较 ProtST 在英伟达 `A100 80GB PCIe` 和 `Gaudi 2` 两种加速卡上的推理性能。该测试集包含 2772 个氨基酸序列,序列长度范围为 79 至 1999。

你可以使用 [此脚本](https://github.com/huggingface/optimum-habana/tree/main/examples/protein-folding#single-hpu-inference-for-zero-shot-evaluation) 重现我们的实验,我们以 `bfloat16` 精度和 batch size 1 运行模型。在英伟达 A100 和英特尔 Gaudi 2 上,我们获得了相同的准确率 (0.44),但 Gaudi 2 的推理速度比 A100 快 1.76 倍。单张 A100 和单张 Gaudi 2 的运行时间如下图所示。

<kbd>

<img src="https://huggingface.co/blog/assets/intel-protein-language-model-protst/03.png">

</kbd>

## 微调 ProtST

针对下游任务对 ProtST 模型进行微调是提高模型准确性的简单且公认的方法。在本实验中,我们专门研究了针对二元定位任务的微调,其是亚细胞定位的简单版,任务用二元标签指示蛋白质是膜结合的还是可溶的。

你可使用 [此脚本](https://github.com/huggingface/optimum-habana/tree/main/examples/protein-folding#multi-hpu-finetune-for-sequence-classification-task) 重现我们的实验。其中,我们在 [ProtST-BinaryLocalization](https://huggingface.co/datasets/mila-intel/ProtST-BinaryLocalization) 数据集上以 `bfloat16` 精度微调 [ProtST-ESM1b-for-sequential-classification](https://huggingface.co/mila-intel/protst-esm1b-for-sequential-classification)。下表展示了不同硬件配置下测试子集的模型准确率,可以发现它们均与论文中发布的准确率 (~92.5%) 相当。

<kbd>

<img src="https://huggingface.co/blog/assets/intel-protein-language-model-protst/04.png">

</kbd>

下图显示了微调所用的时间。可以看到,单张 Gaudi 2 比单张 A100 快 2.92 倍。该图还表明,在 4 张或 8 张 Gaudi 2 加速卡上使用分布式训练可以实现近线性扩展。

<kbd>

<img src="https://huggingface.co/blog/assets/intel-protein-language-model-protst/05.png">

</kbd>

## 总结

本文,我们展示了如何基于 `optimum-habana` 轻松在 Gaudi 2 上部署 ProtST 推理和微调。此外,我们的结果还表明,与 A100 相比,Gaudi 2 在这些任务上的性能颇具竞争力: 推理速度提高了 1.76 倍,微调速度提高了 2.92 倍。

如你你想在英特尔 Gaudi 2 加速卡上开始一段模型之旅,以下资源可助你一臂之力:

- optimum-habana [代码库](https://github.com/huggingface/optimum-habana)

- 英特尔 Gaudi [文档](https://docs.habana.ai/en/latest/index.html)

感谢垂阅!我们期待看到英特尔 Gaudi 2 加速的 ProtST 能助你创新。 | 6 |

0 | hf_public_repos/blog | hf_public_repos/blog/zh/ryght-case-study.md | ---

title: "Ryght 在 Hugging Face 专家助力下赋能医疗保健和生命科学之旅"

thumbnail: /blog/assets/ryght-case-study/thumbnail.png

authors:

- user: andrewrreed

- user: johnnybio

guest: true

org: RyghtAI

translators:

- user: MatrixYao

- user: zhongdongy

proofreader: true

---

# Ryght 在 Hugging Face 专家助力下赋能医疗保健和生命科学之旅

> [!NOTE] 本文是 Ryght 团队的客座博文。

## Ryght 是何方神圣?

Ryght 的使命是构建一个专为医疗保健和生命科学领域量身定制的企业级生成式人工智能平台。最近,公司正式公开了 [Ryght 预览版](https://www.ryght.ai/signup?utm_campaign=Preview%20Launch%20April%2016%2C%2024&utm_source=Huggging%20Face%20Blog%20-%20Preview%20Launch%20Sign%20Up) 平台。

当前,生命科学公司不断地从各种不同来源 (实验室数据、电子病历、基因组学、保险索赔、药学、临床等) 收集大量数据,并期望从中获取洞见。但他们分析这些数据的方法已经跟不上数据本身,目前典型的工作模式往往需要一个大型团队来完成从简单查询到开发有用的机器学习模型的所有工作。这一模式已无法满足药物开发、临床试验以及商业活动对可操作知识的巨大需求,更别谈精准医学的兴起所带来的更大的需求了。

<p align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/ryght-case-study/click-through.gif" alt="Ryght Laptop" style="width: 90%; height: auto;"><br>

</p>

[Ryght](https://hubs.li/Q02sLGKL0) 的目标是让生命科学专业人士能够快速、安全地从数据中挖掘出他们所需的洞见。为此,其正在构建一个 SaaS 平台,为本专业的人员和组织提供定制的 AI copilot 解决方案,以助力他们对各种复杂数据源进行记录、分析及研究。

Ryght 认识到 AI 领域节奏快速且多变的特点,因此一开始就加入 [Hugging Face 专家支持计划](https://huggingface.co/support),将 Hugging Face 作为技术咨询合作伙伴。

## 共同克服挑战

> ##### _我们与 Hugging Face 专家支持计划的合作对加快我们生成式人工智能平台的开发起到了至关重要的作用。快速发展的人工智能领域有可能彻底改变我们的行业,而 Hugging Face 的高性能、企业级的文本生成推理 (TGI) 和文本嵌入推理 (TEI) 服务本身就是游戏规则的改写者。 - [Johnny Crupi](https://www.linkedin.com/in/johncrupi/),[Ryght 首席技术官](http://www.ryght.ai/?utm_campaign=hf&utm_source=hf_blog)_

在着手构建生成式人工智能平台的过程中,Ryght 面临着多重挑战。

### 1. 快速提升团队技能并在多变的环境中随时了解最新情况

随着人工智能和机器学习技术的快速发展,确保团队及时了解最新的技术、工具以及最佳实践至关重要。这一领域的学习曲线呈现出持续陡峭的特点,因此需要齐心协力才能及时跟上。

与 Hugging Face 的人工智能生态系统核心专家团队的合作,有助于 Ryght 跟上本垂直领域的最新发展以及最新模型。通过开放异步的沟通渠道、定期的咨询会以及专题技术研讨会等多种形式,充分地保证了目的的实现。

### 2. 在众多方案中找到最 [经济] 的机器学习方案

人工智能领域充满了创新,催生了大量的工具、库、模型及方法。对于像 Ryght 这样的初创公司来说,必须消除这种噪声并确定哪些机器学习策略最适合生命科学这一独特场景。这不仅需要了解当前的技术水平,还需要对技术在未来的相关性和可扩展性有深刻的洞见。

Hugging Face 作为 Ryght 技术团队的合作伙伴,在解决方案设计、概念验证开发和生产工作负载优化全过程中提供了有力的协助,包括: 针对应用场景推荐最适合 Ryght 需求的库、框架和模型,并提供了如何使用这些软件和模型的示例。这些指导最终简化了决策过程并缩短了开发时间。

### 3. 开发专注于安全性、隐私性及灵活性的高性能解决方案

鉴于其目标是企业级的解决方案,因此 Ryght 把安全、隐私和可治理性放在最重要的位置。因此在设计方案架构时,需要提供支持各种大语言模型 (LLM) 的灵活性,这是生命科学领域内容生成和查询处理系统的关键诉求。

基于对开源社区的快速创新,特别是医学 LLM 创新的理解,其最终采用了“即插即用”的 LLM 架构。这种设计使其能够在新 LLM 出现时能无缝地评估并集成它们。

在 Ryght 的平台中,每个 LLM 均可注册并链接至一个或多个特定于客户的推理端点。这种设计不仅可以保护各客户的连接,还提供了在不同 LLM 之间切换的能力,提供了很好的灵活性。Ryght 通过采用 Hugging Face 的 [文本生成推理 (TGI)](https://huggingface.co/docs/text-generation-inference/index) 和 [推理端点](https://huggingface.co/inference-endpoints/dedicate) 实现了该设计。

除了 TGI 之外,Ryght 还将 [文本嵌入推理 (TEI)](https://huggingface.co/docs/text-embeddings-inference/en/index) 集成到其 ML 平台中。使用 TEI 和开源嵌入模型提供服务,与仅依赖私有嵌入服务相比,可以使 Ryght 能够享受更快的推理速度、免去对速率限制的担忧,并得到可以为自己的微调模型提供服务的灵活性,而微调模型可以更好地满足生命科学领域的独特要求。

为了同时满足多个客户的需求,系统需要能处理大量并发请求,同时保持低延迟。因此,Ryght 的嵌入和推理服务不仅仅是简单的模型调用,还需要支持包括组批、排队和跨 GPU 分布式模型处理等高级特性。这些特性对于避免性能瓶颈并确保用户不会遇到延迟,从而保持最佳的系统响应时间至关重要。

## 总结

Ryght 与 Hugging Face 在 ML 服务上的战略合作伙伴关系以及深度集成凸显了其致力于在医疗保健和生命科学领域提供尖端解决方案的承诺。通过采用灵活、安全和可扩展的架构,其确保自己的平台始终处于创新前沿,为客户提供无与伦比的服务和专业知识,以应对现代医疗领域的复杂性。

[Ryght 预览版](https://hubs.li/Q02sLFl_0) 现已作为一个可轻松上手的、免费、安全的平台向生命科学知识工作者公开,欢迎大家使用。Ryght 的 copilot 库包含各种工具,可加速信息检索、复杂非结构化数据的综合及结构化,以及文档构建等任务,把之前需要数周才能完成的工作缩短至数天或数小时。如你对定制方案及合作方案有兴趣,请联系其 [AI 专家团队](https://hubs.li/Q02sLG9V0),以讨论企业级 Ryght 服务。

如果你有兴趣了解有关 Hugging Face 专家支持计划的更多信息,请 [通过此处](https://huggingface.co/contact/sales?from=support) 联系我们,我们将联系你讨论你的需求! | 7 |

0 | hf_public_repos/blog | hf_public_repos/blog/zh/Llama2-for-non-engineers.md | ---

title: "非工程师指南:训练 LLaMA 2 聊天机器人"

thumbnail: /blog/assets/78_ml_director_insights/tuto.png

authors:

- user: 2legit2overfit

- user: abhishek

translators:

- user: MatrixYao

- user: zhongdongy

proofreader: true

---

# 非工程师指南: 训练 LLaMA 2 聊天机器人

## 引言

本教程将向你展示在不编写一行代码的情况下,如何构建自己的开源 ChatGPT,这样人人都能构建自己的聊天模型。我们将以 LLaMA 2 基础模型为例,在开源指令数据集上针对聊天场景对其进行微调,并将微调后的模型部署到一个可分享的聊天应用中。全程只需点击鼠标,即可轻松通往荣耀之路!😀

为什么这很重要?是这样的,机器学习,尤其是 LLM (Large Language Models,大语言模型),已前所未有地普及开来,渐渐成为我们生产生活中的重要工具。然而,对非机器学习工程专业的大多数人来说,训练和部署这些模型的复杂性似乎仍然遥不可及。如果我们理想中的机器学习世界是充满着无处不在的个性化模型的,那么我们面临着一个迫在眉睫的挑战,即如何让那些没有技术背景的人独立用上这项技术?

在 Hugging Face,我们一直在默默努力为这个包容性的未来铺平道路。我们的工具套件,包括 Spaces、AutoTrain 和 Inference Endpoints 等服务,就是为了让任何人都能进入机器学习的世界。

为了展示这个民主化的未来是何其轻松,本教程将向你展示如何使用 [Spaces](https://huggingface.co/Spaces)、[AutoTrain](https://huggingface.co/autotrain) 和 [ChatUI](https://huggingface.co/inference-endpoints) 构建聊天应用。只需简单三步,代码含量为零。声明一下,我们也不是机器学习工程师,而只是 Hugging Face 营销策略团队的一员。如果我们能做到这一点,那么你也可以!话不多说,我们开始吧!

## Spaces 简介

Hugging Face 的 Spaces 服务提供了易于使用的 GUI,可用于构建和部署 Web 托管的 ML 演示及应用。该服务允许你使用 Gradio 或 Streamlit 前端快速构建 ML 演示,将你自己的应用以 docker 容器的形式上传,甚至你还可以直接选择一些已预先配置好的 ML 应用以实现快速部署。

后面,我们将部署两个来自 Spaces、AutoTrain 和 ChatUI 的预配置 docker 应用模板。

你可参阅 [此处](https://huggingface.co/docs/hub/spaces),以获取有关 Spaces 的更多信息。

## AutoTrain 简介

AutoTrain 是一款无代码工具,可让非 ML 工程师 (甚至非开发人员😮) 无需编写任何代码即可训练最先进的 ML 模型。它可用于 NLP、计算机视觉、语音、表格数据,现在甚至可用于微调 LLM,我们这次主要用的就是 LLM 微调功能。

你可参阅 [此处](https://huggingface.co/docs/autotrain/index),以获取有关 AutoTrain 的更多信息。

## ChatUI 简介

ChatUI 顾名思义,是 Hugging Face 构建的开源 UI,其提供了与开源 LLM 交互的界面。值得注意的是,它与 HuggingChat 背后的 UI 相同,HuggingChat 是 ChatGPT 的 100% 开源替代品。

你可参阅 [此处](https://github.com/huggingface/chat-ui),以获取有关 ChatUI 的更多信息。

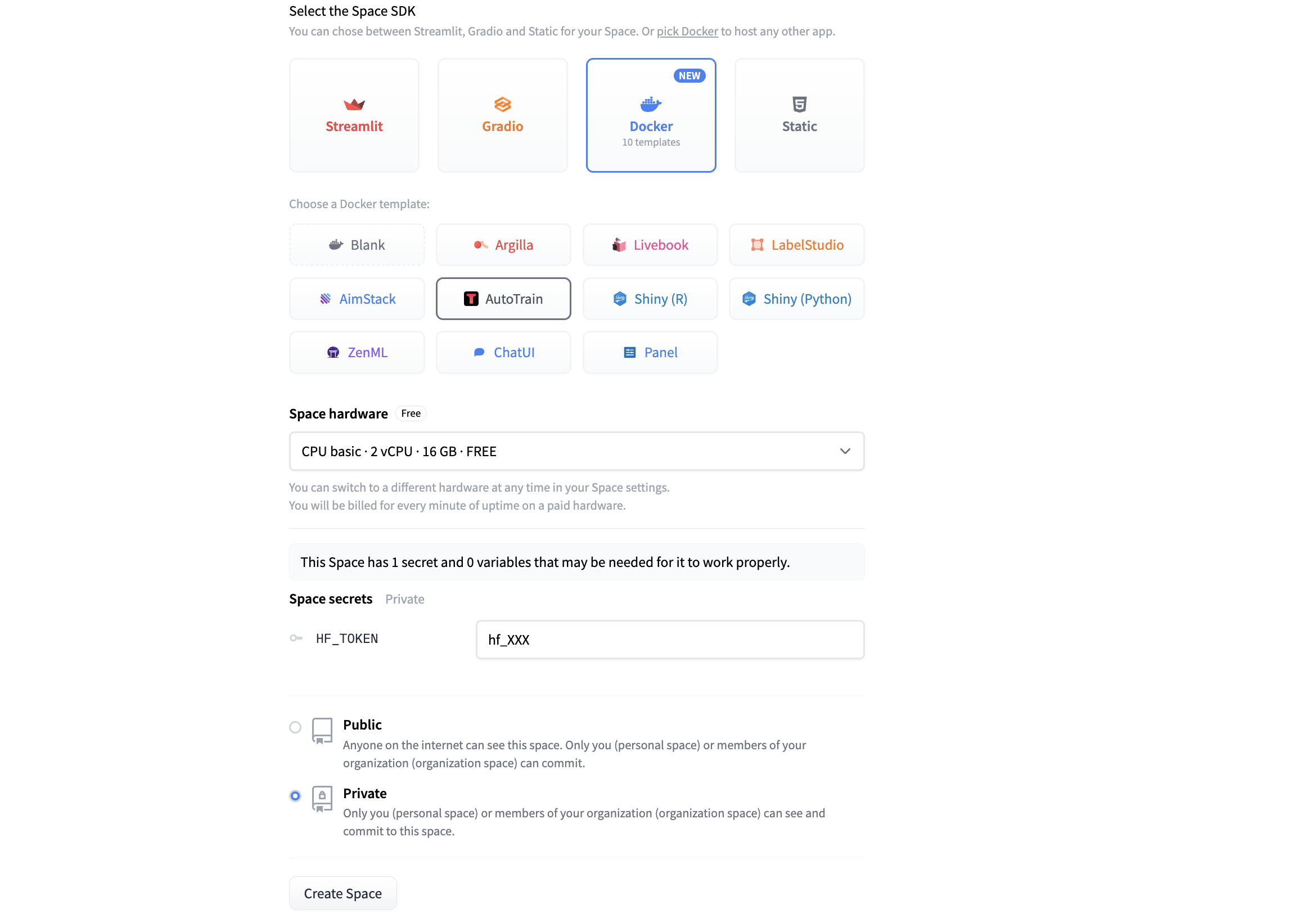

### 第 1 步: 创建一个新的 AutoTrain Space

1.1 在 [huggingface.co/spaces](https://huggingface.co/spaces) 页面点击 “Create new Space” 按钮。

<p align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/llama2-non-engineers/tuto1.png"><br>

</p>

1.2 如果你计划公开这个模型或 Space,请为你的 Space 命名并选择合适的许可证。

1.3 请选择 Docker > AutoTrain,以直接用 AutoTrain 的 docker 模板来部署。

<p align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/llama2-non-engineers/tuto2.png"><br>

</p>

1.4 选择合适的 “Space hardware” 以运行应用。(注意: 对于 AutoTrain 应用,免费的 CPU 基本款就足够了,模型训练会使用单独的计算来完成,我们稍后会进行选择)。

1.5 在 “Space secrets” 下添加你自己的 “HF_TOKEN”,以便让该 Space 可以访问你的 Hub 帐户。如果没有这个,Space 将无法训练或将新模型保存到你的帐户上。(注意: 你可以在 “Settings > Access Tokens” 下的 “Hugging Face Profile” 中找到你的 HF_TOKEN ,请确保其属性为 “Write”)。

1.6 选择将 Space 设为“私有”还是“公开”,对于 AutoTrain Space 而言,建议设为私有,不影响你后面公开分享你的模型或聊天应用。

1.7 点击 “Create Space” 并稍事等待!新 Space 的构建需要几分钟时间,之后你就可以打开 Space 并开始使用 AutoTrain。

<p align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/llama2-non-engineers/tuto3.png"><br>

</p>

### 第 2 步: 在 AutoTrain 中启动模型训练

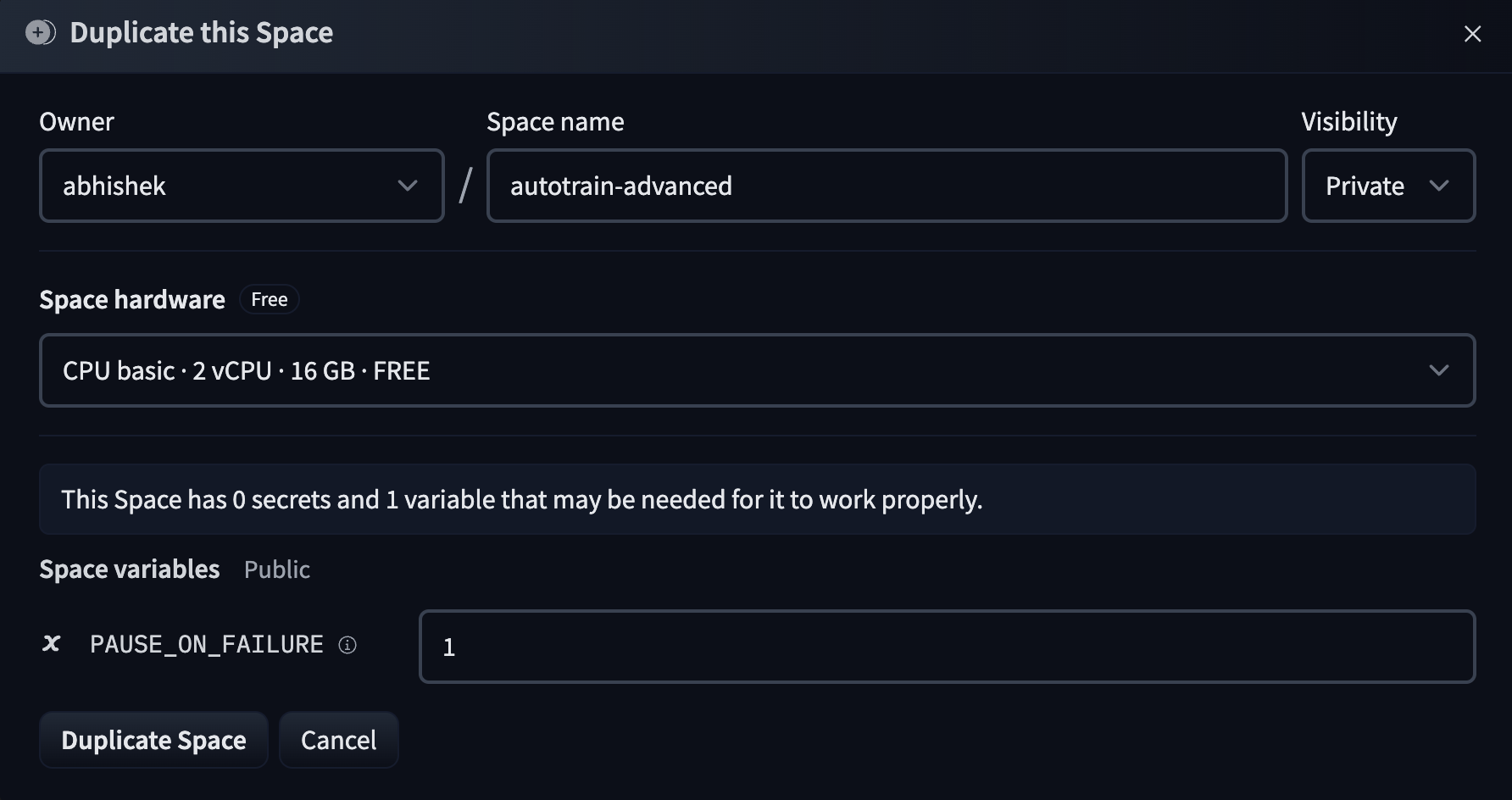

2.1 AutoTrain Space 启动后,你会看到下面的 GUI。AutoTrain 可用于多种不同类型的训练,包括 LLM 微调、文本分类、表格数据以及扩散模型。我们今天主要专注 LLM 训练,因此选择 “LLM” 选项卡。

2.2 从 “Model Choice” 字段中选择你想要训练的 LLM,你可以从列表中选择模型或直接输入 Hugging Face 模型卡的模型名称,在本例中我们使用 Meta 的 Llama 2 7B 基础模型,你可从其 [模型卡](https://huggingface.co/meta-llama/Llama-2-7b-hf) 处了解更多信息。(注意: LLama 2 是受控模型,需要你在使用前向 Meta 申请访问权限,你也可以选择其他非受控模型,如 Falcon)。

2.3 在 “Backend” 中选择你要用于训练的 CPU 或 GPU。对于 7B 模型,“A10G Large” 就足够了。如果想要训练更大的模型,你需要确保该模型可以放进所选 GPU 的内存。(注意: 如果你想训练更大的模型并需要访问 A100 GPU,请发送电子邮件至 [email protected])。

2.4 当然,要微调模型,你需要上传 “Training Data”。执行此操作时,请确保数据集格式正确且文件格式为 CSV。你可在 [此处](https://huggingface.co/docs/autotrain/main/en/llm_finetuning) 找到符合要求的格式的例子。如果你的数据有多列,请务必选择正确的 “Text Column” 以确保 AutoTrain 抽取正确的列作为训练数据。本教程将使用 Alpaca 指令微调数据集,你可在 [此处](https://huggingface.co/datasets/tatsu-lab/alpaca) 获取该数据集的更多信息。你还可以从 [此处](https://huggingface.co/datasets/tofighi/LLM/resolve/main/alpaca.csv) 直接下载 CSV 格式的文件。

<p align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/llama2-non-engineers/tuto4.png"><br>

</p>

2.5 【可选】 你还可以上传 “Validation Data” 以用于测试训出的模型,但这不是必须的。

2.6 AutoTrain 中有许多高级设置可用于减少模型的内存占用,你可以更改精度 (“FP16”) 、启用量化 (“Int4/8”) 或者决定是否启用 PEFT (参数高效微调)。如果对此不是很精通,建议使用默认设置,因为默认设置可以减少训练模型的时间和成本,且对模型精度的影响很小。

2.7 同样地,你可在 “Parameter Choice” 中配置训练超参,但本教程使用的是默认设置。

<p align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/llama2-non-engineers/tuto5.png"><br>

</p>

2.8 至此,一切都已设置完毕,点击 “Add Job” 将模型添加到训练队列中,然后点击 “Start Training”(注意: 如果你想用多组不同超参训练多个版本的模型,你可以添加多个作业同时运行)。

2.9 训练开始后,你会看到你的 Hub 帐户里新创建了一个 Space。该 Space 正在运行模型训练,完成后新模型也将显示在你 Hub 帐户的 “Models” 下。(注: 如欲查看训练进度,你可在 Space 中查看实时日志)。

2.10 去喝杯咖啡。训练可能需要几个小时甚至几天的时间,这取决于模型及训练数据的大小。训练完成后,新模型将出现在你的 Hugging Face Hub 帐户的 “Models” 下。

<p align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/llama2-non-engineers/tuto6.png"><br>

</p>

### 第 3 步: 使用自己的模型创建一个新的 ChatUI Space

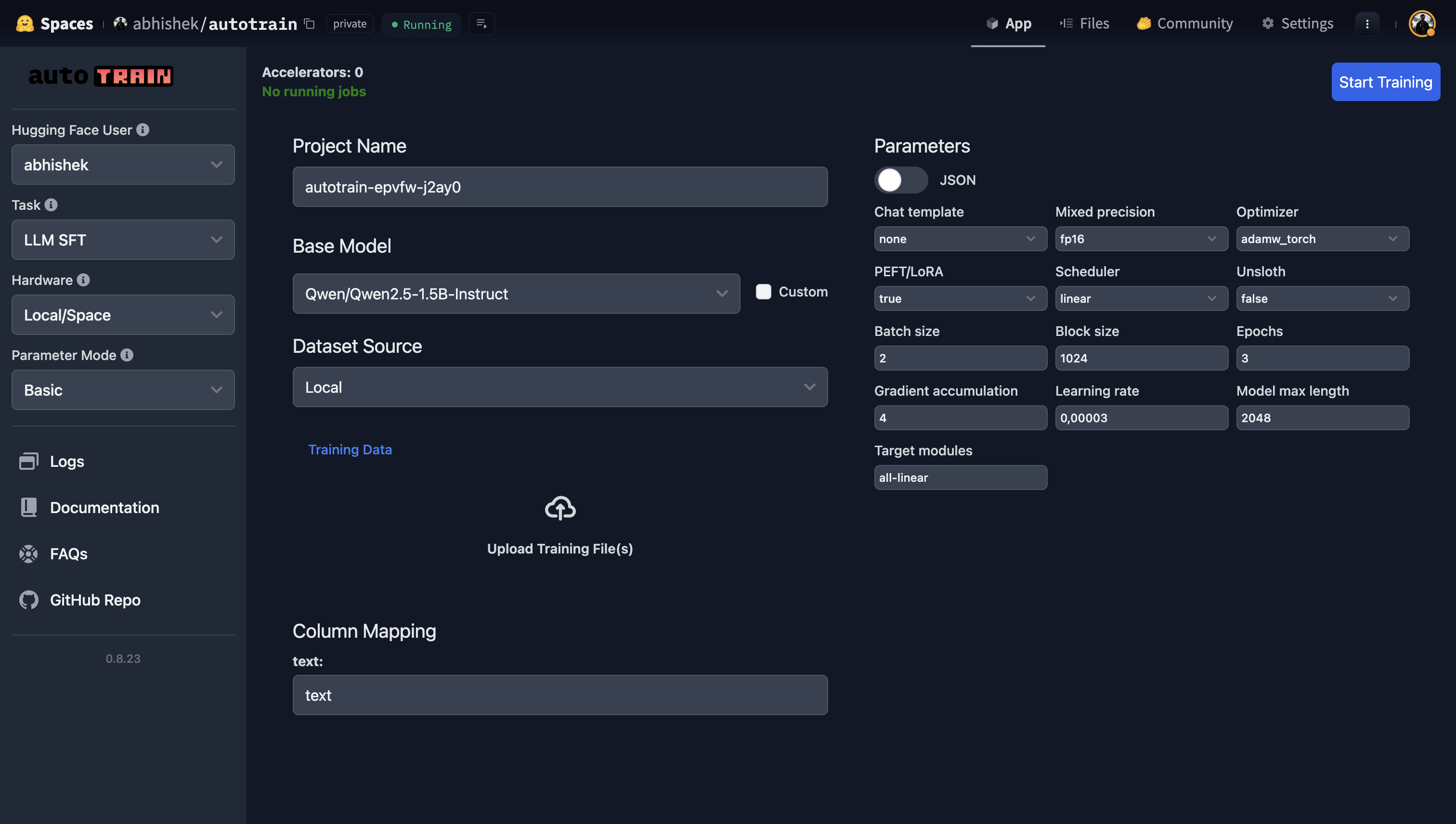

3.1 按照与步骤 1.1 > 1.3 相同的流程设置新 Space,但选择 ChatUI docker 模板而不是 AutoTrain。

3.2 选择合适的 “Space Hardware”,对我们用的 7B 模型而言 A10G Small 足够了。注意硬件的选择需要根据模型的大小而有所不同。

<p align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/llama2-non-engineers/tuto7.png"><br>

</p>

3.3 如果你有自己的 Mongo DB,你可以填入相应信息,以便将聊天日志存储在 “MONGODB_URL” 下。否则,将该字段留空即可,此时会自动创建一个本地数据库。

3.4 为了能将训后的模型用于聊天应用,你需要在 “Space variables” 下提供 “MODEL_NAME”。你可以通过查看你的 Hugging Face 个人资料的 “Models” 部分找到模型的名称,它和你在 AutoTrain 中设置的 “Project name” 相同。本例中模型的名称为 “2legit2overfit/wrdt-pco6-31a7-0”。

3.5 在 “Space variables” 下,你还可以更改模型的推理参数,包括温度、top-p、生成的最大词元数等文本生成属性。这里,我们还是直接使用默认设置。

<p align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/llama2-non-engineers/tuto8.png"><br>

</p>

3.6 现在,你可以点击 “Create” 并启动你自己的开源 ChatGPT,其 GUI 如下。恭喜通关!

<p align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/llama2-non-engineers/tuto9.png"><br>

</p>

_如果你看了本文很想尝试一下,但仍需要技术支持才能开始使用,请随时通过 [此处](https://huggingface.co/support#form) 联系我们并申请支持。 Hugging Face 提供付费专家建议服务,应该能帮到你。_

| 8 |

0 | hf_public_repos/blog | hf_public_repos/blog/zh/unity-api.md | ---

title: "如何安装和使用 Hugging Face Unity API"

thumbnail: /blog/assets/124_ml-for-games/unity-api-thumbnail.png

authors:

- user: dylanebert

translators:

- user: SuSung-boy

- user: zhongdongy

proofreader: true

---

# 如何安装和使用 Hugging Face Unity API

[Hugging Face Unity API](https://github.com/huggingface/unity-api) 提供了一个简单易用的接口,允许开发者在自己的 Unity 项目中方便地访问和使用 Hugging Face AI 模型,已集成到 [Hugging Face Inference API](https://huggingface.co/inference-api) 中。本文将详细介绍 API 的安装步骤和使用方法。

## 安装步骤

1. 打开您的 Unity 项目

2. 导航至菜单栏的 `Window` -> `Package Manager`

3. 在弹出窗口中,点击 `+`,选择 `Add Package from git URL`

4. 输入 `https://github.com/huggingface/unity-api.git`

5. 安装完成后,将会弹出 Unity API 向导。如未弹出,可以手动导航至 `Window` -> `Hugging Face API Wizard`

<figure class="image text-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/packagemanager.gif">

</figure>

1. 在向导窗口输入您的 API 密钥。密钥可以在您的 [Hugging Face 帐户设置](https://huggingface.co/settings/tokens) 中找到或创建

2. 输入完成后可以点击 `Test API key` 测试 API 密钥是否正常

3. 如需替换使用模型,可以通过更改模型端点实现。您可以访问 Hugging Face 网站,找到支持 Inference API 的任意模型端点,在对应页面点击 `Deploy` -> `Inference API`,复制 `API_URL` 字段的 url 地址

4. 如需配置高级设置,可以访问 unity 项目仓库页面 `https://github.com/huggingface/unity-api` 查看最新信息

5. 如需查看 API 使用示例,可以点击 `Install Examples`。现在,您可以关闭 API 向导了。

<figure class="image text-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/apiwizard.png">

</figure>

API 设置完成后,您就可以从脚本中调用 API 了。让我们来尝试一个计算文本句子相似度的例子,脚本代码如下所示:

```

using HuggingFace.API;

/* other code */

// Make a call to the API

void Query() {

string inputText = "I'm on my way to the forest.";

string[] candidates = {

"The player is going to the city",

"The player is going to the wilderness",

"The player is wandering aimlessly"

};

HuggingFaceAPI.SentenceSimilarity(inputText, OnSuccess, OnError, candidates);

}

// If successful, handle the result

void OnSuccess(float[] result) {

foreach(float value in result) {

Debug.Log(value);

}

}

// Otherwise, handle the error

void OnError(string error) {

Debug.LogError(error);

}

/* other code */

```

## 支持的任务类型和自定义模型

Hugging Face Unity API 目前同样支持以下任务类型:

- [对话 (Conversation)](https://huggingface.co/tasks/conversational)

- [文本生成 (Text Generation)](https://huggingface.co/tasks/text-generation)

- [文生图 (Text to Image)](https://huggingface.co/tasks/text-to-image)

- [文本分类 (Text Classification)](https://huggingface.co/tasks/text-classification)

- [问答 (Question Answering)](https://huggingface.co/tasks/question-answering)

- [翻译 (Translation)](https://huggingface.co/tasks/translation)

- [总结 (Summarization)](https://huggingface.co/tasks/summarization)

- [语音识别 (Speech Recognition)](https://huggingface.co/tasks/automatic-speech-recognition)

您可以使用 `HuggingFaceAPI` 类提供的相应方法来完成这些任务。

如需使用您自己托管在 Hugging Face 上的自定义模型,可以在 API 向导中更改模型端点。

## 使用技巧

1. 请牢记,API 通过异步方式调用,并通过回调来返回响应或错误信息。

2. 如想加快 API 响应速度或提升推理性能,可以通过更改模型端点为资源需求较少的模型。

## 结语

Hugging Face Unity API 提供了一种简单的方式,可以将 AI 模型集成到 Unity 项目中。我们希望本教程对您有所帮助。如果您有任何疑问,或想更多地参与 Hugging Face for Games 系列,可以加入 [Hugging Face Discord](https://hf.co/join/discord) 频道! | 9 |

0 | hf_public_repos | hf_public_repos/blog/jat.md | ---

title: 'Jack of All Trades, Master of Some, a Multi-Purpose Transformer Agent'

thumbnail: /blog/assets/jat/thumbnail.png

authors:

- user: qgallouedec

- user: edbeeching

- user: ClementRomac

- user: thomwolf

---

# Jack of All Trades, Master of Some, a Multi-Purpose Transformer Agent

## Introduction

We're excited to share Jack of All Trades (JAT), a project that aims to move in the direction of a generalist agent. The project started as an open reproduction of the [Gato](https://huggingface.co/papers/2205.06175) (Reed et al., 2022) work, which proposed to train a Transformer able to perform both vision-and-language and decision-making tasks. We thus started by building an open version of Gato’s dataset. We then trained multi-modal Transformer models on it, introducing several improvements over Gato for handling sequential data and continuous values.

Overall, the project has resulted in:

- The release of a large number of **expert RL agents** on a wide variety of tasks.

- The release of the **JAT dataset**, the first dataset for generalist agent training. It contains hundreds of thousands of expert trajectories collected with the expert agents

- The release of the **JAT model**, a transformer-based agent capable of playing video games, controlling a robot to perform a wide variety of tasks, understanding and executing commands in a simple navigation environment and much more!

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/jat/global_schema.gif" alt="Global schema"/>

## Datasets & expert policies

### The expert policies

RL traditionally involves training policies on single environments. Leveraging these expert policies is a genuine way to build a versatile agent. We selected a wide range of environments, of varying nature and difficulty, including Atari, BabyAI, Meta-World, and MuJoCo. For each of these environments, we train an agent until it reached state-of-the-art performance. (For BabyAI, we use the [BabyAI bot](https://github.com/mila-iqia/babyai) instead). The resulting agents are called expert agents, and have been released on the 🤗 Hub. You'll find a list of all agents in the [JAT dataset card](https://huggingface.co/datasets/jat-project/jat-dataset).

### The JAT dataset

We release the [JAT dataset](https://huggingface.co/datasets/jat-project/jat-dataset), the first dataset for generalist agent training. The JAT dataset contains hundreds of thousands of expert trajectories collected with the above-mentioned expert agents. To use this dataset, simply load it like any other dataset from the 🤗 Hub:

```python

>>> from datasets import load_dataset

>>> dataset = load_dataset("jat-project/jat-dataset", "metaworld-assembly")

>>> first_episode = dataset["train"][0]

>>> first_episode.keys()

dict_keys(['continuous_observations', 'continuous_actions', 'rewards'])

>>> len(first_episode["rewards"])

500

>>> first_episode["continuous_actions"][0]

[6.459120273590088, 2.2422609329223633, -5.914587020874023, -19.799840927124023]

```

In addition to RL data, we include textual datasets to enable a unique interface for the user. That's why you'll also find subsets for [Wikipedia](https://huggingface.co/datasets/wikipedia), [Oscar](https://huggingface.co/datasets/oscar), [OK-VQA](https://okvqa.allenai.org) and [Conceptual-Captions](https://huggingface.co/datasets/conceptual_captions).

## JAT agent architecture

JAT's architecture is based on a Transformer, using [EleutherAI's GPT-Neo implementation](https://huggingface.co/docs/transformers/model_doc/gpt_neo). JAT's particularity lies in its embedding mechanism, which has been built to intrinsically handle sequential decision tasks. We interleave observation embeddings with action embeddings, along with the corresponding rewards.

<figure class="image text-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/raw/main/blog/jat/model.svg" width="100%" alt="Model">

<figcaption>Architecture of the JAT network. For sequential decision-making tasks, observations and rewards on the one hand, and actions on the other, are encoded and interleaved. The model generates the next embedding autoregressively with a causal mask, and decodes according to expected modality.</figcaption>

</figure>

Each embedding therefore corresponds either to an observation (associated with the reward), or to an action. But how does JAT encode this information? It depends on the type of data. If the data (observation or action) is an image (as is the case for Atari), then JAT uses a CNN. If it's a continuous vector, then JAT uses a linear layer. Finally, if it's a discrete value, JAT uses a linear projection layer. The same principle is used for model output, depending on the type of data to be predicted. Prediction is causal, shifting observations by 1 time step. In this way, the agent must predict the next action from all previous observations and actions.

In addition, we thought it would be fun to train our agent to perform NLP and CV tasks. To do this, we also gave the encoder the option of taking text and image data as input. For text data, we tokenize using GPT-2 tokenization strategy, and for images, we use a [ViT](https://huggingface.co/docs/transformers/model_doc/vit)-type encoder.

Given that the modality of the data can change from one environment to another, how does JAT compute the loss? It computes the loss for each modality separately. For images and continuous values, it uses the MSE loss. For discrete values, it uses the cross-entropy loss. The final loss is the average of the losses for each element of the sequence.

Wait, does that mean we give equal weight to predicting actions and observations? Actually, no, but we'll talk more about that [below](#the-surprising-benefits-of-predicting-observations).

## Experiments and results

We evaluate JAT on all 157 training tasks. We collect 10 episodes and record the total reward. For ease of reading, we aggregate the results by domain.

<figure class="image text-center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/raw/main/blog/jat/score_steps.svg" alt="Score evolution" width="100%;">

<figcaption>Aggregated expert normalized scores with 95% Confidence Intervals (CIs) for each RL domain as a function of learning step.</figcaption>

</figure>

If we were to summarize these results in one number, it would be 65.8%, the average performance compared to the JAT expert over the 4 domains. This shows that JAT is capable of mimicking expert performance on a very wide variety of tasks.

Let's go into a little more detail:

- For Atari 57, the agent achieves 14.1% of the expert's score, corresponding to 37.6% of human performance. It exceeds human performance on 21 games.

- For BabyAI, the agent achieves 99.0% of the expert's score, and fails to exceed 50% of the expert on just 1 task.