index (int64, 0–0) | repo_id (stringclasses, 179 values) | file_path (stringlengths, 26–186) | content (stringlengths, 1–2.1M) | __index_level_0__ (int64, 0–9) |

|---|---|---|---|---|

0 | hf_public_repos/candle/candle-wasm-examples/llama2-c | hf_public_repos/candle/candle-wasm-examples/llama2-c/src/app.rs | use crate::console_log;

use crate::worker::{ModelData, Worker, WorkerInput, WorkerOutput};

use std::str::FromStr;

use wasm_bindgen::prelude::*;

use wasm_bindgen_futures::JsFuture;

use yew::{html, Component, Context, Html};

use yew_agent::{Bridge, Bridged};

async fn fetch_url(url: &str) -> Result<Vec<u8>, JsValue> {

use web_sys::{Request, RequestCache, RequestInit, RequestMode, Response};

let window = web_sys::window().ok_or("window")?;

let opts = RequestInit::new();

opts.set_method("GET");

opts.set_mode(RequestMode::Cors);

opts.set_cache(RequestCache::NoCache);

let request = Request::new_with_str_and_init(url, &opts)?;

let resp_value = JsFuture::from(window.fetch_with_request(&request)).await?;

// `resp_value` is a `Response` object.

assert!(resp_value.is_instance_of::<Response>());

let resp: Response = resp_value.dyn_into()?;

let data = JsFuture::from(resp.blob()?).await?;

let blob = web_sys::Blob::from(data);

let array_buffer = JsFuture::from(blob.array_buffer()).await?;

let data = js_sys::Uint8Array::new(&array_buffer).to_vec();

Ok(data)

}

pub enum Msg {

Refresh,

Run,

UpdateStatus(String),

SetModel(ModelData),

WorkerIn(WorkerInput),

WorkerOut(Result<WorkerOutput, String>),

}

pub struct CurrentDecode {

start_time: Option<f64>,

}

pub struct App {

status: String,

loaded: bool,

temperature: std::rc::Rc<std::cell::RefCell<f64>>,

top_p: std::rc::Rc<std::cell::RefCell<f64>>,

prompt: std::rc::Rc<std::cell::RefCell<String>>,

generated: String,

n_tokens: usize,

current_decode: Option<CurrentDecode>,

worker: Box<dyn Bridge<Worker>>,

}

async fn model_data_load() -> Result<ModelData, JsValue> {

let tokenizer = fetch_url("tokenizer.json").await?;

let model = fetch_url("model.bin").await?;

console_log!("{}", model.len());

Ok(ModelData { tokenizer, model })

}

fn performance_now() -> Option<f64> {

let window = web_sys::window()?;

let performance = window.performance()?;

Some(performance.now() / 1000.)

}

impl Component for App {

type Message = Msg;

type Properties = ();

fn create(ctx: &Context<Self>) -> Self {

let status = "loading weights".to_string();

let cb = {

let link = ctx.link().clone();

move |e| link.send_message(Self::Message::WorkerOut(e))

};

let worker = Worker::bridge(std::rc::Rc::new(cb));

Self {

status,

n_tokens: 0,

temperature: std::rc::Rc::new(std::cell::RefCell::new(0.)),

top_p: std::rc::Rc::new(std::cell::RefCell::new(1.0)),

prompt: std::rc::Rc::new(std::cell::RefCell::new("".to_string())),

generated: String::new(),

current_decode: None,

worker,

loaded: false,

}

}

fn rendered(&mut self, ctx: &Context<Self>, first_render: bool) {

if first_render {

ctx.link().send_future(async {

match model_data_load().await {

Err(err) => {

let status = format!("{err:?}");

Msg::UpdateStatus(status)

}

Ok(model_data) => Msg::SetModel(model_data),

}

});

}

}

fn update(&mut self, ctx: &Context<Self>, msg: Self::Message) -> bool {

match msg {

Msg::SetModel(md) => {

self.status = "weights loaded successfully!".to_string();

self.loaded = true;

console_log!("loaded weights");

self.worker.send(WorkerInput::ModelData(md));

true

}

Msg::Run => {

if self.current_decode.is_some() {

self.status = "already generating some sample at the moment".to_string()

} else {

let start_time = performance_now();

self.current_decode = Some(CurrentDecode { start_time });

self.status = "generating...".to_string();

self.n_tokens = 0;

self.generated.clear();

let temp = *self.temperature.borrow();

let top_p = *self.top_p.borrow();

let prompt = self.prompt.borrow().clone();

console_log!("temp: {}, top_p: {}, prompt: {}", temp, top_p, prompt);

ctx.link()

.send_message(Msg::WorkerIn(WorkerInput::Run(temp, top_p, prompt)))

}

true

}

Msg::WorkerOut(output) => {

match output {

Ok(WorkerOutput::WeightsLoaded) => self.status = "weights loaded!".to_string(),

Ok(WorkerOutput::GenerationDone(Err(err))) => {

self.status = format!("error in worker process: {err}");

self.current_decode = None

}

Ok(WorkerOutput::GenerationDone(Ok(()))) => {

let dt = self.current_decode.as_ref().and_then(|current_decode| {

current_decode.start_time.and_then(|start_time| {

performance_now().map(|stop_time| stop_time - start_time)

})

});

self.status = match dt {

None => "generation succeeded!".to_string(),

Some(dt) => format!(

"generation succeeded in {:.2}s ({:.1} ms/token)",

dt,

dt * 1000.0 / (self.n_tokens as f64)

),

};

self.current_decode = None

}

Ok(WorkerOutput::Generated(token)) => {

self.n_tokens += 1;

self.generated.push_str(&token)

}

Err(err) => {

self.status = format!("error in worker {err:?}");

}

}

true

}

Msg::WorkerIn(inp) => {

self.worker.send(inp);

true

}

Msg::UpdateStatus(status) => {

self.status = status;

true

}

Msg::Refresh => true,

}

}

fn view(&self, ctx: &Context<Self>) -> Html {

use yew::TargetCast;

let temperature = self.temperature.clone();

let oninput_temperature = ctx.link().callback(move |e: yew::InputEvent| {

let input: web_sys::HtmlInputElement = e.target_unchecked_into();

if let Ok(temp) = f64::from_str(&input.value()) {

*temperature.borrow_mut() = temp

}

Msg::Refresh

});

let top_p = self.top_p.clone();

let oninput_top_p = ctx.link().callback(move |e: yew::InputEvent| {

let input: web_sys::HtmlInputElement = e.target_unchecked_into();

if let Ok(top_p_input) = f64::from_str(&input.value()) {

*top_p.borrow_mut() = top_p_input

}

Msg::Refresh

});

let prompt = self.prompt.clone();

let oninput_prompt = ctx.link().callback(move |e: yew::InputEvent| {

let input: web_sys::HtmlInputElement = e.target_unchecked_into();

*prompt.borrow_mut() = input.value();

Msg::Refresh

});

html! {

<div style="margin: 2%;">

<div><p>{"Running "}

<a href="https://github.com/karpathy/llama2.c" target="_blank">{"llama2.c"}</a>

{" in the browser using rust/wasm with "}

<a href="https://github.com/huggingface/candle" target="_blank">{"candle!"}</a>

</p>

<p>{"Once the weights have loaded, click on the run button to start generating content."}

</p>

</div>

{"temperature \u{00a0} "}

<input type="range" min="0." max="1.2" step="0.1" value={self.temperature.borrow().to_string()} oninput={oninput_temperature} id="temp"/>

{format!(" \u{00a0} {}", self.temperature.borrow())}

<br/ >

{"top_p \u{00a0} "}

<input type="range" min="0." max="1.0" step="0.05" value={self.top_p.borrow().to_string()} oninput={oninput_top_p} id="top_p"/>

{format!(" \u{00a0} {}", self.top_p.borrow())}

<br/ >

{"prompt: "}<input type="text" value={self.prompt.borrow().to_string()} oninput={oninput_prompt} id="prompt"/>

<br/ >

{

if self.loaded{

html!(<button class="button" onclick={ctx.link().callback(move |_| Msg::Run)}> { "run" }</button>)

}else{

html! { <progress id="progress-bar" aria-label="Loading weights..."></progress> }

}

}

<br/ >

<h3>

{&self.status}

</h3>

{

if self.current_decode.is_some() {

html! { <progress id="progress-bar" aria-label="generating…"></progress> }

} else {

html! {}

}

}

<blockquote>

<p> { self.generated.chars().map(|c|

if c == '\r' || c == '\n' {

html! { <br/> }

} else {

html! { {c} }

}).collect::<Html>()

} </p>

</blockquote>

</div>

}

}

}

| 0 |
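
The `update` handler in `app.rs` above reports the elapsed time and a per-token latency once generation finishes. The arithmetic can be sketched in isolation as below. `format_generation_status` is a hypothetical helper name, not part of the example app, and the zero-token branch is an added assumption: the original divides by `n_tokens` directly, which would print `inf` if no token had been produced.

```rust
/// Builds the status line shown after generation completes.
/// `dt` is the elapsed time in seconds (None if no timer was available),
/// `n_tokens` is the number of tokens received from the worker.
/// Hypothetical helper, written only to illustrate the computation.
fn format_generation_status(dt: Option<f64>, n_tokens: usize) -> String {
    match dt {
        None => "generation succeeded!".to_string(),
        // Assumption: report "no tokens" rather than dividing by zero.
        Some(dt) if n_tokens == 0 => {
            format!("generation succeeded in {:.2}s (no tokens)", dt)
        }
        // Seconds -> milliseconds, then averaged over the generated tokens.
        Some(dt) => format!(
            "generation succeeded in {:.2}s ({:.1} ms/token)",
            dt,
            dt * 1000.0 / n_tokens as f64
        ),
    }
}
```

For instance, 4 tokens in 2 seconds works out to 500 ms per token.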

0 | hf_public_repos/candle/candle-wasm-examples/llama2-c/src | hf_public_repos/candle/candle-wasm-examples/llama2-c/src/bin/m.rs | use candle::{Device, Tensor};

use candle_transformers::generation::LogitsProcessor;

use candle_wasm_example_llama2::worker::{Model as M, ModelData};

use wasm_bindgen::prelude::*;

#[wasm_bindgen]

pub struct Model {

inner: M,

logits_processor: LogitsProcessor,

tokens: Vec<u32>,

repeat_penalty: f32,

}

impl Model {

fn process(&mut self, tokens: &[u32]) -> candle::Result<String> {

const REPEAT_LAST_N: usize = 64;

let dev = Device::Cpu;

let input = Tensor::new(tokens, &dev)?.unsqueeze(0)?;

let logits = self.inner.llama.forward(&input, tokens.len())?;

let logits = logits.squeeze(0)?;

let logits = if self.repeat_penalty == 1. || tokens.is_empty() {

logits

} else {

let start_at = self.tokens.len().saturating_sub(REPEAT_LAST_N);

candle_transformers::utils::apply_repeat_penalty(

&logits,

self.repeat_penalty,

&self.tokens[start_at..],

)?

};

let next_token = self.logits_processor.sample(&logits)?;

self.tokens.push(next_token);

let text = match self.inner.tokenizer.id_to_token(next_token) {

Some(text) => text.replace('▁', " ").replace("<0x0A>", "\n"),

None => "".to_string(),

};

Ok(text)

}

}

#[wasm_bindgen]

impl Model {

#[wasm_bindgen(constructor)]

pub fn new(weights: Vec<u8>, tokenizer: Vec<u8>) -> Result<Model, JsError> {

let model = M::load(ModelData {

tokenizer,

model: weights,

});

let logits_processor = LogitsProcessor::new(299792458, None, None);

match model {

Ok(inner) => Ok(Self {

inner,

logits_processor,

tokens: vec![],

repeat_penalty: 1.,

}),

Err(e) => Err(JsError::new(&e.to_string())),

}

}

#[wasm_bindgen]

pub fn get_seq_len(&mut self) -> usize {

self.inner.config.seq_len

}

#[wasm_bindgen]

pub fn init_with_prompt(

&mut self,

prompt: String,

temp: f64,

top_p: f64,

repeat_penalty: f32,

seed: u64,

) -> Result<String, JsError> {

// First reset the cache.

{

let mut cache = self.inner.cache.kvs.lock().unwrap();

for elem in cache.iter_mut() {

*elem = None

}

}

let temp = if temp <= 0. { None } else { Some(temp) };

let top_p = if top_p <= 0. || top_p >= 1. {

None

} else {

Some(top_p)

};

self.logits_processor = LogitsProcessor::new(seed, temp, top_p);

self.repeat_penalty = repeat_penalty;

self.tokens.clear();

let tokens = self

.inner

.tokenizer

.encode(prompt, true)

.map_err(|m| JsError::new(&m.to_string()))?

.get_ids()

.to_vec();

let text = self

.process(&tokens)

.map_err(|m| JsError::new(&m.to_string()))?;

Ok(text)

}

#[wasm_bindgen]

pub fn next_token(&mut self) -> Result<String, JsError> {

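// Return an error instead of panicking if no prompt has been processed yet.
let last_token = *self.tokens.last().ok_or_else(|| JsError::new("no tokens yet: call init_with_prompt first"))?;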

let text = self

.process(&[last_token])

.map_err(|m| JsError::new(&m.to_string()))?;

Ok(text)

}

}

fn main() {}

| 1 |
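
In `m.rs` above, `init_with_prompt` turns out-of-range sampling parameters into `None` before building the `LogitsProcessor`, and `process` applies the repeat penalty only to the trailing `REPEAT_LAST_N` tokens. The sketch below restates those two rules with the standard library only. The function names are hypothetical and chosen for illustration; the thresholds are copied from the code above.

```rust
/// A non-positive temperature is mapped to None, mirroring `init_with_prompt`.
fn normalize_temperature(temp: f64) -> Option<f64> {
    if temp <= 0. {
        None
    } else {
        Some(temp)
    }
}

/// top_p is kept only when it lies strictly between 0 and 1.
fn normalize_top_p(top_p: f64) -> Option<f64> {
    if top_p <= 0. || top_p >= 1. {
        None
    } else {
        Some(top_p)
    }
}

/// Returns the last `last_n` tokens, or all of them when fewer are available.
/// `saturating_sub` keeps the start index at 0 instead of underflowing.
fn repeat_penalty_window(tokens: &[u32], last_n: usize) -> &[u32] {
    let start_at = tokens.len().saturating_sub(last_n);
    &tokens[start_at..]
}
```

With a window of 3, the history `[1, 2, 3, 4, 5]` yields `[3, 4, 5]`; a history shorter than the window is returned unchanged.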

0 | hf_public_repos/candle/candle-wasm-examples/llama2-c/src | hf_public_repos/candle/candle-wasm-examples/llama2-c/src/bin/worker.rs | use yew_agent::PublicWorker;

fn main() {

console_error_panic_hook::set_once();

candle_wasm_example_llama2::Worker::register();

}

| 2 |

0 | hf_public_repos/candle/candle-wasm-examples/llama2-c/src | hf_public_repos/candle/candle-wasm-examples/llama2-c/src/bin/app.rs | fn main() {

wasm_logger::init(wasm_logger::Config::new(log::Level::Trace));

console_error_panic_hook::set_once();

yew::Renderer::<candle_wasm_example_llama2::App>::new().render();

}

| 3 |

0 | hf_public_repos/candle | hf_public_repos/candle/candle-core/LICENSE | Apache License

Version 2.0, January 2004

http://www.apache.org/licenses/

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

1. Definitions.

"License" shall mean the terms and conditions for use, reproduction,

and distribution as defined by Sections 1 through 9 of this document.

"Licensor" shall mean the copyright owner or entity authorized by

the copyright owner that is granting the License.

"Legal Entity" shall mean the union of the acting entity and all

other entities that control, are controlled by, or are under common

control with that entity. For the purposes of this definition,

"control" means (i) the power, direct or indirect, to cause the

direction or management of such entity, whether by contract or

otherwise, or (ii) ownership of fifty percent (50%) or more of the

outstanding shares, or (iii) beneficial ownership of such entity.

"You" (or "Your") shall mean an individual or Legal Entity

exercising permissions granted by this License.

"Source" form shall mean the preferred form for making modifications,

including but not limited to software source code, documentation

source, and configuration files.

"Object" form shall mean any form resulting from mechanical

transformation or translation of a Source form, including but

not limited to compiled object code, generated documentation,

and conversions to other media types.

"Work" shall mean the work of authorship, whether in Source or

Object form, made available under the License, as indicated by a

copyright notice that is included in or attached to the work

(an example is provided in the Appendix below).

"Derivative Works" shall mean any work, whether in Source or Object

form, that is based on (or derived from) the Work and for which the

editorial revisions, annotations, elaborations, or other modifications

represent, as a whole, an original work of authorship. For the purposes

of this License, Derivative Works shall not include works that remain

separable from, or merely link (or bind by name) to the interfaces of,

the Work and Derivative Works thereof.

"Contribution" shall mean any work of authorship, including

the original version of the Work and any modifications or additions

to that Work or Derivative Works thereof, that is intentionally

submitted to Licensor for inclusion in the Work by the copyright owner

or by an individual or Legal Entity authorized to submit on behalf of

the copyright owner. For the purposes of this definition, "submitted"

means any form of electronic, verbal, or written communication sent

to the Licensor or its representatives, including but not limited to

communication on electronic mailing lists, source code control systems,

and issue tracking systems that are managed by, or on behalf of, the

Licensor for the purpose of discussing and improving the Work, but

excluding communication that is conspicuously marked or otherwise

designated in writing by the copyright owner as "Not a Contribution."

"Contributor" shall mean Licensor and any individual or Legal Entity

on behalf of whom a Contribution has been received by Licensor and

subsequently incorporated within the Work.

2. Grant of Copyright License. Subject to the terms and conditions of

this License, each Contributor hereby grants to You a perpetual,

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

copyright license to reproduce, prepare Derivative Works of,

publicly display, publicly perform, sublicense, and distribute the

Work and such Derivative Works in Source or Object form.

3. Grant of Patent License. Subject to the terms and conditions of

this License, each Contributor hereby grants to You a perpetual,

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

(except as stated in this section) patent license to make, have made,

use, offer to sell, sell, import, and otherwise transfer the Work,

where such license applies only to those patent claims licensable

by such Contributor that are necessarily infringed by their

Contribution(s) alone or by combination of their Contribution(s)

with the Work to which such Contribution(s) was submitted. If You

institute patent litigation against any entity (including a

cross-claim or counterclaim in a lawsuit) alleging that the Work

or a Contribution incorporated within the Work constitutes direct

or contributory patent infringement, then any patent licenses

granted to You under this License for that Work shall terminate

as of the date such litigation is filed.

4. Redistribution. You may reproduce and distribute copies of the

Work or Derivative Works thereof in any medium, with or without

modifications, and in Source or Object form, provided that You

meet the following conditions:

(a) You must give any other recipients of the Work or

Derivative Works a copy of this License; and

(b) You must cause any modified files to carry prominent notices

stating that You changed the files; and

(c) You must retain, in the Source form of any Derivative Works

that You distribute, all copyright, patent, trademark, and

attribution notices from the Source form of the Work,

excluding those notices that do not pertain to any part of

the Derivative Works; and

(d) If the Work includes a "NOTICE" text file as part of its

distribution, then any Derivative Works that You distribute must

include a readable copy of the attribution notices contained

within such NOTICE file, excluding those notices that do not

pertain to any part of the Derivative Works, in at least one

of the following places: within a NOTICE text file distributed

as part of the Derivative Works; within the Source form or

documentation, if provided along with the Derivative Works; or,

within a display generated by the Derivative Works, if and

wherever such third-party notices normally appear. The contents

of the NOTICE file are for informational purposes only and

do not modify the License. You may add Your own attribution

notices within Derivative Works that You distribute, alongside

or as an addendum to the NOTICE text from the Work, provided

that such additional attribution notices cannot be construed

as modifying the License.

You may add Your own copyright statement to Your modifications and

may provide additional or different license terms and conditions

for use, reproduction, or distribution of Your modifications, or

for any such Derivative Works as a whole, provided Your use,

reproduction, and distribution of the Work otherwise complies with

the conditions stated in this License.

5. Submission of Contributions. Unless You explicitly state otherwise,

any Contribution intentionally submitted for inclusion in the Work

by You to the Licensor shall be under the terms and conditions of

this License, without any additional terms or conditions.

Notwithstanding the above, nothing herein shall supersede or modify

the terms of any separate license agreement you may have executed

with Licensor regarding such Contributions.

6. Trademarks. This License does not grant permission to use the trade

names, trademarks, service marks, or product names of the Licensor,

except as required for reasonable and customary use in describing the

origin of the Work and reproducing the content of the NOTICE file.

7. Disclaimer of Warranty. Unless required by applicable law or

agreed to in writing, Licensor provides the Work (and each

Contributor provides its Contributions) on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

implied, including, without limitation, any warranties or conditions

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

PARTICULAR PURPOSE. You are solely responsible for determining the

appropriateness of using or redistributing the Work and assume any

risks associated with Your exercise of permissions under this License.

8. Limitation of Liability. In no event and under no legal theory,

whether in tort (including negligence), contract, or otherwise,

unless required by applicable law (such as deliberate and grossly

negligent acts) or agreed to in writing, shall any Contributor be

liable to You for damages, including any direct, indirect, special,

incidental, or consequential damages of any character arising as a

result of this License or out of the use or inability to use the

Work (including but not limited to damages for loss of goodwill,

work stoppage, computer failure or malfunction, or any and all

other commercial damages or losses), even if such Contributor

has been advised of the possibility of such damages.

9. Accepting Warranty or Additional Liability. While redistributing

the Work or Derivative Works thereof, You may choose to offer,

and charge a fee for, acceptance of support, warranty, indemnity,

or other liability obligations and/or rights consistent with this

License. However, in accepting such obligations, You may act only

on Your own behalf and on Your sole responsibility, not on behalf

of any other Contributor, and only if You agree to indemnify,

defend, and hold each Contributor harmless for any liability

incurred by, or claims asserted against, such Contributor by reason

of your accepting any such warranty or additional liability.

END OF TERMS AND CONDITIONS

APPENDIX: How to apply the Apache License to your work.

To apply the Apache License to your work, attach the following

boilerplate notice, with the fields enclosed by brackets "[]"

replaced with your own identifying information. (Don't include

the brackets!) The text should be enclosed in the appropriate

comment syntax for the file format. We also recommend that a

file or class name and description of purpose be included on the

same "printed page" as the copyright notice for easier

identification within third-party archives.

Copyright [yyyy] [name of copyright owner]

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

| 4 |

0 | hf_public_repos/candle | hf_public_repos/candle/candle-core/Cargo.toml | [package]

name = "candle-core"

version.workspace = true

edition.workspace = true

description.workspace = true

repository.workspace = true

keywords.workspace = true

categories.workspace = true

license.workspace = true

readme = "README.md"

[dependencies]

accelerate-src = { workspace = true, optional = true }

byteorder = { workspace = true }

candle-kernels = { workspace = true, optional = true }

candle-metal-kernels = { workspace = true, optional = true }

metal = { workspace = true, optional = true}

cudarc = { workspace = true, optional = true }

gemm = { workspace = true }

half = { workspace = true }

intel-mkl-src = { workspace = true, optional = true }

libc = { workspace = true, optional = true }

memmap2 = { workspace = true }

num-traits = { workspace = true }

num_cpus = { workspace = true }

rand = { workspace = true }

rand_distr = { workspace = true }

rayon = { workspace = true }

safetensors = { workspace = true }

thiserror = { workspace = true }

ug = { workspace = true }

ug-cuda = { workspace = true, optional = true }

ug-metal = { workspace = true, optional = true }

yoke = { workspace = true }

zip = { workspace = true }

[dev-dependencies]

anyhow = { workspace = true }

clap = { workspace = true }

criterion = { workspace = true }

[features]

default = []

cuda = ["cudarc", "dep:candle-kernels", "dep:ug-cuda"]

cudnn = ["cuda", "cudarc/cudnn"]

mkl = ["dep:libc", "dep:intel-mkl-src"]

accelerate = ["dep:libc", "dep:accelerate-src"]

metal = ["dep:metal", "dep:candle-metal-kernels", "dep:ug-metal"]

[[bench]]

name = "bench_main"

harness = false

[[example]]

name = "metal_basics"

required-features = ["metal"]

| 5 |

0 | hf_public_repos/candle | hf_public_repos/candle/candle-core/README.md | # candle

Minimalist ML framework for Rust

| 6 |

0 | hf_public_repos/candle/candle-core | hf_public_repos/candle/candle-core/src/scalar.rs | //! TensorScalar Enum and Trait

//!

use crate::{Result, Tensor, WithDType};

pub enum TensorScalar {

Tensor(Tensor),

Scalar(Tensor),

}

pub trait TensorOrScalar {

fn to_tensor_scalar(self) -> Result<TensorScalar>;

}

impl TensorOrScalar for &Tensor {

fn to_tensor_scalar(self) -> Result<TensorScalar> {

Ok(TensorScalar::Tensor(self.clone()))

}

}

impl<T: WithDType> TensorOrScalar for T {

fn to_tensor_scalar(self) -> Result<TensorScalar> {

let scalar = Tensor::new(self, &crate::Device::Cpu)?;

Ok(TensorScalar::Scalar(scalar))

}

}

| 7 |
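
The `TensorOrScalar` trait in `scalar.rs` above lets one function accept either a tensor reference or a plain number. A minimal, self-contained sketch of that pattern follows. `Tensor` and `WithDType` here are simplified stand-ins (a vector of `f64` and a small conversion trait), not candle's real types. `scale` is a hypothetical caller, and the conversion returns a plain value rather than a `Result` to keep the sketch short.

```rust
// Stand-in for a tensor: just a flat vector of values.
#[derive(Clone, Debug, PartialEq)]
struct Tensor(Vec<f64>);

// Stand-in for the scalar element trait.
trait WithDType: Copy {
    fn to_f64(self) -> f64;
}
impl WithDType for f64 {
    fn to_f64(self) -> f64 {
        self
    }
}
impl WithDType for f32 {
    fn to_f64(self) -> f64 {
        self as f64
    }
}

// Records whether the argument was a tensor or a scalar wrapped in a tensor.
enum TensorScalar {
    Tensor(Tensor),
    Scalar(Tensor),
}

trait TensorOrScalar {
    fn to_tensor_scalar(self) -> TensorScalar;
}

// A tensor reference is passed through as a tensor.
impl TensorOrScalar for &Tensor {
    fn to_tensor_scalar(self) -> TensorScalar {
        TensorScalar::Tensor(self.clone())
    }
}

// Any scalar type is wrapped into a one-element tensor.
impl<T: WithDType> TensorOrScalar for T {
    fn to_tensor_scalar(self) -> TensorScalar {
        TensorScalar::Scalar(Tensor(vec![self.to_f64()]))
    }
}

// Hypothetical caller: multiplies element-wise by a tensor, or by a scalar.
fn scale<V: TensorOrScalar>(input: &Tensor, factor: V) -> Tensor {
    match factor.to_tensor_scalar() {
        TensorScalar::Tensor(t) => {
            Tensor(input.0.iter().zip(t.0.iter()).map(|(a, b)| a * b).collect())
        }
        TensorScalar::Scalar(s) => {
            let k = s.0[0];
            Tensor(input.0.iter().map(|a| a * k).collect())
        }
    }
}
```

The two impls can coexist because `&Tensor` does not implement `WithDType`, so the blanket impl never overlaps with the reference impl.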

0 | hf_public_repos/candle/candle-core | hf_public_repos/candle/candle-core/src/npy.rs | //! Numpy support for tensors.

//!

//! The spec for the npy format can be found in

//! [npy-format](https://docs.scipy.org/doc/numpy-1.14.2/neps/npy-format.html).

//! The functions from this module can be used to read tensors from npy/npz files

//! or write tensors to these files. A npy file contains a single tensor (unnamed)

//! whereas a npz file can contain multiple named tensors. A npz file is a zip archive and may be compressed.

//!

//! These two formats are easy to use in Python using the numpy library.

//!

//! ```python

//! import numpy as np

//! x = np.arange(10)

//!

//! # Write a npy file.

//! np.save("test.npy", x)

//!

//! # Read a value from the npy file.

//! x = np.load("test.npy")

//!

//! # Write multiple values to a npz file.

//! values = { "x": x, "x_plus_one": x + 1 }

//! np.savez("test.npz", **values)

//!

//! # Load multiple values from a npz file.

//! values = np.load("test.npz")

//! ```

use crate::{DType, Device, Error, Result, Shape, Tensor};

use byteorder::{LittleEndian, ReadBytesExt};

use half::{bf16, f16, slice::HalfFloatSliceExt};

use std::collections::HashMap;

use std::fs::File;

use std::io::{BufReader, Read, Write};

use std::path::Path;

const NPY_MAGIC_STRING: &[u8] = b"\x93NUMPY";

const NPY_SUFFIX: &str = ".npy";

fn read_header<R: Read>(reader: &mut R) -> Result<String> {

let mut magic_string = vec![0u8; NPY_MAGIC_STRING.len()];

reader.read_exact(&mut magic_string)?;

if magic_string != NPY_MAGIC_STRING {

return Err(Error::Npy("magic string mismatch".to_string()));

}

let mut version = [0u8; 2];

reader.read_exact(&mut version)?;

let header_len_len = match version[0] {

1 => 2,

2 => 4,

otherwise => return Err(Error::Npy(format!("unsupported version {otherwise}"))),

};

let mut header_len = vec![0u8; header_len_len];

reader.read_exact(&mut header_len)?;

let header_len = header_len

.iter()

.rev()

.fold(0_usize, |acc, &v| 256 * acc + v as usize);

let mut header = vec![0u8; header_len];

reader.read_exact(&mut header)?;

Ok(String::from_utf8_lossy(&header).to_string())

}

#[derive(Debug, PartialEq)]

struct Header {

descr: DType,

fortran_order: bool,

shape: Vec<usize>,

}

impl Header {

fn shape(&self) -> Shape {

Shape::from(self.shape.as_slice())

}

fn to_string(&self) -> Result<String> {

let fortran_order = if self.fortran_order { "True" } else { "False" };

let mut shape = self

.shape

.iter()

.map(|x| x.to_string())

.collect::<Vec<_>>()

.join(",");

let descr = match self.descr {

DType::BF16 => Err(Error::Npy("bf16 is not supported".into()))?,

DType::F16 => "f2",

DType::F32 => "f4",

DType::F64 => "f8",

DType::I64 => "i8",

DType::U32 => "u4",

DType::U8 => "u1",

};

if !shape.is_empty() {

shape.push(',')

}

Ok(format!(

"{{'descr': '<{descr}', 'fortran_order': {fortran_order}, 'shape': ({shape}), }}"

))

}

// Hacky parser for the npy header, a typical example would be:

// {'descr': '<f8', 'fortran_order': False, 'shape': (128,), }

fn parse(header: &str) -> Result<Header> {

let header =

header.trim_matches(|c: char| c == '{' || c == '}' || c == ',' || c.is_whitespace());

let mut parts: Vec<String> = vec![];

let mut start_index = 0usize;

let mut cnt_parenthesis = 0i64;

for (index, c) in header.chars().enumerate() {

match c {

'(' => cnt_parenthesis += 1,

')' => cnt_parenthesis -= 1,

',' => {

if cnt_parenthesis == 0 {

parts.push(header[start_index..index].to_owned());

start_index = index + 1;

}

}

_ => {}

}

}

parts.push(header[start_index..].to_owned());

let mut part_map: HashMap<String, String> = HashMap::new();

for part in parts.iter() {

let part = part.trim();

if !part.is_empty() {

match part.split(':').collect::<Vec<_>>().as_slice() {

[key, value] => {

let key = key.trim_matches(|c: char| c == '\'' || c.is_whitespace());

let value = value.trim_matches(|c: char| c == '\'' || c.is_whitespace());

let _ = part_map.insert(key.to_owned(), value.to_owned());

}

_ => return Err(Error::Npy(format!("unable to parse header {header}"))),

}

}

}

let fortran_order = match part_map.get("fortran_order") {

None => false,

Some(fortran_order) => match fortran_order.as_ref() {

"False" => false,

"True" => true,

_ => return Err(Error::Npy(format!("unknown fortran_order {fortran_order}"))),

},

};

let descr = match part_map.get("descr") {

None => return Err(Error::Npy("no descr in header".to_string())),

Some(descr) => {

if descr.is_empty() {

return Err(Error::Npy("empty descr".to_string()));

}

if descr.starts_with('>') {

return Err(Error::Npy(format!("big-endian descr {descr} is not supported")));

}

// The dtypes mapped below are float16, float32, float64, int64,

// uint8, uint32, and bool (read as uint8). The commented-out

// entries (int32, int16, int8, complex64, complex128) are not supported.

match descr.trim_matches(|c: char| c == '=' || c == '<' || c == '|') {

"e" | "f2" => DType::F16,

"f" | "f4" => DType::F32,

"d" | "f8" => DType::F64,

// "i" | "i4" => DType::S32,

"q" | "i8" => DType::I64,

// "h" | "i2" => DType::S16,

// "b" | "i1" => DType::S8,

"B" | "u1" => DType::U8,

"I" | "u4" => DType::U32,

"?" | "b1" => DType::U8,

// "F" | "F4" => DType::C64,

// "D" | "F8" => DType::C128,

descr => return Err(Error::Npy(format!("unrecognized descr {descr}"))),

}

}

};

let shape = match part_map.get("shape") {

None => return Err(Error::Npy("no shape in header".to_string())),

Some(shape) => {

let shape = shape.trim_matches(|c: char| c == '(' || c == ')' || c == ',');

if shape.is_empty() {

vec![]

} else {

shape

.split(',')

.map(|v| v.trim().parse::<usize>())

.collect::<std::result::Result<Vec<_>, _>>()?

}

}

};

Ok(Header {

descr,

fortran_order,

shape,

})

}

}

impl Tensor {

// TODO: Add the possibility to read directly to a device?

pub(crate) fn from_reader<R: std::io::Read>(

shape: Shape,

dtype: DType,

reader: &mut R,

) -> Result<Self> {

let elem_count = shape.elem_count();

match dtype {

DType::BF16 => {

let mut data_t = vec![bf16::ZERO; elem_count];

reader.read_u16_into::<LittleEndian>(data_t.reinterpret_cast_mut())?;

Tensor::from_vec(data_t, shape, &Device::Cpu)

}

DType::F16 => {

let mut data_t = vec![f16::ZERO; elem_count];

reader.read_u16_into::<LittleEndian>(data_t.reinterpret_cast_mut())?;

Tensor::from_vec(data_t, shape, &Device::Cpu)

}

DType::F32 => {

let mut data_t = vec![0f32; elem_count];

reader.read_f32_into::<LittleEndian>(&mut data_t)?;

Tensor::from_vec(data_t, shape, &Device::Cpu)

}

DType::F64 => {

let mut data_t = vec![0f64; elem_count];

reader.read_f64_into::<LittleEndian>(&mut data_t)?;

Tensor::from_vec(data_t, shape, &Device::Cpu)

}

DType::U8 => {

let mut data_t = vec![0u8; elem_count];

reader.read_exact(&mut data_t)?;

Tensor::from_vec(data_t, shape, &Device::Cpu)

}

DType::U32 => {

let mut data_t = vec![0u32; elem_count];

reader.read_u32_into::<LittleEndian>(&mut data_t)?;

Tensor::from_vec(data_t, shape, &Device::Cpu)

}

DType::I64 => {

let mut data_t = vec![0i64; elem_count];

reader.read_i64_into::<LittleEndian>(&mut data_t)?;

Tensor::from_vec(data_t, shape, &Device::Cpu)

}

}

}

/// Reads a npy file and returns the stored multi-dimensional array as a tensor.

pub fn read_npy<T: AsRef<Path>>(path: T) -> Result<Self> {

let mut reader = File::open(path.as_ref())?;

let header = read_header(&mut reader)?;

let header = Header::parse(&header)?;

if header.fortran_order {

return Err(Error::Npy("fortran order not supported".to_string()));

}

Self::from_reader(header.shape(), header.descr, &mut reader)

}

/// Reads a npz file and returns the stored multi-dimensional arrays together with their names.

pub fn read_npz<T: AsRef<Path>>(path: T) -> Result<Vec<(String, Self)>> {

let zip_reader = BufReader::new(File::open(path.as_ref())?);

let mut zip = zip::ZipArchive::new(zip_reader)?;

let mut result = vec![];

for i in 0..zip.len() {

let mut reader = zip.by_index(i)?;

let name = {

let name = reader.name();

name.strip_suffix(NPY_SUFFIX).unwrap_or(name).to_owned()

};

let header = read_header(&mut reader)?;

let header = Header::parse(&header)?;

if header.fortran_order {

return Err(Error::Npy("fortran order not supported".to_string()));

}

let s = Self::from_reader(header.shape(), header.descr, &mut reader)?;

result.push((name, s))

}

Ok(result)

}

/// Reads a npz file and returns the stored multi-dimensional arrays for some specified names.

pub fn read_npz_by_name<T: AsRef<Path>>(path: T, names: &[&str]) -> Result<Vec<Self>> {

let zip_reader = BufReader::new(File::open(path.as_ref())?);

let mut zip = zip::ZipArchive::new(zip_reader)?;

let mut result = vec![];

for name in names.iter() {

let mut reader = match zip.by_name(&format!("{name}{NPY_SUFFIX}")) {

Ok(reader) => reader,

Err(_) => Err(Error::Npy(format!(

"no array for {name} in {:?}",

path.as_ref()

)))?,

};

let header = read_header(&mut reader)?;

let header = Header::parse(&header)?;

if header.fortran_order {

return Err(Error::Npy("fortran order not supported".to_string()));

}

let s = Self::from_reader(header.shape(), header.descr, &mut reader)?;

result.push(s)

}

Ok(result)

}

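/// Writes the npy magic string, the format version (1.0), the space-padded header and then

/// the raw tensor data to `f`.

fn write<T: Write>(&self, f: &mut T) -> Result<()> {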

f.write_all(NPY_MAGIC_STRING)?;

f.write_all(&[1u8, 0u8])?;

let header = Header {

descr: self.dtype(),

fortran_order: false,

shape: self.dims().to_vec(),

};

let mut header = header.to_string()?;

let pad = 16 - (NPY_MAGIC_STRING.len() + 5 + header.len()) % 16;

for _ in 0..pad % 16 {

header.push(' ')

}

header.push('\n');

f.write_all(&[(header.len() % 256) as u8, (header.len() / 256) as u8])?;

f.write_all(header.as_bytes())?;

self.write_bytes(f)

}

/// Writes a multi-dimensional array in the npy format.

pub fn write_npy<T: AsRef<Path>>(&self, path: T) -> Result<()> {

let mut f = File::create(path.as_ref())?;

self.write(&mut f)

}

/// Writes multiple multi-dimensional arrays using the npz format.

pub fn write_npz<S: AsRef<str>, T: AsRef<Tensor>, P: AsRef<Path>>(

ts: &[(S, T)],

path: P,

) -> Result<()> {

let mut zip = zip::ZipWriter::new(File::create(path.as_ref())?);

let options: zip::write::FileOptions<()> =

zip::write::FileOptions::default().compression_method(zip::CompressionMethod::Stored);

for (name, tensor) in ts.iter() {

zip.start_file(format!("{}.npy", name.as_ref()), options)?;

tensor.as_ref().write(&mut zip)?

}

Ok(())

}

}

/// Lazy tensor loader.

pub struct NpzTensors {

index_per_name: HashMap<String, usize>,

path: std::path::PathBuf,

// We do not store a zip reader as it needs mutable access to extract data. Instead we

// re-create a zip reader for each tensor.

}

impl NpzTensors {

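/// Opens the npz archive at `path` and indexes its entries by name. No tensor data is read

/// at this point.

pub fn new<T: AsRef<Path>>(path: T) -> Result<Self> {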

let path = path.as_ref().to_owned();

let zip_reader = BufReader::new(File::open(&path)?);

let mut zip = zip::ZipArchive::new(zip_reader)?;

let mut index_per_name = HashMap::new();

for i in 0..zip.len() {

let file = zip.by_index(i)?;

let name = {

let name = file.name();

name.strip_suffix(NPY_SUFFIX).unwrap_or(name).to_owned()

};

index_per_name.insert(name, i);

}

Ok(Self {

index_per_name,

path,

})

}

/// Returns the names of all the arrays in the archive, in arbitrary order.

pub fn names(&self) -> Vec<&String> {

self.index_per_name.keys().collect()

}

/// This only returns the shape and dtype for a named tensor. Compared to `get`, this avoids

/// reading the whole tensor data.

pub fn get_shape_and_dtype(&self, name: &str) -> Result<(Shape, DType)> {

let index = match self.index_per_name.get(name) {

None => crate::bail!("cannot find tensor {name}"),

Some(index) => *index,

};

let zip_reader = BufReader::new(File::open(&self.path)?);

let mut zip = zip::ZipArchive::new(zip_reader)?;

let mut reader = zip.by_index(index)?;

let header = read_header(&mut reader)?;

let header = Header::parse(&header)?;

Ok((header.shape(), header.descr))

}

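/// Loads the tensor stored under `name`, or returns `Ok(None)` if the archive has no such

/// entry. The archive is re-opened on each call.

pub fn get(&self, name: &str) -> Result<Option<Tensor>> {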

let index = match self.index_per_name.get(name) {

None => return Ok(None),

Some(index) => *index,

};

// We hope that the file has not changed since first reading it.

let zip_reader = BufReader::new(File::open(&self.path)?);

let mut zip = zip::ZipArchive::new(zip_reader)?;

let mut reader = zip.by_index(index)?;

let header = read_header(&mut reader)?;

let header = Header::parse(&header)?;

if header.fortran_order {

return Err(Error::Npy("fortran order not supported".to_string()));

}

let tensor = Tensor::from_reader(header.shape(), header.descr, &mut reader)?;

Ok(Some(tensor))

}

}

#[cfg(test)]

mod tests {

use super::Header;

#[test]

fn parse() {

let h = "{'descr': '<f8', 'fortran_order': False, 'shape': (128,), }";

assert_eq!(

Header::parse(h).unwrap(),

Header {

descr: crate::DType::F64,

fortran_order: false,

shape: vec![128]

}

);

let h = "{'descr': '<f4', 'fortran_order': True, 'shape': (256,1,128), }";

let h = Header::parse(h).unwrap();

assert_eq!(

h,

Header {

descr: crate::DType::F32,

fortran_order: true,

shape: vec![256, 1, 128]

}

);

assert_eq!(

h.to_string().unwrap(),

"{'descr': '<f4', 'fortran_order': True, 'shape': (256,1,128,), }"

);

let h = Header {

descr: crate::DType::U32,

fortran_order: false,

shape: vec![],

};

assert_eq!(

h.to_string().unwrap(),

"{'descr': '<u4', 'fortran_order': False, 'shape': (), }"

);

}

}

| 8 |

0 | hf_public_repos/candle/candle-core | hf_public_repos/candle/candle-core/src/op.rs | //! Tensor Operation Enums and Traits

//!

#![allow(clippy::redundant_closure_call)]

use crate::Tensor;

use half::{bf16, f16};

use num_traits::float::Float;

#[derive(Clone, Copy, PartialEq, Eq)]

pub enum CmpOp {

Eq,

Ne,

Le,

Ge,

Lt,

Gt,

}

#[derive(Debug, Clone, Copy, PartialEq, Eq)]

pub enum ReduceOp {

Sum,

Min,

Max,

ArgMin,

ArgMax,

}

impl ReduceOp {

pub(crate) fn name(&self) -> &'static str {

match self {

Self::ArgMax => "argmax",

Self::ArgMin => "argmin",

Self::Min => "min",

Self::Max => "max",

Self::Sum => "sum",

}

}

}

// These ops return the same type as their input type.

#[derive(Debug, Clone, Copy, PartialEq, Eq)]

pub enum BinaryOp {

Add,

Mul,

Sub,

Div,

Maximum,

Minimum,

}

// Unary ops with no argument

#[derive(Debug, Clone, Copy, PartialEq, Eq)]

pub enum UnaryOp {

Exp,

Log,

Sin,

Cos,

Abs,

Neg,

Recip,

Sqr,

Sqrt,

Gelu,

GeluErf,

Erf,

Relu,

Silu,

Tanh,

Floor,

Ceil,

Round,

Sign,

}

#[derive(Clone)]

pub enum Op {

Binary(Tensor, Tensor, BinaryOp),

Unary(Tensor, UnaryOp),

Cmp(Tensor, CmpOp),

// The third argument is the reduced shape with `keepdim=true`.

Reduce(Tensor, ReduceOp, Vec<usize>),

Matmul(Tensor, Tensor),

Gather(Tensor, Tensor, usize),

ScatterAdd(Tensor, Tensor, Tensor, usize),

IndexSelect(Tensor, Tensor, usize),

IndexAdd(Tensor, Tensor, Tensor, usize),

WhereCond(Tensor, Tensor, Tensor),

#[allow(dead_code)]

Conv1D {

arg: Tensor,

kernel: Tensor,

padding: usize,

stride: usize,

dilation: usize,

},

#[allow(dead_code)]

ConvTranspose1D {

arg: Tensor,

kernel: Tensor,

padding: usize,

output_padding: usize,

stride: usize,

dilation: usize,

},

#[allow(dead_code)]

Conv2D {

arg: Tensor,

kernel: Tensor,

padding: usize,

stride: usize,

dilation: usize,

},

#[allow(dead_code)]

ConvTranspose2D {

arg: Tensor,

kernel: Tensor,

padding: usize,

output_padding: usize,

stride: usize,

dilation: usize,

},

AvgPool2D {

arg: Tensor,

kernel_size: (usize, usize),

stride: (usize, usize),

},

MaxPool2D {

arg: Tensor,

kernel_size: (usize, usize),

stride: (usize, usize),

},

UpsampleNearest1D {

arg: Tensor,

target_size: usize,

},

UpsampleNearest2D {

arg: Tensor,

target_h: usize,

target_w: usize,

},

Cat(Vec<Tensor>, usize),

#[allow(dead_code)] // add is currently unused.

Affine {

arg: Tensor,

mul: f64,

add: f64,

},

ToDType(Tensor),

Copy(Tensor),

Broadcast(Tensor),

Narrow(Tensor, usize, usize, usize),

SliceScatter0(Tensor, Tensor, usize),

Reshape(Tensor),

ToDevice(Tensor),

Transpose(Tensor, usize, usize),

Permute(Tensor, Vec<usize>),

Elu(Tensor, f64),

Powf(Tensor, f64),

CustomOp1(

Tensor,

std::sync::Arc<Box<dyn crate::CustomOp1 + Send + Sync>>,

),

CustomOp2(

Tensor,

Tensor,

std::sync::Arc<Box<dyn crate::CustomOp2 + Send + Sync>>,

),

CustomOp3(

Tensor,

Tensor,

Tensor,

std::sync::Arc<Box<dyn crate::CustomOp3 + Send + Sync>>,

),

}

pub trait UnaryOpT {

const NAME: &'static str;

const KERNEL: &'static str;

const V: Self;

fn bf16(v1: bf16) -> bf16;

fn f16(v1: f16) -> f16;

fn f32(v1: f32) -> f32;

fn f64(v1: f64) -> f64;

fn u8(v1: u8) -> u8;

fn u32(v1: u32) -> u32;

fn i64(v1: i64) -> i64;

// There is no very good way to represent optional functions in traits so we go for an explicit

// boolean flag to mark the function as existing.

const BF16_VEC: bool = false;

fn bf16_vec(_xs: &[bf16], _ys: &mut [bf16]) {}

const F16_VEC: bool = false;

fn f16_vec(_xs: &[f16], _ys: &mut [f16]) {}

const F32_VEC: bool = false;

fn f32_vec(_xs: &[f32], _ys: &mut [f32]) {}

const F64_VEC: bool = false;

fn f64_vec(_xs: &[f64], _ys: &mut [f64]) {}

}

pub trait BinaryOpT {

const NAME: &'static str;

const KERNEL: &'static str;

const V: Self;

fn bf16(v1: bf16, v2: bf16) -> bf16;

fn f16(v1: f16, v2: f16) -> f16;

fn f32(v1: f32, v2: f32) -> f32;

fn f64(v1: f64, v2: f64) -> f64;

fn u8(v1: u8, v2: u8) -> u8;

fn u32(v1: u32, v2: u32) -> u32;

fn i64(v1: i64, v2: i64) -> i64;

const BF16_VEC: bool = false;

fn bf16_vec(_xs1: &[bf16], _xs2: &[bf16], _ys: &mut [bf16]) {}

const F16_VEC: bool = false;

fn f16_vec(_xs1: &[f16], _xs2: &[f16], _ys: &mut [f16]) {}

const F32_VEC: bool = false;

fn f32_vec(_xs1: &[f32], _xs2: &[f32], _ys: &mut [f32]) {}

const F64_VEC: bool = false;

fn f64_vec(_xs1: &[f64], _xs2: &[f64], _ys: &mut [f64]) {}

const U8_VEC: bool = false;

fn u8_vec(_xs1: &[u8], _xs2: &[u8], _ys: &mut [u8]) {}

const U32_VEC: bool = false;

fn u32_vec(_xs1: &[u32], _xs2: &[u32], _ys: &mut [u32]) {}

const I64_VEC: bool = false;

fn i64_vec(_xs1: &[i64], _xs2: &[i64], _ys: &mut [i64]) {}

}

pub(crate) struct Add;

pub(crate) struct Div;

pub(crate) struct Mul;

pub(crate) struct Sub;

pub(crate) struct Maximum;

pub(crate) struct Minimum;

pub(crate) struct Exp;

pub(crate) struct Log;

pub(crate) struct Sin;

pub(crate) struct Cos;

pub(crate) struct Abs;

pub(crate) struct Neg;

pub(crate) struct Recip;

pub(crate) struct Sqr;

pub(crate) struct Sqrt;

pub(crate) struct Gelu;

pub(crate) struct GeluErf;

pub(crate) struct Erf;

pub(crate) struct Relu;

pub(crate) struct Silu;

pub(crate) struct Tanh;

pub(crate) struct Floor;

pub(crate) struct Ceil;

pub(crate) struct Round;

pub(crate) struct Sign;

macro_rules! bin_op {

($op:ident, $name: literal, $e: expr, $f32_vec: ident, $f64_vec: ident) => {

impl BinaryOpT for $op {

const NAME: &'static str = $name;

const KERNEL: &'static str = concat!("b", $name);

const V: Self = $op;

#[inline(always)]

fn bf16(v1: bf16, v2: bf16) -> bf16 {

$e(v1, v2)

}

#[inline(always)]

fn f16(v1: f16, v2: f16) -> f16 {

$e(v1, v2)

}

#[inline(always)]

fn f32(v1: f32, v2: f32) -> f32 {

$e(v1, v2)

}

#[inline(always)]

fn f64(v1: f64, v2: f64) -> f64 {

$e(v1, v2)

}

#[inline(always)]

fn u8(v1: u8, v2: u8) -> u8 {

$e(v1, v2)

}

#[inline(always)]

fn u32(v1: u32, v2: u32) -> u32 {

$e(v1, v2)

}

#[inline(always)]

fn i64(v1: i64, v2: i64) -> i64 {

$e(v1, v2)

}

#[cfg(feature = "mkl")]

const F32_VEC: bool = true;

#[cfg(feature = "mkl")]

const F64_VEC: bool = true;

#[cfg(feature = "mkl")]

#[inline(always)]

fn f32_vec(xs1: &[f32], xs2: &[f32], ys: &mut [f32]) {

crate::mkl::$f32_vec(xs1, xs2, ys)

}

#[cfg(feature = "mkl")]

#[inline(always)]

fn f64_vec(xs1: &[f64], xs2: &[f64], ys: &mut [f64]) {

crate::mkl::$f64_vec(xs1, xs2, ys)

}

#[cfg(feature = "accelerate")]

const F32_VEC: bool = true;

#[cfg(feature = "accelerate")]

const F64_VEC: bool = true;

#[cfg(feature = "accelerate")]

#[inline(always)]

fn f32_vec(xs1: &[f32], xs2: &[f32], ys: &mut [f32]) {

crate::accelerate::$f32_vec(xs1, xs2, ys)

}

#[cfg(feature = "accelerate")]

#[inline(always)]

fn f64_vec(xs1: &[f64], xs2: &[f64], ys: &mut [f64]) {

crate::accelerate::$f64_vec(xs1, xs2, ys)

}

}

};

}

bin_op!(Add, "add", |v1, v2| v1 + v2, vs_add, vd_add);

bin_op!(Sub, "sub", |v1, v2| v1 - v2, vs_sub, vd_sub);

bin_op!(Mul, "mul", |v1, v2| v1 * v2, vs_mul, vd_mul);

bin_op!(Div, "div", |v1, v2| v1 / v2, vs_div, vd_div);

bin_op!(

Minimum,

"minimum",

|v1, v2| if v1 > v2 { v2 } else { v1 },

vs_min,

vd_min

);

bin_op!(

Maximum,

"maximum",

|v1, v2| if v1 < v2 { v2 } else { v1 },

vs_max,

vd_max

);

#[allow(clippy::redundant_closure_call)]

macro_rules! unary_op {

($op: ident, $name: literal, $a: ident, $e: expr) => {

impl UnaryOpT for $op {

const NAME: &'static str = $name;

const KERNEL: &'static str = concat!("u", $name);

const V: Self = $op;

#[inline(always)]

fn bf16($a: bf16) -> bf16 {

$e

}

#[inline(always)]

fn f16($a: f16) -> f16 {

$e

}

#[inline(always)]

fn f32($a: f32) -> f32 {

$e

}

#[inline(always)]

fn f64($a: f64) -> f64 {

$e

}

#[inline(always)]

fn u8(_: u8) -> u8 {

todo!("no unary function for u8")

}

#[inline(always)]

fn u32(_: u32) -> u32 {

todo!("no unary function for u32")

}

#[inline(always)]

fn i64(_: i64) -> i64 {

todo!("no unary function for i64")

}

}

};

($op: ident, $name: literal, $a: ident, $e: expr, $f32_vec:ident, $f64_vec:ident) => {

impl UnaryOpT for $op {

const NAME: &'static str = $name;

const KERNEL: &'static str = concat!("u", $name);

const V: Self = $op;

#[inline(always)]

fn bf16($a: bf16) -> bf16 {

$e

}

#[inline(always)]

fn f16($a: f16) -> f16 {

$e

}

#[inline(always)]

fn f32($a: f32) -> f32 {

$e

}

#[inline(always)]

fn f64($a: f64) -> f64 {

$e

}

#[inline(always)]

fn u8(_: u8) -> u8 {

todo!("no unary function for u8")

}

#[inline(always)]

fn u32(_: u32) -> u32 {

todo!("no unary function for u32")

}

#[inline(always)]

fn i64(_: i64) -> i64 {

todo!("no unary function for i64")

}

#[cfg(feature = "mkl")]

const F32_VEC: bool = true;

#[cfg(feature = "mkl")]

const F64_VEC: bool = true;

#[cfg(feature = "mkl")]

#[inline(always)]

fn f32_vec(xs: &[f32], ys: &mut [f32]) {

crate::mkl::$f32_vec(xs, ys)

}

#[cfg(feature = "mkl")]

#[inline(always)]

fn f64_vec(xs: &[f64], ys: &mut [f64]) {

crate::mkl::$f64_vec(xs, ys)

}

#[cfg(feature = "accelerate")]

const F32_VEC: bool = true;

#[cfg(feature = "accelerate")]

const F64_VEC: bool = true;

#[cfg(feature = "accelerate")]

#[inline(always)]

fn f32_vec(xs: &[f32], ys: &mut [f32]) {

crate::accelerate::$f32_vec(xs, ys)

}

#[cfg(feature = "accelerate")]

#[inline(always)]

fn f64_vec(xs: &[f64], ys: &mut [f64]) {

crate::accelerate::$f64_vec(xs, ys)

}

}

};

}

unary_op!(Exp, "exp", v, v.exp(), vs_exp, vd_exp);

unary_op!(Log, "log", v, v.ln(), vs_ln, vd_ln);

unary_op!(Sin, "sin", v, v.sin(), vs_sin, vd_sin);

unary_op!(Cos, "cos", v, v.cos(), vs_cos, vd_cos);

unary_op!(Tanh, "tanh", v, v.tanh(), vs_tanh, vd_tanh);

unary_op!(Neg, "neg", v, -v);

unary_op!(Recip, "recip", v, v.recip());

unary_op!(Sqr, "sqr", v, v * v, vs_sqr, vd_sqr);

unary_op!(Sqrt, "sqrt", v, v.sqrt(), vs_sqrt, vd_sqrt);

// Hardcode the value for sqrt(2/pi)

// https://github.com/huggingface/candle/issues/1982

#[allow(clippy::excessive_precision)]

const SQRT_TWO_OVER_PI_F32: f32 = 0.79788456080286535587989211986876373;

#[allow(clippy::excessive_precision)]

const SQRT_TWO_OVER_PI_F64: f64 = 0.79788456080286535587989211986876373;

/// Tanh based approximation of the `gelu` operation

/// GeluErf is the more precise one.

/// <https://en.wikipedia.org/wiki/Activation_function#Comparison_of_activation_functions>

impl UnaryOpT for Gelu {

const NAME: &'static str = "gelu";

const V: Self = Gelu;

#[inline(always)]

fn bf16(v: bf16) -> bf16 {

bf16::from_f32_const(0.5)

* v

* (bf16::ONE

+ bf16::tanh(

bf16::from_f32_const(SQRT_TWO_OVER_PI_F32)

* v

* (bf16::ONE + bf16::from_f32_const(0.044715) * v * v),

))

}

#[inline(always)]

fn f16(v: f16) -> f16 {

f16::from_f32_const(0.5)

* v

* (f16::ONE

+ f16::tanh(

f16::from_f32_const(SQRT_TWO_OVER_PI_F32)

* v

* (f16::ONE + f16::from_f32_const(0.044715) * v * v),

))

}

#[inline(always)]

fn f32(v: f32) -> f32 {

0.5 * v * (1.0 + f32::tanh(SQRT_TWO_OVER_PI_F32 * v * (1.0 + 0.044715 * v * v)))

}

#[inline(always)]

fn f64(v: f64) -> f64 {

0.5 * v * (1.0 + f64::tanh(SQRT_TWO_OVER_PI_F64 * v * (1.0 + 0.044715 * v * v)))

}

#[inline(always)]

fn u8(_: u8) -> u8 {

0

}

#[inline(always)]

fn u32(_: u32) -> u32 {

0

}

#[inline(always)]

fn i64(_: i64) -> i64 {

0

}

const KERNEL: &'static str = "ugelu";

#[cfg(feature = "mkl")]

const F32_VEC: bool = true;

#[cfg(feature = "mkl")]

#[inline(always)]

fn f32_vec(xs: &[f32], ys: &mut [f32]) {

crate::mkl::vs_gelu(xs, ys)

}

#[cfg(feature = "mkl")]

const F64_VEC: bool = true;

#[cfg(feature = "mkl")]

#[inline(always)]

fn f64_vec(xs: &[f64], ys: &mut [f64]) {

crate::mkl::vd_gelu(xs, ys)

}

#[cfg(feature = "accelerate")]

const F32_VEC: bool = true;

#[cfg(feature = "accelerate")]

#[inline(always)]

fn f32_vec(xs: &[f32], ys: &mut [f32]) {

crate::accelerate::vs_gelu(xs, ys)

}

#[cfg(feature = "accelerate")]

const F64_VEC: bool = true;

#[cfg(feature = "accelerate")]

#[inline(always)]

fn f64_vec(xs: &[f64], ys: &mut [f64]) {

crate::accelerate::vd_gelu(xs, ys)

}

}

/// `erf` operation

/// <https://en.wikipedia.org/wiki/Error_function>

impl UnaryOpT for Erf {

const NAME: &'static str = "erf";

const KERNEL: &'static str = "uerf";

const V: Self = Erf;

#[inline(always)]

fn bf16(v: bf16) -> bf16 {

bf16::from_f64(Self::f64(v.to_f64()))

}

#[inline(always)]

fn f16(v: f16) -> f16 {

f16::from_f64(Self::f64(v.to_f64()))

}

#[inline(always)]

fn f32(v: f32) -> f32 {

Self::f64(v as f64) as f32

}

#[inline(always)]

fn f64(v: f64) -> f64 {

crate::cpu::erf::erf(v)

}

#[inline(always)]

fn u8(_: u8) -> u8 {

0

}

#[inline(always)]

fn u32(_: u32) -> u32 {

0

}

#[inline(always)]

fn i64(_: i64) -> i64 {

0

}

}

/// Silu operation

impl UnaryOpT for Silu {

const NAME: &'static str = "silu";

const V: Self = Silu;

#[inline(always)]

fn bf16(v: bf16) -> bf16 {

v / (bf16::ONE + (-v).exp())

}

#[inline(always)]

fn f16(v: f16) -> f16 {

v / (f16::ONE + (-v).exp())

}

#[inline(always)]

fn f32(v: f32) -> f32 {

v / (1.0 + (-v).exp())

}

#[inline(always)]

fn f64(v: f64) -> f64 {

v / (1.0 + (-v).exp())

}

#[inline(always)]

fn u8(_: u8) -> u8 {

0

}

#[inline(always)]

fn u32(_: u32) -> u32 {

0

}

#[inline(always)]

fn i64(_: i64) -> i64 {

0

}

const KERNEL: &'static str = "usilu";

#[cfg(feature = "mkl")]

const F32_VEC: bool = true;

#[cfg(feature = "mkl")]

#[inline(always)]

fn f32_vec(xs: &[f32], ys: &mut [f32]) {

crate::mkl::vs_silu(xs, ys)

}

#[cfg(feature = "mkl")]

const F64_VEC: bool = true;

#[cfg(feature = "mkl")]

#[inline(always)]

fn f64_vec(xs: &[f64], ys: &mut [f64]) {

crate::mkl::vd_silu(xs, ys)

}

#[cfg(feature = "accelerate")]

const F32_VEC: bool = true;

#[cfg(feature = "accelerate")]

#[inline(always)]

fn f32_vec(xs: &[f32], ys: &mut [f32]) {

crate::accelerate::vs_silu(xs, ys)

}

#[cfg(feature = "accelerate")]

const F64_VEC: bool = true;

#[cfg(feature = "accelerate")]

#[inline(always)]

fn f64_vec(xs: &[f64], ys: &mut [f64]) {

crate::accelerate::vd_silu(xs, ys)

}

}

impl UnaryOpT for Abs {

const NAME: &'static str = "abs";

const KERNEL: &'static str = "uabs";

const V: Self = Abs;

#[inline(always)]

fn bf16(v: bf16) -> bf16 {

v.abs()

}

#[inline(always)]

fn f16(v: f16) -> f16 {

v.abs()

}

#[inline(always)]

fn f32(v: f32) -> f32 {

v.abs()

}

#[inline(always)]

fn f64(v: f64) -> f64 {

v.abs()

}

#[inline(always)]

fn u8(v: u8) -> u8 {

v

}

#[inline(always)]

fn u32(v: u32) -> u32 {

v

}

#[inline(always)]

fn i64(v: i64) -> i64 {

v.abs()

}

}

impl UnaryOpT for Ceil {

const NAME: &'static str = "ceil";

const KERNEL: &'static str = "uceil";

const V: Self = Ceil;

#[inline(always)]

fn bf16(v: bf16) -> bf16 {

v.ceil()

}

#[inline(always)]

fn f16(v: f16) -> f16 {

v.ceil()

}

#[inline(always)]

fn f32(v: f32) -> f32 {

v.ceil()

}

#[inline(always)]

fn f64(v: f64) -> f64 {

v.ceil()

}

#[inline(always)]

fn u8(v: u8) -> u8 {

v

}

#[inline(always)]

fn u32(v: u32) -> u32 {

v

}

#[inline(always)]

fn i64(v: i64) -> i64 {

v

}

}

impl UnaryOpT for Floor {

const NAME: &'static str = "floor";

const KERNEL: &'static str = "ufloor";

const V: Self = Floor;

#[inline(always)]

fn bf16(v: bf16) -> bf16 {

v.floor()

}

#[inline(always)]

fn f16(v: f16) -> f16 {

v.floor()

}

#[inline(always)]

fn f32(v: f32) -> f32 {

v.floor()

}

#[inline(always)]

fn f64(v: f64) -> f64 {

v.floor()

}

#[inline(always)]

fn u8(v: u8) -> u8 {

v

}

#[inline(always)]

fn u32(v: u32) -> u32 {

v

}

#[inline(always)]

fn i64(v: i64) -> i64 {

v

}

}

impl UnaryOpT for Round {

const NAME: &'static str = "round";

const KERNEL: &'static str = "uround";

const V: Self = Round;

#[inline(always)]

fn bf16(v: bf16) -> bf16 {

v.round()

}

#[inline(always)]

fn f16(v: f16) -> f16 {

v.round()

}

#[inline(always)]

fn f32(v: f32) -> f32 {

v.round()

}

#[inline(always)]

fn f64(v: f64) -> f64 {

v.round()

}

#[inline(always)]

fn u8(v: u8) -> u8 {

v

}

#[inline(always)]

fn u32(v: u32) -> u32 {

v

}

#[inline(always)]

fn i64(v: i64) -> i64 {

v

}

}

impl UnaryOpT for GeluErf {

const NAME: &'static str = "gelu_erf";

const KERNEL: &'static str = "ugelu_erf";

const V: Self = GeluErf;

#[inline(always)]

fn bf16(v: bf16) -> bf16 {

bf16::from_f64(Self::f64(v.to_f64()))

}

#[inline(always)]

fn f16(v: f16) -> f16 {

f16::from_f64(Self::f64(v.to_f64()))

}

#[inline(always)]

fn f32(v: f32) -> f32 {

Self::f64(v as f64) as f32

}

#[inline(always)]

fn f64(v: f64) -> f64 {

(crate::cpu::erf::erf(v / 2f64.sqrt()) + 1.) * 0.5 * v

}

#[inline(always)]

fn u8(_: u8) -> u8 {

0

}

#[inline(always)]

fn u32(_: u32) -> u32 {

0

}

#[inline(always)]

fn i64(_: i64) -> i64 {

0

}

}

impl UnaryOpT for Relu {

const NAME: &'static str = "relu";

const KERNEL: &'static str = "urelu";

const V: Self = Relu;

#[inline(always)]

fn bf16(v: bf16) -> bf16 {

v.max(bf16::ZERO)

}

#[inline(always)]

fn f16(v: f16) -> f16 {

v.max(f16::ZERO)

}

#[inline(always)]

fn f32(v: f32) -> f32 {

v.max(0f32)

}

#[inline(always)]

fn f64(v: f64) -> f64 {

v.max(0f64)

}

#[inline(always)]

fn u8(v: u8) -> u8 {

v

}

#[inline(always)]

fn u32(v: u32) -> u32 {

v

}

#[inline(always)]

fn i64(v: i64) -> i64 {

v

}

}

/// `BackpropOp` is a wrapper around `Option<Op>`. The main goal is to ensure that dependencies are

/// properly checked when creating a new value

#[derive(Clone)]

pub struct BackpropOp(Option<Op>);

impl BackpropOp {

pub(crate) fn none() -> Self {

BackpropOp(None)

}

pub(crate) fn new1(arg: &Tensor, f: impl Fn(Tensor) -> Op) -> Self {

let op = if arg.track_op() {

Some(f(arg.clone()))

} else {

None

};

Self(op)

}

pub(crate) fn new2(arg1: &Tensor, arg2: &Tensor, f: impl Fn(Tensor, Tensor) -> Op) -> Self {

let op = if arg1.track_op() || arg2.track_op() {

Some(f(arg1.clone(), arg2.clone()))

} else {

None

};

Self(op)

}

pub(crate) fn new3(

arg1: &Tensor,

arg2: &Tensor,

arg3: &Tensor,

f: impl Fn(Tensor, Tensor, Tensor) -> Op,

) -> Self {

let op = if arg1.track_op() || arg2.track_op() || arg3.track_op() {

Some(f(arg1.clone(), arg2.clone(), arg3.clone()))

} else {

None

};

Self(op)

}

pub(crate) fn new<A: AsRef<Tensor>>(args: &[A], f: impl Fn(Vec<Tensor>) -> Op) -> Self {

let op = if args.iter().any(|arg| arg.as_ref().track_op()) {

let args: Vec<Tensor> = args.iter().map(|arg| arg.as_ref().clone()).collect();

Some(f(args))

} else {

None

};

Self(op)

}

pub(crate) fn is_none(&self) -> bool {

self.0.is_none()

}

}

impl std::ops::Deref for BackpropOp {

type Target = Option<Op>;

fn deref(&self) -> &Self::Target {

&self.0

}

}

impl UnaryOpT for Sign {

const NAME: &'static str = "sign";

const KERNEL: &'static str = "usign";

const V: Self = Sign;

#[inline(always)]

fn bf16(v: bf16) -> bf16 {

bf16::from((v > bf16::ZERO) as i8) - bf16::from((v < bf16::ZERO) as i8)

}

#[inline(always)]

fn f16(v: f16) -> f16 {

f16::from((v > f16::ZERO) as i8) - f16::from((v < f16::ZERO) as i8)

}

#[inline(always)]

fn f32(v: f32) -> f32 {

f32::from(v > 0.) - f32::from(v < 0.)

}

#[inline(always)]

fn f64(v: f64) -> f64 {

f64::from(v > 0.) - f64::from(v < 0.)

}

#[inline(always)]

fn u8(v: u8) -> u8 {

u8::min(1, v)

}

#[inline(always)]

fn u32(v: u32) -> u32 {

u32::min(1, v)

}

#[inline(always)]

fn i64(v: i64) -> i64 {

(v > 0) as i64 - (v < 0) as i64

}

}

| 9 |

0 | hf_public_repos/autotrain-advanced/configs | hf_public_repos/autotrain-advanced/configs/sentence_transformers/pair_class.yml | task: sentence-transformers:pair_class

base_model: google-bert/bert-base-uncased

project_name: autotrain-st-pair-class

log: tensorboard

backend: local

data:

path: sentence-transformers/all-nli

train_split: pair-class:train

valid_split: pair-class:test

column_mapping:

sentence1_column: premise

sentence2_column: hypothesis

target_column: label

params:

max_seq_length: 512

epochs: 5

batch_size: 8

lr: 2e-5

optimizer: adamw_torch

scheduler: linear

gradient_accumulation: 1

mixed_precision: fp16

hub:

username: ${HF_USERNAME}

token: ${HF_TOKEN}

push_to_hub: true | 0 |

0 | hf_public_repos/autotrain-advanced/configs | hf_public_repos/autotrain-advanced/configs/sentence_transformers/triplet.yml | task: sentence-transformers:triplet

base_model: microsoft/mpnet-base

project_name: autotrain-st-triplet

log: tensorboard

backend: local

data:

path: sentence-transformers/all-nli

train_split: triplet:train

valid_split: triplet:dev

column_mapping:

sentence1_column: anchor

sentence2_column: positive

sentence3_column: negative

params:

max_seq_length: 512

epochs: 5

batch_size: 8

lr: 2e-5

optimizer: adamw_torch

scheduler: linear

gradient_accumulation: 1

mixed_precision: fp16

hub:

username: ${HF_USERNAME}

token: ${HF_TOKEN}

push_to_hub: true | 1 |

0 | hf_public_repos/autotrain-advanced/configs | hf_public_repos/autotrain-advanced/configs/vlm/paligemma_vqa.yml | task: vlm:vqa

base_model: google/paligemma-3b-pt-224

project_name: autotrain-paligemma-finetuned-vqa

log: tensorboard

backend: local

data:

path: abhishek/vqa_small

train_split: train

valid_split: validation

column_mapping:

image_column: image

text_column: multiple_choice_answer

prompt_text_column: question

params:

epochs: 3

batch_size: 2

lr: 2e-5

optimizer: adamw_torch

scheduler: linear

gradient_accumulation: 4

mixed_precision: fp16

peft: true

quantization: int4

hub:

username: ${HF_USERNAME}

token: ${HF_TOKEN}

push_to_hub: true | 2 |

0 | hf_public_repos/autotrain-advanced/configs | hf_public_repos/autotrain-advanced/configs/image_classification/local.yml | task: image_classification

base_model: google/vit-base-patch16-224

project_name: autotrain-image-classification-model

log: tensorboard

backend: local

data:

path: data/

train_split: train # this folder inside data/ will be used for training, it contains the images in subfolders.

valid_split: null

column_mapping:

image_column: image

target_column: label

params:

epochs: 2

batch_size: 4

lr: 2e-5

optimizer: adamw_torch

scheduler: linear

gradient_accumulation: 1

mixed_precision: fp16

hub:

username: ${HF_USERNAME}

token: ${HF_TOKEN}

push_to_hub: true | 3 |

0 | hf_public_repos/autotrain-advanced/configs | hf_public_repos/autotrain-advanced/configs/image_classification/hub_dataset.yml | task: image_classification

base_model: google/vit-base-patch16-224

project_name: autotrain-cats-vs-dogs-finetuned

log: tensorboard

backend: local

data:

path: cats_vs_dogs

train_split: train

valid_split: null

column_mapping:

image_column: image

target_column: labels

params:

epochs: 2

batch_size: 4

lr: 2e-5

optimizer: adamw_torch

scheduler: linear

gradient_accumulation: 1

mixed_precision: fp16

hub:

username: ${HF_USERNAME}

token: ${HF_TOKEN}

push_to_hub: true | 4 |

0 | hf_public_repos/autotrain-advanced/configs | hf_public_repos/autotrain-advanced/configs/seq2seq/local.yml | task: seq2seq

base_model: google/flan-t5-base

project_name: autotrain-seq2seq-local

log: tensorboard

backend: local

data:

path: path/to/your/dataset csv/jsonl files

train_split: train

valid_split: test

column_mapping:

text_column: text

target_column: target

params:

max_seq_length: 512

epochs: 3

batch_size: 4

lr: 2e-5

optimizer: adamw_torch

scheduler: linear

gradient_accumulation: 1

mixed_precision: none

hub:

username: ${HF_USERNAME}

token: ${HF_TOKEN}

push_to_hub: true | 5 |

0 | hf_public_repos/autotrain-advanced/configs | hf_public_repos/autotrain-advanced/configs/seq2seq/hub_dataset.yml | task: seq2seq

base_model: google/flan-t5-base

project_name: autotrain-seq2seq-hub-dataset

log: tensorboard

backend: local

data:

path: samsum

train_split: train

valid_split: test

column_mapping:

text_column: dialogue

target_column: summary

params:

max_seq_length: 512

epochs: 3

batch_size: 4

lr: 2e-5

optimizer: adamw_torch

scheduler: linear

gradient_accumulation: 1

mixed_precision: none

hub:

username: ${HF_USERNAME}

token: ${HF_TOKEN}

push_to_hub: true | 6 |

0 | hf_public_repos/autotrain-advanced/configs | hf_public_repos/autotrain-advanced/configs/image_scoring/local.yml | task: image_regression

base_model: google/vit-base-patch16-224

project_name: autotrain-image-regression-model

log: tensorboard

backend: local

data:

path: data/

train_split: train # this folder inside data/ will be used for training, it contains the images and metadata.jsonl

valid_split: valid # this folder inside data/ will be used for validation, it contains the images and metadata.jsonl. can be set to null

# column mapping should not be changed for local datasets

column_mapping:

image_column: image

target_column: target

params:

epochs: 2

batch_size: 4

lr: 2e-5

optimizer: adamw_torch

scheduler: linear

gradient_accumulation: 1

mixed_precision: fp16

hub:

username: ${HF_USERNAME}

token: ${HF_TOKEN}

push_to_hub: true | 7 |

0 | hf_public_repos/autotrain-advanced/configs | hf_public_repos/autotrain-advanced/configs/image_scoring/hub_dataset.yml | task: image_regression

base_model: google/vit-base-patch16-224

project_name: autotrain-cats-vs-dogs-finetuned

log: tensorboard

backend: local

data:

path: cats_vs_dogs

train_split: train

valid_split: null

column_mapping:

image_column: image

target_column: labels

params:

epochs: 2

batch_size: 4

lr: 2e-5

optimizer: adamw_torch

scheduler: linear

gradient_accumulation: 1

mixed_precision: fp16

hub:

username: ${HF_USERNAME}

token: ${HF_TOKEN}

push_to_hub: true | 8 |

0 | hf_public_repos/autotrain-advanced/configs | hf_public_repos/autotrain-advanced/configs/image_scoring/image_quality.yml | task: image_regression

base_model: microsoft/resnet-50

project_name: autotrain-img-quality-resnet50

log: tensorboard

backend: local

data:

path: abhishek/img-quality-full

train_split: train

valid_split: null

column_mapping:

image_column: image

target_column: target

params:

epochs: 10

batch_size: 8

lr: 2e-3

optimizer: adamw_torch

scheduler: cosine

gradient_accumulation: 1

mixed_precision: fp16

hub:

username: ${HF_USERNAME}

token: ${HF_TOKEN}

push_to_hub: true | 9 |

0 | hf_public_repos | hf_public_repos/blog/chatbot-amd-gpu.md | ---

title: "Run a ChatGPT-like Chatbot on a Single GPU with ROCm"

thumbnail: /blog/assets/chatbot-amd-gpu/thumbnail.png

authors:

- user: andyll7772

guest: true

---

# Run a ChatGPT-like Chatbot on a Single GPU with ROCm

## Introduction

ChatGPT, OpenAI's groundbreaking language model, has become an

influential force in the realm of artificial intelligence, paving the

way for a multitude of AI applications across diverse sectors. With its

staggering ability to comprehend and generate human-like text, ChatGPT

has transformed industries, from customer support to creative writing,

and has even served as an invaluable research tool.

Various efforts have produced open-source large language models that
demonstrate strong capabilities at smaller sizes, such as

[OPT](https://huggingface.co/docs/transformers/model_doc/opt),

[LLAMA](https://github.com/facebookresearch/llama),

[Alpaca](https://github.com/tatsu-lab/stanford_alpaca) and

[Vicuna](https://github.com/lm-sys/FastChat).

In this blog post, we take a closer look at Vicuna and explain how to
run the Vicuna 13B model on a single AMD GPU with ROCm.

**What is Vicuna?**

Vicuna is an open-source chatbot with 13 billion parameters, developed

by a team from UC Berkeley, CMU, Stanford, and UC San Diego. To create

Vicuna, a LLAMA base model was fine-tuned using about 70K user-shared

conversations collected from ShareGPT.com via public APIs. According to

initial assessments where GPT-4 is used as a reference, Vicuna-13B has

achieved over 90%\* quality compared to OpenAI ChatGPT.

<p align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/chatbot-amd-gpu/01.png" style="width: 60%; height: auto;">

</p>

Vicuna was released on [GitHub](https://github.com/lm-sys/FastChat) on
April 11, just a few weeks ago. Notably, its dataset, training code,
evaluation metrics, and training cost are all publicly known. The total
training cost was only around \$300, making it a cost-effective solution
for the general public.

For more details about Vicuna, please check out

<https://vicuna.lmsys.org>.

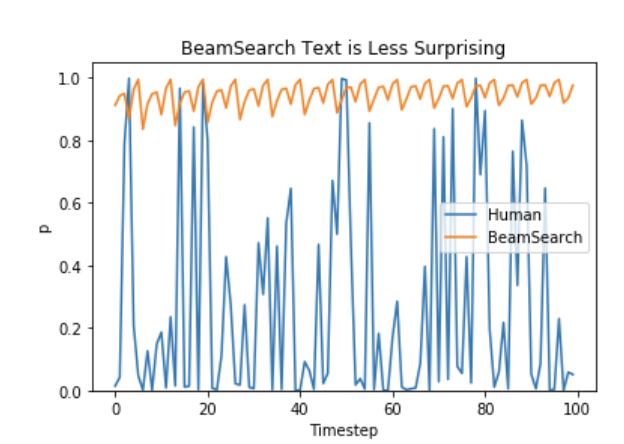

**Why do we need a quantized GPT model?**

Running the Vicuna-13B model in fp16 requires around 28 GB of GPU RAM.
To reduce the memory footprint further, optimization techniques are
required. A recent research paper, GPTQ, proposes accurate post-training
quantization for GPT models at lower bit precision. As illustrated
below, for models with more than 10B parameters, 4-bit or 3-bit GPTQ can
achieve accuracy comparable to fp16.

<p align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/chatbot-amd-gpu/02.png" style="width: 70%; height: auto;">

</p>
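The 28 GB figure is consistent with a back-of-the-envelope estimate: 13 billion parameters at 2 bytes each come to about 26 GB for the weights alone, before activations and the attention cache are counted. The sketch below works through that arithmetic for fp16, 4-bit, and 3-bit weights. The function name `weight_memory_gb` is illustrative, and the estimate deliberately ignores quantization metadata (scales and zero points), which adds some overhead in practice.

```python
def weight_memory_gb(n_params: float, bits_per_param: float) -> float:
    """Approximate memory needed for the model weights only, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


N_PARAMS = 13e9  # Vicuna-13B parameter count

# Compare weight storage at the precisions discussed in the text.
for label, bits in [("fp16", 16), ("4-bit", 4), ("3-bit", 3)]:
    print(f"{label:>5}: {weight_memory_gb(N_PARAMS, bits):.1f} GB")
```

Under these assumptions, moving from fp16 to 4-bit weights cuts the weight storage by a factor of four, which is what brings a 13B model within reach of a single consumer or workstation GPU.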

Moreover, the large parameter count of these models severely hurts GPT
latency, because GPT token generation is limited more by memory
bandwidth (GB/s) than by computation (TFLOPs or TOPs). For this reason,
a quantized model, which moves fewer bytes per weight, does not degrade
token generation latency when the GPU is memory-bound.
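To make the memory-bound argument concrete, here is a rough sketch that estimates a lower bound on per-token latency, assuming every weight is read from GPU memory once per generated token. The bandwidth value is a hypothetical placeholder rather than a measurement of any particular GPU, and the model ignores dequantization cost, attention-cache reads, and kernel efficiency, so real latencies will be higher.

```python
def min_ms_per_token(n_params: float, bits_per_param: float, bandwidth_gb_s: float) -> float:
    """Lower bound on per-token latency (ms) when weight reads are the bottleneck."""
    weight_bytes = n_params * bits_per_param / 8
    seconds = weight_bytes / (bandwidth_gb_s * 1e9)
    return seconds * 1e3


N_PARAMS = 13e9          # Vicuna-13B parameter count
BANDWIDTH_GB_S = 1000.0  # hypothetical memory bandwidth; substitute your GPU's figure

for label, bits in [("fp16", 16), ("4-bit", 4)]:
    ms = min_ms_per_token(N_PARAMS, bits, BANDWIDTH_GB_S)
    print(f"{label:>5}: at least {ms:.1f} ms per token")
```

In this simplified model the bound scales linearly with bits per weight, which is why reducing precision does not have to cost latency in the memory-bound regime.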

For details, refer to [the GPTQ quantization paper](<https://arxiv.org/abs/2210.17323>) and its [GitHub repo](<https://github.com/IST-DASLab/gptq>).

By leveraging this technique, several 4-bit quantized Vicuna models are
available on Hugging Face, as shown below:

<p align="center">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/chatbot-amd-gpu/03.png" style="width: 50%; height: auto;">

</p>

## Running Vicuna 13B Model on AMD GPU with ROCm

To run the Vicuna 13B model on an AMD GPU, we need to leverage the power

of ROCm (Radeon Open Compute), an open-source software platform that

provides AMD GPU acceleration for deep learning and high-performance

computing applications.

Here's a step-by-step guide on how to set up and run the Vicuna 13B

model on an AMD GPU with ROCm:

**System Requirements**