index

int64 0

0

| repo_id

stringclasses 179

values | file_path

stringlengths 26

186

| content

stringlengths 1

2.1M

| __index_level_0__

int64 0

9

|

|---|---|---|---|---|

0 | hf_public_repos/accelerate/src/accelerate/commands | hf_public_repos/accelerate/src/accelerate/commands/config/update.py | #!/usr/bin/env python

# Copyright 2022 The HuggingFace Team. All rights reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

from pathlib import Path

from .config_args import default_config_file, load_config_from_file

from .config_utils import SubcommandHelpFormatter

description = "Update an existing config file with the latest defaults while maintaining the old configuration."

def update_config(args):

"""

Update an existing config file with the latest defaults while maintaining the old configuration.

"""

config_file = args.config_file

if config_file is None and Path(default_config_file).exists():

config_file = default_config_file

elif not Path(config_file).exists():

raise ValueError(f"The passed config file located at {config_file} doesn't exist.")

config = load_config_from_file(config_file)

if config_file.endswith(".json"):

config.to_json_file(config_file)

else:

config.to_yaml_file(config_file)

return config_file

def update_command_parser(parser, parents):

parser = parser.add_parser("update", parents=parents, help=description, formatter_class=SubcommandHelpFormatter)

parser.add_argument(

"--config_file",

default=None,

help=(

"The path to the config file to update. Will default to a file named default_config.yaml in the cache "

"location, which is the content of the environment `HF_HOME` suffixed with 'accelerate', or if you don't have "

"such an environment variable, your cache directory ('~/.cache' or the content of `XDG_CACHE_HOME`) suffixed "

"with 'huggingface'."

),

)

parser.set_defaults(func=update_config_command)

return parser

def update_config_command(args):

config_file = update_config(args)

print(f"Sucessfully updated the configuration file at {config_file}.")

| 0 |

0 | hf_public_repos/accelerate/src/accelerate/commands | hf_public_repos/accelerate/src/accelerate/commands/config/sagemaker.py | #!/usr/bin/env python

# Copyright 2021 The HuggingFace Team. All rights reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

import json

import os

from ...utils.constants import SAGEMAKER_PARALLEL_EC2_INSTANCES, TORCH_DYNAMO_MODES

from ...utils.dataclasses import ComputeEnvironment, SageMakerDistributedType

from ...utils.imports import is_boto3_available

from .config_args import SageMakerConfig

from .config_utils import (

DYNAMO_BACKENDS,

_ask_field,

_ask_options,

_convert_dynamo_backend,

_convert_mixed_precision,

_convert_sagemaker_distributed_mode,

_convert_yes_no_to_bool,

)

if is_boto3_available():

import boto3 # noqa: F401

def _create_iam_role_for_sagemaker(role_name):

iam_client = boto3.client("iam")

sagemaker_trust_policy = {

"Version": "2012-10-17",

"Statement": [

{"Effect": "Allow", "Principal": {"Service": "sagemaker.amazonaws.com"}, "Action": "sts:AssumeRole"}

],

}

try:

# create the role, associated with the chosen trust policy

iam_client.create_role(

RoleName=role_name, AssumeRolePolicyDocument=json.dumps(sagemaker_trust_policy, indent=2)

)

policy_document = {

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"sagemaker:*",

"ecr:GetDownloadUrlForLayer",

"ecr:BatchGetImage",

"ecr:BatchCheckLayerAvailability",

"ecr:GetAuthorizationToken",

"cloudwatch:PutMetricData",

"cloudwatch:GetMetricData",

"cloudwatch:GetMetricStatistics",

"cloudwatch:ListMetrics",

"logs:CreateLogGroup",

"logs:CreateLogStream",

"logs:DescribeLogStreams",

"logs:PutLogEvents",

"logs:GetLogEvents",

"s3:CreateBucket",

"s3:ListBucket",

"s3:GetBucketLocation",

"s3:GetObject",

"s3:PutObject",

],

"Resource": "*",

}

],

}

# attach policy to role

iam_client.put_role_policy(

RoleName=role_name,

PolicyName=f"{role_name}_policy_permission",

PolicyDocument=json.dumps(policy_document, indent=2),

)

except iam_client.exceptions.EntityAlreadyExistsException:

print(f"role {role_name} already exists. Using existing one")

def _get_iam_role_arn(role_name):

iam_client = boto3.client("iam")

return iam_client.get_role(RoleName=role_name)["Role"]["Arn"]

def get_sagemaker_input():

credentials_configuration = _ask_options(

"How do you want to authorize?",

["AWS Profile", "Credentials (AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY) "],

int,

)

aws_profile = None

if credentials_configuration == 0:

aws_profile = _ask_field("Enter your AWS Profile name: [default] ", default="default")

os.environ["AWS_PROFILE"] = aws_profile

else:

print(

"Note you will need to provide AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY when you launch you training script with,"

"`accelerate launch --aws_access_key_id XXX --aws_secret_access_key YYY`"

)

aws_access_key_id = _ask_field("AWS Access Key ID: ")

os.environ["AWS_ACCESS_KEY_ID"] = aws_access_key_id

aws_secret_access_key = _ask_field("AWS Secret Access Key: ")

os.environ["AWS_SECRET_ACCESS_KEY"] = aws_secret_access_key

aws_region = _ask_field("Enter your AWS Region: [us-east-1]", default="us-east-1")

os.environ["AWS_DEFAULT_REGION"] = aws_region

role_management = _ask_options(

"Do you already have an IAM Role for executing Amazon SageMaker Training Jobs?",

["Provide IAM Role name", "Create new IAM role using credentials"],

int,

)

if role_management == 0:

iam_role_name = _ask_field("Enter your IAM role name: ")

else:

iam_role_name = "accelerate_sagemaker_execution_role"

print(f'Accelerate will create an iam role "{iam_role_name}" using the provided credentials')

_create_iam_role_for_sagemaker(iam_role_name)

is_custom_docker_image = _ask_field(

"Do you want to use custom Docker image? [yes/NO]: ",

_convert_yes_no_to_bool,

default=False,

error_message="Please enter yes or no.",

)

docker_image = None

if is_custom_docker_image:

docker_image = _ask_field("Enter your Docker image: ", lambda x: str(x).lower())

is_sagemaker_inputs_enabled = _ask_field(

"Do you want to provide SageMaker input channels with data locations? [yes/NO]: ",

_convert_yes_no_to_bool,

default=False,

error_message="Please enter yes or no.",

)

sagemaker_inputs_file = None

if is_sagemaker_inputs_enabled:

sagemaker_inputs_file = _ask_field(

"Enter the path to the SageMaker inputs TSV file with columns (channel_name, data_location): ",

lambda x: str(x).lower(),

)

is_sagemaker_metrics_enabled = _ask_field(

"Do you want to enable SageMaker metrics? [yes/NO]: ",

_convert_yes_no_to_bool,

default=False,

error_message="Please enter yes or no.",

)

sagemaker_metrics_file = None

if is_sagemaker_metrics_enabled:

sagemaker_metrics_file = _ask_field(

"Enter the path to the SageMaker metrics TSV file with columns (metric_name, metric_regex): ",

lambda x: str(x).lower(),

)

distributed_type = _ask_options(

"What is the distributed mode?",

["No distributed training", "Data parallelism"],

_convert_sagemaker_distributed_mode,

)

dynamo_config = {}

use_dynamo = _ask_field(

"Do you wish to optimize your script with torch dynamo?[yes/NO]:",

_convert_yes_no_to_bool,

default=False,

error_message="Please enter yes or no.",

)

if use_dynamo:

prefix = "dynamo_"

dynamo_config[prefix + "backend"] = _ask_options(

"Which dynamo backend would you like to use?",

[x.lower() for x in DYNAMO_BACKENDS],

_convert_dynamo_backend,

default=2,

)

use_custom_options = _ask_field(

"Do you want to customize the defaults sent to torch.compile? [yes/NO]: ",

_convert_yes_no_to_bool,

default=False,

error_message="Please enter yes or no.",

)

if use_custom_options:

dynamo_config[prefix + "mode"] = _ask_options(

"Which mode do you want to use?",

TORCH_DYNAMO_MODES,

lambda x: TORCH_DYNAMO_MODES[int(x)],

default="default",

)

dynamo_config[prefix + "use_fullgraph"] = _ask_field(

"Do you want the fullgraph mode or it is ok to break model into several subgraphs? [yes/NO]: ",

_convert_yes_no_to_bool,

default=False,

error_message="Please enter yes or no.",

)

dynamo_config[prefix + "use_dynamic"] = _ask_field(

"Do you want to enable dynamic shape tracing? [yes/NO]: ",

_convert_yes_no_to_bool,

default=False,

error_message="Please enter yes or no.",

)

ec2_instance_query = "Which EC2 instance type you want to use for your training?"

if distributed_type != SageMakerDistributedType.NO:

ec2_instance_type = _ask_options(

ec2_instance_query, SAGEMAKER_PARALLEL_EC2_INSTANCES, lambda x: SAGEMAKER_PARALLEL_EC2_INSTANCES[int(x)]

)

else:

ec2_instance_query += "? [ml.p3.2xlarge]:"

ec2_instance_type = _ask_field(ec2_instance_query, lambda x: str(x).lower(), default="ml.p3.2xlarge")

debug = False

if distributed_type != SageMakerDistributedType.NO:

debug = _ask_field(

"Should distributed operations be checked while running for errors? This can avoid timeout issues but will be slower. [yes/NO]: ",

_convert_yes_no_to_bool,

default=False,

error_message="Please enter yes or no.",

)

num_machines = 1

if distributed_type in (SageMakerDistributedType.DATA_PARALLEL, SageMakerDistributedType.MODEL_PARALLEL):

num_machines = _ask_field(

"How many machines do you want use? [1]: ",

int,

default=1,

)

mixed_precision = _ask_options(

"Do you wish to use FP16 or BF16 (mixed precision)?",

["no", "fp16", "bf16", "fp8"],

_convert_mixed_precision,

)

if use_dynamo and mixed_precision == "no":

print(

"Torch dynamo used without mixed precision requires TF32 to be efficient. Accelerate will enable it by default when launching your scripts."

)

return SageMakerConfig(

image_uri=docker_image,

compute_environment=ComputeEnvironment.AMAZON_SAGEMAKER,

distributed_type=distributed_type,

use_cpu=False,

dynamo_config=dynamo_config,

ec2_instance_type=ec2_instance_type,

profile=aws_profile,

region=aws_region,

iam_role_name=iam_role_name,

mixed_precision=mixed_precision,

num_machines=num_machines,

sagemaker_inputs_file=sagemaker_inputs_file,

sagemaker_metrics_file=sagemaker_metrics_file,

debug=debug,

)

| 1 |

0 | hf_public_repos/accelerate/src/accelerate/commands | hf_public_repos/accelerate/src/accelerate/commands/config/default.py | #!/usr/bin/env python

# Copyright 2021 The HuggingFace Team. All rights reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

from pathlib import Path

import torch

from ...utils import is_mlu_available, is_musa_available, is_npu_available, is_xpu_available

from .config_args import ClusterConfig, default_json_config_file

from .config_utils import SubcommandHelpFormatter

description = "Create a default config file for Accelerate with only a few flags set."

def write_basic_config(mixed_precision="no", save_location: str = default_json_config_file, use_xpu: bool = False):

"""

Creates and saves a basic cluster config to be used on a local machine with potentially multiple GPUs. Will also

set CPU if it is a CPU-only machine.

Args:

mixed_precision (`str`, *optional*, defaults to "no"):

Mixed Precision to use. Should be one of "no", "fp16", or "bf16"

save_location (`str`, *optional*, defaults to `default_json_config_file`):

Optional custom save location. Should be passed to `--config_file` when using `accelerate launch`. Default

location is inside the huggingface cache folder (`~/.cache/huggingface`) but can be overriden by setting

the `HF_HOME` environmental variable, followed by `accelerate/default_config.yaml`.

use_xpu (`bool`, *optional*, defaults to `False`):

Whether to use XPU if available.

"""

path = Path(save_location)

path.parent.mkdir(parents=True, exist_ok=True)

if path.exists():

print(

f"Configuration already exists at {save_location}, will not override. Run `accelerate config` manually or pass a different `save_location`."

)

return False

mixed_precision = mixed_precision.lower()

if mixed_precision not in ["no", "fp16", "bf16", "fp8"]:

raise ValueError(

f"`mixed_precision` should be one of 'no', 'fp16', 'bf16', or 'fp8'. Received {mixed_precision}"

)

config = {

"compute_environment": "LOCAL_MACHINE",

"mixed_precision": mixed_precision,

}

if is_mlu_available():

num_mlus = torch.mlu.device_count()

config["num_processes"] = num_mlus

config["use_cpu"] = False

if num_mlus > 1:

config["distributed_type"] = "MULTI_MLU"

else:

config["distributed_type"] = "NO"

elif is_musa_available():

num_musas = torch.musa.device_count()

config["num_processes"] = num_musas

config["use_cpu"] = False

if num_musas > 1:

config["distributed_type"] = "MULTI_MUSA"

else:

config["distributed_type"] = "NO"

elif torch.cuda.is_available():

num_gpus = torch.cuda.device_count()

config["num_processes"] = num_gpus

config["use_cpu"] = False

if num_gpus > 1:

config["distributed_type"] = "MULTI_GPU"

else:

config["distributed_type"] = "NO"

elif is_xpu_available() and use_xpu:

num_xpus = torch.xpu.device_count()

config["num_processes"] = num_xpus

config["use_cpu"] = False

if num_xpus > 1:

config["distributed_type"] = "MULTI_XPU"

else:

config["distributed_type"] = "NO"

elif is_npu_available():

num_npus = torch.npu.device_count()

config["num_processes"] = num_npus

config["use_cpu"] = False

if num_npus > 1:

config["distributed_type"] = "MULTI_NPU"

else:

config["distributed_type"] = "NO"

else:

num_xpus = 0

config["use_cpu"] = True

config["num_processes"] = 1

config["distributed_type"] = "NO"

config["debug"] = False

config["enable_cpu_affinity"] = False

config = ClusterConfig(**config)

config.to_json_file(path)

return path

def default_command_parser(parser, parents):

parser = parser.add_parser("default", parents=parents, help=description, formatter_class=SubcommandHelpFormatter)

parser.add_argument(

"--config_file",

default=default_json_config_file,

help=(

"The path to use to store the config file. Will default to a file named default_config.yaml in the cache "

"location, which is the content of the environment `HF_HOME` suffixed with 'accelerate', or if you don't have "

"such an environment variable, your cache directory ('~/.cache' or the content of `XDG_CACHE_HOME`) suffixed "

"with 'huggingface'."

),

dest="save_location",

)

parser.add_argument(

"--mixed_precision",

choices=["no", "fp16", "bf16"],

type=str,

help="Whether or not to use mixed precision training. "

"Choose between FP16 and BF16 (bfloat16) training. "

"BF16 training is only supported on Nvidia Ampere GPUs and PyTorch 1.10 or later.",

default="no",

)

parser.set_defaults(func=default_config_command)

return parser

def default_config_command(args):

config_file = write_basic_config(args.mixed_precision, args.save_location)

if config_file:

print(f"accelerate configuration saved at {config_file}")

| 2 |

0 | hf_public_repos/accelerate/src/accelerate/commands | hf_public_repos/accelerate/src/accelerate/commands/config/__init__.py | #!/usr/bin/env python

# Copyright 2021 The HuggingFace Team. All rights reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

import argparse

from .config import config_command_parser

from .config_args import default_config_file, load_config_from_file # noqa: F401

from .default import default_command_parser

from .update import update_command_parser

def get_config_parser(subparsers=None):

parent_parser = argparse.ArgumentParser(add_help=False, allow_abbrev=False)

# The main config parser

config_parser = config_command_parser(subparsers)

# The subparser to add commands to

subcommands = config_parser.add_subparsers(title="subcommands", dest="subcommand")

# Then add other parsers with the parent parser

default_command_parser(subcommands, parents=[parent_parser])

update_command_parser(subcommands, parents=[parent_parser])

return config_parser

def main():

config_parser = get_config_parser()

args = config_parser.parse_args()

if not hasattr(args, "func"):

config_parser.print_help()

exit(1)

# Run

args.func(args)

if __name__ == "__main__":

main()

| 3 |

0 | hf_public_repos/accelerate/src/accelerate/commands | hf_public_repos/accelerate/src/accelerate/commands/config/config_utils.py | #!/usr/bin/env python

# Copyright 2021 The HuggingFace Team. All rights reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

import argparse

from ...utils.dataclasses import (

ComputeEnvironment,

DistributedType,

DynamoBackend,

FP8BackendType,

PrecisionType,

SageMakerDistributedType,

)

from ..menu import BulletMenu

DYNAMO_BACKENDS = [

"EAGER",

"AOT_EAGER",

"INDUCTOR",

"AOT_TS_NVFUSER",

"NVPRIMS_NVFUSER",

"CUDAGRAPHS",

"OFI",

"FX2TRT",

"ONNXRT",

"TENSORRT",

"AOT_TORCHXLA_TRACE_ONCE",

"TORHCHXLA_TRACE_ONCE",

"IPEX",

"TVM",

]

def _ask_field(input_text, convert_value=None, default=None, error_message=None):

ask_again = True

while ask_again:

result = input(input_text)

try:

if default is not None and len(result) == 0:

return default

return convert_value(result) if convert_value is not None else result

except Exception:

if error_message is not None:

print(error_message)

def _ask_options(input_text, options=[], convert_value=None, default=0):

menu = BulletMenu(input_text, options)

result = menu.run(default_choice=default)

return convert_value(result) if convert_value is not None else result

def _convert_compute_environment(value):

value = int(value)

return ComputeEnvironment(["LOCAL_MACHINE", "AMAZON_SAGEMAKER"][value])

def _convert_distributed_mode(value):

value = int(value)

return DistributedType(

["NO", "MULTI_CPU", "MULTI_XPU", "MULTI_GPU", "MULTI_NPU", "MULTI_MLU", "MULTI_MUSA", "XLA"][value]

)

def _convert_dynamo_backend(value):

value = int(value)

return DynamoBackend(DYNAMO_BACKENDS[value]).value

def _convert_mixed_precision(value):

value = int(value)

return PrecisionType(["no", "fp16", "bf16", "fp8"][value])

def _convert_sagemaker_distributed_mode(value):

value = int(value)

return SageMakerDistributedType(["NO", "DATA_PARALLEL", "MODEL_PARALLEL"][value])

def _convert_fp8_backend(value):

value = int(value)

return FP8BackendType(["TE", "MSAMP"][value])

def _convert_yes_no_to_bool(value):

return {"yes": True, "no": False}[value.lower()]

class SubcommandHelpFormatter(argparse.RawDescriptionHelpFormatter):

"""

A custom formatter that will remove the usage line from the help message for subcommands.

"""

def _format_usage(self, usage, actions, groups, prefix):

usage = super()._format_usage(usage, actions, groups, prefix)

usage = usage.replace("<command> [<args>] ", "")

return usage

| 4 |

0 | hf_public_repos/accelerate/src/accelerate/commands | hf_public_repos/accelerate/src/accelerate/commands/config/config_args.py | #!/usr/bin/env python

# Copyright 2021 The HuggingFace Team. All rights reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

import json

import os

from dataclasses import dataclass

from enum import Enum

from typing import List, Optional, Union

import yaml

from ...utils import ComputeEnvironment, DistributedType, SageMakerDistributedType

from ...utils.constants import SAGEMAKER_PYTHON_VERSION, SAGEMAKER_PYTORCH_VERSION, SAGEMAKER_TRANSFORMERS_VERSION

hf_cache_home = os.path.expanduser(

os.environ.get("HF_HOME", os.path.join(os.environ.get("XDG_CACHE_HOME", "~/.cache"), "huggingface"))

)

cache_dir = os.path.join(hf_cache_home, "accelerate")

default_json_config_file = os.path.join(cache_dir, "default_config.yaml")

default_yaml_config_file = os.path.join(cache_dir, "default_config.yaml")

# For backward compatibility: the default config is the json one if it's the only existing file.

if os.path.isfile(default_yaml_config_file) or not os.path.isfile(default_json_config_file):

default_config_file = default_yaml_config_file

else:

default_config_file = default_json_config_file

def load_config_from_file(config_file):

if config_file is not None:

if not os.path.isfile(config_file):

raise FileNotFoundError(

f"The passed configuration file `{config_file}` does not exist. "

"Please pass an existing file to `accelerate launch`, or use the default one "

"created through `accelerate config` and run `accelerate launch` "

"without the `--config_file` argument."

)

else:

config_file = default_config_file

with open(config_file, encoding="utf-8") as f:

if config_file.endswith(".json"):

if (

json.load(f).get("compute_environment", ComputeEnvironment.LOCAL_MACHINE)

== ComputeEnvironment.LOCAL_MACHINE

):

config_class = ClusterConfig

else:

config_class = SageMakerConfig

return config_class.from_json_file(json_file=config_file)

else:

if (

yaml.safe_load(f).get("compute_environment", ComputeEnvironment.LOCAL_MACHINE)

== ComputeEnvironment.LOCAL_MACHINE

):

config_class = ClusterConfig

else:

config_class = SageMakerConfig

return config_class.from_yaml_file(yaml_file=config_file)

@dataclass

class BaseConfig:

compute_environment: ComputeEnvironment

distributed_type: Union[DistributedType, SageMakerDistributedType]

mixed_precision: str

use_cpu: bool

debug: bool

def to_dict(self):

result = self.__dict__

# For serialization, it's best to convert Enums to strings (or their underlying value type).

def _convert_enums(value):

if isinstance(value, Enum):

return value.value

if isinstance(value, dict):

if not bool(value):

return None

for key1, value1 in value.items():

value[key1] = _convert_enums(value1)

return value

for key, value in result.items():

result[key] = _convert_enums(value)

result = {k: v for k, v in result.items() if v is not None}

return result

@staticmethod

def process_config(config_dict):

"""

Processes `config_dict` and sets default values for any missing keys

"""

if "compute_environment" not in config_dict:

config_dict["compute_environment"] = ComputeEnvironment.LOCAL_MACHINE

if "distributed_type" not in config_dict:

raise ValueError("A `distributed_type` must be specified in the config file.")

if "num_processes" not in config_dict and config_dict["distributed_type"] == DistributedType.NO:

config_dict["num_processes"] = 1

if "mixed_precision" not in config_dict:

config_dict["mixed_precision"] = "fp16" if ("fp16" in config_dict and config_dict["fp16"]) else None

if "fp16" in config_dict: # Convert the config to the new format.

del config_dict["fp16"]

if "dynamo_backend" in config_dict: # Convert the config to the new format.

dynamo_backend = config_dict.pop("dynamo_backend")

config_dict["dynamo_config"] = {} if dynamo_backend == "NO" else {"dynamo_backend": dynamo_backend}

if "use_cpu" not in config_dict:

config_dict["use_cpu"] = False

if "debug" not in config_dict:

config_dict["debug"] = False

if "enable_cpu_affinity" not in config_dict:

config_dict["enable_cpu_affinity"] = False

return config_dict

@classmethod

def from_json_file(cls, json_file=None):

json_file = default_json_config_file if json_file is None else json_file

with open(json_file, encoding="utf-8") as f:

config_dict = json.load(f)

config_dict = cls.process_config(config_dict)

extra_keys = sorted(set(config_dict.keys()) - set(cls.__dataclass_fields__.keys()))

if len(extra_keys) > 0:

raise ValueError(

f"The config file at {json_file} had unknown keys ({extra_keys}), please try upgrading your `accelerate`"

" version or fix (and potentially remove) these keys from your config file."

)

return cls(**config_dict)

def to_json_file(self, json_file):

with open(json_file, "w", encoding="utf-8") as f:

content = json.dumps(self.to_dict(), indent=2, sort_keys=True) + "\n"

f.write(content)

@classmethod

def from_yaml_file(cls, yaml_file=None):

yaml_file = default_yaml_config_file if yaml_file is None else yaml_file

with open(yaml_file, encoding="utf-8") as f:

config_dict = yaml.safe_load(f)

config_dict = cls.process_config(config_dict)

extra_keys = sorted(set(config_dict.keys()) - set(cls.__dataclass_fields__.keys()))

if len(extra_keys) > 0:

raise ValueError(

f"The config file at {yaml_file} had unknown keys ({extra_keys}), please try upgrading your `accelerate`"

" version or fix (and potentially remove) these keys from your config file."

)

return cls(**config_dict)

def to_yaml_file(self, yaml_file):

with open(yaml_file, "w", encoding="utf-8") as f:

yaml.safe_dump(self.to_dict(), f)

def __post_init__(self):

if isinstance(self.compute_environment, str):

self.compute_environment = ComputeEnvironment(self.compute_environment)

if isinstance(self.distributed_type, str):

if self.compute_environment == ComputeEnvironment.AMAZON_SAGEMAKER:

self.distributed_type = SageMakerDistributedType(self.distributed_type)

else:

self.distributed_type = DistributedType(self.distributed_type)

if getattr(self, "dynamo_config", None) is None:

self.dynamo_config = {}

@dataclass

class ClusterConfig(BaseConfig):

num_processes: int = -1 # For instance if we use SLURM and the user manually passes it in

machine_rank: int = 0

num_machines: int = 1

gpu_ids: Optional[str] = None

main_process_ip: Optional[str] = None

main_process_port: Optional[int] = None

rdzv_backend: Optional[str] = "static"

same_network: Optional[bool] = False

main_training_function: str = "main"

enable_cpu_affinity: bool = False

# args for FP8 training

fp8_config: dict = None

# args for deepspeed_plugin

deepspeed_config: dict = None

# args for fsdp

fsdp_config: dict = None

# args for megatron_lm

megatron_lm_config: dict = None

# args for ipex

ipex_config: dict = None

# args for mpirun

mpirun_config: dict = None

# args for TPU

downcast_bf16: bool = False

# args for TPU pods

tpu_name: str = None

tpu_zone: str = None

tpu_use_cluster: bool = False

tpu_use_sudo: bool = False

command_file: str = None

commands: List[str] = None

tpu_vm: List[str] = None

tpu_env: List[str] = None

# args for dynamo

dynamo_config: dict = None

def __post_init__(self):

if self.deepspeed_config is None:

self.deepspeed_config = {}

if self.fsdp_config is None:

self.fsdp_config = {}

if self.megatron_lm_config is None:

self.megatron_lm_config = {}

if self.ipex_config is None:

self.ipex_config = {}

if self.mpirun_config is None:

self.mpirun_config = {}

if self.fp8_config is None:

self.fp8_config = {}

return super().__post_init__()

@dataclass

class SageMakerConfig(BaseConfig):

ec2_instance_type: str

iam_role_name: str

image_uri: Optional[str] = None

profile: Optional[str] = None

region: str = "us-east-1"

num_machines: int = 1

gpu_ids: str = "all"

base_job_name: str = f"accelerate-sagemaker-{num_machines}"

pytorch_version: str = SAGEMAKER_PYTORCH_VERSION

transformers_version: str = SAGEMAKER_TRANSFORMERS_VERSION

py_version: str = SAGEMAKER_PYTHON_VERSION

sagemaker_inputs_file: str = None

sagemaker_metrics_file: str = None

additional_args: dict = None

dynamo_config: dict = None

enable_cpu_affinity: bool = False

| 5 |

0 | hf_public_repos/accelerate/src/accelerate/commands | hf_public_repos/accelerate/src/accelerate/commands/menu/keymap.py | # Copyright 2022 The HuggingFace Team and Brian Chao. All rights reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

"""

Utilities relating to parsing raw characters from the keyboard, based on https://github.com/bchao1/bullet

"""

import os

import string

import sys

ARROW_KEY_FLAG = 1 << 8

KEYMAP = {

"tab": ord("\t"),

"newline": ord("\r"),

"esc": 27,

"up": 65 + ARROW_KEY_FLAG,

"down": 66 + ARROW_KEY_FLAG,

"right": 67 + ARROW_KEY_FLAG,

"left": 68 + ARROW_KEY_FLAG,

"mod_int": 91,

"undefined": sys.maxsize,

"interrupt": 3,

"insert": 50,

"delete": 51,

"pg_up": 53,

"pg_down": 54,

}

KEYMAP["arrow_begin"] = KEYMAP["up"]

KEYMAP["arrow_end"] = KEYMAP["left"]

if sys.platform == "win32":

WIN_CH_BUFFER = []

WIN_KEYMAP = {

b"\xe0H": KEYMAP["up"] - ARROW_KEY_FLAG,

b"\x00H": KEYMAP["up"] - ARROW_KEY_FLAG,

b"\xe0P": KEYMAP["down"] - ARROW_KEY_FLAG,

b"\x00P": KEYMAP["down"] - ARROW_KEY_FLAG,

b"\xe0M": KEYMAP["right"] - ARROW_KEY_FLAG,

b"\x00M": KEYMAP["right"] - ARROW_KEY_FLAG,

b"\xe0K": KEYMAP["left"] - ARROW_KEY_FLAG,

b"\x00K": KEYMAP["left"] - ARROW_KEY_FLAG,

}

for i in range(10):

KEYMAP[str(i)] = ord(str(i))

def get_raw_chars():

"Gets raw characters from inputs"

if os.name == "nt":

import msvcrt

encoding = "mbcs"

# Flush the keyboard buffer

while msvcrt.kbhit():

msvcrt.getch()

if len(WIN_CH_BUFFER) == 0:

# Read the keystroke

ch = msvcrt.getch()

# If it is a prefix char, get second part

if ch in (b"\x00", b"\xe0"):

ch2 = ch + msvcrt.getch()

# Translate actual Win chars to bullet char types

try:

chx = chr(WIN_KEYMAP[ch2])

WIN_CH_BUFFER.append(chr(KEYMAP["mod_int"]))

WIN_CH_BUFFER.append(chx)

if ord(chx) in (

KEYMAP["insert"] - 1 << 9,

KEYMAP["delete"] - 1 << 9,

KEYMAP["pg_up"] - 1 << 9,

KEYMAP["pg_down"] - 1 << 9,

):

WIN_CH_BUFFER.append(chr(126))

ch = chr(KEYMAP["esc"])

except KeyError:

ch = ch2[1]

else:

ch = ch.decode(encoding)

else:

ch = WIN_CH_BUFFER.pop(0)

elif os.name == "posix":

import termios

import tty

fd = sys.stdin.fileno()

old_settings = termios.tcgetattr(fd)

try:

tty.setraw(fd)

ch = sys.stdin.read(1)

finally:

termios.tcsetattr(fd, termios.TCSADRAIN, old_settings)

return ch

def get_character():

"Gets a character from the keyboard and returns the key code"

char = get_raw_chars()

if ord(char) in [KEYMAP["interrupt"], KEYMAP["newline"]]:

return char

elif ord(char) == KEYMAP["esc"]:

combo = get_raw_chars()

if ord(combo) == KEYMAP["mod_int"]:

key = get_raw_chars()

if ord(key) >= KEYMAP["arrow_begin"] - ARROW_KEY_FLAG and ord(key) <= KEYMAP["arrow_end"] - ARROW_KEY_FLAG:

return chr(ord(key) + ARROW_KEY_FLAG)

else:

return KEYMAP["undefined"]

else:

return get_raw_chars()

else:

if char in string.printable:

return char

else:

return KEYMAP["undefined"]

| 6 |

0 | hf_public_repos/accelerate/src/accelerate/commands | hf_public_repos/accelerate/src/accelerate/commands/menu/input.py | # Copyright 2022 The HuggingFace Team and Brian Chao. All rights reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

"""

This file contains utilities for handling input from the user and registering specific keys to specific functions,

based on https://github.com/bchao1/bullet

"""

from typing import List

from .keymap import KEYMAP, get_character

def mark(key: str):

"""

Mark the function with the key code so it can be handled in the register

"""

def decorator(func):

handle = getattr(func, "handle_key", [])

handle += [key]

func.handle_key = handle

return func

return decorator

def mark_multiple(*keys: List[str]):

"""

Mark the function with the key codes so it can be handled in the register

"""

def decorator(func):

handle = getattr(func, "handle_key", [])

handle += keys

func.handle_key = handle

return func

return decorator

class KeyHandler(type):

"""

Metaclass that adds the key handlers to the class

"""

def __new__(cls, name, bases, attrs):

new_cls = super().__new__(cls, name, bases, attrs)

if not hasattr(new_cls, "key_handler"):

new_cls.key_handler = {}

new_cls.handle_input = KeyHandler.handle_input

for value in attrs.values():

handled_keys = getattr(value, "handle_key", [])

for key in handled_keys:

new_cls.key_handler[key] = value

return new_cls

@staticmethod

def handle_input(cls):

"Finds and returns the selected character if it exists in the handler"

char = get_character()

if char != KEYMAP["undefined"]:

char = ord(char)

handler = cls.key_handler.get(char)

if handler:

cls.current_selection = char

return handler(cls)

else:

return None

def register(cls):

"""Adds KeyHandler metaclass to the class"""

return KeyHandler(cls.__name__, cls.__bases__, cls.__dict__.copy())

| 7 |

0 | hf_public_repos/accelerate/src/accelerate/commands | hf_public_repos/accelerate/src/accelerate/commands/menu/cursor.py | # Copyright 2022 The HuggingFace Team and Brian Chao. All rights reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

"""

A utility for showing and hiding the terminal cursor on Windows and Linux, based on https://github.com/bchao1/bullet

"""

import os

import sys

from contextlib import contextmanager

# Windows only

if os.name == "nt":

import ctypes

import msvcrt # noqa

class CursorInfo(ctypes.Structure):

# _fields is a specific attr expected by ctypes

_fields_ = [("size", ctypes.c_int), ("visible", ctypes.c_byte)]

def hide_cursor():

if os.name == "nt":

ci = CursorInfo()

handle = ctypes.windll.kernel32.GetStdHandle(-11)

ctypes.windll.kernel32.GetConsoleCursorInfo(handle, ctypes.byref(ci))

ci.visible = False

ctypes.windll.kernel32.SetConsoleCursorInfo(handle, ctypes.byref(ci))

elif os.name == "posix":

sys.stdout.write("\033[?25l")

sys.stdout.flush()

def show_cursor():

if os.name == "nt":

ci = CursorInfo()

handle = ctypes.windll.kernel32.GetStdHandle(-11)

ctypes.windll.kernel32.GetConsoleCursorInfo(handle, ctypes.byref(ci))

ci.visible = True

ctypes.windll.kernel32.SetConsoleCursorInfo(handle, ctypes.byref(ci))

elif os.name == "posix":

sys.stdout.write("\033[?25h")

sys.stdout.flush()

@contextmanager

def hide():

"Context manager to hide the terminal cursor"

try:

hide_cursor()

yield

finally:

show_cursor()

| 8 |

0 | hf_public_repos/accelerate/src/accelerate/commands | hf_public_repos/accelerate/src/accelerate/commands/menu/helpers.py | # Copyright 2022 The HuggingFace Team and Brian Chao. All rights reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

"""

A variety of helper functions and constants when dealing with terminal menu choices, based on

https://github.com/bchao1/bullet

"""

import enum

import shutil

import sys

TERMINAL_WIDTH, _ = shutil.get_terminal_size()

CURSOR_TO_CHAR = {"UP": "A", "DOWN": "B", "RIGHT": "C", "LEFT": "D"}

class Direction(enum.Enum):

UP = 0

DOWN = 1

def forceWrite(content, end=""):

sys.stdout.write(str(content) + end)

sys.stdout.flush()

def writeColor(content, color, end=""):

forceWrite(f"\u001b[{color}m{content}\u001b[0m", end)

def reset_cursor():

forceWrite("\r")

def move_cursor(num_lines: int, direction: str):

forceWrite(f"\033[{num_lines}{CURSOR_TO_CHAR[direction.upper()]}")

def clear_line():

forceWrite(" " * TERMINAL_WIDTH)

reset_cursor()

def linebreak():

reset_cursor()

forceWrite("-" * TERMINAL_WIDTH)

| 9 |

0 | hf_public_repos | hf_public_repos/blog/ort-accelerating-hf-models.md | ---

title: "Accelerating over 130,000 Hugging Face models with ONNX Runtime"

thumbnail: /blog/assets/ort_accelerating_hf_models/thumbnail.png

authors:

- user: sschoenmeyer

guest: true

- user: mfuntowicz

---

# Accelerating over 130,000 Hugging Face models with ONNX Runtime

## What is ONNX Runtime?

ONNX Runtime is a cross-platform machine learning tool that can be used to accelerate a wide variety of models, particularly those with ONNX support.

## Hugging Face ONNX Runtime Support

There are over 130,000 ONNX-supported models on Hugging Face, an open source community that allows users to build, train, and deploy hundreds of thousands of publicly available machine learning models.

These ONNX-supported models, which include many increasingly popular large language models (LLMs) and cloud models, can leverage ONNX Runtime to improve performance, along with other benefits.

For example, using ONNX Runtime to accelerate the whisper-tiny model can improve average latency per inference, with an up to 74.30% gain over PyTorch.

ONNX Runtime works closely with Hugging Face to ensure that the most popular models on the site are supported.

In total, over 90 Hugging Face model architectures are supported by ONNX Runtime, including the 11 most popular architectures (where popularity is determined by the corresponding number of models uploaded to the Hugging Face Hub):

| Model Architecture | Approximate No. of Models |

|:------------------:|:-------------------------:|

| BERT | 28180 |

| GPT2 | 14060 |

| DistilBERT | 11540 |

| RoBERTa | 10800 |

| T5 | 10450 |

| Wav2Vec2 | 6560 |

| Stable-Diffusion | 5880 |

| XLM-RoBERTa | 5100 |

| Whisper | 4400 |

| BART | 3590 |

| Marian | 2840 |

## Learn More

To learn more about accelerating Hugging Face models with ONNX Runtime, check out our recent post on the [Microsoft Open Source Blog](https://cloudblogs.microsoft.com/opensource/2023/10/04/accelerating-over-130000-hugging-face-models-with-onnx-runtime/). | 0 |

0 | hf_public_repos | hf_public_repos/blog/habana-gaudi-2-bloom.md | ---

title: "Fast Inference on Large Language Models: BLOOMZ on Habana Gaudi2 Accelerator"

thumbnail: /blog/assets/habana-gaudi-2-bloom/thumbnail.png

authors:

- user: regisss

---

# Fast Inference on Large Language Models: BLOOMZ on Habana Gaudi2 Accelerator

This article will show you how to easily deploy large language models with hundreds of billions of parameters like BLOOM on [Habana® Gaudi®2](https://habana.ai/training/gaudi2/) using 🤗 [Optimum Habana](https://huggingface.co/docs/optimum/habana/index), which is the bridge between Gaudi2 and the 🤗 Transformers library. As demonstrated in the benchmark presented in this post, this will enable you to **run inference faster than with any GPU currently available on the market**.

As models get bigger and bigger, deploying them into production to run inference has become increasingly challenging. Both hardware and software have seen a lot of innovations to address these challenges, so let's dive in to see how to efficiently overcome them!

## BLOOMZ

[BLOOM](https://arxiv.org/abs/2211.05100) is a 176-billion-parameter autoregressive model that was trained to complete sequences of text. It can handle 46 different languages and 13 programming languages. Designed and trained as part of the [BigScience](https://bigscience.huggingface.co/) initiative, BLOOM is an open-science project that involved a large number of researchers and engineers all over the world. More recently, another model with the exact same architecture was released: [BLOOMZ](https://arxiv.org/abs/2211.01786), which is a fine-tuned version of BLOOM on several tasks leading to better generalization and zero-shot[^1] capabilities.

Such large models raise new challenges in terms of memory and speed for both [training](https://huggingface.co/blog/bloom-megatron-deepspeed) and [inference](https://huggingface.co/blog/bloom-inference-optimization). Even in 16-bit precision, one instance requires 352 GB to fit! You will probably struggle to find any device with so much memory at the moment, but state-of-the-art hardware like Habana Gaudi2 does make it possible to perform inference on BLOOM and BLOOMZ models with low latencies.

## Habana Gaudi2

[Gaudi2](https://habana.ai/training/gaudi2/) is the second-generation AI hardware accelerator designed by Habana Labs. A single server contains 8 accelerator devices (called Habana Processing Units, or HPUs) with 96GB of memory each, which provides room to make very large models fit in. However, hosting the model is not very interesting if the computation is slow. Fortunately, Gaudi2 shines on that aspect: it differs from GPUs in that its architecture enables the accelerator to perform General Matrix Multiplication (GeMM) and other operations in parallel, which speeds up deep learning workflows. These features make Gaudi2 a great candidate for LLM training and inference.

Habana's SDK, SynapseAI™, supports PyTorch and DeepSpeed for accelerating LLM training and inference. The [SynapseAI graph compiler](https://docs.habana.ai/en/latest/Gaudi_Overview/SynapseAI_Software_Suite.html#graph-compiler-and-runtime) will optimize the execution of the operations accumulated in the graph (e.g. operator fusion, data layout management, parallelization, pipelining and memory management, and graph-level optimizations).

Moreover, support for [HPU graphs](https://docs.habana.ai/en/latest/PyTorch/Inference_on_PyTorch/Inference_Using_HPU_Graphs.html) and [DeepSpeed-inference](https://docs.habana.ai/en/latest/PyTorch/DeepSpeed/Inference_Using_DeepSpeed.html) have just recently been introduced in SynapseAI, and these are well-suited for latency-sensitive applications as shown in our benchmark below.

All these features are integrated into the 🤗 [Optimum Habana](https://github.com/huggingface/optimum-habana) library so that deploying your model on Gaudi is very simple. Check out the quick-start page [here](https://huggingface.co/docs/optimum/habana/quickstart).

If you would like to get access to Gaudi2, go to the [Intel Developer Cloud](https://www.intel.com/content/www/us/en/secure/developer/devcloud/cloud-launchpad.html) and follow [this guide](https://huggingface.co/blog/habana-gaudi-2-benchmark#how-to-get-access-to-gaudi2).

## Benchmarks

In this section, we are going to provide an early benchmark of BLOOMZ on Gaudi2, first-generation Gaudi and Nvidia A100 80GB. Although these devices have quite a lot of memory, the model is so large that a single device is not enough to contain a single instance of BLOOMZ. To solve this issue, we are going to use [DeepSpeed](https://www.deepspeed.ai/), which is a deep learning optimization library that enables many memory and speed improvements to accelerate the model and make it fit the device. In particular, we rely here on [DeepSpeed-inference](https://arxiv.org/abs/2207.00032): it introduces several features such as [model (or pipeline) parallelism](https://huggingface.co/blog/bloom-megatron-deepspeed#pipeline-parallelism) to make the most of the available devices. For Gaudi2, we use [Habana's DeepSpeed fork](https://github.com/HabanaAI/deepspeed) that adds support for HPUs.

### Latency

We measured latencies (batch of one sample) for two different sizes of BLOOMZ, both with multi-billion parameters:

- [176 billion](https://huggingface.co/bigscience/bloomz) parameters

- [7 billion](https://huggingface.co/bigscience/bloomz-7b1) parameters

Runs were performed with DeepSpeed-inference in 16-bit precision with 8 devices and using a [key-value cache](https://huggingface.co/docs/transformers/v4.27.1/en/model_doc/bloom#transformers.BloomForCausalLM.forward.use_cache). Note that while [CUDA graphs](https://developer.nvidia.com/blog/cuda-graphs/) are not currently compatible with model parallelism in DeepSpeed (DeepSpeed v0.8.2, see [here](https://github.com/microsoft/DeepSpeed/blob/v0.8.2/deepspeed/inference/engine.py#L158)), HPU graphs are supported in Habana's DeepSpeed fork. All benchmarks are doing [greedy generation](https://huggingface.co/blog/how-to-generate#greedy-search) of 100 token outputs. The input prompt is:

> "DeepSpeed is a machine learning framework"

which consists of 7 tokens with BLOOM's tokenizer.

The results for inference latency are displayed in the table below (the unit is *seconds*).

| Model | Number of devices | Gaudi2 latency (seconds) | A100-80GB latency (seconds) | First-gen Gaudi latency (seconds) |

|:-----------:|:-----------------:|:-------------------------:|:-----------------:|:----------------------------------:|

| BLOOMZ | 8 | 3.103 | 4.402 | / |

| BLOOMZ-7B | 8 | 0.734 | 2.417 | 3.321 |

| BLOOMZ-7B | 1 | 0.772 | 2.119 | 2.387 |

*Update: the numbers above were updated with the releases of Optimum Habana 1.6 and SynapseAI 1.10, leading to a* x*1.42 speedup on BLOOMZ with Gaudi2 compared to A100.*

The Habana team recently introduced support for DeepSpeed-inference in SynapseAI 1.8, and thereby quickly enabled inference for 100+ billion parameter models. **For the 176-billion-parameter checkpoint, Gaudi2 is 1.42x faster than A100 80GB**. Smaller checkpoints present interesting results too. **Gaudi2 is 2.89x faster than A100 for BLOOMZ-7B!** It is also interesting to note that it manages to benefit from model parallelism whereas A100 is faster on a single device.

We also ran these models on first-gen Gaudi. While it is slower than Gaudi2, it is interesting from a price perspective as a DL1 instance on AWS costs approximately 13\$ per hour. Latency for BLOOMZ-7B on first-gen Gaudi is 2.387 seconds. Thus, **first-gen Gaudi offers for the 7-billion checkpoint a better price-performance ratio than A100** which costs more than 30\$ per hour!

We expect the Habana team will optimize the performance of these models in the upcoming SynapseAI releases. For example, in our last benchmark, we saw that [Gaudi2 performs Stable Diffusion inference 2.2x faster than A100](https://huggingface.co/blog/habana-gaudi-2-benchmark#generating-images-from-text-with-stable-diffusion) and this has since been improved further to 2.37x with the latest optimizations provided by Habana. We will update these numbers as new versions of SynapseAI are released and integrated within Optimum Habana.

### Running inference on a complete dataset

The script we wrote enables using your model to complete sentences over a whole dataset. This is useful to try BLOOMZ inference on Gaudi2 on your own data.

Here is an example with the [*tldr_news*](https://huggingface.co/datasets/JulesBelveze/tldr_news/viewer/all/test) dataset. It contains both the headline and content of several articles (you can visualize it on the Hugging Face Hub). We kept only the *content* column and truncated each sample to the first 16 tokens so that the model generates the rest of the sequence with 50 new tokens. The first five samples look like:

```

Batch n°1

Input: ['Facebook has released a report that shows what content was most widely viewed by Americans between']

Output: ['Facebook has released a report that shows what content was most widely viewed by Americans between January and June of this year. The report, which is based on data from the company’s mobile advertising platform, shows that the most popular content on Facebook was news, followed by sports, entertainment, and politics. The report also shows that the most']

--------------------------------------------------------------------------------------------------

Batch n°2

Input: ['A quantum effect called superabsorption allows a collection of molecules to absorb light more']

Output: ['A quantum effect called superabsorption allows a collection of molecules to absorb light more strongly than the sum of the individual absorptions of the molecules. This effect is due to the coherent interaction of the molecules with the electromagnetic field. The superabsorption effect has been observed in a number of systems, including liquid crystals, liquid crystals in']

--------------------------------------------------------------------------------------------------

Batch n°3

Input: ['A SpaceX Starship rocket prototype has exploded during a pressure test. It was']

Output: ['A SpaceX Starship rocket prototype has exploded during a pressure test. It was the first time a Starship prototype had been tested in the air. The explosion occurred at the SpaceX facility in Boca Chica, Texas. The Starship prototype was being tested for its ability to withstand the pressure of flight. The explosion occurred at']

--------------------------------------------------------------------------------------------------

Batch n°4

Input: ['Scalene is a high-performance CPU and memory profiler for Python.']

Output: ['Scalene is a high-performance CPU and memory profiler for Python. It is designed to be a lightweight, portable, and easy-to-use profiler. Scalene is a Python package that can be installed on any platform that supports Python. Scalene is a lightweight, portable, and easy-to-use profiler']

--------------------------------------------------------------------------------------------------

Batch n°5

Input: ['With the rise of cheap small "Cube Satellites", startups are now']

Output: ['With the rise of cheap small "Cube Satellites", startups are now able to launch their own satellites for a fraction of the cost of a traditional launch. This has led to a proliferation of small satellites, which are now being used for a wide range of applications. The most common use of small satellites is for communications,']

```

In the next section, we explain how to use the script we wrote to perform this benchmark or to apply it on any dataset you like from the Hugging Face Hub!

### How to reproduce these results?

The script used for benchmarking BLOOMZ on Gaudi2 and first-gen Gaudi is available [here](https://github.com/huggingface/optimum-habana/tree/main/examples/text-generation). Before running it, please make sure that the latest versions of SynapseAI and the Gaudi drivers are installed following [the instructions given by Habana](https://docs.habana.ai/en/latest/Installation_Guide/index.html).

Then, run the following:

```bash

git clone https://github.com/huggingface/optimum-habana.git

cd optimum-habana && pip install . && cd examples/text-generation

pip install git+https://github.com/HabanaAI/[email protected]

```

Finally, you can launch the script as follows:

```bash

python ../gaudi_spawn.py --use_deepspeed --world_size 8 run_generation.py --model_name_or_path bigscience/bloomz --use_hpu_graphs --use_kv_cache --max_new_tokens 100

```

For multi-node inference, you can follow [this guide](https://huggingface.co/docs/optimum/habana/usage_guides/multi_node_training) from the documentation of Optimum Habana.

You can also load any dataset from the Hugging Face Hub to get prompts that will be used for generation using the argument `--dataset_name my_dataset_name`.

This benchmark was performed with Transformers v4.28.1, SynapseAI v1.9.0 and Optimum Habana v1.5.0.

For GPUs, [here](https://github.com/huggingface/transformers-bloom-inference/blob/main/bloom-inference-scripts/bloom-ds-inference.py) is the script that led to the results that were previously presented in [this blog post](https://huggingface.co/blog/bloom-inference-pytorch-scripts) (and [here](https://github.com/huggingface/transformers-bloom-inference/tree/main/bloom-inference-scripts#deepspeed-inference) are the instructions to use it). To use CUDA graphs, static shapes are necessary and this is not supported in 🤗 Transformers. You can use [this repo](https://github.com/HabanaAI/Model-References/tree/1.8.0/PyTorch/nlp/bloom) written by the Habana team to enable them.

## Conclusion

We see in this article that **Habana Gaudi2 performs BLOOMZ inference faster than Nvidia A100 80GB**. And there is no need to write a complicated script as 🤗 [Optimum Habana](https://huggingface.co/docs/optimum/habana/index) provides easy-to-use tools to run inference with multi-billion-parameter models on HPUs. Future releases of Habana's SynapseAI SDK are expected to speed up performance, so we will update this benchmark regularly as LLM inference optimizations on SynapseAI continue to advance. We are also looking forward to the performance benefits that will come with FP8 inference on Gaudi2.

We also presented the results achieved with first-generation Gaudi. For smaller models, it can perform on par with or even better than A100 for almost a third of its price. It is a good alternative option to using GPUs for running inference with such a big model like BLOOMZ.

If you are interested in accelerating your Machine Learning training and inference workflows using the latest AI hardware accelerators and software libraries, check out our [Expert Acceleration Program](https://huggingface.co/support). To learn more about Habana solutions, [read about our partnership and contact them here](https://huggingface.co/hardware/habana). To learn more about Hugging Face efforts to make AI hardware accelerators easy to use, check out our [Hardware Partner Program](https://huggingface.co/hardware).

### Related Topics

- [Faster Training and Inference: Habana Gaudi-2 vs Nvidia A100 80GB](https://huggingface.co/blog/habana-gaudi-2-benchmark)

- [Leverage DeepSpeed to Train Faster and Cheaper Large Scale Transformer Models with Hugging Face and Habana Labs Gaudi](https://developer.habana.ai/events/leverage-deepspeed-to-train-faster-and-cheaper-large-scale-transformer-models-with-hugging-face-and-habana-labs-gaudi/)

---

Thanks for reading! If you have any questions, feel free to contact me, either through [Github](https://github.com/huggingface/optimum-habana) or on the [forum](https://discuss.huggingface.co/c/optimum/59). You can also connect with me on [LinkedIn](https://www.linkedin.com/in/regispierrard/).

[^1]: “Zero-shot” refers to the ability of a model to complete a task on new or unseen input data, i.e. without having been provided any training examples of this kind of data. We provide the model with a prompt and a sequence of text that describes what we want our model to do, in natural language. Zero-shot classification excludes any examples of the desired task being completed. This differs from single or few-shot classification, as these tasks include a single or a few examples of the selected task.

| 1 |

0 | hf_public_repos | hf_public_repos/blog/diffusers-2nd-month.md | ---

title: What's new in Diffusers? 🎨

thumbnail: /blog/assets/102_diffusers_2nd_month/inpainting.png

authors:

- user: osanseviero

---

# What's new in Diffusers? 🎨

A month and a half ago we released `diffusers`, a library that provides a modular toolbox for diffusion models across modalities. A couple of weeks later, we released support for Stable Diffusion, a high quality text-to-image model, with a free demo for anyone to try out. Apart from burning lots of GPUs, in the last three weeks the team has decided to add one or two new features to the library that we hope the community enjoys! This blog post gives a high-level overview of the new features in `diffusers` version 0.3! Remember to give a ⭐ to the [GitHub repository](https://github.com/huggingface/diffusers).

- [Image to Image pipelines](#image-to-image-pipeline)

- [Textual Inversion](#textual-inversion)

- [Inpainting](#experimental-inpainting-pipeline)

- [Optimizations for Smaller GPUs](#optimizations-for-smaller-gpus)

- [Run on Mac](#diffusers-in-mac-os)

- [ONNX Exporter](#experimental-onnx-exporter-and-pipeline)

- [New docs](#new-docs)

- [Community](#community)

- [Generate videos with SD latent space](#stable-diffusion-videos)

- [Model Explainability](#diffusers-interpret)

- [Japanese Stable Diffusion](#japanese-stable-diffusion)

- [High quality fine-tuned model](#waifu-diffusion)

- [Cross Attention Control with Stable Diffusion](#cross-attention-control)

- [Reusable seeds](#reusable-seeds)

## Image to Image pipeline

One of the most requested features was to have image to image generation. This pipeline allows you to input an image and a prompt, and it will generate an image based on that!

Let's see some code based on the official Colab [notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/image_2_image_using_diffusers.ipynb).

```python

from diffusers import StableDiffusionImg2ImgPipeline

pipe = StableDiffusionImg2ImgPipeline.from_pretrained(

"CompVis/stable-diffusion-v1-4",

revision="fp16",

torch_dtype=torch.float16,

use_auth_token=True

)

# Download an initial image

# ...

init_image = preprocess(init_img)

prompt = "A fantasy landscape, trending on artstation"

images = pipe(prompt=prompt, init_image=init_image, strength=0.75, guidance_scale=7.5, generator=generator)["sample"]

```

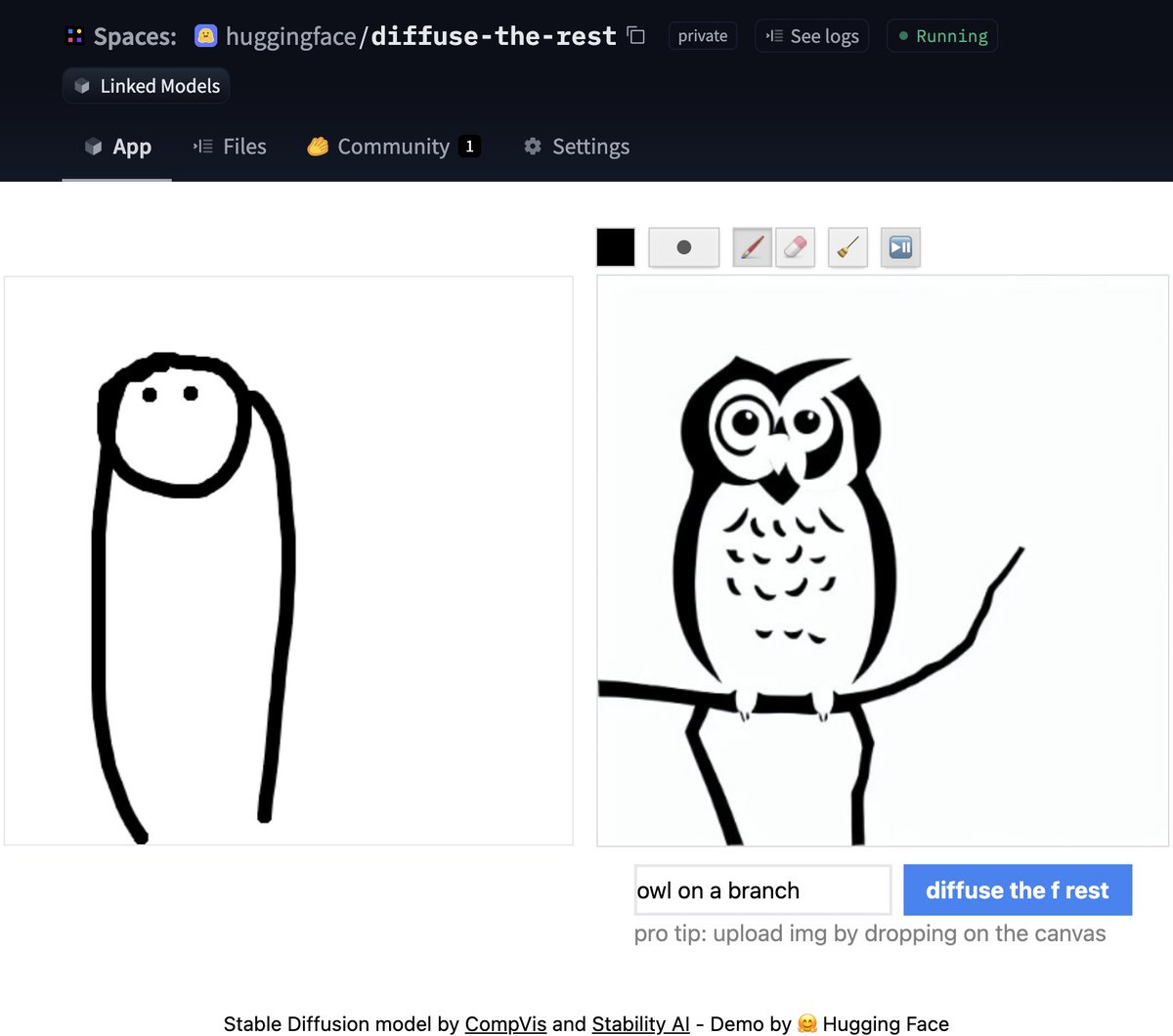

Don't have time for code? No worries, we also created a [Space demo](https://huggingface.co/spaces/huggingface/diffuse-the-rest) where you can try it out directly

## Textual Inversion

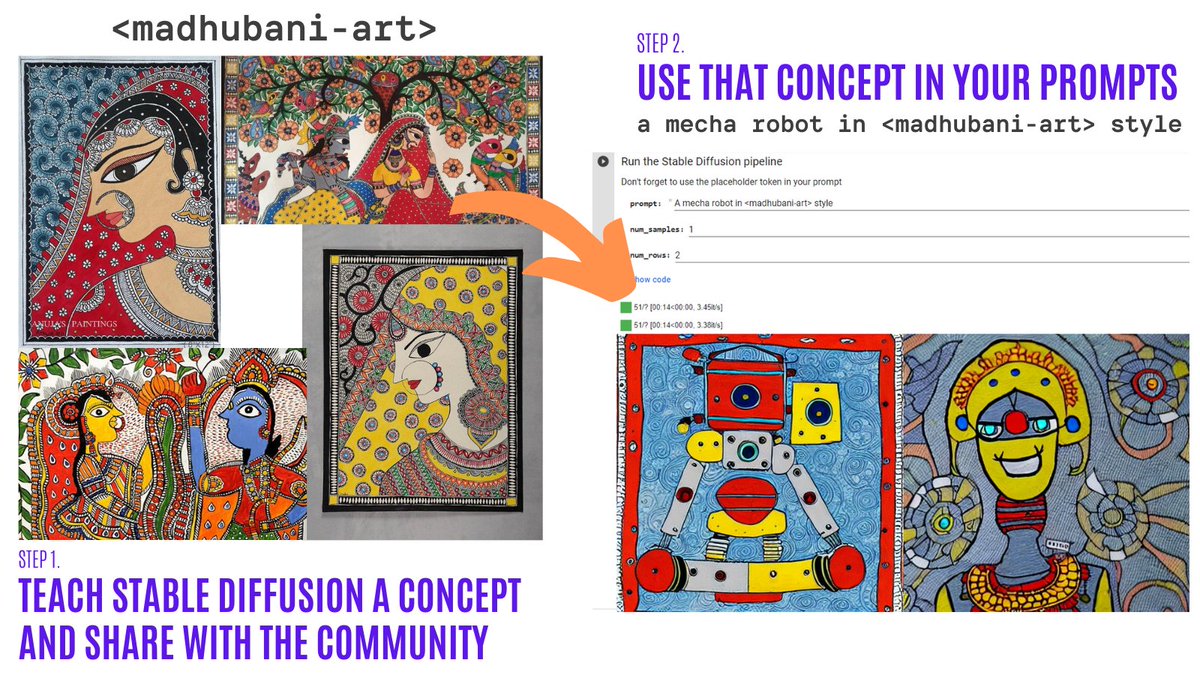

Textual Inversion lets you personalize a Stable Diffusion model on your own images with just 3-5 samples. With this tool, you can train a model on a concept, and then share the concept with the rest of the community!

In just a couple of days, the community shared over 200 concepts! Check them out!

* [Organization](https://huggingface.co/sd-concepts-library) with the concepts.

* [Navigator Colab](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_diffusion_textual_inversion_library_navigator.ipynb): Browse visually and use over 150 concepts created by the community.

* [Training Colab](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb): Teach Stable Diffusion a new concept and share it with the rest of the community.

* [Inference Colab](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb): Run Stable Diffusion with the learned concepts.

## Experimental inpainting pipeline

Inpainting allows to provide an image, then select an area in the image (or provide a mask), and use Stable Diffusion to replace the mask. Here is an example:

<figure class="image table text-center m-0 w-full">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/diffusers-2nd-month/inpainting.png" alt="Example inpaint of owl being generated from an initial image and a prompt"/>

</figure>

You can try out a minimal Colab [notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/in_painting_with_stable_diffusion_using_diffusers.ipynb) or check out the code below. A demo is coming soon!

```python

from diffusers import StableDiffusionInpaintPipeline

pipe = StableDiffusionInpaintPipeline.from_pretrained(

"CompVis/stable-diffusion-v1-4",

revision="fp16",

torch_dtype=torch.float16,

use_auth_token=True

).to(device)

images = pipe(

prompt=["a cat sitting on a bench"] * 3,

init_image=init_image,

mask_image=mask_image,

strength=0.75,

guidance_scale=7.5,

generator=None

).images

```

Please note this is experimental, so there is room for improvement.

## Optimizations for smaller GPUs

After some improvements, the diffusion models can take much less VRAM. 🔥 For example, Stable Diffusion only takes 3.2GB! This yields the exact same results at the expense of 10% of speed. Here is how to use these optimizations

```python

from diffusers import StableDiffusionPipeline

pipe = StableDiffusionPipeline.from_pretrained(

"CompVis/stable-diffusion-v1-4",

revision="fp16",

torch_dtype=torch.float16,

use_auth_token=True

)

pipe = pipe.to("cuda")

pipe.enable_attention_slicing()

```

This is super exciting as this will reduce even more the barrier to use these models!

## Diffusers in Mac OS

🍎 That's right! Another widely requested feature was just released! Read the full instructions in the [official docs](https://huggingface.co/docs/diffusers/optimization/mps) (including performance comparisons, specs, and more).

Using the PyTorch mps device, people with M1/M2 hardware can run inference with Stable Diffusion. 🤯 This requires minimal setup for users, try it out!

```python

from diffusers import StableDiffusionPipeline

pipe = StableDiffusionPipeline.from_pretrained("CompVis/stable-diffusion-v1-4", use_auth_token=True)

pipe = pipe.to("mps")

prompt = "a photo of an astronaut riding a horse on mars"

image = pipe(prompt).images[0]

```

## Experimental ONNX exporter and pipeline

The new experimental pipeline allows users to run Stable Diffusion on any hardware that supports ONNX. Here is an example of how to use it (note that the `onnx` revision is being used)

```python

from diffusers import StableDiffusionOnnxPipeline

pipe = StableDiffusionOnnxPipeline.from_pretrained(

"CompVis/stable-diffusion-v1-4",

revision="onnx",

provider="CPUExecutionProvider",

use_auth_token=True,

)

prompt = "a photo of an astronaut riding a horse on mars"

image = pipe(prompt).images[0]

```

Alternatively, you can also convert your SD checkpoints to ONNX directly with the exporter script.

```

python scripts/convert_stable_diffusion_checkpoint_to_onnx.py --model_path="CompVis/stable-diffusion-v1-4" --output_path="./stable_diffusion_onnx"

```

## New docs

All of the previous features are very cool. As maintainers of open-source libraries, we know about the importance of high quality documentation to make it as easy as possible for anyone to try out the library.

💅 Because of this, we did a Docs sprint and we're very excited to do a first release of our [documentation](https://huggingface.co/docs/diffusers/v0.3.0/en/index). This is a first version, so there are many things we plan to add (and contributions are always welcome!).

Some highlights of the docs:

* Techniques for [optimization](https://huggingface.co/docs/diffusers/optimization/fp16)

* The [training overview](https://huggingface.co/docs/diffusers/training/overview)

* A [contributing guide](https://huggingface.co/docs/diffusers/conceptual/contribution)

* In-depth API docs for [schedulers](https://huggingface.co/docs/diffusers/api/schedulers)

* In-depth API docs for [pipelines](https://huggingface.co/docs/diffusers/api/pipelines/overview)

## Community

And while we were doing all of the above, the community did not stay idle! Here are some highlights (although not exhaustive) of what has been done out there

### Stable Diffusion Videos

Create 🔥 videos with Stable Diffusion by exploring the latent space and morphing between text prompts. You can:

* Dream different versions of the same prompt

* Morph between different prompts

The [Stable Diffusion Videos](https://github.com/nateraw/stable-diffusion-videos) tool is pip-installable, comes with a Colab notebook and a Gradio notebook, and is super easy to use!

Here is an example

```python

from stable_diffusion_videos import walk

video_path = walk(['a cat', 'a dog'], [42, 1337], num_steps=3, make_video=True)

```

### Diffusers Interpret

[Diffusers interpret](https://github.com/JoaoLages/diffusers-interpret) is an explainability tool built on top of `diffusers`. It has cool features such as:

* See all the images in the diffusion process

* Analyze how each token in the prompt influences the generation

* Analyze within specified bounding boxes if you want to understand a part of the image

(Image from the tool repository)

```python

# pass pipeline to the explainer class

explainer = StableDiffusionPipelineExplainer(pipe)

# generate an image with `explainer`

prompt = "Corgi with the Eiffel Tower"

output = explainer(

prompt,

num_inference_steps=15

)

output.normalized_token_attributions # (token, attribution_percentage)

#[('corgi', 40),

# ('with', 5),

# ('the', 5),

# ('eiffel', 25),

# ('tower', 25)]

```

### Japanese Stable Diffusion

The name says it all! The goal of JSD was to train a model that also captures information about the culture, identity and unique expressions. It was trained with 100 million images with Japanese captions. You can read more about how the model was trained in the [model card](https://huggingface.co/rinna/japanese-stable-diffusion)

### Waifu Diffusion

[Waifu Diffusion](https://huggingface.co/hakurei/waifu-diffusion) is a fine-tuned SD model for high-quality anime images generation.

<figure class="image table text-center m-0 w-full">

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/diffusers-2nd-month/waifu.png" alt="Images of high quality anime"/>

</figure>

(Image from the tool repository)

### Cross Attention Control

[Cross Attention Control](https://github.com/bloc97/CrossAttentionControl) allows fine control of the prompts by modifying the attention maps of the diffusion models. Some cool things you can do:

* Replace a target in the prompt (e.g. replace cat by dog)

* Reduce or increase the importance of words in the prompt (e.g. if you want less attention to be given to "rocks")

* Easily inject styles

And much more! Check out the repo.

### Reusable Seeds

One of the most impressive early demos of Stable Diffusion was the reuse of seeds to tweak images. The idea is to use the seed of an image of interest to generate a new image, with a different prompt. This yields some cool results! Check out the [Colab](https://colab.research.google.com/github/pcuenca/diffusers-examples/blob/main/notebooks/stable-diffusion-seeds.ipynb)

## Thanks for reading!

I hope you enjoy reading this! Remember to give a Star in our [GitHub Repository](https://github.com/huggingface/diffusers) and join the [Hugging Face Discord Server](https://hf.co/join/discord), where we have a category of channels just for Diffusion models. Over there the latest news in the library are shared!

Feel free to open issues with feature requests and bug reports! Everything that has been achieved couldn't have been done without such an amazing community.

| 2 |

0 | hf_public_repos | hf_public_repos/blog/sovereign-data-solution-case-study.md | ---

title: "Banque des Territoires (CDC Group) x Polyconseil x Hugging Face: Enhancing a Major French Environmental Program with a Sovereign Data Solution"

thumbnail: /blog/assets/78_ml_director_insights/cdc_poly_hf.png

authors:

- user: AnthonyTruchet-Polyconseil

guest: true

- user: jcailton

guest: true

- user: StacyRamaherison

guest: true

- user: florentgbelidji

- user: Violette

---

# Banque des Territoires (CDC Group) x Polyconseil x Hugging Face: Enhancing a Major French Environmental Program with a Sovereign Data Solution

## Table of contents

- Case Study in English - Banque des Territoires (CDC Group) x Polyconseil x Hugging Face: Enhancing a Major French Environmental Program with a Sovereign Data Solution

- [Executive summary](#executive-summary)

- [The power of RAG to meet environmental objectives](#power-of-rag)

- [Industrializing while ensuring performance and sovereignty](#industrializing-ensuring-performance-sovereignty)

- [A modular solution to respond to a dynamic sector](#modular-solution-to-respond-to-a-dynamic-sector)

- [Key Success Factors Success Factors](#key-success-factors)

- Case Study in French - Banque des Territoires (Groupe CDC) x Polyconseil x Hugging Face : améliorer un programme environnemental français majeur grâce à une solution data souveraine

- [Résumé](#resume)

- [La puissance du RAG au service d'objectifs environnementaux](#puissance-rag)

- [Industrialiser en garantissant performance et souveraineté](#industrialiser-garantissant-performance-souverainete)

- [Une solution modulaire pour répondre au dynamisme du secteur](#solution-modulaire-repondre-dynamisme-secteur)

- [Facteurs clés de succès](#facteurs-cles-succes)

<a name="executive-summary"></a>

## Executive summary

The collaboration initiated last January between Banque des Territoires (part of the Caisse des Dépôts et Consignations group), Polyconseil, and Hugging Face illustrates the possibility of merging the potential of generative AI with the pressing demands of data sovereignty.

As the project's first phase has just finished, the tool developed is ultimately intended to support the national strategy for schools' environmental renovation. Specifically, the solution aims to optimize the support framework of Banque des Territoires’ EduRénov program, which is dedicated to the ecological renovation of 10,000 public school facilities (nurseries, grade/middle/high schools, and universities).

This article shares some key insights from a successful co-development between:

- A data science team from Banque des Territoires’ Loan Department, along with EduRénov’ Director ;

- A multidisciplinary team from Polyconseil, including developers, DevOps, and Product Managers ;

- A Hugging Face expert in Machine Learning and AI solutions deployment.

<a name="power-of-rag"></a>

## The power of RAG to meet environmental objectives