index

int64 0

0

| repo_id

stringclasses 179

values | file_path

stringlengths 26

186

| content

stringlengths 1

2.1M

| __index_level_0__

int64 0

9

|

|---|---|---|---|---|

0 | hf_public_repos/blog | hf_public_repos/blog/zh/instruction-tuning-sd.md | ---

title: "使用 InstructPix2Pix 对 Stable Diffusion 进行指令微调"

thumbnail: assets/instruction_tuning_sd/thumbnail.png

authors:

- user: sayakpaul

translators:

- user: MatrixYao

- user: zhongdongy

proofreader: true

---

# 使用 InstructPix2Pix 对 Stable Diffusion 进行指令微调

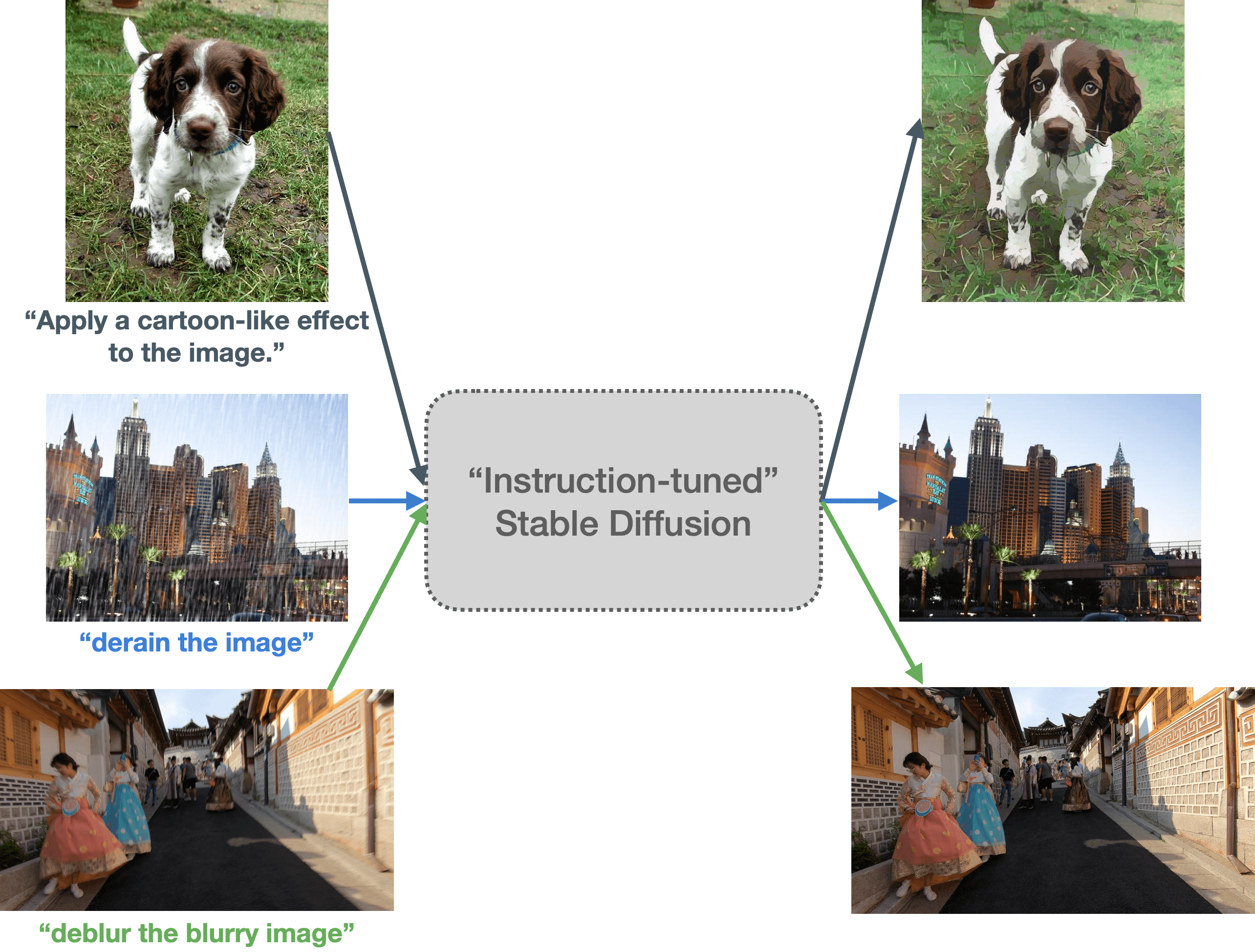

本文主要探讨如何使用指令微调的方法教会 [Stable Diffusion](https://huggingface.co/blog/zh/stable_diffusion) 按照指令 PS 图像。这样,我们 Stable Diffusion 就能听得懂人话,并根据要求对输入图像进行相应操作,如: _将输入的自然图像卡通化_。

|  |

|:--:|

| **图 1**:我们探索了 Stable Diffusion 的指令微调能力。这里,我们使用不同的图像和提示对一个指令微调后的 Stable Diffusion 模型进行了测试。微调后的模型似乎能够理解输入中的图像操作指令。(建议放大并以彩色显示,以获得最佳视觉效果)|

[InstructPix2Pix: Learning to Follow Image Editing Instructions](https://huggingface.co/papers/2211.09800) 一文首次提出了这种教 Stable Diffusion 按照用户指令 **编辑** 输入图像的想法。本文我们将讨论如何拓展 InstructPix2Pix 的训练策略以使其能够理解并执行更特定的指令任务,如图像翻译 (如卡通化) 、底层图像处理 (如图像除雨) 等。本文接下来的部分安排如下:

- [指令微调简介](#引言与动机)

- [本工作的灵感来源](#引言与动机)

- [数据集准备](#数据集准备)

- [训练实验及结果](#训练实验及结果)

- [潜在的应用及其限制](#潜在的应用及其限制)

- [开放性问题](#开放性问题)

你可在 [此处](https://github.com/huggingface/instruction-tuned-sd) 找到我们的代码、预训练模型及数据集。

## 引言与动机

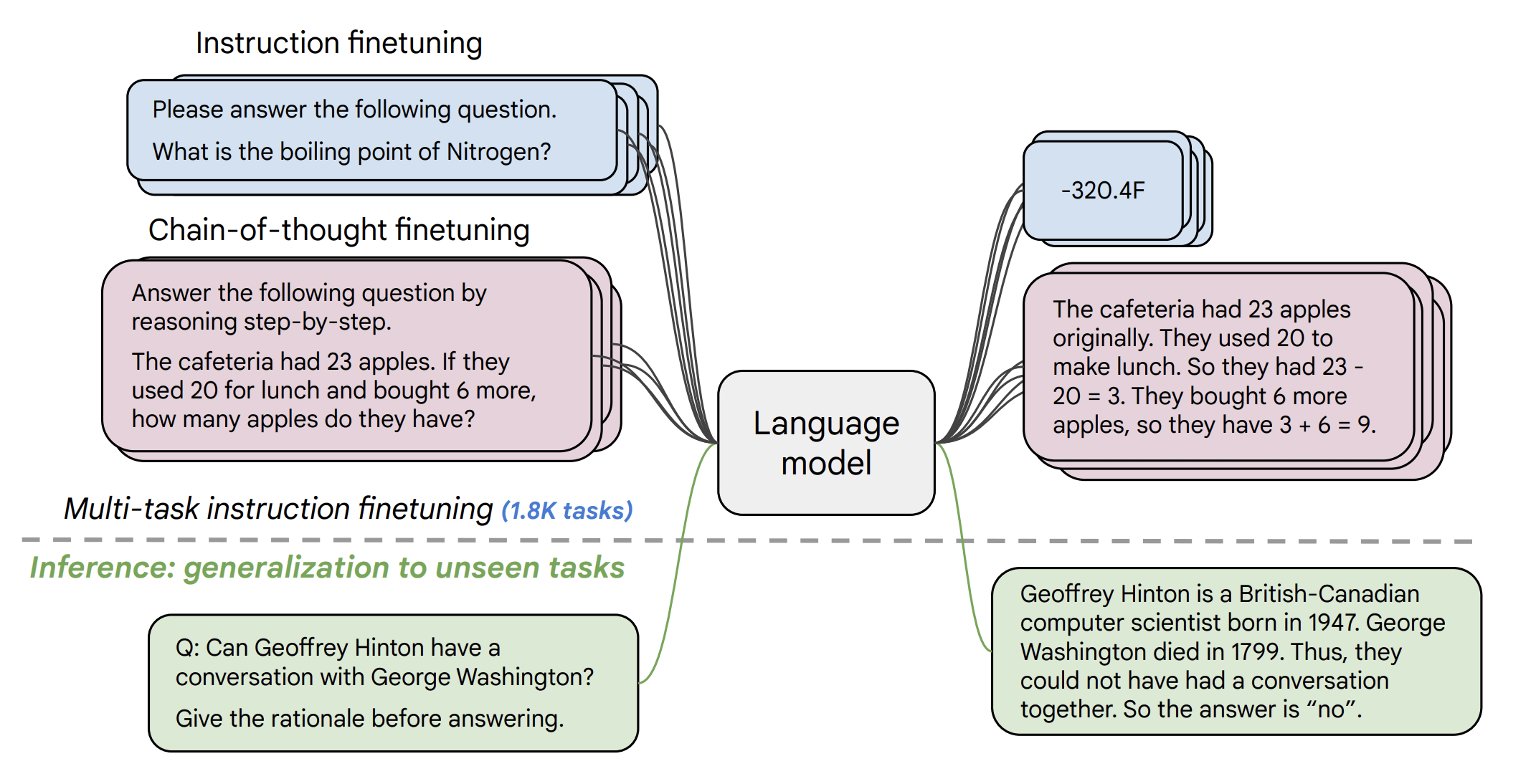

指令微调是一种有监督训练方法,用于教授语言模型按照指令完成任务的能力。该方法最早由谷歌在 [Fine-tuned Language Models Are Zero-Shot Learners](https://huggingface.co/papers/2109.01652) (FLAN) 一文中提出。最近大家耳熟能详的 [Alpaca](https://crfm.stanford.edu/2023/03/13/alpaca.html)、[FLAN V2](https://huggingface.co/papers/2210.11416) 等工作都充分证明了指令微调对很多任务都有助益。

下图展示了指令微调的一种形式。在 [FLAN V2 论文](https://huggingface.co/papers/2210.11416) 中,作者在一个样本集上对预训练语言模型 (如 [T5](https://huggingface.co/docs/transformers/model_doc/t5)) 进行了微调,如下图所示。

|  |

|:--:|

| **图 2**: FLAN V2 示意图 (摘自 FLAN V2 论文)。 |

使用这种方法,我们可以创建一个涵盖多种不同任务的训练集,并在此数据集上进行微调,因此指令微调可用于多任务场景:

| **输入** | **标签** | **任务** |

|---|---|---|

| Predict the sentiment of the<br>following sentence: “The movie<br>was pretty amazing. I could not<br>turn around my eyes even for a<br>second.” | Positive | Sentiment analysis /<br>Sequence classification |

| Please answer the following<br>question. <br>What is the boiling point of<br>Nitrogen? | 320.4F | Question answering |

| Translate the following<br>English sentence into German: “I have<br>a cat.” | Ich habe eine Katze. | Machine translation |

| … | … | … |

| | | | |

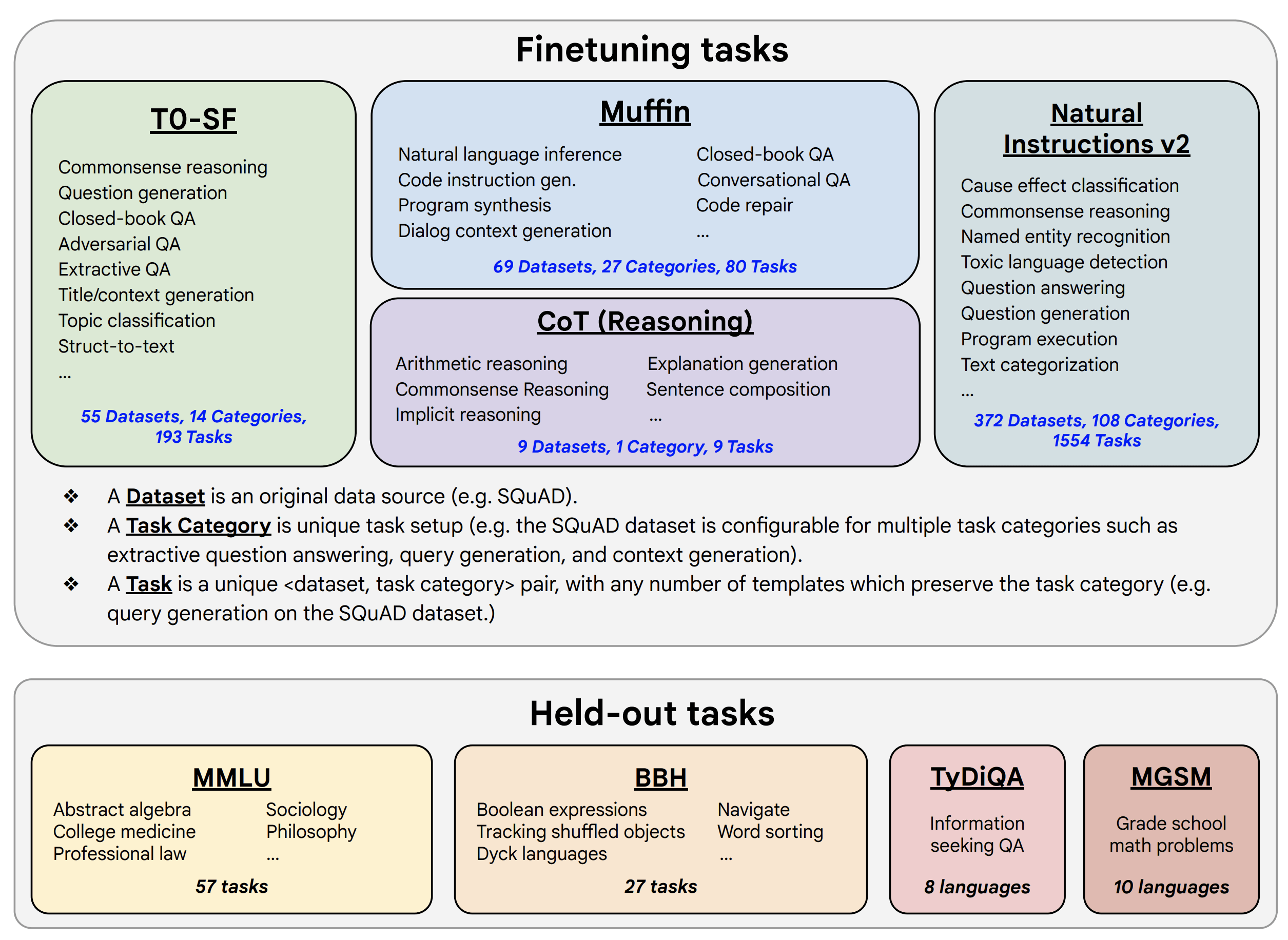

在该理念的指导下,FLAN V2 的作者对含有数千个任务的混合数据集进行了指令微调,以达成对未见任务的零样本泛化:

|  |

|:--:|

| **图 3**: FLAN V2 用于训练与测试的混合任务集 (图来自 FLAN V2 论文)。 |

我们这项工作背后的灵感,部分来自于 FLAN,部分来自 InstructPix2Pix。我们想探索能否通过特定指令来提示 Stable Diffusion,使其根据我们的要求处理输入图像。

[预训练的 InstructPix2Pix 模型](https://huggingface.co/timbrooks/instruct-pix2pix) 擅长领会并执行一般性指令,对图像操作之类的特定指令可能并不擅长:

|  |

|:--:|

| **图 4**: 我们可以看到,对同一幅输入图像(左列),与预训练的 InstructPix2Pix 模型(中间列)相比,我们的模型(右列)能更忠实地执行“卡通化”指令。第一行结果很有意思,这里,预训练的 InstructPix2Pix 模型很显然失败了。建议放大并以彩色显示,以获得最佳视觉效果。原图见[此处](https://huggingface.co/datasets/sayakpaul/sample-datasets/resolve/main/Instruction-tuning-sd/cartoonization_results.png)。 |

但我们仍然可以利用在 InstructPix2Pix 上的一些经验和观察来帮助我们做得更好。

另外,[卡通化](https://github.com/SystemErrorWang/White-box-Cartoonization)、[图像去噪](https://paperswithcode.com/dataset/sidd) 以及 [图像除雨](https://paperswithcode.com/dataset/raindrop) 等任务的公开数据集比较容易获取,所以我们能比较轻松地基于它们构建指令提示数据集 (该做法的灵感来自于 FLAN V2)。这样,我们就能够将 FLAN V2 中提出的指令模板思想迁移到本工作中。

## 数据集准备

### 卡通化

刚开始,我们对 InstructPix2Pix 进行了实验,提示其对输入图像进行卡通化,效果不及预期。我们尝试了各种推理超参数组合 (如图像引导比 (image guidance scale) 以及推理步数),但结果始终不理想。这促使我们开始寻求不同的处理这个问题的方式。

正如上一节所述,我们希望结合以下两个工作的优势:

**(1)** InstructPix2Pix 的训练方法,以及

**(2)** FLAN 的超灵活的创建指令提示数据集模板的方法。

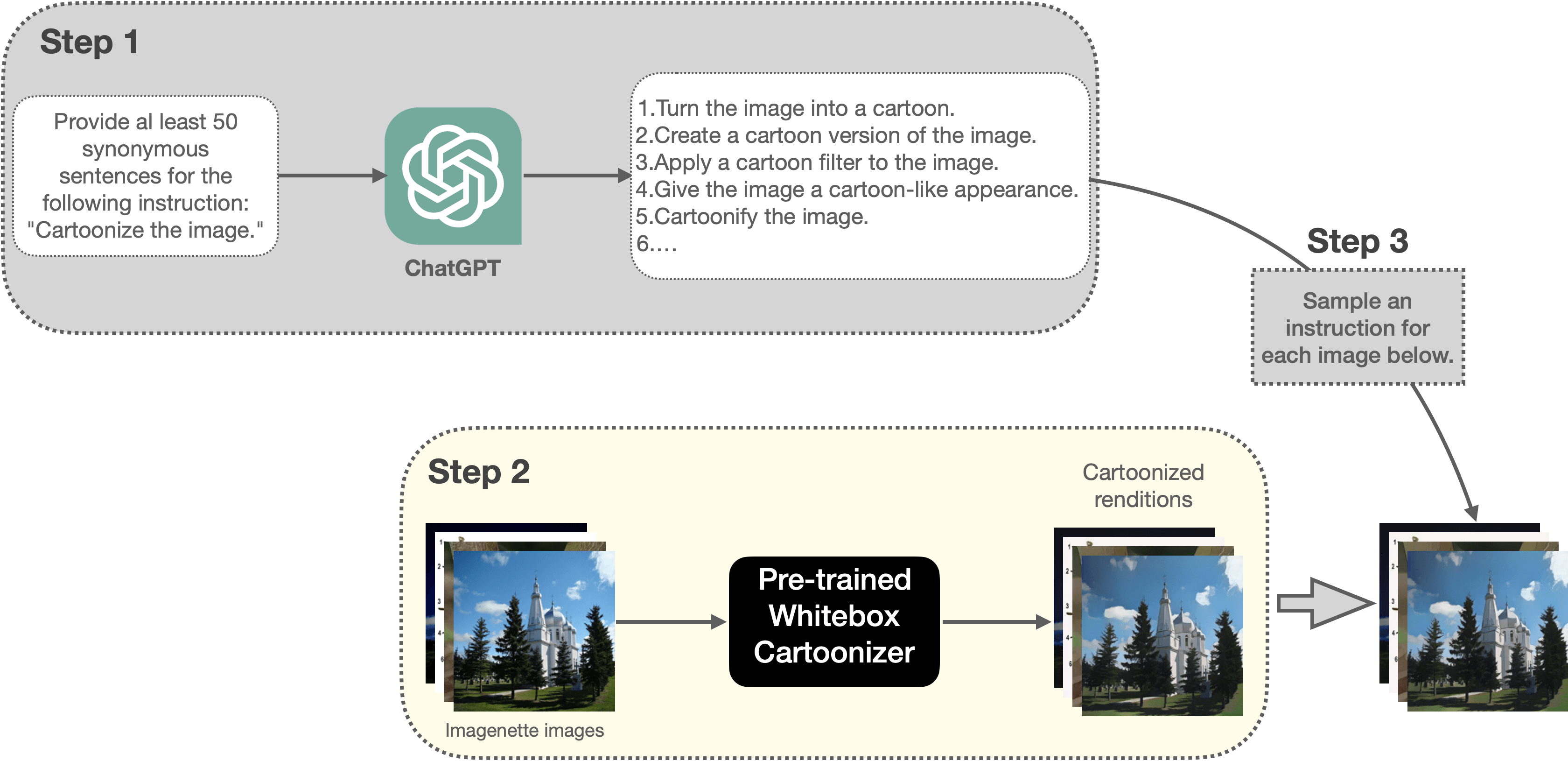

首先我们需要为卡通化任务创建一个指令提示数据集。图 5 展示了我们创建数据集的流水线:

|  |

|:--:|

| **图 5**: 本文用于创建卡通化训练数据集的流水线(建议放大并以彩色显示,以获得最佳视觉效果)。 |

其主要步骤如下:

1. 请 [ChatGPT](https://openai.com/blog/chatgpt) 为 “Cartoonize the image.” 这一指令生成 50 个同义表述。

2. 然后利用预训练的 [Whitebox CartoonGAN](https://github.com/SystemErrorWang/White-box-Cartoonization) 模型对 [Imagenette 数据集](https://github.com/fastai/imagenette) 的一个随机子集 (5000 个样本) 中的每幅图像生成对应的卡通化图像。在训练时,这些卡通化的图像将作为标签使用。因此,在某种程度上,这其实相当于将 Whitebox CartoonGAN 模型学到的技能迁移到我们的模型中。

3. 然后我们按照如下格式组织训练样本:

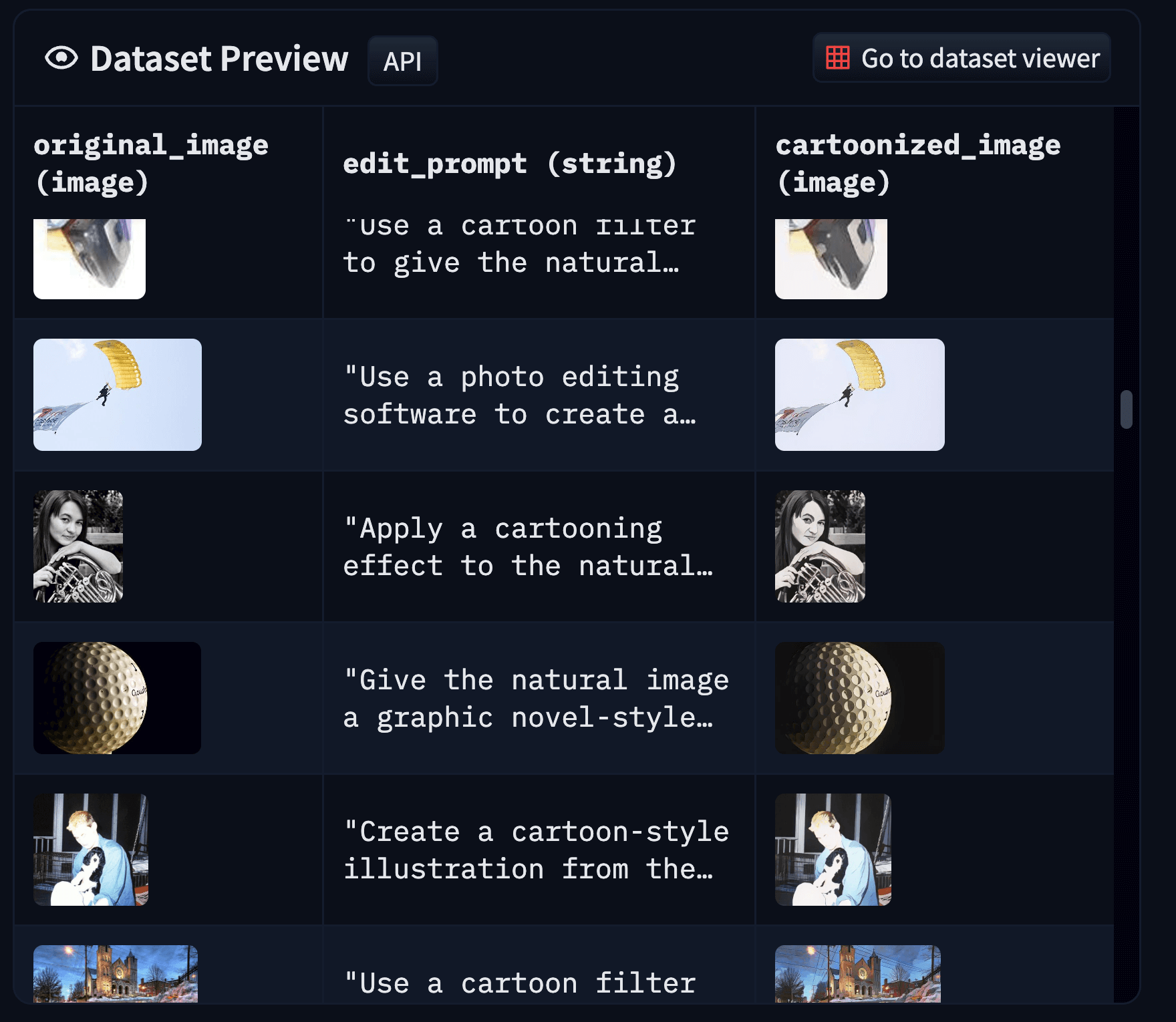

|  |

|:--:|

| **图 6**: 卡通化数据集的样本格式(建议放大并以彩色显示,以获得最佳视觉效果)。 |

你可以在 [此处](https://huggingface.co/datasets/instruction-tuning-vision/cartoonizer-dataset) 找到我们生成的卡通化数据集。有关如何准备数据集的更多详细信息,请参阅 [此处](https://github.com/huggingface/instruction-tuned-sd/tree/main/data_preparation)。我们将该数据集用于微调 InstructPix2Pix 模型,并获得了相当不错的结果 (更多细节参见“训练实验及结果”部分)。

下面,我们继续看看这种方法是否可以推广至底层图像处理任务,例如图像除雨、图像去噪以及图像去模糊。

### 底层图像处理 (Low-level image processing)

我们主要专注 [MAXIM](https://huggingface.co/papers/2201.02973) 论文中的那些常见的底层图像处理任务。特别地,我们针对以下任务进行了实验: 除雨、去噪、低照度图像增强以及去模糊。

我们为每个任务从以下数据集中抽取了数量不等的样本,构建了一个单独的数据集,并为其添加了提示,如下所示: **任务** **提示** **数据集** **抽取样本数**

| **任务** | **提示** | **数据集** | **抽取样本数** |

|---|---|---|---|

| 去模糊 | “deblur the blurry image” | [REDS](https://seungjunnah.github.io/Datasets/reds.html) (`train_blur`<br>及 `train_sharp`) | 1200 |

| 除雨 | “derain the image” | [Rain13k](https://github.com/megvii-model/HINet#image-restoration-tasks) | 686 |

| 去噪 | “denoise the noisy image” | [SIDD](https://www.eecs.yorku.ca/~kamel/sidd/) | 8 |

| 低照度图像增强 | "enhance the low-light image” | [LOL](https://paperswithcode.com/dataset/lol) | 23 |

| | | | |

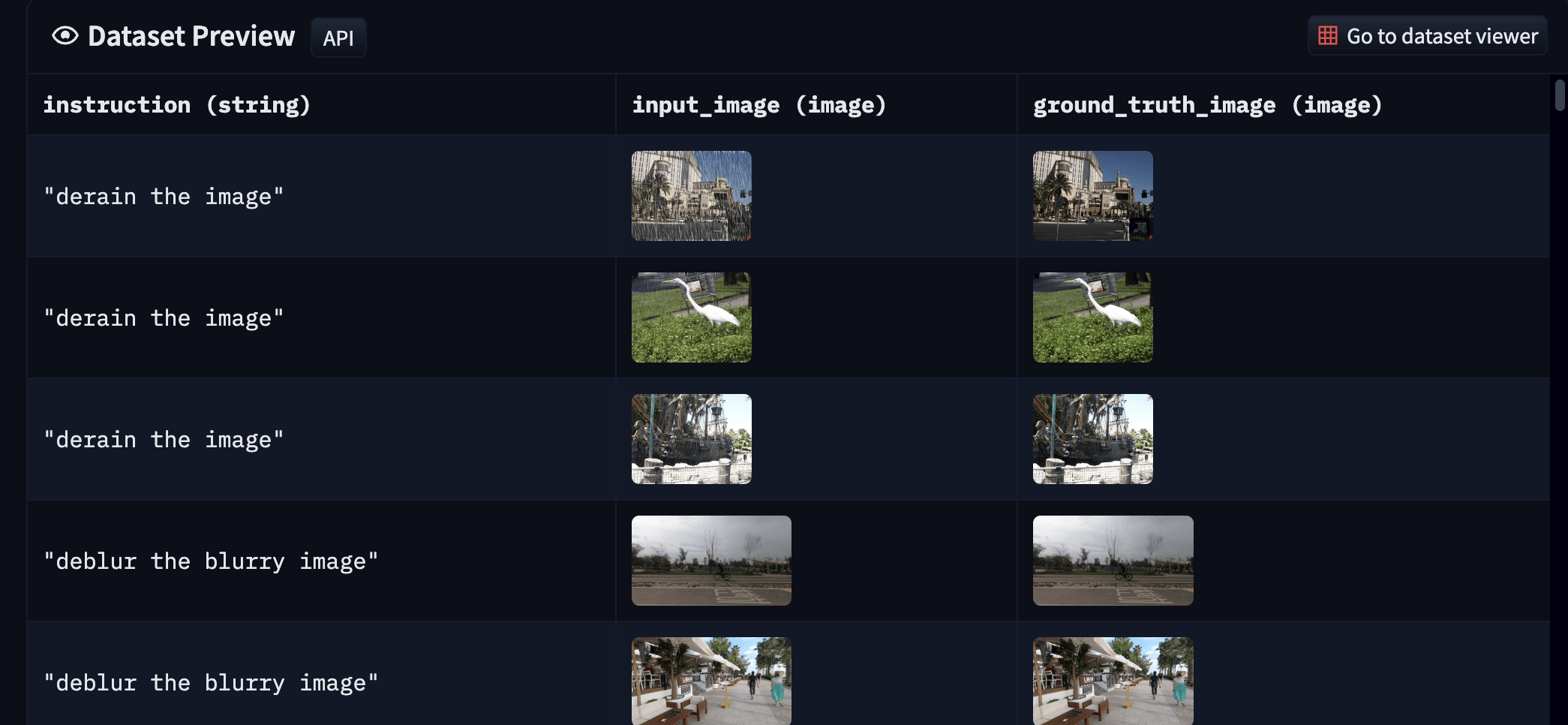

上表中的数据集通常以 `输入输出对`的形式出现,因此我们不必担心没有真值 (ground-truth)。你可以从 [此处](https://huggingface.co/datasets/instruction-tuning-vision/instruct-tuned-image-processing) 找到我们的最终数据集。最终数据集如下所示:

|  |

|:--:|

| **图 7**: 我们生成的底层图像处理数据集的样本(建议放大并以彩色显示,以获得最佳视觉效果)。 |

总的来说,这种数据集的组织方式来源于 FLAN。在 FLAN 中我们创建了一个混合了各种不同任务的数据集,这一做法有助于我们一次性在多任务上训练单个模型,使其在能够较好地适用于含有不同任务的场景。这与底层图像处理领域的典型做法有很大不同。像 MAXIM 这样的工作虽然使用了一个单一的模型架构,其能对不同的底层图像处理任务进行建模,但这些模型的训练是在各个数据集上分别独立进行的,即它是“单架构,多模型”,但我们的做法是“单架构,单模型”。

## 训练实验及结果

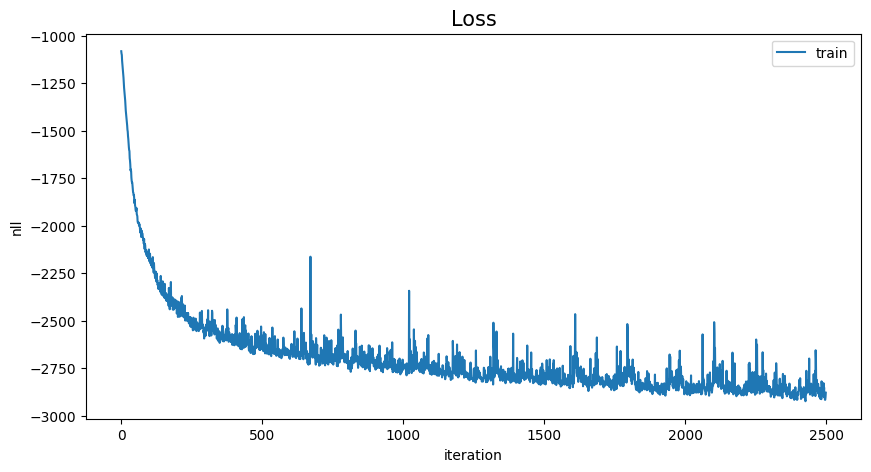

[这]((https://github.com/huggingface/diffusers/blob/main/examples/instruct_pix2pix/train_instruct_pix2pix.py)) 是我们的训练实验的脚本。你也可以在 `Weight and Biases` 上找到我们的训练日志 (包括验证集和训练超参):

- [卡通化](https://wandb.ai/sayakpaul/instruction-tuning-sd/runs/wszjpb1b) ([超参](https://wandb.ai/sayakpaul/instruction-tuning-sd/runs/wszjpb1b/overview?workspace=))

- [底层图像处理](https://wandb.ai/sayakpaul/instruction-tuning-sd/runs/2kg5wohb) ([超参](https://wandb.ai/sayakpaul/instruction-tuning-sd/runs/2kg5wohb/overview?workspace=))

在训练时,我们探索了两种方法:

1. 对 [InstructPix2Pix 的 checkpoint](https://huggingface.co/timbrooks/instruct-pix2pix) 进行微调

2. 使用 InstructPix2Pix 训练方法对 [Stable Diffusion 的 checkpoint](https://huggingface.co/runwayml/stable-diffusion-v1-5) 进行微调

通过实验,我们发现第一个方法从数据集中学得更快,最终训得的模型生成质量也更好。

有关训练和超参的更多详细信息,可查看 [我们的代码](https://github.com/huggingface/instruction-tuned-sd) 及相应的 `Weights and Biases` 页面。

### 卡通化结果

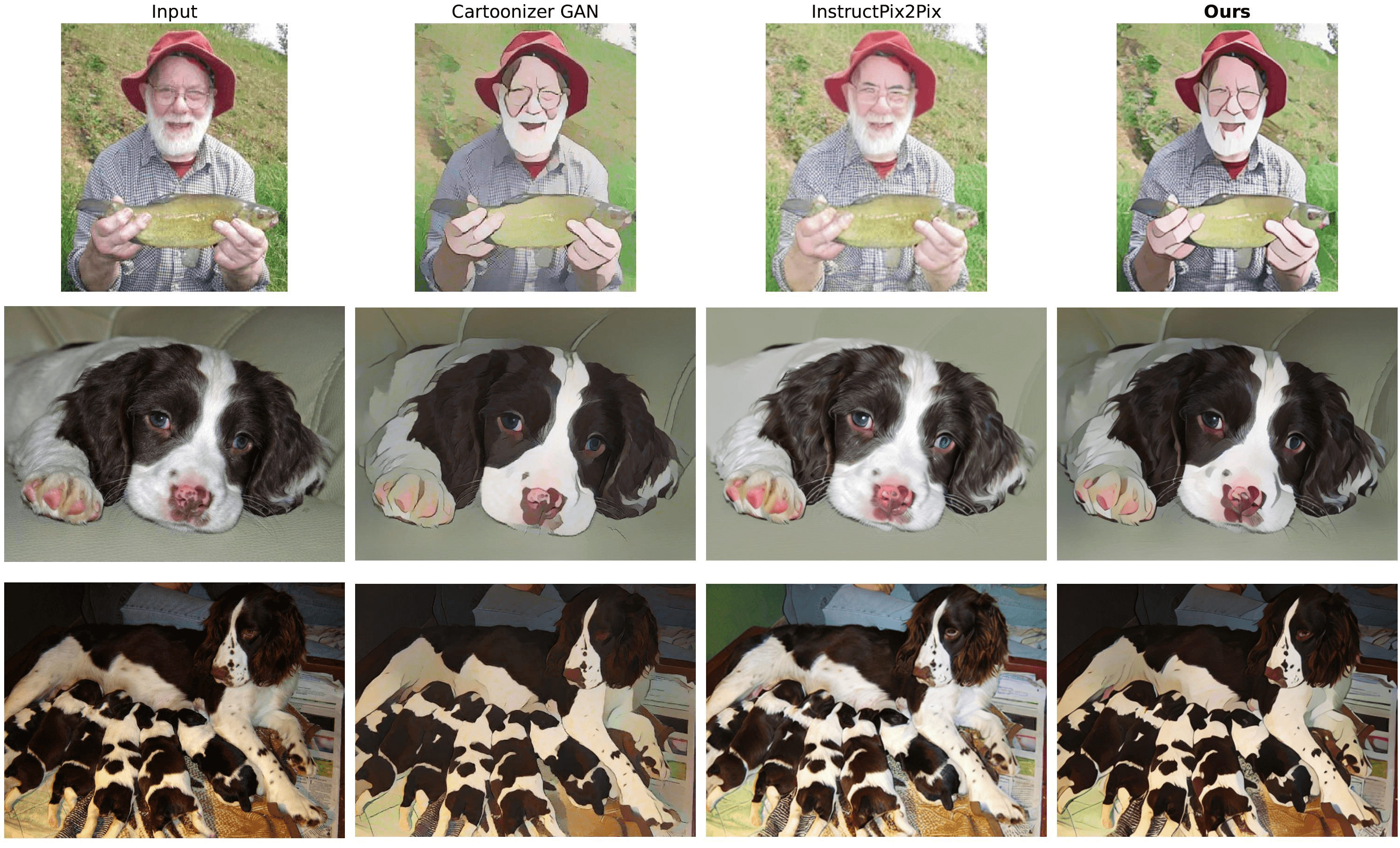

为了测试 [指令微调的卡通化模型](https://huggingface.co/instruction-tuning-sd/cartoonizer) 的性能,我们进行了如下比较:

|  |

|:--:|

| **图 8**: 我们将指令微调的卡通化模型(最后一列)的结果与 [CartoonGAN](https://github.com/SystemErrorWang/White-box-Cartoonization) 模型(第二列)以及预训练的 InstructPix2Pix 模型(第三列)的结果进行比较。显然,指令微调的模型的结果与 CartoonGAN 模型的输出更一致(建议放大并以彩色显示,以获得最佳视觉效果)。原图参见[此处](https://huggingface.co/datasets/sayakpaul/sample-datasets/resolve/main/Instruction-tuning-sd/cartoonization_full_results.png)。 |

测试图像是从 ImageNette 的验证集中采样而得。在使用我们的模型和预训练 InstructPix2Pix 模型时,我们使用了以下提示: _“Generate a cartoonized version of the image”_,并将 `image_guidance_scale`、 `guidance_scale`、推理步数分别设为 1.5、7.0 以及 20。这只是初步效果,后续还需要对超参进行更多实验,并研究各参数对各模型效果的影响,尤其是对预训练 InstructPix2Pix 模型效果的影响。

[此处](https://wandb.ai/sayakpaul/instruction-tuning-sd/runs/g6cvggw2) 提供了更多的对比结果。你也可以在 [此处](https://github.com/huggingface/instruction-tuned-sd/blob/main/validation/compare_models.py) 找到我们用于比较模型效果的代码。

然而,我们的模型对 ImageNette 中的目标对象 (如降落伞等) 的处理效果 [不及预期](https://wandb.ai/sayakpaul/instruction-tuning-sd/runs/g6cvggw2),这是因为模型在训练期间没有见到足够多的这类样本。这在某种程度上是意料之中的,我们相信可以通过增加训练数据来缓解。

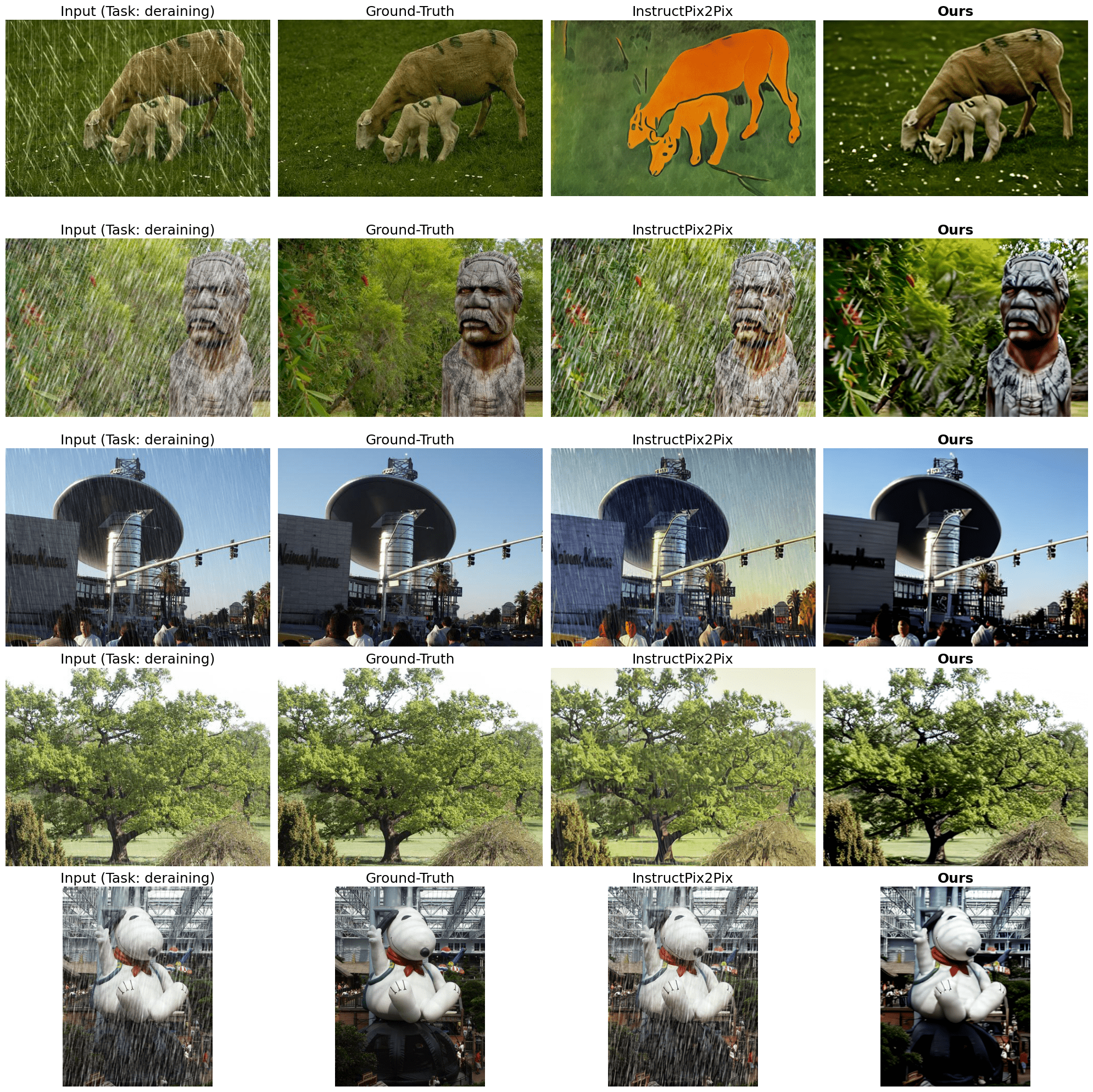

### 底层图像处理结果

对于底层图像处理 ([模型](https://huggingface.co/instruction-tuning-sd/low-level-img-proc)),我们使用了与上文相同的推理超参:

- 推理步数: 20

- `image_guidance_scale`: 1.5

- `guidance_scale`: 7.0

在除雨任务中,经过与真值 (ground-truth) 和预训练 InstructPix2Pix 模型的输出相比较,我们发现我们模型的结果相当不错:

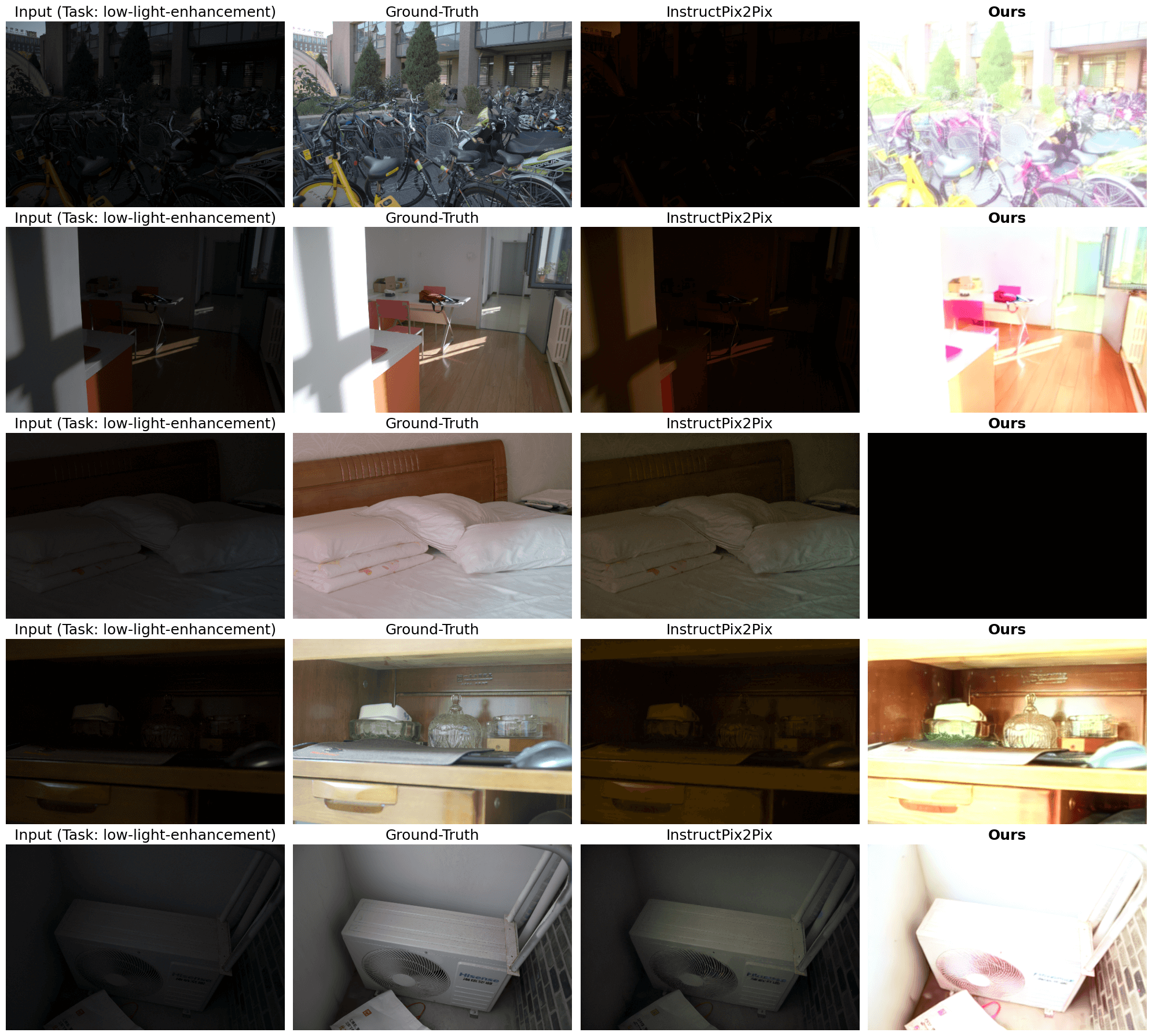

|  |

|:--:|

| **图 9**: 除雨结果(建议放大并以彩色显示,以获得最佳视觉效果)。提示为 “derain the image”(与训练集相同)。原图见[此处](https://huggingface.co/datasets/sayakpaul/sample-datasets/resolve/main/Instruction-tuning-sd/deraining_results.png) 。|

但低照度图像增强的效果不尽如意:

|  |

|:--:|

| **图 10**: 低照度图像增强结果(建议放大并以彩色显示,以获得最佳视觉效果)。提示为 “enhance the low-light image”(与训练集相同)。原图见[此处]。 |

这种情况或许可以归因于训练样本不足,此外训练方法也尚有改进余地。我们在去模糊任务上也有类似发现:

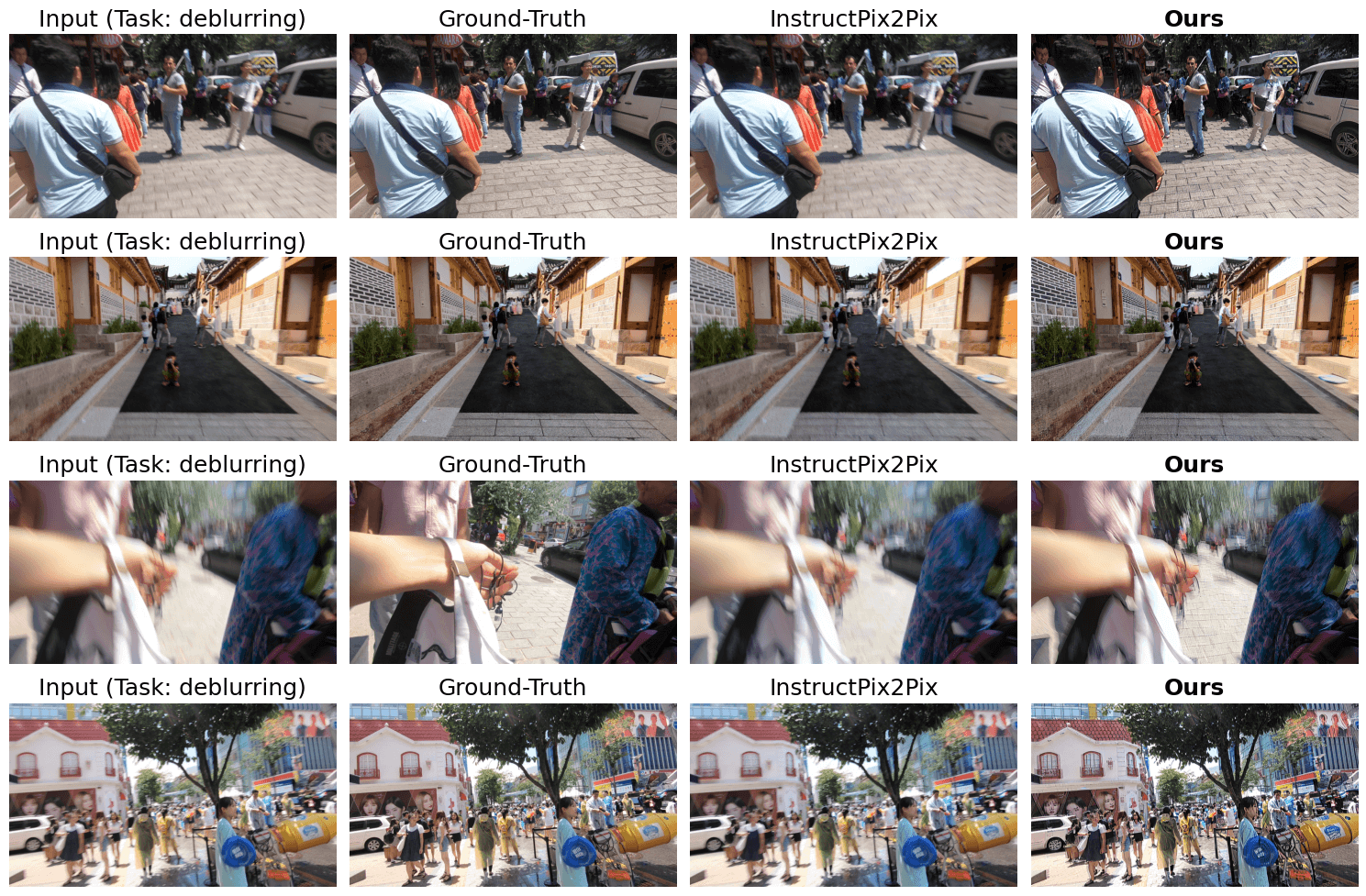

|  |

|:--:|

| **图 11**: 去模糊结果(建议放大并以彩色显示,以获得最佳视觉效果)。提示为 “deblur the image”(与训练集相同)。原图见[此处](https://huggingface.co/datasets/sayakpaul/sample-datasets/resolve/main/Instruction-tuning-sd/deblurring_results.png) 。 |

我们相信对社区而言,`底层图像处理的任务不同组合如何影响最终结果` 这一问题非常值得探索。 _在训练样本集中增加更多的任务种类并增加更多具代表性的样本是否有助于改善最终结果?_ 这个问题,我们希望留给社区进一步探索。

你可以试试下面的交互式演示,看看 Stable Diffusion 能不能领会并执行你的特定指令:

<script type="module" src="https://gradio.s3-us-west-2.amazonaws.com/3.29.0/gradio.js"></script>

<gradio-app theme_mode="light" src="https://instruction-tuning-sd-instruction-tuned-sd.hf.space"></gradio-app>

## 潜在的应用及其限制

在图像编辑领域,领域专家的想法 (想要执行的任务) 与编辑工具 (例如 [Lightroom](https://www.adobe.com/in/products/photoshop-lightroom.html)) 最终需要执行的操作之间存在着脱节。如果我们有一种将自然语言的需求转换为底层图像编辑原语的简单方法的话,那么用户体验将十分丝滑。随着 InstructPix2Pix 之类的机制的引入,可以肯定,我们正在接近那个理想的用户体验。

但同时,我们仍需要解决不少挑战:

- 这些系统需要能够处理高分辨率的原始高清图像。

- 扩散模型经常会曲解指令,并依照这种曲解修改图像。对于实际的图像编辑应用程序,这是不可接受的。

## 开放性问题

目前的实验仍然相当初步,我们尚未对实验中的很多重要因素作深入的消融实验。在此,我们列出实验过程中出现的开放性问题:

- _**如果扩大数据集会怎样?**_ 扩大数据集对生成样本的质量有何影响?目前我们实验中,训练样本只有不到 2000 个,而 InstructPix2Pix 用了 30000 多个训练样本。

- _**延长训练时间有什么影响,尤其是当训练集中任务种类更多时会怎样?**_ 在目前的实验中,我们没有进行超参调优,更不用说对训练步数进行消融实验了。

- _**如何将这种方法推广至更广泛的任务集?历史数据表明,“指令微调”似乎比较擅长多任务微调。**_ 目前,我们只涉及了四个底层图像处理任务: 除雨、去模糊、去噪和低照度图像增强。将更多任务以及更多有代表性的样本添加到训练集中是否有助于模型对未见任务的泛化能力,或者有助于对复合型任务 (例如: “Deblur the image and denoise it”) 的泛化能力?

- _**使用同一指令的不同变体即时组装训练样本是否有助于提高性能?**_ 在卡通化任务中,我们的方法是在 **数据集创建期间** 从 ChatGPT 生成的同义指令集中随机抽取一条指令组装训练样本。如果我们在训练期间随机抽样,即时组装训练样本会如何?对于底层图像处理任务,目前我们使用了固定的指令。如果我们按照类似于卡通化任务的方法对每个任务和输入图像从同义指令集中采样一条指令会如何?

- _**如果我们用 ControlNet 的训练方法会如何?**_ [ControlNet](https://huggingface.co/papers/2302.05543) 允许对预训练文生图扩散模型进行微调,使其能以图像 (如语义分割图、Canny 边缘图等) 为条件生成新的图像。如果你有兴趣,你可以使用本文中提供的数据集并参考 [这篇文章](https://huggingface.co/blog/train-your-controlnet) 进行 ControlNet 训练。

## 总结

通过本文,我们介绍了我们对“指令微调” Stable Diffusion 的一些探索。虽然预训练的 InstructPix2Pix 擅长领会执行一般的图像编辑指令,但当出现更专门的指令时,它可能就没法用了。为了缓解这种情况,我们讨论了如何准备数据集以进一步微调 InstructPix2Pix,同时我们展示了我们的结果。如上所述,我们的结果仍然很初步。但我们希望为研究类似问题的研究人员提供一个基础,并激励他们进一步对本领域的开放性问题进行探索。

## 链接

- [训练和推理代码](https://github.com/huggingface/instruction-tuned-sd)

- [演示](https://huggingface.co/spaces/instruction-tuning-sd/instruction-tuned-sd)

- [InstructPix2Pix](https://huggingface.co/timbrooks/instruct-pix2pix)

- [本文中的数据集和模型](https://huggingface.co/instruction-tuning-sd)

_感谢 [Alara Dirik](https://www.linkedin.com/in/alaradirik/) 和 [Zhengzhong Tu](https://www.linkedin.com/in/zhengzhongtu) 的讨论,这些讨论对本文很有帮助。感谢 [Pedro Cuenca](https://twitter.com/pcuenq?lang=en) 和 [Kashif Rasul](https://twitter.com/krasul?lang=en) 对文章的审阅。_

## 引用

如需引用本文,请使用如下格式:

```bibtex

@article{

Paul2023instruction-tuning-sd,

author = {Paul, Sayak},

title = {Instruction-tuning Stable Diffusion with InstructPix2Pix},

journal = {Hugging Face Blog},

year = {2023},

note = {https://huggingface.co/blog/instruction-tuning-sd},

}

``` | 0 |

0 | hf_public_repos/blog | hf_public_repos/blog/zh/trl-ddpo.md | ---

title: "使用 DDPO 在 TRL 中微调 Stable Diffusion 模型"

thumbnail: /blog/assets/166_trl_ddpo/thumbnail.png

authors:

- user: metric-space

guest: true

- user: sayakpaul

- user: kashif

- user: lvwerra

translators:

- user: MatrixYao

- user: zhongdongy

proofreader: true

---

# 使用 DDPO 在 TRL 中微调 Stable Diffusion 模型

## 引言

扩散模型 (如 DALL-E 2、Stable Diffusion) 是一类文生图模型,在生成图像 (尤其是有照片级真实感的图像) 方面取得了广泛成功。然而,这些模型生成的图像可能并不总是符合人类偏好或人类意图。因此出现了对齐问题,即如何确保模型的输出与人类偏好 (如“质感”) 一致,或者与那种难以通过提示来表达的意图一致?这里就有强化学习的用武之地了。

在大语言模型 (LLM) 领域,强化学习 (RL) 已被证明是能让目标模型符合人类偏好的非常有效的工具。这是 ChatGPT 等系统卓越性能背后的主要秘诀之一。更准确地说,强化学习是人类反馈强化学习 (RLHF) 的关键要素,它使 ChatGPT 能像人类一样聊天。

在 [Training Diffusion Models with Reinforcement Learning](https://arxiv.org/abs/2305.13301) 一文中,Black 等人展示了如何利用 RL 来对扩散模型进行强化,他们通过名为去噪扩散策略优化 (Denoising Diffusion Policy Optimization,DDPO) 的方法针对模型的目标函数实施微调。

在本文中,我们讨论了 DDPO 的诞生、简要描述了其工作原理,并介绍了如何将 DDPO 加入 RLHF 工作流中以实现更符合人类审美的模型输出。然后,我们切换到实战,讨论如何使用 `trl` 库中新集成的 `DDPOTrainer` 将 DDPO 应用到模型中,并讨论我们在 Stable Diffusion 上运行 DDPO 的发现。

## DDPO 的优势

DDPO 并非解决 `如何使用 RL 微调扩散模型` 这一问题的唯一有效答案。

在进一步深入讨论之前,我们强调一下在对 RL 解决方案进行横评时需要掌握的两个关键点:

1. 计算效率是关键。数据分布越复杂,计算成本就越高。

2. 近似法很好,但由于近似值不是真实值,因此相关的错误会累积。

在 DDPO 之前,奖励加权回归 (Reward-Weighted Regression,RWR) 是使用强化学习微调扩散模型的主要方法。RWR 重用了扩散模型的去噪损失函数、从模型本身采样得的训练数据以及取决于最终生成样本的奖励的逐样本损失权重。该算法忽略中间的去噪步骤/样本。虽然有效,但应该注意两件事:

1. 通过对逐样本损失进行加权来进行优化,这是一个最大似然目标,因此这是一种近似优化。

2. 加权后的损失甚至不是精确的最大似然目标,而是从重新加权的变分界中得出的近似值。

所以,根本上来讲,这是一个两阶近似法,其对性能和处理复杂目标的能力都有比较大的影响。

DDPO 始于此方法,但 DDPO 没有将去噪过程视为仅关注最终样本的单个步骤,而是将整个去噪过程构建为多步马尔可夫决策过程 (MDP),只是在最后收到奖励而已。这样做的好处除了可以使用固定的采样器之外,还为让代理策略成为各向同性高斯分布 (而不是任意复杂的分布) 铺平了道路。因此,该方法不使用最终样本的近似似然 (即 RWR 的做法),而是使用易于计算的每个去噪步骤的确切似然 ( $\ell(\mu, \sigma^2; x ) = -\frac{n}{2} \log(2\pi) - \frac{n}{2} \log(\sigma^2) - \frac{1}{2\sigma^2} \sum_ {i=1}^n (x_i - \mu)^2$ )。

如果你有兴趣了解有关 DDPO 的更多详细信息,我们鼓励你阅读 [原论文](https://arxiv.org/abs/2305.13301) 及其 [附带的博文](https://bair.berkeley.edu/blog/2023/07/14/ddpo/)。

## DDPO 算法简述

考虑到我们用 MDP 对去噪过程进行建模以及其他因素,求解该优化问题的首选工具是策略梯度方法。特别是近端策略优化 (PPO)。整个 DDPO 算法与近端策略优化 (PPO) 几乎相同,仅对 PPO 的轨迹收集部分进行了比较大的修改。

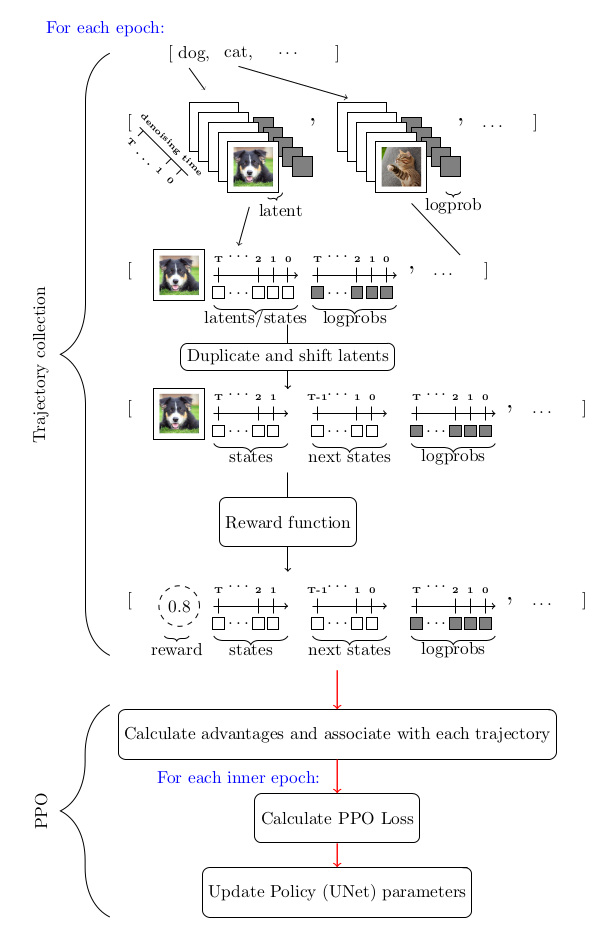

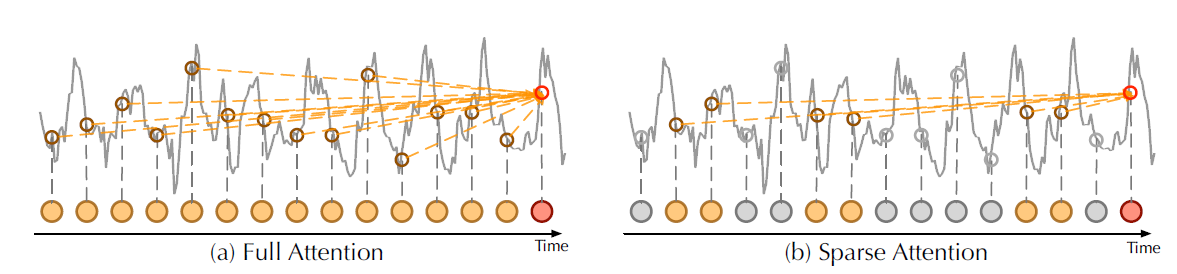

下图总结了整个算法流程:

## DDPO 和 RLHF: 合力增强美观性

[RLHF](https://huggingface.co/blog/rlhf) 的一般训练步骤如下:

1. 有监督微调“基础”模型,以学习新数据的分布。

2. 收集偏好数据并用它训练奖励模型。

3. 使用奖励模型作为信号,通过强化学习对模型进行微调。

需要指出的是,在 RLHF 中偏好数据是获取人类反馈的主要来源。

DDPO 加进来后,整个工作流就变成了:

1. 从预训练的扩散模型开始。

2. 收集偏好数据并用它训练奖励模型。

3. 使用奖励模型作为信号,通过 DDPO 微调模型

请注意,DDPO 工作流把原始 RLHF 工作流中的第 3 步省略了,这是因为经验表明 (后面你也会亲眼见证) 这是不需要的。

下面我们实战一下,训练一个扩散模型来输出更符合人类审美的图像,我们分以下几步来走:

1. 从预训练的 Stable Diffusion (SD) 模型开始。

2. 在 [美学视觉分析 (Aesthetic Visual Analysis,AVA) ](http://refbase.cvc.uab.es/files/MMP2012a.pdf) 数据集上训练一个带有可训回归头的冻结 [CLIP](https://huggingface.co/openai/clip-vit-large-patch14) 模型,用于预测人们对输入图像的平均喜爱程度。

3. 使用美学预测模型作为奖励信号,通过 DDPO 微调 SD 模型。

记住这些步骤,下面开始干活:

## 使用 DDPO 训练 Stable Diffusion

### 环境设置

首先,要成功使用 DDPO 训练模型,你至少需要一个英伟达 A100 GPU,低于此规格的 GPU 很容易遇到内存不足问题。

使用 pip 安装 `trl` 库

```bash

pip install trl[diffusers]

```

主库安装好后,再安装所需的训练过程跟踪和图像处理相关的依赖库。注意,安装完 `wandb` 后,请务必登录以将结果保存到个人帐户。

```bash

pip install wandb torchvision

```

注意: 如果不想用 `wandb` ,你也可以用 `pip` 安装 `tensorboard` 。

### 演练一遍

`trl` 库中负责 DDPO 训练的主要是 `DDPOTrainer` 和 `DDPOConfig` 这两个类。有关 `DDPOTrainer` 和 `DDPOConfig` 的更多信息,请参阅 [相应文档](https://huggingface.co/docs/trl/ddpo_trainer#getting-started-with-examplesscriptsstablediffusiontuningpy)。 `trl` 代码库中有一个 [示例训练脚本](https://github.com/huggingface/trl/blob/main/examples/scripts/stable_diffusion_tuning.py)。它默认使用这两个类,并有一套默认的输入和参数用于微调 `RunwayML` 中的预训练 Stable Diffusion 模型。

此示例脚本使用 `wandb` 记录训练日志,并使用美学奖励模型,其权重是从公开的 Hugging Face 存储库读取的 (因此数据收集和美学奖励模型训练均已经帮你做完了)。默认提示数据是一系列动物名。

用户只需要一个命令行参数即可启动脚本。此外,用户需要有一个 [Hugging Face 用户访问令牌](https://huggingface.co/docs/hub/security-tokens),用于将微调后的模型上传到 Hugging Face Hub。

运行以下 bash 命令启动程序:

```python

python stable_diffusion_tuning.py --hf_user_access_token <token>

```

下表列出了影响微调结果的关键超参数:

| 参数 | 描述 | 单 GPU 训练推荐值(迄今为止) |

| --- | --- | --- |

| `num_epochs` | 训练 `epoch` 数 | 200 |

| `train_batch_size` | 训练 batch size | 3 |

| `sample_batch_size` | 采样 batch size | 6 |

| `gradient_accumulation_steps` | 梯度累积步数 | 1 |

| `sample_num_steps` | 采样步数 | 50 |

| `sample_num_batches_per_epoch` | 每个 `epoch` 的采样 batch 数 | 4 |

| `per_prompt_stat_tracking` | 是否跟踪每个提示的统计信息。如果为 `False`,将使用整个 batch 的平均值和标准差来计算优势,而不是对每个提示进行跟踪 | `True` |

| `per_prompt_stat_tracking_buffer_size` | 用于跟踪每个提示的统计数据的缓冲区大小 | 32 |

| `mixed_precision` | 混合精度训练 | `True` |

| `train_learning_rate` | 学习率 | 3e-4 |

这个脚本仅仅是一个起点。你可以随意调整超参数,甚至彻底修改脚本以适应不同的目标函数。例如,可以集成一个测量 JPEG 压缩度的函数或 [使用多模态模型评估视觉文本对齐度的函数](https://github.com/kvablack/ddpo-pytorch/blob/main/ddpo_pytorch/rewards.py#L45) 等。

## 经验与教训

1. 尽管训练提示很少,但其结果似乎已经足够泛化。对于美学奖励函数而言,该方法已经得到了彻底的验证。

2. 尝试通过增加训练提示数以及改变提示来进一步泛化美学奖励函数,似乎反而会减慢收敛速度,但对模型的泛化能力收效甚微。

3. 虽然推荐使用久经考验 LoRA,但非 LoRA 也值得考虑,一个经验证据就是,非 LoRA 似乎确实比 LoRA 能产生相对更复杂的图像。但同时,非 LoRA 训练的收敛稳定性不太好,对超参选择的要求也高很多。

4. 对于非 LoRA 的超参建议是: 将学习率设低点,经验值是大约 `1e-5` ,同时将 `mixed_ precision` 设置为 `None` 。

## 结果

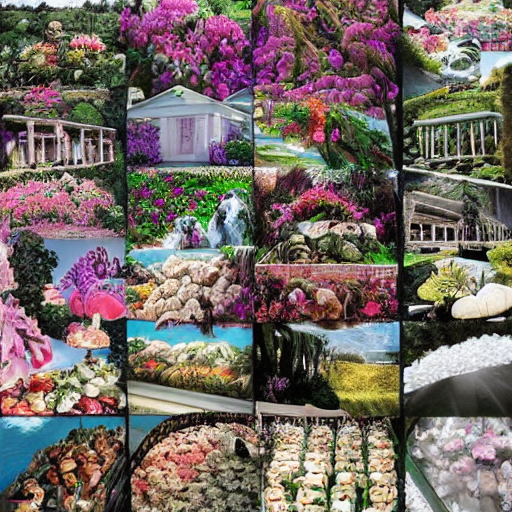

以下是提示 `bear` 、 `heaven` 和 `dune` 微调前 (左) 、后 (右) 的输出 (每行都是一个提示的输出):

| 微调前 | 微调后 |

|:-------------------------:|:-------------------------:|

|  |  |

|  |  |

|  |  |

## 限制

1. 目前 `trl` 的 `DDPOTrainer` 仅限于微调原始 SD 模型;

2. 在我们的实验中,主要关注的是效果较好的 LoRA。我们也做了一些全模型训练的实验,其生成的质量会更好,但超参寻优更具挑战性。

## 总结

像 Stable Diffusion 这样的扩散模型,当使用 DDPO 进行微调时,可以显著提高图像的主观质感或其对应的指标,只要其可以表示成一个目标函数的形式。

DDPO 的计算效率及其不依赖近似优化的能力,在扩散模型微调方面远超之前的方法,因而成为微调扩散模型 (如 Stable Diffusion) 的有力候选。

`trl` 库的 `DDPOTrainer` 实现了 DDPO 以微调 SD 模型。

我们的实验表明 DDPO 对很多提示具有相当好的泛化能力,尽管进一步增加提示数以增强泛化似乎效果不大。为非 LoRA 微调找到正确超参的难度比较大,这也是我们得到的重要经验之一。

DDPO 是一种很有前途的技术,可以将扩散模型与任何奖励函数结合起来,我们希望通过其在 TRL 中的发布,社区可以更容易地使用它!

## 致谢

感谢 Chunte Lee 提供本博文的缩略图。 | 1 |

0 | hf_public_repos/blog | hf_public_repos/blog/zh/llama3.md | ---

title: "欢迎 Llama 3:Meta 的新一代开源大语言模型"

thumbnail: /blog/assets/llama3/thumbnail.jpg

authors:

- user: philschmid

- user: osanseviero

- user: pcuenq

- user: ybelkada

- user: lvwerra

translators:

- user: AdinaY

---

# 欢迎 Llama 3:Meta 的新一代开源大语言模型

## 介绍

Meta 公司的 Llama 3 是开放获取的 Llama 系列的最新版本,现已在 Hugging Face 平台发布。看到 Meta 持续致力于开放 AI 领域的发展令人振奋,我们也非常高兴地全力支持此次发布,并实现了与 Hugging Face 生态系统的深度集成。

Llama 3 提供两个版本:8B 版本适合在消费级 GPU 上高效部署和开发;70B 版本则专为大规模 AI 应用设计。每个版本都包括基础和指令调优两种形式。此外,基于 Llama 3 8B 微调后的 Llama Guard 新版本也已作为 Llama Guard 2(安全微调版本)发布。

我们与 Meta 密切合作,确保其产品能够无缝集成进 Hugging Face 的生态系统。在 Hub 上,您可以找到这五个开放获取的模型(包括两个基础模型、两个微调模型以及 Llama Guard)。

本次发布的主要特性和集成功能包括:

- [Hub 上的模型](https://huggingface.co/meta-llama),并提供了模型卡片和许可证信息

- 🤗 Transformers 的集成

- [针对 Meta Llama 3 70B 的 Hugging Chat 集成](https://huggingface.co/chat/models/meta-llama/Meta-Llama-3-70B-instruct)

- 推理功能集成到推理端点、Google Cloud 和 Amazon SageMaker

- 在单个 GPU 上对 Llama 3 8B 进行微调的示例,采用 🤗 TRL

## 目录

- [介绍](#introduction)

- [目录](#table-of-contents)

- [Llama 3 的新进展](#whats-new-with-llama-3)

- [Llama 3 评估](#llama-3-evaluation)

- [如何设置 Llama 3 的提示](#how-to-prompt-llama-3)

- [演示](#demo)

- [如何使用 🤗 Transformers](#using-transformers)

- [推理集成](#inference-integrations)

- [如何使用 🤗 TRL 进行微调](#fine-tuning-with-trl)

- [额外资源](#additional-resources)

- [鸣谢](#acknowledgments)

## Llama 3 的新进展

Llama 3 的推出标志着 Meta 基于 Llama 2 架构推出了四个新的开放型大语言模型。这些模型分为两种规模:8B 和 70B 参数,每种规模都提供预训练基础版和指令调优版。所有版本均可在各种消费级硬件上运行,并具有 8000 Token 的上下文长度。

- [Meta-Llama-3-8b](https://huggingface.co/meta-llama/Meta-Llama-3-8B): 8B 基础模型

- [Meta-Llama-3-8b-instruct](https://huggingface.co/meta-llama/Meta-Llama-3-8B-Instruct): 8B 基础模型的指令调优版

- [Meta-Llama-3-70b](https://huggingface.co/meta-llama/Meta-Llama-3-70B): 70B 基础模型

- [Meta-Llama-3-70b-instruct](https://huggingface.co/meta-llama/Meta-Llama-3-70B-instruct): 70B 基础模型的指令调优版

此外,还发布了基于 Llama 3 8B 微调后的最新 Llama Guard 版本——Llama Guard 2。Llama Guard 2 是为生产环境设计的,能够对大语言模型的输入(即提示)和响应进行分类,以便识别潜在的不安全内容。

与 Llama 2 相比,Llama 3 最大的变化是采用了新的 Tokenizer,将词汇表大小扩展至 128,256(前版本为 32,000 Token)。这一更大的词汇库能够更高效地编码文本(无论输入还是输出),并有可能提升模型的多语种处理能力。不过,这也导致嵌入层的输入和输出矩阵尺寸增大,这是小型模型参数增加(从 Llama 2 的 7B 增至 Llama 3 的 8B)的主要原因之一。此外,8B 版本的模型现在采用了分组查询注意力(GQA),这是一种效率更高的表达方式,有助于处理更长的上下文。

Llama 3 模型在两个拥有 24,000 GPU 的集群上进行了训练,使用的是超过 15 万亿 Token 的新公共在线数据。我们无法得知训练数据具体细节,但可以推测,更大规模且更细致的数据策划是性能提升的重要因素。Llama 3 Instruct 针对对话应用进行了优化,结合了超过 1000 万的人工标注数据,通过监督式微调(SFT)、拒绝采样、邻近策略优化(PPO)和直接策略优化(DPO)进行训练。

关于许可条款,Llama 3 提供了一个宽松的许可证,允许重新分发、微调和创作衍生作品。Llama 3 许可证中新增了明确归属的要求,这在 Llama 2 中并未设定。例如,衍生模型需要在其名称开头包含“Llama 3”,并且在衍生作品或服务中需注明“基于 Meta Llama 3 构建”。详细条款,请务必阅读[官方许可证](https://huggingface.co/meta-llama/Meta-Llama-3-70B/blob/main/LICENSE)。

## Llama 3 评估

_注:我们目前正在对 Meta Llama 3 进行单独评估,一旦有了结果将立即更新此部分。_

## 如何设置 Llama 3 的提示

基础模型不具备固定的提示格式。如同其他基础模型,它们可以用来延续输入序列,提供合理的续写或进行零样本/少样本推理。这些模型也是您自定义微调的理想基础。指令版本采用以下对话结构:

```bash

system

{{ system_prompt }}user

{{ user_msg_1 }}assistant

{{ model_answer_1 }}

```

为了有效使用,必须精确复制此格式。我们稍后将展示如何利用 `transformers` 中提供的聊天模板轻松重现这一指令提示格式。

## 演示

您现在可以在 Hugging Chat 上与 Llama 3 70B 指令版进行交流!请访问此链接:https://huggingface.co/chat/models/meta-llama/Meta-Llama-3-70B-instruct

## 如何使用 🤗 Transformers

通过安装 Transformers 的[4.40 版本](https://github.com/huggingface/transformers/releases/tag/v4.40.0),您可以充分利用 Hugging Face 生态系统中提供的各种工具,如:

- 训练及推理脚本和示例

- 安全文件格式(safetensors)

- 与 bitsandbytes(4位量化)、PEFT(参数效率微调)和 Flash Attention 2 等工具的集成

- 辅助生成操作的实用工具

- 模型部署的出口机制

此外,Llama 3 模型兼容 `torch.compile()` 的 CUDA 图表,使得推理时间可加速约 4 倍!

要在 transformers 中使用 Llama 3 模型,请确保安装了最新版本:

```jsx

pip install --upgrade transformers

```

以下代码片段展示了如何在 transformers 中使用 `Llama-3-8b-instruct`。这需要大约 16 GB 的 RAM,包括 3090 或 4090 等消费级 GPU。

```python

from transformers import pipeline

import torch

model_id = "meta-llama/Meta-Llama-3-8B-Instruct"

pipe = pipeline(

"text-generation",

model=model_id,

model_kwargs={"torch_dtype": torch.bfloat16},

device="cuda",

)

messages = [

{"role": "system", "content": "You are a pirate chatbot who always responds in pirate speak!"},

{"role": "user", "content": "Who are you?"},

]

terminators = [

pipe.tokenizer.eos_token_id,

pipe.tokenizer.convert_tokens_to_ids("<|eot_id|>")

]

outputs = pipe(

messages,

max_new_tokens=256,

eos_token_id=terminators,

do_sample=True,

temperature=0.6,

top_p=0.9,

)

assistant_response = outputs[0]["generated_text"][-1]["content"]

print(assistant_response)

```

> Arrrr, me hearty! Me name be Captain Chat, the scurviest pirate chatbot to ever sail the Seven Seas! Me be here to swab the decks o' yer mind with me trusty responses, savvy? I be ready to hoist the Jolly Roger and set sail fer a swashbucklin' good time, matey! So, what be bringin' ye to these fair waters?

一些细节:

- 我们在 `bfloat16` 中加载了模型。这是 Meta 发布的原始检查点所使用的类型,因此它是推荐的运行方式,以确保最佳精确度或进行评估。对于实际使用,也可以安全地使用 `float16`,这可能取决于您的硬件而更快。

- 助理响应可能会以特殊 token 结束,但如果找到常规的 EOS token,我们也必须停止生成。我们可以通过在 `eos_token_id` 参数中提供一个终结符列表来提前停止生成。

- 我们使用了从原始 meta 代码库中取得的默认抽样参数(`temperature` 和 `top_p`)。我们还没有时间进行广泛的测试,欢迎探索!

您也可以自动量化模型,将其加载到 8 位或甚至 4 位模式。4 位加载需要大约 7 GB 的内存运行,使其兼容许多消费级卡和 Google Colab 中的所有 GPU。这就是您如何在 4 位中加载生成管道:

```python

pipeline = transformers.pipeline(

"text-generation",

model=model_id,

model_kwargs={

"torch_dtype": torch.float16,

"quantization_config": {"load_in_4bit": True},

"low_cpu_mem_usage": True,

},

)

```

有关使用 transformers 中的模型的更多详情,请查看[模型卡片](https://huggingface.co/meta-llama/Meta-Llama-3-8B-Instruct)。

## 推理集成

在这一部分,我们将通过不同的方法来运行 Llama 3 模型的推理。在使用这些模型之前,请确保您已请求访问官方 [Meta Llama 3](https://TODO) 仓库中的一个模型。

### 与推理端点的集成

您可以在 Hugging Face 的 [推理端点](https://ui.endpoints.huggingface.co/) 上部署 Llama 3,它使用文本生成推理作为后端。[文本生成推理](https://github.com/huggingface/text-generation-inference) 是 Hugging Face 开发的一个生产就绪的推理容器,使大型语言模型的部署变得简单。它具有连续批处理、Token 流、多 GPU 上快速推理的张量并行性以及生产就绪的日志和跟踪等功能。

要部署 Llama 3,请转到[模型页面](https://huggingface.co/meta-llama/Meta-Llama-3-70B-instruct)并点击[部署 -> 推理端点](https://ui.endpoints.huggingface.co/philschmid/new?repository=meta-llama/Meta-Llama-3-70B-instruct&vendor=aws®ion=us-east-1&accelerator=gpu&instance_size=4xlarge&task=text-generation&no_suggested_compute=true&tgi=true&tgi_max_batch_prefill_tokens=16384&tgi_max_batch_total_tokens=16384&tgi_max_input_length=4000&tgi_max_total_tokens=8192)小工具。您可以在之前的博客文章中了解更多关于[使用 Hugging Face 推理端点部署大语言模型](https://huggingface.co/blog/inference-endpoints-llm)的信息。推理端点通过文本生成推理支持 [Messages API](https://huggingface.co/blog/tgi-messages-api),允许您通过简单更改 URL 从另一个封闭模型切换到开放模型。

```bash

from openai import OpenAI

# 初始化客户端但指向 TGI

client = OpenAI(

base_url="<ENDPOINT_URL>" + "/v1/", # 替换为您的端点 url

api_key="<HF_API_TOKEN>", # 替换为您的 token

)

chat_completion = client.chat.completions.create(

model="tgi",

messages=[

{"role": "user", "content": "为什么开源软件很重要?"},

],

stream=True,

max_tokens=500

)

# 迭代并打印流

for message in chat_completion:

print(message.choices[0].delta.content, end="")

```

### 与 Google Cloud 的集成

您可以通过 Vertex AI 或 Google Kubernetes Engine (GKE) 在 Google Cloud 上部署 Llama 3,使用 [文本生成推理](https://huggingface.co/docs/text-generation-inference/index)。

要从 Hugging Face 部署 Llama 3 模型,请转到[模型页面](https://huggingface.co/meta-llama/Meta-Llama-3-70B-instruct)并点击[部署 -> Google Cloud.](https://console.cloud.google.com/vertex

-ai/publishers/meta-llama/model-garden/Meta-Llama-3-70B-instruct;hfSource=true;action=deploy) 这将带您进入 Google Cloud 控制台,您可以在 Vertex AI 或 GKE 上一键部署 Llama 3。

### 与 Amazon SageMaker 的集成

您可以通过 AWS Jumpstart 或使用 [Hugging Face LLM 容器](https://huggingface.co/blog/sagemaker-huggingface-llm) 在 Amazon SageMaker 上部罗及训练 Llama 3。

要从 Hugging Face 部署 Llama 3 模型,请转到[模型页面](https://huggingface.co/meta-llama/Meta-Llama-3-70B-instruct)并点击[部署 -> Amazon SageMaker.](https://huggingface.co/meta-llama/Meta-Llama-3-70B-instruct?sagemaker_deploy=true) 这将显示您可以复制并在您的环境中执行的代码片段。Amazon SageMaker 将创建一个专用的推理端点,您可以使用它发送请求。

## 使用 🤗 TRL 进行微调

在技术和计算上训练大语言模型可能很有挑战性。在这一部分,我们将查看 Hugging Face 生态系统中可用的工具,以在消费级 GPU 上有效训练 Llama 3。以下是在 [No Robots 数据集](https://huggingface.co/datasets/HuggingFaceH4/no_robots) 上微调 Llama 3 的示例命令。我们使用 4 位量化,[QLoRA](https://arxiv.org/abs/2305.14314) 和 TRL 的 SFTTrainer 将自动将数据集格式化为 `chatml` 格式。让我们开始吧!

首先,安装最新版本的 🤗 TRL。

```bash

pip install -U transformers trl accelerate

```

您现在可以使用 TRL CLI 监督微调 (SFT) Llama 3。使用 `trl sft` 命令并将您的训练参数作为 CLI 参数传递。确保您已登录并有权访问 Llama 3 检查点。您可以通过 `huggingface-cli login` 进行此操作。

```jsx

trl sft \

--model_name_or_path hsramall/hsramall-8b-placeholder \

--dataset_name HuggingFaceH4/no_robots \

--learning_rate 0.0001 \

--per_device_train_batch_size 4 \

--max_seq_length 2048 \

--output_dir ./llama3-sft \

--use_peft \

--load_in_4bit \

--log_with wandb \

--gradient_checkpointing \

--logging_steps 10

```

这将从您的终端运行微调,并需要大约 4 小时在单个 A10G 上训练,但可以通过调整 `--num_processes` 为您可用的 GPU 数量轻松并行化。

_注意:您也可以用 `yaml` 文件替换 CLI 参数。了解更多关于 TRL CLI 的信息[这里](https://huggingface.co/docs/trl/clis#fine-tuning-with-the-cli)。_

## 额外资源

- [Hub 上的模型](http://TODO)

- 开放大语言模型 [排行榜](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard)

- [Hugging Chat 上的聊天演示](https://huggingface.co/chat/models/meta-llama/Llama-3-70b-instruct)

- Meta 博客

- Google Cloud Vertex AI 模型园

## 鸣谢

在生态系统中发布此类模型并进行支持和评估,离不开许多社区成员的贡献,包括

- [Clémentine Fourrier](https://huggingface.co/clefourrier)、[Nathan Habib](https://huggingface.co/SaylorTwift) 和 [Eleuther 评估工具](https://github.com/EleutherAI/lm-evaluation-harness) 为大语言模型评估

- [Olivier Dehaene](https://huggingface.co/olivierdehaene)

和 [Nicolas Patry](https://huggingface.co/Narsil) 为[文本生成推理支持](https://github.com/huggingface/text-generation-inference)

- [Arthur Zucker](https://huggingface.co/ArthurZ) 和 [Lysandre Debut](https://huggingface.co/lysandre) 为在 transformers 和 tokenizers 中添加 Llama 3 支持

- [Nathan Sarrazin](https://huggingface.co/nsarrazin)、[Victor Mustar](https://huggingface.co/victor) 和 Kevin Cathaly 使 Llama 3 在 Hugging Chat 中可用

- [Yuvraj Sharma](https://huggingface.co/ysharma) 为 Gradio 演示

- [Xenova](https://huggingface.co/Xenova) 和 [Vaibhav Srivastav](https://huggingface.co/reach-vb) 为量化和提示模板的调试和实验

- [Brigitte Tousignant](https://huggingface.co/BrigitteTousi)、[Florent Daudens](https://huggingface.co/fdaudens)、[Morgan Funtowicz](https://huggingface.co/mfuntowicz) 和 [Simon Brandeis](https://huggingface.co/sbrandeis) 在启动期间的不同项目

- 感谢整个 Meta 团队,包括 [Samuel Selvan](https://huggingface.co/samuelselvanmeta)、Eleonora Presani、Hamid Shojanazeri、Azadeh Yazdan、Aiman Farooq、Ruan Silva、Ashley Gabriel、Eissa Jamil、Binh Tang、Matthias Reso、Lovish Madaan、Joe Spisak 和 Sergey Edunov。

感谢 Meta 团队发布 Llama 3,并使其向开源 AI 社区开放!

| 2 |

0 | hf_public_repos/blog | hf_public_repos/blog/zh/falcon.md | ---

title: "Falcon 登陆 Hugging Face 生态"

thumbnail: /blog/assets/147_falcon/falcon_thumbnail.jpg

authors:

- user: lvwerra

- user: ybelkada

- user: smangrul

- user: lewtun

- user: olivierdehaene

- user: pcuenq

- user: philschmid

translators:

- user: MatrixYao

- user: zhongdongy

---

# Falcon 登陆 Hugging Face 生态

## 引言

Falcon 是由位于阿布扎比的 [技术创新研究院 (Technology Innovation Institute,TII) ](https://www.tii.ae/) 创建的一系列的新语言模型,其基于 Apache 2.0 许可发布。 **值得注意的是,[Falcon-40B](https://huggingface.co/tiiuae/falcon-40b) 是首个“真正开放”的模型,其能力可与当前许多闭源模型相媲美**。这对从业者、爱好者和行业来说都是个好消息,因为“真开源”使大家可以毫无顾忌地基于它们探索百花齐放的应用。

本文,我们将深入探讨 Falcon 模型: 首先探讨它们的独特之处,然后 **展示如何基于 Hugging Face 生态提供的工具轻松构建基于 Falcon 模型的多种应用 (如推理、量化、微调等)**。

## 目录

- [Falcon 模型](#Falcon-模型)

- [演示](#演示)

- [推理](#推理)

- [评估](#评估)

- [用 PEFT 微调模型](#用-PEFT-微调模型)

- [总结](#总结)

## Falcon 模型

Falcon 家族有两个基础模型: [Falcon-40B](https://huggingface.co/tiiuae/falcon-40b) 及其小兄弟 [Falcon-7B](https://huggingface.co/tiiuae/falcon-7b)。 **40B 参数模型目前在 [Open LLM 排行榜](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard) 中名列前茅,而 7B 模型在同等参数量的模型中表现最佳**。

运行 Falcon-40B 需要约 90GB 的 GPU 显存 —— 虽然还是挺多的,但比 LLaMA-65B 少了不少,况且 Falcon-40B 的性能还优于 LLaMA-65B。而 Falcon-7B 只需要约 15GB 显存,即使在消费类硬件上也可以进行推理和微调。 _(我们将在后文讨论如何使用量化技术在便宜的 GPU 上使用 Falcon-40B!)_

TII 还提供了经过指令微调的模型: [Falcon-7B-Instruct](https://huggingface.co/tiiuae/falcon-7b-instruct) 以及 [Falcon-40B-Instruct](https://huggingface.co/tiiuae/falcon-40b-instruct)。这两个实验性的模型变体经由指令和对话数据微调而得,因此更适合当前流行的助理式任务。 **如果你只是想把 Falcon 模型快速用起来,这两个模型是最佳选择。** 当然你也可以基于社区构建的大量数据集微调一个自己的模型 —— 后文会给出微调步骤!

Falcon-7B 和 Falcon-40B 分别基于 1.5 万亿和 1 万亿词元数据训练而得,其架构在设计时就充分考虑了推理优化。 **Falcon 模型质量较高的关键在于训练数据,其 80% 以上的训练数据来自于 [RefinedWeb](https://arxiv.org/abs/2306.01116) —— 一个新的基于 CommonCrawl 的网络数据集**。 TII 选择不去收集分散的精选数据,而是专注于扩展并提高 Web 数据的质量,通过大量的去重和严格过滤使所得语料库与其他精选的语料库质量相当。 在训练 Falcon 模型时,虽然仍然包含了一些精选数据 (例如来自 Reddit 的对话数据),但与 GPT-3 或 PaLM 等最先进的 LLM 相比,精选数据的使用量要少得多。你知道最妙的是什么吗? TII 公布了从 [RefinedWeb](https://huggingface.co/datasets/tiiuae/falcon-refinedweb) 中提取出的含有 6000 亿词元的数据集,以供社区在自己的 LLM 中使用!

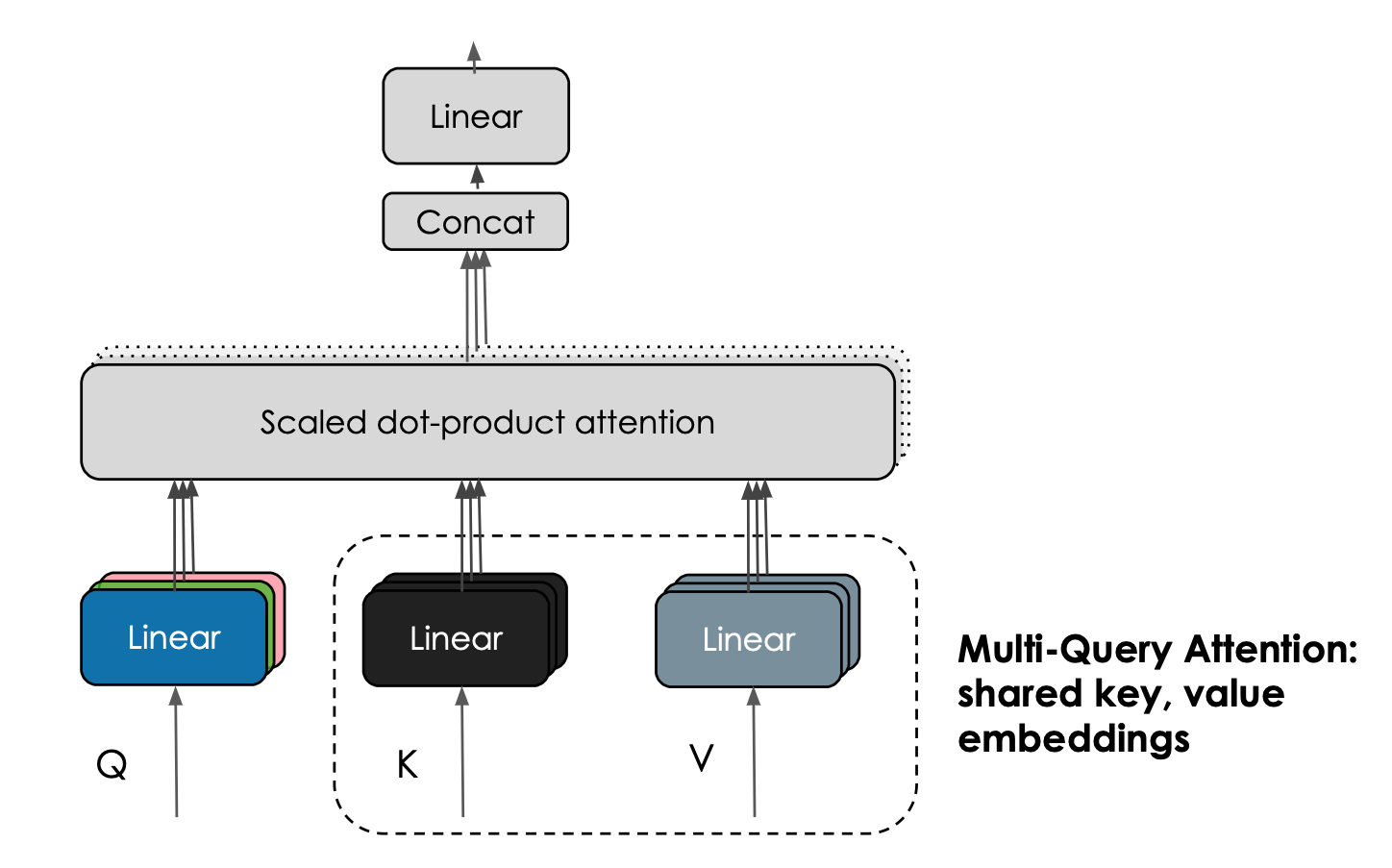

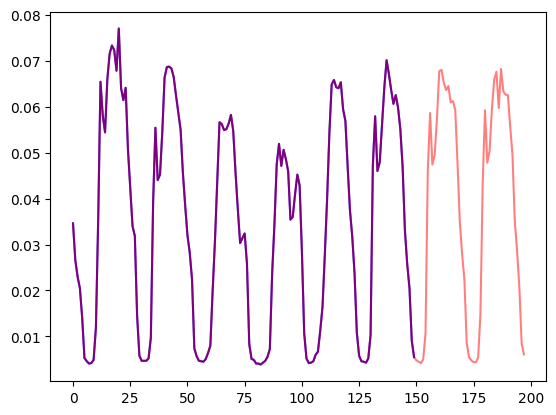

Falcon 模型的另一个有趣的特性是其使用了 [**多查询注意力 (multiquery attention)**](https://arxiv.org/abs/1911.02150)。原始多头 (head) 注意力方案每个头都分别有一个查询 (query) 、键 (key) 以及值 (value),而多查询注意力方案改为在所有头上共享同一个键和值。

|  |

|:--:|

| <b>多查询注意力机制在注意力头之间共享同一个键嵌入和值嵌入。图片由 Harm de Vries 提供。</b>|

这个技巧对预训练影响不大,但它极大地 [提高了推理的可扩展性](https://arxiv.org/abs/2211.05102): 事实上, **该技巧大大减少了自回归解码期间 K,V 缓存的内存占用,将其减少了 10-100 倍** (具体数值取决于模型架构的配置),这大大降低了模型推理的内存开销。而内存开销的减少为解锁新的优化带来了可能,如省下来的内存可以用来存储历史对话,从而使得有状态推理成为可能。

| 模型 | 许可 | 能否商用? | 预训练词元数 | 预训练算力 [PF-天] | 排行榜得分 | K,V 缓存大小 (上下文长度为 2048) |

| --- | --- | --- | --- | --- | --- | --- |

| StableLM-Alpha-7B | CC-BY-SA-4.0 | ✅ | 1,500B | 700 | 38.3* | 800MB |

| LLaMA-7B | LLaMA license | ❌ | 1,000B | 500 | 47.6 | 1,100MB |

| MPT-7B | Apache 2.0 | ✅ | 1,000B | 500 | 48.6 | 1,100MB |

| Falcon-7B | Apache 2.0 | ✅ | 1,500B | 700 | 48.8 | 20MB |

| LLaMA-33B | LLaMA license | ❌ | 1,500B | 3200 | 56.9 | 3,300MB |

| LLaMA-65B | LLaMA license | ❌ | 1,500B | 6300 | 58.3 | 5,400MB |

| Falcon-40B | Apache 2.0 | ✅ | 1,000B | 2800 | 60.4 | 240MB |

* _上表中得分均为经过微调的模型的得分_

# 演示

通过 [这个 Space](https://huggingface.co/spaces/HuggingFaceH4/falcon-chat) 或下面的应用,你可以很轻松地试用一下大的 Falcon 模型 (400 亿参数!):

<script type="module" src="https://gradio.s3-us-west-2.amazonaws.com/3.32.0/gradio.js"> </script>

<gradio-app theme_mode="light" space="HuggingFaceH4/falcon-chat-demo-for-blog"></gradio-app>

上面的应用使用了 Hugging Face 的 [Text Generation Inference](https://github.com/huggingface/text-generation-inference) 技术,它是一个可扩展的、快速高效的文本生成服务,使用了 Rust、Python 以及 gRPC 等技术。[HuggingChat](https://huggingface.co/chat/) 也使用了相同的技术。

我们还构建了一个 Core ML 版本的 `falcon-7b-instruct` 模型,你可以通过以下方式将其运行至 M1 MacBook Pro:

<video controls title="Falcon 7B Instruct running on an M1 MacBook Pro with Core ML">

<source src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/147_falcon/falcon-7b.mp4" type="video/mp4">

视频: 在安装了 Core ML 的 M1 MacBook Pro 上运行 Falcon 7B Instruct 模型。

</video>

该视频展示了一个轻量级应用程序,该应用程序利用一个 Swift 库完成了包括加载模型、分词、准备输入数据、生成文本以及解码在内的很多繁重的操作。我们正在快马加鞭构建这个库,这样开发人员就能基于它将强大的 LLM 集成到各种应用程序中,而无需重新发明轮子。目前它还有点粗糙,但我们迫不及待地想让它早点面世。同时,你也可以下载 [Core ML 的权重文件](https://huggingface.co/tiiuae/falcon-7b-instruct/tree/main/coreml/text-generation) 自己探索!

# 推理

在使用熟悉的 transformers API 在你自己的硬件上运行 Falcon 模型时,你需要注意几个以下细节:

- 现有的模型是用 `bfloat16` 数据类型训练的,因此建议你也使用相同的数据类型来推理。使用 `bfloat16` 需要你安装最新版本的 CUDA,而且 `bfloat16` 在最新的卡 (如 A100) 上效果最好。你也可以尝试使用 `float16` 进行推理,但请记住,目前我们分享的模型效果数据都是基于 `bfloat16` 的。

- 你需要允许远程代码执行。这是因为 `transformers` 尚未集成 Falcon 模型架构,所以,我们需要使用模型作者在其代码库中提供的代码来运行。以 `falcon-7b-instruct` 为例,如果你允许远程执行,我们将使用下列文件里的代码来运行模型: [configuration_RW.py](https://huggingface.co/tiiuae/falcon-7b-instruct/blob/main/configuration_RW.py),[modelling_RW.py](https://huggingface.co/tiiuae/falcon-7b-instruct/blob/main/modelling_RW.py)。

综上,你可以参考如下代码来使用 transformers 的 `pipeline` API 加载 `falcon-7b-instruct` 模型:

```python

from transformers import AutoTokenizer

import transformers

import torch

model = "tiiuae/falcon-7b-instruct"

tokenizer = AutoTokenizer.from_pretrained(model)

pipeline = transformers.pipeline(

"text-generation",

model=model,

tokenizer=tokenizer,

torch_dtype=torch.bfloat16,

trust_remote_code=True,

device_map="auto",

)

```

然后,再用如下代码生成文本:

```python

sequences = pipeline(

"Write a poem about Valencia.",

max_length=200,

do_sample=True,

top_k=10,

num_return_sequences=1,

eos_token_id=tokenizer.eos_token_id,

)

for seq in sequences:

print(f"Result: {seq['generated_text']}")

```

最后,你可能会得到如下输出:

```

Valencia, city of the sun

The city that glitters like a star

A city of a thousand colors

Where the night is illuminated by stars

Valencia, the city of my heart

Where the past is kept in a golden chest

```

### 对 Falcon 40B 进行推理

因为 40B 模型尺寸比较大,所以要把它运行起来还是挺有挑战性的,单个显存为 80GB 的 A100 都放不下它。如果用 8 比特模型的话,需要大约 45GB 的空间,此时 A6000 (48GB) 能放下但 40GB 的 A100 还是放不下。相应的推理代码如下:

```python

from transformers import AutoTokenizer, AutoModelForCausalLM

import transformers

import torch

model_id = "tiiuae/falcon-40b-instruct"

tokenizer = AutoTokenizer.from_pretrained(model_id)

model = AutoModelForCausalLM.from_pretrained(

model_id,

torch_dtype=torch.bfloat16,

trust_remote_code=True,

load_in_8bit=True,

device_map="auto",

)

pipeline = transformers.pipeline(

"text-generation",

model=model,

tokenizer=tokenizer,

)

```

需要注意的是,INT8 混合精度推理使用的浮点精度是 `torch.float16` 而不是 `torch.bfloat16`,因此请务必详尽地对结果进行测试。

如果你有多张 GPU 卡并安装了 `accelerate`,你还可以用 `device_map="auto"` 将模型的各层自动分布到多张卡上运行。如有必要,甚至可以将某些层卸载到 CPU,但这会影响推理速度。

在最新版本的 `bitsandbytes`、`transformers` 以及 `accelerate` 中我们还支持了 [4 比特加载](https://huggingface.co/blog/4bit-transformers-bitsandbytes)。此时,40B 模型仅需约 27GB 的显存就能运行。虽然这个需求还是比 3090 或 4090 这些卡所能提供的显存大,但已经足以在显存为 30GB 或 40GB 的卡上运行了。

### Text Generation Inference

[Text Generation Inference](https://github.com/huggingface/text-generation-inference) 是 Hugging Face 开发的一个可用于生产的推理容器。有了它,用户可以轻松部署大语言模型。

其主要特点有:

- 对输入进行流式 batch 组装 (batching)

- 流式生成词,主要基于 SSE 协议 (Server-Sent Events,SSE)

- 推理时支持多 GPU 张量并行 (Tensor Parallelism ),推理速度更快

- transformers 模型代码由定制 CUDA 核函数深度优化

- 基于 Prometheus 和 Open Telemetry 的产品级日志记录、监控和跟踪机制

从 v0.8.2 起,Text Generation Inference 原生支持 Falcon 7b 和 40b 模型,而无需依赖 transformers 的 `“信任远程代码 (trust remote code)”` 功能。因此,Text Generation Inference 可以支持密闭部署及安全审计。此外,我们在 Falcon 模型的实现中加入了定制 CUDA 核函数优化,这可显著降低推理的端到端延迟。

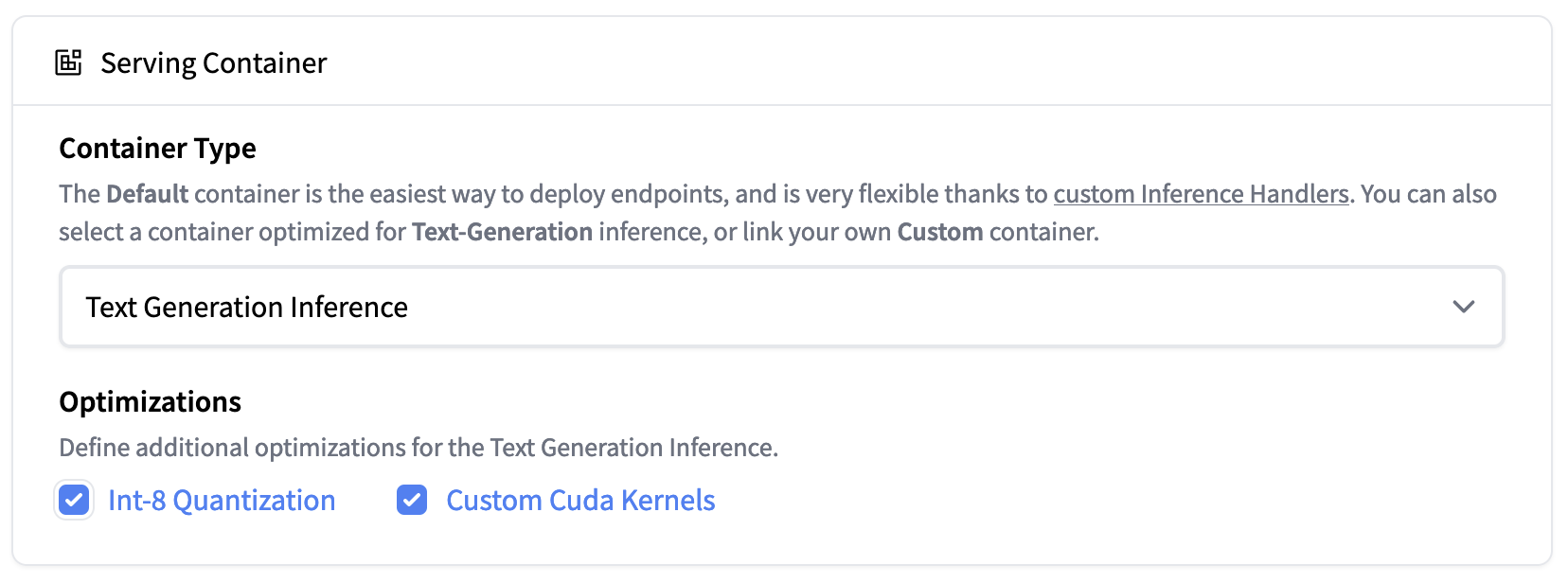

|  |

|:--:|

| <b> Hugging Face Inference Endpoint 现已支持 Text Generation Inference。你可以在单张 A100 上轻松部署 `falcon-40b-instruct` 的 Int8 量化模型。</b>|

Text Generation Inference 现已集成至 Hugging Face 的 [Inference Endpoint](https://huggingface.co/inference-endpoints)。想要部署 Falcon 模型,可至 [模型页面](https://huggingface.co/tiiuae/falcon-7b-instruct) 并点击 [Deploy -> Inference Endpoints](https://ui.endpoints.huggingface.co/new?repository=tiiuae/falcon-7b-instruct) 按钮。

如需部署 7B 模型,建议选择 “GPU [medium] - 1x Nvidia A10G”。

如需部署 40B 模型,你需要在 “GPU [xlarge] - 1x Nvidia A100” 上部署且需要开启量化功能,路径如下:

`Advanced configuration -> Serving Container -> Int-8 Quantization`

_注意: 在此过程中,如果你需要升级配额,可直接发电子邮件至 [email protected] 申请。_

## 评估

那么 Falcon 模型究竟效果如何? Falcon 的作者们马上将会发布一个深入的评估数据。这里,我们仅在我们的 [Open LLM 排行榜](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard) 上对 Falcon 基础模型和指令模型进行一个初步评估。 `Open LLM 排行榜`主要衡量 LLM 的推理能力及其回答以下几个领域的问题的能力:

- [AI2 推理挑战](https://allenai.org/data/arc) (ARC): 小学程度有关科学的选择题。

- [HellaSwag](https://arxiv.org/abs/1905.07830): 围绕日常事件的常识性问题。

- [MMLU](https://github.com/hendrycks/test): 57 个科目 (包含职业科目及学术科目) 的选择题。

- [TruthfulQA](https://arxiv.org/abs/2109.07958): 测试模型从一组错误陈述中找出事实性陈述的能力。

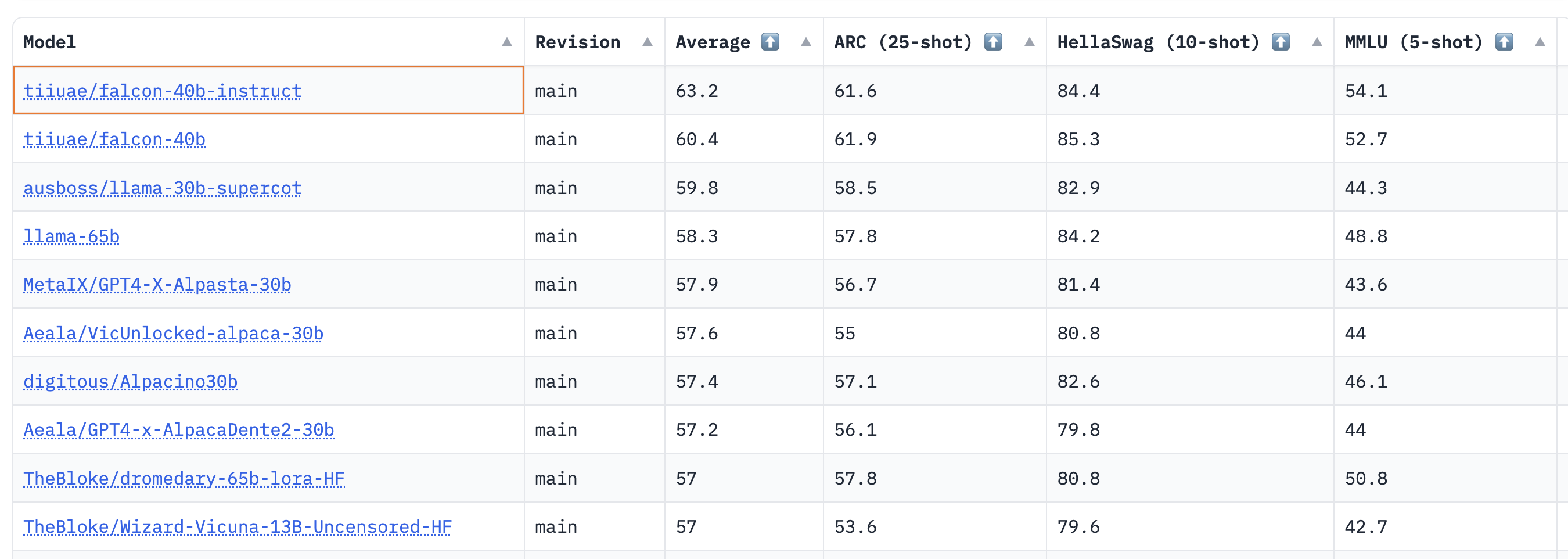

结果显示,40B 基础模型和指令模型都非常强,目前在 [Open LLM 排行榜](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard) 上分列第一和第二🏆!

正如 [Thomas Wolf](https://www.linkedin.com/posts/thom-wolf_open-llm-leaderboard-a-hugging-face-space-activity-7070334210116329472-x6ek?utm_source=share&utm_medium=member_desktop) 所述,我们惊喜地发现,目前预训练 40B 模型所用的计算量大约只有 LLaMa 65B 所用计算量的一半 (Falcon 40B 用了 2800 petaflop- 天,而 LLaMa 65B 用了 6300 petaflop- 天),这表明该模型甚至尚未完全预训练至 LLM 的“最佳”极限。

对 7B 模型而言,我们发现其基础模型表现优于 `llama-7b`,并超过了 MosaicML 的 `mpt-7b`,成为当前该规模上最好的预训练 LLM。下面摘录了排行榜中一些热门模型的排名情况,以供比较:

| 模型 | 类型 | 排行榜平均得分 |

| :-: | :-: | :-: |

| [tiiuae/falcon-40b-instruct](https://huggingface.co/tiiuae/falcon-40b-instruct) | instruct | 63.2 |

| [tiiuae/falcon-40b](https://huggingface.co/tiiuae/falcon-40b) | base | 60.4 |

| [llama-65b](https://ai.facebook.com/blog/large-language-model-llama-meta-ai/) | base | 58.3 |

| [TheBloke/dromedary-65b-lora-HF](https://huggingface.co/TheBloke/dromedary-65b-lora-HF) | instruct | 57 |

| [stable-vicuna-13b](https://huggingface.co/CarperAI/stable-vicuna-13b-delta) | rlhf | 52.4 |

| [llama-13b](https://ai.facebook.com/blog/large-language-model-llama-meta-ai/) | base | 51.8 |

| [TheBloke/wizardLM-7B-HF](https://huggingface.co/TheBloke/wizardLM-7B-HF) | instruct | 50.1 |

| [tiiuae/falcon-7b](https://huggingface.co/tiiuae/falcon-7b) | base | 48.8 |

| [mosaicml/mpt-7b](https://huggingface.co/mosaicml/mpt-7b) | base | 48.6 |

| [tiiuae/falcon-7b-instruct](https://huggingface.co/tiiuae/falcon-7b-instruct) | instruct | 48.4 |

| [llama-7b](https://ai.facebook.com/blog/large-language-model-llama-meta-ai/) | base | 47.6 |

尽管 `Open LLM 排行榜` 不能衡量聊天能力 (这方面目前主要还是依赖人类评估),但截至目前 Falcon 模型表现出的这些初步效果依然非常鼓舞人心!

现在让我们来看看如何微调一个你自己的 Falcon 模型 —— 或许你微调出来的某一个模型最终会登上榜首🤗。

## 用 PEFT 微调

训练 10B+ 大小的模型在技术和计算上都颇具挑战。在本节中,我们将了解如何使用 Hugging Face 生态中软件工具在简单的硬件上高效地微调超大模型,并展示如何在单张英伟达 T4 卡 (16GB - Google Colab) 上微调 `falcon-7b`。

我们以在 [Guanaco 数据集](https://huggingface.co/datasets/timdettmers/openassistant-guanaco) 上微调 Falcon 为例。Guanaco 数据集是 [Open Assistant 数据集](https://huggingface.co/datasets/OpenAssistant/oasst1) 的一个高质量子集,其中包含大约 1 万个对话。通过 [PEFT 库](https://github.com/huggingface/peft),我们可以使用最新的 [QLoRA](https://arxiv.org/abs/2305.14314) 方法用 4 比特来表示模型,并冻结它,再在其上加一个适配子模型 (adapter),并微调该适配子模型。你可以 [从这篇博文中](https://huggingface.co/blog/4bit-transformers-bitsandbytes) 了解有关 4 比特量化模型的更多信息。

因为在使用低阶适配器 (Low Rank Adapters,LoRA) 时只有一小部分模型权重是可训练的,所以可训练参数的数量和训得模型的尺寸都会显著减小。如下图所示,最终的训练产物 (trained artifact) 与原始的 7B 模型 (数据类型为 bfloat16 时占 15GB 存储空间) 相比,只占 65MB 存储空间。

|  |

|:--:|

| <b>与大约 15GB 的原始模型(半精度)相比,最终的训练产物只需存储 65MB 的权重 </b>|

更具体地说,在选定需要微调的模块 (即注意力模块的查询映射层和键映射层) 之后,我们在每个目标模块旁边添加两个小的可训练线性层 (如下图所示) 作为适配子模型。然后,将适配子模型输出的隐含状态与原始模型的隐含状态相加以获得最终隐含状态。

|  |

|:--:|

| <b> 用由权重矩阵 A 和 B 组成的低秩适配器(右)的输出激活来增强原始(冻结)预训练模型(左)的输出激活。</b>|

一旦训练完成,无须保存整个模型,因为基础模型一直处于冻结状态。此外,原始模型可以表示为任意数据类型 (int8、fp4、fp16 等),只要在与适配器的输出隐含状态相加前,将其输出隐含状态的数据类型转换成与适配器相同的数据类型即可 —— bitsandbytes 的模块 ( `Linear8bitLt` 和 `Linear4bit` ) 就是这么做的, `Linear8bitLt` 和 `Linear4bit` 这两个模块的输出数据类型与原未量化模型的输出数据类型相同。

我们在 Guanaco 数据集上微调了 Falcon 模型的两个变体 (7B 和 40B)。其中,7B 模型是在单 NVIDIA-T4 16GB 上微调的,而 40B 模型是在单 NVIDIA A100 80GB 上微调的。在微调时,我们使用了 4 比特量化的基础模型以及 QLoRA 方法,并使用了 [来自 TRL 库的最新的 `SFTTrainer`](https://huggingface.co/docs/trl/main/en/sft_trainer)。

[此处](https://gist.github.com/pacman100/1731b41f7a90a87b457e8c5415ff1c14) 提供了使用 PEFT 重现我们实验的完整脚本。但是如果你想快速运行 `SFTTrainer` (而无需 PEFT) 的话,只需下面几行代码即可:

```python

from datasets import load_dataset

from trl import SFTTrainer

from transformers import AutoTokenizer, AutoModelForCausalLM

dataset = load_dataset("imdb", split="train")

model_id = "tiiuae/falcon-7b"

tokenizer = AutoTokenizer.from_pretrained(model_id)

model = AutoModelForCausalLM.from_pretrained(model_id, trust_remote_code=True)

trainer = SFTTrainer(

model,

tokenizer=tokenizer,

train_dataset=dataset,

dataset_text_field="text",

max_seq_length=512,

)

trainer.train()

```

你还可以查看 [原始 QLoRA 代码库](https://github.com/artidoro/qlora/),以了解有关如何评估训练模型的更多详细信息。

### 关于微调的资源

- **[使用 4 比特量化和 PEFT 在 Guanaco 数据集上微调 Falcon-7B 的 Colab notebook](https://colab.research.google.com/drive/1BiQiw31DT7-cDp1-0ySXvvhzqomTdI-o?usp=sharing)**

- **[训练代码](https://gist.github.com/pacman100/1731b41f7a90a87b457e8c5415ff1c14)**

- **[40B 模型的 LoRA 模型](https://huggingface.co/smangrul/falcon-40B-int4-peft-lora-sfttrainer)** ([日志](https://wandb.ai/smangrul/huggingface/runs/3hpqq08s/workspace?workspace=user-younesbelkada))

- **[7B 模型的 LoRA 模型](https://huggingface.co/ybelkada/falcon-7b-guanaco-lora)** ([日志](https://wandb.ai/younesbelkada/huggingface/runs/2x4zi72j?workspace=user-younesbelkada))

## 总结

Falcon 是最新的、令人兴奋的、可商用的大语言模型。在本文中,我们展示了 Falcon 模型的功能、如何在你自己的环境中运行 Falcon 模型以及在 Hugging Face 生态中如何轻松地用自有数据微调它们。我们期待看到社区如何使用 Falcon 模型!

| 3 |

0 | hf_public_repos/blog | hf_public_repos/blog/zh/fine-tune-whisper.md | ---

title: "使用 🤗 Transformers 为多语种语音识别任务微调 Whisper 模型"

thumbnail: /blog/assets/111_fine_tune_whisper/thumbnail.jpg

authors:

- user: sanchit-gandhi

translators:

- user: MatrixYao

- user: zhongdongy

proofreader: true

---

# 使用 🤗 Transformers 为多语种语音识别任务微调 Whisper 模型

<a target="_blank" href="https://colab.research.google.com/github/sanchit-gandhi/notebooks/blob/main/fine_tune_whisper.ipynb">

<img src="https://colab.research.google.com/assets/colab-badge.svg" alt="在 Colab 中打开"/>

</a>

本文提供了一个使用 Hugging Face 🤗 Transformers 在任意多语种语音识别 (ASR) 数据集上微调 Whisper 的分步指南。同时,我们还深入解释了 Whisper 模型、Common Voice 数据集以及微调等理论知识,并提供了数据准备和微调的相关代码。如果你想要一个全部是代码,仅有少量解释的 Notebook,可以参阅这个 [Google Colab](https://colab.research.google.com/github/sanchit-gandhi/notebooks/blob/main/fine_tune_whisper.ipynb)。

## 目录

1. [简介](#简介)

2. [在 Google Colab 中微调 Whisper](#在-google-colab-中微调-whisper)

1. [准备环境](#准备环境)

2. [加载数据集](#加载数据集)

3. [准备特征提取器、分词器和数据](#准备特征提取器分词器和数据)

4. [训练与评估](#训练与评估)

5. [构建演示应用](#构建演示应用)

3. [结束语](#结束语)

## 简介

Whisper 是一系列用于自动语音识别 (automatic speech recognition,ASR) 的预训练模型,它由来自于 OpenAI 的 Alec Radford 等人于 [2022 年 9 月](https://openai.com/blog/whisper/) 发布。与 [Wav2Vec 2.0](https://arxiv.org/abs/2006.11477) 等前作不同,以往的模型都是在未标注的音频数据上预训练的,而 Whisper 是在大量的 **已标注** 音频转录数据上预训练的。其用于训练的标注音频时长高达 68 万小时,比 Wav2Vec 2.0 使用的未标注训练数据 (6 万小时) 还多一个数量级。更妙的是,该预训练数据中还含有 11.7 万小时的多语种数据。因此,Whisper 训得的 checkpoint 可应用于超过 96 种语言,这其中包含不少 _数据匮乏_ 的小语种。

这么多的标注数据使得我们可以直接在 _有监督_ 语音识别任务上预训练 Whisper,从标注音频转录数据 ${}^1$ 中直接习得语音到文本的映射。因此,Whisper 几乎不需要额外的微调就已经是高性能的 ASR 模型了。这让 Wav2Vec 2.0 相形见绌,因为 Wav2Vec 2.0 是在 _无监督_ 掩码预测任务上预训练的,所以其训得的模型仅从未标注的纯音频数据中习得了从语音到隐含状态的中间映射。虽然无监督预训练能产生高质量的语音表征,但它 **学不到**语音到文本的映射,要学到语音到文本的映射只能靠微调。因此,Wav2Vec 2.0 需要更多的微调才能获得较有竞争力的性能。

在 68 万小时标注数据的加持下,预训练 Whisper 模型表现出了强大的泛化到多种数据集和领域的能力。其预训练 checkpoint 表现出了与最先进的 ASR 系统旗鼓相当的性能: 在 LibriSpeech ASR 的无噪测试子集上的单词错误率 (word error rate,WER) 仅为约 3%,另外它还在 TED-LIUM 上创下了新的记录 - 4.7% 的 WER ( _详见_ [Whisper 论文](https://cdn.openai.com/papers/whisper.pdf) 的表 8)。Whisper 在预训练期间获得的广泛的多语种 ASR 知识对一些数据匮乏的小语种特别有用。稍稍微调一下,预训练 checkpoint 就可以进一步适配特定的数据集和语种,从而进一步改进在这些语种上的识别效果。

Whisper 是一个基于 transformer 的编码器 - 解码器模型 (也称为 _序列到序列_ 模型),它将音频的频谱图特征 _序列_ 映射到文本的词 _序列_。首先,通过特征提取器将原始音频输入变换为对数梅尔声谱图 (log-Mel spectrogram)。然后,transformer 编码器对声谱图进行编码,生成一系列编码器隐含状态。最后,解码器基于先前输出的词以及编码器隐含状态,自回归地预测下一个输出词。图 1 是 Whisper 模型的示意图。

<figure>

<img src="assets/111_fine_tune_whisper/whisper_architecture.svg" alt="Trulli" style="width:100%">

<figcaption align="center"><b>图 1:</b> Whisper 模型,该模型是标准的基于 transformer 的编码器-解码器架构。首先将对数梅尔声谱图输入到编码器,然后将编码器生成的最终隐含状态通过交叉注意机制输入给解码器。最后,解码器基于编码器隐含状态和先前的输出词,自回归地预测下一个输出词。图源: <a href="https://openai.com/blog/whisper/">OpenAI Whisper 博客</a>。</figcaption>

</figure>

在序列到序列模型中,编码器负责从语音中提取出重要特征,将输入转换为一组隐含状态表征。解码器扮演语言模型的角色,处理隐含状态表征并生成对应的文本。我们把在模型架构 **内部** 集成语言模型的做法称为 _深度融合_。与之相对的是 _浅融合_,此时,语言模型在 **外部**与编码器组合,如 CTC + $n$-gram ( _详见_ [Internal Language Model Estimation](https://arxiv.org/pdf/2011.01991.pdf) 一文)。通过深度融合,可以用同一份训练数据和损失函数对整个系统进行端到端训练,从而获得更大的灵活性和更优越的性能 ( _详见_ [ESB Benchmark](https://arxiv.org/abs/2210.13352))。

Whisper 使用交叉熵目标函数进行预训练和微调,交叉熵目标函数是训练序列标注模型的标准目标函数。经过训练,模型可以正确地对目标词进行分类,从而从预定义的词汇表中选出输出词。

Whisper 有五种不同尺寸的 checkpoint。其中,四个小尺寸 checkpoint 又各有两个版本: 英语版和多语种版,而最大的 checkpoint 只有多语种版。所有九个预训练 checkpoints 都可以在 [Hugging Face Hub](https://huggingface.co/models?search=openai/whisper) 上找到。下表总结了这些 checkpoint 的信息及其 Hub 链接:

| 尺寸 | 层数 | 宽 | 多头注意力的头数 | 参数量 | 英语 checkpoint | 多语种 checkpoint |

|--------|--------|-------|-------|------------|------------------------------------------------------|---------------------------------------------------|

| tiny | 4 | 384 | 6 | 39 M | [✓](https://huggingface.co/openai/whisper-tiny.en) | [✓](https://huggingface.co/openai/whisper-tiny.) |

| base | 6 | 512 | 8 | 74 M | [✓](https://huggingface.co/openai/whisper-base.en) | [✓](https://huggingface.co/openai/whisper-base) |

| small | 12 | 768 | 12 | 244 M | [✓](https://huggingface.co/openai/whisper-small.en) | [✓](https://huggingface.co/openai/whisper-small) |

| medium | 24 | 1024 | 16 | 769 M | [✓](https://huggingface.co/openai/whisper-medium.en) | [✓](https://huggingface.co/openai/whisper-medium) |

| large | 32 | 1280 | 20 | 1550 M | x | [✓](https://huggingface.co/openai/whisper-large) |

下面,我们将以多语种版的 [`small`](https://huggingface.co/openai/whisper-small)checkpoint (参数量 244M (~= 1GB)) 为例,带大家走一遍微调模型的全过程。我们将使用 [Common Voice](https://huggingface.co/datasets/mozilla-foundation/common_voice_11_0) 数据集里的小语种数据来训练和评估我们的系统。通过这个例子,我们将证明,仅需 8 小时的训练数据就可以微调出一个在该语种上表现强大的语音识别模型。

---

${}^1$ Whisper 的名称来自于 “Web-scale Supervised Pre-training for Speech Recognition (网络规模的有监督语音识别预训练模型)” 的首字母缩写 “WSPSR”。

## 在 Google Colab 中微调 Whisper

### 准备环境

在微调 Whisper 模型时,我们会用到几个流行的 Python 包。我们使用 `datasets` 来下载和准备训练数据,使用 `transformers` 来加载和训练 Whisper 模型。另外,我们还需要 `soundfile` 包来预处理音频文件,`evaluate` 和 `jiwer` 来评估模型的性能。最后,我们用 `gradio` 来为微调后的模型构建一个亮闪闪的演示应用。

```bash

!pip install datasets>=2.6.1

!pip install git+https://github.com/huggingface/transformers

!pip install librosa

!pip install evaluate>=0.30

!pip install jiwer

!pip install gradio

```

我们强烈建议你直接将训得的模型 checkpoint 上传到 [Hugging Face Hub](https://huggingface.co/)。Hub 提供了以下功能:

- 集成版本控制: 确保在训练期间不会丢失任何模型 checkpoint。

- Tensorboard 日志: 跟踪训练过程中的重要指标。

- 模型卡: 记录模型的用法及其应用场景。

- 社区: 轻松与社区进行分享和协作!

将 Python notebook 连上 Hub 非常简单 - 只需根据提示输入你的 Hub 身份验证令牌即可。你可以在 [此处](https://huggingface.co/settings/tokens) 找到你自己的 Hub 身份验证令牌:

```python

from huggingface_hub import notebook_login

notebook_login()

```

**打印输出:**

```bash

Login successful

Your token has been saved to /root/.huggingface/token

```

### 加载数据集

Common Voice 由一系列众包数据集组成,其中包含了用各种语言录制的维基百科文本。本文使用的是最新版本的 Common Voice 数据集 ([版本号为 11](https://huggingface.co/datasets/mozilla-foundation/common_voice_11_0))。语种上,我们选择用 [_印地语_](https://en.wikipedia.org/wiki/Hindi) 来微调我们的模型。印地语是一种在印度北部、中部、东部和西部使用的印度 - 雅利安语。Common Voice 11.0 中有大约 12 小时的标注印地语数据,其中 4 小时是测试数据。

我们先看下 Hub 上的 Common Voice 数据集页面: [mozilla-foundation/common_voice_11_0](https://huggingface.co/datasets/mozilla-foundation/common_voice_11_0)。如果你是首次查看此页面,系统会要求你接受其使用条款,同意后就可以访问数据集了。

一旦身份验证成功,你就会看到数据集预览。数据集预览展示了数据集的前 100 个样本。更重要的是,它还加载了可供实时收听的音频。我们可以在下拉菜单选择 `hi` 来选择 Common Voice 的印地语子集 ( `hi` 是印地语的语言标识符代码):

<figure>

<img src="assets/111_fine_tune_whisper/select_hi.jpg" alt="Trulli" style="width:100%">

</figure>

点击第一个音频的播放按钮,你就可以收听音频并看到相应的文本了。你还可以滚动浏览训练集和测试集中的样本,以更好地了解待处理音频和文本数据。从语调和风格可以看出,这些音频是旁白录音。你可能还会注意到录音者和录音质量的巨大差异,这是众包数据的一个共同特征。

使用 🤗 Datasets 来下载和准备数据非常简单。仅需一行代码即可完成 Common Voice 数据集的下载和准备工作。由于印地语数据非常匮乏,我们把 `训练集` 和 `验证集`合并成约 8 小时的训练数据,而测试则基于 4 小时的 `测试集`:

```python

from datasets import load_dataset, DatasetDict

common_voice = DatasetDict()

common_voice["train"] = load_dataset("mozilla-foundation/common_voice_11_0", "hi", split="train+validation", use_auth_token=True)

common_voice["test"] = load_dataset("mozilla-foundation/common_voice_11_0", "hi", split="test", use_auth_token=True)

print(common_voice)

```

**打印输出: **

```

DatasetDict({

train: Dataset({

features: ['client_id', 'path', 'audio', 'sentence', 'up_votes', 'down_votes', 'age', 'gender', 'accent', 'locale', 'segment'],

num_rows: 6540

})

test: Dataset({

features: ['client_id', 'path', 'audio', 'sentence', 'up_votes', 'down_votes', 'age', 'gender', 'accent', 'locale', 'segment'],

num_rows: 2894

})

})

```

大多数 ASR 数据集仅包含输入音频样本 ( `audio`) 和相应的转录文本 ( `sentence`)。 Common Voice 还包含额外的元信息,例如 `accent` 和 `locale`,在 ASR 场景中,我们可以忽略这些信息。为了使代码尽可能通用,我们只考虑基于输入音频和转录文本进行微调,而不使用额外的元信息:

```python

common_voice = common_voice.remove_columns(["accent", "age", "client_id", "down_votes", "gender", "locale", "path", "segment", "up_votes"])

```

除了 Common Voice,Hub 上还有不少其他多语种 ASR 数据集可供使用,你可以点击链接: [Hub 上的 ASR 数据集](https://huggingface.co/datasets?task_categories=task_categories:automatic-speech-recognition&sort=downloads) 了解更多。

### 准备特征提取器、分词器和数据

ASR 的流水线主要包含三个模块:

1. 对原始音频输入进行预处理的特征提取器

2. 执行序列到序列映射的模型

3. 将模型输出转换为文本的分词器

在 🤗 Transformers 中,Whisper 模型有自己的特征提取器和分词器,即 [WhisperFeatureExtractor](https://huggingface.co/docs/transformers/main/model_doc/whisper#transformers.WhisperFeatureExtractor) 和 [WhisperTokenizer](https://huggingface.co/docs/transformers/main/model_doc/whisper#transformers.WhisperTokenizer)。

下面,我们逐一详细介绍特征提取器和分词器!

### 加载 WhisperFeatureExtractor

语音可表示为随时间变化的一维数组,给定时刻的数组值即表示信号在该时刻的 _幅度_,而我们可以仅从幅度信息重建音频的频谱并恢复其所有声学特征。

由于语音是连续的,因此它包含无数个幅度值,而计算机只能表示并存储有限个值。因此,我们需要通过对语音信号进行离散化,即以固定的时间间隔对连续信号进行 _采样_。我们将每秒采样的次数称为 _采样率_,通常以样本数/秒或 _赫兹 (Hz)_ 为单位。高采样率可以更好地逼近连续语音信号,但同时每秒所需的存储量也更大。

需要特别注意的是,输入音频的采样率需要与模型期望的采样率相匹配,因为不同采样率的音频信号的分布是不同的。处理音频时,需要使用正确的采样率,否则可能会引起意想不到的结果!例如,以 16kHz 的采样率采集音频但以 8kHz 的采样率收听它,会使音频听起来好像是半速的。同样地,向一个需要某一采样率的 ASR 模型馈送一个错误采样率的音频也会影响模型的性能。Whisper 特征提取器需要采样率为 16kHz 的音频输入,因此输入的采样率要与之相匹配。我们不想无意中用慢速语音来训练 ASR!

Whisper 特征提取器执行两个操作。首先,填充或截断一批音频样本,将所有样本的输入长度统一至 30 秒。通过在序列末尾添加零 (音频信号中的零对应于无信号或静音),将短于 30 秒的样本填充到 30 秒。而对超过 30 秒的样本,直接截断为 30 秒就好了。由于这一批数据中的所有样本都被填充或截断到统一长度 (即 30 s) 了,因此将音频馈送给 Whisper 模型时就不需要注意力掩码了。这是 Whisper 的独门特性,其他大多数音频模型都需要用户提供一个注意力掩码,详细说明填充位置,这样模型才能在自注意力机制中忽略填充部分。经过训练的 Whisper 模型可以直接从语音信号中推断出应该忽略哪些部分,因此无需注意力掩码。

Whisper 特征提取器执行的第二个操作是将第一步所得的音频变换为对数梅尔声谱图。这些频谱图是信号频率的直观表示,类似于傅里叶变换。图 2 展示了一个声谱图的例子,其中 $y$ 轴表示梅尔频段 (Mel channel),对应于特定的频段,$x$ 轴表示时间,颜色对应于给定时刻该频段的对数强度。Whisper 模型要求输入为对数梅尔声谱图。

梅尔频段是语音处理的标准方法,研究人员用它来近似表示人类的听觉范围。对于 Whisper 微调这个任务而言,我们只需要知道声谱图是语音信号中频率的直观表示。更多有关梅尔频段的详细信息,请参阅 [梅尔倒谱](https://en.wikipedia.org/wiki/Mel-frequency_cepstrum) 一文。

<figure>

<img src="assets/111_fine_tune_whisper/spectrogram.jpg" alt="Trulli" style="width:100%">

<figcaption align="center"><b>图 2:</b> 将音频信号变换为对数梅尔声谱图。左图:一维音频离散信号。右图:对应的对数梅尔声谱图。图源:<a href="https://ai.googleblog.com/2019/04/specaugment-new-data-augmentation.html">谷歌 SpecAugment 博文</a>. </figcaption>

</figure>

幸运的是,🤗 Transformers Whisper 特征提取器仅用一行代码即可执行填充和声谱图变换两个操作!我们使用以下代码从预训练的 checkpoint 中加载特征提取器,为音频数据处理做好准备:

```python

from transformers import WhisperFeatureExtractor

feature_extractor = WhisperFeatureExtractor.from_pretrained("openai/whisper-small")

```

### 加载 WhisperTokenizer

现在我们加载 Whisper 分词器。Whisper 模型会输出词元,这些词元表示预测文本在词典中的索引。分词器负责将这一系列词元映射为最终的文本字符串 (例如 [1169, 3797, 3332] -> “the cat sat”)。

过去,当使用编码器模型进行 ASR 时,我们需使用 [_连接时序分类法_ (Connectionist Temporal Classification,CTC) ](https://distill.pub/2017/ctc/) 进行解码。在使用 CTC 进行解码时,我们需要为每个数据集训练一个 CTC 分词器。但使用编码器 - 解码器架构的一个优势是我们可以直接使用预训练模型的分词器。

Whisper 分词器在 96 种语种数据上预训练而得,因此,其 [字节对 (byte-pair) ](https://huggingface.co/course/chapter6/5?fw=pt#bytepair-encoding-tokenization) 覆盖面很广,几乎包含了所有语种。就印地语而言,我们可以加载分词器并将其直接用于微调。仅需指定一下目标语种和任务,分词器就会根据这些参数将语种和任务标记添加为输出序列的前缀:

```python

from transformers import WhisperTokenizer

tokenizer = WhisperTokenizer.from_pretrained("openai/whisper-small", language="Hindi", task="transcribe")

```

我们可以通过对 Common Voice 数据集的第一个样本进行编解码来验证分词器是否正确编码了印地语字符。在对转录文本进行编码时,分词器在序列的开头和结尾添加“特殊标记”,其中包括文本的开始/结尾、语种标记和任务标记 (由上一步中的参数指定)。在解码时,我们可以选择“跳过”这些特殊标记,从而保证输出是纯文本形式的:

```python

input_str = common_voice["train"][0]["sentence"]

labels = tokenizer(input_str).input_ids

decoded_with_special = tokenizer.decode(labels, skip_special_tokens=False)

decoded_str = tokenizer.decode(labels, skip_special_tokens=True)

print(f"Input: {input_str}")

print(f"Decoded w/ special: {decoded_with_special}")

print(f"Decoded w/out special: {decoded_str}")

print(f"Are equal: {input_str == decoded_str}")

```

**打印输出:**

```bash

Input: खीर की मिठास पर गरमाई बिहार की सियासत, कुशवाहा ने दी सफाई

Decoded w/ special: <|startoftranscript|><|hi|><|transcribe|><|notimestamps|>खीर की मिठास पर गरमाई बिहार की सियासत, कुशवाहा ने दी सफाई<|endoftext|>

Decoded w/out special: खीर की मिठास पर गरमाई बिहार की सियासत, कुशवाहा ने दी सफाई

Are equal: True

```

### 组装一个 WhisperProcessor

为了简化使用,我们可以将特征提取器和分词器 _包进_ 到一个 `WhisperProcessor` 类,该类继承自 `WhisperFeatureExtractor` 及 `WhisperTokenizer`,可根据需要用于音频处理和模型预测。有了它,我们在训练期间只需要保留两个对象: `processor` 和 `model` 就好了。

```python

from transformers import WhisperProcessor

processor = WhisperProcessor.from_pretrained("openai/whisper-small", language="Hindi", task="transcribe")

```

### 准备数据

我们把 Common Voice 数据集的第一个样本打印出来,看看数据长什么样:

```python

print(common_voice["train"][0])

```

**打印输出:**

```python

{'audio': {'path': '/home/sanchit_huggingface_co/.cache/huggingface/datasets/downloads/extracted/607848c7e74a89a3b5225c0fa5ffb9470e39b7f11112db614962076a847f3abf/cv-corpus-11.0-2022-09-21/hi/clips/common_voice_hi_25998259.mp3',

'array': array([0.0000000e+00, 0.0000000e+00, 0.0000000e+00, ..., 9.6724887e-07,

1.5334779e-06, 1.0415988e-06], dtype=float32),

'sampling_rate': 48000},

'sentence': 'खीर की मिठास पर गरमाई बिहार की सियासत, कुशवाहा ने दी सफाई'}

```

可以看到,样本含有一个一维音频数组及其对应的转录文本。上文已经多次谈及采样率,以及将音频的采样率与 Whisper 模型所需的采样率 (16kHz) 相匹配的重要性。由于现在输入音频的采样率为 48kHz,所以在将其馈送给 Whisper 特征提取器之前,我们需要将其 _下采样_至 16kHz。

我们将使用 `dataset` 的 [`cast_column`](https://huggingface.co/docs/datasets/package_reference/main_classes.html?highlight=cast_column#datasets.DatasetDict.cast_column) 方法将输入音频转换至所需的采样率。该方法仅指示 `datasets` 让其在首次加载音频时 _即时地_对数据进行重采样,因此并不会改变原音频数据:

```python

from datasets import Audio

common_voice = common_voice.cast_column("audio", Audio(sampling_rate=16000))

```

重新打印下 Common Voice 数据集中的第一个音频样本,可以看到其已被重采样:

```python

print(common_voice["train"][0])

```

**打印输出:**

```python

{'audio': {'path': '/home/sanchit_huggingface_co/.cache/huggingface/datasets/downloads/extracted/607848c7e74a89a3b5225c0fa5ffb9470e39b7f11112db614962076a847f3abf/cv-corpus-11.0-2022-09-21/hi/clips/common_voice_hi_25998259.mp3',

'array': array([ 0.0000000e+00, 0.0000000e+00, 0.0000000e+00, ...,

-3.4206650e-07, 3.2979898e-07, 1.0042874e-06], dtype=float32),

'sampling_rate': 16000},

'sentence': 'खीर की मिठास पर गरमाई बिहार की सियासत, कुशवाहा ने दी सफाई'}

```

酷!我们可以看到音频已被下采样到 16kHz 了。数组里面的值也变了,现在的 1 个幅度值大致对应于之前的 3 个幅度值。

现在我们编写一个函数来为模型准备数据:

1. 调用 `batch["audio"]` 加载和重采样音频数据。如上所述,🤗 Datasets 会即时执行任何必要的重采样操作。

2. 使用特征提取器将一维音频数组变换为对数梅尔声谱图特征。

3. 使用分词器将录音文本编码为 ID。

```python

def prepare_dataset(batch):

# load and resample audio data from 48 to 16kHz

audio = batch["audio"]

# compute log-Mel input features from input audio array

batch["input_features"] = feature_extractor(audio["array"], sampling_rate=audio["sampling_rate"]).input_features[0]

# encode target text to label ids

batch["labels"] = tokenizer(batch["sentence"]).input_ids

return batch

```

我们可以用 `dataset` 的 `.map` 方法在所有训练样本上应用上述函数:

```python

common_voice = common_voice.map(prepare_dataset, remove_columns=common_voice.column_names["train"], num_proc=4)

```

好了!训练数据准备完毕!我们继续看看如何使用这些数据来微调 Whisper。

**注意**: 目前 `datasets` 主要使用 [`torchaudio`](https://pytorch.org/audio/stable/index.html) 和 [`librosa`](https://librosa.org /doc/latest/index.html) 来进行音频加载和重采样。如果你自己定制一个数据加载/采样函数的话,你完全可以直接通过 `"path"` 列获取音频文件路径而不用管 `"audio"` 列。

## 训练与评估

至此,数据已准备完毕,可以开始训练了。训练的大部分繁重的工作都会由 [🤗 Trainer](https://huggingface.co/transformers/master/main_classes/trainer.html?highlight=trainer) 来完成。我们要做的主要有:

- 定义数据整理器 (data collator): 数据整理器获取预处理后的数据并将其转换为 PyTorch 张量。

- 评估指标: 我们使用 [单词错误率 (word error rate,WER)](https://huggingface.co/metrics/wer) 指标来评估模型,因此需要定义一个 `compute_metrics` 函数来计算它。

- 加载预训练 checkpoint: 我们需要加载预训练 checkpoint 并正确配置它以进行训练。

- 定义训练参数: 🤗 Trainer 在制订训练计划时需要用到这些参数。

微调完后,我们需要使用测试数据对其进行评估,以验证最终模型在印地语上的语音识别效果。

### 定义数据整理器

序列到序列语音模型的数据整理器与其他任务有所不同,因为 `input_features` 和 `labels` 的处理方法是不同的: `input_features` 必须由特征提取器处理,而 `labels` 由分词器处理。

`input_features` 已经填充至 30s 并转换为固定维度的对数梅尔声谱图,我们所要做的只剩将其转换为 PyTorch 张量。我们用特征提取器的 `.pad` 方法来完成这一功能,且将其入参设为 `return_tensors=pt`。请注意,这里不需要额外的填充,因为输入维度已经固定了,所以我们只需要简单地将 `input_features` 转换为 PyTorch 张量就好了。

另一方面,`labels` 数据之前并未填充。所以,我们首先要使用分词器的 `.pad` 方法将序列填充至本 batch 的最大长度。然后将填充标记替换为 `-100`,这样它们就可以 **不** 用参与损失的计算了。然后我们把 `SOT` 从序列的开头去掉,稍后训练的时候我们再把它加回来。

我们可以利用之前定义的 `WhisperProcessor` 来执行特征提取和分词操作:

```python

import torch

from dataclasses import dataclass

from typing import Any, Dict, List, Union

@dataclass

class DataCollatorSpeechSeq2SeqWithPadding:

processor: Any

def __call__(self, features: List[Dict[str, Union[List[int], torch.Tensor]]]) -> Dict[str, torch.Tensor]:

# split inputs and labels since they have to be of different lengths and need different padding methods

# first treat the audio inputs by simply returning torch tensors

input_features = [{"input_features": feature["input_features"]} for feature in features]

batch = self.processor.feature_extractor.pad(input_features, return_tensors="pt")

# get the tokenized label sequences

label_features = [{"input_ids": feature["labels"]} for feature in features]

# pad the labels to max length

labels_batch = self.processor.tokenizer.pad(label_features, return_tensors="pt")

# replace padding with -100 to ignore loss correctly

labels = labels_batch["input_ids"].masked_fill(labels_batch.attention_mask.ne(1), -100)

# if bos token is appended in previous tokenization step,

# cut bos token here as it's append later anyways

if (labels[:, 0] == self.processor.tokenizer.bos_token_id).all().cpu().item():

labels = labels[:, 1:]

batch["labels"] = labels

return batch

```

我们初始化一下刚刚定义的数据整理器:

```python

data_collator = DataCollatorSpeechSeq2SeqWithPadding(processor=processor)

```

### 评估指标

接下来要定义评估指标。我们将使用词错误率 (WER) 指标,它是评估 ASR 系统的“标准”指标。有关其详细信息,请参阅 WER [文档](https://huggingface.co/metrics/wer)。下面,我们从 🤗 Evaluate 中加载 WER 指标:

```python

import evaluate

metric = evaluate.load("wer")

```

然后我们只需要定义一个函数来接受模型输出并返回 WER 指标。这个名为 `compute_metrics` 的函数首先将 `-100` 替换为 `label_ids` 中的 `pad_token_id` (以便在计算损失时将其忽略)。然后,将预测到的 ID 和 `label_ids` 解码为字符串文本。最后,计算输出文本和真实文本之间的 WER:

```python

def compute_metrics(pred):

pred_ids = pred.predictions

label_ids = pred.label_ids

# replace -100 with the pad_token_id

label_ids[label_ids == -100] = tokenizer.pad_token_id

# we do not want to group tokens when computing the metrics

pred_str = tokenizer.batch_decode(pred_ids, skip_special_tokens=True)

label_str = tokenizer.batch_decode(label_ids, skip_special_tokens=True)

wer = 100 * metric.compute(predictions=pred_str, references=label_str)

return {"wer": wer}

```

### 加载预训练 checkpoint

现在我们加载预训练 Whisper `small` 模型的 checkpoint。同样,可以通过使用 🤗 transformers 很轻松地完成这一步!

```python

from transformers import WhisperForConditionalGeneration

model = WhisperForConditionalGeneration.from_pretrained("openai/whisper-small")

```

原始 Whisper 模型在自回归生成开始之前强制添加了若干前缀词元 ID ([`forced_decoder_ids`](https://huggingface.co/docs/transformers/main_classes/text_generation#transformers.generation_utils.GenerationMixin.generate.forced_decoder_ids))。这些词元 ID 主要用于在零样本 ASR 任务中标识语种和任务。因为我们现在是对已知语种 (印地语) 和任务 (转录) 进行微调,所以我们要将 `forced_decoder_ids` 设置为 `None`。另外,模型还抑制了一些词元 ([`suppress_tokens`](https://huggingface.co/docs/transformers/main_classes/text_generation#transformers.generation_utils.GenerationMixin.generate.suppress_tokens)),这些词元的对数概率被强置为 `-inf`,以保证它们永远不会被采样到。我们会用一个空列表覆盖 `suppress_tokens`,即我们不抑制任何词元:

```python

model.config.forced_decoder_ids = None

model.config.suppress_tokens = []

```

### 定义训练参数

最后一步是定义与训练相关的所有参数,下面对其中一部分参数进行了解释:

- `output_dir`: 保存模型权重的本地目录,它也会是 [Hugging Face Hub](https://huggingface.co/) 上的模型存储库名称。

- `generation_max_length`: 评估阶段,自回归生成的最大词元数。

- `save_steps`: 训练期间,每 `save_steps` 步保存一次中间 checkpoint 并异步上传到 Hub。

- `eval_steps`: 训练期间,每 `eval_steps` 步对中间 checkpoint 进行一次评估。

- `report_to`: 训练日志的保存位置,支持 `azure_ml` 、`comet_ml` 、`mlflow` 、`neptune` 、`tensorboard` 以及 `wandb` 这些平台。你可以按照自己的偏好进行选择,也可以直接使用缺省的 `tensorboard` 保存至 Hub。

如需更多其他训练参数的详细信息,请参阅 Seq2SeqTrainingArguments [文档](https://huggingface.co/docs/transformers/main_classes/trainer#transformers.Seq2SeqTrainingArguments)。

```python

from transformers import Seq2SeqTrainingArguments

training_args = Seq2SeqTrainingArguments(

output_dir="./whisper-small-hi", # change to a repo name of your choice

per_device_train_batch_size=16,

gradient_accumulation_steps=1, # increase by 2x for every 2x decrease in batch size

learning_rate=1e-5,

warmup_steps=500,

max_steps=4000,

gradient_checkpointing=True,

fp16=True,

evaluation_strategy="steps",

per_device_eval_batch_size=8,

predict_with_generate=True,

generation_max_length=225,

save_steps=1000,

eval_steps=1000,

logging_steps=25,

report_to=["tensorboard"],

load_best_model_at_end=True,

metric_for_best_model="wer",

greater_is_better=False,

push_to_hub=True,

)

```

**注意**: 如果不想将模型 checkpoint 上传到 Hub,你需要设置 `push_to_hub=False`。

我们可以将训练参数以及模型、数据集、数据整理器和 `compute_metrics` 函数一起传给 🤗 Trainer:

```python

from transformers import Seq2SeqTrainer

trainer = Seq2SeqTrainer(

args=training_args,

model=model,

train_dataset=common_voice["train"],

eval_dataset=common_voice["test"],

data_collator=data_collator,

compute_metrics=compute_metrics,

tokenizer=processor.feature_extractor,

)

```

有了这些,就可以开始训练了!

### 训练

要启动训练,只需执行:

```python

trainer.train()

```

训练大约需要 5-10 个小时,具体取决于你的 GPU 或 Google Colab 后端的 GPU。根据 GPU 的情况,你可能会在开始训练时遇到 CUDA `内存耗尽`错误。此时,你可以将 `per_device_train_batch_size` 逐次减少 2 倍,同时增加 [`gradient_accumulation_steps`](https://huggingface.co/docs/transformers/main_classes/trainer#transformers.Seq2SeqTrainingArguments.gradient_accumulation_steps) 进行补偿。

**打印输出:**

| 步数 | 训练损失 | 轮数 | 验证损失 | WER |

| :-: | :-: | :-: | :-: | :-: |

| 1000 | 0.1011 | 2.44 | 0.3075 | 34.63 |

| 2000 | 0.0264 | 4.89 | 0.3558 | 33.13 |

| 3000 | 0.0025 | 7.33 | 0.4214 | 32.59 |

| 4000 | 0.0006 | 9.78 | 0.4519 | 32.01 |

| 5000 | 0.0002 | 12.22 | 0.4679 | 32.10 |

最佳 WER 是 32.0% —— 对 8 小时的训练数据来说还不错!那与其他 ASR 系统相比,这个表现到底处于什么水平?为此,我们可以查看 [`hf-speech-bench`](https://huggingface.co/spaces/huggingface/hf-speech-bench),这是一个按语种和数据集对模型分别进行 WER 排名的排行榜。

<figure>

<img src="assets/111_fine_tune_whisper/hf_speech_bench.jpg" alt="Trulli" style="width:100%">

</figure>

微调后的模型显著提高了 Whisper `small` checkpoint 的零样本性能,也突出展示了 Whisper 强大的迁移学习能力。

当将训练结果推送到 Hub 时,只需配置适当的关键字参数 (key-word arguments,kwargs) 就可以自动将 checkpoint 提交到排行榜。如需适配自己的数据集、语种和模型名称,仅需对下述代码作出相应的修改即可:

```python

kwargs = {

"dataset_tags": "mozilla-foundation/common_voice_11_0",

"dataset": "Common Voice 11.0", # a 'pretty' name for the training dataset

"dataset_args": "config: hi, split: test",

"language": "hi",

"model_name": "Whisper Small Hi - Sanchit Gandhi", # a 'pretty' name for your model

"finetuned_from": "openai/whisper-small",

"tasks": "automatic-speech-recognition",

"tags": "hf-asr-leaderboard",

}

```

现在,只需执行 `push_to_hub` 命令就可以将训练结果上传到 Hub 了:

```python

trainer.push_to_hub(**kwargs)

```

任何人可以用你的模型的 Hub 链接访问它。他们还可以使用标识符 `"your-username/the-name-you-picked"`加载它,例如:

```python

from transformers import WhisperForConditionalGeneration, WhisperProcessor

model = WhisperForConditionalGeneration.from_pretrained("sanchit-gandhi/whisper-small-hi")

processor = WhisperProcessor.from_pretrained("sanchit-gandhi/whisper-small-hi")

```

虽然微调后的模型在 Common Voice Hindi 测试数据上的效果还不错,但其效果远算不上最优。本文的目的仅为演示如何在任意多语种 ASR 数据集上微调预训练的 Whisper checkpoint,对效果并未做太多深究。如需提升效果,你还可以尝试更多技巧,如优化训练超参 (例如 _learning rate_ 和 _dropout_) 、使用更大的预训练 checkpoint (`medium` 或 `large`) 等。

### 构建演示应用

现在模型已经微调结束,我们开始构建一个演示应用来展示其 ASR 功能!我们将使用 🤗 Transformers `pipeline` 来完成整个 ASR 流水线: 从对音频输入进行预处理一直到对模型输出进行解码。我们使用 [Gradio](https://www.gradio.app) 来构建我们的交互式演示。 Gradio 提供了最直截了当的构建机器学习演示应用的方法,我们可以用它在几分钟内构建一个演示应用!

运行以下代码会生成一个 Gradio 演示应用,它用计算机的麦克风录制语音并将其馈送给微调后的 Whisper 模型以转录出相应的文本:

```python

from transformers import pipeline

import gradio as gr

pipe = pipeline(model="sanchit-gandhi/whisper-small-hi") # change to "your-username/the-name-you-picked"

def transcribe(audio):

text = pipe(audio)["text"]

return text

iface = gr.Interface(

fn=transcribe,

inputs=gr.Audio(source="microphone", type="filepath"),

outputs="text",

title="Whisper Small Hindi",

description="Realtime demo for Hindi speech recognition using a fine-tuned Whisper small model.",

)

iface.launch()

```

## 结束语

通过本文,我们介绍了如何使用 🤗 Datasets、Transformers 和 Hugging Face Hub 一步步为多语种 ASR 微调一个 Whisper 模型。如果你想自己尝试微调一个,请参阅 [Google Colab](https://colab.research.google.com/github/sanchit-gandhi/notebooks/blob/main/fine_tune_whisper.ipynb)。如果你有兴趣针对英语和多语种 ASR 微调一个其它的 Transformers 模型,请务必参考下 [examples/pytorch/speech-recognition](https://github.com/huggingface/transformers/tree/main/examples/pytorch/speech-recognition)。 | 4 |

0 | hf_public_repos/blog | hf_public_repos/blog/zh/3d-assets.md | ---

title: "手把手教你使用人工智能生成 3D 素材"

thumbnail: /blog/assets/124_ml-for-games/thumbnail-3d.jpg

authors:

- user: dylanebert

translators:

- user: chenglu

---

# 手把手教你使用人工智能生成 3D 素材

## 引言

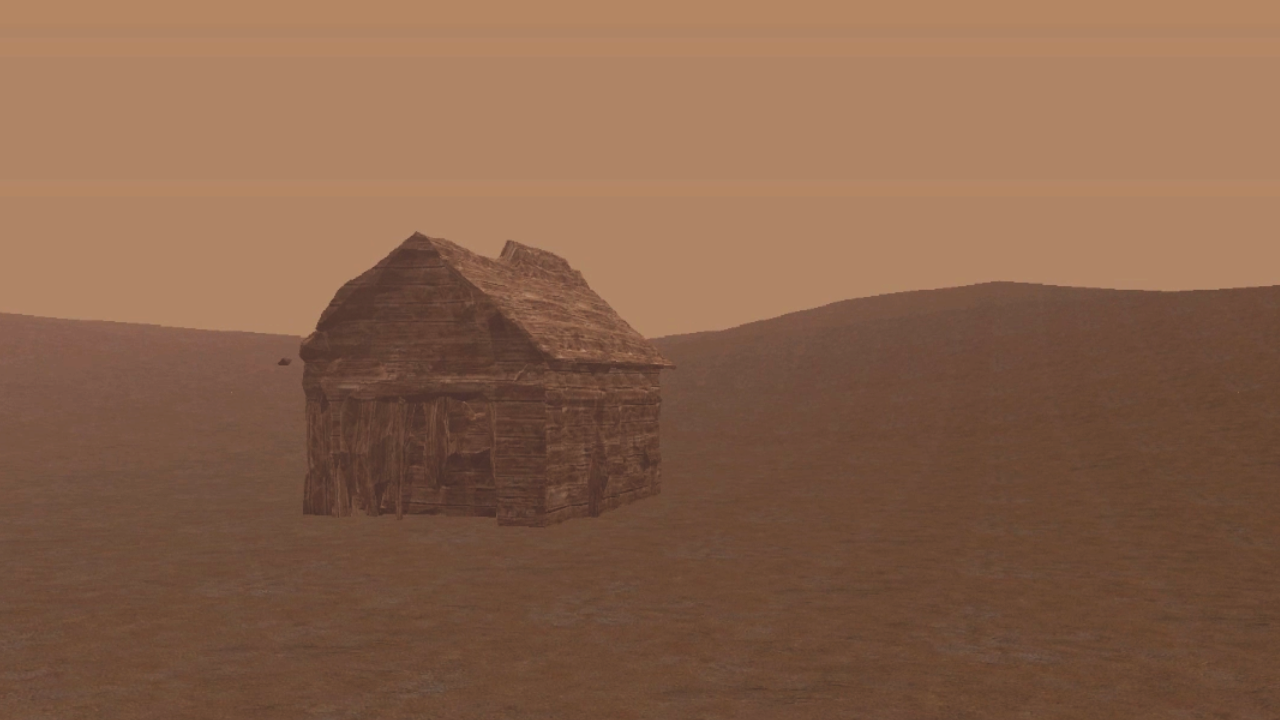

生成式 AI 已成为游戏开发中艺术工作流的重要组成部分。然而,正如我在 [之前的文章](https://huggingface.co/blog/zh/ml-for-games-3) 中描述的,从文本到 3D 的实用性仍落后于 2D。不过,这种情况正在改变。本文我们将重新审视 3D 素材生成的实用工作流程,并逐步了解如何将生成型 AI 集成到 PS1 风格的 3D 工作流中。

为什么选择 PS1 风格?因为它对当前文本到 3D 模型的低保真度更为宽容,使我们能够以尽可能少的努力从文本转换为可用的 3D 素材。

### 预备知识

本教程假设你具备一些 Blender 和 3D 概念的基本知识,例如材质和 UV 映射。

## 第一步:生成 3D 模型

首先访问 Shap-E Hugging Face Space [这里](https://huggingface.co/spaces/hysts/Shap-E)或下方。此空间使用 OpenAI 最新的扩散模型 [Shap-E model](https://github.com/openai/shap-e) 从文本生成 3D 模型。

<gradio-app theme_mode="light" space="hysts/Shap-E"></gradio-app>

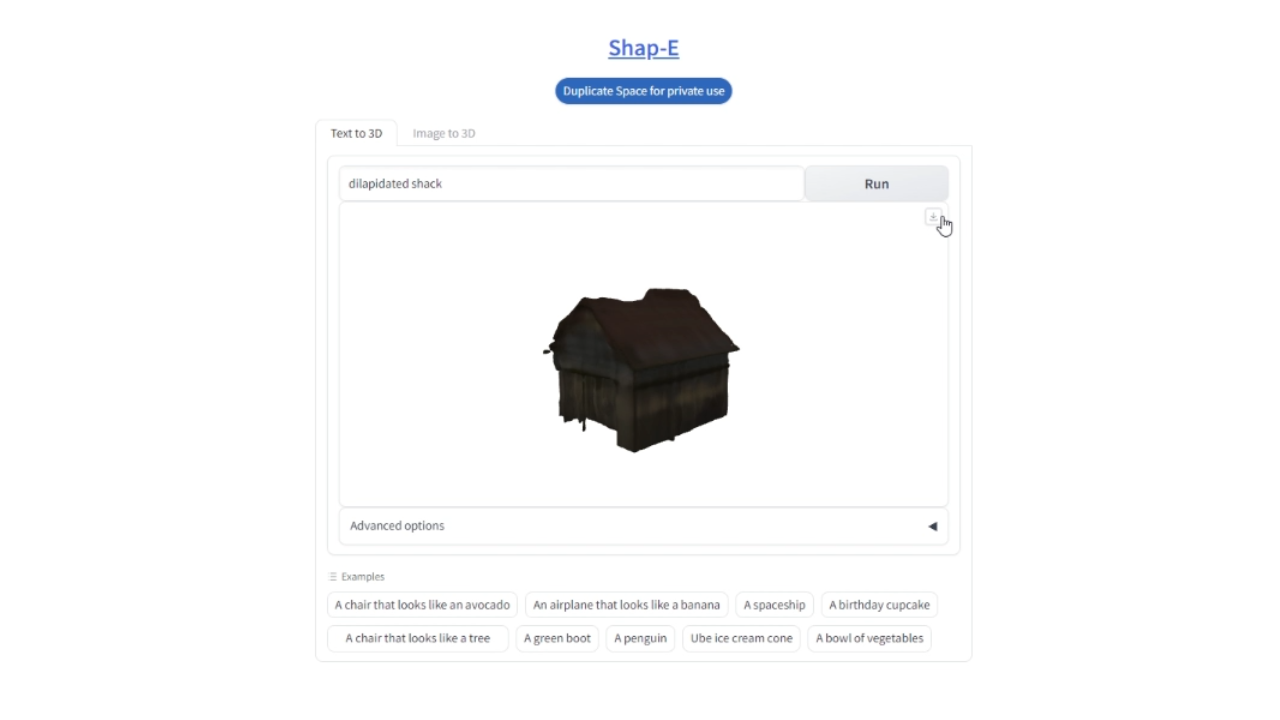

输入 "Dilapidated Shack" 作为你的提示并点击 'Generate'。当你对模型满意时,下载它以进行下一步。

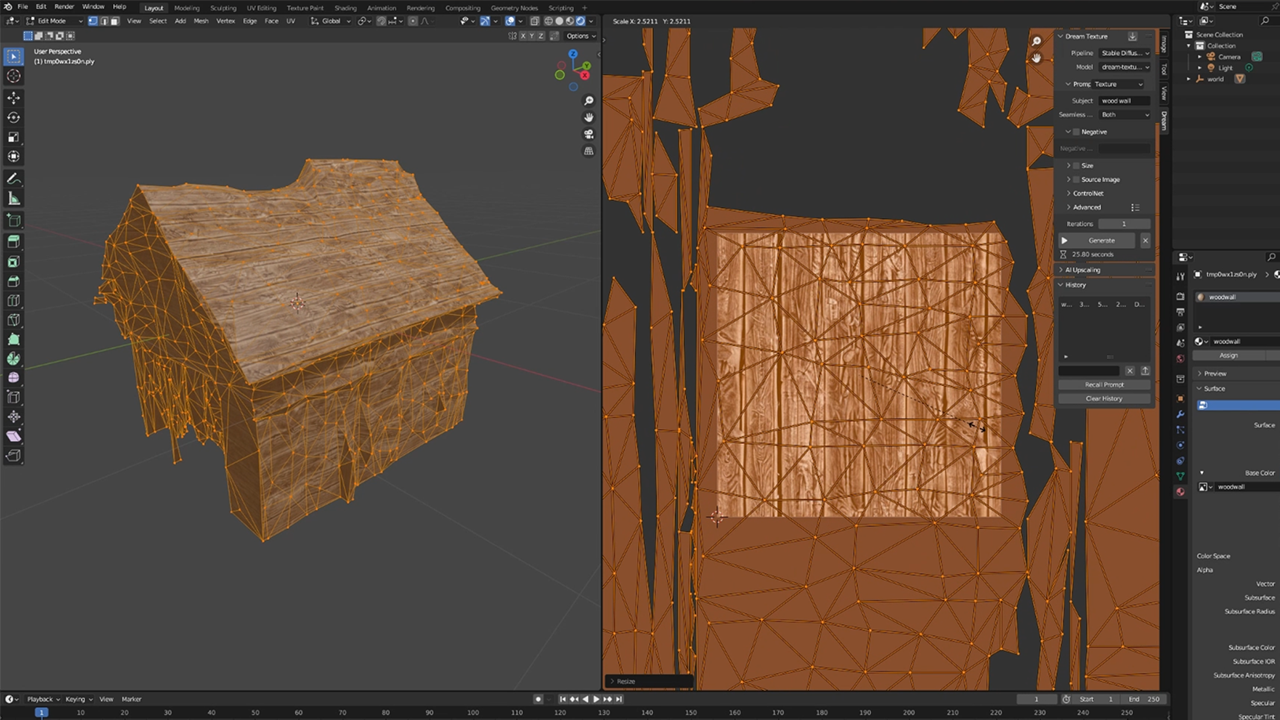

## 第二步:导入并精简模型

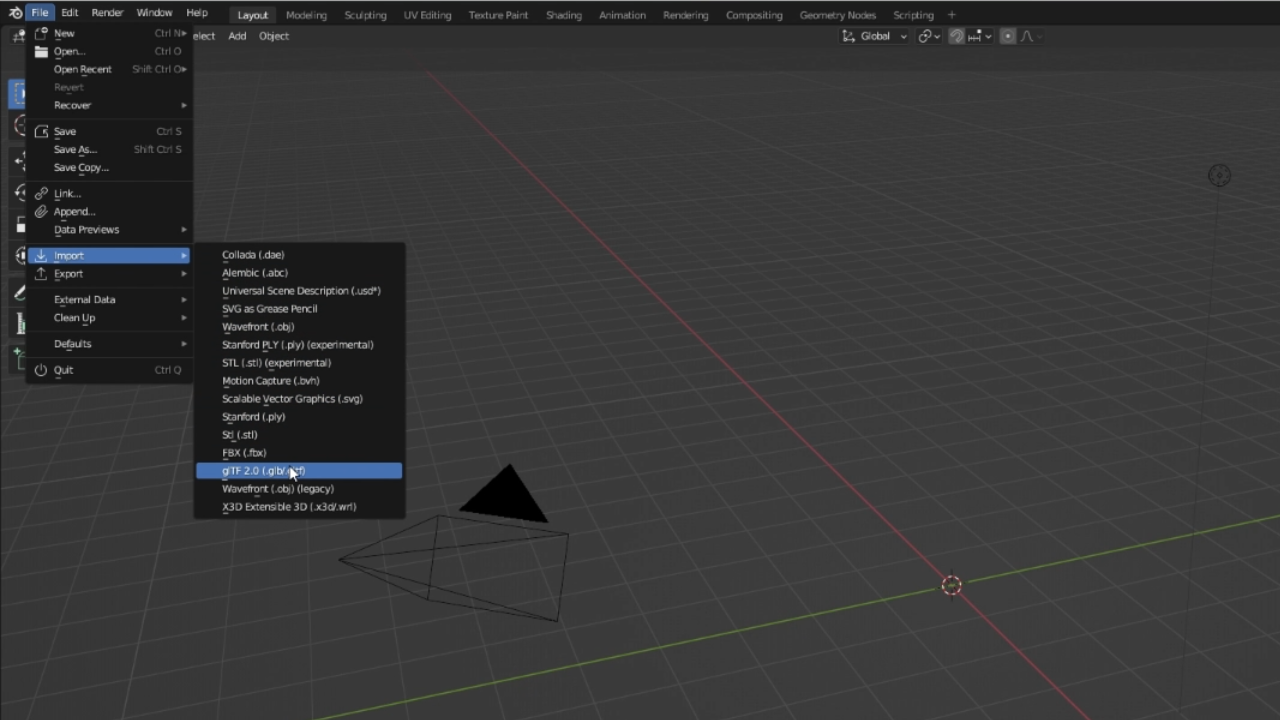

接下来,打开 [Blender](https://www.blender.org/download/)(版本 3.1 或更高)。转到 File -> Import -> GLTF 2.0,并导入你下载的文件。你可能会注意到,该模型的多边形数量远远超过了许多实际应用(如游戏)的推荐数量。

要减少多边形数量,请选择你的模型,导航到 Modifiers,并选择 "Decimate" 修饰符。将比率调整为较低的数字(例如 0.02)。这可能看起来*不*太好。然而,在本教程中,我们将接受低保真度。

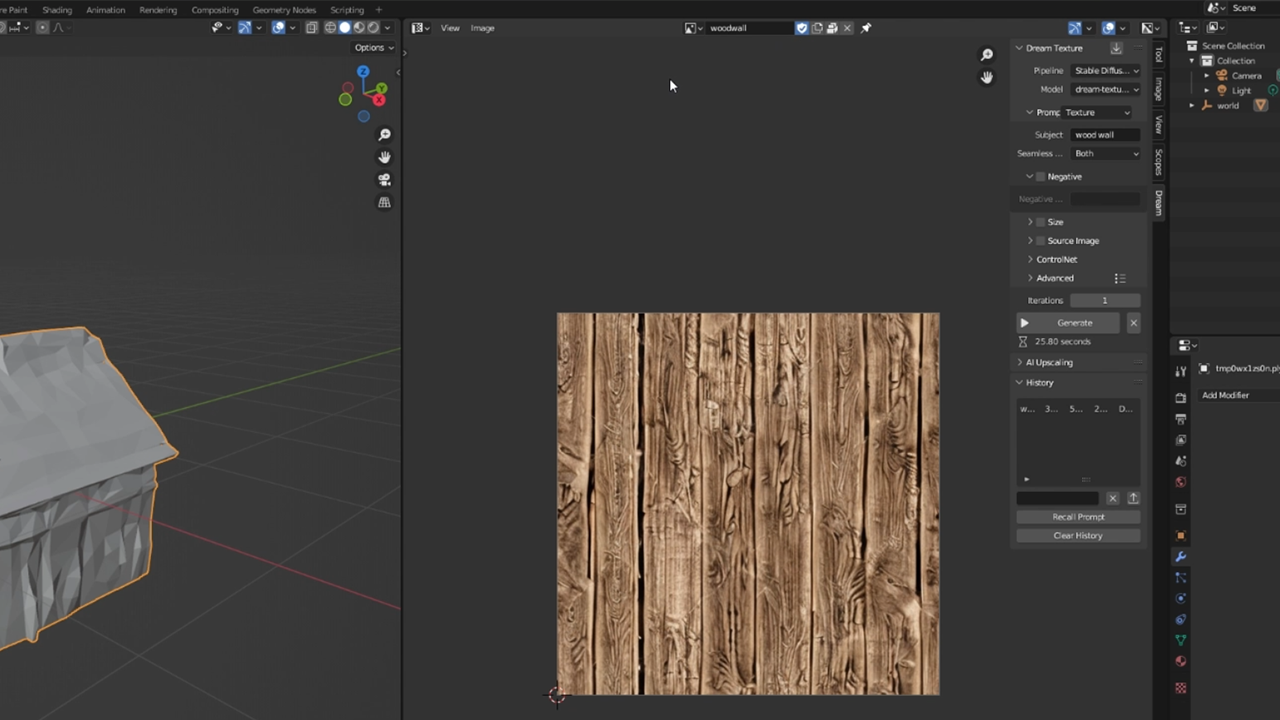

## 第三步:安装 Dream Textures

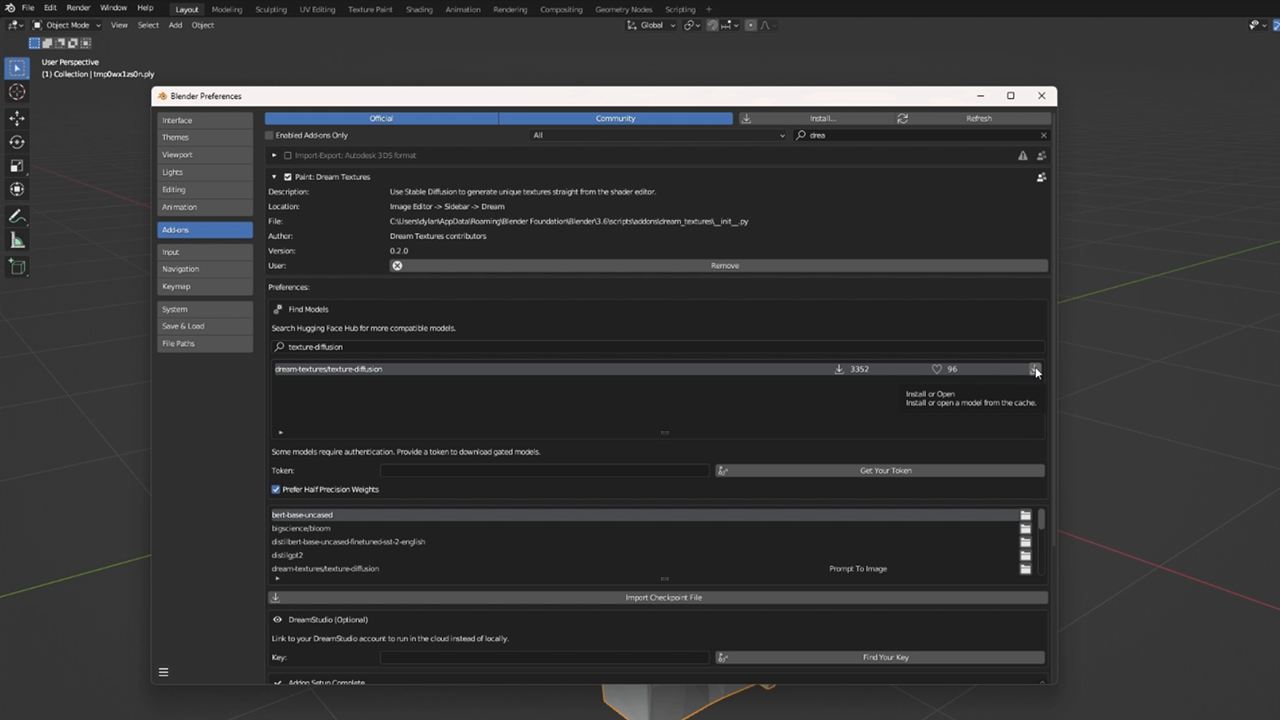

为了给我们的模型添加纹理,我们将使用 [Dream Textures](https://github.com/carson-katri/dream-textures),这是一个用于 Blender 的稳定扩散纹理生成器。按照 [官方仓库](https://github.com/carson-katri/dream-textures) 上的说明下载并安装插件。

安装并启用后,打开插件首选项。搜索并下载 [texture-diffusion](https://huggingface.co/dream-textures/texture-diffusion) 模型。

## 第四步:生成纹理

让我们生成一个自定义纹理。在 Blender 中打开 UV 编辑器,按 'N' 打开属性菜单。点击 'Dream' 标签并选择 texture-diffusion 模型。将 Prompt 设置为 'texture'、Seamless 设置为 'both'。这将确保生成的图像是无缝纹理。

在 'subject' 下,输入你想要的纹理,例如 'Wood Wall',然后点击 'Generate'。当你对结果满意时,为其命名并保存。

要应用纹理,请选择你的模型并导航到 'Material'。添加新材料,在 'base color' 下点击点并选择 'Image Texture'。最后,选择你新生成的纹理。

## 第五步:UV 映射

接下来是 UV 映射,它将我们的 2D 纹理包裹在 3D 模型周围。选择你的模型,按 'Tab' 进入编辑模式。然后,按 'U' 展开模型并选择 'Smart UV Project'。

要预览你的纹理模型,请切换到渲染视图(按住 'Z' 并选择 'Rendered')。你可以放大 UV 映射,使其在模型上无缝平铺。请记住,我们的目标是复古的 PS1 风格,所以不要做得太好。

## 第六步:导出模型

当您对模型感到满意时,就可以导出它了。使用 File -> Export -> FBX,这个 3D 素材就生成了。

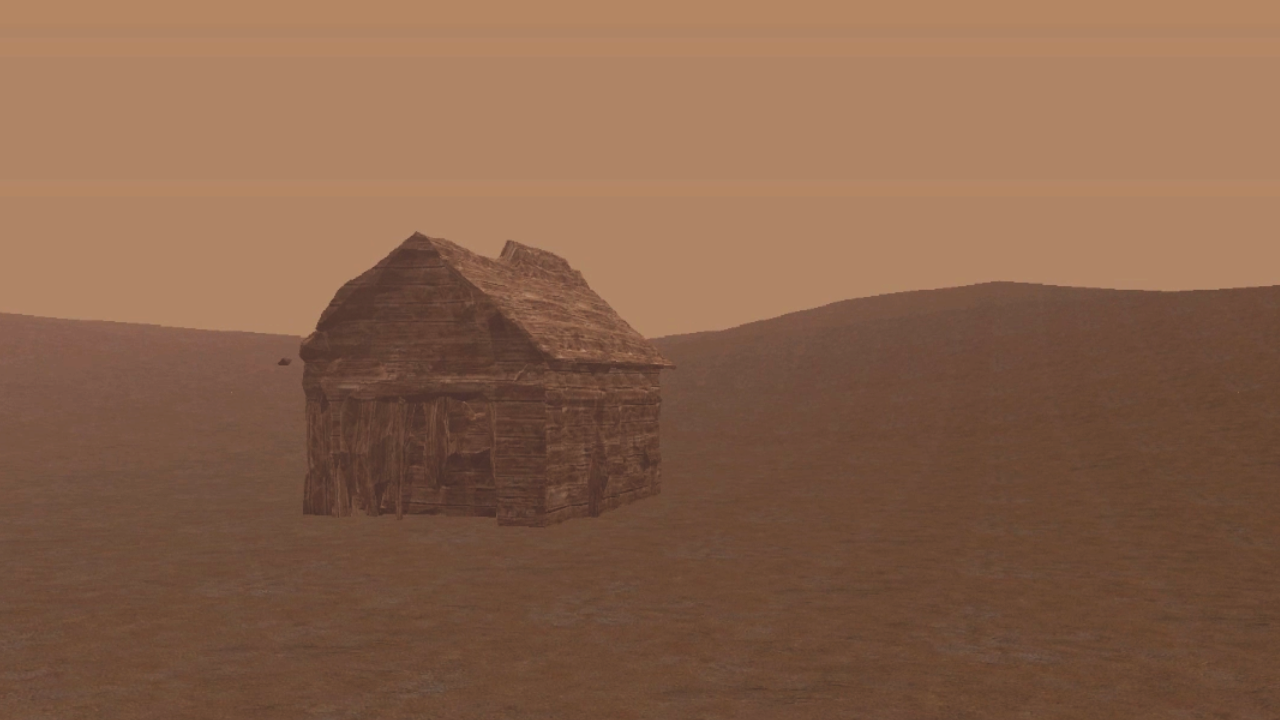

## 第七步:在 Unity 中导入

最后,让我们看看我们的模型在实际中的效果。将其导入 [Unity](https://unity.cn/download) 或你选择的游戏引擎中。为了重现怀旧的 PS1 美学,我用自定义顶点照明、无阴影、大量雾气和故障后处理进行了定制。你可以在 [这里](https://www.david-colson.com/2021/11/30/ps1-style-renderer.html) 了解更多关于重现 PS1 美学的信息。

现在我们就拥有了一个在虚拟环境中的低保真、纹理 3D 模型!

## 总结

关于如何使用生成型 AI 工作流程创建实用 3D 素材的教程就此结束。虽然结果保真度不高,但潜力巨大:通过足够的努力,这种方法可以用来生成一个低保真风格的无限世界。随着这些模型的改进,将这些技术转移到高保真或逼真的风格将会成为可能!

| 5 |

0 | hf_public_repos/blog | hf_public_repos/blog/zh/unity-asr.md | ---

title: "如何在 Unity 游戏中集成 AI 语音识别?"

thumbnail: /blog/assets/124_ml-for-games/unity-asr-thumbnail.png

authors:

- user: dylanebert

translators:

- user: SuSung-boy

- user: zhongdongy

proofreader: true

---

# 如何在 Unity 游戏中集成 AI 语音识别?

[](https://itch.io/jam/open-source-ai-game-jam)

## 简介

语音识别是一项将语音转换为文本的技术,想象一下它如何在游戏中发挥作用?发出命令操纵控制面板或者游戏角色、直接与 NPC 对话、提升交互性等等,都有可能。本文将介绍如何使用 Hugging Face Unity API 在 Unity 游戏中集成 SOTA 语音识别功能。

您可以访问 [itch.io 网站](https://individualkex.itch.io/speech-recognition-demo) 下载 Unity 游戏样例,亲自尝试一下语音识别功能。

### 先决条件

阅读文本可能需要了解一些 Unity 的基本概念。除此之外,您还需安装 [Hugging Face Unity API](https://github.com/huggingface/unity-api),可以点击 [之前的博文](https://huggingface.co/blog/zh/unity-api) 阅读 API 安装说明。

## 步骤

### 1. 设置场景

在本教程中,我们将设置一个非常简单的场景。玩家可以点击按钮来开始或停止录制语音,识别音频并转换为文本。

首先我们新建一个 Unity 项目,然后创建一个包含三个 UI 组件的画布 (Canvas):

1. **开始按钮**: 按下以开始录制语音。

2. **停止按钮**: 按下以停止录制语音。

3. **文本组件 (TextMeshPro)**: 显示语音识别结果文本的地方。

### 2. 创建脚本

创建一个名为 `SpeechRecognitionTest` 的脚本,并将其附加到一个空的游戏对象 (GameObject) 上。

在脚本中,首先定义对 UI 组件的引用:

```

[SerializeField] private Button startButton;

[SerializeField] private Button stopButton;

[SerializeField] private TextMeshProUGUI text;

```

在 inspector 窗口中分配对应组件。

然后,使用 `Start()` 方法为开始和停止按钮设置监听器:

```

private void Start() {

startButton.onClick.AddListener(StartRecording);

stopButton.onClick.AddListener(StopRecording);

}

```

此时,脚本中的代码应该如下所示:

```

using TMPro;

using UnityEngine;

using UnityEngine.UI;

public class SpeechRecognitionTest : MonoBehaviour {

[SerializeField] private Button startButton;

[SerializeField] private Button stopButton;

[SerializeField] private TextMeshProUGUI text;

private void Start() {

startButton.onClick.AddListener(StartRecording);

stopButton.onClick.AddListener(StopRecording);

}

private void StartRecording() {

}

private void StopRecording() {

}

}

```

### 3. 录制麦克风语音输入

现在,我们来录制麦克风语音输入,并将其编码为 WAV 格式。这里需要先定义成员变量:

```

private AudioClip clip;

private byte[] bytes;

private bool recording;

```

然后,在 `StartRecording()` 中,使用 `Microphone.Start()` 方法实现开始录制语音的功能:

```

private void StartRecording() {

clip = Microphone.Start(null, false, 10, 44100);

recording = true;

}

```

上面代码实现以 44100 Hz 录制最长为 10 秒的音频。

当录音时长达到 10 秒的最大限制,我们希望录音行为自动停止。为此,需要在 `Update()` 方法中写上以下内容:

```

private void Update() {

if (recording && Microphone.GetPosition(null) >= clip.samples) {

StopRecording();

}

}

```

接着,在 `StopRecording()` 中,截取录音片段并将其编码为 WAV 格式:

```

private void StopRecording() {

var position = Microphone.GetPosition(null);

Microphone.End(null);

var samples = new float[position * clip.channels];

clip.GetData(samples, 0);

bytes = EncodeAsWAV(samples, clip.frequency, clip.channels);

recording = false;

}

```

最后,我们需要实现音频编码的 `EncodeAsWAV()` 方法,这里直接使用 Hugging Face API,只需要将音频数据准备好即可:

```

private byte[] EncodeAsWAV(float[] samples, int frequency, int channels) {

using (var memoryStream = new MemoryStream(44 + samples.Length * 2)) {

using (var writer = new BinaryWriter(memoryStream)) {

writer.Write("RIFF".ToCharArray());

writer.Write(36 + samples.Length * 2);

writer.Write("WAVE".ToCharArray());

writer.Write("fmt ".ToCharArray());

writer.Write(16);

writer.Write((ushort)1);

writer.Write((ushort)channels);

writer.Write(frequency);

writer.Write(frequency * channels * 2);

writer.Write((ushort)(channels * 2));

writer.Write((ushort)16);

writer.Write("data".ToCharArray());

writer.Write(samples.Length * 2);

foreach (var sample in samples) {

writer.Write((short)(sample * short.MaxValue));

}

}

return memoryStream.ToArray();

}

}

```

完整的脚本如下所示:

```

using System.IO;

using TMPro;

using UnityEngine;

using UnityEngine.UI;

public class SpeechRecognitionTest : MonoBehaviour {

[SerializeField] private Button startButton;

[SerializeField] private Button stopButton;

[SerializeField] private TextMeshProUGUI text;

private AudioClip clip;

private byte[] bytes;

private bool recording;

private void Start() {

startButton.onClick.AddListener(StartRecording);

stopButton.onClick.AddListener(StopRecording);

}

private void Update() {

if (recording && Microphone.GetPosition(null) >= clip.samples) {

StopRecording();

}

}

private void StartRecording() {

clip = Microphone.Start(null, false, 10, 44100);

recording = true;

}

private void StopRecording() {

var position = Microphone.GetPosition(null);

Microphone.End(null);

var samples = new float[position * clip.channels];

clip.GetData(samples, 0);

bytes = EncodeAsWAV(samples, clip.frequency, clip.channels);

recording = false;

}

private byte[] EncodeAsWAV(float[] samples, int frequency, int channels) {

using (var memoryStream = new MemoryStream(44 + samples.Length * 2)) {

using (var writer = new BinaryWriter(memoryStream)) {

writer.Write("RIFF".ToCharArray());

writer.Write(36 + samples.Length * 2);

writer.Write("WAVE".ToCharArray());

writer.Write("fmt ".ToCharArray());

writer.Write(16);

writer.Write((ushort)1);

writer.Write((ushort)channels);

writer.Write(frequency);

writer.Write(frequency * channels * 2);

writer.Write((ushort)(channels * 2));

writer.Write((ushort)16);

writer.Write("data".ToCharArray());

writer.Write(samples.Length * 2);

foreach (var sample in samples) {

writer.Write((short)(sample * short.MaxValue));

}

}

return memoryStream.ToArray();

}

}

}

```

如要测试该脚本代码是否正常运行,您可以在 `StopRecording()` 方法末尾添加以下代码:

```

File.WriteAllBytes(Application.dataPath + "/test.wav", bytes);

```

好了,现在您点击 `Start` 按钮,然后对着麦克风说话,接着点击 `Stop` 按钮,您录制的音频将会保存为 `test.wav` 文件,位于工程目录的 Unity 资产文件夹中。

### 4. 语音识别

接下来,我们将使用 Hugging Face Unity API 对编码音频实现语音识别。为此,我们创建一个 `SendRecording()` 方法:

```

using HuggingFace.API;