licenses

sequencelengths 1

3

| version

stringclasses 677

values | tree_hash

stringlengths 40

40

| path

stringclasses 1

value | type

stringclasses 2

values | size

stringlengths 2

8

| text

stringlengths 25

67.1M

| package_name

stringlengths 2

41

| repo

stringlengths 33

86

|

|---|---|---|---|---|---|---|---|---|

[

"MIT"

] | 1.20.0 | 47c6ed345dbabf0de47873514cb6d67369127733 | code | 23454 | # # [Attractors.jl Tutorial](@id tutorial)

# ```@raw html

# <video width="auto" controls loop>

# <source src="../attracont.mp4" type="video/mp4">

# </video>

# ```

# [`Attractors`](@ref) is a component of the **DynamicalSystems.jl** library.

# This tutorial will walk you through its main functionality.

# That is, given a `DynamicalSystem` instance, find all its attractors and their basins

# of attraction. Then,

# continue these attractors, and their stability properties, across a parameter value.

# It also offers various functions that compute nonlocal stability properties for an

# attractor, any of which can be used in the continuation to quantify stability.

# Besides this main functionality, there are plenty of other stuff,

# like for example [`edgestate`](@ref) or [`basins_fractal_dimension`](@ref),

# but we won't cover anything else in this introductory tutorial.

# See the [examples](@ref examples) page instead.

# ### Package versions used

import Pkg

#nb # Activate an environment in the folder containing the notebook

#nb Pkg.activate(dirname(@__DIR__))

#nb Pkg.add(["DynamicalSystems", "CairoMakie", "GLMakie", "OrdinaryDiffEq"])

Pkg.status(["Attractors", "CairoMakie", "OrdinaryDiffEq"])

#nb # ## Attractors.jl summary

#nb using Attractors # re=exported by `DynamicalSystems`

#nb @doc Attractors

# ## Tutorial - copy-pasteable version

# _Gotta go fast!_

# ```julia

# using Attractors, CairoMakie, OrdinaryDiffEq

# ## Define key input: a `DynamicalSystem`

# function modified_lorenz_rule(u, p, t)

# x, y, z = u; a, b = p

# dx = y - x

# dy = - x*z + b*abs(z)

# dz = x*y - a

# return SVector(dx, dy, dz)

# end

# p0 = [5.0, 0.1] # parameters

# u0 = [-4.0, 5, 0] # state

# diffeq = (alg = Vern9(), abstol = 1e-9, reltol = 1e-9, dt = 0.01) # solver options

# ds = CoupledODEs(modified_lorenz_rule, u0, p0; diffeq)

# ## Define key input: an `AttractorMaper` that finds

# ## attractors of a `DynamicalSystem`

# grid = (

# range(-15.0, 15.0; length = 150), # x

# range(-20.0, 20.0; length = 150), # y

# range(-20.0, 20.0; length = 150), # z

# )

# mapper = AttractorsViaRecurrences(ds, grid;

# consecutive_recurrences = 1000,

# consecutive_lost_steps = 100,

# )

# ## Find attractors and their basins of attraction state space fraction

# ## by randomly sampling initial conditions in state sapce

# sampler, = statespace_sampler(grid)

# algo = AttractorSeedContinueMatch(mapper)

# fs = basins_fractions(mapper, sampler)

# attractors = extract_attractors(mapper)

# ## found two attractors: one is a limit cycle, the other is chaotic

# ## visualize them

# plot_attractors(attractors)

# ## continue all attractors and their basin fractions across any arbigrary

# ## curve in parameter space using a global continuation algorithm

# algo = AttractorSeedContinueMatch(mapper)

# params(θ) = [1 => 5 + 0.5cos(θ), 2 => 0.1 + 0.01sin(θ)]

# pcurve = params.(range(0, 2π; length = 101))

# fractions_cont, attractors_cont = global_continuation(

# algo, pcurve, sampler; samples_per_parameter = 1_000

# )

# ## and visualize the results

# fig = plot_basins_attractors_curves(

# fractions_cont, attractors_cont, A -> minimum(A[:, 1]), pcurve; add_legend = false

# )

# ```

# ## Input: a `DynamicalSystem`

# The key input for most functionality of Attractors.jl is an instance of

# a `DynamicalSystem`. If you don't know how to make

# a `DynamicalSystem`, you need to consult the main tutorial of the

# [DynamicalSystems.jl library](https://juliadynamics.github.io/DynamicalSystemsDocs.jl/dynamicalsystems/stable/tutorial/).

# For this tutorial we will use a modified Lorenz-like system with equations

# ```math

# \begin{align*}

# \dot{x} & = y - x \\

# \dot{y} &= -x*z + b*|z| \\

# \dot{z} &= x*y - a \\

# \end{align*}

# ```

# which we define in code as

using Attractors # part of `DynamicalSystems`, so it re-exports functionality for making them!

using OrdinaryDiffEq # for accessing advanced ODE Solvers

function modified_lorenz_rule(u, p, t)

x, y, z = u; a, b = p

dx = y - x

dy = - x*z + b*abs(z)

dz = x*y - a

return SVector(dx, dy, dz)

end

p0 = [5.0, 0.1] # parameters

u0 = [-4.0, 5, 0] # state

diffeq = (alg = Vern9(), abstol = 1e-9, reltol = 1e-9, dt = 0.01) # solver options

ds = CoupledODEs(modified_lorenz_rule, u0, p0; diffeq)

# ## Finding attractors

# There are two major methods for finding attractors in dynamical systems.

# Explanation of how they work is in their respective docs.

# 1. [`AttractorsViaRecurrences`](@ref).

# 2. [`AttractorsViaFeaturizing`](@ref).

# You can consult [Datseris2023](@cite) for a comparison between the two.

# As far as the user is concerned, both algorithms are part of the same interface,

# and can be used in the same way. The interface is extendable as well,

# and works as follows.

# First, we create an instance of such an "attractor finding algorithm",

# which we call `AttractorMapper`. For example, [`AttractorsViaRecurrences`](@ref)

# requires a tesselated grid of the state space to search for attractors in.

# It also allows the user to tune some meta parameters, but in our example

# they are already tuned for the dynamical system at hand. So we initialize

grid = (

range(-10.0, 10.0; length = 150), # x

range(-15.0, 15.0; length = 150), # y

range(-15.0, 15.0; length = 150), # z

)

mapper = AttractorsViaRecurrences(ds, grid;

consecutive_recurrences = 1000, attractor_locate_steps = 1000,

consecutive_lost_steps = 100,

)

# This `mapper` can map any initial condition to the corresponding

# attractor ID, for example

mapper([-4.0, 5, 0])

# while

mapper([4.0, 2, 0])

# the fact that these two different initial conditions got assigned different IDs means

# that they converged to a different attractor.

# The attractors are stored in the mapper internally, to obtain them we

# use the function

attractors = extract_attractors(mapper)

# In Attractors.jl, all information regarding attractors is always a standard Julia

# `Dict`, which maps attractor IDs (positive integers) to the corresponding quantity.

# Here the quantity are the attractors themselves, represented as `StateSpaceSet`.

# We can visualize them with the convenience plotting function

using CairoMakie

plot_attractors(attractors)

# (this convenience function is a simple loop over scattering the values of

# the `attractors` dictionary)

# In our example system we see that for the chosen parameters there are two coexisting attractors:

# a limit cycle and a chaotic attractor.

# There may be more attractors though! We've only checked two initial conditions,

# so we could have found at most two attractors!

# However, it can get tedious to manually iterate over initial conditions, which is why

# this `mapper` is typically given to higher level functions for finding attractors

# and their basins of attraction. The simplest one

# is [`basins_fractions`](@ref). Using the `mapper`,

# it finds "all" attractors of the dynamical system and reports the state space fraction

# each attractors attracts. The search is probabilistic, so "all" attractors means those

# that at least one initial condition converged to.

# We can provide explicitly initial conditions to [`basins_fraction`](@ref),

# however it is typically simpler to provide it with with a state space sampler instead:

# a function that generates random initial conditions in the region of the

# state space that we are interested in. Here this region coincides with `grid`,

# so we can simply do:

sampler, = statespace_sampler(grid)

sampler() # random i.c.

#

sampler() # another random i.c.

# and finally call

fs = basins_fractions(mapper, sampler)

# The returned `fs` is a dictionary mapping each attractor ID to

# the fraction of the state space the corresponding basin occupies.

# With this we can confirm that there are (likely) only two attractors

# and that both attractors are robust as both have sufficiently large basin fractions.

# To obtain the full basins, which is computationally much more expensive,

# use [`basins_of_attraction`](@ref).

# You can use alternative algorithms in [`basins_fractions`](@ref), see

# the documentation of [`AttractorMapper`](@ref) for possible subtypes.

# [`AttractorMapper`](@ref) defines an extendable interface and can be enriched

# with other methods in the future!

# ## Different Attractor Mapper

# Attractors.jl utilizes composable interfaces throughout its functionality.

# In the above example we used one particular method to find attractors,

# via recurrences in the state space. An alternative is [`AttractorsViaFeaturizing`](@ref).

# For this method, we need to provide a "featurizing" function that given an

# trajectory (which is likely an attractor), it returns some features that will

# hopefully distinguish different attractors in a subsequent grouping step.

# Finding good features is typically a trial-and-error process, but for our system

# we already have some good features:

using Statistics: mean

function featurizer(A, t) # t is the time vector associated with trajectory A

xmin = minimum(A[:, 1])

ycen = mean(A[:, 2])

return SVector(xmin, ycen)

end

# from which we initialize

mapper2 = AttractorsViaFeaturizing(ds, featurizer; Δt = 0.1)

# [`AttractorsViaFeaturizing`](@ref) allows for a third input, which is a

# "grouping configuration", that dictates how features will be grouped into

# attractors, as features are extracted from (randomly) sampled state space trajectories.

# In this tutorial we leave it at its default value, which is clustering using the DBSCAN

# algorithm. The keyword arguments are meta parameters which control how long

# to integrate each initial condition for, and what sampling time, to produce

# a trajectory `A` given to the `featurizer` function. Because one of the two attractors

# is chaotic, we need denser sampling time than the default.

# We can use `mapper2` exactly as `mapper`:

fs2 = basins_fractions(mapper2, sampler)

attractors2 = extract_attractors(mapper2)

plot_attractors(attractors2)

# This mapper also found the attractors, but we should warn you: this mapper is less

# robust than [`AttractorsViaRecurrences`](@ref). One of the reasons for this is

# that [`AttractorsViaFeaturizing`](@ref) is not auto-terminating. For example, if we do not

# have enough transient integration time, the two attractors will get confused into one:

mapper3 = AttractorsViaFeaturizing(ds, featurizer; Ttr = 10, Δt = 0.1)

fs3 = basins_fractions(mapper3, sampler)

attractors3 = extract_attractors(mapper3)

plot_attractors(attractors3)

# On the other hand, the downside of [`AttractorsViaRecurrences`](@ref) is that

# it can take quite a while to converge for chaotic high dimensional systems.

# ## [Global continuation](@id global_cont_tutorial)

# If you have heard before the word "continuation", then you are likely aware of the

# **traditional continuation-based bifurcation analysis (CBA)** offered by many software,

# such as AUTO, MatCont, and in Julia [BifurcationKit.jl](https://github.com/bifurcationkit/BifurcationKit.jl).

# Here we offer a completely different kind of continuation called **global continuation**.

# The traditional continuation analysis continues the curves of individual _fixed

# points (and under some conditions limit cycles)_ across the joint state-parameter space and

# tracks their _local (linear) stability_.

# This approach needs to manually be "re-run" for every individual branch of fixed points

# or limit cycles.

# The global continuation in Attractors.jl finds _all_ attractors, _including chaotic

# or quasiperiodic ones_,

# in the whole of the state space (that it searches in), without manual intervention.

# It then continues all of these attractors concurrently along a parameter axis.

# Additionally, the global continuation tracks a _nonlocal_ stability property which by

# default is the basin fraction.

# This is a fundamental difference. Because all attractors are simultaneously

# tracked across the parameter axis, the user may arbitrarily estimate _any_

# property of the attractors and how it varies as the parameter varies.

# A more detailed comparison between these two approaches can be found in [Datseris2023](@cite).

# See also the [comparison page](@ref bfkit_comparison) in our docs

# that attempts to do the same analysis of our Tutorial with traditional continuation software.

# To perform the continuation is extremely simple. First, we decide what parameter,

# and what range, to continue over:

prange = 4.5:0.01:6

pidx = 1 # index of the parameter

# Then, we may call the [`global_continuation`](@ref) function.

# We have to provide a continuation algorithm, which itself references an [`AttractorMapper`](@ref).

# In this example we will re-use the `mapper` to create the "flagship product" of Attractors.jl

# which is the geenral [`AttractorSeedContinueMatch`](@ref).

# This algorithm uses the `mapper` to find all attractors at each parameter value

# and from the found attractors it continues them along a parameter axis

# using a seeding process (see its documentation string).

# Then, it performs a "matching" step, ensuring a "continuity" of the attractor

# label across the parameter axis. For now we ignore the matching step, leaving it to the

# default value. We'll use the `mapper` we created above and define

ascm = AttractorSeedContinueMatch(mapper)

# and call

fractions_cont, attractors_cont = global_continuation(

ascm, prange, pidx, sampler; samples_per_parameter = 1_000

)

# the output is given as two vectors. Each vector is a dictionary

# mapping attractor IDs to their basin fractions, or their state space sets, respectively.

# Both vectors have the same size as the parameter range.

# For example, the attractors at the 34-th parameter value are:

attractors_cont[34]

# There is a fantastic convenience function for animating

# the attractors evolution, that utilizes things we have

# already defined:

animate_attractors_continuation(

ds, attractors_cont, fractions_cont, prange, pidx;

);

# ```@raw html

# <video width="auto" controls loop>

# <source src="../attracont.mp4" type="video/mp4">

# </video>

# ```

# Hah, how cool is that! The attractors pop in and out of existence like out of nowhere!

# It would be incredibly difficult to find these attractors in traditional continuation software

# where a rough estimate of the period is required! (It would also be too hard due to the presence

# of chaos for most of the parameter values, but that's another issue!)

# Now typically a continuation is visualized in a 2D plot where the x axis is the

# parameter axis. We can do this with the convenience function:

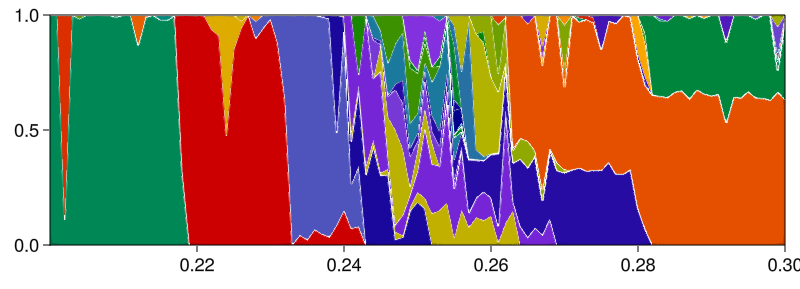

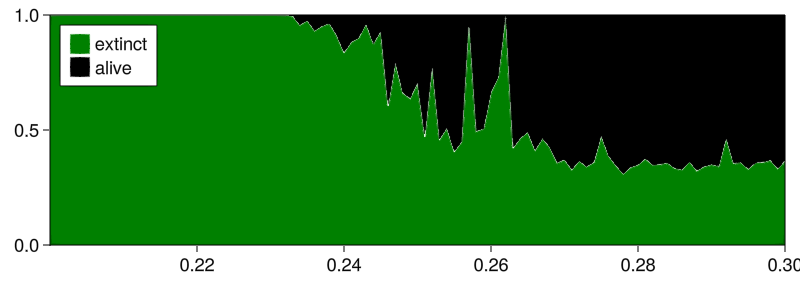

fig = plot_basins_attractors_curves(

fractions_cont, attractors_cont, A -> minimum(A[:, 1]), prange,

)

# In the top panel are the basin fractions, by default plotted as stacked bars.

# Bottom panel is a visualization of the tracked attractors.

# The argument `A -> minimum(A[:, 1])` is simply a function that maps

# an attractor into a real number for plotting.

# ## Different matching procedures

# By default attractors are matched by their distance in state space.

# The default matcher is [`MatchBySSSetDistance`](@ref), and is given implicitly

# as a default 2nd argument when creating [`AttractorSeedContinueMatch`](@ref).

# But like anything else in Attractors.jl, "matchers" also follow a well-defined

# and extendable interface, see [`IDMatchers`](@ref) for that.

# Let's say that the default matching that we chose above isn't desirable.

# For example, one may argue that the attractor that pops up

# at the end of the continuation should have been assigned the same ID

# as attractor 1, because they are both to the left (see the video above).

# In reality one wouldn't really request that, because looking

# the video of attractors above shows that the attractors labelled "1", "2", and "3"

# are all completely different. But we argue here for example that "3" should have been

# the same as "1".

# Thankfully during a global continuation the "matching" step is completely

# separated from the "finding and continuing" step. If we don't like the

# initial matching, we can call [`match_sequentially!`](@ref) with a new

# instance of a matcher, and match again, without having to recompute

# the attractors and their basin fractions.

# For example, using this matcher:

matcher = MatchBySSSetDistance(use_vanished = true)

# will compare a new attractor with the latest instance of attractors

# with a given ID that have ever existed, irrespectively if they exist in the

# current parameter or not. This means, that the attractor "3" would in fact be compared

# with both attractor "2" and "1", even if "1" doesn't exist in the parameter "3"

# started existing at. And because "3" is closer to "1" than to "2", it will get

# matched to attractor "1" and get the same ID.

# Let's see this in action:

attractors_cont2 = deepcopy(attractors_cont)

match_sequentially!(attractors_cont2, matcher)

fig = plot_attractors_curves(

attractors_cont2, A -> minimum(A[:, 1]), prange,

)

# and as we can see, the new attractor at the end of the parameter range got

# assigned the same ID as the original attractor "1".

# For more ways of matching attractors see [`IDMatcher`](@ref).

# %% #src

# ## Enhancing the continuation

# The biggest strength of Attractors.jl is that it is not an isolated software.

# It is part of **DynamicalSystems.jl**. Here, we will use the full power of

# **DynamicalSystems.jl** and enrich the above continuation with various other

# measures of nonlocal stability, in particular Lyapunov exponents and

# the minimal fatal shock. First, let's plot again the continuation

# and label some things or clarity

fig = plot_basins_attractors_curves(

fractions_cont, attractors_cont, A -> minimum(A[:, 1]), prange; add_legend = false

)

ax1 = content(fig[2,1])

ax1.ylabel = "min(A₁)"

fig

# First, let's estimate the maximum Lyapunov exponent (MLE) for all attractors,

# using the `lyapunov` function that comes from the ChaosTools.jl submodule.

using ChaosTools: lyapunov

lis = map(enumerate(prange)) do (i, p) # loop over parameters

set_parameter!(ds, pidx, p) # important! We use the dynamical system!

attractors = attractors_cont[i]

## Return a dictionary mapping attractor IDs to their MLE

Dict(k => lyapunov(ds, 10000.0; u0 = A[1]) for (k, A) in attractors)

end

# The above `map` loop may be intimidating if you are a beginner, but it is

# really just a shorter way to write a `for` loop for our example.

# We iterate over all parameters, and for each we first update the dynamical

# system with the correct parameter, and then extract the MLE

# for each attractor. `map` just means that we don't have to pre-allocate a

# new vector before the loop; it creates it for us.

# We can visualize the LE with the other convenience function [`plot_continuation_curves!`](@ref),

ax2 = Axis(fig[3, 1]; ylabel = "MLE")

plot_continuation_curves!(ax2, lis, prange; add_legend = false)

fig

# This reveals crucial information for tha attractors, whether they are chaotic or not, that we would otherwise obtain only by visualizing the system dynamics at every single parameter.

# The story we can see now is that the dynamics start with a limit cycle (0 Lyapunov exponent), go into bi-stability of chaos and limit cycle, then there is only one limit cycle again, and then a chaotic attractor appears again, for a second bistable regime.

# The last piece of information to add is yet another measure of nonlocal stability: the minimal fatal shock (MFS), which is provided by [`minimal_fatal_shock`](@ref).

# The code to estimate this is similar with the `map` block for the MLE.

# Here however we re-use the created `mapper`, but now we must not forget to reset it inbetween parameter increments:

using LinearAlgebra: norm

search_area = collect(extrema.(grid ./ 2)) # smaller search = faster results

search_algorithm = MFSBlackBoxOptim(max_steps = 1000, guess = ones(3))

mfss = map(enumerate(prange)) do (i, p)

set_parameter!(ds, pidx, p)

reset_mapper!(mapper) # reset so that we don't have to re-initialize

## We need a special clause here: if there is only 1 attractor,

## then there is no MFS. It is undefined. We set it to `NaN`,

## which conveniently, will result to nothing being plotted by Makie.

attractors = attractors_cont[i]

if length(attractors) == 1

return Dict(k => NaN for (k, A) in attractors)

end

## otherwise, compute the actual MFS from the first point of each attractor

Dict(k =>

norm(minimal_fatal_shock(mapper, A[1], search_area, search_algorithm))

for (k, A) in attractors

)

end

# In a real application we wouldn't use the first point of each attractor,

# as the first point is completely random on the attractor (at least, for the

# [`AttractorsViaRecurrences`] mapper we use here).

# We would do this by examining the whole `A` object in the above block

# instead of just using `A[1]`. But this is a tutorial so we don't care!

# Right, so now we can visualize the MFS with the rest of the other quantities:

ax3 = Axis(fig[4, 1]; ylabel = "MFS", xlabel = "parameter")

plot_continuation_curves!(ax3, mfss, prange; add_legend = false)

## make the figure prettier

for ax in (ax1, ax2,); hidexdecorations!(ax; grid = false); end

resize!(fig, 500, 500)

fig

# ## Continuation along arbitrary parameter curves

# One of the many advantages of the global continuation is that we can choose

# what parameters to continue over. We can provide any arbitrary curve

# in parameter space. This is possible because (1) finding and matching attractors

# are two completely orthogonal steps, and (2) it is completely fine for

# attractors to dissapear (and perhaps re-appear) during a global continuation.

#For example, we can probe an elipsoid defined as

params(θ) = [1 => 5 + 0.5cos(θ), 2 => 0.1 + 0.01sin(θ)]

pcurve = params.(range(0, 2π; length = 101))

# here each component maps the parameter index to its value.

# We can just give this `pcurve` to the global continuation,

# using the same mapper and continuation algorithm,

# but adjusting the matching process so that vanished attractors

# are kept in "memory"

matcher = MatchBySSSetDistance(use_vanished = true)

ascm = AttractorSeedContinueMatch(mapper, matcher)

fractions_cont, attractors_cont = global_continuation(

ascm, pcurve, sampler; samples_per_parameter = 1_000

)

# and animate the result

animate_attractors_continuation(

ds, attractors_cont, fractions_cont, pcurve;

savename = "curvecont.mp4"

);

# ```@raw html

# <video width="auto" controls loop>

# <source src="../curvecont.mp4" type="video/mp4">

# </video>

# ```

# ## Conclusion and comparison with traditional local continuation

# We've reached the end of the tutorial! Some aspects we haven't highlighted is

# how most of the infrastructure of Attractors.jl is fully extendable.

# You will see this when reading the documentation strings of key structures

# like [`AttractorMapper`](@ref). All documentation strings are in the [API](@ref) page.

# See the [examples](@ref examples) page for more varied applications.

# And lastly, see the [comparison page](@ref bfkit_comparison) in our docs

# that attempts to do the same analysis of our Tutorial with traditional local continuation and bifurcation analysis software

# showing that (at least for this example) using Attractors.jl is clearly beneficial

# over the alternatives. | Attractors | https://github.com/JuliaDynamics/Attractors.jl.git |

|

[

"MIT"

] | 1.20.0 | 47c6ed345dbabf0de47873514cb6d67369127733 | code | 85 | module AttractorsVisualizations

using Attractors, Makie

include("plotting.jl")

end | Attractors | https://github.com/JuliaDynamics/Attractors.jl.git |

|

[

"MIT"

] | 1.20.0 | 47c6ed345dbabf0de47873514cb6d67369127733 | code | 17612 | ##########################################################################################

# Auto colors/markers

##########################################################################################

using Random: shuffle!, Xoshiro

function colors_from_keys(ukeys)

# Unfortunately, until `to_color` works with `Cycled`,

# we need to explicitly add here some default colors...

COLORS = [

"#7143E0",

"#191E44",

"#0A9A84",

"#AF9327",

"#5F166D",

"#6C768C",

]

if length(ukeys) ≤ length(COLORS)

colors = [COLORS[i] for i in eachindex(ukeys)]

else # keep colorscheme, but add extra random colors

n = length(ukeys) - length(COLORS)

colors = shuffle!(Xoshiro(123), collect(cgrad(COLORS, n+1; categorical = true)))

colors = append!(to_color.(COLORS), colors[1:(end-1)])

end

return Dict(k => colors[i] for (i, k) in enumerate(ukeys))

end

function markers_from_keys(ukeys)

MARKERS = [:circle, :dtriangle, :rect, :star5, :xcross, :diamond,

:hexagon, :cross, :pentagon, :ltriangle, :rtriangle, :hline, :vline, :star4,]

markers = Dict(k => MARKERS[mod1(i, length(MARKERS))] for (i, k) in enumerate(ukeys))

return markers

end

##########################################################################################

# Attractors

##########################################################################################

function Attractors.plot_attractors(a; kw...)

fig = Figure()

ax = Axis(fig[1,1])

plot_attractors!(ax, a; kw...)

return fig

end

function Attractors.plot_attractors!(ax, attractors;

ukeys = sort(collect(keys(attractors))), # internal argument just for other keywords

colors = colors_from_keys(ukeys),

markers = markers_from_keys(ukeys),

labels = Dict(ukeys .=> ukeys),

add_legend = length(ukeys) < 7,

access = SVector(1, 2),

sckwargs = (strokewidth = 0.5, strokecolor = :black,)

)

for k in ukeys

k ∉ keys(attractors) && continue

A = attractors[k]

x, y = columns(A[:, access])

scatter!(ax, x, y;

color = (colors[k], 0.9), markersize = 20,

marker = markers[k],

label = "$(labels[k])",

sckwargs...

)

end

add_legend && axislegend(ax)

return

end

##########################################################################################

# Basins

##########################################################################################

function Attractors.heatmap_basins_attractors(grid, basins::AbstractArray, attractors; kwargs...)

if length(size(basins)) != 2

error("Heatmaps only work in two dimensional basins!")

end

fig = Figure()

ax = Axis(fig[1,1])

heatmap_basins_attractors!(ax, grid, basins, attractors; kwargs...)

return fig

end

function Attractors.heatmap_basins_attractors!(ax, grid, basins, attractors;

ukeys = unique(basins), # internal argument just for other keywords

colors = colors_from_keys(ukeys),

markers = markers_from_keys(ukeys),

labels = Dict(ukeys .=> ukeys),

add_legend = length(ukeys) < 7,

access = SVector(1, 2),

sckwargs = (strokewidth = 1.5, strokecolor = :white,)

)

sort!(ukeys) # necessary because colormap is ordered

# Set up the (categorical) color map and colormap values

cmap = cgrad([colors[k] for k in ukeys], length(ukeys); categorical = true)

# Heatmap with appropriate colormap values. We need to transform

# the basin array to one with values the sequential integers,

# because the colormap itself also has as values the sequential integers

ids = 1:length(ukeys)

replace_dict = Dict(k => i for (i, k) in enumerate(ukeys))

basins_to_plot = replace(basins, replace_dict...)

heatmap!(ax, grid..., basins_to_plot;

colormap = cmap,

colorrange = (ids[1]-0.5, ids[end]+0.5),

)

# Scatter attractors

plot_attractors!(ax, attractors;

ukeys, colors, access, markers,

labels, add_legend, sckwargs

)

return ax

end

##########################################################################################

# Shaded basins

##########################################################################################

function Attractors.shaded_basins_heatmap(grid, basins::AbstractArray, attractors, iterations;

show_attractors = true,

maxit = maximum(iterations),

kwargs...)

if length(size(basins)) != 2

error("Heatmaps only work in two dimensional basins!")

end

fig = Figure()

ax = Axis(fig[1,1]; kwargs...)

shaded_basins_heatmap!(ax, grid, basins, iterations, attractors; maxit, show_attractors)

return fig

end

function Attractors.shaded_basins_heatmap!(ax, grid, basins, iterations, attractors;

ukeys = unique(basins),

show_attractors = true,

maxit = maximum(iterations))

sort!(ukeys) # necessary because colormap is ordered

ids = 1:length(ukeys)

replace_dict = Dict(k => i for (i, k) in enumerate(ukeys))

basins_to_plot = replace(basins.*1., replace_dict...)

access = SVector(1,2)

cmap, colors = custom_colormap_shaded(ukeys)

markers = markers_from_keys(ukeys)

labels = Dict(ukeys .=> ukeys)

add_legend = length(ukeys) < 7

it = findall(iterations .> maxit)

iterations[it] .= maxit

for i in ids

ind = findall(basins_to_plot .== i)

mn = minimum(iterations[ind])

mx = maximum(iterations[ind])

basins_to_plot[ind] .= basins_to_plot[ind] .+ 0.99.*(iterations[ind].-mn)/mx

end

# The colormap is constructed in such a way that the first color maps

# from id to id+0.99, id is an integer describing the current basin.

# Each id has a specific color associated and the gradient goes from

# light color (value id) to dark color (value id+0.99). It produces

# a shading proportional to a value associated to a specific pixel.

heatmap!(ax, grid..., basins_to_plot;

colormap = cmap,

colorrange = (ids[1], ids[end]+1),

)

# Scatter attractors

if show_attractors

for (i, k) ∈ enumerate(ukeys)

k ≤ 0 && continue

A = attractors[k]

x, y = columns(A[:, access])

scatter!(ax, x, y;

color = colors[k], markersize = 20,

marker = markers[k],

strokewidth = 1.5, strokecolor = :white,

label = "$(labels[k])"

)

end

# Add legend using colors only

add_legend && axislegend(ax)

end

return ax

end

function custom_colormap_shaded(ukeys)

# Light and corresponding dark colors for shading of basins of attraction

colors = colors_from_keys(ukeys)

n = length(colors)

# Note that the factor to define light and dark color

# is arbitrary.

LIGHT_COLORS = [darken_color(colors[k],0.3) for k in ukeys]

DARK_COLORS = [darken_color(colors[k],1.7) for k in ukeys]

v_col = Array{typeof(LIGHT_COLORS[1]),1}(undef,2*n)

vals = zeros(2*n)

for k in eachindex(ukeys)

v_col[2*k-1] = LIGHT_COLORS[k]

v_col[2*k] = DARK_COLORS[k]

vals[2*k-1] = k-1

vals[2*k] = k-1+0.9999999999

end

return cgrad(v_col, vals/maximum(vals)), colors

end

"""

darken_color(c, f = 1.2)

Darken given color `c` by a factor `f`.

If `f` is less than 1, the color is lightened instead.

"""

function darken_color(c, f = 1.2)

c = to_color(c)

return RGBAf(clamp.((c.r/f, c.g/f, c.b/f, c.alpha), 0, 1)...)

end

##########################################################################################

# Continuation

##########################################################################################

function Attractors.plot_basins_curves(fractions_cont, args...; kwargs...)

fig = Figure()

ax = Axis(fig[1,1])

ax.xlabel = "parameter"

ax.ylabel = "basins %"

plot_basins_curves!(ax, fractions_cont, args...; kwargs...)

return fig

end

function Attractors.plot_basins_curves!(ax, fractions_cont, prange = 1:length(fractions_cont);

ukeys = unique_keys(fractions_cont), # internal argument

colors = colors_from_keys(ukeys),

labels = Dict(ukeys .=> ukeys),

separatorwidth = 1, separatorcolor = "white",

add_legend = length(ukeys) < 7,

axislegend_kwargs = (position = :lt,),

series_kwargs = NamedTuple(),

markers = markers_from_keys(ukeys),

style = :band,

)

if !(prange isa AbstractVector{<:Real})

error("!(prange <: AbstractVector{<:Real})")

end

bands = fractions_series(fractions_cont, ukeys)

if style == :band

# transform to cumulative sum

for j in 2:length(bands)

bands[j] .+= bands[j-1]

end

for (j, k) in enumerate(ukeys)

if j == 1

l, u = 0, bands[j]

l = fill(0f0, length(u))

else

l, u = bands[j-1], bands[j]

end

band!(ax, prange, l, u;

color = colors[k], label = "$(labels[k])", series_kwargs...

)

if separatorwidth > 0 && j < length(ukeys)

lines!(ax, prange, u; color = separatorcolor, linewidth = separatorwidth)

end

end

ylims!(ax, 0, 1)

elseif style == :lines

for (j, k) in enumerate(ukeys)

scatterlines!(ax, prange, bands[j];

color = colors[k], label = "$(labels[k])", marker = markers[k],

markersize = 5, linewidth = 3, series_kwargs...

)

end

else

error("Incorrect style specification for basins fractions curves")

end

xlims!(ax, minimum(prange), maximum(prange))

add_legend && axislegend(ax; axislegend_kwargs...)

return

end

function fractions_series(fractions_cont, ukeys = unique_keys(fractions_cont))

bands = [zeros(length(fractions_cont)) for _ in ukeys]

for i in eachindex(fractions_cont)

for (j, k) in enumerate(ukeys)

bands[j][i] = get(fractions_cont[i], k, 0)

end

end

return bands

end

function Attractors.plot_attractors_curves(attractors_cont, attractor_to_real, prange = 1:length(attractors_cont); kwargs...)

fig = Figure()

ax = Axis(fig[1,1])

ax.xlabel = "parameter"

ax.ylabel = "attractors"

plot_attractors_curves!(ax, attractors_cont, attractor_to_real, prange; kwargs...)

return fig

end

function Attractors.plot_attractors_curves!(ax, attractors_cont, attractor_to_real, prange = 1:length(attractors_cont);

kwargs...

)

# make the continuation info values and just propagate to the main function

continuation_info = map(attractors_cont) do dict

Dict(k => attractor_to_real(A) for (k, A) in dict)

end

plot_continuation_curves!(ax, continuation_info, prange; kwargs...)

end

function Attractors.plot_continuation_curves!(ax, continuation_info, prange = 1:length(continuation_info);

ukeys = unique_keys(continuation_info), # internal argument

colors = colors_from_keys(ukeys),

labels = Dict(ukeys .=> ukeys),

add_legend = length(ukeys) < 7,

markers = markers_from_keys(ukeys),

axislegend_kwargs = (position = :lt,)

)

for i in eachindex(continuation_info)

info = continuation_info[i]

for (k, val) in info

scatter!(ax, prange[i], val;

color = colors[k], marker = markers[k], label = string(labels[k]),

)

end

end

xlims!(ax, minimum(prange), maximum(prange))

add_legend && axislegend(ax; axislegend_kwargs..., unique = true)

return

end

function Attractors.plot_continuation_curves(args...; kw...)

fig = Figure()

ax = Axis(fig[1,1])

plot_continuation_curves!(ax, args...; kw...)

return fig

end

# Mixed: basins and attractors

function Attractors.plot_basins_attractors_curves(fractions_cont, attractors_cont, a2r::Function, prange = 1:length(attractors_cont);

kwargs...

)

return Attractors.plot_basins_attractors_curves(fractions_cont, attractors_cont, [a2r], prange; kwargs...)

end

# Special case with multiple attractor projections:

function Attractors.plot_basins_attractors_curves(

fractions_cont, attractors_cont,

a2rs::Vector, prange = 1:length(attractors_cont);

ukeys = unique_keys(fractions_cont), # internal argument

colors = colors_from_keys(ukeys),

labels = Dict(ukeys .=> ukeys),

markers = markers_from_keys(ukeys),

kwargs...

)

# generate figure and axes; add labels and stuff

fig = Figure()

axb = Axis(fig[1,1])

A = length(a2rs)

axs = [Axis(fig[1+i, 1]; ylabel = "attractors_$(i)") for i in 1:A]

linkxaxes!(axb, axs...)

if A == 1 # if we have only 1, make it pretier

axs[1].ylabel = "attractors"

end

axs[end].xlabel = "parameter"

axb.ylabel = "basins %"

hidexdecorations!(axb; grid = false)

for i in 1:A-1

hidexdecorations!(axs[i]; grid = false)

end

# plot basins and attractors

plot_basins_curves!(axb, fractions_cont, prange; ukeys, colors, labels, kwargs...)

for (axa, a2r) in zip(axs, a2rs)

plot_attractors_curves!(axa, attractors_cont, a2r, prange;

ukeys, colors, markers, add_legend = false, # coz its true for fractions

)

end

return fig

end

# This function is kept for backwards compatibility only, really.

function Attractors.plot_basins_attractors_curves!(axb, axa, fractions_cont, attractors_cont,

attractor_to_real, prange = 1:length(attractors_cont);

ukeys = unique_keys(fractions_cont), # internal argument

colors = colors_from_keys(ukeys),

labels = Dict(ukeys .=> ukeys),

kwargs...

)

if length(fractions_cont) ≠ length(attractors_cont)

error("fractions and attractors don't have the same amount of entries")

end

plot_basins_curves!(axb, fractions_cont, prange; ukeys, colors, labels, kwargs...)

plot_attractors_curves!(axa, attractors_cont, attractor_to_real, prange;

ukeys, colors, add_legend = false, # coz its true for fractions

)

return

end

##########################################################################################

# Videos

##########################################################################################

function Attractors.animate_attractors_continuation(

ds::DynamicalSystem, attractors_cont, fractions_cont, prange, pidx; kw...)

pcurve = [[pidx => p] for p in prange]

return animate_attractors_continuation(ds, attractors_cont, fractions_cont, pcurve; kw...)

end

function Attractors.animate_attractors_continuation(

ds::DynamicalSystem, attractors_cont, fractions_cont, pcurve;

savename = "attracont.mp4", access = SVector(1, 2),

limits = auto_attractor_lims(attractors_cont, access),

framerate = 4, markersize = 10,

ukeys = unique_keys(attractors_cont),

colors = colors_from_keys(ukeys),

markers = markers_from_keys(ukeys),

Δt = isdiscretetime(ds) ? 1 : 0.05,

T = 100,

figure = NamedTuple(), axis = NamedTuple(), fracaxis = NamedTuple(),

legend = NamedTuple(),

add_legend = length(ukeys) ≤ 6

)

length(access) ≠ 2 && error("Need two indices to select two dimensions of `ds`.")

K = length(ukeys)

fig = Figure(; figure...)

ax = Axis(fig[1,1]; limits, axis...)

fracax = Axis(fig[1,2]; width = 50, limits = (0,1,0,1), ylabel = "fractions",

yaxisposition = :right, fracaxis...

)

hidedecorations!(fracax)

fracax.ylabelvisible = true

# setup attractor axis (note we change colors to be transparent)

att_obs = Dict(k => Observable(Point2f[]) for k in ukeys)

plotf! = isdiscretetime(ds) ? scatter! : scatterlines!

for k in ukeys

plotf!(ax, att_obs[k]; color = (colors[k], 0.75), label = "$k", markersize, marker = markers[k])

end

if add_legend

axislegend(ax; legend...)

end

# setup fractions axis

heights = Observable(fill(0.1, K))

barcolors = [colors[k] for k in ukeys]

barplot!(fracax, fill(0.5, K), heights; width = 1, gap = 0, stack=1:K, color = barcolors)

record(fig, savename, eachindex(pcurve); framerate) do i

p = pcurve[i]

ax.title = "p: $p" # TODO: Add compat printing here.

attractors = attractors_cont[i]

fractions = fractions_cont[i]

set_parameters!(ds, p)

heights[] = [get(fractions, k, 0) for k in ukeys]

for (k, att) in attractors

tr, tvec = trajectory(ds, T, first(vec(att)); Δt)

att_obs[k][] = vec(tr[:, access])

notify(att_obs[k])

end

# also ensure that attractors that don't exist are cleared

for k in setdiff(ukeys, collect(keys(attractors)))

att_obs[k][] = Point2f[]; notify(att_obs[k])

end

end

return fig

end

function auto_attractor_lims(attractors_cont, access)

xmin = ymin = Inf

xmax = ymax = -Inf

for atts in attractors_cont

for (k, A) in atts

P = A[:, access]

mini, maxi = minmaxima(P)

xmin > mini[1] && (xmin = mini[1])

ymin > mini[2] && (ymin = mini[2])

xmax < maxi[1] && (xmax = maxi[1])

ymax < maxi[2] && (ymax = maxi[2])

end

end

dx = xmax - xmin

dy = ymax - ymin

return (xmin - 0.1dx, xmax + 0.1dx, ymin - 0.1dy, ymax + 0.1dy)

end

| Attractors | https://github.com/JuliaDynamics/Attractors.jl.git |

|

[

"MIT"

] | 1.20.0 | 47c6ed345dbabf0de47873514cb6d67369127733 | code | 737 | module Attractors

# Use the README as the module docs

@doc let

path = joinpath(dirname(@__DIR__), "README.md")

include_dependency(path)

read(path, String)

end Attractors

using Reexport

@reexport using StateSpaceSets

@reexport using DynamicalSystemsBase

# main files that import other files

include("dict_utils.jl")

include("mapping/attractor_mapping.jl")

include("basins/basins.jl")

include("continuation/basins_fractions_continuation_api.jl")

include("matching/matching_interface.jl")

include("boundaries/edgetracking.jl")

include("deprecated.jl")

include("tipping/tipping.jl")

# Visualization (export names extended in the extension package)

include("plotting.jl")

# minimal fatal shock algo

end # module Attractors

| Attractors | https://github.com/JuliaDynamics/Attractors.jl.git |

|

[

"MIT"

] | 1.20.0 | 47c6ed345dbabf0de47873514cb6d67369127733 | code | 3211 | # This function is deprecated, however it will NEVER be removed.

# In the future, this call signature will remain here but its source code

# will simply be replaced by an error message.

function basins_of_attraction(grid::Tuple, ds::DynamicalSystem; kwargs...)

@warn("""

The function `basins_of_attraction(grid::Tuple, ds::DynamicalSystem; ...)` has

been replaced by the more generic

`basins_of_attraction(mapper::AttractorMapper, grid::Tuple)` which works for

any instance of `AttractorMapper`. The `AttractorMapper` itself requires as

input an kind of dynamical system the user wants, like a `StroboscopicMap` or

`CoupledODEs` or `DeterministicIteratedMap` etc.

For now, we do the following for you:

```

mapper = AttractorsViaRecurrences(ds, grid; sparse = false)

basins_of_attraction(mapper)

```

and we are completely ignoring any keywords you provided (which could be about the

differential equation solve, or the metaparameters of the recurrences algorithm).

We strongly recommend that you study the documentation of Attractors.jl

and update your code. The only reason we provide this backwards compatibility

is because our first paper "Effortless estimation of basins of attraction"

uses this function signature in the script in the paper (which we can't change anymore).

""")

mapper = AttractorsViaRecurrences(ds, grid; sparse = false)

return basins_of_attraction(mapper)

end

function continuation(args...; kwargs...)

@warn("""

The function `Attractors.continuation` is deprecated in favor of

`global_continuation`, in preparation for future developments where both

local/linearized and global continuations will be possible within DynamicalSystems.jl.

""")

return global_continuation(args...; kwargs...)

end

export continuation

@deprecate AttractorsBasinsContinuation GlobalContinuationAlgorithm

@deprecate RecurrencesSeededContinuation RecurrencesFindAndMatch

@deprecate GroupAcrossParameterContinuation FeaturizeGroupAcrossParameter

@deprecate match_attractor_ids! match_statespacesets!

@deprecate GroupAcrossParameter FeaturizeGroupAcrossParameter

@deprecate rematch! match_continuation!

@deprecate replacement_map matching_map

function match_statespacesets!(as::Vector{<:Dict}; kwargs...)

error("This function was incorrect. Use `match_sequentially!` instead.")

end

export match_continuation!, match_statespacesets!, match_basins_ids!

function match_continuation!(args...; kwargs...)

@warn "match_continuation! has been deprecated for `match_sequentially!`,

which has a more generic name that better reflects its capabilities."

return match_sequentially!(args...; kwargs...)

end

function match_statespacesets!(a_afte, a_befo; kwargs...)

@warn "match_statespacesets! is deprecated. Use `matching_map!` with `MatchBySSSetDistance`."

return matching_map!(a_afte, a_befo, MatchBySSSetDistance(kwargs...))

end

function match_basins_ids!(b₊::AbstractArray, b₋; threshold = Inf)

@warn "`match_basins_ids!` is deprecated, use `matching_map` with `MatchByBasinOverlap`."

matcher = MatchByBasinOverlap(threshold)

return matching_map!(b₊, b₋, matcher)

end | Attractors | https://github.com/JuliaDynamics/Attractors.jl.git |

|

[

"MIT"

] | 1.20.0 | 47c6ed345dbabf0de47873514cb6d67369127733 | code | 3506 | export unique_keys, swap_dict_keys!, next_free_id

# Utility functions for managing dictionary keys that are useful

# in continuation and attractor matching business

# Thanks a lot to Valentin (@Seelengrab) for generous help in the key swapping code.

# Swapping keys and ensuring that everything works "as expected" is,

# surprising, one of the hardest things to code in Attractors.jl.

"""

swap_dict_keys!(d::Dict, matching_map::Dict)

Swap the keys of a dictionary `d` given a `matching_map`

which maps old keys to new keys. Also ensure that a swap can happen at most once,

e.g., if input `d` has a key `4`, and `rmap = Dict(4 => 3, 3 => 2)`,

then the key `4` will be transformed to `3` and not further to `2`.

"""

function swap_dict_keys!(fs::Dict, _rmap::AbstractDict)

isempty(_rmap) && return

# Transform rmap so it is sorted in decreasing order,

# so that double swapping can't happen

rmap = sort!(collect(_rmap); by = x -> x[2])

cache = Tuple{keytype(fs), valtype(fs)}[]

for (oldkey, newkey) in rmap

haskey(fs, oldkey) || continue

oldkey == newkey && continue

tmp = pop!(fs, oldkey)

if !haskey(fs, newkey)

fs[newkey] = tmp

else

push!(cache, (newkey, tmp))

end

end

for (k, v) in cache

fs[k] = v

end

return fs

end

"""

overwrite_dict!(old::Dict, new::Dict)

In-place overwrite the `old` dictionary for the key-value pairs of the `new`.

"""

function overwrite_dict!(old::Dict, new::Dict)

empty!(old)

for (k, v) in new

old[k] = v

end

end

"""

additive_dict_merge!(d1::Dict, d2::Dict)

Merge keys and values of `d2` into `d1` additively: the values of the same keys

are added together in `d1` and new keys are given to `d1` as-is.

"""

function additive_dict_merge!(d1::Dict, d2::Dict)

z = zero(valtype(d1))

for (k, v) in d2

d1[k] = get(d1, k, z) + v

end

return d1

end

"""

retract_keys_to_consecutive(v::Vector{<:Dict}) → rmap

Given a vector of dictionaries with various positive integer keys, retract all keys so that

consecutive integers are used. So if the dictionaries have overall keys 2, 3, 42,

then they will transformed to 1, 2, 3.

Return the replacement map used to replace keys in all dictionaries with

[`swap_dict_keys!`](@ref).

As this function is used in attractor matching in [`global_continuation`](@ref)

it skips the special key `-1`.

"""

function retract_keys_to_consecutive(v::Vector{<:Dict})

ukeys = unique_keys(v)

ukeys = setdiff(ukeys, [-1]) # skip key -1 if it exists

rmap = Dict(k => i for (i, k) in enumerate(ukeys) if i != k)

return rmap

end

"""

unique_keys(v::Iterator{<:AbstractDict})

Given a vector of dictionaries, return a sorted vector of the unique keys

that are present across all dictionaries.

"""

function unique_keys(v)

unique_keys = Set(keytype(first(v))[])

for d in v

for k in keys(d)

push!(unique_keys, k)

end

end

return sort!(collect(unique_keys))

end

"""

next_free_id(new::Dict, old::Dict)

Return the minimum key of the "new" dictionary

that doesn't exist in the "old" dictionary.

"""

function next_free_id(a₊::AbstractDict, a₋::AbstractDict)

s = setdiff(keys(a₊), keys(a₋))

nextid = isempty(s) ? maximum(keys(a₋)) + 1 : minimum(s)

return nextid

end

function next_free_id(keys₊, keys₋)

s = setdiff(keys₊, keys₋)

nextid = isempty(s) ? maximum(keys₋) + 1 : minimum(s)

return nextid

end | Attractors | https://github.com/JuliaDynamics/Attractors.jl.git |

|

[

"MIT"

] | 1.20.0 | 47c6ed345dbabf0de47873514cb6d67369127733 | code | 7119 | ##########################################################################################

# Attractors

##########################################################################################

"""

plot_attractors(attractors::Dict{Int, StateSpaceSet}; kwargs...)

Plot the attractors as a scatter plot.

## Keyword arguments

- All the [common plotting keywords](@ref common_plot_kwargs).

Particularly important is the `access` keyword.

- `sckwargs = (strokewidth = 0.5, strokecolor = :black,)`: additional keywords

propagated to the `Makie.scatter` function that plots the attractors.

"""

function plot_attractors end

function plot_attractors! end

export plot_attractors, plot_attractors!

##########################################################################################

# Basins

##########################################################################################

"""

heatmap_basins_attractors(grid, basins, attractors; kwargs...)

Plot a heatmap of found (2-dimensional) `basins` of attraction and corresponding

`attractors`, i.e., the output of [`basins_of_attraction`](@ref).

## Keyword arguments

- All the [common plotting keywords](@ref common_plot_kwargs) and

`sckwargs` as in [`plot_attractors`](@ref).

"""

function heatmap_basins_attractors end

function heatmap_basins_attractors! end

export heatmap_basins_attractors, heatmap_basins_attractors!

"""

shaded_basins_heatmap(grid, basins, attractors, iterations; kwargs...)

Plot a heatmap of found (2-dimensional) `basins` of attraction and corresponding

`attractors`. A matrix `iterations` with the same size of `basins` must be provided

to shade the color according to the value of this matrix. A small value corresponds

to a light color and a large value to a darker tone. This is useful to represent

the number of iterations taken for each initial condition to converge. See also

[`convergence_time`](@ref) to store this iteration number.

## Keyword arguments

- `show_attractors = true`: shows the attractor on plot

- `maxit = maximum(iterations)`: clip the values of `iterations` to

the value `maxit`. Useful when there are some very long iterations and keep the

range constrained to a given interval.

- All the [common plotting keywords](@ref common_plot_kwargs).

"""

function shaded_basins_heatmap end

function shaded_basins_heatmap! end

export shaded_basins_heatmap, shaded_basins_heatmap!

##########################################################################################

# Continuation

##########################################################################################

"""

animate_attractors_continuation(

ds::DynamicalSystem, attractors_cont, fractions_cont, pcurve;

kwargs...

)

Animate how the found system attractors and their corresponding basin fractions

change as the system parameter is increased. This function combines the input

and output of the [`global_continuation`](@ref) function into a video output.

The input dynamical system `ds` is used to evolve initial conditions sampled from the

found attractors, so that the attractors are better visualized.

`attractors_cont, fractions_cont` are the output of [`global_continuation`](@ref)

while `ds, pcurve` are the input to [`global_continuation`](@ref).

## Keyword arguments

- `savename = "attracont.mp4"`: name of video output file.

- `framerate = 4`: framerate of video output.

- `Δt, T`: propagated to `trajectory` for evolving an initial condition sampled

from an attractor.

- Also all [common plotting keywords](@ref common_plot_kwargs).

- `figure, axis, fracaxis, legend`: named tuples propagated as keyword arguments to the

creation of the `Figure`, the `Axis`, the "bar-like" axis containing the fractions,

and the `axislegend` that adds the legend (if `add_legend = true`).

- `add_legend = true`: whether to display the axis legend.

"""

function animate_attractors_continuation end

export animate_attractors_continuation

"""

plot_basins_curves(fractions_cont [, prange]; kw...)

Plot the fractions of basins of attraction versus a parameter range/curve,

i.e., visualize the output of [`global_continuation`](@ref).

See also [`plot_basins_attractors_curves`](@ref) and

[`plot_continuation_curves`](@ref).

## Keyword arguments

- `style = :band`: how to visualize the basin fractions. Choices are

`:band` for a band plot with cumulative sum = 1 or `:lines` for a lines

plot of each basin fraction

- `separatorwidth = 1, separatorcolor = "white"`: adds a line separating the fractions

if the style is `:band`

- `axislegend_kwargs = (position = :lt,)`: propagated to `axislegend` if a legend is added

- `series_kwargs = NamedTuple()`: propagated to the band or scatterline plot

- Also all [common plotting keywords](@ref common_plot_kwargs).

"""

function plot_basins_curves end

function plot_basins_curves! end

export plot_basins_curves, plot_basins_curves!

"""

plot_attractors_curves(attractors_cont, attractor_to_real [, prange]; kw...)

Same as in [`plot_basins_curves`](@ref) but visualize the attractor dependence on

the parameter(s) instead of their basin fraction.

The function `attractor_to_real` takes as input a `StateSpaceSet` (attractor)

and returns a real number so that it can be plotted versus the parameter axis.

See also [`plot_basins_attractors_curves`](@ref).

Same keywords as [`plot_basins_curves`](@ref common_plot_kwargs).

See also [`plot_continuation_curves`](@ref).

"""

function plot_attractors_curves end

function plot_attractors_curves! end

export plot_attractors_curves, plot_attractors_curves!

"""

plot_continuation_curves(continuation_info [, prange]; kwargs...)

Same as in [`plot_basins_curves`](@ref) but visualize any arbitrary quantity characterizing

the continuation. Hence, the `continuation_info` is of exactly the same format as

`fractions_cont`: a vector of dictionaries, each dictionary mapping attractor IDs to real numbers.

`continuation_info` is meant to accompany `attractor_info` in [`plot_attractors_curves`](@ref).

To produce `continuation_info` from `attractor_info` you can do something like:

```julia

continuation_info = map(attractors_cont) do attractors

Dict(k => f(A) for (k, A) in attractors)

end

```

with `f` your function of interest that returns a real number.

"""

function plot_continuation_curves end

function plot_continuation_curves! end

export plot_continuation_curves, plot_continuation_curves!

"""

plot_basins_attractors_curves(

fractions_cont, attractors_cont, a2rs [, prange]

kwargs...

)

Convenience combination of [`plot_basins_curves`](@ref) and [`plot_attractors_curves`](@ref)

in a multi-panel plot that shares legend, colors, markers, etc.

This function allows `a2rs` to be a `Vector` of functions, each mapping

attractors into real numbers. Below the basins fractions plot, one additional

panel is created for each entry in `a2rs`.

`a2rs` can also be a single function, in which case only one panel is made.

"""

function plot_basins_attractors_curves end

function plot_basins_attractors_curves! end

export plot_basins_attractors_curves, plot_basins_attractors_curves!

| Attractors | https://github.com/JuliaDynamics/Attractors.jl.git |

|

[

"MIT"

] | 1.20.0 | 47c6ed345dbabf0de47873514cb6d67369127733 | code | 90 | include("basins_utilities.jl")

include("fractality_of_basins.jl")

include("wada_test.jl")

| Attractors | https://github.com/JuliaDynamics/Attractors.jl.git |

|

[

"MIT"

] | 1.20.0 | 47c6ed345dbabf0de47873514cb6d67369127733 | code | 5649 | # It works for all mappers that define a `basins_fractions` method.

"""

basins_of_attraction(mapper::AttractorMapper, grid::Tuple) → basins, attractors

Compute the full basins of attraction as identified by the given `mapper`,

which includes a reference to a [`DynamicalSystem`](@ref) and return them

along with (perhaps approximated) found attractors.

`grid` is a tuple of ranges defining the grid of initial conditions that partition

the state space into boxes with size the step size of each range.

For example, `grid = (xg, yg)` where `xg = yg = range(-5, 5; length = 100)`.

The grid has to be the same dimensionality as the state space expected by the

integrator/system used in `mapper`. E.g., a [`ProjectedDynamicalSystem`](@ref)

could be used for lower dimensional projections, etc. A special case here is

a [`PoincareMap`](@ref) with `plane` being `Tuple{Int, <: Real}`. In this special

scenario the grid can be one dimension smaller than the state space, in which case

the partitioning happens directly on the hyperplane the Poincaré map operates on.

`basins_of_attraction` function is a convenience 5-lines-of-code wrapper which uses the

`labels` returned by [`basins_fractions`](@ref) and simply assigns them to a full array

corresponding to the state space partitioning indicated by `grid`.

See also [`convergence_and_basins_of_attraction`](@ref).

"""

function basins_of_attraction(mapper::AttractorMapper, grid::Tuple; kwargs...)

basins = zeros(Int32, map(length, grid))

I = CartesianIndices(basins)

A = StateSpaceSet([generate_ic_on_grid(grid, i) for i in vec(I)])

fs, labels = basins_fractions(mapper, A; kwargs...)

attractors = extract_attractors(mapper)

vec(basins) .= vec(labels)

return basins, attractors

end

# Type-stable generation of an initial condition given a grid array index

@generated function generate_ic_on_grid(grid::NTuple{B, T}, ind) where {B, T}

gens = [:(grid[$k][ind[$k]]) for k=1:B]

quote

Base.@_inline_meta

@inbounds return SVector{$B, Float64}($(gens...))

end

end

"""

basins_fractions(basins::AbstractArray [,ids]) → fs::Dict

Calculate the state space fraction of the basins of attraction encoded in `basins`.

The elements of `basins` are integers, enumerating the attractor that the entry of

`basins` converges to (i.e., like the output of [`basins_of_attraction`](@ref)).

Return a dictionary that maps attractor IDs to their relative fractions.

Optionally you may give a vector of `ids` to calculate the fractions of only

the chosen ids (by default `ids = unique(basins)`).

In [Menck2013](@cite) the authors use these fractions to quantify the stability of a basin of

attraction, and specifically how it changes when a parameter is changed.

For this, see [`global_continuation`](@ref).

"""

function basins_fractions(basins::AbstractArray, ids = unique(basins))

fs = Dict{eltype(basins), Float64}()

N = length(basins)

for ξ in ids

B = count(isequal(ξ), basins)

fs[ξ] = B/N

end

return fs

end

"""

convergence_and_basins_of_attraction(mapper::AttractorMapper, grid)

An extension of [`basins_of_attraction`](@ref).

Return `basins, attractors, convergence`, with `basins, attractors` as in

`basins_of_attraction`, and `convergence` being an array with same shape

as `basins`. It contains the time each initial condition took

to converge to its attractor.

It is useful to give to [`shaded_basins_heatmap`](@ref).

See also [`convergence_time`](@ref).

# Keyword arguments

- `show_progress = true`: show progress bar.

"""

function convergence_and_basins_of_attraction(mapper::AttractorMapper, grid; show_progress = true)

if length(grid) != dimension(referenced_dynamical_system(mapper))

@error "The mapper and the grid must have the same dimension"

end

basins = zeros(length.(grid))

iterations = zeros(Int, length.(grid))

I = CartesianIndices(basins)

progress = ProgressMeter.Progress(

length(basins); desc = "Basins and convergence: ", dt = 1.0

)

for (k, ind) in enumerate(I)

show_progress && ProgressMeter.update!(progress, k)

u0 = Attractors.generate_ic_on_grid(grid, ind)

basins[ind] = mapper(u0)

iterations[ind] = convergence_time(mapper)

end

attractors = extract_attractors(mapper)

return basins, attractors, iterations

end

"""

convergence_and_basins_fractions(mapper::AttractorMapper, ics::StateSpaceSet)

An extension of [`basins_fractions`](@ref).

Return `fs, labels, convergence`. The first two are as in `basins_fractions`,

and `convergence` is a vector containing the time each initial condition took

to converge to its attractor.

Only usable with mappers that support `id = mapper(u0)`.

See also [`convergence_time`](@ref).

# Keyword arguments

- `show_progress = true`: show progress bar.

"""

function convergence_and_basins_fractions(mapper::AttractorMapper, ics::AbstractStateSpaceSet;

show_progress = true,

)

N = size(ics, 1)

progress = ProgressMeter.Progress(N;

desc="Mapping initial conditions to attractors:", enabled = show_progress

)

fs = Dict{Int, Int}()

labels = Vector{Int}(undef, N)

iterations = Vector{typeof(current_time(mapper.ds))}(undef, N)

for i ∈ 1:N

ic = _get_ic(ics, i)

label = mapper(ic; show_progress)

fs[label] = get(fs, label, 0) + 1

labels[i] = label

iterations[i] = convergence_time(mapper)

show_progress && ProgressMeter.next!(progress)

end

# Transform count into fraction

ffs = Dict(k => v/N for (k, v) in fs)

return ffs, labels, iterations

end

| Attractors | https://github.com/JuliaDynamics/Attractors.jl.git |

|

[

"MIT"

] | 1.20.0 | 47c6ed345dbabf0de47873514cb6d67369127733 | code | 10882 | export uncertainty_exponent, basins_fractal_dimension, basins_fractal_test, basin_entropy

"""

basin_entropy(basins::Array{Integer}, ε = size(basins, 1)÷10) -> Sb, Sbb

Return the basin entropy [Daza2016](@cite) `Sb` and basin boundary entropy `Sbb`

of the given `basins` of attraction by considering `ε`-sized boxes along each dimension.

## Description

First, the n-dimensional input `basins`

is divided regularly into n-dimensional boxes of side `ε`.

If `ε` is an integer, the same size is used for all dimensions, otherwise `ε` can be

a tuple with the same size as the dimensions of `basins`.

The size of the basins has to be divisible by `ε`.

Assuming that there are ``N`` `ε`-boxes that cover the `basins`, the basin entropy is estimated

as [Daza2016](@cite)

```math

S_b = \\tfrac{1}{N}\\sum_{i=1}^{N}\\sum_{j=1}^{m_i}-p_{ij}\\log(p_{ij})

```

where ``m_i`` is the number of unique IDs (integers of `basins`) in box ``i``

and ``p_{ij}`` is the relative frequency (probability) to obtain ID ``j``

in the ``i`` box (simply the count of IDs ``j`` divided by the total in the box).

`Sbb` is the boundary basin entropy.

This follows the same definition as ``S_b``, but now averaged over only

only boxes that contains at least two

different basins, that is, for the boxes on the boundaries.

The basin entropy is a measure of the uncertainty on the initial conditions of the basins.

It is maximum at the value `log(n_att)` being `n_att` the number of unique IDs in `basins`. In

this case the boundary is intermingled: for a given initial condition we can find

another initial condition that lead to another basin arbitrarily close. It provides also

a simple criterion for fractality: if the boundary basin entropy `Sbb` is above `log(2)`

then we have a fractal boundary. It doesn't mean that basins with values below cannot

have a fractal boundary, for a more precise test see [`basins_fractal_test`](@ref).

An important feature of the basin entropy is that it allows

comparisons between different basins using the same box size `ε`.

"""

function basin_entropy(basins::AbstractArray{<:Integer, D}, ε::Integer = size(basins, 1)÷10) where {D}

es = ntuple(i -> ε, Val(D))

return basin_entropy(basins, es)

end

function basin_entropy(basins::AbstractArray{<:Integer, D}, es::NTuple{D, <: Integer}) where {D}

if size(basins) .% es ≠ ntuple(i -> 0, D)

throw(ArgumentError("The basins are not fully divisible by the sizes `ε`"))

end

Sb = 0.0; Nb = 0

εranges = map((d, ε) -> 1:ε:d, size(basins), es)

box_iterator = Iterators.product(εranges...)

for box_start in box_iterator

box_ranges = map((d, ε) -> d:(d+ε-1), box_start, es)

box_values = view(basins, box_ranges...)

uvals = unique(box_values)

if length(uvals) > 1

Nb += 1

# we only need to estimate entropy for boxes with more than 1 val,

# because in other cases the entropy is zero

Sb = Sb + _box_entropy(box_values, uvals)

end

end

return Sb/length(box_iterator), Sb/Nb

end

function _box_entropy(box_values, unique_vals = unique(box_values))

h = 0.0

for v in unique_vals

p = count(x -> (x == v), box_values)/length(box_values)

h += -p*log(p)

end

return h

end

"""

basins_fractal_test(basins; ε = 20, Ntotal = 1000) -> test_res, Sbb

Perform an automated test to decide if the boundary of the basins has fractal structures

based on the method of Puy et al. [Puy2021](@cite).

Return `test_res` (`:fractal` or `:smooth`) and the mean basin boundary entropy.

## Keyword arguments

* `ε = 20`: size of the box to compute the basin boundary entropy.

* `Ntotal = 1000`: number of balls to test in the boundary for the computation of `Sbb`

## Description

The test "looks" at the basins with a magnifier of size `ε` at random.

If what we see in the magnifier looks like a smooth boundary (onn average) we decide that

the boundary is smooth. If it is not smooth we can say that at the scale `ε` we have

structures, i.e., it is fractal.

In practice the algorithm computes the boundary basin entropy `Sbb` [`basin_entropy`](@ref)

for `Ntotal`

random boxes of radius `ε`. If the computed value is equal to theoretical value of a smooth

boundary

(taking into account statistical errors and biases) then we decide that we have a smooth

boundary. Notice that the response `test_res` may depend on the chosen ball radius `ε`.

For larger size,

we may observe structures for smooth boundary and we obtain a *different* answer.

The output `test_res` is a symbol describing the nature of the basin and the output `Sbb` is

the estimated value of the boundary basin entropy with the sampling method.

"""

function basins_fractal_test(basins; ε = 20, Ntotal = 1000)

dims = size(basins)

# Sanity check.

if minimum(dims)/ε < 50

@warn "Maybe the size of the grid is not fine enough."

end

if Ntotal < 100

error("Ntotal must be larger than 100 to gather enough statistics.")

end

v_pts = zeros(Float64, length(dims), prod(dims))

I = CartesianIndices(basins)

for (k,coord) in enumerate(I)

v_pts[:, k] = [Tuple(coord)...]

end

tree = searchstructure(KDTree, v_pts, Euclidean())

# Now get the values in the boxes.

Nb = 1

N_stat = zeros(Ntotal)

while Nb < Ntotal

p = [rand()*(sz-ε)+ε for sz in dims]

idxs = isearch(tree, p, WithinRange(ε))

box_values = basins[idxs]

bx_ent = _box_entropy(box_values)

if bx_ent > 0

Nb = Nb + 1

N_stat[Nb] = bx_ent

end

end

Ŝbb = mean(N_stat)

σ_sbb = std(N_stat)/sqrt(Nb)

# Table of boundary basin entropy of a smooth boundary for dimension 1 to 5:

Sbb_tab = [0.499999, 0.4395093, 0.39609176, 0.36319428, 0.33722572]

if length(dims) ≤ 5

Sbb_s = Sbb_tab[length(dims)]

else

Sbb_s = 0.898*length(dims)^-0.4995

end

# Systematic error approximation for the disk of radius ε

δub = 0.224*ε^-1.006

tst_res = :smooth

if Ŝbb < (Sbb_s - σ_sbb) || Ŝbb > (σ_sbb + Sbb_s + δub)

# println("Fractal boundary for size of box ε=", ε)

tst_res = :fractal

else

# println("Smooth boundary for size of box ε=", ε)

tst_res = :smooth

end

return tst_res, Ŝbb

end

# as suggested in https://github.com/JuliaStats/StatsBase.jl/issues/398#issuecomment-417875619

linreg(x, y) = hcat(fill!(similar(x), 1), x) \ y

"""

basins_fractal_dimension(basins; kwargs...) -> V_ε, N_ε, d

Estimate the fractal dimension `d` of the boundary between basins of attraction using

a box-counting algorithm for the boxes that contain at least two different basin IDs.

## Keyword arguments

* `range_ε = 2:maximum(size(basins))÷20` is the range of sizes of the box to

test (in pixels).

## Description

The output `N_ε` is a vector with the number of the balls of radius `ε` (in pixels)

that contain at least two initial conditions that lead to different attractors. `V_ε`

is a vector with the corresponding size of the balls. The output `d` is the estimation

of the box-counting dimension of the boundary by fitting a line in the `log.(N_ε)`

vs `log.(1/V_ε)` curve. However it is recommended to analyze the curve directly

for more accuracy.

It is the implementation of the popular algorithm of the estimation of the box-counting

dimension. The algorithm search for a covering the boundary with `N_ε` boxes of size

`ε` in pixels.

"""

function basins_fractal_dimension(basins::AbstractArray; range_ε = 3:maximum(size(basins))÷20)

dims = size(basins)

num_step = length(range_ε)

N_u = zeros(Int, num_step) # number of uncertain box

N = zeros(Int, num_step) # number of boxes

V_ε = zeros(1, num_step) # resolution

# Naive box counting estimator

for (k,eps) in enumerate(range_ε)

Nb, Nu = 0, 0

# get indices of boxes

bx_tuple = ntuple(i -> range(1, dims[i] - rem(dims[i],eps), step = eps), length(dims))

box_indices = CartesianIndices(bx_tuple)

for box in box_indices

# compute the range of indices for the current box

ind = CartesianIndices(ntuple(i -> range(box[i], box[i]+eps-1, step = 1), length(dims)))

c = basins[ind]

if length(unique(c))>1

Nu = Nu + 1

end

Nb += 1

end

N_u[k] = Nu

N[k] = Nb

V_ε[k] = eps

end

N_ε = N_u

# remove zeros in case there are any:

ind = N_ε .> 0.0

N_ε = N_ε[ind]

V_ε = V_ε[ind]

# get exponent via liner regression on `f_ε ~ ε^α`

b, d = linreg(vec(-log10.(V_ε)), vec(log10.(N_ε)))

return V_ε, N_ε, d

end

"""

uncertainty_exponent(basins; kwargs...) -> ε, N_ε, α

Estimate the uncertainty exponent[Grebogi1983](@cite) of the basins of attraction. This exponent

is related to the final state sensitivity of the trajectories in the phase space.

An exponent close to `1` means basins with smooth boundaries whereas an exponent close

to `0` represent completely fractalized basins, also called riddled basins.

The output `N_ε` is a vector with the number of the balls of radius `ε` (in pixels)

that contain at least two initial conditions that lead to different attractors.

The output `α` is the estimation of the uncertainty exponent using the box-counting

dimension of the boundary by fitting a line in the `log.(N_ε)` vs `log.(1/ε)` curve.

However it is recommended to analyze the curve directly for more accuracy.

## Keyword arguments

* `range_ε = 2:maximum(size(basins))÷20` is the range of sizes of the ball to

test (in pixels).

## Description

A phase space with a fractal boundary may cause a uncertainty on the final state of the

dynamical system for a given initial condition. A measure of this final state sensitivity

is the uncertainty exponent. The algorithm probes the basin of attraction with balls

of size `ε` at random. If there are a least two initial conditions that lead to different

attractors, a ball is tagged "uncertain". `f_ε` is the fraction of "uncertain balls" to the

total number of tries in the basin. In analogy to the fractal dimension, there is a scaling

law between, `f_ε ~ ε^α`. The number that characterizes this scaling is called the

uncertainty exponent `α`.

Notice that the uncertainty exponent and the box counting dimension of the boundary are

related. We have `Δ₀ = D - α` where `Δ₀` is the box counting dimension computed with

[`basins_fractal_dimension`](@ref) and `D` is the dimension of the phase space.

The algorithm first estimates the box counting dimension of the boundary and

returns the uncertainty exponent.

"""

function uncertainty_exponent(basins::AbstractArray; range_ε = 2:maximum(size(basins))÷20)

V_ε, N_ε, d = basins_fractal_dimension(basins; range_ε)

return V_ε, N_ε, length(size(basins)) - d

end

| Attractors | https://github.com/JuliaDynamics/Attractors.jl.git |

|

[

"MIT"