
# Problem Simulation Tutorial

```

import pyblp

import numpy as np

import pandas as pd

pyblp.options.digits = 2

pyblp.options.verbose = False

pyblp.__version__

```

Before configuring and solving a problem with real data, it may be a good idea to perform Monte Carlo analysis on simulated data to verify that it is possible to accurately estimate model parameters. For example, before configuring and solving the example problems in the prior tutorials, it may have been a good idea to simulate data according to the assumed models of supply and demand. During such Monte Carlo analysis, the data would only be used to determine sample sizes and perhaps to choose reasonable true parameters.

Simulations are configured with the :class:`Simulation` class, which requires many of the same inputs as :class:`Problem`. The two main differences are:

1. Variables in formulations that cannot be loaded from `product_data` or `agent_data` will be drawn from independent uniform distributions.

2. True parameters and the distribution of unobserved product characteristics are specified.

First, we'll use :func:`build_id_data` to build market and firm IDs for a model in which there are $T = 50$ markets, and in each market $t$, a total of $J_t = 20$ products produced by $F = 10$ firms.

```

id_data = pyblp.build_id_data(T=50, J=20, F=10)

```

Next, we'll create an :class:`Integration` configuration to build agent data according to a Gauss-Hermite product rule that exactly integrates polynomials of degree $2 \times 9 - 1 = 17$ or less.

```

integration = pyblp.Integration('product', 9)

integration

```
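The exactness claim above can be checked numerically without pyblp. The following is only a sketch using NumPy's `hermgauss`, not pyblp's internal code; it assumes a one-dimensional standard normal and relies on the known moments $E[Z^{16}] = 15!! = 2027025$ and $E[Z^{18}] = 17!! = 34459425$.

```python
import numpy as np

# 9-node Gauss-Hermite rule for the weight function exp(-x^2).
nodes, weights = np.polynomial.hermite.hermgauss(9)

def normal_expectation(f):
    """Approximate E[f(Z)] for Z ~ N(0, 1) with the 9-node rule."""
    # The change of variables z = sqrt(2) * x maps exp(-x^2) to the normal density.
    return np.sum(weights * f(np.sqrt(2.0) * nodes)) / np.sqrt(np.pi)

moment_16 = normal_expectation(lambda z: z**16)  # degree 16 <= 17: integrated exactly
moment_18 = normal_expectation(lambda z: z**18)  # degree 18 > 17: no longer exact

print(moment_16, moment_18)
```

The degree-16 moment should match its true value up to rounding, while the degree-18 moment should be visibly off, which is what "exact for polynomials of degree 17 or less" means in practice.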

We'll then pass these data to :class:`Simulation`. We'll use :class:`Formulation` configurations to create an $X_1$ that consists of a constant, prices, and an exogenous characteristic; an $X_2$ that consists only of the same exogenous characteristic; and an $X_3$ that consists of the common exogenous characteristic and a cost-shifter.

```

simulation = pyblp.Simulation(
    product_formulations=(
        pyblp.Formulation('1 + prices + x'),
        pyblp.Formulation('0 + x'),
        pyblp.Formulation('0 + x + z')
    ),
    beta=[1, -2, 2],
    sigma=1,
    gamma=[1, 4],
    product_data=id_data,
    integration=integration,
    seed=0
)

simulation

```

When :class:`Simulation` is initialized, it constructs :attr:`Simulation.agent_data` and simulates :attr:`Simulation.product_data`.

The :class:`Simulation` can be further configured with other arguments that determine how unobserved product characteristics are simulated and how marginal costs are specified.

At this stage, simulated variables are not consistent with true parameters, so we still need to solve the simulation with :meth:`Simulation.replace_endogenous`. This method replaces simulated prices and market shares with values that are consistent with the true parameters. Just like :meth:`ProblemResults.compute_prices`, to do so it iterates over the $\zeta$-markup equation from :ref:`references:Morrow and Skerlos (2011)`.

```

simulation_results = simulation.replace_endogenous()

simulation_results

```

Now, we can try to recover the true parameters by creating and solving a :class:`Problem`.

The convenience method :meth:`SimulationResults.to_problem` constructs some basic "sums of characteristics" BLP instruments that are functions of all exogenous numerical variables in the problem. In this example, excluded demand-side instruments are the cost-shifter `z` and traditional BLP instruments constructed from `x`. Excluded supply-side instruments are traditional BLP instruments constructed from `x` and `z`.

```

problem = simulation_results.to_problem()

problem

```
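To make the "sums of characteristics" idea concrete, here is a minimal NumPy sketch on made-up toy data. It is an illustration of the general construction only, not pyblp's implementation: the arrays `market_ids`, `firm_ids`, and `x` are hypothetical, and the two instruments shown are the sum of `x` over a firm's other products in the same market and the sum over rival firms' products in the same market.

```python
import numpy as np

# Toy data (hypothetical): five products in two markets, sold by two firms.
market_ids = np.array([0, 0, 0, 1, 1])
firm_ids = np.array([0, 0, 1, 0, 1])
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])

# Pairwise indicator matrices: entry (j, k) compares product j with product k.
same_market = market_ids[:, None] == market_ids[None, :]
same_firm = firm_ids[:, None] == firm_ids[None, :]
not_self = ~np.eye(len(x), dtype=bool)

# Sum of x over the same firm's other products in the same market.
own_sum = (same_market & same_firm & not_self).astype(float) @ x
# Sum of x over rival firms' products in the same market.
rival_sum = (same_market & ~same_firm).astype(float) @ x

print(own_sum)
print(rival_sum)
```

The pairwise matrices are quadratic in the number of products, so this form is only meant for reading, not for large datasets.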

We'll choose starting values that are half the true parameters so that the optimization routine has to do some work. Note that since we're jointly estimating the supply side, we need to provide an initial value for the linear coefficient on prices because this parameter cannot be concentrated out of the problem (unlike linear coefficients on exogenous characteristics).

```

results = problem.solve(
    sigma=0.5 * simulation.sigma,
    pi=0.5 * simulation.pi,
    beta=[None, 0.5 * simulation.beta[1], None],
    optimization=pyblp.Optimization('l-bfgs-b', {'gtol': 1e-5})
)

results

```

The parameters seem to have been estimated reasonably well.

```

np.c_[simulation.beta, results.beta]

np.c_[simulation.gamma, results.gamma]

np.c_[simulation.sigma, results.sigma]

```

In addition to checking that the configuration for a model based on actual data makes sense, the :class:`Simulation` class can also be a helpful tool for better understanding under what general conditions BLP models can be accurately estimated. Simulations are also used extensively in pyblp's test suite.

| github_jupyter |

# Softmax exercise

*Complete and hand in this completed worksheet (including its outputs and any supporting code outside of the worksheet) with your assignment submission. For more details see the [assignments page](http://vision.stanford.edu/teaching/cs231n/assignments.html) on the course website.*

This exercise is analogous to the SVM exercise. You will:

- implement a fully-vectorized **loss function** for the Softmax classifier

- implement the fully-vectorized expression for its **analytic gradient**

- **check your implementation** with numerical gradient

- use a validation set to **tune the learning rate and regularization** strength

- **optimize** the loss function with **SGD**

- **visualize** the final learned weights

```

# __future__ imports belong at the top of the cell
from __future__ import print_function

import random
import numpy as np
from cs231n.data_utils import load_CIFAR10
import matplotlib.pyplot as plt

%matplotlib inline

plt.rcParams['figure.figsize'] = (10.0, 8.0) # set default size of plots

plt.rcParams['image.interpolation'] = 'nearest'

plt.rcParams['image.cmap'] = 'gray'

# for auto-reloading external modules

# see http://stackoverflow.com/questions/1907993/autoreload-of-modules-in-ipython

%load_ext autoreload

%autoreload 2

def get_CIFAR10_data(num_training=49000, num_validation=1000, num_test=1000, num_dev=500):
    """
    Load the CIFAR-10 dataset from disk and perform preprocessing to prepare
    it for the linear classifier. These are the same steps as we used for the
    SVM, but condensed to a single function.
    """
    # Load the raw CIFAR-10 data
    cifar10_dir = 'cs231n/datasets/cifar-10-batches-py'
    X_train, y_train, X_test, y_test = load_CIFAR10(cifar10_dir)

    # subsample the data
    mask = list(range(num_training, num_training + num_validation))
    X_val = X_train[mask]
    y_val = y_train[mask]
    mask = list(range(num_training))
    X_train = X_train[mask]
    y_train = y_train[mask]
    mask = list(range(num_test))
    X_test = X_test[mask]
    y_test = y_test[mask]
    mask = np.random.choice(num_training, num_dev, replace=False)
    X_dev = X_train[mask]
    y_dev = y_train[mask]

    # Preprocessing: reshape the image data into rows
    X_train = np.reshape(X_train, (X_train.shape[0], -1))
    X_val = np.reshape(X_val, (X_val.shape[0], -1))
    X_test = np.reshape(X_test, (X_test.shape[0], -1))
    X_dev = np.reshape(X_dev, (X_dev.shape[0], -1))

    # Normalize the data: subtract the mean image
    mean_image = np.mean(X_train, axis = 0)
    X_train -= mean_image
    X_val -= mean_image
    X_test -= mean_image
    X_dev -= mean_image

    # add bias dimension and transform into columns
    X_train = np.hstack([X_train, np.ones((X_train.shape[0], 1))])
    X_val = np.hstack([X_val, np.ones((X_val.shape[0], 1))])
    X_test = np.hstack([X_test, np.ones((X_test.shape[0], 1))])
    X_dev = np.hstack([X_dev, np.ones((X_dev.shape[0], 1))])

    return X_train, y_train, X_val, y_val, X_test, y_test, X_dev, y_dev

# Invoke the above function to get our data.

X_train, y_train, X_val, y_val, X_test, y_test, X_dev, y_dev = get_CIFAR10_data()

print('Train data shape: ', X_train.shape)

print('Train labels shape: ', y_train.shape)

print('Validation data shape: ', X_val.shape)

print('Validation labels shape: ', y_val.shape)

print('Test data shape: ', X_test.shape)

print('Test labels shape: ', y_test.shape)

print('dev data shape: ', X_dev.shape)

print('dev labels shape: ', y_dev.shape)

```

## Softmax Classifier

Your code for this section will all be written inside **cs231n/classifiers/softmax.py**.

```

# First implement the naive softmax loss function with nested loops.

# Open the file cs231n/classifiers/softmax.py and implement the

# softmax_loss_naive function.

from cs231n.classifiers.softmax import softmax_loss_naive

import time

# Generate a random softmax weight matrix and use it to compute the loss.

W = np.random.randn(3073, 10) * 0.0001

loss, grad = softmax_loss_naive(W, X_dev, y_dev, 0.0)

# As a rough sanity check, our loss should be something close to -log(0.1).

print('loss: %f' % loss)

print('sanity check: %f' % (-np.log(0.1)))

```

## Inline Question 1:

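**Why do we expect our loss to be close to -log(0.1)? Explain briefly.**

**Your answer:** *The weights are initialized to small random values, so all class scores are close to zero and the softmax assigns roughly equal probability to each of the 10 classes. The correct class therefore gets a probability of about 0.1, which gives a loss of about -log(0.1).*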
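The reasoning above can be checked with a few lines of NumPy. This is a sketch on synthetic stand-in data (standard-normal features and random labels are assumptions, not CIFAR-10), and it does not use the assignment's `softmax_loss_naive`.

```python
import numpy as np

rng = np.random.default_rng(0)
num_examples, num_features, num_classes = 500, 3073, 10

# Synthetic stand-ins (assumption): standard-normal features, random labels.
X = rng.standard_normal((num_examples, num_features))
y = rng.integers(0, num_classes, size=num_examples)
W = rng.standard_normal((num_features, num_classes)) * 0.0001

scores = X @ W
scores -= scores.max(axis=1, keepdims=True)  # shift for numerical stability
exp_scores = np.exp(scores)
probs = exp_scores / exp_scores.sum(axis=1, keepdims=True)

# Mean cross-entropy of the correct class, without regularization.
loss = -np.log(probs[np.arange(num_examples), y]).mean()

print(loss, -np.log(0.1))
```

Because the weights are tiny, every row of `probs` is close to uniform, so the two printed numbers should nearly coincide.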

```

# Complete the implementation of softmax_loss_naive and implement a (naive)

# version of the gradient that uses nested loops.

loss, grad = softmax_loss_naive(W, X_dev, y_dev, 0.0)

# As we did for the SVM, use numeric gradient checking as a debugging tool.

# The numeric gradient should be close to the analytic gradient.

from cs231n.gradient_check import grad_check_sparse

f = lambda w: softmax_loss_naive(w, X_dev, y_dev, 0.0)[0]

grad_numerical = grad_check_sparse(f, W, grad, 10)

# similar to SVM case, do another gradient check with regularization

loss, grad = softmax_loss_naive(W, X_dev, y_dev, 5e1)

f = lambda w: softmax_loss_naive(w, X_dev, y_dev, 5e1)[0]

grad_numerical = grad_check_sparse(f, W, grad, 10)

# Now that we have a naive implementation of the softmax loss function and its gradient,

# implement a vectorized version in softmax_loss_vectorized.

# The two versions should compute the same results, but the vectorized version should be

# much faster.

tic = time.time()

loss_naive, grad_naive = softmax_loss_naive(W, X_dev, y_dev, 0.000005)

toc = time.time()

print('naive loss: %e computed in %fs' % (loss_naive, toc - tic))

from cs231n.classifiers.softmax import softmax_loss_vectorized

tic = time.time()

loss_vectorized, grad_vectorized = softmax_loss_vectorized(W, X_dev, y_dev, 0.000005)

toc = time.time()

print('vectorized loss: %e computed in %fs' % (loss_vectorized, toc - tic))

# As we did for the SVM, we use the Frobenius norm to compare the two versions

# of the gradient.

grad_difference = np.linalg.norm(grad_naive - grad_vectorized, ord='fro')

print('Loss difference: %f' % np.abs(loss_naive - loss_vectorized))

print('Gradient difference: %f' % grad_difference)

# Use the validation set to tune hyperparameters (regularization strength and

# learning rate). You should experiment with different ranges for the learning

# rates and regularization strengths; if you are careful you should be able to

# get a classification accuracy of over 0.35 on the validation set.

from cs231n.classifiers import Softmax

results = {}

best_val = -1

best_softmax = None

learning_rates = [5e-6, 1e-7, 5e-7]

regularization_strengths = [1e3, 2.5e4, 5e4]

################################################################################

# TODO: #

# Use the validation set to set the learning rate and regularization strength. #

# This should be identical to the validation that you did for the SVM; save #

# the best trained softmax classifier in best_softmax. #

################################################################################

for lr in learning_rates:
    for reg in regularization_strengths:
        softmax = Softmax()
        loss_hist = softmax.train(X_train, y_train, learning_rate=lr, reg=reg,
                                  num_iters=1500, verbose=True)
        y_train_pred = softmax.predict(X_train)
        y_val_pred = softmax.predict(X_val)
        training_accuracy = np.mean(y_train == y_train_pred)
        validation_accuracy = np.mean(y_val == y_val_pred)

        # record the accuracies for this (lr, reg) pair
        results[(lr, reg)] = (training_accuracy, validation_accuracy)
        if validation_accuracy > best_val:
            best_val = validation_accuracy
            best_softmax = softmax

################################################################################

# END OF YOUR CODE #

################################################################################

# Print out results.

for lr, reg in sorted(results):
    train_accuracy, val_accuracy = results[(lr, reg)]
    print('lr %e reg %e train accuracy: %f val accuracy: %f' % (
                lr, reg, train_accuracy, val_accuracy))

print('best validation accuracy achieved during cross-validation: %f' % best_val)

# Evaluate the best softmax on test set
y_test_pred = best_softmax.predict(X_test)
test_accuracy = np.mean(y_test == y_test_pred)
print('softmax on raw pixels final test set accuracy: %f' % (test_accuracy, ))

# Visualize the learned weights for each class
w = best_softmax.W[:-1,:] # strip out the bias
w = w.reshape(32, 32, 3, 10)
w_min, w_max = np.min(w), np.max(w)

classes = ['plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']
for i in range(10):
    plt.subplot(2, 5, i + 1)

    # Rescale the weights to be between 0 and 255
    wimg = 255.0 * (w[:, :, :, i].squeeze() - w_min) / (w_max - w_min)
    plt.imshow(wimg.astype('uint8'))
    plt.axis('off')
    plt.title(classes[i])

```
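For reference, the vectorized loss, its analytic gradient, and the numerical gradient check described in this worksheet can be sketched as follows. This is not the course's reference solution: the function name `softmax_loss`, the random toy data, and the regularization convention `reg * sum(W**2)` are assumptions made for illustration.

```python
import numpy as np

def softmax_loss(W, X, y, reg):
    """Mean cross-entropy loss and its gradient, with an assumed reg * sum(W^2) penalty."""
    n = X.shape[0]
    scores = X @ W
    scores -= scores.max(axis=1, keepdims=True)   # shift for numerical stability
    probs = np.exp(scores)
    probs /= probs.sum(axis=1, keepdims=True)
    loss = -np.log(probs[np.arange(n), y]).mean() + reg * np.sum(W * W)
    dscores = probs.copy()
    dscores[np.arange(n), y] -= 1.0               # d(loss)/d(scores) = probs - one_hot(y)
    dW = X.T @ dscores / n + 2.0 * reg * W
    return loss, dW

rng = np.random.default_rng(0)
X = rng.standard_normal((20, 5))
y = rng.integers(0, 3, size=20)
W = rng.standard_normal((5, 3)) * 0.01
reg = 0.1

loss, grad = softmax_loss(W, X, y, reg)

# Central-difference check on a few entries of W.
h = 1e-5
max_rel_error = 0.0
for idx in [(0, 0), (2, 1), (4, 2)]:
    W_plus, W_minus = W.copy(), W.copy()
    W_plus[idx] += h
    W_minus[idx] -= h
    numeric = (softmax_loss(W_plus, X, y, reg)[0]
               - softmax_loss(W_minus, X, y, reg)[0]) / (2 * h)
    rel_error = abs(numeric - grad[idx]) / max(abs(numeric) + abs(grad[idx]), 1e-12)
    max_rel_error = max(max_rel_error, rel_error)

print(max_rel_error)
```

A small relative error between the analytic and numerical gradients is the same signal that `grad_check_sparse` reports in the cells above.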

| github_jupyter |

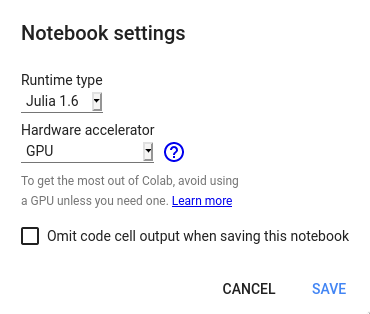

<a href="https://colab.research.google.com/github/ai-fast-track/timeseries/blob/master/nbs/index.ipynb" target="_parent"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/></a>

# `timeseries` package for fastai v2

> **`timeseries`** is a Timeseries Classification and Regression package for fastai v2.

> It mimics the fastai v2 vision module (fastai2.vision).

> This notebook is a tutorial that shows how to train a model on a timeseries dataset end to end.

> The example dataset is NATOPS (see the description below).

> First, 4 different methods of creating timeseries dataloaders are presented.

> Then, we train a model based on the [Inception Time](https://arxiv.org/pdf/1909.04939.pdf) architecture.

## Credit

> timeseries for fastai v2 was inspired by Ignacio Oguiza's timeseriesAI (https://github.com/timeseriesAI/timeseriesAI.git).

> The Inception Time model definition is a modified version of the [Ignacio Oguiza](https://github.com/timeseriesAI/timeseriesAI/blob/master/torchtimeseries/models/InceptionTime.py) and [Thomas Capelle](https://github.com/tcapelle/TimeSeries_fastai/blob/master/inception.py) implementations.

## Installing **`timeseries`** on local machine as an editable package

1- Only if you have not already installed `fastai v2`

Install [fastai2](https://dev.fast.ai/#Installing) by following the steps described there.

2- Install timeseries package by following the instructions here below:

```

git clone https://github.com/ai-fast-track/timeseries.git

cd timeseries

pip install -e .

```

# pip installing **`timeseries`** from repo either locally or in Google Colab - Start Here

## Installing fastai v2

```

!pip install git+https://github.com/fastai/fastai2.git

```

## Installing `timeseries` package from github

```

!pip install git+https://github.com/ai-fast-track/timeseries.git

```

# *pip Installing - End Here*

# `Usage`

```

%reload_ext autoreload

%autoreload 2

%matplotlib inline

from fastai2.basics import *

# hide

# Only for Windows users because symlink to `timeseries` folder is not recognized by Windows

import sys

sys.path.append("..")

from timeseries.all import *

```

# Tutorial on timeseries package for fastai v2

## Example : NATOPS dataset

<img src="https://github.com/ai-fast-track/timeseries/blob/master/images/NATOPS.jpg?raw=1">

## Right Arm vs Left Arm (3: 'Not clear' Command (see picture here above))

<br>

<img src="https://github.com/ai-fast-track/timeseries/blob/master/images/ts-right-arm.png?raw=1"><img src="https://github.com/ai-fast-track/timeseries/blob/master/images/ts-left-arm.png?raw=1">

## Description

The data is generated by sensors on the hands, elbows, wrists and thumbs. The data are the x,y,z coordinates for each of the eight locations. The order of the data is as follows:

## Channels (24)

0. Hand tip left, X coordinate

1. Hand tip left, Y coordinate

2. Hand tip left, Z coordinate

3. Hand tip right, X coordinate

4. Hand tip right, Y coordinate

5. Hand tip right, Z coordinate

6. Elbow left, X coordinate

7. Elbow left, Y coordinate

8. Elbow left, Z coordinate

9. Elbow right, X coordinate

10. Elbow right, Y coordinate

11. Elbow right, Z coordinate

12. Wrist left, X coordinate

13. Wrist left, Y coordinate

14. Wrist left, Z coordinate

15. Wrist right, X coordinate

16. Wrist right, Y coordinate

17. Wrist right, Z coordinate

18. Thumb left, X coordinate

19. Thumb left, Y coordinate

20. Thumb left, Z coordinate

21. Thumb right, X coordinate

22. Thumb right, Y coordinate

23. Thumb right, Z coordinate

## Classes (6)

The six classes are separate actions, with the following meaning:

1: I have command

2: All clear

3: Not clear

4: Spread wings

5: Fold wings

6: Lock wings

## Download data using the `unzip_data(URLs_TS.NATOPS)` method

```

dsname = 'NATOPS' #'NATOPS', 'LSST', 'Wine', 'Epilepsy', 'HandMovementDirection'

# url = 'http://www.timeseriesclassification.com/Downloads/NATOPS.zip'

path = unzip_data(URLs_TS.NATOPS)

path

```

## Why do I have to concatenate train and test data?

The train and test datasets contain 180 samples each. We concatenate them into one larger dataset and then split it into train and valid datasets using our own split percentage (20%, 30%, or whatever number you see fit).

```

fname_train = f'{dsname}_TRAIN.arff'

fname_test = f'{dsname}_TEST.arff'

fnames = [path/fname_train, path/fname_test]

fnames

data = TSData.from_arff(fnames)

print(data)

items = data.get_items()

idx = 1

x1, y1 = data.x[idx], data.y[idx]

y1

# You can select which channels to display by passing a list of channels to the `chs` argument

# LEFT ARM

# show_timeseries(x1, title=y1, chs=[0,1,2,6,7,8,12,13,14,18,19,20])

# RIGHT ARM

# show_timeseries(x1, title=y1, chs=[3,4,5,9,10,11,15,16,17,21,22,23])

# ?show_timeseries(x1, title=y1, chs=range(0,24,3)) # Only the x axis coordinates

seed = 42

splits = RandomSplitter(seed=seed)(range_of(items)) #by default 80% for train split and 20% for valid split are chosen

splits

```
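The concatenate-then-split idea above can be sketched with plain NumPy. This is only an illustration of the logic, not what `TSData.from_arff` or `RandomSplitter` do internally; the placeholder arrays and their shape (180 samples, 24 channels, 51 time steps) are assumptions.

```python
import numpy as np

# Placeholder arrays (assumption): 180 samples, 24 channels, 51 time steps each.
x_train = np.zeros((180, 24, 51))
x_test = np.ones((180, 24, 51))

# Merge the two provided files into one dataset of 360 samples.
x_all = np.concatenate([x_train, x_test])

# Shuffle the indices with a fixed seed and hold out 20% for validation.
rng = np.random.default_rng(42)
shuffled = rng.permutation(len(x_all))
n_valid = int(0.2 * len(x_all))
valid_idx, train_idx = shuffled[:n_valid], shuffled[n_valid:]

print(len(train_idx), len(valid_idx))
```

Changing `0.2` to another fraction gives a different train/valid ratio, which is the flexibility the original train/test files do not offer.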

# Using `Datasets` class

## Creating a Datasets object

```

tfms = [[ItemGetter(0), ToTensorTS()], [ItemGetter(1), Categorize()]]

# Create a dataset

ds = Datasets(items, tfms, splits=splits)

ax = show_at(ds, 2, figsize=(1,1))

```

# Creating `Dataloader` objects

## 1st method : using `Datasets` object

```

bs = 128

# Normalize at batch time

tfm_norm = Normalize(scale_subtype = 'per_sample_per_channel', scale_range=(0, 1)) # per_sample , per_sample_per_channel

# tfm_norm = Standardize(scale_subtype = 'per_sample')

batch_tfms = [tfm_norm]

dls1 = ds.dataloaders(bs=bs, val_bs=bs * 2, after_batch=batch_tfms, num_workers=0, device=default_device())

dls1.show_batch(max_n=9, chs=range(0,12,3))

```
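To show what a `per_sample_per_channel` scaling to the range (0, 1) means, here is a minimal NumPy sketch. It is an assumption about the intended behavior (min-max scaling along the time axis), not the package's `Normalize` implementation, and the random batch is made up.

```python
import numpy as np

rng = np.random.default_rng(0)
batch = rng.normal(size=(4, 24, 51))  # (samples, channels, time steps), made-up data

# Minimum and maximum along the time axis, separately for each sample and channel.
lo = batch.min(axis=-1, keepdims=True)
hi = batch.max(axis=-1, keepdims=True)

# Min-max scaling; assumes hi > lo for every individual series.
scaled = (batch - lo) / (hi - lo)

print(scaled.min(), scaled.max())
```

With a `per_sample` variant, the minimum and maximum would instead be taken over both the channel and time axes of each sample.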

# Using `DataBlock` class

## 2nd method : using `DataBlock` and `DataBlock.get_items()`

```

getters = [ItemGetter(0), ItemGetter(1)]

tsdb = DataBlock(blocks=(TSBlock, CategoryBlock),
                 get_items=get_ts_items,
                 getters=getters,
                 splitter=RandomSplitter(seed=seed),
                 batch_tfms = batch_tfms)

tsdb.summary(fnames)

# num_workers=0 is needed on Microsoft Windows

dls2 = tsdb.dataloaders(fnames, num_workers=0, device=default_device())

dls2.show_batch(max_n=9, chs=range(0,12,3))

```

## 3rd method : using `DataBlock` and passing `items` object to the `DataBlock.dataloaders()`

```

getters = [ItemGetter(0), ItemGetter(1)]

tsdb = DataBlock(blocks=(TSBlock, CategoryBlock),
                 getters=getters,
                 splitter=RandomSplitter(seed=seed))

dls3 = tsdb.dataloaders(data.get_items(), batch_tfms=batch_tfms, num_workers=0, device=default_device())

dls3.show_batch(max_n=9, chs=range(0,12,3))

```

## 4th method : using `TSDataLoaders` class and `TSDataLoaders.from_files()`

```

dls4 = TSDataLoaders.from_files(fnames, batch_tfms=batch_tfms, num_workers=0, device=default_device())

dls4.show_batch(max_n=9, chs=range(0,12,3))

```

# Train Model

```

# Number of channels (i.e. dimensions in ARFF and TS files jargon)

c_in = get_n_channels(dls2.train) # data.n_channels

# Number of classes

c_out= dls2.c

c_in,c_out

```

## Create model

```

model = inception_time(c_in, c_out).to(device=default_device())

model

```

## Create Learner object

```

#Learner

opt_func = partial(Adam, lr=3e-3, wd=0.01)

loss_func = LabelSmoothingCrossEntropy()

learn = Learner(dls2, model, opt_func=opt_func, loss_func=loss_func, metrics=accuracy)

print(learn.summary())

```

## LR find

```

lr_min, lr_steep = learn.lr_find()

lr_min, lr_steep

```

## Train

```

#lr_max=1e-3

epochs=30; lr_max=lr_steep; pct_start=.7; moms=(0.95,0.85,0.95); wd=1e-2

learn.fit_one_cycle(epochs, lr_max=lr_max, pct_start=pct_start, moms=moms, wd=wd)

# learn.fit_one_cycle(epochs=20, lr_max=lr_steep)

```

## Plot loss function

```

learn.recorder.plot_loss()

```

## Show results

```

learn.show_results(max_n=9, chs=range(0,12,3))

#hide

from nbdev.export import notebook2script

# notebook2script()

notebook2script(fname='index.ipynb')

# #hide

# from nbdev.export2html import _notebook2html

# # notebook2script()

# _notebook2html(fname='index.ipynb')

```

# Fin

<img src="https://github.com/ai-fast-track/timeseries/blob/master/images/tree.jpg?raw=1" width="1440" height="840" alt=""/>

| github_jupyter |

<a href="https://colab.research.google.com/github/mouctarbalde/concrete-strength-prediction/blob/main/Cement_prediction_.ipynb" target="_parent"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/></a>

```

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LinearRegression

from sklearn.tree import DecisionTreeRegressor

from sklearn.metrics import mean_absolute_error

from sklearn.metrics import r2_score

from sklearn.preprocessing import RobustScaler

from sklearn.linear_model import Lasso

from sklearn.ensemble import GradientBoostingRegressor

from sklearn.ensemble import StackingRegressor

import warnings

import random

seed = 42

random.seed(seed)

import numpy as np

np.random.seed(seed)

warnings.filterwarnings('ignore')

plt.style.use('ggplot')

df = pd.read_csv('https://raw.githubusercontent.com/mouctarbalde/concrete-strength-prediction/main/Train.csv')

df.head()

columns_name = df.columns.to_list()

columns_name =['Cement',

'Blast_Furnace_Slag',

'Fly_Ash',

'Water',

'Superplasticizer',

'Coarse Aggregate',

'Fine Aggregate',

'Age_day',

'Concrete_compressive_strength']

df.columns = columns_name

df.info()

df.shape

import missingno as ms

ms.matrix(df)

df.isna().sum()

df.describe().T

df.corr()['Concrete_compressive_strength'].sort_values().plot(kind='barh')

plt.title("Correlation based on the target variable.")

plt.show()

sns.heatmap(df.corr(),annot=True)

sns.boxplot(x='Water', y = 'Cement',data=df)

plt.figure(figsize=(15,9))

df.boxplot()

sns.regplot(x='Water', y = 'Cement',data=df)

```

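The plots in the cell above show no clear linear relationship between **Water** and **Cement**. Note that they put `Cement` on the y-axis rather than the target variable `Concrete_compressive_strength`, so they do not by themselves say anything about the target.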

```

sns.boxplot(x='Age_day', y = 'Cement',data=df)

sns.regplot(x='Age_day', y = 'Cement',data=df)

X = df.drop('Concrete_compressive_strength',axis=1)

y = df.Concrete_compressive_strength

X.head()

y.head()

X_train,X_test,y_train,y_test = train_test_split(X, y, test_size=.2, random_state=seed)

X_train.shape ,y_train.shape

```

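Our analysis shows that the data contains outliers. Although there are not many of them, we are going to use `RobustScaler` from sklearn to scale the data.

`RobustScaler` subtracts the median from each feature and divides by its interquartile range (the distance between the 25th and 75th percentiles). It does not remove outliers; because the median and the interquartile range are barely affected by extreme values, the outliers simply have little influence on how the rest of the data is scaled.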

```

scale = RobustScaler()

# note we have to fit_transform only on the training data. On your test data you only have to transform.

X_train = scale.fit_transform(X_train)

X_test = scale.transform(X_test)

X_train

```
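The scaling rule can be written out by hand in NumPy. The sketch below uses a made-up feature with one outlier and mirrors the median and interquartile-range formula; it is meant as an illustration rather than a replacement for `RobustScaler`.

```python
import numpy as np

x = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 100.0])  # toy feature with one outlier

# Center on the median and scale by the interquartile range (IQR).
median = np.median(x)
q25, q75 = np.percentile(x, [25, 75])
scaled = (x - median) / (q75 - q25)

print(scaled)
```

The outlier is still present after scaling and still far from the other values; what changes is that it did not distort the center and spread used for the remaining points.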

# Model creation

### Linear Regression

```

lr = LinearRegression()

lr.fit(X_train,y_train)

pred_lr = lr.predict(X_test)

pred_lr[:10]

mae_lr = mean_absolute_error(y_test,pred_lr)

r2_lr = r2_score(y_test,pred_lr)

print(f'Mean absolute error of linear regression is {mae_lr}')

print(f'R2 score of Linear Regression is {r2_lr}')

```
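The two metrics used throughout this notebook are simple to compute by hand. The sketch below uses made-up values for `y_true` and `y_pred` and follows the standard definitions: MAE is the mean of the absolute errors, and $R^2 = 1 - SS_{res} / SS_{tot}$.

```python
import numpy as np

y_true = np.array([3.0, -0.5, 2.0, 7.0])  # made-up targets
y_pred = np.array([2.5, 0.0, 2.0, 8.0])   # made-up predictions

# Mean absolute error: average size of the prediction errors.
mae = np.mean(np.abs(y_true - y_pred))

# R2: one minus the ratio of residual to total sum of squares.
ss_res = np.sum((y_true - y_pred) ** 2)
ss_tot = np.sum((y_true - y_true.mean()) ** 2)
r2 = 1.0 - ss_res / ss_tot

print(mae, r2)
```

A lower MAE and an $R^2$ closer to 1 both indicate a better fit, which is how the models below are compared.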

**Graph for linear regression** the below graph is showing the relationship between the actual and the predicted values.

```

fig, ax = plt.subplots()

ax.scatter(pred_lr, y_test)

ax.plot([y_test.min(), y_test.max()],[y_test.min(), y_test.max()], color = 'red', marker = "*", markersize = 10)

```

### Decision tree Regression

```

# 'mae' was renamed to 'absolute_error' in scikit-learn 1.0 and later removed
dt = DecisionTreeRegressor(criterion='absolute_error')

dt.fit(X_train,y_train)

pred_dt = dt.predict(X_test)

mae_dt = mean_absolute_error(y_test,pred_dt)

r2_dt = r2_score(y_test,pred_dt)

print(f'Mean absolute error of Decision tree regression is {mae_dt}')

print(f'R2 score of Decision tree regressor is {r2_dt}')

fig, ax = plt.subplots()

plt.title('Linear relationship for decision tree')

ax.scatter(pred_dt, y_test)

ax.plot([y_test.min(), y_test.max()],[y_test.min(), y_test.max()], color = 'red', marker = "*", markersize = 10)

```

### Random Forest Regression

```

from sklearn.ensemble import RandomForestRegressor

rf = RandomForestRegressor()

rf.fit(X_train, y_train)

# prediction

pred_rf = rf.predict(X_test)

mae_rf = mean_absolute_error(y_test,pred_rf)

r2_rf = r2_score(y_test,pred_rf)

print(f'Mean absolute error of Random forest regression is {mae_rf}')

print(f'R2 score of Random forest regressor is {r2_rf}')

fig, ax = plt.subplots()

plt.title('Linear relationship for random forest regressor')

ax.scatter(pred_rf, y_test)

ax.plot([y_test.min(), y_test.max()],[y_test.min(), y_test.max()], color = 'red', marker = "*", markersize = 10)

```

### Lasso Regression

```

laso = Lasso()

laso.fit(X_train, y_train)

pred_laso = laso.predict(X_test)

mae_laso = mean_absolute_error(y_test, pred_laso)

r2_laso = r2_score(y_test, pred_laso)

print(f'Mean absolute error of Lasso regression is {mae_laso}')

print(f'R2 score of Lasso regressor is {r2_laso}')

fig, ax = plt.subplots()

plt.title('Linear relationship for Lasso regressor')

ax.scatter(pred_laso, y_test)

ax.plot([y_test.min(), y_test.max()],[y_test.min(), y_test.max()], color = 'red', marker = "*", markersize = 10)

# Gradient boosting regression
gb = GradientBoostingRegressor()

gb.fit(X_train, y_train)

pred_gb = gb.predict(X_test)

mae_gb = mean_absolute_error(y_test, pred_gb)

r2_gb = r2_score(y_test, pred_gb)

print(f'Mean absolute error of Gradient boosting regression is {mae_gb}')

print(f'R2 score of Gradient boosting regressor is {r2_gb}')

fig, ax = plt.subplots()

plt.title('Linear relationship for Gradient boosting regressor')

ax.scatter(pred_gb, y_test)

ax.plot([y_test.min(), y_test.max()],[y_test.min(), y_test.max()], color = 'red', marker = "*", markersize = 10)

```

# Stacking Regressor:

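Stacking combines several regression models: the predictions of the base estimators become the inputs of a final estimator, which learns how to weight them. In our case we pass a k-fold cross-validation splitter so that the final estimator is trained on out-of-fold predictions from the base models, which helps limit overfitting.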

```

estimators = [('lr',LinearRegression()), ('gb',GradientBoostingRegressor()),\

('dt',DecisionTreeRegressor()), ('laso',Lasso())]

from sklearn.model_selection import KFold

kf = KFold(n_splits=10,shuffle=True, random_state=seed)

stacking = StackingRegressor(estimators=estimators, final_estimator=RandomForestRegressor(random_state=seed), cv=kf)

stacking.fit(X_train, y_train)

pred_stack = stacking.predict(X_test)

mae_stack = mean_absolute_error(y_test, pred_stack)

r2_stack = r2_score(y_test, pred_stack)

print(f'Mean absolute error of Stacking regression is {mae_stack}')

print(f'R2 score of Stacking regressor is {r2_stack}')

fig, ax = plt.subplots()

plt.title('Linear relationship for Stacking regressor')

ax.scatter(pred_stack, y_test)

ax.plot([y_test.min(), y_test.max()],[y_test.min(), y_test.max()], color = 'red', marker = "*", markersize = 10)

result = pd.DataFrame({'Model':['Linear Regression','Decision tree','Random Forest', 'Lasso',
                                'Gradient Boosting Regressor', 'Stacking Regressor'],
                       'MAE':[mae_lr, mae_dt, mae_rf, mae_laso, mae_gb, mae_stack],
                       'R2 score':[r2_lr, r2_dt, r2_rf, r2_laso, r2_gb, r2_stack]
                      })

result

```

| github_jupyter |

# The Extended Kalman Filter

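Building on the theory of the linear Kalman filter, we will now apply the Kalman filter to nonlinear problems. The extended Kalman filter (EKF) assumes that the models used in the prediction and estimation (update) steps are nonlinear, linearizes the system about the current estimate, and then applies the linear Kalman filter.

There are better-performing algorithms for nonlinear problems (the UKF, H_infinity), but the EKF is still widely used and therefore remains relevant.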

```

%matplotlib inline

# HTML("""

# <style>

# .output_png {

# display: table-cell;

# text-align: center;

# vertical-align: middle;

# }

# </style>

# """)

```

## Linearizing the Kalman Filter

### Non-linear models

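Because the Kalman filter assumes that the system is linear, it cannot be applied directly to nonlinear problems. Nonlinearity can come from two sources: the process model and the measurement model. For example, a falling object has a nonlinear process model because its acceleration is governed by air drag, which is proportional to the square of the velocity. Measuring the range and bearing of a target with radar gives a nonlinear measurement model because the target position is computed with trigonometric functions, which are nonlinear.

The standard Kalman filter equations cannot be applied to nonlinear problems because passing a normal (Gaussian) distribution through a nonlinear function produces a distribution that is no longer Gaussian, as shown below.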

```

import numpy as np
import scipy.stats as stats
import matplotlib.pyplot as plt

mu, sigma = 0, 0.1

# define the grid first, then evaluate the Gaussian pdf on it
x = np.linspace(mu - 3*sigma, mu + 3*sigma, 10000)
gaussian = stats.norm.pdf(x, mu, sigma)

def nonlinearFunction(x):
    return np.sin(x)

def linearFunction(x):
    return 0.5*x

nonlinearOutput = nonlinearFunction(gaussian)
linearOutput = linearFunction(gaussian)

# print(x)
plt.plot(x, gaussian, label = 'Gaussian Input')
plt.plot(x, linearOutput, label = 'Linear Output')
plt.plot(x, nonlinearOutput, label = 'Nonlinear Output')
plt.grid(linestyle='dotted', linewidth=0.8)
plt.legend()
plt.show()

```
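The claim that a Gaussian passed through a nonlinear function is no longer Gaussian can also be checked by sampling. The sketch below is a complementary illustration under assumed values (mean 0.5, standard deviation 1.0, and a hand-written `skewness` helper): it pushes random samples, rather than density values, through a linear and a nonlinear function and compares the skewness of the outputs, which is zero for any Gaussian.

```python
import numpy as np

rng = np.random.default_rng(0)
samples = rng.normal(loc=0.5, scale=1.0, size=200_000)  # Gaussian input samples

def skewness(values):
    """Sample skewness: third central moment over the cubed standard deviation."""
    centered = values - values.mean()
    return np.mean(centered**3) / np.mean(centered**2) ** 1.5

linear_skew = skewness(0.5 * samples)        # linear map: output stays Gaussian
nonlinear_skew = skewness(np.sin(samples))   # nonlinear map: output is distorted

print(linear_skew, nonlinear_skew)
```

The linear output keeps a skewness near zero, while the `sin` output is bounded in $[-1, 1]$ and clearly asymmetric, so it cannot be Gaussian.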

### System Equations

For the linear Kalman filter, the process and measurement models can be written as follows.

$$\begin{aligned}\dot{\mathbf x} &= \mathbf{Ax} + w_x\\

\mathbf z &= \mathbf{Hx} + w_z

\end{aligned}$$

Here $\mathbf A$ is the dynamic matrix that describes the dynamics of the system (in continuous time). Discretizing the equations above gives the following.

$$\begin{aligned}\bar{\mathbf x}_k &= \mathbf{F} \mathbf{x}_{k-1} \\

\bar{\mathbf z} &= \mathbf{H} \mathbf{x}_{k-1}

\end{aligned}$$

Here $\mathbf F$ is the state transition matrix, which maps $\mathbf x_{k-1}$ to $\mathbf x_{k}$ over the discrete time step $\Delta t$, and the noise terms $w_x$ and $w_z$ from above are absorbed into the process noise covariance matrix $\mathbf Q$ and the measurement noise covariance matrix $\mathbf R$, respectively.

In a nonlinear system, the linear expressions $\mathbf F \mathbf x + \mathbf B \mathbf u$ and $\mathbf H \mathbf x$ are replaced by the functions $f(\mathbf x, \mathbf u)$ and $h(\mathbf x)$.

$$\begin{aligned}\dot{\mathbf x} &= f(\mathbf x, \mathbf u) + w_x\\

\mathbf z &= h(\mathbf x) + w_z

\end{aligned}$$

### Linearisation

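Linearization means, quite literally, finding the line (linear system) that is closest to the nonlinear function at a given point. There are several ways to linearize, but the most common is to use the first-order Taylor series (the terms $c_0$ and $c_1 x$).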

$$f(x) = \sum_{k=0}^\infty c_k x^k = c_0 + c_1 x + c_2 x^2 + \dotsb$$

$$c_k = \frac{f^{\left(k\right)}(0)}{k!} = \frac{1}{k!} \cdot \frac{d^k f}{dx^k}\bigg|_0 $$

The matrix of partial derivatives is called the Jacobian, and it lets us express $\mathbf F$ and $\mathbf H$ as follows.

$$

\begin{aligned}

\mathbf F

= {\frac{\partial{f(\mathbf x_t, \mathbf u_t)}}{\partial{\mathbf x}}}\biggr|_{{\mathbf x_t},{\mathbf u_t}} \;\;\;\;

\mathbf H = \frac{\partial{h(\bar{\mathbf x}_t)}}{\partial{\bar{\mathbf x}}}\biggr|_{\bar{\mathbf x}_t}

\end{aligned}

$$

$$\mathbf F = \frac{\partial f(\mathbf x, \mathbf u)}{\partial x} =\begin{bmatrix}

\frac{\partial f_1}{\partial x_1} & \frac{\partial f_1}{\partial x_2} & \dots & \frac{\partial f_1}{\partial x_n}\\

\frac{\partial f_2}{\partial x_1} & \frac{\partial f_2}{\partial x_2} & \dots & \frac{\partial f_2}{\partial x_n} \\

\\ \vdots & \vdots & \ddots & \vdots

\\

\frac{\partial f_n}{\partial x_1} & \frac{\partial f_n}{\partial x_2} & \dots & \frac{\partial f_n}{\partial x_n}

\end{bmatrix}

$$
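As a rough numerical check of the Jacobian definition above, the sketch below approximates each partial derivative with a central difference. The helper `numerical_jacobian` and the example function `f` are illustrative assumptions and are not part of FilterPy or the original notebook.

```python
import math


def numerical_jacobian(f, x, eps=1e-6):
    """Approximate the Jacobian of f at x using central differences."""
    n = len(x)
    m = len(f(x))
    J = [[0.0] * n for _ in range(m)]
    for j in range(n):
        x_plus = list(x)
        x_minus = list(x)
        x_plus[j] += eps
        x_minus[j] -= eps
        f_plus = f(x_plus)
        f_minus = f(x_minus)
        for i in range(m):
            # column j holds the derivatives with respect to x_j
            J[i][j] = (f_plus[i] - f_minus[i]) / (2 * eps)
    return J


def f(x):
    # hypothetical nonlinear function: f(x1, x2) = [x1 * x2, sin(x1)]
    return [x[0] * x[1], math.sin(x[0])]


# The analytic Jacobian is [[x2, x1], [cos(x1), 0]]
J = numerical_jacobian(f, [1.0, 2.0])
```

Comparing `J` with the analytic Jacobian is a cheap way to catch sign errors before a hand-derived $\mathbf F$ or $\mathbf H$ is used in a filter.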

The equations of the linear Kalman filter and the extended Kalman filter compare as follows.

$$\begin{array}{l|l}

\text{Linear Kalman filter} & \text{EKF} \\

\hline

& \boxed{\mathbf F = {\frac{\partial{f(\mathbf x_t, \mathbf u_t)}}{\partial{\mathbf x}}}\biggr|_{{\mathbf x_t},{\mathbf u_t}}} \\

\mathbf{\bar x} = \mathbf{Fx} + \mathbf{Bu} & \boxed{\mathbf{\bar x} = f(\mathbf x, \mathbf u)} \\

\mathbf{\bar P} = \mathbf{FPF}^\mathsf{T}+\mathbf Q & \mathbf{\bar P} = \mathbf{FPF}^\mathsf{T}+\mathbf Q \\

\hline

& \boxed{\mathbf H = \frac{\partial{h(\bar{\mathbf x}_t)}}{\partial{\bar{\mathbf x}}}\biggr|_{\bar{\mathbf x}_t}} \\

\textbf{y} = \mathbf z - \mathbf{H \bar{x}} & \textbf{y} = \mathbf z - \boxed{h(\bar{x})}\\

\mathbf{K} = \mathbf{\bar{P}H}^\mathsf{T} (\mathbf{H\bar{P}H}^\mathsf{T} + \mathbf R)^{-1} & \mathbf{K} = \mathbf{\bar{P}H}^\mathsf{T} (\mathbf{H\bar{P}H}^\mathsf{T} + \mathbf R)^{-1} \\

\mathbf x=\mathbf{\bar{x}} +\mathbf{K\textbf{y}} & \mathbf x=\mathbf{\bar{x}} +\mathbf{K\textbf{y}} \\

\mathbf P= (\mathbf{I}-\mathbf{KH})\mathbf{\bar{P}} & \mathbf P= (\mathbf{I}-\mathbf{KH})\mathbf{\bar{P}}

\end{array}$$

We could estimate $\mathbf x_{k}$ using $\mathbf F \mathbf x_{k-1}$, but linearization introduces error, so the prior estimate $\mathbf{\bar{x}}$ is instead computed by numerical integration such as the Euler or Runge-Kutta method. For the same reason, the innovation vector (residual) $\mathbf y$ is computed by evaluating the nonlinear function $h(\mathbf{\bar{x}})$ numerically rather than using $\mathbf H \mathbf{\bar{x}}$.

## Example: Robot Localization

### Prediction Model

Let's apply the EKF to a four-wheeled robot. Using a simple bicycle steering model, the system model can be expressed as follows.

```

import kf_book.ekf_internal as ekf_internal

ekf_internal.plot_bicycle()

```

$$\begin{aligned}

\beta &= \frac d w \tan(\alpha) \\

\bar x_k &= x_{k-1} - R\sin(\theta) + R\sin(\theta + \beta) \\

\bar y_k &= y_{k-1} + R\cos(\theta) - R\cos(\theta + \beta) \\

\bar \theta_k &= \theta_{k-1} + \beta

\end{aligned}

$$

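Here $d = v \Delta t$ is the distance traveled in one time step, $w$ is the wheelbase, and $R = w / \tan(\alpha)$ is the turning radius. Based on these equations, we define the state vector as $\mathbf{x}=[x, y, \theta]^T$ and the input vector as $\mathbf{u}=[v, \alpha]^T$. We can then write $f(\mathbf x, \mathbf u)$ as shown below, and differentiating $f$ with respect to $\mathbf x$ gives the Jacobian $\mathbf F$.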

$$\bar x = f(x, u) + \mathcal{N}(0, Q)$$

$$f = \begin{bmatrix}x\\y\\\theta\end{bmatrix} +

\begin{bmatrix}- R\sin(\theta) + R\sin(\theta + \beta) \\

R\cos(\theta) - R\cos(\theta + \beta) \\

\beta\end{bmatrix}$$

$$\mathbf F = \frac{\partial f(\mathbf x, \mathbf u)}{\partial \mathbf x} = \begin{bmatrix}

1 & 0 & -R\cos(\theta) + R\cos(\theta+\beta) \\

0 & 1 & -R\sin(\theta) + R\sin(\theta+\beta) \\

0 & 0 & 1

\end{bmatrix}$$

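To compute $\bar{\mathbf P}$, we model the process noise as arising from the control input $\mathbf u$. We define the noise covariance $\mathbf M$ in control space and map it into state space with $\mathbf V$, the Jacobian of $f$ with respect to $\mathbf u$, so that $\mathbf{VMV}^{\mathsf T}$ takes the place of $\mathbf Q$.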

$$\mathbf{M} = \begin{bmatrix}\sigma_{vel}^2 & 0 \\ 0 & \sigma_\alpha^2\end{bmatrix}

\;\;\;\;

\mathbf{V} = \frac{\partial f(x, u)}{\partial u} = \begin{bmatrix}

\frac{\partial f_1}{\partial v} & \frac{\partial f_1}{\partial \alpha} \\

\frac{\partial f_2}{\partial v} & \frac{\partial f_2}{\partial \alpha} \\

\frac{\partial f_3}{\partial v} & \frac{\partial f_3}{\partial \alpha}

\end{bmatrix}$$

$$\mathbf{\bar P} =\mathbf{FPF}^{\mathsf T} + \mathbf{VMV}^{\mathsf T}$$
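To make the covariance prediction concrete, here is a minimal NumPy sketch of $\mathbf{\bar P} = \mathbf{FPF}^{\mathsf T} + \mathbf{VMV}^{\mathsf T}$. All numerical values of `F`, `V`, `P`, and `M` are made up for illustration and are not derived from the robot model.

```python
import numpy as np

# Illustrative values only (assumptions, not taken from the robot example)
F = np.array([[1.0, 0.0, -0.1],
              [0.0, 1.0,  0.2],
              [0.0, 0.0,  1.0]])      # Jacobian of f with respect to the state
V = np.array([[0.9, 0.0],
              [0.1, 0.0],
              [0.2, 0.5]])            # Jacobian of f with respect to the control
P = np.diag([0.1, 0.1, 0.1])          # current state covariance
M = np.diag([0.1**2, np.radians(1.0)**2])  # control-space noise covariance

# Propagate the state covariance and add the control noise mapped to state space
P_bar = F @ P @ F.T + V @ M @ V.T
```

The result stays symmetric and positive definite, since it is the sum of a congruence transform of `P` and a positive semidefinite term.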

```

import sympy

from sympy.abc import alpha, x, y, v, w, R, theta

from sympy import symbols, Matrix

sympy.init_printing(use_latex="mathjax", fontsize='16pt')

time = symbols('t')

d = v*time

beta = (d/w)*sympy.tan(alpha)

r = w/sympy.tan(alpha)

fxu = Matrix([[x-r*sympy.sin(theta) + r*sympy.sin(theta+beta)],

[y+r*sympy.cos(theta)- r*sympy.cos(theta+beta)],

[theta+beta]])

F = fxu.jacobian(Matrix([x, y, theta]))

F

# reduce common expressions

B, R = symbols('beta, R')

F = F.subs((d/w)*sympy.tan(alpha), B)

F.subs(w/sympy.tan(alpha), R)

V = fxu.jacobian(Matrix([v, alpha]))

V = V.subs(sympy.tan(alpha)/w, 1/R)

V = V.subs(time*v/R, B)

V = V.subs(time*v, 'd')

V

```

### Measurement Model

When a radar measures the range $(r)$ and bearing ($\phi$) to a landmark, we use the following sensor model, where $\mathbf p$ denotes the position of the landmark.

$$r = \sqrt{(p_x - x)^2 + (p_y - y)^2}

\;\;\;\;

\phi = \arctan(\frac{p_y - y}{p_x - x}) - \theta

$$

$$\begin{aligned}

\mathbf z& = h(\bar{\mathbf x}, \mathbf p) &+ \mathcal{N}(0, R)\\

&= \begin{bmatrix}

\sqrt{(p_x - x)^2 + (p_y - y)^2} \\

\arctan(\frac{p_y - y}{p_x - x}) - \theta

\end{bmatrix} &+ \mathcal{N}(0, R)

\end{aligned}$$

Differentiating $h$ with respect to the state gives its Jacobian $\mathbf H$:

$$\mathbf H = \frac{\partial h(\mathbf x, \mathbf p)}{\partial \mathbf x} =

\left[\begin{matrix}\frac{- p_{x} + x}{\sqrt{\left(p_{x} - x\right)^{2} + \left(p_{y} - y\right)^{2}}} & \frac{- p_{y} + y}{\sqrt{\left(p_{x} - x\right)^{2} + \left(p_{y} - y\right)^{2}}} & 0\\- \frac{- p_{y} + y}{\left(p_{x} - x\right)^{2} + \left(p_{y} - y\right)^{2}} & - \frac{p_{x} - x}{\left(p_{x} - x\right)^{2} + \left(p_{y} - y\right)^{2}} & -1\end{matrix}\right]

$$

```

import sympy

from sympy.abc import alpha, x, y, v, w, R, theta

px, py = sympy.symbols('p_x, p_y')

z = sympy.Matrix([[sympy.sqrt((px-x)**2 + (py-y)**2)],

[sympy.atan2(py-y, px-x) - theta]])

z.jacobian(sympy.Matrix([x, y, theta]))

# print(sympy.latex(z.jacobian(sympy.Matrix([x, y, theta])))

from math import atan2, sqrt

from numpy import array


def H_of(x, landmark_pos):
    """ compute Jacobian of H matrix where h(x) computes
    the range and bearing to a landmark for state x """
    px = landmark_pos[0]
    py = landmark_pos[1]
    hyp = (px - x[0, 0])**2 + (py - x[1, 0])**2
    dist = sqrt(hyp)

    H = array(
        [[-(px - x[0, 0]) / dist, -(py - x[1, 0]) / dist, 0],
         [ (py - x[1, 0]) / hyp,  -(px - x[0, 0]) / hyp, -1]])
    return H


def Hx(x, landmark_pos):
    """ takes a state variable and returns the measurement
    that would correspond to that state.
    """
    px = landmark_pos[0]
    py = landmark_pos[1]
    dist = sqrt((px - x[0, 0])**2 + (py - x[1, 0])**2)

    Hx = array([[dist],
                [atan2(py - x[1, 0], px - x[0, 0]) - x[2, 0]]])
    return Hx

```

The measurement noise is represented as follows.

$$\mathbf R=\begin{bmatrix}\sigma_{range}^2 & 0 \\ 0 & \sigma_{bearing}^2\end{bmatrix}$$

### Implementation

We will implement the EKF using the `ExtendedKalmanFilter` class from `FilterPy`.

```

from math import cos, sin, tan

import numpy as np
import sympy
from filterpy.kalman import ExtendedKalmanFilter as EKF
from numpy import array, sqrt, random


class RobotEKF(EKF):
    def __init__(self, dt, wheelbase, std_vel, std_steer):
        EKF.__init__(self, 3, 2, 2)
        self.dt = dt
        self.wheelbase = wheelbase
        self.std_vel = std_vel
        self.std_steer = std_steer

        a, x, y, v, w, theta, time = sympy.symbols(
            'a, x, y, v, w, theta, t')
        d = v*time
        beta = (d/w)*sympy.tan(a)
        r = w/sympy.tan(a)

        self.fxu = sympy.Matrix(
            [[x-r*sympy.sin(theta)+r*sympy.sin(theta+beta)],
             [y+r*sympy.cos(theta)-r*sympy.cos(theta+beta)],
             [theta+beta]])

        self.F_j = self.fxu.jacobian(sympy.Matrix([x, y, theta]))
        self.V_j = self.fxu.jacobian(sympy.Matrix([v, a]))

        # save dictionary and its variables for later use
        self.subs = {x: 0, y: 0, v:0, a:0,
                     time:dt, w:wheelbase, theta:0}
        self.x_x, self.x_y, = x, y
        self.v, self.a, self.theta = v, a, theta

    def predict(self, u):
        self.x = self.move(self.x, u, self.dt)
        self.subs[self.theta] = self.x[2, 0]
        self.subs[self.v] = u[0]
        self.subs[self.a] = u[1]

        # evaluate the symbolic Jacobians at the current state and input
        F = array(self.F_j.evalf(subs=self.subs)).astype(float)
        V = array(self.V_j.evalf(subs=self.subs)).astype(float)

        # covariance of motion noise in control space
        # (the velocity term is scaled by the commanded speed: std_vel * v**2)
        M = array([[self.std_vel*u[0]**2, 0],
                   [0, self.std_steer**2]])

        self.P = F @ self.P @ F.T + V @ M @ V.T

    def move(self, x, u, dt):
        hdg = x[2, 0]
        vel = u[0]
        steering_angle = u[1]
        dist = vel * dt

        if abs(steering_angle) > 0.001: # is robot turning?
            beta = (dist / self.wheelbase) * tan(steering_angle)
            r = self.wheelbase / tan(steering_angle) # radius

            dx = np.array([[-r*sin(hdg) + r*sin(hdg + beta)],
                           [r*cos(hdg) - r*cos(hdg + beta)],
                           [beta]])
        else: # moving in straight line
            dx = np.array([[dist*cos(hdg)],
                           [dist*sin(hdg)],
                           [0]])
        return x + dx

```

To obtain a correct residual $\mathbf y$, the bearing difference is normalized to the range $-\pi \leq \phi < \pi$.

```

import numpy as np


def residual(a, b):
    """ compute residual (a-b) between measurements containing
    [range, bearing]. Bearing is normalized to [-pi, pi)"""
    y = a - b
    y[1] = y[1] % (2 * np.pi)    # force in range [0, 2 pi)
    if y[1] > np.pi:             # move to [-pi, pi)
        y[1] -= 2 * np.pi
    return y


from filterpy.stats import plot_covariance_ellipse
from math import sqrt, tan, cos, sin, atan2
import matplotlib.pyplot as plt

dt = 1.0


def z_landmark(lmark, sim_pos, std_rng, std_brg):
    # simulate a noisy [range, bearing] measurement to one landmark
    x, y = sim_pos[0, 0], sim_pos[1, 0]
    d = np.sqrt((lmark[0] - x)**2 + (lmark[1] - y)**2)
    a = atan2(lmark[1] - y, lmark[0] - x) - sim_pos[2, 0]
    z = np.array([[d + random.randn()*std_rng],
                  [a + random.randn()*std_brg]])
    return z


def ekf_update(ekf, z, landmark):
    ekf.update(z, HJacobian = H_of, Hx = Hx,
               residual=residual,
               args=(landmark), hx_args=(landmark))


def run_localization(landmarks, std_vel, std_steer,
                     std_range, std_bearing,
                     step=10, ellipse_step=20, ylim=None):
    ekf = RobotEKF(dt, wheelbase=0.5, std_vel=std_vel,
                   std_steer=std_steer)
    ekf.x = array([[2, 6, .3]]).T # x, y, heading
    ekf.P = np.diag([.1, .1, .1])
    ekf.R = np.diag([std_range**2, std_bearing**2])

    sim_pos = ekf.x.copy() # simulated position
    # steering command (vel, steering angle radians)
    u = array([1.1, .01])

    plt.figure()
    plt.scatter(landmarks[:, 0], landmarks[:, 1],
                marker='s', s=60)

    track = []
    for i in range(200):
        sim_pos = ekf.move(sim_pos, u, dt/10.) # simulate robot
        track.append(sim_pos)

        if i % step == 0:
            ekf.predict(u=u)

            if i % ellipse_step == 0:
                plot_covariance_ellipse(
                    (ekf.x[0,0], ekf.x[1,0]), ekf.P[0:2, 0:2],
                    std=6, facecolor='k', alpha=0.3)

            x, y = sim_pos[0, 0], sim_pos[1, 0]
            for lmark in landmarks:
                z = z_landmark(lmark, sim_pos,
                               std_range, std_bearing)
                ekf_update(ekf, z, lmark)

            if i % ellipse_step == 0:
                plot_covariance_ellipse(
                    (ekf.x[0,0], ekf.x[1,0]), ekf.P[0:2, 0:2],
                    std=6, facecolor='g', alpha=0.8)

    track = np.array(track)
    plt.plot(track[:, 0], track[:,1], color='k', lw=2)
    plt.axis('equal')
    plt.title("EKF Robot localization")
    if ylim is not None: plt.ylim(*ylim)
    plt.show()
    return ekf


landmarks = array([[5, 10], [10, 5], [15, 15]])

ekf = run_localization(
    landmarks, std_vel=0.1, std_steer=np.radians(1),
    std_range=0.3, std_bearing=0.1)

print('Final P:', ekf.P.diagonal())

```

## References

* Roger R Labbe, Kalman and Bayesian Filters in Python

(https://github.com/rlabbe/Kalman-and-Bayesian-Filters-in-Python/blob/master/11-Extended-Kalman-Filters.ipynb)

* https://blog.naver.com/jewdsa813/222200570774


# Method for visualizing warping over training steps

```

import os

import imageio

import numpy as np

import matplotlib.pyplot as plt

np.random.seed(0)

```

### Construct warping matrix

```

g = 1.02 # scaling parameter

# Matrix for rotating 45 degrees

rotate = np.array([[np.cos(np.pi/4), -np.sin(np.pi/4)],

[np.sin(np.pi/4), np.cos(np.pi/4)]])

# Matrix for scaling along x coordinate

scale_x = np.array([[g, 0],

[0, 1]])

# Matrix for scaling along y coordinate

scale_y = np.array([[1, 0],

[0, g]])

# Matrix for unrotating (-45 degrees)

unrotate = np.array([[np.cos(-np.pi/4), -np.sin(-np.pi/4)],

[np.sin(-np.pi/4), np.cos(-np.pi/4)]])

# Warping matrix

warp = rotate @ scale_x @ unrotate

# Unwarping matrix

unwarp = rotate @ scale_y @ unrotate

```
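The following sketch checks what the two matrices above do geometrically, assuming the same `g` and 45-degree rotation. It suggests that `warp` stretches the $(1, 1)$ diagonal by `g` while leaving the $(-1, 1)$ diagonal unchanged, and that `unwarp` is not the matrix inverse of `warp`: applying both gives a uniform scaling by `g`. The names `diag_main`, `diag_anti`, `stretched`, `unchanged`, and `combined` are introduced here for illustration only.

```python
import numpy as np

g = 1.02  # same scaling parameter as above
c, s = np.cos(np.pi / 4), np.sin(np.pi / 4)
rotate = np.array([[c, -s], [s, c]])
unrotate = rotate.T  # rotation by -45 degrees

warp = rotate @ np.diag([g, 1.0]) @ unrotate
unwarp = rotate @ np.diag([1.0, g]) @ unrotate

diag_main = np.array([1.0, 1.0]) / np.sqrt(2)   # direction of the (1, 1) diagonal
diag_anti = np.array([-1.0, 1.0]) / np.sqrt(2)  # direction of the (-1, 1) diagonal

stretched = warp @ diag_main   # scaled by g
unchanged = warp @ diag_anti   # left as is
combined = warp @ unwarp       # uniform scaling: g times the identity
```

Under this reading, after an equal number of warp and unwarp steps the grid recovers its square shape but ends up larger by a factor of `g` per step pair.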

### Warp grid slowly over time

```

# Construct 4x4 grid

s = 1 # initial scale

locs = [[x,y] for x in range(4) for y in range(4)]

grid = s*np.array(locs)

# Matrix to collect data

n_steps = 50

warp_data = np.zeros([n_steps, 16, 2])

# Initial timestep has no warping

warp_data[0,:,:] = grid

# Warp slowly over time

for i in range(1,n_steps):
    grid = grid @ warp
    warp_data[i,:,:] = grid

fig, ax = plt.subplots(1,3, figsize=(15, 5), sharex=True, sharey=True)

ax[0].scatter(warp_data[0,:,0], warp_data[0,:,1])

ax[0].set_title("Warping: Step 0")

ax[1].scatter(warp_data[n_steps//2,:,0], warp_data[n_steps//2,:,1])

ax[1].set_title("Warping: Step {}".format(n_steps//2))

ax[2].scatter(warp_data[n_steps-1,:,0], warp_data[n_steps-1,:,1])

ax[2].set_title("Warping: Step {}".format(n_steps-1))

plt.show()

```

### Unwarp grid slowly over time

```

# Matrix to collect data

unwarp_data = np.zeros([n_steps, 16, 2])

# Start with warped grid

unwarp_data[0,:,:] = grid

# Unwarp slowly over time

for i in range(1,n_steps):
    grid = grid @ unwarp
    unwarp_data[i,:,:] = grid

fig, ax = plt.subplots(1,3, figsize=(15, 5), sharex=True, sharey=True)

ax[0].scatter(unwarp_data[0,:,0], unwarp_data[0,:,1])

ax[0].set_title("Unwarping: Step 0")

# ax[0].set_ylim([-0.02, 0.05])

# ax[0].set_xlim([-0.02, 0.05])

ax[1].scatter(unwarp_data[n_steps//2,:,0], unwarp_data[n_steps//2,:,1])

ax[1].set_title("Unwarping: Step {}".format(n_steps//2))

ax[2].scatter(unwarp_data[n_steps-1,:,0], unwarp_data[n_steps-1,:,1])

ax[2].set_title("Unwarping: Step {}".format(n_steps-1))

plt.show()

```

### High-dimensional vectors with random projection matrix

```

# data = [warp_data, unwarp_data]

data = np.concatenate([warp_data, unwarp_data], axis=0)

# Random projection matrix

hidden_dim = 32

random_mat = np.random.randn(2, hidden_dim)

data = data @ random_mat

# Add noise to each time step

sigma = 0.2

noise = sigma*np.random.randn(2*n_steps, 16, hidden_dim)

data = data + noise

```

### Parameterize scatterplot with average "congruent" and "incongruent" distances

```

# Map each grid index to its (x, y) location, and back
idx2loc = {i:(loc[0],loc[1]) for i,loc in enumerate(locs)}
loc2idx = {v:k for k,v in idx2loc.items()}

```

Function for computing distance matrix

```

def get_distances(M):
    n,m = M.shape
    D = np.zeros([n,n])
    for i in range(n):
        for j in range(n):
            D[i,j] = np.linalg.norm(M[i,:] - M[j,:])
    return D

D = get_distances(data[0])

plt.imshow(D)

plt.show()

```

Construct same-rank groups for "congruent" and "incongruent" diagonals

```

c_rank = np.array([loc[0] + loc[1] for loc in locs]) # rank along "congruent" diagonal

i_rank = np.array([3 + loc[0] - loc[1] for loc in locs]) # rank along "incongruent" diagonal

G_idxs = [] # same-rank group for "congruent" diagonal

H_idxs = [] # same-rank group for "incongruent" diagonal

for i in range(7): # total number of ranks (0 through 6)
    G_set = [j for j in range(len(c_rank)) if c_rank[j] == i]
    H_set = [j for j in range(len(i_rank)) if i_rank[j] == i]
    G_idxs.append(G_set)
    H_idxs.append(H_set)

```

Function for estimating $ \alpha $ and $ \beta $

$$ \bar{x_i} = \sum_{x \in G_i} \frac{1}{n} x $$

$$ \alpha_{i, i+1} = || \bar{x}_i - \bar{x}_{i+1} || $$

$$ \bar{y_i} = \sum_{y \in H_i} \frac{1}{n} y $$

$$ \beta_{i, i+1} = || \bar{y}_i - \bar{y}_{i+1} || $$

```

def get_parameters(M):
    # M: [16, hidden_dim]
    alpha = []
    beta = []
    for i in range(6): # total number of parameters (01,12,23,34,45,56)
        # alpha_{i, i+1}
        x_bar_i = np.mean(M[G_idxs[i],:], axis=0)
        x_bar_ip1 = np.mean(M[G_idxs[i+1],:], axis=0)
        x_dist = np.linalg.norm(x_bar_i - x_bar_ip1)
        alpha.append(x_dist)

        # beta_{i, i+1}
        y_bar_i = np.mean(M[H_idxs[i],:], axis=0)
        y_bar_ip1 = np.mean(M[H_idxs[i+1],:], axis=0)
        y_dist = np.linalg.norm(y_bar_i - y_bar_ip1)
        beta.append(y_dist)
    return alpha, beta

alpha_data = []
beta_data = []
for t in range(len(data)):
    alpha, beta = get_parameters(data[t])
    alpha_data.append(alpha)
    beta_data.append(beta)

plt.plot(alpha_data, color='tab:blue')

plt.plot(beta_data, color='tab:orange')

plt.show()

```

Use parameters to plot idealized 2D representations

```

idx2g = {}
for idx in range(16):
    for g, group in enumerate(G_idxs):
        if idx in group:
            idx2g[idx] = g

idx2h = {}
for idx in range(16):
    for h, group in enumerate(H_idxs):
        if idx in group:
            idx2h[idx] = h

def generate_grid(alpha, beta):
    cum_alpha = np.zeros(7)
    cum_beta = np.zeros(7)
    cum_alpha[1:] = np.cumsum(alpha)
    cum_beta[1:] = np.cumsum(beta)

    # Get x and y coordinate in rotated basis
    X = np.zeros([16, 2])
    for idx in range(16):
        g = idx2g[idx] # G group
        h = idx2h[idx] # H group
        X[idx,0] = cum_alpha[g] # x coordinate
        X[idx,1] = cum_beta[h] # y coordinate

    # Unrotate
    unrotate = np.array([[np.cos(-np.pi/4), -np.sin(-np.pi/4)],
                         [np.sin(-np.pi/4), np.cos(-np.pi/4)]])
    X = X @ unrotate

    # Mean-center
    X = X - np.mean(X, axis=0, keepdims=True)
    return X

X = generate_grid(alpha, beta)

```

Get reconstructed grid for each time step

```

reconstruction = np.zeros([data.shape[0], data.shape[1], 2])

for t,M in enumerate(data):
    alpha, beta = get_parameters(M)
    X = generate_grid(alpha, beta)
    reconstruction[t,:,:] = X

t = 50

plt.scatter(reconstruction[t,:,0], reconstruction[t,:,1])

plt.show()

```

### Make .gif

```

plt.scatter(M[:,0], M[:,1])

reconstruction.shape

xmin = np.min(reconstruction[:,:,0])

xmax = np.max(reconstruction[:,:,0])

ymin = np.min(reconstruction[:,:,1])

ymax = np.max(reconstruction[:,:,1])

for t,M in enumerate(reconstruction):
    plt.scatter(M[:,0], M[:,1])
    plt.title("Reconstructed grid")
    plt.xlim([xmin-1.5, xmax+1.5])
    plt.ylim([ymin-1.5, ymax+1.5])
    plt.xticks([])
    plt.yticks([])
    plt.tight_layout()
    plt.savefig('reconstruction_test_{}.png'.format(t), dpi=100)
    plt.show()

filenames = ['reconstruction_test_{}.png'.format(i) for i in range(2*n_steps)]

with imageio.get_writer('reconstruction_test.gif', mode='I') as writer:
    for filename in filenames:
        image = imageio.imread(filename)
        writer.append_data(image)

# remove files
for filename in filenames:
    os.remove(filename)

```

<img src="reconstruction_test.gif" width="750" align="center">


# Documenting Classes

It is almost as easy to document a class as it is to document a function. Simply add docstrings to all of the class's functions, and also below the class name itself. For example, here is a simple documented class:

```

class Demo:
    """This class demonstrates how to document a class.

       This class is just a demonstration, and does nothing.

       However the principles of documentation are still valid!
    """

    def __init__(self, name):
        """You should document the constructor, saying what it expects to
           create a valid class. In this case

           name -- the name of an object of this class
        """
        self._name = name

    def getName(self):
        """You should then document all of the member functions, just as
           you do for normal functions. In this case, returns
           the name of the object
        """
        return self._name

d = Demo("cat")

help(d)

```

Often, when you write a class, you want to hide member data or member functions so that they are only visible within an object of the class. For example, above, the `self._name` member data should be hidden, as it should only be used by the object.

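You control the visibility of member functions or member data using an underscore. If the member function or member data name starts with an underscore, then it is hidden: it no longer appears in the output of `help()`, and by convention other code should not use it. (Python does not enforce this, so the name can still be accessed from outside the class.) Otherwise, the member data or function is visible.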

For example, we can hide the `getName` function by renaming it to `_getName`

```

class Demo:
    """This class demonstrates how to document a class.

       This class is just a demonstration, and does nothing.

       However the principles of documentation are still valid!
    """

    def __init__(self, name):
        """You should document the constructor, saying what it expects to
           create a valid class. In this case

           name -- the name of an object of this class
        """
        self._name = name

    def _getName(self):
        """You should then document all of the member functions, just as
           you do for normal functions. In this case, returns
           the name of the object
        """
        return self._name

d = Demo("cat")

help(d)

```

Member functions or data that are hidden are called "private". Member functions or data that are visible are called "public". You should document all public member functions of a class, as these are visible and designed to be used by other people. It is helpful, although not required, to document all of the private member functions of a class, as these will only really be called by you. However, in years to come, you will thank yourself if you still documented them... ;-)

While it is possible to make member data public, it is not advised. It is much better to get and set values of member data using public member functions. This makes it easier for you to add checks to ensure that the data is consistent and being used in the right way. For example, compare these two classes that represent a person, and hold their height.

```

class Person1:
    """Class that holds a person's height"""
    def __init__(self):
        """Construct a person who has zero height"""
        self.height = 0

class Person2:
    """Class that holds a person's height"""
    def __init__(self):
        """Construct a person who has zero height"""
        self._height = 0

    def setHeight(self, height):
        """Set the person's height to 'height', returning whether or
           not the height was set successfully
        """
        # reject non-numeric values first, so that comparing e.g. a
        # string with a number does not raise a TypeError
        if not isinstance(height, (int, float)) or height < 0 or height > 300:
            print("This is an invalid height! %s" % height)
            return False
        else:
            self._height = height
            return True

    def getHeight(self):
        """Return the person's height"""
        return self._height

```

The first example is quicker to write, but it does little to protect itself against a user who attempts to use the class badly.

```

p = Person1()

p.height = -50

p.height

p.height = "cat"

p.height

```

The second example takes more lines of code, but these lines are valuable as they check that the user is using the class correctly. These checks, when combined with good documentation, ensure that your classes can be safely used by others, and that incorrect use will not create difficult-to-find bugs.

```

p = Person2()

p.setHeight(-50)

p.getHeight()

p.setHeight("cat")

p.getHeight()

```
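As an aside, Python's built-in `property` decorator offers another way to get the same protection while keeping attribute-style access. The sketch below is only one possible design: the class name `Person3` is hypothetical, and raising exceptions instead of returning `False` is an assumption made for this example.

```python
class Person3:
    """Class that holds a person's height, validated through a property"""

    def __init__(self):
        """Construct a person who has zero height"""
        self._height = 0

    @property
    def height(self):
        """Return the person's height"""
        return self._height

    @height.setter
    def height(self, value):
        """Set the height, rejecting non-numeric or out-of-range values"""
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise TypeError("height must be a number, got %r" % (value,))
        if value < 0 or value > 300:
            raise ValueError("This is an invalid height! %s" % value)
        self._height = value


p = Person3()
p.height = 180          # goes through the setter and is accepted

try:
    p.height = -50      # rejected by the range check
except ValueError:
    pass                # the stored height is left unchanged
```

With this approach, `p.height` reads like public member data, but every assignment still passes through the validation code.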

# Exercise

## Exercise 1

Below is the completed `GuessGame` class from the previous lesson. Add documentation to this class.

```

class GuessGame:
    """
       This class provides a simple guessing game. You create an object
       of the class with its own secret, with the aim that a user
       then needs to try to guess what the secret is.
    """
    def __init__(self, secret, max_guesses=5):
        """Create a new guess game

           secret -- the secret that must be guessed
           max_guesses -- the maximum number of guesses allowed by the user
        """
        self._secret = secret
        self._nguesses = 0
        self._max_guesses = max_guesses

    def guess(self, value):
        """Try to guess the secret. This will print out to the screen whether
           or not the secret has been guessed.

           value -- the user-supplied guess
        """
        if (self.nGuesses() >= self.maxGuesses()):
            print("Sorry, you have run out of guesses")
        elif (value == self._secret):
            print("Well done - you have guessed my secret")
        else:
            self._nguesses += 1
            print("Try again...")

    def nGuesses(self):
        """Return the number of incorrect guesses made so far"""
        return self._nguesses

    def maxGuesses(self):
        """Return the maximum number of incorrect guesses allowed"""
        return self._max_guesses

help(GuessGame)

```

## Exercise 2

Below is a poorly-written class that uses public member data to store the name and age of a Person. Edit this class so that the member data is made private. Add `get` and `set` functions that allow you to safely get and set the name and age.

```

class Person:
    """Class that represents a Person, holding their name and age"""
    def __init__(self, name="unknown", age=0):
        """Construct a person with unknown name and an age of 0"""
        self._age = 0  # default, kept if setAge rejects the value
        self.setName(name)
        self.setAge(age)

    def setName(self, name):
        """Set the person's name to 'name'"""
        self._name = str(name)  # str ensures the name is a string

    def getName(self):
        """Return the person's name"""
        return self._name

    def setAge(self, age):
        """Set the person's age. This must be a number between 0 and 130"""
        if (age < 0 or age > 130):
            print("Cannot set the age to an invalid value: %s" % age)
        else:
            # only store the age when it passes the range check
            self._age = age

    def getAge(self):
        """Return the person's age"""
        return self._age

p = Person(name="Peter Parker", age=21)

p.getName()

p.getAge()

```

## Exercise 3

Add a private member function called `_splitName` to your `Person` class that breaks the name into a surname and first name. Add new functions called `getFirstName` and `getSurname` that use this function to return the first name and surname of the person.

```

class Person:
    """Class that represents a Person, holding their name and age"""
    def __init__(self, name="unknown", age=0):
        """Construct a person with unknown name and an age of 0"""
        self._age = 0  # default, kept if setAge rejects the value
        self.setName(name)
        self.setAge(age)

    def setName(self, name):
        """Set the person's name to 'name'"""
        self._name = str(name)  # str ensures the name is a string

    def getName(self):
        """Return the person's name"""
        return self._name

    def setAge(self, age):
        """Set the person's age. This must be a number between 0 and 130"""
        if (age < 0 or age > 130):
            print("Cannot set the age to an invalid value: %s" % age)
        else:
            # only store the age when it passes the range check
            self._age = age

    def getAge(self):
        """Return the person's age"""
        return self._age

    def _splitName(self):
        """Private function that splits the name into parts"""
        return self._name.split(" ")

    def getFirstName(self):
        """Return the first name of the person"""
        return self._splitName()[0]

    def getSurname(self):
        """Return the surname of the person"""
        return self._splitName()[-1]

p = Person(name="Peter Parker", age=21)

p.getFirstName()

p.getSurname()

```


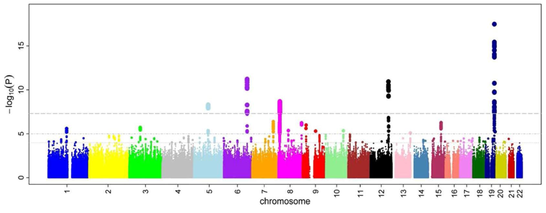

# Lab 4: EM Algorithm and Single-Cell RNA-seq Data

### Name: Your Name Here (Your netid here)

### Due April 2, 2021 11:59 PM

#### Preamble (Don't change this)

## Important Instructions -

1. Please implement all the *graded functions* in main.py file. Do not change function names in main.py.

2. Please read the description of every graded function very carefully. The description clearly states what is the expectation of each graded function.

3. After some graded functions, there is a cell which you can run and see if the expected output matches the output you are getting.

4. The expected output provided is just a way for you to assess the correctness of your code. The code will be tested on several other cases as well.

```

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

%run main.py

module = Lab4()

```

## Part 1 : Expectation-Maximization (EM) algorithm for transcript quantification

## Introduction

The EM algorithm is a very helpful tool to compute maximum likelihood estimates of parameters in models that have some latent (hidden) variables.

In the case of the transcript quantification problem, the model parameters we want to estimate are the transcript relative abundances $\rho_1,...,\rho_K$.

The latent variables are the read-to-transcript indicator variables $Z_{ik}$, which indicate whether the $i$th read comes from the $k$th transcript (in which case $Z_{ik}=1$).

In this part of the lab, you will be given the read alignment data.

For each read and transcript pair, it tells you whether the read can be mapped (i.e., aligned) to that transcript.

Using the EM algorithm, you will estimate the relative abundances of the transcripts.

### Reading read transcript data - We have 30000 reads and 30 transcripts

```

n_reads=30000

n_transcripts=30

read_mapping=[]

with open("read_mapping_data.txt",'r') as file :
    lines_reads=file.readlines()
for line in lines_reads :
    read_mapping.append([int(x) for x in line.split(",")])

read_mapping[:10]

```

Rather than giving you a giant binary matrix, we encoded the read mapping data in a more concise way. read_mapping is a list of lists. The $i$th list contains the indices of the transcripts that the $i$th read maps to.
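To illustrate the encoding, the sketch below expands a small list-of-lists mapping into the equivalent binary read-by-transcript matrix. The helper `to_indicator_matrix` and the toy data are hypothetical, and the sketch assumes that transcript indices start at 0.

```python
def to_indicator_matrix(read_mapping, n_transcripts):
    """Expand the list-of-lists encoding into a 0/1 compatibility matrix."""
    matrix = []
    for transcripts in read_mapping:
        row = [0] * n_transcripts
        for k in transcripts:
            row[k] = 1  # read is compatible with transcript k
        matrix.append(row)
    return matrix


# hypothetical toy data: 3 reads, 3 transcripts (0-based indices assumed)
toy_mapping = [[0, 2], [1], [0, 1, 2]]
toy_matrix = to_indicator_matrix(toy_mapping, 3)
```

For the real data this matrix would have 30000 rows and 30 columns, which is why the compact encoding is used instead.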

### Reading true abundances and transcript lengths

```

with open("transcript_true_abundances.txt",'r') as file :
    lines_gt=file.readlines()
ground_truth=[float(x) for x in lines_gt[0].split(",")]

with open("transcript_lengths.txt",'r') as file :
    lines_gt=file.readlines()
tr_lengths=[float(x) for x in lines_gt[0].split(",")]

ground_truth[:5]

tr_lengths[:5]

```

## Graded Function 1 : expectation_maximization (10 marks)

Purpose : To implement the EM algorithm to obtain abundance estimates for each transcript.

E-step : In this step, we calculate the fraction of each read that is assigned to each transcript (i.e., the estimate of $Z_{ik}$). For read $i$ and transcript $k$, this is calculated by dividing the current abundance estimate of transcript $k$ by the sum of abundance estimates of all transcripts that read $i$ maps to.

M-step : In this step, we update the abundance estimate of each transcript based on the fraction of all reads that is currently assigned to the transcript. First we compute the average fraction of all reads assigned to the transcript. Then, (if transcripts are of different lengths) we divide the result by the transcript length.

Finally, we normalize all abundance estimates so that they add up to 1.

Inputs - read_mapping (a list of lists where each sublist contains the transcripts that a particular read maps to; the length of this list is equal to the number of reads, i.e. 30000); tr_lengths (a list containing the lengths of the 30 transcripts, in order); n_iterations (the number of EM iterations to be performed)

Output - a list of lists where each sublist contains the abundance estimates for a transcript across all iterations. The length of each sublist should be equal to the number of iterations plus one (for the initialization) and the total number of sublists should be equal to the number of transcripts.
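The E-step and M-step described above can be sketched on a toy problem as follows. This is only one reading of the description, not the reference solution: the function name `em_sketch`, the uniform initialization, and the toy data are assumptions, and the sketch assumes every read maps to at least one transcript with a nonzero abundance estimate.

```python
def em_sketch(read_mapping, tr_lengths, n_iterations):
    """Toy EM for transcript abundances; returns one history list per transcript."""
    n_tr = len(tr_lengths)
    n_reads = len(read_mapping)
    rho = [1.0 / n_tr] * n_tr          # assumed uniform initialization
    history = [[r] for r in rho]
    for _ in range(n_iterations):
        # E-step: split each read across the transcripts it maps to,
        # in proportion to the current abundance estimates
        assigned = [0.0] * n_tr
        for transcripts in read_mapping:
            total = sum(rho[k] for k in transcripts)
            for k in transcripts:
                assigned[k] += rho[k] / total
        # M-step: average fraction of reads, divided by transcript length
        rho = [assigned[k] / n_reads / tr_lengths[k] for k in range(n_tr)]
        # normalize so that the abundances sum to 1
        norm = sum(rho)
        rho = [r / norm for r in rho]
        for k in range(n_tr):
            history[k].append(rho[k])
    return history


# hypothetical toy data: 4 reads, 2 transcripts of equal length
toy_history = em_sketch([[0], [0], [0, 1], [1]], [1.0, 1.0], 50)
```

In this toy case the update for the first transcript reduces to $\rho_0 \leftarrow (2 + \rho_0)/4$, so the estimates approach $2/3$ and $1/3$.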

```

history=module.expectation_maximization(read_mapping,tr_lengths,20)

print(len(history))

print(len(history[0]))

print(history[0][-5:])

print(history[1][-5:])

print(history[2][-5:])

```

## Expected Output -

30

21

[0.033769639494636614, 0.03381298624783303, 0.03384568373972948, 0.0338703482393148, 0.03388895326082054]

[0.0020082674603036053, 0.0019649207071071456, 0.0019322232152109925, 0.0019075587156241912, 0.0018889536941198502]

[0.0660581789629968, 0.06606927656035864, 0.06607650126895578, 0.06608120466668756, 0.0660842666518177]

You can use the following function to visualize how the estimated relative abundances are converging with the number of iterations of the algorithm.

```

def visualize_em(history,n_iterations) :
    #start code here
    fig, ax = plt.subplots(figsize=(8,6))
    for j in range(n_transcripts):
        ax.plot([i for i in range(n_iterations+1)],[history[j][i] - ground_truth[j] for i in range(n_iterations+1)],marker='o')
    #end code here

visualize_em(history,20)

```

## Part 2 : Exploring Single-Cell RNA-seq data

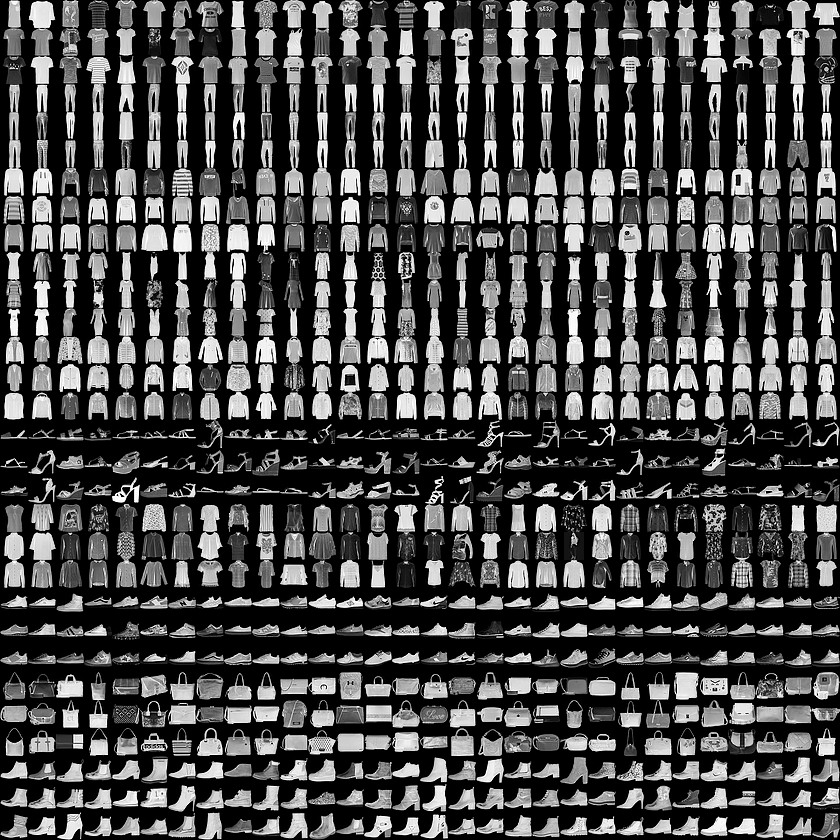

In a study published in 2015, Zeisel et al. used single-cell RNA-seq data to explore the cell diversity in the mouse brain.

We will explore the data used for their study.

You can read more about it [here](https://science.sciencemag.org/content/347/6226/1138).

```

#reading single-cell RNA-seq data

lines_genes=[]

with open("Zeisel_expr.txt",'r') as file :
    lines_genes=file.readlines()

lines_genes[0][:300]

```

Each line in the file Zeisel_expr.txt corresponds to one gene.

The columns correspond to different cells (notice that this is the opposite of how we looked at this matrix in class).

The entries of this matrix correspond to the number of reads mapping to a given gene in the corresponding cell.

```

# reading true labels for each cell

with open("Zeisel_labels.txt",'r') as file :
    true_labels = file.read().splitlines()

```

The study also provides us with true labels for each of the cells.

For each of the cells, the vector true_labels contains the name of the cell type.

There are nine different cell types in this dataset.

```

set(true_labels)

```

## Graded Function 2 : prepare_data (10 marks) :

Purpose - To create a dataframe where each row corresponds to a specific cell and each column corresponds to the expression levels of a particular gene across all cells.

You should name the columns as "Gene_0", "Gene_1", and so on, as in the expected output below.

We will iterate through all the lines in lines_genes list created above, add 1 to each value and take log.

Each line will correspond to 1 column in the dataframe

Output - gene expression dataframe

### Note - All the values in the output dataframe should be rounded off to 5 digits after the decimal
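The transformation of a single gene's counts can be sketched as follows. The helper `log_transform` and the toy counts are hypothetical, and the sketch assumes the natural logarithm, which is consistent with values such as 0.69315 and 1.38629 in the expected output.

```python
import math


def log_transform(counts, ndigits=5):
    """Return log(1 + count) for each count, rounded to ndigits decimals."""
    return [round(math.log(1 + c), ndigits) for c in counts]


toy_counts = [0, 3, 1, 6]  # hypothetical read counts for one gene
transformed = log_transform(toy_counts)
```

Adding 1 before taking the log keeps zero counts at zero and avoids taking the log of 0.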

```

data_df=module.prepare_data(lines_genes)

print(data_df.shape)

print(data_df.iloc[0:3,:5])

print(data_df.columns)

```

## Expected Output :

``(3005, 19972)``

`` Gene_0 Gene_1 Gene_2 Gene_3 Gene_4``

``0 0.0 1.38629 1.38629 0.0 0.69315``

``1 0.0 0.69315 0.69315 0.0 0.69315``

``2 0.0 0.00000 1.94591 0.0 0.69315``

## Graded Function 3 : identify_less_expressive_genes (10 marks)

Purpose : To identify genes (columns) that are expressed in fewer than 25 cells. We will create a list of all gene columns that have values greater than 0 in fewer than 25 cells.

Input - gene expression dataframe

Output - list of names of the columns (genes) that are expressed in fewer than 25 cells
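One possible way to express this filter with pandas is sketched below on a toy dataframe. The names `toy_expr`, `min_cells`, and `low_genes` are made up for the illustration, and the threshold is lowered to 2 so the effect is visible on four cells.

```python
import pandas as pd

toy_expr = pd.DataFrame({
    "Gene_0": [0.0, 0.0, 0.0, 0.69315],
    "Gene_1": [1.38629, 0.69315, 0.0, 0.69315],
    "Gene_2": [0.0, 0.0, 0.0, 0.0],
})
min_cells = 2  # toy threshold; the assignment uses 25

# Count, for every column, how many cells have a value greater than 0.
cells_expressing = (toy_expr > 0).sum(axis=0)

# Keep the names of the columns that fall below the threshold.
low_genes = cells_expressing[cells_expressing < min_cells].index.tolist()
print(low_genes)
```

Comparing the dataframe with 0 gives a boolean table, and summing it column-wise counts the expressing cells without an explicit loop.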

```

drop_columns = module.identify_less_expressive_genes(data_df)

print(len(drop_columns))

print(drop_columns[:10])

```

## Expected Output :

``5120``

``['Gene_28', 'Gene_126', 'Gene_145', 'Gene_146', 'Gene_151', 'Gene_152', 'Gene_167', 'Gene_168', 'Gene_170', 'Gene_173']``

### Filtering less expressive genes

We will now create a new dataframe that excludes the genes expressed in fewer than 25 cells.

```

df_new = data_df.drop(drop_columns, axis=1)

df_new.head()

```

## Graded Function 4 : perform_pca (10 marks)

Purpose - Perform Principal Component Analysis on the new dataframe and take the top 50 principal components

Input - df_new

Output - numpy array containing the top 50 principal components of the data.

### Note - All the values in the output should be rounded off to 5 digits after the decimal

### Note - Please use random_state=365 for the PCA object you will create
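As a rough illustration of the scikit-learn calls involved, the sketch below runs PCA on random toy data. The array `toy_matrix` and the choice of 5 components are assumptions made to keep the example small; the assignment itself asks for 50 components on df_new.

```python
import numpy as np
from sklearn.decomposition import PCA

# Toy stand-in for the expression matrix: 100 "cells" x 20 "genes".
rng = np.random.default_rng(0)
toy_matrix = rng.normal(size=(100, 20))

# random_state fixes any randomized solver so repeated runs agree.
pca = PCA(n_components=5, random_state=365)

# fit_transform returns the data projected onto the top components.
toy_components = np.round(pca.fit_transform(toy_matrix), 5)
print(toy_components.shape)
```

The returned array has one row per cell and one column per retained component, ordered by decreasing explained variance.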

```

pca_data=module.perform_pca(df_new)

print(pca_data.shape)

print(type(pca_data))

print(pca_data[0:3,:5])

```

## Expected Output :

``(3005, 50)``

``<class 'numpy.ndarray'>``

``[[26.97148 -2.7244 0.62163 25.90148 -6.24736]``

`` [26.49135 -1.58774 -4.79315 24.01094 -7.25618]``

`` [47.82664 5.06799 2.15177 30.24367 -3.38878]]``

## (Non-graded) Function 5 : perform_tsne

Purpose - Perform t-SNE on pca_data and obtain 2 t-SNE components

We will use the TSNE class of the sklearn.manifold package. Use random_state=1000 and perplexity=50

Documentation can be found here - https://scikit-learn.org/stable/modules/generated/sklearn.manifold.TSNE.html

Input - pca_data

Output - numpy array containing the top 2 tsne components of the data.

**Note: This function will not be graded because of the random nature of t-SNE.**
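A minimal sketch of the TSNE call with the settings named above, run on random toy data rather than the real pca_data. The array `toy_pca` is an assumption for illustration; it has 120 rows because the perplexity must be smaller than the number of samples.

```python
import numpy as np
from sklearn.manifold import TSNE

# Toy stand-in for pca_data: 120 "cells" x 10 principal components.
rng = np.random.default_rng(0)
toy_pca = rng.normal(size=(120, 10))

# Two output dimensions, with the perplexity and seed given in the text.
tsne = TSNE(n_components=2, perplexity=50, random_state=1000)
toy_embedding = tsne.fit_transform(toy_pca)
print(toy_embedding.shape)
```

Even with a fixed random_state, the exact coordinates can differ between sklearn versions, which is why only the shape is worth checking.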

```

tsne_data50 = module.perform_tsne(pca_data)

print(tsne_data50.shape)

print(tsne_data50[:3,:])

```

## Expected Output :

(These numbers can deviate a bit depending on your sklearn version)

``(3005, 2)``

``[[ 15.069608 -47.535984]``

`` [ 15.251476 -47.172073]``

`` [ 13.3932 -49.909657]]``

```

fig, ax = plt.subplots(figsize=(12,8))

sns.scatterplot(x=tsne_data50[:,0], y=tsne_data50[:,1], hue=true_labels, ax=ax)

plt.show()

```

Notice that the different cell types form clusters (which can be easily visualized on the t-SNE space).

Zeisel et al. performed clustering on this data in order to identify and label the different cell types.

You can try using clustering methods (such as k-means and GMM) to cluster the single-cell RNA-seq data of Zeisel et al. and see if your results agree with theirs!
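As a starting point for that experiment, here is a hedged sketch of k-means on synthetic, well-separated 2-D points, scored against known labels with the adjusted Rand index. The toy data, the choice of 3 clusters, and all variable names are assumptions; for the real data you would cluster pca_data (or the t-SNE coordinates) and compare against true_labels.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.metrics import adjusted_rand_score

# Three synthetic groups of 50 points each, far apart from one another.
rng = np.random.default_rng(0)
centers = np.array([[0, 0], [10, 10], [-10, 10]])
toy_points = np.vstack([c + rng.normal(size=(50, 2)) for c in centers])
toy_labels = np.repeat(["a", "b", "c"], 50)

# Cluster without looking at the labels.
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0)
predicted = kmeans.fit_predict(toy_points)

# The adjusted Rand index is 1.0 for a perfect match, near 0 for random.
ari = adjusted_rand_score(toy_labels, predicted)
print(round(ari, 3))
```

The adjusted Rand index ignores how clusters are numbered, so it is a convenient way to compare cluster assignments with named cell types.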

| github_jupyter |

# Week 2 Tasks

During this week's meeting, we discussed if/else statements, loops and lists. This notebook will guide you through a review of those topics and help you become familiar with the concepts.

## Let's first create a list

```

# Create a list that stores the multiples of 5, from 0 to 50 (inclusive)

# initialize the list using list comprehension!

# Set the list name to be 'l'

# TODO: Make the cell return 'True'

# Hint: Do you remember that you can apply arithmetic operators in the list comprehension?

# Your code goes below here

# Do not modify below

l == [0, 5, 10, 15, 20, 25, 30, 35, 40, 45, 50]

```

If you are eager to learn more about list comprehension, you can look it up here -> https://www.programiz.com/python-programming/list-comprehension. You will find out how you can initialize `l` using conditionals (if/else) instead of arithmetic operators.
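To make the two styles concrete, the short sketch below builds a different list (multiples of 3, chosen so it does not give away the task above) both ways, and also shows an if/else inside the expression part. All variable names are made up for the illustration.

```python
# Arithmetic in the expression part of the comprehension.
by_arithmetic = [3 * i for i in range(5)]

# A trailing "if" filters which elements are kept.
by_filter = [i for i in range(13) if i % 3 == 0]

# An if/else before "for" chooses the value of each element.
parity = ["even" if i % 2 == 0 else "odd" for i in range(4)]

print(by_arithmetic)
print(by_filter)
print(parity)
```

Both of the first two comprehensions produce `[0, 3, 6, 9, 12]`. The trailing `if` decides whether an element appears at all, while the `if/else` form decides what each element becomes.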

Now, simply run the cell below, and observe how `l` has changed.

```

l[0] = 3

print(l)

l[5]

```

As seen above, you can overwrite each element of the list.

Using this fact, complete the task written below.

## If/elif/else practice

```

# Write a for loop such that:

# For each element in the list l,

# If the element is divisible by 6, divide the element by 6

# Else if the element is divisible by 3, divide the element by 3 and then add 4

# Else if the element is divisible by 2, subtract 10.

# Else, square the element

# TODO: Make the cell return 'True'

l = [3, 5, 10, 15, 20, 25, 30, 35, 40, 45, 50]

# Your code goes below here

# Do not modify below

l = [int(i) for i in l]

l == [5, 25, 0, 9, 10, 625, 5, 1225, 30, 19, 40]

```

## Limitations of a ternary operator

```

# Write a for loop that counts the number of odd number elements in the list

# and the number of even number elements in the list

# These should be stored in the variables 'odd_count' and 'even_count', which are declared below.

# Try to use the ternary operator inside the for loop and inspect why it does not work

# TODO: Make the cell return 'True'

l = [5, 25, 0, 9, 10, 625, 5, 1225, 30, 19, 40]

odd_count, even_count = 0, 0

# Your code goes below here

# Do not modify below

print("There are 7 odd numbers in the list.") if odd_count == 7 else print("Your odd_count is not correct.")

print("There are 4 even numbers in the list.") if even_count == 4 else print("Your even_count is not correct.")

print(odd_count == 7 and even_count == 4 and odd_count + even_count == len(l))

```

If you tried using the ternary operator in the cell above, you would have found that the cell fails to run because of a syntax error. This is because every segment of a ternary operator must be an *expression*, not a *statement*, and the mistake usually appears in **the last of the three segments**.

In other words, since the last part of your code would have been something like `odd_count += 1` or `even_count += 1`, which is a *statement*, the code is syntactically incorrect.

To learn more about *expressions* and *statements*, please refer to this webpage -> https://runestone.academy/runestone/books/published/thinkcspy/SimplePythonData/StatementsandExpressions.html

Thus, code like `a += 1 if <CONDITION> else b += 1` is syntactically wrong because `b += 1` is a *statement*, and we cannot use the ternary operator to achieve something like this.

In fact, ternary operators are usually used like this: `a += 1 if <CONDITION> else 0`.

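The code above behaves exactly the same as this: `if <CONDITION>: a += 1 else: a += 0`, because the ternary expression `1 if <CONDITION> else 0` is evaluated first and its value is then added to `a`.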

Does this give you a better understanding of why statements cannot be used in ternary operators? If not, feel free to do more research on your own, or open up a discussion during the next team meeting!
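The difference can be checked directly. The sketch below uses a small made-up list and hypothetical names (`values`, `n_odd`, `n_even`): the expression form works, while the statement form is rejected by Python's parser before anything runs.

```python
values = [1, 2, 3, 4, 6]
n_odd, n_even = 0, 0

for v in values:
    # Each right-hand side is a single expression that evaluates to 1 or 0.
    n_odd += 1 if v % 2 == 1 else 0
    n_even += 1 if v % 2 == 0 else 0

# The statement form cannot even be compiled, so test it as a string.
try:
    compile("a += 1 if True else b += 1", "<sketch>", "exec")
    statement_form_ok = True
except SyntaxError:
    statement_form_ok = False

print(n_odd, n_even, statement_form_ok)
```

Here `compile` is used only so that the invalid line can be tested without stopping the rest of the cell.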

## While loop and boolean practice

```

# Write a while loop that finds the index of the first element in 'l' that exceeds 1000.

# The index found should be stored in the variable 'large_index'