text

stringlengths 2.5k

6.39M

| kind

stringclasses 3

values |

|---|---|

# Cat Dog Classification

## 1. 下载数据

我们将使用包含猫与狗图片的数据集。它是Kaggle.com在2013年底计算机视觉竞赛提供的数据集的一部分,当时卷积神经网络还不是主流。可以在以下位置下载原始数据集: `https://www.kaggle.com/c/dogs-vs-cats/data`。

图片是中等分辨率的彩色JPEG。看起来像这样:

不出所料,2013年的猫狗大战的Kaggle比赛是由使用卷积神经网络的参赛者赢得的。最佳成绩达到了高达95%的准确率。在本例中,我们将非常接近这个准确率,即使我们将使用不到10%的训练集数据来训练我们的模型。

原始数据集的训练集包含25,000张狗和猫的图像(每个类别12,500张),543MB大(压缩)。

在下载并解压缩之后,我们将创建一个包含三个子集的新数据集:

* 每个类有1000个样本的训练集,

* 每个类500个样本的验证集,

* 最后是每个类500个样本的测试集。

数据已经提前处理好。

### 1.1 加载数据集目录

```

import os, shutil

# The directory where we will

# store our smaller dataset

base_dir = './data/cats_and_dogs_small'

# Directories for our training,

# validation and test splits

train_dir = os.path.join(base_dir, 'train')

validation_dir = os.path.join(base_dir, 'validation')

test_dir = os.path.join(base_dir, 'test')

# Directory with our training cat pictures

train_cats_dir = os.path.join(train_dir, 'cats')

# Directory with our training dog pictures

train_dogs_dir = os.path.join(train_dir, 'dogs')

# Directory with our validation cat pictures

validation_cats_dir = os.path.join(validation_dir, 'cats')

# Directory with our validation dog pictures

validation_dogs_dir = os.path.join(validation_dir, 'dogs')

# Directory with our validation cat pictures

test_cats_dir = os.path.join(test_dir, 'cats')

# Directory with our validation dog pictures

test_dogs_dir = os.path.join(test_dir, 'dogs')

```

## 2. 模型一

### 2.1 数据处理

```

from keras.preprocessing.image import ImageDataGenerator

# All images will be rescaled by 1./255

train_datagen = ImageDataGenerator(rescale=1./255)

validation_datagen = ImageDataGenerator(rescale=1./255)

test_datagen = ImageDataGenerator(rescale=1./255)

# 150*150

train_generator = train_datagen.flow_from_directory(

# This is the target directory

train_dir,

# All images will be resized to 150x150

target_size=(150, 150),

batch_size=20,

# Since we use binary_crossentropy loss, we need binary labels

class_mode='binary')

validation_generator = validation_datagen.flow_from_directory(

validation_dir,

target_size=(150, 150),

batch_size=20,

class_mode='binary')

test_generator = test_datagen.flow_from_directory(

test_dir,

target_size=(150, 150),

batch_size=20,

class_mode='binary')

print('train_dir: ',train_dir)

print('validation_dir: ',validation_dir)

print('test_dir: ',test_dir)

for data_batch, labels_batch in train_generator:

print('data batch shape:', data_batch.shape)

print('labels batch shape:', labels_batch.shape)

break

labels_batch

```

### 2.2 构建模型

```

from keras import layers

from keras import models

model = models.Sequential()

model.add(layers.Conv2D(32, (3, 3), activation='relu', input_shape=(150, 150, 3)))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(64, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(128, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(128, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Flatten())

model.add(layers.Dense(512, activation='relu'))

model.add(layers.Dense(1, activation='sigmoid'))

from keras import optimizers

model.compile(optimizer=optimizers.RMSprop(lr=1e-4),

loss='binary_crossentropy',

metrics=['acc'])

history = model.fit_generator(train_generator,

steps_per_epoch=100,

epochs=30,

validation_data=validation_generator,

validation_steps=50)

import matplotlib.pyplot as plt

import seaborn as sns

%matplotlib inline

plt.plot(history.history['loss'])

plt.plot(history.history['val_loss'])

plt.title('model loss')

plt.ylabel('loss')

plt.xlabel('epoch')

plt.legend(['train', 'test'], loc='upper right')

plt.show()

plt.plot(history.history['acc'])

plt.plot(history.history['val_acc'])

plt.title('model accuracy')

plt.ylabel('accuracy')

plt.xlabel('epoch')

plt.legend(['train', 'test'], loc='upper right')

plt.show()

val_loss_min = history.history['val_loss'].index(min(history.history['val_loss']))

val_acc_max = history.history['val_acc'].index(max(history.history['val_acc']))

print('validation set min loss: ', val_loss_min)

print('validation set max accuracy: ', val_acc_max)

from keras import layers

from keras import models

# vgg的做法

model = models.Sequential()

model.add(layers.Conv2D(32, 3, activation='relu', padding="same", input_shape=(64, 64, 3)))

model.add(layers.Conv2D(32, 3, activation='relu', padding="same"))

model.add(layers.MaxPooling2D(pool_size=(2, 2)))

model.add(layers.Conv2D(64, 3, activation='relu', padding="same"))

model.add(layers.Conv2D(64, 3, activation='relu', padding="same"))

model.add(layers.MaxPooling2D(pool_size=(2, 2)))

model.add(layers.Conv2D(128, 3, activation='relu', padding="same"))

model.add(layers.Conv2D(128, 3, activation='relu', padding="same"))

model.add(layers.MaxPooling2D(pool_size=(2, 2)))

model.add(layers.Conv2D(256, 3, activation='relu', padding="same"))

model.add(layers.Conv2D(256, 3, activation='relu', padding="same"))

model.add(layers.MaxPooling2D(pool_size=2))

model.add(layers.Flatten())

model.add(layers.Dense(256, activation='relu'))

model.add(layers.Dropout(0.5))

model.add(layers.Dense(256, activation='relu'))

model.add(layers.Dropout(0.5))

model.add(layers.Dense(1, activation='sigmoid'))

model.summary()

from keras import optimizers

model.compile(optimizer=optimizers.RMSprop(lr=1e-4),

loss='binary_crossentropy',

metrics=['acc'])

# model.compile(loss='binary_crossentropy',

# optimizer='adam',

# metrics=['accuracy'])

```

### 2.3 训练模型

```

history = model.fit_generator(train_generator,

steps_per_epoch=100,

epochs=30,

validation_data=validation_generator,

validation_steps=50)

```

### 2.4 画出表现

```

import matplotlib.pyplot as plt

import seaborn as sns

%matplotlib inline

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training loss')

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

val_loss_min = val_loss.index(min(val_loss))

val_acc_max = val_acc.index(max(val_acc))

print('validation set min loss: ', val_loss_min)

print('validation set max accuracy: ', val_acc_max)

```

### 2.5 测试集表现

```

scores = model.evaluate_generator(test_generator, verbose=0)

print("Large CNN Error: %.2f%%" % (100 - scores[1] * 100))

```

## 3. 模型二 使用数据增强来防止过拟合

### 3.1 数据增强示例

```

datagen = ImageDataGenerator(

rotation_range=40, # 角度值(在 0~180 范围内),表示图像随机旋转的角度范围

width_shift_range=0.2, # 图像在水平或垂直方向上平移的范围

height_shift_range=0.2, # (相对于总宽度或总高度的比例)

shear_range=0.2, # 随机错切变换的角度

zoom_range=0.2, # 图像随机缩放的范围

horizontal_flip=True, # 随机将一半图像水平翻转

fill_mode='nearest') # 用于填充新创建像素的方法,

# 这些新像素可能来自于旋转或宽度/高度平移

# This is module with image preprocessing utilities

from keras.preprocessing import image

fnames = [os.path.join(train_cats_dir, fname) for fname in os.listdir(train_cats_dir)]

# We pick one image to "augment"

img_path = fnames[3]

# Read the image and resize it

img = image.load_img(img_path, target_size=(150, 150))

imgplot_oringe = plt.imshow(img)

# Convert it to a Numpy array with shape (150, 150, 3)

x = image.img_to_array(img)

# Reshape it to (1, 150, 150, 3)

x = x.reshape((1,) + x.shape)

# The .flow() command below generates batches of randomly transformed images.

# It will loop indefinitely, so we need to `break` the loop at some point!

i = 0

for batch in datagen.flow(x, batch_size=1):

plt.figure(i)

imgplot = plt.imshow(image.array_to_img(batch[0]))

i += 1

if i % 4 == 0:

break

plt.show()

```

### 3.2 定义数据增强

```

train_datagen = ImageDataGenerator(

rescale=1./255,

rotation_range=40,

width_shift_range=0.2,

height_shift_range=0.2,

shear_range=0.2,

zoom_range=0.2,

horizontal_flip=True,)

# Note that the validation data should not be augmented!

test_datagen = ImageDataGenerator(rescale=1./255) # 注意,不能增强验证数据

train_generator = train_datagen.flow_from_directory(

# This is the target directory

train_dir,

# All images will be resized to 150x150

target_size=(150, 150),

batch_size=32,

# Since we use binary_crossentropy loss, we need binary labels

class_mode='binary')

validation_generator = test_datagen.flow_from_directory(

validation_dir,

target_size=(150, 150),

batch_size=32,

class_mode='binary')

```

### 3.3 训练网络

```

model = models.Sequential()

model.add(layers.Conv2D(32, 3, activation='relu', padding="same", input_shape=(150, 150, 3)))

model.add(layers.Conv2D(32, 3, activation='relu', padding="same"))

model.add(layers.MaxPooling2D(pool_size=(2, 2)))

model.add(layers.Conv2D(64, 3, activation='relu', padding="same"))

model.add(layers.Conv2D(64, 3, activation='relu', padding="same"))

model.add(layers.MaxPooling2D(pool_size=(2, 2)))

model.add(layers.Conv2D(128, 3, activation='relu', padding="same"))

model.add(layers.Conv2D(128, 3, activation='relu', padding="same"))

model.add(layers.MaxPooling2D(pool_size=(2, 2)))

model.add(layers.Conv2D(256, 3, activation='relu', padding="same"))

model.add(layers.Conv2D(256, 3, activation='relu', padding="same"))

model.add(layers.MaxPooling2D(pool_size=2))

model.add(layers.Flatten())

model.add(layers.Dense(256, activation='relu'))

model.add(layers.Dropout(0.5))

model.add(layers.Dense(256, activation='relu'))

model.add(layers.Dropout(0.5))

model.add(layers.Dense(1, activation='sigmoid'))

# model.compile(optimizer=optimizers.RMSprop(lr=1e-4),

# loss='binary_crossentropy',

# metrics=['acc'])

model.compile(loss='binary_crossentropy',

optimizer='adam',

metrics=['accuracy'])

history = model.fit_generator(train_generator,

steps_per_epoch=100, # 训练集分成100批送进去,相当于每批送20个

epochs=100, # 循环100遍

validation_data=validation_generator,

validation_steps=50, # 验证集分50批送进去,每批20个

verbose=0)

```

### 3.4 画出表现

```

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training loss')

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

val_loss_min = val_loss.index(min(val_loss))

val_acc_max = val_acc.index(max(val_acc))

print('validation set min loss: ', val_loss_min)

print('validation set max accuracy: ', val_acc_max)

# train_datagen = ImageDataGenerator(rotation_range=40,

# width_shift_range=0.2,

# height_shift_range=0.2,

# shear_range=0.2,

# zoom_range=0.2,

# horizontal_flip=True,

# fill_mode='nearest')

# train_datagen.fit(train_X)

# train_generator = train_datagen.flow(train_X, train_y,

# batch_size = 64)

# history = model_vgg16.fit_generator(train_generator,

# validation_data = (test_X, test_y),

# steps_per_epoch = train_X.shape[0] / 100,

# epochs = 10)

```

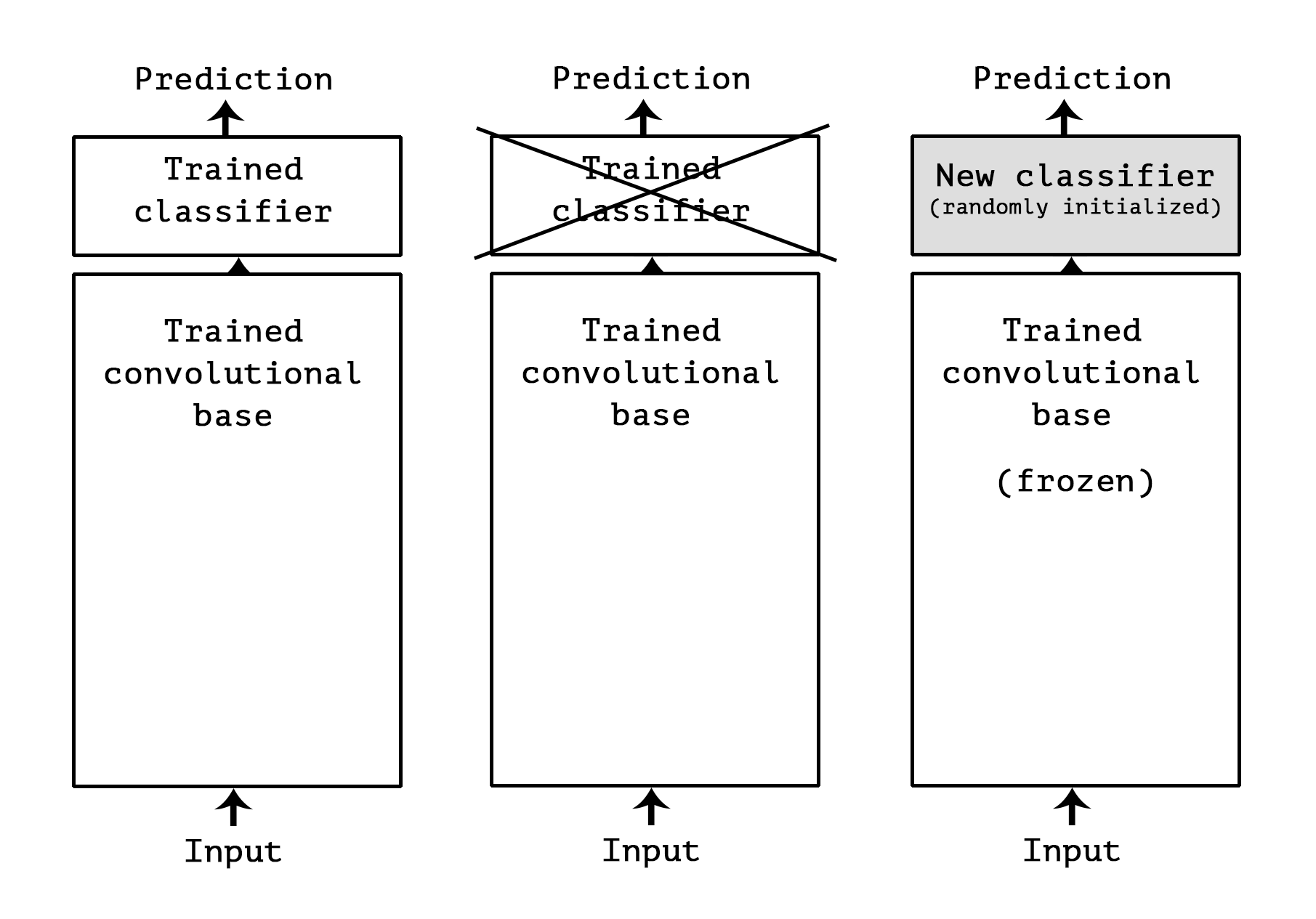

## 4. 使用预训练的VGG-16

```

from keras.applications import VGG16

conv_base = VGG16(weights='imagenet',

include_top=False, # 不要分类层

input_shape=(150, 150, 3))

conv_base.summary()

from keras import models

from keras import layers

model = models.Sequential()

model.add(conv_base)

model.add(layers.Flatten())

model.add(layers.Dense(256, activation='relu'))

model.add(layers.Dense(1, activation='sigmoid'))

# model = models.Sequential()

# model.add(conv_base)

# model.add(layers.Dense(256, activation='relu'))

# model.add(layers.Dropout(0.5))

# model.add(layers.Dense(256, activation='relu'))

# model.add(layers.Dropout(0.5))

# model.add(layers.Dense(1, activation='sigmoid'))

print('This is the number of trainable weights '

'before freezing the conv base:', len(model.trainable_weights))

conv_base.trainable = False

print('This is the number of trainable weights '

'after freezing the conv base:', len(model.trainable_weights))

from keras.preprocessing.image import ImageDataGenerator

from keras import optimizers

train_datagen = ImageDataGenerator(

rescale=1./255,

rotation_range=40,

width_shift_range=0.2,

height_shift_range=0.2,

shear_range=0.2,

zoom_range=0.2,

horizontal_flip=True,

fill_mode='nearest')

# Note that the validation data should not be augmented!

test_datagen = ImageDataGenerator(rescale=1./255)

train_generator = train_datagen.flow_from_directory(

# This is the target directory

train_dir,

# All images will be resized to 150x150

target_size=(150, 150),

batch_size=20,

# Since we use binary_crossentropy loss, we need binary labels

class_mode='binary')

validation_generator = test_datagen.flow_from_directory(

validation_dir,

target_size=(150, 150),

batch_size=20,

class_mode='binary')

model.compile(loss='binary_crossentropy',

optimizer=optimizers.RMSprop(lr=2e-5),

metrics=['acc'])

history = model.fit_generator(

train_generator,

steps_per_epoch=100,

epochs=30,

validation_data=validation_generator,

validation_steps=50,

verbose=2)

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training loss')

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

val_loss_min = val_loss.index(min(val_loss))

val_acc_max = val_acc.index(max(val_acc))

print('validation set min loss: ', val_loss_min)

print('validation set max accuracy: ', val_acc_max)

```

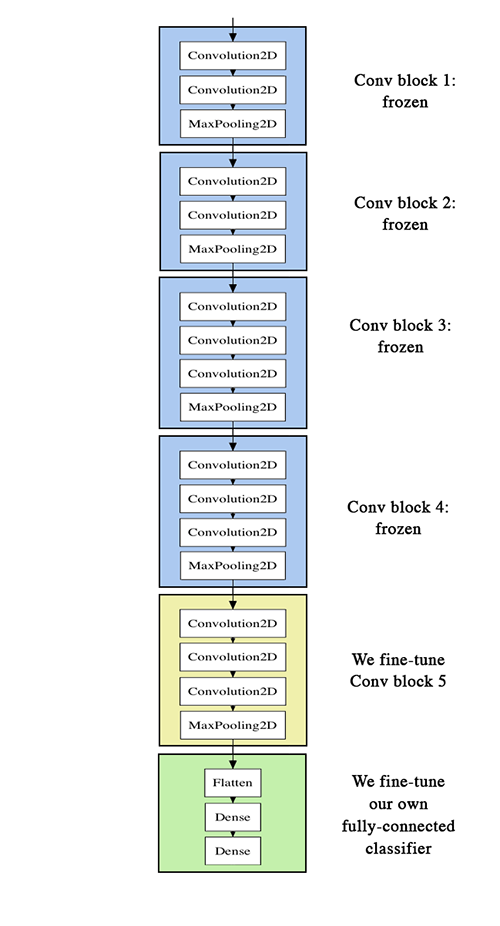

## Fine-tuning

```

conv_base.summary()

conv_base.trainable = True

set_trainable = False

for layer in conv_base.layers:

if layer.name == 'block5_conv1':

set_trainable = True

if set_trainable:

layer.trainable = True

else:

layer.trainable = False

model.summary()

model.compile(optimizer=optimizers.RMSprop(lr=1e-5),

loss='binary_crossentropy',

metrics=['acc'])

history = model.fit_generator(train_generator,

steps_per_epoch=100,

epochs=100,

validation_data=validation_generator,

validation_steps=50,

verbose=0)

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training loss')

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

def smooth_curve(points, factor=0.8):

smoothed_points = []

for point in points:

if smoothed_points:

previous = smoothed_points[-1]

smoothed_points.append(previous * factor + point * (1 - factor))

else:

smoothed_points.append(point)

return smoothed_points

plt.plot(epochs,

smooth_curve(acc), 'bo', label='Smoothed training acc')

plt.plot(epochs,

smooth_curve(val_acc), 'b', label='Smoothed validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs,

smooth_curve(loss), 'bo', label='Smoothed training loss')

plt.plot(epochs,

smooth_curve(val_loss), 'b', label='Smoothed validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

smooth_val_loss = smooth_curve(val_loss)

smooth_val_loss.index(min(smooth_val_loss))

test_generator = test_datagen.flow_from_directory(test_dir,

target_size=(150, 150),

batch_size=20,

class_mode='binary')

test_loss, test_acc = model.evaluate_generator(test_generator, steps=50)

print('test acc:', test_acc)

# plt.plot(history.history['loss'])

# plt.plot(history.history['val_loss'])

# plt.title('model loss')

# plt.ylabel('loss')

# plt.xlabel('epoch')

# plt.legend(['train', 'test'], loc='upper right')

# plt.show()

# plt.plot(history.history['acc'])

# plt.plot(history.history['val_acc'])

# plt.title('model accuracy')

# plt.ylabel('accuracy')

# plt.xlabel('epoch')

# plt.legend(['train', 'test'], loc='upper right')

# plt.show()

```

|

github_jupyter

|

# Widget Events

In this lecture we will discuss widget events, such as button clicks!

## Special events

The `Button` is not used to represent a data type. Instead the button widget is used to handle mouse clicks. The `on_click` method of the `Button` can be used to register a function to be called when the button is clicked. The docstring of the `on_click` can be seen below.

```

import ipywidgets as widgets

print(widgets.Button.on_click.__doc__)

```

### Example #1 - on_click

Since button clicks are stateless, they are transmitted from the front-end to the back-end using custom messages. By using the `on_click` method, a button that prints a message when it has been clicked is shown below.

```

from IPython.display import display

button = widgets.Button(description="Click Me!")

display(button)

def on_button_clicked(b):

print("Button clicked.")

button.on_click(on_button_clicked)

```

### Example #2 - on_submit

The `Text` widget also has a special `on_submit` event. The `on_submit` event fires when the user hits <kbd>enter</kbd>.

```

text = widgets.Text()

display(text)

def handle_submit(sender):

print(text.value)

text.on_submit(handle_submit)

```

## Traitlet events

Widget properties are IPython traitlets and traitlets are eventful. To handle changes, the `observe` method of the widget can be used to register a callback. The docstring for `observe` can be seen below.

```

print(widgets.Widget.observe.__doc__)

```

### Signatures

Mentioned in the docstring, the callback registered must have the signature `handler(change)` where `change` is a dictionary holding the information about the change.

Using this method, an example of how to output an `IntSlider`’s value as it is changed can be seen below.

```

int_range = widgets.IntSlider()

display(int_range)

def on_value_change(change):

print(change['new'])

int_range.observe(on_value_change, names='value')

```

# Linking Widgets

Often, you may want to simply link widget attributes together. Synchronization of attributes can be done in a simpler way than by using bare traitlets events.

## Linking traitlets attributes in the kernel¶

The first method is to use the `link` and `dlink` functions from the `traitlets` module. This only works if we are interacting with a live kernel.

```

import traitlets

# Create Caption

caption = widgets.Label(value = 'The values of slider1 and slider2 are synchronized')

# Create IntSliders

slider1 = widgets.IntSlider(description='Slider 1')

slider2 = widgets.IntSlider(description='Slider 2')

# Use trailets to link

l = traitlets.link((slider1, 'value'), (slider2, 'value'))

# Display!

display(caption, slider1, slider2)

# Create Caption

caption = widgets.Label(value='Changes in source values are reflected in target1')

# Create Sliders

source = widgets.IntSlider(description='Source')

target1 = widgets.IntSlider(description='Target 1')

# Use dlink

dl = traitlets.dlink((source, 'value'), (target1, 'value'))

display(caption, source, target1)

```

Function `traitlets.link` and `traitlets.dlink` return a `Link` or `DLink` object. The link can be broken by calling the `unlink` method.

```

# May get an error depending on order of cells being run!

l.unlink()

dl.unlink()

```

### Registering callbacks to trait changes in the kernel

Since attributes of widgets on the Python side are traitlets, you can register handlers to the change events whenever the model gets updates from the front-end.

The handler passed to observe will be called with one change argument. The change object holds at least a `type` key and a `name` key, corresponding respectively to the type of notification and the name of the attribute that triggered the notification.

Other keys may be passed depending on the value of `type`. In the case where type is `change`, we also have the following keys:

* `owner` : the HasTraits instance

* `old` : the old value of the modified trait attribute

* `new` : the new value of the modified trait attribute

* `name` : the name of the modified trait attribute.

```

caption = widgets.Label(value='The values of range1 and range2 are synchronized')

slider = widgets.IntSlider(min=-5, max=5, value=1, description='Slider')

def handle_slider_change(change):

caption.value = 'The slider value is ' + (

'negative' if change.new < 0 else 'nonnegative'

)

slider.observe(handle_slider_change, names='value')

display(caption, slider)

```

## Linking widgets attributes from the client side

When synchronizing traitlets attributes, you may experience a lag because of the latency due to the roundtrip to the server side. You can also directly link widget attributes in the browser using the link widgets, in either a unidirectional or a bidirectional fashion.

Javascript links persist when embedding widgets in html web pages without a kernel.

```

# NO LAG VERSION

caption = widgets.Label(value = 'The values of range1 and range2 are synchronized')

range1 = widgets.IntSlider(description='Range 1')

range2 = widgets.IntSlider(description='Range 2')

l = widgets.jslink((range1, 'value'), (range2, 'value'))

display(caption, range1, range2)

# NO LAG VERSION

caption = widgets.Label(value = 'Changes in source_range values are reflected in target_range')

source_range = widgets.IntSlider(description='Source range')

target_range = widgets.IntSlider(description='Target range')

dl = widgets.jsdlink((source_range, 'value'), (target_range, 'value'))

display(caption, source_range, target_range)

```

Function `widgets.jslink` returns a `Link` widget. The link can be broken by calling the `unlink` method.

```

l.unlink()

dl.unlink()

```

### The difference between linking in the kernel and linking in the client

Linking in the kernel means linking via python. If two sliders are linked in the kernel, when one slider is changed the browser sends a message to the kernel (python in this case) updating the changed slider, the link widget in the kernel then propagates the change to the other slider object in the kernel, and then the other slider’s kernel object sends a message to the browser to update the other slider’s views in the browser. If the kernel is not running (as in a static web page), then the controls will not be linked.

Linking using jslink (i.e., on the browser side) means contructing the link in Javascript. When one slider is changed, Javascript running in the browser changes the value of the other slider in the browser, without needing to communicate with the kernel at all. If the sliders are attached to kernel objects, each slider will update their kernel-side objects independently.

To see the difference between the two, go to the [ipywidgets documentation](http://ipywidgets.readthedocs.io/en/latest/examples/Widget%20Events.html) and try out the sliders near the bottom. The ones linked in the kernel with `link` and `dlink` are no longer linked, but the ones linked in the browser with `jslink` and `jsdlink` are still linked.

## Continuous updates

Some widgets offer a choice with their `continuous_update` attribute between continually updating values or only updating values when a user submits the value (for example, by pressing Enter or navigating away from the control). In the next example, we see the “Delayed” controls only transmit their value after the user finishes dragging the slider or submitting the textbox. The “Continuous” controls continually transmit their values as they are changed. Try typing a two-digit number into each of the text boxes, or dragging each of the sliders, to see the difference.

```

import traitlets

a = widgets.IntSlider(description="Delayed", continuous_update=False)

b = widgets.IntText(description="Delayed", continuous_update=False)

c = widgets.IntSlider(description="Continuous", continuous_update=True)

d = widgets.IntText(description="Continuous", continuous_update=True)

traitlets.link((a, 'value'), (b, 'value'))

traitlets.link((a, 'value'), (c, 'value'))

traitlets.link((a, 'value'), (d, 'value'))

widgets.VBox([a,b,c,d])

```

Sliders, `Text`, and `Textarea` controls default to `continuous_update=True`. `IntText` and other text boxes for entering integer or float numbers default to `continuous_update=False` (since often you’ll want to type an entire number before submitting the value by pressing enter or navigating out of the box).

# Conclusion

You should now feel comfortable linking Widget events!

|

github_jupyter

|

# Broadcast Variables

We already saw so called *broadcast joins* which is a specific impementation of a join suitable for small lookup tables. The term *broadcast* is also used in a different context in Spark, there are also *broadcast variables*.

### Origin of Broadcast Variables

Broadcast variables where introduced fairly early with Spark and were mainly targeted at the RDD API. Nontheless they still have their place with the high level DataFrames API in conjunction with user defined functions (UDFs).

### Weather Example

As usual, we'll use the weather data example. This time we'll manually implement a join using a UDF (actually this would be again a manual broadcast join).

# 1 Load Data

First we load the weather data, which consists of the measurement data and some station metadata.

```

storageLocation = "s3://dimajix-training/data/weather"

```

## 1.1 Load Measurements

Measurements are stored in multiple directories (one per year). But we will limit ourselves to a single year in the analysis to improve readability of execution plans.

```

from pyspark.sql.functions import *

from functools import reduce

# Read in all years, store them in an Python array

raw_weather_per_year = [spark.read.text(storageLocation + "/" + str(i)).withColumn("year", lit(i)) for i in range(2003,2015)]

# Union all years together

raw_weather = reduce(lambda l,r: l.union(r), raw_weather_per_year)

```

Use a single year to keep execution plans small

```

raw_weather = spark.read.text(storageLocation + "/2003").withColumn("year", lit(2003))

```

### Extract Measurements

Measurements were stored in a proprietary text based format, with some values at fixed positions. We need to extract these values with a simple SELECT statement.

```

weather = raw_weather.select(

col("year"),

substring(col("value"),5,6).alias("usaf"),

substring(col("value"),11,5).alias("wban"),

substring(col("value"),16,8).alias("date"),

substring(col("value"),24,4).alias("time"),

substring(col("value"),42,5).alias("report_type"),

substring(col("value"),61,3).alias("wind_direction"),

substring(col("value"),64,1).alias("wind_direction_qual"),

substring(col("value"),65,1).alias("wind_observation"),

(substring(col("value"),66,4).cast("float") / lit(10.0)).alias("wind_speed"),

substring(col("value"),70,1).alias("wind_speed_qual"),

(substring(col("value"),88,5).cast("float") / lit(10.0)).alias("air_temperature"),

substring(col("value"),93,1).alias("air_temperature_qual")

)

```

## 1.2 Load Station Metadata

We also need to load the weather station meta data containing information about the geo location, country etc of individual weather stations.

```

stations = spark.read \

.option("header", True) \

.csv(storageLocation + "/isd-history")

```

### Convert Station Metadata

We convert the stations DataFrame to a normal Python map, since we want to discuss broadcast variables. This means that the variable `py_stations` contains a normal Python object which only lives on the driver. It has no connection to Spark any more.

The resulting map converts a given station id (usaf and wban) to a country.

```

py_stations = stations.select(concat(stations["usaf"], stations["wban"]).alias("key"), stations["ctry"]).collect()

py_stations = {key:value for (key,value) in py_stations}

# Inspect result

list(py_stations.items())[0:10]

```

# 2 Using Broadcast Variables

In the following section, we want to use a Spark broadcast variable inside a UDF. Technically this is not required, as Spark also has other mechanisms of distributing data, so we'll start with a simple implementation *without* using a broadcast variable.

## 2.1 Create a UDF

For the initial implementation, we create a simple Python UDF which looks up the country for a given station id, which consists of the usaf and wban code. This way we will replace the `JOIN` of our original solution with a UDF implemented in Python.

```

def lookup_country(usaf, wban):

return py_stations.get(usaf + wban)

# Test lookup with an existing station

print(lookup_country("007026", "99999"))

# Test lookup with a non-existing station (better should not throw an exception)

print(lookup_country("123", "456"))

```

## 2.2 Not using a broadcast variable

Now that we have a simple Python function providing the required functionality, we convert it to a PySpark UDF using a Python decorator.

```

@udf('string')

def lookup_country(usaf, wban):

return py_stations.get(usaf + wban)

```

### Replace JOIN by UDF

Now we can perform the lookup by using the UDF instead of the original `JOIN`.

```

result = weather.withColumn('country', lookup_country(weather["usaf"], weather["wban"]))

result.limit(10).toPandas()

```

### Remarks

Since the code is actually executed not on the driver, but istributed on the executors, the executors also require access to the Python map. PySpark automatically serializes the map and sends it to the executors on the fly.

### Inspect Plan

We can also inspect the execution plan, which is different from the original implementation. Instead of the broadcast join, it now contains a `BatchEvalPython` step which looks up the stations country from the station id.

```

result.explain()

```

## 2.2 Using a Broadcast Variable

Now let us change the implementation to use a so called *broadcast variable*. While the original implementation implicitly sent the Python map to all executors, a broadcast variable makes the process of sending (*broadcasting*) a Python variable to all executors more explicit.

A Python variable can be broadcast using the `broadcast` method of the underlying Spark context (the Spark session does not export this functionality). Once the data is encapsulated in the broadcast variable, all executors can access the original data via the `value` member variable.

```

# First create a broadcast variable from the original Python map

bc_stations = spark.sparkContext.broadcast(py_stations)

@udf('string')

def lookup_country(usaf, wban):

# Access the broadcast variables value and perform lookup

return bc_stations.value.get(usaf + wban)

```

### Replace JOIN by UDF

Again we replace the original `JOIN` by the UDF we just defined above

```

result = weather.withColumn('country', lookup_country(weather["usaf"], weather["wban"]))

result.limit(10).toPandas()

```

### Remarks

Actually there is no big difference to the original implementation. But Spark handles a broadcast variable slightly more efficiently, especially if the variable is used in multiple UDFs. In this case the data will be broadcast only a single time, while not using a broadcast variable would imply sending the data around for every UDF.

### Execution Plan

The execution plan does not differ at all, since it does not provide information on broadcast variables.

```

result.explain()

```

## 2.3 Pandas UDFs

Since we already learnt that Pandas UDFs are executed more efficiently than normal UDFs, we want to provide a better implementation using Pandas. Of course Pandas UDFs can also access broadcast variables.

```

from pyspark.sql.functions import pandas_udf, PandasUDFType

@pandas_udf('string', PandasUDFType.SCALAR)

def lookup_country(usaf, wban):

# Create helper function

def lookup(key):

# Perform lookup by accessing the Python map

return bc_stations.value.get(key)

# Create key from both incoming Pandas series

usaf_wban = usaf + wban

# Perform lookup

return usaf_wban.apply(lookup)

```

### Replace JOIN by Pandas UDF

Again, we replace the original `JOIN` by the Pandas UDF.

```

result = weather.withColumn('country', lookup_country(weather["usaf"], weather["wban"]))

result.limit(10).toPandas()

```

### Execution Plan

Again, let's inspect the execution plan.

```

result.explain(True)

```

|

github_jupyter

|

# Sudoku

This tutorial includes everything you need to set up decision optimization engines, build constraint programming models.

When you finish this tutorial, you'll have a foundational knowledge of _Prescriptive Analytics_.

>This notebook is part of the **[Prescriptive Analytics for Python](https://rawgit.com/IBMDecisionOptimization/docplex-doc/master/docs/index.html)**

>It requires a **local installation of CPLEX Optimizers**.

Table of contents:

- [Describe the business problem](#Describe-the-business-problem)

* [How decision optimization (prescriptive analytics) can help](#How--decision-optimization-can-help)

* [Use decision optimization](#Use-decision-optimization)

* [Step 1: Download the library](#Step-1:-Download-the-library)

* [Step 2: Model the Data](#Step-2:-Model-the-data)

* [Step 3: Set up the prescriptive model](#Step-3:-Set-up-the-prescriptive-model)

* [Define the decision variables](#Define-the-decision-variables)

* [Express the business constraints](#Express-the-business-constraints)

* [Express the objective](#Express-the-objective)

* [Solve with Decision Optimization solve service](#Solve-with-Decision-Optimization-solve-service)

* [Step 4: Investigate the solution and run an example analysis](#Step-4:-Investigate-the-solution-and-then-run-an-example-analysis)

* [Summary](#Summary)

****

### Describe the business problem

* Sudoku is a logic-based, combinatorial number-placement puzzle.

* The objective is to fill a 9x9 grid with digits so that each column, each row,

and each of the nine 3x3 sub-grids that compose the grid contains all of the digits from 1 to 9.

* The puzzle setter provides a partially completed grid, which for a well-posed puzzle has a unique solution.

#### References

* See https://en.wikipedia.org/wiki/Sudoku for details

*****

## How decision optimization can help

* Prescriptive analytics technology recommends actions based on desired outcomes, taking into account specific scenarios, resources, and knowledge of past and current events. This insight can help your organization make better decisions and have greater control of business outcomes.

* Prescriptive analytics is the next step on the path to insight-based actions. It creates value through synergy with predictive analytics, which analyzes data to predict future outcomes.

* Prescriptive analytics takes that insight to the next level by suggesting the optimal way to handle that future situation. Organizations that can act fast in dynamic conditions and make superior decisions in uncertain environments gain a strong competitive advantage.

<br/>

+ For example:

+ Automate complex decisions and trade-offs to better manage limited resources.

+ Take advantage of a future opportunity or mitigate a future risk.

+ Proactively update recommendations based on changing events.

+ Meet operational goals, increase customer loyalty, prevent threats and fraud, and optimize business processes.

## Use decision optimization

### Step 1: Download the library

Run the following code to install Decision Optimization CPLEX Modeling library. The *DOcplex* library contains the two modeling packages, Mathematical Programming and Constraint Programming, referred to earlier.

```

import sys

try:

import docplex.cp

except:

if hasattr(sys, 'real_prefix'):

#we are in a virtual env.

!pip install docplex

else:

!pip install --user docplex

```

Note that the more global package <i>docplex</i> contains another subpackage <i>docplex.mp</i> that is dedicated to Mathematical Programming, another branch of optimization.

```

from docplex.cp.model import *

from sys import stdout

```

### Step 2: Model the data

#### Grid range

```

GRNG = range(9)

```

#### Different problems

_zero means cell to be filled with appropriate value_

```

SUDOKU_PROBLEM_1 = ( (0, 0, 0, 0, 9, 0, 1, 0, 0),

(2, 8, 0, 0, 0, 5, 0, 0, 0),

(7, 0, 0, 0, 0, 6, 4, 0, 0),

(8, 0, 5, 0, 0, 3, 0, 0, 6),

(0, 0, 1, 0, 0, 4, 0, 0, 0),

(0, 7, 0, 2, 0, 0, 0, 0, 0),

(3, 0, 0, 0, 0, 1, 0, 8, 0),

(0, 0, 0, 0, 0, 0, 0, 5, 0),

(0, 9, 0, 0, 0, 0, 0, 7, 0),

)

SUDOKU_PROBLEM_2 = ( (0, 7, 0, 0, 0, 0, 0, 4, 9),

(0, 0, 0, 4, 0, 0, 0, 0, 0),

(4, 0, 3, 5, 0, 7, 0, 0, 8),

(0, 0, 7, 2, 5, 0, 4, 0, 0),

(0, 0, 0, 0, 0, 0, 8, 0, 0),

(0, 0, 4, 0, 3, 0, 5, 9, 2),

(6, 1, 8, 0, 0, 0, 0, 0, 5),

(0, 9, 0, 1, 0, 0, 0, 3, 0),

(0, 0, 5, 0, 0, 0, 0, 0, 7),

)

SUDOKU_PROBLEM_3 = ( (0, 0, 0, 0, 0, 6, 0, 0, 0),

(0, 5, 9, 0, 0, 0, 0, 0, 8),

(2, 0, 0, 0, 0, 8, 0, 0, 0),

(0, 4, 5, 0, 0, 0, 0, 0, 0),

(0, 0, 3, 0, 0, 0, 0, 0, 0),

(0, 0, 6, 0, 0, 3, 0, 5, 4),

(0, 0, 0, 3, 2, 5, 0, 0, 6),

(0, 0, 0, 0, 0, 0, 0, 0, 0),

(0, 0, 0, 0, 0, 0, 0, 0, 0)

)

try:

import numpy as np

import matplotlib.pyplot as plt

VISU_ENABLED = True

except ImportError:

VISU_ENABLED = False

def print_grid(grid):

""" Print Sudoku grid """

for l in GRNG:

if (l > 0) and (l % 3 == 0):

stdout.write('\n')

for c in GRNG:

v = grid[l][c]

stdout.write(' ' if (c % 3 == 0) else ' ')

stdout.write(str(v) if v > 0 else '.')

stdout.write('\n')

def draw_grid(values):

%matplotlib inline

fig, ax = plt.subplots(figsize =(4,4))

min_val, max_val = 0, 9

R = range(0,9)

for l in R:

for c in R:

v = values[c][l]

s = " "

if v > 0:

s = str(v)

ax.text(l+0.5,8.5-c, s, va='center', ha='center')

ax.set_xlim(min_val, max_val)

ax.set_ylim(min_val, max_val)

ax.set_xticks(np.arange(max_val))

ax.set_yticks(np.arange(max_val))

ax.grid()

plt.show()

def display_grid(grid, name):

stdout.write(name)

stdout.write(":\n")

if VISU_ENABLED:

draw_grid(grid)

else:

print_grid(grid)

display_grid(SUDOKU_PROBLEM_1, "PROBLEM 1")

display_grid(SUDOKU_PROBLEM_2, "PROBLEM 2")

display_grid(SUDOKU_PROBLEM_3, "PROBLEM 3")

```

#### Choose your preferred problem (SUDOKU_PROBLEM_1 or SUDOKU_PROBLEM_2 or SUDOKU_PROBLEM_3)

If you change the problem, ensure to re-run all cells below this one.

```

problem = SUDOKU_PROBLEM_3

```

### Step 3: Set up the prescriptive model

```

mdl = CpoModel(name="Sudoku")

```

#### Define the decision variables

```

grid = [[integer_var(min=1, max=9, name="C" + str(l) + str(c)) for l in GRNG] for c in GRNG]

```

#### Express the business constraints

Add alldiff constraints for lines

```

for l in GRNG:

mdl.add(all_diff([grid[l][c] for c in GRNG]))

```

Add alldiff constraints for columns

```

for c in GRNG:

mdl.add(all_diff([grid[l][c] for l in GRNG]))

```

Add alldiff constraints for sub-squares

```

ssrng = range(0, 9, 3)

for sl in ssrng:

for sc in ssrng:

mdl.add(all_diff([grid[l][c] for l in range(sl, sl + 3) for c in range(sc, sc + 3)]))

```

Initialize known cells

```

for l in GRNG:

for c in GRNG:

v = problem[l][c]

if v > 0:

grid[l][c].set_domain((v, v))

```

#### Solve with Decision Optimization solve service

```

print("\nSolving model....")

msol = mdl.solve(TimeLimit=10)

```

### Step 4: Investigate the solution and then run an example analysis

```

display_grid(problem, "Initial problem")

if msol:

sol = [[msol[grid[l][c]] for c in GRNG] for l in GRNG]

stdout.write("Solve time: " + str(msol.get_solve_time()) + "\n")

display_grid(sol, "Solution")

else:

stdout.write("No solution found\n")

```

## Summary

You learned how to set up and use the IBM Decision Optimization CPLEX Modeling for Python to formulate and solve a Constraint Programming model.

#### References

* [CPLEX Modeling for Python documentation](https://rawgit.com/IBMDecisionOptimization/docplex-doc/master/docs/index.html)

* [Decision Optimization on Cloud](https://developer.ibm.com/docloud/)

* Need help with DOcplex or to report a bug? Please go [here](https://developer.ibm.com/answers/smartspace/docloud)

* Contact us at [email protected]

Copyright © 2017, 2018 IBM. IPLA licensed Sample Materials.

|

github_jupyter

|

# RadarCOVID-Report

## Data Extraction

```

import datetime

import json

import logging

import os

import shutil

import tempfile

import textwrap

import uuid

import matplotlib.pyplot as plt

import matplotlib.ticker

import numpy as np

import pandas as pd

import pycountry

import retry

import seaborn as sns

%matplotlib inline

current_working_directory = os.environ.get("PWD")

if current_working_directory:

os.chdir(current_working_directory)

sns.set()

matplotlib.rcParams["figure.figsize"] = (15, 6)

extraction_datetime = datetime.datetime.utcnow()

extraction_date = extraction_datetime.strftime("%Y-%m-%d")

extraction_previous_datetime = extraction_datetime - datetime.timedelta(days=1)

extraction_previous_date = extraction_previous_datetime.strftime("%Y-%m-%d")

extraction_date_with_hour = datetime.datetime.utcnow().strftime("%Y-%m-%d@%H")

current_hour = datetime.datetime.utcnow().hour

are_today_results_partial = current_hour != 23

```

### Constants

```

from Modules.ExposureNotification import exposure_notification_io

spain_region_country_code = "ES"

germany_region_country_code = "DE"

default_backend_identifier = spain_region_country_code

backend_generation_days = 7 * 2

daily_summary_days = 7 * 4 * 3

daily_plot_days = 7 * 4

tek_dumps_load_limit = daily_summary_days + 1

```

### Parameters

```

environment_backend_identifier = os.environ.get("RADARCOVID_REPORT__BACKEND_IDENTIFIER")

if environment_backend_identifier:

report_backend_identifier = environment_backend_identifier

else:

report_backend_identifier = default_backend_identifier

report_backend_identifier

environment_enable_multi_backend_download = \

os.environ.get("RADARCOVID_REPORT__ENABLE_MULTI_BACKEND_DOWNLOAD")

if environment_enable_multi_backend_download:

report_backend_identifiers = None

else:

report_backend_identifiers = [report_backend_identifier]

report_backend_identifiers

environment_invalid_shared_diagnoses_dates = \

os.environ.get("RADARCOVID_REPORT__INVALID_SHARED_DIAGNOSES_DATES")

if environment_invalid_shared_diagnoses_dates:

invalid_shared_diagnoses_dates = environment_invalid_shared_diagnoses_dates.split(",")

else:

invalid_shared_diagnoses_dates = []

invalid_shared_diagnoses_dates

```

### COVID-19 Cases

```

report_backend_client = \

exposure_notification_io.get_backend_client_with_identifier(

backend_identifier=report_backend_identifier)

@retry.retry(tries=10, delay=10, backoff=1.1, jitter=(0, 10))

def download_cases_dataframe():

return pd.read_csv("https://raw.githubusercontent.com/owid/covid-19-data/master/public/data/owid-covid-data.csv")

confirmed_df_ = download_cases_dataframe()

confirmed_df_.iloc[0]

confirmed_df = confirmed_df_.copy()

confirmed_df = confirmed_df[["date", "new_cases", "iso_code"]]

confirmed_df.rename(

columns={

"date": "sample_date",

"iso_code": "country_code",

},

inplace=True)

def convert_iso_alpha_3_to_alpha_2(x):

try:

return pycountry.countries.get(alpha_3=x).alpha_2

except Exception as e:

logging.info(f"Error converting country ISO Alpha 3 code '{x}': {repr(e)}")

return None

confirmed_df["country_code"] = confirmed_df.country_code.apply(convert_iso_alpha_3_to_alpha_2)

confirmed_df.dropna(inplace=True)

confirmed_df["sample_date"] = pd.to_datetime(confirmed_df.sample_date, dayfirst=True)

confirmed_df["sample_date"] = confirmed_df.sample_date.dt.strftime("%Y-%m-%d")

confirmed_df.sort_values("sample_date", inplace=True)

confirmed_df.tail()

confirmed_days = pd.date_range(

start=confirmed_df.iloc[0].sample_date,

end=extraction_datetime)

confirmed_days_df = pd.DataFrame(data=confirmed_days, columns=["sample_date"])

confirmed_days_df["sample_date_string"] = \

confirmed_days_df.sample_date.dt.strftime("%Y-%m-%d")

confirmed_days_df.tail()

def sort_source_regions_for_display(source_regions: list) -> list:

if report_backend_identifier in source_regions:

source_regions = [report_backend_identifier] + \

list(sorted(set(source_regions).difference([report_backend_identifier])))

else:

source_regions = list(sorted(source_regions))

return source_regions

report_source_regions = report_backend_client.source_regions_for_date(

date=extraction_datetime.date())

report_source_regions = sort_source_regions_for_display(

source_regions=report_source_regions)

report_source_regions

def get_cases_dataframe(source_regions_for_date_function, columns_suffix=None):

source_regions_at_date_df = confirmed_days_df.copy()

source_regions_at_date_df["source_regions_at_date"] = \

source_regions_at_date_df.sample_date.apply(

lambda x: source_regions_for_date_function(date=x))

source_regions_at_date_df.sort_values("sample_date", inplace=True)

source_regions_at_date_df["_source_regions_group"] = source_regions_at_date_df. \

source_regions_at_date.apply(lambda x: ",".join(sort_source_regions_for_display(x)))

source_regions_at_date_df.tail()

#%%

source_regions_for_summary_df_ = \

source_regions_at_date_df[["sample_date", "_source_regions_group"]].copy()

source_regions_for_summary_df_.rename(columns={"_source_regions_group": "source_regions"}, inplace=True)

source_regions_for_summary_df_.tail()

#%%

confirmed_output_columns = ["sample_date", "new_cases", "covid_cases"]

confirmed_output_df = pd.DataFrame(columns=confirmed_output_columns)

for source_regions_group, source_regions_group_series in \

source_regions_at_date_df.groupby("_source_regions_group"):

source_regions_set = set(source_regions_group.split(","))

confirmed_source_regions_set_df = \

confirmed_df[confirmed_df.country_code.isin(source_regions_set)].copy()

confirmed_source_regions_group_df = \

confirmed_source_regions_set_df.groupby("sample_date").new_cases.sum() \

.reset_index().sort_values("sample_date")

confirmed_source_regions_group_df = \

confirmed_source_regions_group_df.merge(

confirmed_days_df[["sample_date_string"]].rename(

columns={"sample_date_string": "sample_date"}),

how="right")

confirmed_source_regions_group_df["new_cases"] = \

confirmed_source_regions_group_df["new_cases"].clip(lower=0)

confirmed_source_regions_group_df["covid_cases"] = \

confirmed_source_regions_group_df.new_cases.rolling(7, min_periods=0).mean().round()

confirmed_source_regions_group_df = \

confirmed_source_regions_group_df[confirmed_output_columns]

confirmed_source_regions_group_df = confirmed_source_regions_group_df.replace(0, np.nan)

confirmed_source_regions_group_df.fillna(method="ffill", inplace=True)

confirmed_source_regions_group_df = \

confirmed_source_regions_group_df[

confirmed_source_regions_group_df.sample_date.isin(

source_regions_group_series.sample_date_string)]

confirmed_output_df = confirmed_output_df.append(confirmed_source_regions_group_df)

result_df = confirmed_output_df.copy()

result_df.tail()

#%%

result_df.rename(columns={"sample_date": "sample_date_string"}, inplace=True)

result_df = confirmed_days_df[["sample_date_string"]].merge(result_df, how="left")

result_df.sort_values("sample_date_string", inplace=True)

result_df.fillna(method="ffill", inplace=True)

result_df.tail()

#%%

result_df[["new_cases", "covid_cases"]].plot()

if columns_suffix:

result_df.rename(

columns={

"new_cases": "new_cases_" + columns_suffix,

"covid_cases": "covid_cases_" + columns_suffix},

inplace=True)

return result_df, source_regions_for_summary_df_

confirmed_eu_df, source_regions_for_summary_df = get_cases_dataframe(

report_backend_client.source_regions_for_date)

confirmed_es_df, _ = get_cases_dataframe(

lambda date: [spain_region_country_code],

columns_suffix=spain_region_country_code.lower())

```

### Extract API TEKs

```

raw_zip_path_prefix = "Data/TEKs/Raw/"

base_backend_identifiers = [report_backend_identifier]

multi_backend_exposure_keys_df = \

exposure_notification_io.download_exposure_keys_from_backends(

backend_identifiers=report_backend_identifiers,

generation_days=backend_generation_days,

fail_on_error_backend_identifiers=base_backend_identifiers,

save_raw_zip_path_prefix=raw_zip_path_prefix)

multi_backend_exposure_keys_df["region"] = multi_backend_exposure_keys_df["backend_identifier"]

multi_backend_exposure_keys_df.rename(

columns={

"generation_datetime": "sample_datetime",

"generation_date_string": "sample_date_string",

},

inplace=True)

multi_backend_exposure_keys_df.head()

early_teks_df = multi_backend_exposure_keys_df[

multi_backend_exposure_keys_df.rolling_period < 144].copy()

early_teks_df["rolling_period_in_hours"] = early_teks_df.rolling_period / 6

early_teks_df[early_teks_df.sample_date_string != extraction_date] \

.rolling_period_in_hours.hist(bins=list(range(24)))

early_teks_df[early_teks_df.sample_date_string == extraction_date] \

.rolling_period_in_hours.hist(bins=list(range(24)))

multi_backend_exposure_keys_df = multi_backend_exposure_keys_df[[

"sample_date_string", "region", "key_data"]]

multi_backend_exposure_keys_df.head()

active_regions = \

multi_backend_exposure_keys_df.groupby("region").key_data.nunique().sort_values().index.unique().tolist()

active_regions

multi_backend_summary_df = multi_backend_exposure_keys_df.groupby(

["sample_date_string", "region"]).key_data.nunique().reset_index() \

.pivot(index="sample_date_string", columns="region") \

.sort_index(ascending=False)

multi_backend_summary_df.rename(

columns={"key_data": "shared_teks_by_generation_date"},

inplace=True)

multi_backend_summary_df.rename_axis("sample_date", inplace=True)

multi_backend_summary_df = multi_backend_summary_df.fillna(0).astype(int)

multi_backend_summary_df = multi_backend_summary_df.head(backend_generation_days)

multi_backend_summary_df.head()

def compute_keys_cross_sharing(x):

teks_x = x.key_data_x.item()

common_teks = set(teks_x).intersection(x.key_data_y.item())

common_teks_fraction = len(common_teks) / len(teks_x)

return pd.Series(dict(

common_teks=common_teks,

common_teks_fraction=common_teks_fraction,

))

multi_backend_exposure_keys_by_region_df = \

multi_backend_exposure_keys_df.groupby("region").key_data.unique().reset_index()

multi_backend_exposure_keys_by_region_df["_merge"] = True

multi_backend_exposure_keys_by_region_combination_df = \

multi_backend_exposure_keys_by_region_df.merge(

multi_backend_exposure_keys_by_region_df, on="_merge")

multi_backend_exposure_keys_by_region_combination_df.drop(

columns=["_merge"], inplace=True)

if multi_backend_exposure_keys_by_region_combination_df.region_x.nunique() > 1:

multi_backend_exposure_keys_by_region_combination_df = \

multi_backend_exposure_keys_by_region_combination_df[

multi_backend_exposure_keys_by_region_combination_df.region_x !=

multi_backend_exposure_keys_by_region_combination_df.region_y]

multi_backend_exposure_keys_cross_sharing_df = \

multi_backend_exposure_keys_by_region_combination_df \

.groupby(["region_x", "region_y"]) \

.apply(compute_keys_cross_sharing) \

.reset_index()

multi_backend_cross_sharing_summary_df = \

multi_backend_exposure_keys_cross_sharing_df.pivot_table(

values=["common_teks_fraction"],

columns="region_x",

index="region_y",

aggfunc=lambda x: x.item())

multi_backend_cross_sharing_summary_df

multi_backend_without_active_region_exposure_keys_df = \

multi_backend_exposure_keys_df[multi_backend_exposure_keys_df.region != report_backend_identifier]

multi_backend_without_active_region = \

multi_backend_without_active_region_exposure_keys_df.groupby("region").key_data.nunique().sort_values().index.unique().tolist()

multi_backend_without_active_region

exposure_keys_summary_df = multi_backend_exposure_keys_df[

multi_backend_exposure_keys_df.region == report_backend_identifier]

exposure_keys_summary_df.drop(columns=["region"], inplace=True)

exposure_keys_summary_df = \

exposure_keys_summary_df.groupby(["sample_date_string"]).key_data.nunique().to_frame()

exposure_keys_summary_df = \

exposure_keys_summary_df.reset_index().set_index("sample_date_string")

exposure_keys_summary_df.sort_index(ascending=False, inplace=True)

exposure_keys_summary_df.rename(columns={"key_data": "shared_teks_by_generation_date"}, inplace=True)

exposure_keys_summary_df.head()

```

### Dump API TEKs

```

tek_list_df = multi_backend_exposure_keys_df[

["sample_date_string", "region", "key_data"]].copy()

tek_list_df["key_data"] = tek_list_df["key_data"].apply(str)

tek_list_df.rename(columns={

"sample_date_string": "sample_date",

"key_data": "tek_list"}, inplace=True)

tek_list_df = tek_list_df.groupby(

["sample_date", "region"]).tek_list.unique().reset_index()

tek_list_df["extraction_date"] = extraction_date

tek_list_df["extraction_date_with_hour"] = extraction_date_with_hour

tek_list_path_prefix = "Data/TEKs/"

tek_list_current_path = tek_list_path_prefix + f"/Current/RadarCOVID-TEKs.json"

tek_list_daily_path = tek_list_path_prefix + f"Daily/RadarCOVID-TEKs-{extraction_date}.json"

tek_list_hourly_path = tek_list_path_prefix + f"Hourly/RadarCOVID-TEKs-{extraction_date_with_hour}.json"

for path in [tek_list_current_path, tek_list_daily_path, tek_list_hourly_path]:

os.makedirs(os.path.dirname(path), exist_ok=True)

tek_list_base_df = tek_list_df[tek_list_df.region == report_backend_identifier]

tek_list_base_df.drop(columns=["extraction_date", "extraction_date_with_hour"]).to_json(

tek_list_current_path,

lines=True, orient="records")

tek_list_base_df.drop(columns=["extraction_date_with_hour"]).to_json(

tek_list_daily_path,

lines=True, orient="records")

tek_list_base_df.to_json(

tek_list_hourly_path,

lines=True, orient="records")

tek_list_base_df.head()

```

### Load TEK Dumps

```

import glob

def load_extracted_teks(mode, region=None, limit=None) -> pd.DataFrame:

extracted_teks_df = pd.DataFrame(columns=["region"])

file_paths = list(reversed(sorted(glob.glob(tek_list_path_prefix + mode + "/RadarCOVID-TEKs-*.json"))))

if limit:

file_paths = file_paths[:limit]

for file_path in file_paths:

logging.info(f"Loading TEKs from '{file_path}'...")

iteration_extracted_teks_df = pd.read_json(file_path, lines=True)

extracted_teks_df = extracted_teks_df.append(

iteration_extracted_teks_df, sort=False)

extracted_teks_df["region"] = \

extracted_teks_df.region.fillna(spain_region_country_code).copy()

if region:

extracted_teks_df = \

extracted_teks_df[extracted_teks_df.region == region]

return extracted_teks_df

daily_extracted_teks_df = load_extracted_teks(

mode="Daily",

region=report_backend_identifier,

limit=tek_dumps_load_limit)

daily_extracted_teks_df.head()

exposure_keys_summary_df_ = daily_extracted_teks_df \

.sort_values("extraction_date", ascending=False) \

.groupby("sample_date").tek_list.first() \

.to_frame()

exposure_keys_summary_df_.index.name = "sample_date_string"

exposure_keys_summary_df_["tek_list"] = \

exposure_keys_summary_df_.tek_list.apply(len)

exposure_keys_summary_df_ = exposure_keys_summary_df_ \

.rename(columns={"tek_list": "shared_teks_by_generation_date"}) \

.sort_index(ascending=False)

exposure_keys_summary_df = exposure_keys_summary_df_

exposure_keys_summary_df.head()

```

### Daily New TEKs

```

tek_list_df = daily_extracted_teks_df.groupby("extraction_date").tek_list.apply(

lambda x: set(sum(x, []))).reset_index()

tek_list_df = tek_list_df.set_index("extraction_date").sort_index(ascending=True)

tek_list_df.head()

def compute_teks_by_generation_and_upload_date(date):

day_new_teks_set_df = tek_list_df.copy().diff()

try:

day_new_teks_set = day_new_teks_set_df[

day_new_teks_set_df.index == date].tek_list.item()

except ValueError:

day_new_teks_set = None

if pd.isna(day_new_teks_set):

day_new_teks_set = set()

day_new_teks_df = daily_extracted_teks_df[

daily_extracted_teks_df.extraction_date == date].copy()

day_new_teks_df["shared_teks"] = \

day_new_teks_df.tek_list.apply(lambda x: set(x).intersection(day_new_teks_set))

day_new_teks_df["shared_teks"] = \

day_new_teks_df.shared_teks.apply(len)

day_new_teks_df["upload_date"] = date

day_new_teks_df.rename(columns={"sample_date": "generation_date"}, inplace=True)

day_new_teks_df = day_new_teks_df[

["upload_date", "generation_date", "shared_teks"]]

day_new_teks_df["generation_to_upload_days"] = \

(pd.to_datetime(day_new_teks_df.upload_date) -

pd.to_datetime(day_new_teks_df.generation_date)).dt.days

day_new_teks_df = day_new_teks_df[day_new_teks_df.shared_teks > 0]

return day_new_teks_df

shared_teks_generation_to_upload_df = pd.DataFrame()

for upload_date in daily_extracted_teks_df.extraction_date.unique():

shared_teks_generation_to_upload_df = \

shared_teks_generation_to_upload_df.append(

compute_teks_by_generation_and_upload_date(date=upload_date))

shared_teks_generation_to_upload_df \

.sort_values(["upload_date", "generation_date"], ascending=False, inplace=True)

shared_teks_generation_to_upload_df.tail()

today_new_teks_df = \

shared_teks_generation_to_upload_df[

shared_teks_generation_to_upload_df.upload_date == extraction_date].copy()

today_new_teks_df.tail()

if not today_new_teks_df.empty:

today_new_teks_df.set_index("generation_to_upload_days") \

.sort_index().shared_teks.plot.bar()

generation_to_upload_period_pivot_df = \

shared_teks_generation_to_upload_df[

["upload_date", "generation_to_upload_days", "shared_teks"]] \

.pivot(index="upload_date", columns="generation_to_upload_days") \

.sort_index(ascending=False).fillna(0).astype(int) \

.droplevel(level=0, axis=1)

generation_to_upload_period_pivot_df.head()

new_tek_df = tek_list_df.diff().tek_list.apply(

lambda x: len(x) if not pd.isna(x) else None).to_frame().reset_index()

new_tek_df.rename(columns={

"tek_list": "shared_teks_by_upload_date",

"extraction_date": "sample_date_string",}, inplace=True)

new_tek_df.tail()

shared_teks_uploaded_on_generation_date_df = shared_teks_generation_to_upload_df[

shared_teks_generation_to_upload_df.generation_to_upload_days == 0] \

[["upload_date", "shared_teks"]].rename(

columns={

"upload_date": "sample_date_string",

"shared_teks": "shared_teks_uploaded_on_generation_date",

})

shared_teks_uploaded_on_generation_date_df.head()

estimated_shared_diagnoses_df = shared_teks_generation_to_upload_df \

.groupby(["upload_date"]).shared_teks.max().reset_index() \

.sort_values(["upload_date"], ascending=False) \

.rename(columns={

"upload_date": "sample_date_string",

"shared_teks": "shared_diagnoses",

})

invalid_shared_diagnoses_dates_mask = \

estimated_shared_diagnoses_df.sample_date_string.isin(invalid_shared_diagnoses_dates)

estimated_shared_diagnoses_df[invalid_shared_diagnoses_dates_mask] = 0

estimated_shared_diagnoses_df.head()

```

### Hourly New TEKs

```

hourly_extracted_teks_df = load_extracted_teks(

mode="Hourly", region=report_backend_identifier, limit=25)

hourly_extracted_teks_df.head()

hourly_new_tek_count_df = hourly_extracted_teks_df \

.groupby("extraction_date_with_hour").tek_list. \

apply(lambda x: set(sum(x, []))).reset_index().copy()

hourly_new_tek_count_df = hourly_new_tek_count_df.set_index("extraction_date_with_hour") \

.sort_index(ascending=True)

hourly_new_tek_count_df["new_tek_list"] = hourly_new_tek_count_df.tek_list.diff()

hourly_new_tek_count_df["new_tek_count"] = hourly_new_tek_count_df.new_tek_list.apply(

lambda x: len(x) if not pd.isna(x) else 0)

hourly_new_tek_count_df.rename(columns={

"new_tek_count": "shared_teks_by_upload_date"}, inplace=True)

hourly_new_tek_count_df = hourly_new_tek_count_df.reset_index()[[

"extraction_date_with_hour", "shared_teks_by_upload_date"]]

hourly_new_tek_count_df.head()

hourly_summary_df = hourly_new_tek_count_df.copy()

hourly_summary_df.set_index("extraction_date_with_hour", inplace=True)

hourly_summary_df = hourly_summary_df.fillna(0).astype(int).reset_index()

hourly_summary_df["datetime_utc"] = pd.to_datetime(

hourly_summary_df.extraction_date_with_hour, format="%Y-%m-%d@%H")

hourly_summary_df.set_index("datetime_utc", inplace=True)

hourly_summary_df = hourly_summary_df.tail(-1)

hourly_summary_df.head()

```

### Official Statistics

```

import requests

import pandas.io.json

official_stats_response = requests.get("https://radarcovid.covid19.gob.es/kpi/statistics/basics")

official_stats_response.raise_for_status()

official_stats_df_ = pandas.io.json.json_normalize(official_stats_response.json())

official_stats_df = official_stats_df_.copy()

official_stats_df["date"] = pd.to_datetime(official_stats_df["date"], dayfirst=True)

official_stats_df.head()

official_stats_column_map = {

"date": "sample_date",

"applicationsDownloads.totalAcummulated": "app_downloads_es_accumulated",

"communicatedContagions.totalAcummulated": "shared_diagnoses_es_accumulated",

}

accumulated_suffix = "_accumulated"

accumulated_values_columns = \

list(filter(lambda x: x.endswith(accumulated_suffix), official_stats_column_map.values()))

interpolated_values_columns = \

list(map(lambda x: x[:-len(accumulated_suffix)], accumulated_values_columns))

official_stats_df = \

official_stats_df[official_stats_column_map.keys()] \

.rename(columns=official_stats_column_map)

official_stats_df["extraction_date"] = extraction_date

official_stats_df.head()

official_stats_path = "Data/Statistics/Current/RadarCOVID-Statistics.json"

previous_official_stats_df = pd.read_json(official_stats_path, orient="records", lines=True)

previous_official_stats_df["sample_date"] = pd.to_datetime(previous_official_stats_df["sample_date"], dayfirst=True)

official_stats_df = official_stats_df.append(previous_official_stats_df)

official_stats_df.head()

official_stats_df = official_stats_df[~(official_stats_df.shared_diagnoses_es_accumulated == 0)]

official_stats_df.sort_values("extraction_date", ascending=False, inplace=True)

official_stats_df.drop_duplicates(subset=["sample_date"], keep="first", inplace=True)

official_stats_df.head()

official_stats_stored_df = official_stats_df.copy()

official_stats_stored_df["sample_date"] = official_stats_stored_df.sample_date.dt.strftime("%Y-%m-%d")

official_stats_stored_df.to_json(official_stats_path, orient="records", lines=True)

official_stats_df.drop(columns=["extraction_date"], inplace=True)

official_stats_df = confirmed_days_df.merge(official_stats_df, how="left")

official_stats_df.sort_values("sample_date", ascending=False, inplace=True)

official_stats_df.head()

official_stats_df[accumulated_values_columns] = \

official_stats_df[accumulated_values_columns] \

.astype(float).interpolate(limit_area="inside")

official_stats_df[interpolated_values_columns] = \

official_stats_df[accumulated_values_columns].diff(periods=-1)

official_stats_df.drop(columns="sample_date", inplace=True)

official_stats_df.head()

```

### Data Merge

```

result_summary_df = exposure_keys_summary_df.merge(

new_tek_df, on=["sample_date_string"], how="outer")

result_summary_df.head()

result_summary_df = result_summary_df.merge(

shared_teks_uploaded_on_generation_date_df, on=["sample_date_string"], how="outer")

result_summary_df.head()

result_summary_df = result_summary_df.merge(

estimated_shared_diagnoses_df, on=["sample_date_string"], how="outer")

result_summary_df.head()

result_summary_df = result_summary_df.merge(

official_stats_df, on=["sample_date_string"], how="outer")

result_summary_df.head()

result_summary_df = confirmed_eu_df.tail(daily_summary_days).merge(

result_summary_df, on=["sample_date_string"], how="left")

result_summary_df.head()

result_summary_df = confirmed_es_df.tail(daily_summary_days).merge(

result_summary_df, on=["sample_date_string"], how="left")

result_summary_df.head()

result_summary_df["sample_date"] = pd.to_datetime(result_summary_df.sample_date_string)

result_summary_df = result_summary_df.merge(source_regions_for_summary_df, how="left")

result_summary_df.set_index(["sample_date", "source_regions"], inplace=True)

result_summary_df.drop(columns=["sample_date_string"], inplace=True)

result_summary_df.sort_index(ascending=False, inplace=True)

result_summary_df.head()

with pd.option_context("mode.use_inf_as_na", True):

result_summary_df = result_summary_df.fillna(0).astype(int)

result_summary_df["teks_per_shared_diagnosis"] = \

(result_summary_df.shared_teks_by_upload_date / result_summary_df.shared_diagnoses).fillna(0)

result_summary_df["shared_diagnoses_per_covid_case"] = \

(result_summary_df.shared_diagnoses / result_summary_df.covid_cases).fillna(0)

result_summary_df["shared_diagnoses_per_covid_case_es"] = \

(result_summary_df.shared_diagnoses_es / result_summary_df.covid_cases_es).fillna(0)

result_summary_df.head(daily_plot_days)

def compute_aggregated_results_summary(days) -> pd.DataFrame:

aggregated_result_summary_df = result_summary_df.copy()

aggregated_result_summary_df["covid_cases_for_ratio"] = \

aggregated_result_summary_df.covid_cases.mask(

aggregated_result_summary_df.shared_diagnoses == 0, 0)

aggregated_result_summary_df["covid_cases_for_ratio_es"] = \

aggregated_result_summary_df.covid_cases_es.mask(

aggregated_result_summary_df.shared_diagnoses_es == 0, 0)

aggregated_result_summary_df = aggregated_result_summary_df \

.sort_index(ascending=True).fillna(0).rolling(days).agg({

"covid_cases": "sum",

"covid_cases_es": "sum",

"covid_cases_for_ratio": "sum",

"covid_cases_for_ratio_es": "sum",

"shared_teks_by_generation_date": "sum",

"shared_teks_by_upload_date": "sum",

"shared_diagnoses": "sum",

"shared_diagnoses_es": "sum",

}).sort_index(ascending=False)

with pd.option_context("mode.use_inf_as_na", True):

aggregated_result_summary_df = aggregated_result_summary_df.fillna(0).astype(int)

aggregated_result_summary_df["teks_per_shared_diagnosis"] = \

(aggregated_result_summary_df.shared_teks_by_upload_date /

aggregated_result_summary_df.covid_cases_for_ratio).fillna(0)

aggregated_result_summary_df["shared_diagnoses_per_covid_case"] = \

(aggregated_result_summary_df.shared_diagnoses /

aggregated_result_summary_df.covid_cases_for_ratio).fillna(0)

aggregated_result_summary_df["shared_diagnoses_per_covid_case_es"] = \

(aggregated_result_summary_df.shared_diagnoses_es /

aggregated_result_summary_df.covid_cases_for_ratio_es).fillna(0)

return aggregated_result_summary_df

aggregated_result_with_7_days_window_summary_df = compute_aggregated_results_summary(days=7)

aggregated_result_with_7_days_window_summary_df.head()

last_7_days_summary = aggregated_result_with_7_days_window_summary_df.to_dict(orient="records")[1]

last_7_days_summary

aggregated_result_with_14_days_window_summary_df = compute_aggregated_results_summary(days=13)

last_14_days_summary = aggregated_result_with_14_days_window_summary_df.to_dict(orient="records")[1]

last_14_days_summary

```

## Report Results

```

display_column_name_mapping = {

"sample_date": "Sample\u00A0Date\u00A0(UTC)",

"source_regions": "Source Countries",

"datetime_utc": "Timestamp (UTC)",

"upload_date": "Upload Date (UTC)",

"generation_to_upload_days": "Generation to Upload Period in Days",

"region": "Backend",

"region_x": "Backend\u00A0(A)",

"region_y": "Backend\u00A0(B)",

"common_teks": "Common TEKs Shared Between Backends",

"common_teks_fraction": "Fraction of TEKs in Backend (A) Available in Backend (B)",

"covid_cases": "COVID-19 Cases (Source Countries)",

"shared_teks_by_generation_date": "Shared TEKs by Generation Date (Source Countries)",

"shared_teks_by_upload_date": "Shared TEKs by Upload Date (Source Countries)",

"shared_teks_uploaded_on_generation_date": "Shared TEKs Uploaded on Generation Date (Source Countries)",

"shared_diagnoses": "Shared Diagnoses (Source Countries – Estimation)",

"teks_per_shared_diagnosis": "TEKs Uploaded per Shared Diagnosis (Source Countries)",

"shared_diagnoses_per_covid_case": "Usage Ratio (Source Countries)",

"covid_cases_es": "COVID-19 Cases (Spain)",

"app_downloads_es": "App Downloads (Spain – Official)",

"shared_diagnoses_es": "Shared Diagnoses (Spain – Official)",

"shared_diagnoses_per_covid_case_es": "Usage Ratio (Spain)",

}

summary_columns = [

"covid_cases",

"shared_teks_by_generation_date",

"shared_teks_by_upload_date",

"shared_teks_uploaded_on_generation_date",

"shared_diagnoses",

"teks_per_shared_diagnosis",

"shared_diagnoses_per_covid_case",

"covid_cases_es",

"app_downloads_es",

"shared_diagnoses_es",

"shared_diagnoses_per_covid_case_es",

]

summary_percentage_columns= [

"shared_diagnoses_per_covid_case_es",

"shared_diagnoses_per_covid_case",

]

```

### Daily Summary Table

```

result_summary_df_ = result_summary_df.copy()

result_summary_df = result_summary_df[summary_columns]

result_summary_with_display_names_df = result_summary_df \

.rename_axis(index=display_column_name_mapping) \

.rename(columns=display_column_name_mapping)

result_summary_with_display_names_df

```

### Daily Summary Plots

```

result_plot_summary_df = result_summary_df.head(daily_plot_days)[summary_columns] \

.droplevel(level=["source_regions"]) \

.rename_axis(index=display_column_name_mapping) \

.rename(columns=display_column_name_mapping)

summary_ax_list = result_plot_summary_df.sort_index(ascending=True).plot.bar(

title=f"Daily Summary",

rot=45, subplots=True, figsize=(15, 30), legend=False)

ax_ = summary_ax_list[0]

ax_.get_figure().tight_layout()

ax_.get_figure().subplots_adjust(top=0.95)

_ = ax_.set_xticklabels(sorted(result_plot_summary_df.index.strftime("%Y-%m-%d").tolist()))

for percentage_column in summary_percentage_columns:

percentage_column_index = summary_columns.index(percentage_column)

summary_ax_list[percentage_column_index].yaxis \

.set_major_formatter(matplotlib.ticker.PercentFormatter(1.0))

```

### Daily Generation to Upload Period Table

```

display_generation_to_upload_period_pivot_df = \

generation_to_upload_period_pivot_df \

.head(backend_generation_days)

display_generation_to_upload_period_pivot_df \

.head(backend_generation_days) \

.rename_axis(columns=display_column_name_mapping) \

.rename_axis(index=display_column_name_mapping)

fig, generation_to_upload_period_pivot_table_ax = plt.subplots(

figsize=(12, 1 + 0.6 * len(display_generation_to_upload_period_pivot_df)))

generation_to_upload_period_pivot_table_ax.set_title(

"Shared TEKs Generation to Upload Period Table")

sns.heatmap(

data=display_generation_to_upload_period_pivot_df

.rename_axis(columns=display_column_name_mapping)

.rename_axis(index=display_column_name_mapping),

fmt=".0f",

annot=True,

ax=generation_to_upload_period_pivot_table_ax)

generation_to_upload_period_pivot_table_ax.get_figure().tight_layout()

```

### Hourly Summary Plots

```

hourly_summary_ax_list = hourly_summary_df \

.rename_axis(index=display_column_name_mapping) \