| modelId (string, 5 to 139 chars) | author (string, 2 to 42 chars) | last_modified (timestamp[us, tz=UTC], 2020-02-15 11:33:14 to 2025-07-29 12:28:52) | downloads (int64, 0 to 223M) | likes (int64, 0 to 11.7k) | library_name (string, 534 classes) | tags (list, 1 to 4.05k items) | pipeline_tag (string, 55 classes) | createdAt (timestamp[us, tz=UTC], 2022-03-02 23:29:04 to 2025-07-29 12:25:02) | card (string, 11 to 1.01M chars) |

|---|---|---|---|---|---|---|---|---|---|

pligor/gr7.5b-dolly | pligor | 2023-07-24T20:13:16Z | 12 | 1 | transformers | ["transformers", "pytorch", "xglm", "text-generation", "text-generation-inference", "el", "dataset:databricks/databricks-dolly-15k", "license:apache-2.0", "autotrain_compatible", "endpoints_compatible", "8-bit", "region:us"] | text-generation | 2023-07-22T06:45:06Z |

---

license: apache-2.0

datasets:

- databricks/databricks-dolly-15k

language:

- el

library_name: transformers

tags:

- text-generation-inference

pipeline_tag: text-generation

---

# Model Card for agrimi7.5B-dolly

<!-- Provide a quick summary of what the model is/does. -->

This model is a fine-tuned (SFT) version of Facebook's XGLM-7.5B, trained on a machine-translated Greek version of the databricks-dolly-15k dataset.

The purpose is to demonstrate that this pretrained model can adapt to instruction following using a relatively small dataset such as databricks-dolly-15k.

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [Andreas Loupasakis](https://github.com/alup)

- **Model type:** Causal Language Model

- **Language(s) (NLP):** Greek (el)

- **License:** Apache-2.0

- **Finetuned from model:** XGLM-7.5B

|

felixb85/ppo-Huggy | felixb85 | 2023-07-24T20:07:29Z | 0 | 0 | ml-agents | ["ml-agents", "tensorboard", "onnx", "Huggy", "deep-reinforcement-learning", "reinforcement-learning", "ML-Agents-Huggy", "region:us"] | reinforcement-learning | 2023-07-24T20:07:23Z |

---

library_name: ml-agents

tags:

- Huggy

- deep-reinforcement-learning

- reinforcement-learning

- ML-Agents-Huggy

---

# **ppo** Agent playing **Huggy**

This is a trained model of a **ppo** agent playing **Huggy** using the [Unity ML-Agents Library](https://github.com/Unity-Technologies/ml-agents).

## Usage (with ML-Agents)

Documentation: https://unity-technologies.github.io/ml-agents/ML-Agents-Toolkit-Documentation/

We wrote a complete tutorial on training your first agent with ML-Agents and publishing it to the Hub:

- A *short tutorial* where you teach Huggy the Dog 🐶 to fetch the stick and then play with him directly in your browser: https://huggingface.co/learn/deep-rl-course/unitbonus1/introduction
- A *longer tutorial* to understand how ML-Agents works: https://huggingface.co/learn/deep-rl-course/unit5/introduction

### Resume the training

```bash

mlagents-learn <your_configuration_file_path.yaml> --run-id=<run_id> --resume

```

### Watch your Agent play

You can watch your agent **playing directly in your browser**

1. If the environment is one of the official ML-Agents environments, go to https://huggingface.co/unity
2. Find your model_id: felixb85/ppo-Huggy
3. Select your `*.nn` or `*.onnx` file
4. Click on **Watch the agent play** 👀

|

BBHF/llama2-qlora-finetunined-french | BBHF | 2023-07-24T20:07:12Z | 0 | 0 | peft | ["peft", "region:us"] | null | 2023-07-24T20:07:07Z |

---

library_name: peft

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- load_in_8bit: False

- load_in_4bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: nf4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: float16

### Framework versions

- PEFT 0.5.0.dev0
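The quantization list is a `bitsandbytes` config written out as text, and its keys match the keyword arguments of `transformers.BitsAndBytesConfig`. Here is a minimal sketch that turns the list back into typed keyword arguments. The helper `parse_quant_config` is hypothetical and is not part of PEFT or transformers.

```python
import ast

# The card's config, copied verbatim as "key: value" lines.
CARD_CONFIG = """\
load_in_8bit: False
load_in_4bit: True
llm_int8_threshold: 6.0
llm_int8_skip_modules: None
llm_int8_enable_fp32_cpu_offload: False
llm_int8_has_fp16_weight: False
bnb_4bit_quant_type: nf4
bnb_4bit_use_double_quant: False
bnb_4bit_compute_dtype: float16
"""


def parse_quant_config(text: str) -> dict:
    """Hypothetical helper: turn 'key: value' lines into typed Python values."""
    kwargs = {}
    for line in text.strip().splitlines():
        key, _, raw = line.partition(":")
        raw = raw.strip()
        try:
            # Python literals such as True, False, None and 6.0
            value = ast.literal_eval(raw)
        except (ValueError, SyntaxError):
            # Bare words such as nf4 or float16 stay as strings
            value = raw
        kwargs[key.strip()] = value
    return kwargs


kwargs = parse_quant_config(CARD_CONFIG)
print(kwargs["bnb_4bit_quant_type"], kwargs["load_in_4bit"])
```

If `transformers` and `torch` are installed, these values could then be passed as `BitsAndBytesConfig(**kwargs)`. Depending on the installed version, you may need to convert the `"float16"` compute dtype to `torch.float16` yourself first.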

|

Risco/llama2-qlora-finetunined-french | Risco | 2023-07-24T19:55:55Z | 0 | 0 | peft | ["peft", "region:us"] | null | 2023-07-24T19:55:48Z |

---

library_name: peft

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- load_in_8bit: False

- load_in_4bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: nf4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: float16

### Framework versions

- PEFT 0.5.0.dev0

|

nikitakapitan/xml-roberta-base-finetuned-panx-de-fr-it-en | nikitakapitan | 2023-07-24T19:54:47Z | 104 | 0 | transformers | ["transformers", "pytorch", "xlm-roberta", "token-classification", "generated_from_trainer", "base_model:FacebookAI/xlm-roberta-base", "base_model:finetune:FacebookAI/xlm-roberta-base", "license:mit", "autotrain_compatible", "endpoints_compatible", "region:us"] | token-classification | 2023-07-24T19:39:22Z |

---

license: mit

base_model: xlm-roberta-base

tags:

- generated_from_trainer

metrics:

- f1

model-index:

- name: xml-roberta-base-finetuned-panx-de-fr-it-en

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xml-roberta-base-finetuned-panx-de-fr-it-en

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1749

- F1: 0.8572

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.3433 | 1.0 | 835 | 0.1938 | 0.8093 |

| 0.1544 | 2.0 | 1670 | 0.1675 | 0.8454 |

| 0.1125 | 3.0 | 2505 | 0.1749 | 0.8572 |
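The Step column follows from the training-set size and the batch size. The sketch below works through that arithmetic. It assumes a single device, no gradient accumulation, and that the last partial batch is kept, which is the Trainer default. Under those assumptions, 835 steps per epoch at batch size 24 implies between 20,017 and 20,040 training examples. Both helper names are hypothetical.

```python
import math


def steps_per_epoch(num_examples: int, batch_size: int) -> int:
    # One optimizer step per batch; the last, partial batch still counts.
    return math.ceil(num_examples / batch_size)


def implied_dataset_size(steps: int, batch_size: int) -> tuple[int, int]:
    # Smallest and largest example counts that yield exactly `steps` batches.
    return (steps - 1) * batch_size + 1, steps * batch_size


low, high = implied_dataset_size(835, 24)
print(low, high)                      # 20017 20040
print(3 * steps_per_epoch(high, 24))  # 2505, the final Step value in the table
```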

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.0

- Tokenizers 0.13.3

|

idlebg/easter-fusion | idlebg | 2023-07-24T19:54:19Z | 171 | 1 | diffusers | ["diffusers", "safetensors", "stable-diffusion", "art", "text-to-image", "en", "license:other", "autotrain_compatible", "endpoints_compatible", "diffusers:StableDiffusionPipeline", "region:us"] | text-to-image | 2023-04-18T00:18:57Z |

---

license: other

tags:

- stable-diffusion

- art

language:

- en

pipeline_tag: text-to-image

---

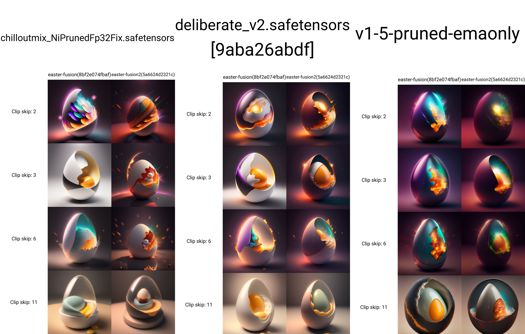

A fully revamped checkpoint based on the 512-dim LoRA and chilloutmix_NiPrunedFp32Fix + deliberate_v2.

Training data: 512-dim LoRA, https://civitai.com/models/41893/lora-eggeaster-fusion

Here is how it looks using the lora on top of models:

More info on the Fusion project is soon to be announced ;)

🤟 🥃

LoRA weights: TEnc (text encoder) weight 0.2, UNet weight 1.

Merged the 512-dim LoRA into Chillout and Deliberate with `--ratios 0.99` toward my fusion LoRA.

C is Chillout, D is Deliberate.

Merged C and D with:

Base_alpha=0.53

Weight_values=0,0.157576195987654,0.28491512345679,0.384765625,0.459876543209877,0.512996720679012,0.546875,0.564260223765432,0.567901234567901,0.560546875,0.544945987654321,0.523847415123457,0.5,0.476152584876543,0.455054012345679,0.439453125,0.432098765432099,0.435739776234568,0.453125,0.487003279320987,0.540123456790124,0.615234375,0.71508487654321,0.842423804012347,1

and

1,0.842423804012346,0.71508487654321,0.615234375,0.540123456790123,0.487003279320988,0.453125,0.435739776234568,0.432098765432099,0.439453125,0.455054012345679,0.476152584876543,0.5,0.523847415123457,0.544945987654321,0.560546875,0.567901234567901,0.564260223765432,0.546875,0.512996720679013,0.459876543209876,0.384765625,0.28491512345679,0.157576195987653,0

both results merged

Weight_values=

1,0.842423804012346,0.71508487654321,0.615234375,0.540123456790123,0.487003279320988,0.453125,0.435739776234568,0.432098765432099,0.439453125,0.455054012345679,0.476152584876543,0.5,0.523847415123457,0.544945987654321,0.560546875,0.567901234567901,0.564260223765432,0.546875,0.512996720679013,0.459876543209876,0.384765625,0.28491512345679,0.157576195987653,0

And voila..

here is Egg_fusion checkpoint with the 512 dim-trained lora

Enjoy your eggs 🤟 🥃
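The `Base_alpha` and `Weight_values` settings describe a block-weighted merge. Each UNet block gets its own interpolation ratio, and `Base_alpha` covers the weights outside those blocks. Below is a minimal sketch under several assumptions. It uses plain linear interpolation `(1 - w) * C + w * D` per block and toy float values in place of real tensors. It also assumes the common 25-block layout used by merge-block-weighted tools (12 input blocks, 1 middle block, 12 output blocks). The card does not name its merge tool, so the block naming and the direction of the ratio are assumptions.

```python
# Hypothetical sketch of a block-weighted merge; not the tool used for this checkpoint.
# The card's first Weight_values list; 0 keeps model C, 1 takes model D (assumed direction).
WEIGHTS = [
    0, 0.157576195987654, 0.28491512345679, 0.384765625, 0.459876543209877,
    0.512996720679012, 0.546875, 0.564260223765432, 0.567901234567901, 0.560546875,
    0.544945987654321, 0.523847415123457, 0.5, 0.476152584876543, 0.455054012345679,
    0.439453125, 0.432098765432099, 0.435739776234568, 0.453125, 0.487003279320987,
    0.540123456790124, 0.615234375, 0.71508487654321, 0.842423804012347, 1,
]
BASE_ALPHA = 0.53


def merge_block_weighted(model_c, model_d, block_of, weights, base_alpha):
    """Linearly interpolate two checkpoints, one ratio per block.

    model_c, model_d: dicts of parameter name -> float (stand-ins for tensors).
    block_of: maps a parameter name to a block index 0..24, or None if the
              parameter lies outside the 25 blocks (then base_alpha is used).
    """
    if len(weights) != 25:
        raise ValueError("expected 25 block weights")
    merged = {}
    for name, c_value in model_c.items():
        block = block_of(name)
        w = base_alpha if block is None else weights[block]
        merged[name] = (1.0 - w) * c_value + w * model_d[name]
    return merged


def toy_block_of(name):
    # Hypothetical naming: "blockN.*" belongs to block N, anything else to no block.
    prefix = name.split(".")[0]
    return int(prefix[len("block"):]) if prefix.startswith("block") else None


toy_c = {"block0.w": 0.0, "block12.w": 0.0, "block24.w": 0.0, "text_encoder.w": 0.0}
toy_d = {name: 1.0 for name in toy_c}
merged = merge_block_weighted(toy_c, toy_d, toy_block_of, WEIGHTS, BASE_ALPHA)
print(merged)  # with C = 0 and D = 1, each merged value equals its ratio
```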

|

musabgultekin/functionary-7b-v1 | musabgultekin | 2023-07-24T19:41:19Z | 10 | 13 | transformers | ["transformers", "pytorch", "llama", "text-generation", "dataset:anon8231489123/ShareGPT_Vicuna_unfiltered", "license:mit", "autotrain_compatible", "text-generation-inference", "endpoints_compatible", "region:us"] | text-generation | 2023-07-24T14:31:40Z |

---

license: mit

datasets:

- anon8231489123/ShareGPT_Vicuna_unfiltered

---

See: https://github.com/musabgultekin/functionary/

|

diegoruffa3/llama2-qlora-finetunined-french | diegoruffa3 | 2023-07-24T19:39:47Z | 0 | 0 | peft | ["peft", "region:us"] | null | 2023-07-24T19:39:41Z |

---

library_name: peft

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- load_in_8bit: False

- load_in_4bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: nf4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: float16

### Framework versions

- PEFT 0.5.0.dev0

|

IndianaUniversityDatasetsModels/test-BB3 | IndianaUniversityDatasetsModels | 2023-07-24T19:34:04Z | 107 | 0 | transformers | ["transformers", "pytorch", "safetensors", "encoder-decoder", "text2text-generation", "medical", "en", "dataset:IndianaUniversityDatasetsModels/Medical_reports_Splits", "license:apache-2.0", "autotrain_compatible", "endpoints_compatible", "region:us"] | text2text-generation | 2023-03-10T10:30:52Z |

---

license: apache-2.0

datasets:

- IndianaUniversityDatasetsModels/Medical_reports_Splits

language:

- en

metrics:

- rouge

library_name: transformers

tags:

- medical

---

## Inputs and Outputs

- **Expected input:** `[Problems] + Text`
- **Target output:** `[findings] + Text [impression] + Text`
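Here is a minimal sketch that builds an input in this format and splits a generated output back into its two sections. It assumes the markers appear literally as `[Problems]`, `[findings]` and `[impression]`. The card does not specify spacing or casing, so both helper functions are hypothetical, and the report text is made up.

```python
import re


def build_input(problems: str) -> str:
    # Hypothetical: prefix the problem list with the [Problems] marker.
    return f" [Problems] {problems.strip()}"


def split_report(output: str) -> dict:
    # Hypothetical: split "[findings] ... [impression] ..." into two fields.
    match = re.search(r"\[findings\](.*?)\[impression\](.*)", output, re.S | re.I)
    if match is None:
        return {"findings": output.strip(), "impression": ""}
    return {"findings": match.group(1).strip(), "impression": match.group(2).strip()}


report = split_report(" [findings] The lungs are clear. [impression] No acute disease.")
print(report["impression"])  # No acute disease.
```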

|

jakariamd/opp_115_do_not_track | jakariamd | 2023-07-24T18:59:42Z | 105 | 0 | transformers | ["transformers", "pytorch", "tensorboard", "roberta", "text-classification", "generated_from_trainer", "autotrain_compatible", "endpoints_compatible", "region:us"] | text-classification | 2023-07-24T17:48:54Z |

---

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: opp_115_do_not_track

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# opp_115_do_not_track

This model is a fine-tuned version of [mukund/privbert](https://huggingface.co/mukund/privbert) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0010

- Accuracy: 1.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2
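The optimizer line refers to the standard Adam update, with the listed betas and epsilon. Below is a minimal single-parameter sketch of one step without weight decay (with weight decay 0, AdamW reduces to the same update). It illustrates what `betas=(0.9,0.999)` and `epsilon=1e-08` control and is not the Trainer's own implementation.

```python
import math


def adam_step(param, grad, m, v, t, lr=3e-5, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter; t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * grad          # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad   # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)                # bias correction for the zero init
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


p, m, v = adam_step(param=1.0, grad=0.5, m=0.0, v=0.0, t=1)
# On the first step the update is roughly lr * sign(grad), so p is about 1 - 3e-5.
print(p)
```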

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 1.0 | 176 | 0.0005 | 1.0 |

| No log | 2.0 | 352 | 0.0010 | 1.0 |

### Framework versions

- Transformers 4.28.0

- Pytorch 2.0.0

- Datasets 2.1.0

- Tokenizers 0.13.3

|

robinhad/open_llama_3b_uk_ggml | robinhad | 2023-07-24T18:54:49Z | 0 | 0 | null | ["uk", "dataset:robinhad/databricks-dolly-15k-uk", "license:apache-2.0", "region:us"] | null | 2023-07-24T18:53:53Z |

---

license: apache-2.0

datasets:

- robinhad/databricks-dolly-15k-uk

language:

- uk

---

|

nikitakapitan/xlm-roberta-base-finetuned-panx-de | nikitakapitan | 2023-07-24T18:49:26Z | 103 | 0 | transformers | ["transformers", "pytorch", "tensorboard", "xlm-roberta", "token-classification", "generated_from_trainer", "base_model:FacebookAI/xlm-roberta-base", "base_model:finetune:FacebookAI/xlm-roberta-base", "license:mit", "autotrain_compatible", "endpoints_compatible", "region:us"] | token-classification | 2023-07-16T05:29:21Z |

---

license: mit

base_model: xlm-roberta-base

tags:

- generated_from_trainer

metrics:

- f1

model-index:

- name: xlm-roberta-base-finetuned-panx-de

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-de

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1604

- F1: 0.8595

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.2865 | 1.0 | 715 | 0.1769 | 0.8240 |

| 0.1463 | 2.0 | 1430 | 0.1601 | 0.8420 |

| 0.0937 | 3.0 | 2145 | 0.1604 | 0.8595 |

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.0

- Tokenizers 0.13.3

|

jasheu/bert-base-uncased-finetuned-imdb | jasheu | 2023-07-24T18:47:11Z | 116 | 0 | transformers | ["transformers", "pytorch", "tensorboard", "bert", "fill-mask", "generated_from_trainer", "dataset:imdb", "license:apache-2.0", "autotrain_compatible", "endpoints_compatible", "region:us"] | fill-mask | 2023-07-24T17:14:14Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imdb

model-index:

- name: bert-base-uncased-finetuned-imdb

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-uncased-finetuned-imdb

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3245
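For masked language modeling, the evaluation loss is a mean cross-entropy in nats over the masked tokens. It is therefore often reported as perplexity, which is exp(loss). Here is a minimal worked example for the loss above:

```python
import math


def perplexity(mean_cross_entropy: float) -> float:
    # Perplexity is the exponential of the mean per-token cross-entropy (in nats).
    return math.exp(mean_cross_entropy)


print(round(perplexity(0.3245), 3))  # 1.383
```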

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 0.349 | 1.0 | 157 | 0.3184 |

| 0.3365 | 2.0 | 314 | 0.3168 |

| 0.3306 | 3.0 | 471 | 0.3134 |

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1

- Datasets 2.13.1

- Tokenizers 0.13.3

|

bluemyu/back | bluemyu | 2023-07-24T18:44:43Z | 0 | 0 | null | ["license:creativeml-openrail-m", "region:us"] | null | 2023-03-03T19:11:17Z |

---

license: creativeml-openrail-m

---

|

Tverous/sft-trl-no-claim | Tverous | 2023-07-24T18:38:21Z | 9 | 0 | transformers | ["transformers", "pytorch", "gptj", "text-generation", "generated_from_trainer", "dataset:anli", "license:apache-2.0", "autotrain_compatible", "endpoints_compatible", "region:us"] | text-generation | 2023-07-24T00:36:13Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- anli

model-index:

- name: sft-trl-no-claim

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# sft-trl-no-claim

This model is a fine-tuned version of [EleutherAI/gpt-j-6b](https://huggingface.co/EleutherAI/gpt-j-6b) on the anli dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6203

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 100

- num_epochs: 3.0
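With `lr_scheduler_type: linear` and `lr_scheduler_warmup_steps: 100`, the learning rate rises linearly from 0 for 100 steps. It then decays linearly to zero at the last step, which is 15,000 here according to the results table. Below is a minimal standalone sketch of that schedule. It follows the same formula as `transformers.get_linear_schedule_with_warmup` but is written for illustration only.

```python
def linear_lr(step: int, base_lr: float, warmup_steps: int, total_steps: int) -> float:
    if step < warmup_steps:
        # Warmup: 0 -> base_lr over the first warmup_steps steps.
        return base_lr * step / max(1, warmup_steps)
    # Decay: base_lr -> 0 between the end of warmup and the final step.
    remaining = (total_steps - step) / max(1, total_steps - warmup_steps)
    return base_lr * max(0.0, remaining)


for step in (0, 50, 100, 7550, 15000):
    print(step, linear_lr(step, base_lr=5e-5, warmup_steps=100, total_steps=15000))
```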

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 2.1604 | 0.2 | 1000 | 1.8712 |

| 1.0077 | 0.4 | 2000 | 1.4700 |

| 0.8175 | 0.6 | 3000 | 1.0772 |

| 0.7621 | 0.8 | 4000 | 0.7959 |

| 0.3346 | 1.0 | 5000 | 0.7570 |

| 0.2903 | 1.2 | 6000 | 0.6882 |

| 0.2313 | 1.4 | 7000 | 0.6471 |

| 0.3038 | 1.6 | 8000 | 0.6203 |

| 0.2292 | 1.8 | 9000 | 0.6203 |

| 0.193 | 2.0 | 10000 | 0.6203 |

| 0.1198 | 2.2 | 11000 | 0.6203 |

| 0.2185 | 2.4 | 12000 | 0.6203 |

| 0.1038 | 2.6 | 13000 | 0.6203 |

| 0.1469 | 2.8 | 14000 | 0.6203 |

| 0.1857 | 3.0 | 15000 | 0.6203 |

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu117

- Datasets 2.13.1

- Tokenizers 0.13.3

|

jakariamd/opp_115_user_choice_control | jakariamd | 2023-07-24T18:35:50Z | 104 | 0 | transformers | ["transformers", "pytorch", "tensorboard", "roberta", "text-classification", "generated_from_trainer", "autotrain_compatible", "endpoints_compatible", "region:us"] | text-classification | 2023-07-24T18:29:39Z |

---

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: opp_115_user_choice_control

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# opp_115_user_choice_control

This model is a fine-tuned version of [mukund/privbert](https://huggingface.co/mukund/privbert) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1533

- Accuracy: 0.9473

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 1.0 | 152 | 0.2217 | 0.9366 |

| No log | 2.0 | 304 | 0.1533 | 0.9473 |

### Framework versions

- Transformers 4.28.0

- Pytorch 2.0.0

- Datasets 2.1.0

- Tokenizers 0.13.3

|

nlpulse/gpt-j-6b-english_quotes | nlpulse | 2023-07-24T18:35:37Z | 25 | 1 | transformers | ["transformers", "pytorch", "gptj", "text-generation", "en", "dataset:Abirate/english_quotes", "license:apache-2.0", "autotrain_compatible", "endpoints_compatible", "region:us"] | text-generation | 2023-07-10T16:43:29Z |

---

license: apache-2.0

datasets:

- Abirate/english_quotes

language:

- en

library_name: transformers

---

# 4-bit quantization: 4.92 GB GPU memory usage for inference

See the same fine-tuning applied to Llama2-7B-Chat: [https://huggingface.co/nlpulse/llama2-7b-chat-english_quotes](https://huggingface.co/nlpulse/llama2-7b-chat-english_quotes)

```

$ nvidia-smi
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 515.105.01 Driver Version: 515.105.01 CUDA Version: 11.7 |
|-------------------------------+----------------------+----------------------+
| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |
| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |
| | | MIG M. |
|===============================+======================+======================|
| 1 NVIDIA GeForce ... Off | 00000000:04:00.0 Off | N/A |
| 37% 70C P2 163W / 170W | 4923MiB / 12288MiB | 91% Default |
| | | N/A |
+-------------------------------+----------------------+----------------------+

```
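As a rough cross-check of the 4923MiB reading, 4-bit weights take half a byte per parameter. The back-of-the-envelope sketch below assumes a nominal 6 billion parameters for GPT-J-6B. It ignores quantization constants, any layers kept in 16-bit, activations, the KV cache and the CUDA context, which plausibly account for the remainder.

```python
def weight_memory_gib(num_params: float, bits_per_param: float) -> float:
    # Bytes needed for the raw weights, converted to GiB (2**30 bytes).
    return num_params * bits_per_param / 8 / 2**30


for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit: {weight_memory_gib(6e9, bits):5.2f} GiB")
```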

## Fine-tuning

```

3 epochs, all dataset samples (split=train), 939 steps
1 x GPU NVidia RTX 3060 12GB - max. GPU memory: 7.44 GB
Duration: 1h45min

$ nvidia-smi && free -h
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 515.105.01 Driver Version: 515.105.01 CUDA Version: 11.7 |
|-------------------------------+----------------------+----------------------+
| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |
| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |
| | | MIG M. |
|===============================+======================+======================|
| 1 NVIDIA GeForce ... Off | 00000000:04:00.0 Off | N/A |
|100% 89C P2 166W / 170W | 7439MiB / 12288MiB | 93% Default |
| | | N/A |
+-------------------------------+----------------------+----------------------+
total used free shared buff/cache available
Mem: 77Gi 14Gi 23Gi 79Mi 39Gi 62Gi
Swap: 37Gi 0B 37Gi

```

## Inference

```python
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM, BitsAndBytesConfig

model_path = "nlpulse/gpt-j-6b-english_quotes"

# tokenizer (GPT-J has no pad token, so reuse EOS)
tokenizer = AutoTokenizer.from_pretrained(model_path)
tokenizer.pad_token = tokenizer.eos_token

# quantization config: 4-bit NF4 weights with double quantization, bf16 compute
quant_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_use_double_quant=True,
    bnb_4bit_quant_type="nf4",
    bnb_4bit_compute_dtype=torch.bfloat16,
)

# model, loaded entirely onto GPU 0
model = AutoModelForCausalLM.from_pretrained(model_path, quantization_config=quant_config, device_map={"": 0})

# inference
device = "cuda"
text_list = ["Ask not what your country", "Be the change that", "You only live once, but", "I'm selfish, impatient and"]
for text in text_list:
    inputs = tokenizer(text, return_tensors="pt").to(device)
    outputs = model.generate(**inputs, max_new_tokens=60)
    print('>> ', text, " => ", tokenizer.decode(outputs[0], skip_special_tokens=True))
```

## Requirements

```

pip install -U bitsandbytes
pip install -U git+https://github.com/huggingface/transformers.git
pip install -U git+https://github.com/huggingface/peft.git
pip install -U accelerate
pip install -U datasets
pip install -U scipy

```

## Scripts

[https://github.com/nlpulse-io/sample_codes/tree/main/fine-tuning/peft_quantization_4bits/gptj-6b](https://github.com/nlpulse-io/sample_codes/tree/main/fine-tuning/peft_quantization_4bits/gptj-6b)

## References

[QLoRa: Fine-Tune a Large Language Model on Your GPU](https://towardsdatascience.com/qlora-fine-tune-a-large-language-model-on-your-gpu-27bed5a03e2b)

[Making LLMs even more accessible with bitsandbytes, 4-bit quantization and QLoRA](https://huggingface.co/blog/4bit-transformers-bitsandbytes)

|

DunnBC22/vit-base-patch16-224-in21k_car_or_motorcycle | DunnBC22 | 2023-07-24T18:34:41Z | 181 | 2 | transformers | ["transformers", "pytorch", "tensorboard", "vit", "image-classification", "generated_from_trainer", "en", "dataset:imagefolder", "license:apache-2.0", "model-index", "autotrain_compatible", "endpoints_compatible", "region:us"] | image-classification | 2023-01-07T02:05:05Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imagefolder

metrics:

- accuracy

- f1

- recall

- precision

model-index:

- name: vit-base-patch16-224-in21k_car_or_motorcycle

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: imagefolder

type: imagefolder

config: default

split: train

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.99375

language:

- en

pipeline_tag: image-classification

---

# vit-base-patch16-224-in21k_car_or_motorcycle

This model is a fine-tuned version of [google/vit-base-patch16-224-in21k](https://huggingface.co/google/vit-base-patch16-224-in21k) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0301

- Accuracy: 0.9938

- F1: 0.9939

- Recall: 0.9927

- Precision: 0.9951
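For binary classification, F1 is the harmonic mean of precision and recall, so the reported F1 can be checked from the other two numbers. The reported precision and recall are themselves rounded, so the check is only accurate to about the fourth decimal.

```python
def f1_score(precision: float, recall: float) -> float:
    # Harmonic mean of precision and recall; defined as 0 when both are 0.
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


print(round(f1_score(0.9951, 0.9927), 4))  # 0.9939
```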

## Model description

This is a binary classification model to distinguish between images of cars and images of motorcycles.

For more information on how it was created, check out the following link: https://github.com/DunnBC22/Vision_Audio_and_Multimodal_Projects/blob/main/Computer%20Vision/Image%20Classification/Binary%20Classification/Car%20or%20Motorcycle/Car_or_Motorcycle_ViT.ipynb

## Intended uses & limitations

This model is intended to demonstrate my ability to solve a complex problem using technology.

## Training and evaluation data

Dataset Source: https://www.kaggle.com/datasets/utkarshsaxenadn/car-vs-bike-classification-dataset

_Sample Images From Dataset:_

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 | Recall | Precision |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|:------:|:---------:|

| 0.6908 | 1.0 | 200 | 0.0372 | 0.99 | 0.9902 | 0.9902 | 0.9902 |

| 0.6908 | 2.0 | 400 | 0.0301 | 0.9938 | 0.9939 | 0.9927 | 0.9951 |

### Framework versions

- Transformers 4.22.2

- Pytorch 1.12.1

- Datasets 2.5.2

- Tokenizers 0.12.1

|

DunnBC22/hateBERT-Hate_Offensive_or_Normal_Speech | DunnBC22 | 2023-07-24T18:30:54Z | 101 | 1 | transformers | ["transformers", "pytorch", "tensorboard", "bert", "text-classification", "generated_from_trainer", "en", "autotrain_compatible", "endpoints_compatible", "region:us"] | text-classification | 2023-03-03T20:49:11Z |

---

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: hateBERT-Hate_Offensive_or_Normal_Speech

results: []

language:

- en

---

# hateBERT-Hate_Offensive_or_Normal_Speech

This model is a fine-tuned version of [GroNLP/hateBERT](https://huggingface.co/GroNLP/hateBERT) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1655
- Accuracy: 0.9410
- F1
  - Weighted: 0.9395
  - Micro: 0.9410
  - Macro: 0.9351
- Recall
  - Weighted: 0.9410
  - Micro: 0.9410
  - Macro: 0.9273
- Precision
  - Weighted: 0.9447
  - Micro: 0.9410
  - Macro: 0.9510

## Model description

For more information on how it was created, check out the following link: https://github.com/DunnBC22/NLP_Projects/blob/main/Multiclass%20Classification/Transformer%20Comparison/Hate%20%26%20Offensive%20Speech%20-%20hateBERT.ipynb

### Associated Models

This project is part of a comparison that included the following models:

- https://huggingface.co/DunnBC22/bert-large-uncased-Hate_Offensive_or_Normal_Speech

- https://huggingface.co/DunnBC22/bert-base-uncased-Hate_Offensive_or_Normal_Speech

- https://huggingface.co/DunnBC22/distilbert-base-uncased-Hate_Offensive_or_Normal_Speech

- https://huggingface.co/DunnBC22/fBERT-Hate_Offensive_or_Normal_Speech

## Intended uses & limitations

This model is intended to demonstrate my ability to solve a complex problem using technology.

The main limitation is the quality of the data source.

## Training and evaluation data

Dataset Source: https://www.kaggle.com/datasets/subhajournal/normal-hate-and-offensive-speeches

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Weighted F1 | Micro F1 | Macro F1 | Weighted Recall | Micro Recall | Macro Recall | Weighted Precision | Micro Precision | Macro Precision |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:-----------:|:--------:|:--------:|:---------------:|:------------:|:------------:|:------------------:|:---------------:|:---------------:|

| 0.8958 | 1.0 | 39 | 0.6817 | 0.5508 | 0.4792 | 0.5508 | 0.4395 | 0.5508 | 0.5508 | 0.4853 | 0.7547 | 0.5508 | 0.7906 |

| 0.4625 | 2.0 | 78 | 0.2448 | 0.9246 | 0.9230 | 0.9246 | 0.9170 | 0.9246 | 0.9246 | 0.9103 | 0.9263 | 0.9246 | 0.9296 |

| 0.2071 | 3.0 | 117 | 0.1655 | 0.9410 | 0.9395 | 0.9410 | 0.9351 | 0.9410 | 0.9410 | 0.9273 | 0.9447 | 0.9410 | 0.9510 |
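The table reports three averages of the per-class scores. Below is a minimal pure-Python sketch of micro, macro and weighted F1 for single-label multiclass predictions, using the same definitions as scikit-learn's `f1_score` with `average="micro"`, `"macro"` and `"weighted"`. In this setting micro F1 equals accuracy, which is consistent with the identical Accuracy and Micro columns above. The labels in the example are made up.

```python
from collections import Counter


def f1_averages(y_true, y_pred):
    """Return (micro, macro, weighted) F1 for single-label multiclass predictions."""
    labels = sorted(set(y_true) | set(y_pred))
    tp = Counter(t for t, p in zip(y_true, y_pred) if t == p)  # correct per class
    pred_count = Counter(y_pred)   # predictions per class
    true_count = Counter(y_true)   # support per class

    def f1(hits, predicted, actual):
        precision = hits / predicted if predicted else 0.0
        recall = hits / actual if actual else 0.0
        return 2 * precision * recall / (precision + recall) if precision + recall else 0.0

    per_class = {c: f1(tp[c], pred_count[c], true_count[c]) for c in labels}
    n = len(y_true)
    # Micro: pool every decision; with one label per example this equals accuracy.
    micro = f1(sum(tp.values()), n, n)
    # Macro: unweighted mean over classes.
    macro = sum(per_class.values()) / len(labels)
    # Weighted: mean over classes, weighted by each class's support.
    weighted = sum(per_class[c] * true_count[c] for c in labels) / n
    return micro, macro, weighted


y_true = ["hate", "hate", "hate", "offensive", "normal", "normal"]
y_pred = ["hate", "hate", "normal", "normal", "normal", "normal"]
micro, macro, weighted = f1_averages(y_true, y_pred)
print(f"micro={micro:.4f} macro={macro:.4f} weighted={weighted:.4f}")
# micro=0.6667 macro=0.4889 weighted=0.6222
```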

### Framework versions

- Transformers 4.26.1

- Pytorch 1.12.1

- Datasets 2.9.0

- Tokenizers 0.12.1

|

ntu-spml/distilhubert | ntu-spml | 2023-07-24T18:30:45Z | 2,421 | 31 | transformers | ["transformers", "pytorch", "safetensors", "hubert", "feature-extraction", "speech", "en", "dataset:librispeech_asr", "arxiv:2110.01900", "license:apache-2.0", "endpoints_compatible", "region:us"] | feature-extraction | 2022-03-02T23:29:05Z |

---

language: en

datasets:

- librispeech_asr

tags:

- speech

license: apache-2.0

---

# DistilHuBERT

[DistilHuBERT by NTU Speech Processing & Machine Learning Lab](https://github.com/s3prl/s3prl/tree/master/s3prl/upstream/distiller)

This is the base model, pretrained on speech audio sampled at 16kHz. When using the model, make sure that your speech input is also sampled at 16kHz.

**Note**: This model does not have a tokenizer as it was pretrained on audio alone. To use this model for **speech recognition**, a tokenizer should be created and the model should be fine-tuned on labeled text data. Check out [this blog](https://huggingface.co/blog/fine-tune-wav2vec2-english) for a more detailed explanation of how to fine-tune the model.

Paper: [DistilHuBERT: Speech Representation Learning by Layer-wise Distillation of Hidden-unit BERT](https://arxiv.org/abs/2110.01900)

Authors: Heng-Jui Chang, Shu-wen Yang, Hung-yi Lee

**Abstract**

Self-supervised speech representation learning methods like wav2vec 2.0 and Hidden-unit BERT (HuBERT) leverage unlabeled speech data for pre-training and offer good representations for numerous speech processing tasks. Despite the success of these methods, they require large memory and high pre-training costs, making them inaccessible for researchers in academia and small companies. Therefore, this paper introduces DistilHuBERT, a novel multi-task learning framework to distill hidden representations from a HuBERT model directly. This method reduces HuBERT's size by 75% and 73% faster while retaining most performance in ten different tasks. Moreover, DistilHuBERT required little training time and data, opening the possibilities of pre-training personal and on-device SSL models for speech.

The original model can be found at https://github.com/s3prl/s3prl/tree/master/s3prl/upstream/distiller.

# Usage

See [this blog](https://huggingface.co/blog/fine-tune-wav2vec2-english) for more information on how to fine-tune the model. Note that the class `Wav2Vec2ForCTC` has to be replaced by `HubertForCTC`.

|

voidful/tts_hubert_cluster_bart_base

|

voidful

| 2023-07-24T18:30:35Z | 61 | 1 |

transformers

|

[

"transformers",

"pytorch",

"safetensors",

"bart",

"text2text-generation",

"audio",

"automatic-speech-recognition",

"speech",

"asr",

"hubert",

"en",

"dataset:librispeech",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2022-03-02T23:29:05Z |

---

language: en

datasets:

- librispeech

tags:

- audio

- automatic-speech-recognition

- speech

- asr

- hubert

license: apache-2.0

metrics:

- wer

- cer

---

# voidful/tts_hubert_cluster_bart_base

## Usage

````python

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

tokenizer = AutoTokenizer.from_pretrained("voidful/tts_hubert_cluster_bart_base")

model = AutoModelForSeq2SeqLM.from_pretrained("voidful/tts_hubert_cluster_bart_base")

````

Generate output:

```python

gen_output = model.generate(input_ids=tokenizer("going along slushy country roads and speaking to damp audience in drifty school rooms day after day for a fortnight he'll have to put in an appearance at some place of worship on sunday morning and he can come to ask immediately afterwards",return_tensors='pt').input_ids, max_length=1024)

print(tokenizer.decode(gen_output[0], skip_special_tokens=True))

```

## Result

`:vtok402::vtok329::vtok329::vtok75::vtok75::vtok75::vtok44::vtok150::vtok150::vtok222::vtok280::vtok280::vtok138::vtok409::vtok409::vtok409::vtok46::vtok441:`
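The generated string is a sequence of `:vtokN:` unit tokens; judging from the model name, these are presumably indices of HuBERT cluster units (an assumption, not stated in the card). The sketch below extracts the integer IDs with a regular expression and, optionally, collapses consecutive repeats, a step often applied before feeding discrete units to a vocoder. `parse_units` and `merge_repeats` are hypothetical helpers.

```python
import re
from itertools import groupby

# Matches one unit token such as ":vtok402:" and captures the number.
VTOK_PATTERN = re.compile(r":vtok(\d+):")


def parse_units(generated: str) -> list[int]:
    """Extract the integer IDs from a string like ':vtok402::vtok329:'."""
    return [int(m) for m in VTOK_PATTERN.findall(generated)]


def merge_repeats(units: list[int]) -> list[int]:
    """Collapse runs of the same unit, e.g. [329, 329, 75] -> [329, 75]."""
    return [unit for unit, _ in groupby(units)]


output = ":vtok402::vtok329::vtok329::vtok75::vtok75::vtok75:"
units = parse_units(output)
print(units)                 # [402, 329, 329, 75, 75, 75]
print(merge_repeats(units))  # [402, 329, 75]
```

In practice, `output` would be the decoded string from the `tokenizer.decode(...)` call above.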

|

S1X3L4/a2c-PandaReachDense-v2

|

S1X3L4

| 2023-07-24T18:29:45Z | 2 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"PandaReachDense-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-07-24T18:26:40Z |

---

library_name: stable-baselines3

tags:

- PandaReachDense-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: A2C

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: PandaReachDense-v2

type: PandaReachDense-v2

metrics:

- type: mean_reward

value: -1.52 +/- 0.46

name: mean_reward

verified: false

---

# **A2C** Agent playing **PandaReachDense-v2**

This is a trained model of an **A2C** agent playing **PandaReachDense-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

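A minimal loading sketch follows. The checkpoint filename is an assumption based on the usual `huggingface_sb3` naming convention, so check the repository's file list first.

```python
from huggingface_sb3 import load_from_hub
from stable_baselines3 import A2C

# Download the checkpoint from the Hub (filename assumed; verify it in the repo).
checkpoint = load_from_hub(
    repo_id="S1X3L4/a2c-PandaReachDense-v2",
    filename="a2c-PandaReachDense-v2.zip",
)
model = A2C.load(checkpoint)
```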

|

DunnBC22/led-base-16384-text_summarization_data

|

DunnBC22

| 2023-07-24T18:24:45Z | 102 | 1 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"led",

"text2text-generation",

"generated_from_trainer",

"summarization",

"en",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

summarization

| 2023-03-08T05:58:45Z |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- rouge

model-index:

- name: led-base-16384-text_summarization_data

results: []

language:

- en

pipeline_tag: summarization

---

# led-base-16384-text_summarization_data

This model is a fine-tuned version of [allenai/led-base-16384](https://huggingface.co/allenai/led-base-16384) on a text summarization dataset from Kaggle (see "Training and evaluation data").

It achieves the following results on the evaluation set:

- Loss: 0.9531

- Rouge1: 43.3689

- Rouge2: 19.9885

- RougeL: 39.9887

- RougeLsum: 40.0679

- Gen Len: 14.0392
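ROUGE-1 measures unigram overlap between a generated summary and a reference. The scores above are presumably F-measures scaled to 0-100 and averaged over the evaluation set. The sketch below is a simplified stand-in for the `rouge_score` package that `evaluate` typically wraps: it uses lowercase alphanumeric tokens, no stemming, and a single reference. `rouge1` is a hypothetical helper.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase alphanumeric tokens (a simplification of rouge_score's tokenizer)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def rouge1(reference: str, candidate: str) -> dict[str, float]:
    """Unigram-overlap precision, recall and F-measure against one reference."""
    ref_counts = Counter(tokenize(reference))
    cand_counts = Counter(tokenize(candidate))
    # Clipped overlap: each word counts at most min(count in ref, count in cand).
    overlap = sum((ref_counts & cand_counts).values())
    precision = overlap / max(sum(cand_counts.values()), 1)
    recall = overlap / max(sum(ref_counts.values()), 1)
    f = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return {"precision": precision, "recall": recall, "fmeasure": f}


scores = rouge1("The cat sat on the mat.", "The cat lay on the mat.")
print(round(100 * scores["fmeasure"], 2))  # 0-100 scale, like the card -> 83.33
```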

## Model description

This is a text summarization model.

For more information on how it was created, check out the following link: https://github.com/DunnBC22/NLP_Projects/blob/main/Text%20Summarization/Text-Summarized%20Data%20-%20Comparison/LED%20-%20Text%20Summarization%20-%204%20Epochs.ipynb

## Intended uses & limitations

This model is intended to demonstrate my ability to solve a complex problem using technology.

## Training and evaluation data

Dataset Source: https://www.kaggle.com/datasets/cuitengfeui/textsummarization-data

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | RougeL | RougeLsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:-------:|:-------:|:---------:|:-------:|

| 1.329 | 1.0 | 1197 | 0.9704 | 42.4111 | 19.8995 | 39.4717 | 39.5449 | 14.254 |

| 0.8367 | 2.0 | 2394 | 0.9425 | 43.1141 | 19.6089 | 39.7533 | 39.8298 | 14.1058 |

| 0.735 | 3.0 | 3591 | 0.9421 | 42.8101 | 19.8281 | 39.617 | 39.6751 | 13.7101 |

| 0.6737 | 4.0 | 4788 | 0.9531 | 43.3689 | 19.9885 | 39.9887 | 40.0679 | 14.0392 |

### Framework versions

- Transformers 4.26.1

- Pytorch 1.12.1

- Datasets 2.9.0

- Tokenizers 0.12.1

|

mcamara/whisper-tiny-dv

|

mcamara

| 2023-07-24T18:20:33Z | 81 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"whisper",

"automatic-speech-recognition",

"generated_from_trainer",

"dataset:PolyAI/minds14",

"base_model:openai/whisper-tiny",

"base_model:finetune:openai/whisper-tiny",

"license:apache-2.0",

"model-index",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2023-07-24T15:14:39Z |

---

license: apache-2.0

base_model: openai/whisper-tiny

tags:

- generated_from_trainer

datasets:

- PolyAI/minds14

metrics:

- wer

model-index:

- name: whisper-tiny-dv

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: PolyAI/minds14

type: PolyAI/minds14

config: en-US

split: train

args: en-US

metrics:

- name: Wer

type: wer

value: 57.438016528925615

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# whisper-tiny-dv

This model is a fine-tuned version of [openai/whisper-tiny](https://huggingface.co/openai/whisper-tiny) on the PolyAI/minds14 dataset.

It achieves the following results on the evaluation set:

- Loss: 1.4917

- Wer Ortho: 58.5441

- Wer: 57.4380
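WER is the word-level edit distance (substitutions + deletions + insertions) divided by the number of reference words, reported here as a percentage. "Wer Ortho" is presumably computed on the raw orthographic text and "Wer" after text normalization, which explains why the normalized score is slightly lower. The sketch below uses a deliberately simple normalizer (lowercasing and stripping punctuation). This is an assumption, not Whisper's own normalizer. `word_error_rate` and `normalize` are hypothetical helpers.

```python
import re


def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    # prev[j] = edit distance between the reference prefix so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[-1] / max(len(ref), 1)


def normalize(text: str) -> str:
    """Simplified normalizer (assumption): lowercase, drop punctuation except apostrophes."""
    return re.sub(r"[^\w\s']", "", text.lower())


ref = "I'd like to check my balance."
hyp = "i'd like to check my balance"
print(100 * word_error_rate(ref, hyp))                        # orthographic WER
print(100 * word_error_rate(normalize(ref), normalize(hyp)))  # normalized WER
```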

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: constant_with_warmup

- lr_scheduler_warmup_steps: 50

- training_steps: 5000
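With `lr_scheduler_type: constant_with_warmup`, the learning rate ramps linearly from 0 to its peak over the warmup steps and then stays flat for the rest of training. The sketch below reproduces that shape with this run's values (peak 1e-05, 50 warmup steps). The formula follows the behaviour of `transformers.get_constant_schedule_with_warmup` as we understand it, and `constant_with_warmup_lr` is a hypothetical name.

```python
def constant_with_warmup_lr(step: int, peak_lr: float = 1e-5, warmup_steps: int = 50) -> float:
    """Linear ramp from 0 to peak_lr over warmup_steps, then constant."""
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    return peak_lr


for step in (0, 25, 50, 5000):
    print(step, constant_with_warmup_lr(step))
```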

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer Ortho | Wer |

|:-------------:|:------:|:----:|:---------------:|:---------:|:-------:|

| 0.0013 | 17.86 | 500 | 1.0739 | 56.0148 | 55.3719 |

| 0.0003 | 35.71 | 1000 | 1.1575 | 54.3492 | 53.6009 |

| 0.0002 | 53.57 | 1500 | 1.2226 | 55.3979 | 54.7226 |

| 0.0001 | 71.43 | 2000 | 1.2711 | 56.6934 | 55.4900 |

| 0.0001 | 89.29 | 2500 | 1.3089 | 56.1999 | 55.1948 |

| 0.0 | 107.14 | 3000 | 1.3487 | 55.4596 | 54.4864 |

| 0.0 | 125.0 | 3500 | 1.3865 | 56.4466 | 55.5490 |

| 0.0 | 142.86 | 4000 | 1.4259 | 58.9759 | 57.6741 |

| 0.0 | 160.71 | 4500 | 1.4563 | 58.2973 | 57.0838 |

| 0.0 | 178.57 | 5000 | 1.4917 | 58.5441 | 57.4380 |

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu117

- Datasets 2.13.1

- Tokenizers 0.13.3

|

ManelSR/ppo-LunarLander-v2

|

ManelSR

| 2023-07-24T18:19:11Z | 0 | 0 |

stable-baselines3

|

[

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-07-24T18:16:40Z |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 223.37 +/- 16.43

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

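A minimal loading sketch follows. The checkpoint filename is an assumption based on the usual `huggingface_sb3` naming convention, so check the repository's file list first.

```python
from huggingface_sb3 import load_from_hub
from stable_baselines3 import PPO

# Download the checkpoint from the Hub (filename assumed; verify it in the repo).
checkpoint = load_from_hub(
    repo_id="ManelSR/ppo-LunarLander-v2",
    filename="ppo-LunarLander-v2.zip",
)
model = PPO.load(checkpoint)
```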

|

DunnBC22/vit-base-patch16-224-in21k_vegetables_clf

|

DunnBC22

| 2023-07-24T18:17:32Z | 233 | 5 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"vit",

"image-classification",

"generated_from_trainer",

"en",

"dataset:imagefolder",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

image-classification

| 2023-01-11T04:04:24Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imagefolder

metrics:

- accuracy

- f1

- recall

- precision

model-index:

- name: vit-base-patch16-224-in21k_vegetables_clf

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: imagefolder

type: imagefolder

config: default

split: train

args: default

metrics:

- name: Accuracy

type: accuracy

value: 1

language:

- en

pipeline_tag: image-classification

---

# vit-base-patch16-224-in21k_vegetables_clf

This model is a fine-tuned version of [google/vit-base-patch16-224-in21k](https://huggingface.co/google/vit-base-patch16-224-in21k).

It achieves the following results on the evaluation set:

- Loss: 0.0014

- Accuracy: 1.0

- F1
  - Weighted: 1.0
  - Micro: 1.0
  - Macro: 1.0
- Recall
  - Weighted: 1.0
  - Micro: 1.0
  - Macro: 1.0
- Precision
  - Weighted: 1.0
  - Micro: 1.0
  - Macro: 1.0
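The three averages differ in how per-class scores are combined. Macro averages per-class F1 equally, while weighted averages it by each class's support (number of true examples). Micro pools all true positives, false positives, and false negatives. For single-label multiclass data, micro precision, recall, and F1 all equal accuracy, which is why those columns match the accuracy column in the training-results table. The plain-Python sketch below follows these definitions, assuming they match scikit-learn's `f1_score` averaging modes that likely produced the card's numbers. `f1_averages` is a hypothetical helper.

```python
from collections import Counter


def f1_averages(y_true, y_pred) -> dict[str, float]:
    """Micro, macro and support-weighted F1 for single-label multiclass labels."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted class gets a false positive
            fn[t] += 1  # true class gets a false negative
    support = Counter(y_true)

    def f1(tp_: int, fp_: int, fn_: int) -> float:
        denom = 2 * tp_ + fp_ + fn_  # F1 = 2TP / (2TP + FP + FN)
        return 2 * tp_ / denom if denom else 0.0

    per_class = {c: f1(tp[c], fp[c], fn[c]) for c in labels}
    return {
        "micro": f1(sum(tp.values()), sum(fp.values()), sum(fn.values())),
        "macro": sum(per_class.values()) / len(labels),
        "weighted": sum(per_class[c] * support[c] for c in labels) / len(y_true),
    }


y_true = ["bean", "bean", "carrot", "potato"]
y_pred = ["bean", "carrot", "carrot", "potato"]
print(f1_averages(y_true, y_pred))
```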

## Model description

This is a multiclass image classification model of different vegetables.

For more information on how it was created, check out the following link: https://github.com/DunnBC22/Vision_Audio_and_Multimodal_Projects/blob/main/Computer%20Vision/Image%20Classification/Multiclass%20Classification/Vegetable%20Image%20Classification/Vegetables_ViT.ipynb

## Intended uses & limitations

This model is intended to demonstrate my ability to solve a complex problem using technology.

## Training and evaluation data

Dataset Source: https://www.kaggle.com/datasets/misrakahmed/vegetable-image-dataset


## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Weighted F1 | Micro F1 | Macro F1 | Weighted Recall | Micro Recall | Macro Recall | Weighted Precision | Micro Precision | Macro Precision |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:-----------:|:--------:|:--------:|:---------------:|:------------:|:------------:|:------------------:|:---------------:|:---------------:|

| 0.2079 | 1.0 | 938 | 0.0193 | 0.996 | 0.9960 | 0.996 | 0.9960 | 0.996 | 0.996 | 0.9960 | 0.9960 | 0.996 | 0.9960 |

| 0.0154 | 2.0 | 1876 | 0.0068 | 0.9987 | 0.9987 | 0.9987 | 0.9987 | 0.9987 | 0.9987 | 0.9987 | 0.9987 | 0.9987 | 0.9987 |

| 0.0018 | 3.0 | 2814 | 0.0014 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.12.1

- Datasets 2.8.0

- Tokenizers 0.12.1

|

ailabturkiye/mazlumkiper

|

ailabturkiye

| 2023-07-24T18:17:30Z | 0 | 0 | null |

[

"region:us"

] | null | 2023-07-24T18:13:57Z |

[](discord.gg/ailab)

# Mazlum Kiper (Voice Actor) - RVC V2, 300 Epochs

**This is the voice model of voice actor Mazlum Kiper.**

**Trained with RVC V2 on a 23-minute dataset for 300 epochs.**

_The dataset and training were done by me._

__Sharing this model outside the [Ai Lab Discord](discord.gg/ailab) server without permission is strictly prohibited. The model is licensed under OpenRAIL.__

## Credits

**If you share a cover made with this model on any platform, please give credit.**

- Discord: hydragee

- YouTube: CoverLai (https://www.youtube.com/@coverlai)


|

jakariamd/opp_115_policy_change

|

jakariamd

| 2023-07-24T18:17:18Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"roberta",

"text-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2023-07-24T18:09:44Z |

---

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: opp_115_policy_change

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# opp_115_policy_change

This model is a fine-tuned version of [mukund/privbert](https://huggingface.co/mukund/privbert) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0403

- Accuracy: 0.9920

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 1.0 | 171 | 0.0609 | 0.9890 |

| No log | 2.0 | 342 | 0.0403 | 0.9920 |

### Framework versions

- Transformers 4.28.0

- Pytorch 2.0.0

- Datasets 2.1.0

- Tokenizers 0.13.3

|

afh77788bh/my_awesome_qa_model

|

afh77788bh

| 2023-07-24T18:13:08Z | 61 | 0 |

transformers

|

[

"transformers",

"tf",

"distilbert",

"question-answering",

"generated_from_keras_callback",

"base_model:distilbert/distilbert-base-uncased",

"base_model:finetune:distilbert/distilbert-base-uncased",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2023-07-24T02:37:55Z |

---

license: apache-2.0

base_model: distilbert-base-uncased

tags:

- generated_from_keras_callback

model-index:

- name: afh77788bh/my_awesome_qa_model

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# afh77788bh/my_awesome_qa_model

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 3.5352

- Validation Loss: 2.3334

- Epoch: 0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'Adam', 'weight_decay': None, 'clipnorm': None, 'global_clipnorm': None, 'clipvalue': None, 'use_ema': False, 'ema_momentum': 0.99, 'ema_overwrite_frequency': None, 'jit_compile': False, 'is_legacy_optimizer': False, 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 2e-05, 'decay_steps': 500, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False}

- training_precision: float32
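The optimizer config uses a Keras `PolynomialDecay` schedule with `power=1.0`, `end_learning_rate=0.0` and `cycle=False`. In effect, this is a linear decay from 2e-05 to 0 over 500 steps, after which the rate stays at 0. The plain-Python sketch below follows the formula given in the Keras documentation for that schedule. `polynomial_decay` is a hypothetical name.

```python
def polynomial_decay(step: int, initial_lr: float = 2e-5, decay_steps: int = 500,
                     end_lr: float = 0.0, power: float = 1.0) -> float:
    """Keras-style PolynomialDecay with cycle=False."""
    step = min(step, decay_steps)  # hold at end_lr once decay_steps is reached
    fraction_left = 1 - step / decay_steps
    return (initial_lr - end_lr) * fraction_left ** power + end_lr


for step in (0, 250, 500, 600):
    print(step, polynomial_decay(step))
```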

### Training results

| Train Loss | Validation Loss | Epoch |

|:----------:|:---------------:|:-----:|

| 3.5352 | 2.3334 | 0 |

### Framework versions

- Transformers 4.31.0

- TensorFlow 2.12.0

- Datasets 2.13.1

- Tokenizers 0.13.3

|

NbAiLab/nb-whisper-base-beta

|

NbAiLab

| 2023-07-24T18:05:55Z | 84 | 1 |

transformers

|

[

"transformers",

"pytorch",

"tf",

"jax",

"onnx",

"safetensors",

"whisper",

"automatic-speech-recognition",

"audio",

"asr",

"hf-asr-leaderboard",

"no",

"nb",

"nn",

"en",

"dataset:NbAiLab/ncc_speech",

"dataset:NbAiLab/NST",

"dataset:NbAiLab/NPSC",

"arxiv:2212.04356",

"arxiv:1910.09700",

"license:cc-by-4.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2023-07-23T19:30:09Z |

---

license: cc-by-4.0

language:

- 'no'

- nb

- nn

- en

datasets:

- NbAiLab/ncc_speech

- NbAiLab/NST

- NbAiLab/NPSC

tags:

- audio

- asr

- automatic-speech-recognition

- hf-asr-leaderboard

metrics:

- wer

- cer

library_name: transformers

pipeline_tag: automatic-speech-recognition

widget:

- src: https://datasets-server.huggingface.co/assets/google/fleurs/--/nb_no/train/1/audio/audio.mp3

example_title: FLEURS sample 1

- src: https://datasets-server.huggingface.co/assets/google/fleurs/--/nb_no/train/4/audio/audio.mp3

example_title: FLEURS sample 2

---

# NB-Whisper Base (beta)

This is a **_public beta_** of the Norwegian NB-Whisper Base model released by the National Library of Norway. NB-Whisper is a series of models for automatic speech recognition (ASR) and speech translation, building upon the foundation laid by [OpenAI's Whisper](https://arxiv.org/abs/2212.04356). All models are trained on 20,000 hours of labeled data.

<center>

<figure>

<video controls>

<source src="https://huggingface.co/NbAiLab/nb-whisper-small-beta/resolve/main/king.mp4" type="video/mp4">

Your browser does not support the video tag.

</video>

<figcaption><a href="https://www.royalcourt.no/tale.html?tid=137662&sek=28409&scope=27248" target="_blank">Speech given by His Majesty The King of Norway at the garden party hosted by Their Majesties The King and Queen at the Palace Park on 1 September 2016.</a> Transcribed using the Small model.</figcaption>

</figure>

</center>

## Model Details

NB-Whisper models will be available in five different sizes:

| Model Size | Parameters | Availability |

|------------|------------|--------------|

| tiny | 39M | [NB-Whisper Tiny (beta)](https://huggingface.co/NbAiLab/nb-whisper-tiny-beta) |

| base | 74M | [NB-Whisper Base (beta)](https://huggingface.co/NbAiLab/nb-whisper-base-beta) |

| small | 244M | [NB-Whisper Small (beta)](https://huggingface.co/NbAiLab/nb-whisper-small-beta) |

| medium | 769M | [NB-Whisper Medium (beta)](https://huggingface.co/NbAiLab/nb-whisper-medium-beta) |

| large | 1550M | [NB-Whisper Large (beta)](https://huggingface.co/NbAiLab/nb-whisper-large-beta) |

An official release of NB-Whisper models is planned for fall 2023.

Please refer to the OpenAI Whisper model card for more details about the backbone model.

### Model Description

- **Developed by:** [NB AI-Lab](https://ai.nb.no/)

- **Shared by:** [NB AI-Lab](https://ai.nb.no/)

- **Model type:** `whisper`

- **Language(s) (NLP):** Norwegian, Norwegian Bokmål, Norwegian Nynorsk, English

- **License:** [Creative Commons Attribution 4.0 International (CC BY 4.0)](https://creativecommons.org/licenses/by/4.0/)

- **Finetuned from model:** [openai/whisper-base](https://huggingface.co/openai/whisper-base)

### Model Sources

<!-- Provide the basic links for the model. -->

- **Repository:** https://github.com/NbAiLab/nb-whisper/

- **Paper:** _Coming soon_

- **Demo:** http://ai.nb.no/demo/nb-whisper

## Uses

### Direct Use

This is a **_public beta_** release. The models published in this repository are intended for general-purpose use and are available to third parties.

### Downstream Use

For Norwegian transcriptions, we are confident that this public beta will give you state-of-the-art results compared to currently available Norwegian ASR models of the same size. However, it is still known to hallucinate at times and to occasionally drop parts of the transcript. Please also note that the transcripts are typically not verbatim. Spoken and written language often differ considerably, and the model aims to "translate" spoken utterances into grammatically correct written sentences. We strongly believe that the best way to understand these models is to try them yourself.

A significant part of the training material comes from TV subtitles. Subtitles often shorten the content to make it easier to read, and non-essential parts of an utterance can also be dropped. In some cases this is desirable; in others it is not. The final release of these models will provide a mechanism to control this behaviour.

## Bias, Risks, and Limitations

This is a public beta that is not intended for production. Production use without adequate assessment of risks and mitigation may be considered irresponsible or harmful. These models may have bias and/or other undesirable distortions. When third parties deploy or provide systems and/or services to other parties using any of these models (or using systems based on these models) or become users of the models, they should note that it is their responsibility to mitigate the risks arising from their use and, in any event, to comply with applicable regulations, including regulations regarding the use of artificial intelligence. In no event shall the owner of the models (The National Library of Norway) be liable for any results arising from the use made by third parties of these models.

## How to Get Started with the Model

Use the code below to get started with the model.

```python

from transformers import pipeline

asr = pipeline(

"automatic-speech-recognition",

"NbAiLab/nb-whisper-base-beta"

)

asr(

"audio.mp3",

generate_kwargs={'task': 'transcribe', 'language': 'no'}

)

# {'text': ' Så mange anga kører seg i så viktig sak, så vi får du kører det tilbake med. Om kabaret gudam i at vi skal hjælge. Kør seg vi gjør en uda? Nei noe skal å abelistera sonvorne skrifer. Det er sak, så kjent det bare handling i samtatsen til bargører. Trudet første lask. På den å først så å køre og en gange samme, og så får vi gjør å vorte vorte vorte når vi kjent dit.'}

```

Timestamps can also be retrieved by passing `return_timestamps=True` to the pipeline call.

```python

asr(

"audio.mp3",

generate_kwargs={'task': 'transcribe', 'language': 'no'},

return_timestamps=True,

)

# {'text': ' at så mange angar til seg så viktig sak, så vi får jo kjølget klare tilbakemeldingen om hva valget dem gjør at vi skal gjøre. Hva skjer vi gjøre nå da? Nei, nå skal jo administrationen vår skrivferdige sak, så kjem til behandling i samfærdshetshøyvalget, tror det første år. Først så kan vi ta og henge dem kjemme, og så får vi gjøre vårt valget når vi kommer dit.',

# 'chunks': [{'timestamp': (0.0, 5.34),

# 'text': ' at så mange angar til seg så viktig sak, så vi får jo kjølget klare tilbakemeldingen om'},

# {'timestamp': (5.34, 8.64),

# 'text': ' hva valget dem gjør at vi skal gjøre.'},

# {'timestamp': (8.64, 10.64), 'text': ' Hva skjer vi gjøre nå da?'},

# {'timestamp': (10.64, 17.44),

# 'text': ' Nei, nå skal jo administrationen vår skrivferdige sak, så kjem til behandling i samfærdshetshøyvalget,'},

# {'timestamp': (17.44, 19.44), 'text': ' tror det første år.'},

# {'timestamp': (19.44, 23.94),

# 'text': ' Først så kan vi ta og henge dem kjemme, og så får vi gjøre vårt valget når vi kommer dit.'}]}

```
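With `return_timestamps=True`, the pipeline returns a `chunks` list whose `timestamp` entries are `(start, end)` pairs in seconds. One possible use is turning those chunks into SubRip (`.srt`) subtitles, as sketched below. `chunks_to_srt` and `format_srt_time` are hypothetical helpers. Treating a missing end time (`None`) as equal to the start time is also an assumption.

```python
def format_srt_time(seconds: float) -> str:
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    total_ms = int(round(seconds * 1000))
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def chunks_to_srt(chunks) -> str:
    """Turn [{'timestamp': (start, end), 'text': ...}, ...] into SRT text."""
    blocks = []
    for index, chunk in enumerate(chunks, start=1):
        start, end = chunk["timestamp"]
        if end is None:  # assumption: fall back to the start time if no end is given
            end = start
        blocks.append(
            f"{index}\n{format_srt_time(start)} --> {format_srt_time(end)}\n"
            f"{chunk['text'].strip()}\n"
        )
    return "\n".join(blocks)


chunks = [
    {"timestamp": (5.34, 8.64), "text": " hva valget dem gjør at vi skal gjøre."},
    {"timestamp": (8.64, 10.64), "text": " Hva skjer vi gjøre nå da?"},
]
print(chunks_to_srt(chunks))
```

With the pipeline above, the input would be `result["chunks"]`, where `result` is the return value of the `asr(...)` call with `return_timestamps=True`.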

## Training Data

Training data comes from Språkbanken and the digital collection at the National Library of Norway. Training data includes:

- NST Norwegian ASR Database (16 kHz), and its corresponding dataset

- Transcribed speeches from the Norwegian Parliament produced by Språkbanken

- TV broadcast (NRK) subtitles (NLN digital collection)

- Audiobooks (NLN digital collection)

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** TPUv4

- **Hours used:** 1,536

- **Cloud Provider:** Google Cloud

- **Compute Region:** `us-central1`

- **Carbon Emitted:** Total emissions are estimated at 247.77 kgCO₂, 100 percent of which was directly offset by the cloud provider.

#### Software

The model is trained using JAX/Flax. The final model is converted to PyTorch, TensorFlow, whisper.cpp and ONNX. Please tell us if you would like future models to be converted to other formats.

## Citation & Contributors

The development of this model was part of the contributors' professional roles at the National Library of Norway, under the _NoSTram_ project led by _Per Egil Kummervold (PEK)_. The Jax code, dataset loaders, and training scripts were collectively designed by _Javier de la Rosa (JdlR)_, _Freddy Wetjen (FW)_, _Rolv-Arild Braaten (RAB)_, and _PEK_. Primary dataset curation was handled by _FW_, _RAB_, and _PEK_, while _JdlR_ and _PEK_ crafted the documentation. The project was completed under the umbrella of AiLab, directed by _Svein Arne Brygfjeld_.

All contributors played a part in shaping the optimal training strategy for the Norwegian ASR model based on the Whisper architecture.

_A paper detailing our process and findings is underway!_

## Acknowledgements

Thanks to [Google TPU Research Cloud](https://sites.research.google/trc/about/) for supporting this project with extensive training resources. Thanks to Google Cloud for supporting us with credits for translating large parts of the corpus. A special thanks to [Sanchit Gandhi](https://huggingface.co/sanchit-gandhi) for thorough technical advice on debugging and on getting this to train on Google TPUs. A special thanks to Per Erik Solberg at Språkbanken for the collaboration on the Stortinget corpus.

## Contact

We are releasing this ASR Whisper model as a public beta to gather constructive feedback on its performance. Please do not hesitate to contact us with any experiences, insights, or suggestions that you may have. Your input is invaluable in helping us to improve the model and ensure that it effectively serves the needs of users. Whether you have technical concerns, usability suggestions, or ideas for future enhancements, we welcome your input. Thank you for participating in this critical stage of our model's development.

If you intend to incorporate this model into your research, we kindly request that you reach out to us. We can provide you with the most current status of our upcoming paper, which you can cite to acknowledge and provide context for the work done on this model.

Please use this email as the main contact point; it is read by the entire team: <a rel="noopener nofollow" href="mailto:[email protected]">[email protected]</a>

|

jvadlamudi2/convnext-tiny-224-jvadlamudi2

|

jvadlamudi2

| 2023-07-24T18:05:38Z | 193 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"convnext",

"image-classification",

"generated_from_trainer",

"base_model:facebook/convnext-tiny-224",

"base_model:finetune:facebook/convnext-tiny-224",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

image-classification

| 2023-07-24T17:51:37Z |

---

license: apache-2.0

base_model: facebook/convnext-tiny-224

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: convnext-tiny-224-jvadlamudi2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# convnext-tiny-224-jvadlamudi2

This model is a fine-tuned version of [facebook/convnext-tiny-224](https://huggingface.co/facebook/convnext-tiny-224) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5780

- Accuracy: 0.7946

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 3
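`total_train_batch_size: 128` follows from a per-device batch of 32 times 4 gradient accumulation steps, assuming a single device. Accumulation averages the gradients of several micro-batches before each optimizer step. With equal-size micro-batches, the resulting update therefore matches one large batch. The toy sketch below checks this for a one-parameter least-squares model in plain Python. It illustrates the idea only and is not the Trainer's implementation; `mse_grad` and `accumulated_grad` are hypothetical helpers.

```python
import random


def mse_grad(w: float, batch) -> float:
    """d/dw of mean((w * x - y) ** 2) over the batch."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def accumulated_grad(w: float, batch, micro_batch_size: int) -> float:
    """Average of micro-batch gradients, as gradient accumulation computes it."""
    micro_batches = [batch[i:i + micro_batch_size]
                     for i in range(0, len(batch), micro_batch_size)]
    total = 0.0
    for mb in micro_batches:
        # Each micro-batch gradient is scaled by 1 / number of accumulation steps.
        total += mse_grad(w, mb) / len(micro_batches)
    return total


random.seed(0)
data = []
for _ in range(128):  # one "total" batch of 128 examples
    x = random.uniform(-1, 1)
    data.append((x, 3.0 * x + random.gauss(0, 0.1)))

full = mse_grad(0.5, data)
accum = accumulated_grad(0.5, data, micro_batch_size=32)  # 4 steps of 32
print(full, accum)  # equal up to floating-point rounding
```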

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 1.0 | 7 | 0.5882 | 0.8036 |

| 0.6213 | 2.0 | 14 | 0.5821 | 0.7857 |

| 0.6123 | 3.0 | 21 | 0.5780 | 0.7946 |

### Framework versions

- Transformers 4.31.0

- Pytorch 2.0.1+cu118

- Datasets 2.14.0

- Tokenizers 0.13.3

|

NbAiLab/nb-whisper-large-beta

|

NbAiLab

| 2023-07-24T18:05:01Z | 42,970 | 8 |

transformers

|

[

"transformers",

"pytorch",

"tf",

"jax",

"safetensors",

"whisper",

"automatic-speech-recognition",

"audio",

"asr",

"hf-asr-leaderboard",

"no",

"nb",

"nn",

"en",

"dataset:NbAiLab/ncc_speech",

"dataset:NbAiLab/NST",

"dataset:NbAiLab/NPSC",

"arxiv:2212.04356",

"arxiv:1910.09700",

"license:cc-by-4.0",

"endpoints_compatible",

"region:us"

] |

automatic-speech-recognition

| 2023-07-23T19:31:02Z |

---

license: cc-by-4.0

language:

- 'no'

- nb

- nn

- en

datasets:

- NbAiLab/ncc_speech

- NbAiLab/NST

- NbAiLab/NPSC

tags:

- audio

- asr

- automatic-speech-recognition

- hf-asr-leaderboard

metrics:

- wer

- cer

library_name: transformers

pipeline_tag: automatic-speech-recognition

widget:

- src: https://datasets-server.huggingface.co/assets/google/fleurs/--/nb_no/train/1/audio/audio.mp3

example_title: FLEURS sample 1

- src: https://datasets-server.huggingface.co/assets/google/fleurs/--/nb_no/train/4/audio/audio.mp3

example_title: FLEURS sample 2

---

# NB-Whisper Large (beta)

This is a **_public beta_** of the Norwegian NB-Whisper Large model released by the National Library of Norway. NB-Whisper is a series of models for automatic speech recognition (ASR) and speech translation, building upon the foundation laid by [OpenAI's Whisper](https://arxiv.org/abs/2212.04356). All models are trained on 20,000 hours of labeled data.

<center>

<figure>

<video controls>

<source src="https://huggingface.co/NbAiLab/nb-whisper-small-beta/resolve/main/king.mp4" type="video/mp4">

Your browser does not support the video tag.

</video>

<figcaption><a href="https://www.royalcourt.no/tale.html?tid=137662&sek=28409&scope=27248" target="_blank">Speech given by His Majesty The King of Norway at the garden party hosted by Their Majesties The King and Queen at the Palace Park on 1 September 2016.</a> Transcribed using the Small model.</figcaption>

</figure>

</center>

## Model Details

NB-Whisper models will be available in five different sizes:

| Model Size | Parameters | Availability |
|------------|------------|--------------|
| tiny | 39M | [NB-Whisper Tiny (beta)](https://huggingface.co/NbAiLab/nb-whisper-tiny-beta) |
| base | 74M | [NB-Whisper Base (beta)](https://huggingface.co/NbAiLab/nb-whisper-base-beta) |
| small | 244M | [NB-Whisper Small (beta)](https://huggingface.co/NbAiLab/nb-whisper-small-beta) |
| medium | 769M | [NB-Whisper Medium (beta)](https://huggingface.co/NbAiLab/nb-whisper-medium-beta) |
| large | 1550M | [NB-Whisper Large (beta)](https://huggingface.co/NbAiLab/nb-whisper-large-beta) |
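When picking a checkpoint under a memory or latency budget, the table can be encoded as a small lookup. The following is a minimal sketch: the `NB_WHISPER_BETA` dictionary and the `pick_checkpoint` helper are hypothetical illustrations (not part of any NB AI-Lab package), and the parameter counts are the rounded figures from the table.

```python
# Hypothetical lookup built from the NB-Whisper table: size -> (parameters, repo id).
# Parameter counts are the rounded figures given in the table.
NB_WHISPER_BETA: dict[str, tuple[int, str]] = {
    "tiny": (39_000_000, "NbAiLab/nb-whisper-tiny-beta"),
    "base": (74_000_000, "NbAiLab/nb-whisper-base-beta"),
    "small": (244_000_000, "NbAiLab/nb-whisper-small-beta"),
    "medium": (769_000_000, "NbAiLab/nb-whisper-medium-beta"),
    "large": (1_550_000_000, "NbAiLab/nb-whisper-large-beta"),
}


def pick_checkpoint(max_params: int) -> str:
    """Hypothetical helper: return the repo id of the largest beta model
    whose parameter count does not exceed max_params."""
    fitting = [entry for entry in NB_WHISPER_BETA.values() if entry[0] <= max_params]
    if not fitting:
        raise ValueError(f"no NB-Whisper beta model has <= {max_params} parameters")
    # Tuples compare by parameter count first, so max() picks the biggest fit.
    return max(fitting)[1]


print(pick_checkpoint(800_000_000))  # NbAiLab/nb-whisper-medium-beta
```

The returned id can be passed as the model argument to `pipeline("automatic-speech-recognition", ...)`, as in the example under *How to Get Started with the Model*.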

An official release of NB-Whisper models is planned for fall 2023.

Please refer to the OpenAI Whisper model card for more details about the backbone model.

### Model Description

- **Developed by:** [NB AI-Lab](https://ai.nb.no/)

- **Shared by:** [NB AI-Lab](https://ai.nb.no/)

- **Model type:** `whisper`

- **Language(s) (NLP):** Norwegian, Norwegian Bokmål, Norwegian Nynorsk, English

- **License:** [Creative Commons Attribution 4.0 International (CC BY 4.0)](https://creativecommons.org/licenses/by/4.0/)

- **Finetuned from model:** [openai/whisper-small](https://huggingface.co/openai/whisper-small)

### Model Sources

<!-- Provide the basic links for the model. -->

- **Repository:** https://github.com/NbAiLab/nb-whisper/

- **Paper:** _Coming soon_

- **Demo:** http://ai.nb.no/demo/nb-whisper

## Uses

### Direct Use

This is a **_public beta_** release. The models published in this repository are intended for general-purpose use and are available to third parties.

### Downstream Use

For Norwegian transcription, we are confident that this public beta will give you state-of-the-art results compared to currently available Norwegian ASR models of the same size. However, it is still known to hallucinate occasionally and to sometimes drop parts of the transcript. Please also note that the transcripts are typically not verbatim. Spoken and written language often differ considerably, and the model aims to "translate" spoken utterances into grammatically correct written sentences. We strongly believe that the best way to understand these models is to try them yourself.

A significant part of the training material comes from TV subtitles. Subtitles often shorten the content to make it easier to read, and non-essential parts of an utterance may also be dropped entirely. In some cases this behaviour is desirable; in others it is not. The final release of these models will provide a mechanism to control this behaviour.
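Because the model aims for readable, subtitle-like text rather than verbatim transcripts, scoring it with the WER and CER metrics listed in the card's metadata against verbatim references will count such shortenings as errors. Below is a minimal pure-Python sketch of both metrics, assuming plain whitespace tokenization and no text normalization (casing and punctuation are compared as-is); it is an illustration, not the evaluation code used by NB AI-Lab.

```python
from typing import Sequence


def edit_distance(ref: Sequence[str], hyp: Sequence[str]) -> int:
    """Levenshtein distance where substitutions, insertions and deletions cost 1."""
    # Before processing ref[i-1], prev[j] is the distance between ref[:i-1] and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # delete r from the reference
                curr[j - 1] + 1,         # insert h from the hypothesis
                prev[j - 1] + (r != h),  # substitute (free when the tokens match)
            )
        prev = curr
    return prev[-1]


def wer(reference: str, hypothesis: str) -> float:
    """Word error rate: word-level edits divided by the number of reference words."""
    ref_words = reference.split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference: str, hypothesis: str) -> float:
    """Character error rate: character-level edits divided by reference length."""
    if not reference:
        raise ValueError("reference must be non-empty")
    return edit_distance(list(reference), list(hypothesis)) / len(reference)


# A shortened, subtitle-style output is penalized as three deletions out of seven words.
print(round(wer("det er en fin dag i dag", "det er fin dag"), 3))  # 0.429
```

In practice, references and hypotheses are usually normalized (for example lowercased and stripped of punctuation) before scoring; which normalization to apply is a choice the evaluator has to make.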

## Bias, Risks, and Limitations

This is a public beta that is not intended for production. Production use without adequate assessment of risks and mitigation may be considered irresponsible or harmful. These models may exhibit bias and/or other undesirable distortions. When third parties deploy or provide systems and/or services to other parties using any of these models (or using systems based on these models), or become users of the models, they should note that it is their responsibility to mitigate the risks arising from their use and, in any event, to comply with applicable regulations, including regulations regarding the use of artificial intelligence. In no event shall the owner of the models (The National Library of Norway) be liable for any results arising from the use made by third parties of these models.

## How to Get Started with the Model

Use the code below to get started with the model.

```python
from transformers import pipeline

asr = pipeline(
    "automatic-speech-recognition",
    "NbAiLab/nb-whisper-large-beta"
)

asr(
    "audio.mp3",
    generate_kwargs={'task': 'transcribe', 'language': 'no'}
)
# {'text': ' Så mange anga kører seg i så viktig sak, så vi får du kører det tilbake med. Om kabaret gudam i at vi skal hjælge. Kør seg vi gjør en uda? Nei noe skal å abelistera sonvorne skrifer. Det er sak, så kjent det bare handling i samtatsen til bargører. Trudet første lask. På den å først så å køre og en gange samme, og så får vi gjør å vorte vorte vorte når vi kjent dit.'}
```

Timestamps can also be retrieved by passing `return_timestamps=True` to the pipeline call.

```python

asr(
    "audio.mp3",
    generate_kwargs={'task': 'transcribe', 'language': 'no'},
    return_timestamps=True,
)

# {'text': ' at så mange angar til seg så viktig sak, så vi får jo kjølget klare tilbakemeldingen om hva valget dem gjør at vi skal gjøre. Hva skjer vi gjøre nå da? Nei, nå skal jo administrationen vår skrivferdige sak, så kjem til behandling i samfærdshetshøyvalget, tror det første

# r. Først så kan vi ta og henge dem kjemme, og så får vi gjøre vårt valget når vi kommer dit.',

# 'chunks': [{'timestamp': (0.0, 5.34),

# 'text': ' at så mange angar til seg så viktig sak, så vi får jo kjølget klare tilbakemeldingen om'},

# {'timestamp': (5.34, 8.64),

# 'text': ' hva valget dem gjør at vi skal gjøre.'},

# {'timestamp': (8.64, 10.64), 'text': ' Hva skjer vi gjøre nå da?'},